Environment:

root@bpf1:~# uname -a

Linux bpf1 5.11.0-051100-generic #202102142330 SMP Sun Feb 14 23:33:21 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux

root@bpf1:~# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

bpf1 Ready control-plane,master 19h v1.23.2 192.168.2.61 <none> Ubuntu 20.04.3 LTS 5.11.0-051100-generic docker://20.10.12

bpf2 Ready <none> 19h v1.23.2 192.168.2.62 <none> Ubuntu 20.04.3 LTS 5.11.0-051100-generic docker://20.10.12

root@bpf1:~# kubectl -nkube-system exec -it cilium-dqnsk -- cilium status

Defaulted container "cilium-agent" out of: cilium-agent, mount-cgroup (init), clean-cilium-state (init)

KVStore: Ok Disabled

Kubernetes: Ok 1.23 (v1.23.2) [linux/amd64]

Kubernetes APIs: ["cilium/v2::CiliumClusterwideNetworkPolicy", "cilium/v2::CiliumEndpoint", "cilium/v2::CiliumNetworkPolicy", "cilium/v2::CiliumNode", "core/v1::Namespace", "core/v1::Node", "core/v1::Pods", "core/v1::Service", "discovery/v1::EndpointSlice", "networking.k8s.io/v1::NetworkPolicy"]

KubeProxyReplacement: Strict [ens33 192.168.2.61 (Direct Routing)]

Host firewall: Disabled

Cilium: Ok 1.11.1 (v1.11.1-76d34db)

NodeMonitor: Disabled

Cilium health daemon: Ok

IPAM: IPv4: 6/254 allocated from 10.0.1.0/24,

BandwidthManager: Disabled

Host Routing: BPF

Masquerading: BPF [ens33] 10.0.1.0/24 [IPv4: Enabled, IPv6: Disabled]

Controller Status: 39/39 healthy

Proxy Status: OK, ip 10.0.1.159, 0 redirects active on ports 10000-20000

Hubble: Disabled

Encryption: Disabled

Cluster health: 2/2 reachable (2022-01-22T08:42:27Z)

root@bpf1:~#

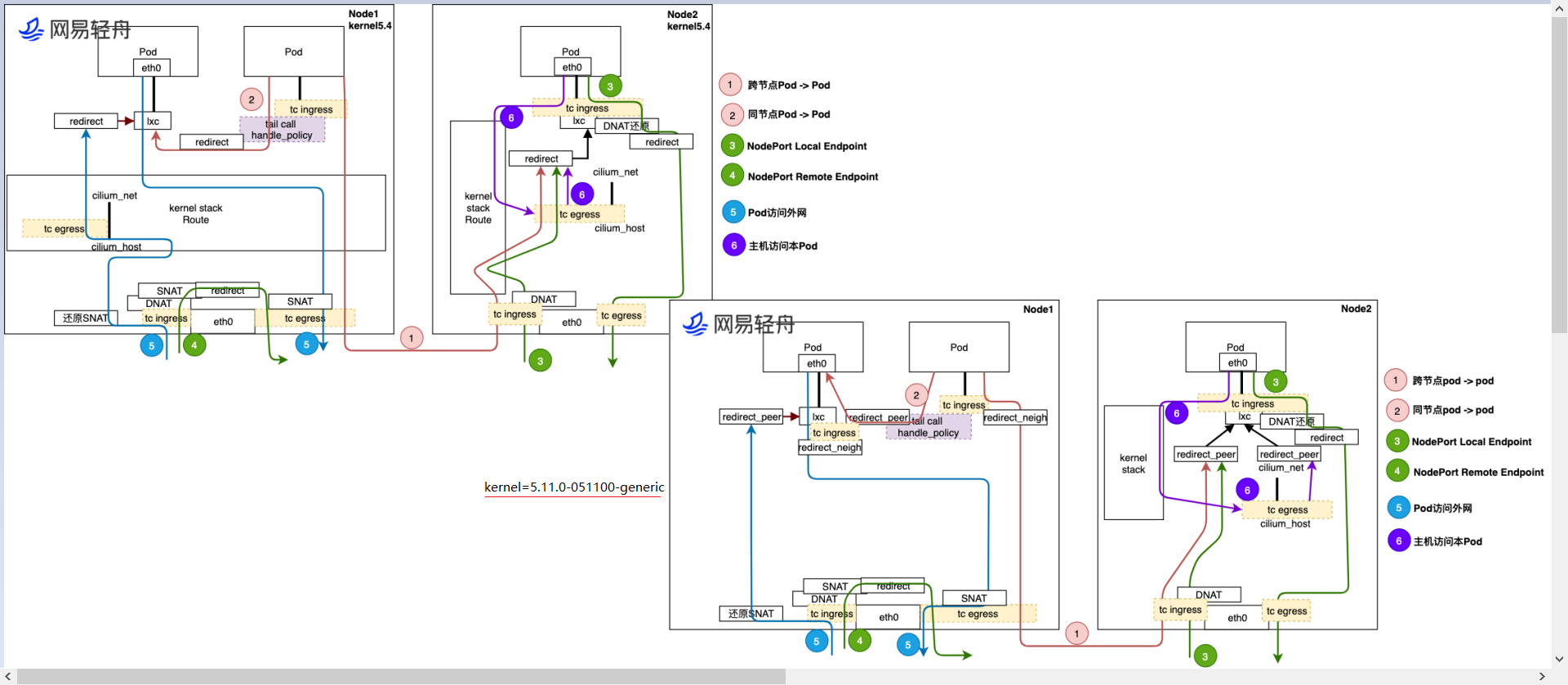

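- 1: 5.11 kernel, Cilium CNI [kernel 5.11 > 5.10]

  Our environment runs Ubuntu 20.04 with the kernel manually upgraded to 5.11.0-051100-generic. That kernel provides the bpf_redirect_peer() and bpf_redirect_neigh() helper functions, both of which were added in kernel 5.10. Cilium's BPF Host Routing feature is built on these two helpers. The goal now is to understand and verify this packet's datapath from several angles:

  1. Capturing packets with tcpdump
  2. Capturing with cilium monitor
  3. Capturing with pwru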
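Before tracing anything, it is worth confirming both prerequisites. Below is a minimal Python sketch that assumes you paste in the text of `uname -r` and `cilium status` yourself. `kernel_at_least` and `host_routing_mode` are hypothetical helpers and are not part of Cilium or pwru. The script uses three thresholds:

- 5.10, the kernel version where the two redirect helpers were added.
- 5.5 and 5.9, the kernel versions that pwru lists in its requirements.

```python
import re
from typing import Optional


def kernel_at_least(release: str, major: int, minor: int) -> bool:
    """Compare the leading 'X.Y' of a `uname -r` string against a minimum version."""
    m = re.match(r"(\d+)\.(\d+)", release)
    if m is None:
        raise ValueError(f"unrecognised kernel release: {release!r}")
    return (int(m.group(1)), int(m.group(2))) >= (major, minor)


def host_routing_mode(status_text: str) -> Optional[str]:
    """Return the value of the 'Host Routing:' field in `cilium status` output, if present."""
    m = re.search(r"Host Routing:\s*(\S+)", status_text)
    return m.group(1) if m else None


if __name__ == "__main__":
    release = "5.11.0-051100-generic"  # `uname -r` on bpf1
    status = "Host Routing: BPF\nMasquerading: BPF [ens33] 10.0.1.0/24"
    # bpf_redirect_peer()/bpf_redirect_neigh() need 5.10; pwru --output-skb needs 5.9
    print("redirect helpers:", kernel_at_least(release, 5, 10))  # True
    print("pwru --output-skb:", kernel_at_least(release, 5, 9))  # True
    print("host routing:", host_routing_mode(status))  # BPF
```

In `cilium status` output, a value other than `BPF` for `Host Routing` means the agent fell back to the legacy, host-stack-based routing path.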

- [x] **1.2: Verification with tcpdump**

```properties

[1. Pod IP information:]

root@bpf1:~# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

cni-6vf5d 1/1 Running 0 19h 10.0.1.180 bpf1 <none> <none>

same 1/1 Running 0 18h 10.0.1.135 bpf1 <none> <none>

root@bpf1:~# kubectl exec -it same -- ping -c 1 10.0.1.180

PING 10.0.1.180 (10.0.1.180): 56 data bytes

64 bytes from 10.0.1.180: seq=0 ttl=63 time=0.152 ms

--- 10.0.1.180 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.152/0.152/0.152 ms

root@bpf1:~#

2. Capture on eth0 of pod same and on its corresponding lxc device:

[2.1: capture on eth0 of pod same:]

root@bpf1:~# kubectl exec -ti same -- tcpdump -pne -i eth0

tcpdump: verbose output suppressed, use -v[v]... for full protocol decode

listening on eth0, link-type EN10MB (Ethernet), snapshot length 262144 bytes

09:06:46.016123 7a:a2:51:7c:d4:40 > 0e:fc:49:5b:00:dd, ethertype IPv4 (0x0800), length 98: 10.0.1.135 > 10.0.1.180: ICMP echo request, id 20480, seq 0, length 64

09:06:46.016205 0e:fc:49:5b:00:dd > 7a:a2:51:7c:d4:40, ethertype IPv4 (0x0800), length 98: 10.0.1.180 > 10.0.1.135: ICMP echo reply, id 20480, seq 0, length 64

09:06:51.043602 7a:a2:51:7c:d4:40 > 0e:fc:49:5b:00:dd, ethertype ARP (0x0806), length 42: Request who-has 10.0.1.159 tell 10.0.1.135, length 28

09:06:51.043666 0e:fc:49:5b:00:dd > 7a:a2:51:7c:d4:40, ethertype ARP (0x0806), length 42: Reply 10.0.1.159 is-at 0e:fc:49:5b:00:dd, length 28

^C

4 packets captured

4 packets received by filter

0 packets dropped by kernel

root@bpf1:~#

[2.2: capture on the lxc device of pod same:]

root@bpf1:~# kubectl exec -ti same -- ethtool -S eth0

NIC statistics:

peer_ifindex: 28

rx_queue_0_xdp_packets: 0

rx_queue_0_xdp_bytes: 0

rx_queue_0_drops: 0

rx_queue_0_xdp_redirect: 0

rx_queue_0_xdp_drops: 0

rx_queue_0_xdp_tx: 0

rx_queue_0_xdp_tx_errors: 0

tx_queue_0_xdp_xmit: 0

tx_queue_0_xdp_xmit_errors: 0

28: lxcda6f5295b4b7@if27: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 0e:fc:49:5b:00:dd brd ff:ff:ff:ff:ff:ff link-netnsid 4

inet6 fe80::cfc:49ff:fe5b:dd/64 scope link

valid_lft forever preferred_lft forever

root@bpf1:~# tcpdump -pne -i lxcda6f5295b4b7

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on lxcda6f5295b4b7, link-type EN10MB (Ethernet), capture size 262144 bytes

09:06:46.016127 7a:a2:51:7c:d4:40 > 0e:fc:49:5b:00:dd, ethertype IPv4 (0x0800), length 98: 10.0.1.135 > 10.0.1.180: ICMP echo request, id 20480, seq 0, length 64

09:06:51.043606 7a:a2:51:7c:d4:40 > 0e:fc:49:5b:00:dd, ethertype ARP (0x0806), length 42: Request who-has 10.0.1.159 tell 10.0.1.135, length 28

09:06:51.043665 0e:fc:49:5b:00:dd > 7a:a2:51:7c:d4:40, ethertype ARP (0x0806), length 42: Reply 10.0.1.159 is-at 0e:fc:49:5b:00:dd, length 28

^C

3 packets captured

3 packets received by filter

0 packets dropped by kernel

root@bpf1:~#

[2.3: capture on eth0 of pod cni-6vf5d]

root@bpf1:~# kubectl exec -it cni-6vf5d -- tcpdump -pne -i eth0

tcpdump: verbose output suppressed, use -v[v]... for full protocol decode

listening on eth0, link-type EN10MB (Ethernet), snapshot length 262144 bytes

09:06:46.016127 d2:f1:29:4b:bd:9d > fe:55:fa:02:ee:e5, ethertype IPv4 (0x0800), length 98: 10.0.1.135 > 10.0.1.180: ICMP echo request, id 20480, seq 0, length 64

09:06:46.016204 fe:55:fa:02:ee:e5 > d2:f1:29:4b:bd:9d, ethertype IPv4 (0x0800), length 98: 10.0.1.180 > 10.0.1.135: ICMP echo reply, id 20480, seq 0, length 64

09:06:51.043585 fe:55:fa:02:ee:e5 > d2:f1:29:4b:bd:9d, ethertype ARP (0x0806), length 42: Request who-has 10.0.1.159 tell 10.0.1.180, length 28

09:06:51.043654 d2:f1:29:4b:bd:9d > fe:55:fa:02:ee:e5, ethertype ARP (0x0806), length 42: Reply 10.0.1.159 is-at d2:f1:29:4b:bd:9d, length 28

^C

4 packets captured

4 packets received by filter

0 packets dropped by kernel

root@bpf1:~#

[2.4: capture on the lxc device of pod cni-6vf5d]

root@bpf1:~# kubectl exec -it cni-6vf5d -- ethtool -S eth0

NIC statistics:

peer_ifindex: 24

rx_queue_0_xdp_packets: 0

rx_queue_0_xdp_bytes: 0

rx_queue_0_drops: 0

rx_queue_0_xdp_redirect: 0

rx_queue_0_xdp_drops: 0

rx_queue_0_xdp_tx: 0

rx_queue_0_xdp_tx_errors: 0

tx_queue_0_xdp_xmit: 0

tx_queue_0_xdp_xmit_errors: 0

24: lxcf9d0a91de5fb@if23: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether d2:f1:29:4b:bd:9d brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::d0f1:29ff:fe4b:bd9d/64 scope link

valid_lft forever preferred_lft forever

root@bpf1:~# tcpdump -pne -i lxcf9d0a91de5fb

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on lxcf9d0a91de5fb, link-type EN10MB (Ethernet), capture size 262144 bytes

09:06:46.016205 fe:55:fa:02:ee:e5 > d2:f1:29:4b:bd:9d, ethertype IPv4 (0x0800), length 98: 10.0.1.180 > 10.0.1.135: ICMP echo reply, id 20480, seq 0, length 64

09:06:51.043591 fe:55:fa:02:ee:e5 > d2:f1:29:4b:bd:9d, ethertype ARP (0x0806), length 42: Request who-has 10.0.1.159 tell 10.0.1.180, length 28

09:06:51.043652 d2:f1:29:4b:bd:9d > fe:55:fa:02:ee:e5, ethertype ARP (0x0806), length 42: Reply 10.0.1.159 is-at d2:f1:29:4b:bd:9d, length 28

^C

3 packets captured

3 packets received by filter

0 packets dropped by kernel

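root@bpf1:~#
```

Both pods' eth0 interfaces see the ICMP echo request and the echo reply. The host-side lxc devices do not:

- lxcda6f5295b4b7 (pod same) only sees the request leaving that pod.
- lxcf9d0a91de5fb (pod cni-6vf5d) only sees the reply leaving that pod.

ICMP packets headed into a pod therefore never show up on that pod's host-side lxc device. This is consistent with them being delivered straight into the pod's namespace by bpf_redirect_peer().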
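The two steps used in the capture above can be scripted:

1. Map a pod's `peer_ifindex` (from `ethtool -S eth0`) to its host-side lxc device.
2. Compare which ICMP messages each interface saw.

Below is a minimal Python sketch that works on pasted command output. It assumes the `ip link`-style header lines shown above, and the helper names are hypothetical.

```python
import re
from collections import Counter
from typing import Optional


def host_veth_for_peer(ip_link_text: str, peer_ifindex: int) -> Optional[str]:
    """Return the host-side device whose ifindex equals the pod's peer_ifindex.

    Expects `ip link` header lines such as
    '28: lxcda6f5295b4b7@if27: <BROADCAST,MULTICAST,UP,LOWER_UP> ...'.
    """
    for line in ip_link_text.splitlines():
        m = re.match(r"\s*(\d+):\s+([^@:\s]+)", line)
        if m and int(m.group(1)) == peer_ifindex:
            return m.group(2)
    return None


def icmp_kinds(tcpdump_text: str) -> Counter:
    """Count ICMP echo requests and replies in `tcpdump -e` output."""
    return Counter(re.findall(r"ICMP echo (request|reply)", tcpdump_text))


if __name__ == "__main__":
    ip_link = "28: lxcda6f5295b4b7@if27: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500"
    print(host_veth_for_peer(ip_link, 28))  # lxcda6f5295b4b7

    # Trimmed IPv4 lines from the two lxc captures above.
    same_lxc = "10.0.1.135 > 10.0.1.180: ICMP echo request, id 20480, seq 0, length 64"
    cni_lxc = "10.0.1.180 > 10.0.1.135: ICMP echo reply, id 20480, seq 0, length 64"
    print(dict(icmp_kinds(same_lxc)))  # {'request': 1}
    print(dict(icmp_kinds(cni_lxc)))   # {'reply': 1}
```

On a live node you could feed `host_veth_for_peer` the output of `ip link` instead of a pasted line. The regex only inspects the leading `<ifindex>: <name>` part of each header.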

- 2. Verification with cilium monitor trace

```properties

[1. Pod IP information:]

root@bpf1:~# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

cni-6vf5d 1/1 Running 0 19h 10.0.1.180 bpf1

same 1/1 Running 0 18h 10.0.1.135 bpf1

root@bpf1:~# kubectl exec -it same -- ping -c 1 10.0.1.180

PING 10.0.1.180 (10.0.1.180): 56 data bytes

64 bytes from 10.0.1.180: seq=0 ttl=63 time=0.152 ms

--- 10.0.1.180 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.152/0.152/0.152 ms

root@bpf1:~#

#################################################################################################

root@bpf1:/home/cilium# cilium endpoint list

ENDPOINT POLICY (ingress) POLICY (egress) IDENTITY LABELS (source:key[=value]) IPv6 IPv4 STATUS

ENFORCEMENT ENFORCEMENT

2836 Disabled Disabled 31009 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default 10.0.1.135 ready

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=default

k8s:run=same

3663 Disabled Disabled 37483 k8s:app=cni 10.0.1.180 ready

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=default

root@bpf1:/home/cilium#

24: lxcf9d0a91de5fb@if23:

root@bpf1:~# kubectl get pods -owide | grep bpf1

cni-6vf5d 1/1 Running 0 20h 10.0.1.180 bpf1

cni-6vf5d[eth0] IP:10.0.1.180 MAC:FE:55:FA:02:EE:E5

cni-6vf5d[lxc] IP: MAC:d2:f1:29:4b:bd:9d

same[eth0] -> same[lxc] -> cni-6vf5d[eth0]

cni-6vf5d[eth0] -> cni-6vf5d[lxc] -> same[eth0]

#################################################################################################

The analysis starts here, and everything below is derived from cilium monitor. To keep track of where each step is processed, we use the ENDPOINT IDs that Cilium assigns:

[2836 Disabled Disabled 31009 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default 10.0.1.135 ready]

[3663 Disabled Disabled 37483 k8s:app=cni 10.0.1.180 ready]

So endpoint 2836 is {same, 10.0.1.135} and endpoint 3663 is {cni-6vf5d, 10.0.1.180}:

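CPU 02: MARK 0x0 FROM 2836 DEBUG: Conntrack lookup 1/2: src=10.0.1.135:22016 dst=10.0.1.180:0 # 1. Start here: the source is pod same (10.0.1.135) and the destination is 10.0.1.180. This is a lookup in Cilium's own BPF connection-tracking table, done in the BPF datapath rather than by iptables.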

CPU 02: MARK 0x0 FROM 2836 DEBUG: Conntrack lookup 2/2: nexthdr=1 flags=1

CPU 02: MARK 0x0 FROM 2836 DEBUG: CT verdict: New, revnat=0

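CPU 02: MARK 0x0 FROM 2836 DEBUG: Successfully mapped addr=10.0.1.180 to identity=37483 # 2. Cilium maps the destination address to security identity 37483, while the source pod same has identity 31009. This is a distinctive Cilium feature: policy is bound to identities rather than IPs, so when a pod restarts and gets a new IP, its policy stays the same.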

CPU 02: MARK 0x0 FROM 2836 DEBUG: Conntrack create: proxy-port=0 revnat=0 src-identity=31009 lb=0.0.0.0

CPU 02: MARK 0x0 FROM 2836 DEBUG: Attempting local delivery for container id 3663 from seclabel 31009

CPU 02: MARK 0x0 FROM 3663 DEBUG: Conntrack lookup 1/2: src=10.0.1.135:22016 dst=10.0.1.180:0 # 3. Note that the ID has changed to 3663, the ENDPOINT ID of the other pod. Processing is now done on behalf of the other pod.

CPU 02: MARK 0x0 FROM 3663 DEBUG: Conntrack lookup 2/2: nexthdr=1 flags=0 # 3. In other words, the ICMP request sent by the local pod is now being delivered to the other pod.

CPU 02: MARK 0x0 FROM 3663 DEBUG: CT verdict: New, revnat=0

CPU 02: MARK 0x0 FROM 3663 DEBUG: Conntrack create: proxy-port=0 revnat=0 src-identity=31009 lb=0.0.0.0

Ethernet {Contents=[..14..] Payload=[..86..] SrcMAC=d2:f1:29:4b:bd:9d DstMAC=fe:55:fa:02:ee:e5 EthernetType=IPv4 Length=0}

IPv4 {Contents=[..20..] Payload=[..64..] Version=4 IHL=5 TOS=0 Length=84 Id=4532 Flags=DF FragOffset=0 TTL=63 Protocol=ICMPv4 Checksum=4795 SrcIP=10.0.1.135 DstIP=10.0.1.180 Options=[] Padding=[]}

ICMPv4 {Contents=[..8..] Payload=[..56..] TypeCode=EchoRequest Checksum=58835 Id=22016 Seq=0}

Failed to decode layer: No decoder for layer type Payload

CPU 02: MARK 0x0 FROM 3663 to-endpoint: 98 bytes (98 captured), state new, interface lxcf9d0a91de5fb, , identity 31009->37483, orig-ip 10.0.1.135, to endpoint 3663

CPU 02: MARK 0x0 FROM 3663 DEBUG: Conntrack lookup 1/2: src=10.0.1.180:0 dst=10.0.1.135:22016 # 4. The ICMP reply from the peer pod now goes through the BPF conntrack lookup and matches the entry created for the request.

CPU 02: MARK 0x0 FROM 3663 DEBUG: Conntrack lookup 2/2: nexthdr=1 flags=1

CPU 02: MARK 0x0 FROM 3663 DEBUG: CT entry found lifetime=16850186, revnat=0

CPU 02: MARK 0x0 FROM 3663 DEBUG: CT verdict: Reply, revnat=0

CPU 02: MARK 0x0 FROM 3663 DEBUG: Successfully mapped addr=10.0.1.135 to identity=31009 # 5. The packet is then redirected to eth0 of the local pod same via bpf_redirect_peer().

CPU 02: MARK 0x0 FROM 3663 DEBUG: Attempting local delivery for container id 2836 from seclabel 37483

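CPU 02: MARK 0x0 FROM 2836 DEBUG: Conntrack lookup 1/2: src=10.0.1.180:0 dst=10.0.1.135:22016 # 6. The packet is back on the side of the local pod same, and this lookup runs on behalf of endpoint 2836. After delivery, the packet is processed by the network stack (including any iptables rules) inside the pod's own namespace.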

CPU 02: MARK 0x0 FROM 2836 DEBUG: Conntrack lookup 2/2: nexthdr=1 flags=0

CPU 02: MARK 0x0 FROM 2836 DEBUG: CT entry found lifetime=16850186, revnat=0

CPU 02: MARK 0x0 FROM 2836 DEBUG: CT verdict: Reply, revnat=0

Ethernet {Contents=[..14..] Payload=[..86..] SrcMAC=0e:fc:49:5b:00:dd DstMAC=7a:a2:51:7c:d4:40 EthernetType=IPv4 Length=0}

IPv4 {Contents=[..20..] Payload=[..64..] Version=4 IHL=5 TOS=0 Length=84 Id=28166 Flags= FragOffset=0 TTL=63 Protocol=ICMPv4 Checksum=63080 SrcIP=10.0.1.180 DstIP=10.0.1.135 Options=[] Padding=[]}

ICMPv4 {Contents=[..8..] Payload=[..56..] TypeCode=EchoReply Checksum=60883 Id=22016 Seq=0}

Failed to decode layer: No decoder for layer type Payload

CPU 02: MARK 0x0 FROM 2836 to-endpoint: 98 bytes (98 captured), state reply, interface lxcda6f5295b4b7, , identity 37483->31009, orig-ip 10.0.1.180, to endpoint 2836
```
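Following the trace by endpoint ID, as done above, is easy to automate. Below is a minimal Python sketch that extracts two things from saved `cilium monitor` text:

- the sequence of endpoint contexts, and
- the IP-to-identity mappings.

It relies only on the `FROM <id>` and `Successfully mapped addr=... to identity=...` strings visible in this Cilium 1.11.1 trace. Other versions may word their debug messages differently, and the helper names are hypothetical.

```python
import re
from typing import Dict, List

FROM_RE = re.compile(r"FROM (\d+) ")
IDENTITY_RE = re.compile(r"mapped addr=(\S+) to identity=(\d+)")


def endpoint_transitions(monitor_text: str) -> List[int]:
    """Return the endpoint IDs from 'FROM <id>' fields, collapsing consecutive repeats."""
    seq: List[int] = []
    for m in FROM_RE.finditer(monitor_text):
        ep = int(m.group(1))
        if not seq or seq[-1] != ep:
            seq.append(ep)
    return seq


def ip_identities(monitor_text: str) -> Dict[str, int]:
    """Collect the 'Successfully mapped addr=... to identity=...' pairs."""
    return {ip: int(ident) for ip, ident in IDENTITY_RE.findall(monitor_text)}


if __name__ == "__main__":
    # A few lines copied from the trace above.
    trace = """\
CPU 02: MARK 0x0 FROM 2836 DEBUG: Successfully mapped addr=10.0.1.180 to identity=37483
CPU 02: MARK 0x0 FROM 2836 DEBUG: Attempting local delivery for container id 3663 from seclabel 31009
CPU 02: MARK 0x0 FROM 3663 DEBUG: CT verdict: New, revnat=0
CPU 02: MARK 0x0 FROM 3663 DEBUG: Successfully mapped addr=10.0.1.135 to identity=31009
CPU 02: MARK 0x0 FROM 2836 DEBUG: CT verdict: Reply, revnat=0
"""
    print(endpoint_transitions(trace))  # [2836, 3663, 2836]
    print(ip_identities(trace))  # {'10.0.1.180': 37483, '10.0.1.135': 31009}
```

The collapsed sequence `[2836, 3663, 2836]` is the request going to endpoint 3663 and the reply coming back to endpoint 2836, the same story as the numbered comments in the trace.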

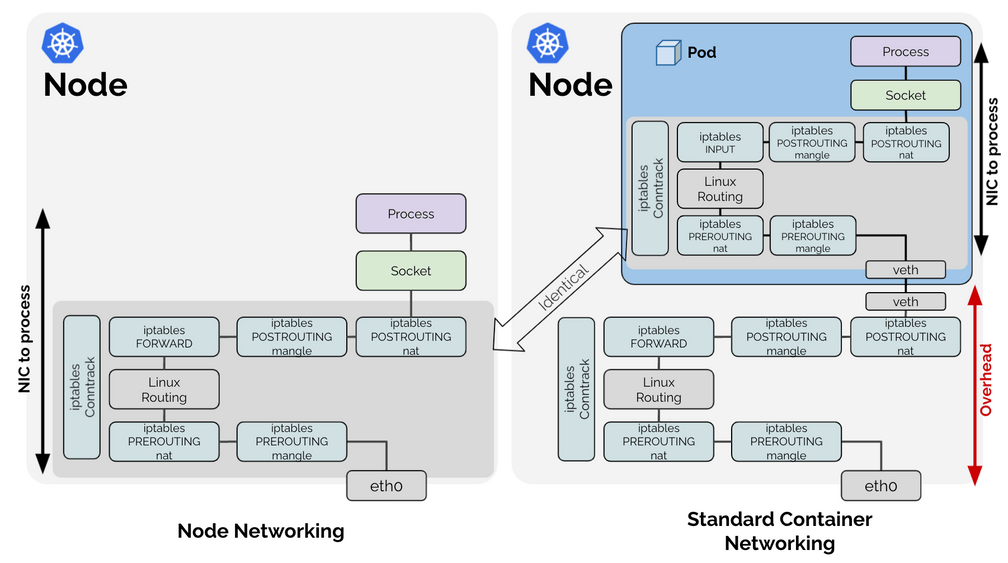

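On this point, we need to be clear that the network namespace a pod lives in has its own network stack and its own iptables rules. This is why we keep stressing that pod networking degrades network performance, and there are really two layers to that degradation:

- Previously, we attributed the degradation to introducing the underlay network.
- In fact, the packet is also processed by the stack one extra time inside the pod's namespace, which lowers performance further.

This differs from a traditional process running directly on the host, which goes through the stack only once. Here is a Cilium blog post on the topic: https://cilium.io/blog/2021/05/11/cni-benchmark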

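From the figure above, look purely at iptables processing. Pod traffic clearly pays for one extra iptables pass as overhead, so it loses some performance compared with a traditional process on the host.

With bpf_redirect_peer(), the local pod only goes through the iptables rules inside the pod and no longer needs iptables processing in the host namespace. The processing speed therefore gets close to that of node-level (host) communication. What remains is the context switch, more precisely the network-namespace crossing, between the local pod's eth0 and its corresponding lxc device.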
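To make the comparison concrete, here is a deliberately simplified, hypothetical model in Python (not Cilium code). Each path is a list of (namespace, stage) hops, and the script counts the iptables passes that happen in the host namespace. The hop names are illustrative, and the host-stack path stands for a generic veth setup that routes through the host namespace. The redirect_peer path skips the host-side lxc transmit, which matches the lxc captures in section 1.2.

```python
from typing import List, Tuple

Hop = Tuple[str, str]  # (network namespace, processing stage)

# Hypothetical, simplified hop lists for the ICMP request from pod "same" to pod "cni".
HOST_STACK_PATH: List[Hop] = [
    ("same", "ip stack + iptables (egress)"),
    ("host", "lxc veth receive"),
    ("host", "ip stack + iptables (routing/forward)"),
    ("host", "lxc veth transmit"),
    ("cni", "ip stack + iptables (ingress)"),
]

REDIRECT_PEER_PATH: List[Hop] = [
    ("same", "ip stack + iptables (egress)"),
    ("host", "lxc veth receive + tc BPF"),
    ("cni", "ip stack + iptables (ingress)"),  # entered directly via bpf_redirect_peer()
]


def iptables_passes(path: List[Hop], netns: str) -> int:
    """Count the hops in `netns` that include a stack/iptables pass."""
    return sum(1 for ns, stage in path if ns == netns and "iptables" in stage)


if __name__ == "__main__":
    for name, path in (("host stack", HOST_STACK_PATH), ("redirect_peer", REDIRECT_PEER_PATH)):
        print(name, "host-netns iptables passes:", iptables_passes(path, "host"))
    # host stack host-netns iptables passes: 1
    # redirect_peer host-netns iptables passes: 0
```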

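- 3. iptables trace

Using iptables TRACE, run the following on the relevant host, starting with the Cilium setup:

```properties
# load the IPv4 netfilter logger so TRACE records are written to the kernel log
modprobe nf_log_ipv4
# trace every ICMP packet entering the raw table's PREROUTING chain
iptables -t raw -I PREROUTING -p icmp -j TRACE
# to remove the rule again (it was inserted at position 1)
iptables -t raw -D PREROUTING 1
# watch the trace output (kern.log on Ubuntu; /var/log/messages on RHEL-family systems)
tail -f /var/log/kern.log
```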

For more on this, see: https://www.yuque.com/wei.luo/cilium/ail10y
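TRACE records in the kernel log are long, so a small parser helps list the table:chain sequence a packet walks through. Below is a minimal Python sketch. It assumes the legacy iptables TRACE prefix `TRACE: <table>:<chain>:<type>:<rulenum>`, followed by the usual `IN=`/`OUT=`/`SRC=`/`DST=` fields. The sample line is made up for illustration and was not captured from this cluster. `parse_trace` is a hypothetical helper.

```python
import re
from typing import Dict, Optional

# Legacy iptables TRACE records start with "TRACE: <table>:<chain>:<type>:<rulenum> ".
TRACE_RE = re.compile(r"TRACE: (\w+):([^:\s]+):(\w+):(\d+) (.*)")


def parse_trace(line: str) -> Optional[Dict[str, str]]:
    """Split one TRACE log line into table/chain/type/rule plus SRC/DST, or return None."""
    m = TRACE_RE.search(line)
    if m is None:
        return None
    table, chain, kind, rule, rest = m.groups()
    fields = dict(kv.split("=", 1) for kv in rest.split() if "=" in kv)
    return {
        "table": table,
        "chain": chain,
        "type": kind,
        "rule": rule,
        "src": fields.get("SRC", ""),
        "dst": fields.get("DST", ""),
    }


if __name__ == "__main__":
    # Made-up example line; real lines also carry a syslog/kernel timestamp prefix.
    sample = ("kernel: TRACE: raw:PREROUTING:policy:3 IN=lxcda6f5295b4b7 OUT= "
              "SRC=10.0.1.135 DST=10.0.1.180 LEN=84 PROTO=ICMP TYPE=8 CODE=0")
    hop = parse_trace(sample)
    if hop is not None:
        print(hop["table"], hop["chain"], hop["type"], hop["rule"])  # raw PREROUTING policy 3
```

Running `parse_trace` over every line of the log and printing `table:chain` in order gives a compact view of the chains an ICMP packet traversed.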

- [x] **4. Verification with pwru**

```properties

1. Download pwru:

wget https://github.com/cilium/pwru/releases/download/v0.0.2/pwru

chmod +x pwru && mv pwru /usr/bin

2. Requirements: [on the Ubuntu 20.04 LTS system with the 5.11 kernel used here, all of the options below are already enabled by default; check with cat /boot/config-5.11.0-051100-generic]

Requirements

pwru requires >= 5.5 kernel to run. For --output-skb >= 5.9 kernel is required.

The following kernel configuration is required.

Option Note

CONFIG_DEBUG_INFO_BTF=y Available since >= 5.3

CONFIG_KPROBES=y

CONFIG_PERF_EVENTS=y

CONFIG_BPF=y

CONFIG_BPF_SYSCALL=y

3. Show the help:

root@bpf1:~# ./pwru -h

Usage of ./pwru:

--filter-dst-ip string filter destination IP addr

--filter-dst-port uint16 filter destination port

--filter-func string filter the kernel functions that can be probed; the filter can be a regular expression (RE2)

--filter-mark uint32 filter skb mark

--filter-proto string filter L4 protocol (tcp, udp, icmp, icmp6)

--filter-src-ip string filter source IP addr

--filter-src-port uint16 filter source port

--output-meta print skb metadata

--output-relative-timestamp print relative timestamp per skb

--output-skb print skb

--output-stack print stack

--output-tuple print L4 tuple

pflag: help requested

root@bpf1:~#

Now let's capture:

root@bpf1:~# kubectl exec -it same -- ping -c 1 10.0.1.180

PING 10.0.1.180 (10.0.1.180): 56 data bytes

64 bytes from 10.0.1.180: seq=0 ttl=63 time=0.397 ms

--- 10.0.1.180 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.397/0.397/0.397 ms

root@bpf1:~#

We trace the two addresses on the same node separately. Note that both traces filter on the source address:

root@bpf1:~# ./pwru --filter-src-ip 10.0.1.135 --output-tuple

2022/01/22 12:18:01 Attaching kprobes...

586 / 586 [----------------------------------------------------------------------------------------------------------------------------] 100.00% 34 p/s

2022/01/22 12:18:19 Attached (ignored 13)

2022/01/22 12:18:19 Listening for events..

SKB PROCESS FUNC TIMESTAMP

0xffff9afcb4519b00 [ping] __ip_local_out 82857431306974 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [ping] ip_output 82857431329345 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [ping] nf_hook_slow 82857431336940 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [ping] __cgroup_bpf_run_filter_skb 82857431342831 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [ping] neigh_resolve_output 82857431346738 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [ping] __neigh_event_send 82857431349974 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [ping] eth_header 82857431356406 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [ping] skb_push 82857431359141 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [ping] dev_queue_xmit 82857431362337 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [ping] netdev_core_pick_tx 82857431366926 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [ping] netif_skb_features 82857431369631 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [ping] passthru_features_check 82857431373147 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [<empty>] skb_network_protocol 82857431375512 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [<empty>] validate_xmit_xfrm 82857431379529 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [<empty>] dev_hard_start_xmit 82857431381774 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [<empty>] skb_clone_tx_timestamp 82857431385921 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [<empty>] __dev_forward_skb 82857431389578 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [<empty>] skb_scrub_packet 82857431392654 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [<empty>] eth_type_trans 82857431395990 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [<empty>] netif_rx 82857431399286 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [<empty>] tcf_classify_ingress 82857431422440 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [<empty>] skb_ensure_writable 82857431442066 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [<empty>] skb_ensure_writable 82857431444090 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [<empty>] skb_ensure_writable 82857431445583 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [<empty>] skb_ensure_writable 82857431447036 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [<empty>] skb_do_redirect 82857431456393 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [<empty>] ip_rcv 82857431461122 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [<empty>] sock_wfree 82857431464839 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [<empty>] ip_route_input_noref 82857431468406 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [<empty>] ip_route_input_rcu 82857431471251 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [<empty>] fib_validate_source 82857431476190 10.0.1.135:0->10.0.1.180:0(icmp)

0xffff9afcb4519b00 [<empty>] ip_local_deliver 82857431481590 10.0.1.135:0->10.0.1.180:0(icmp)

^C2022/01/22 12:19:29 Received signal, exiting program..

*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*#*

root@bpf1:~# ./pwru --filter-src-ip 10.0.1.180 --output-tuple

2022/01/22 12:18:31 Attaching kprobes...

586 / 586 [----------------------------------------------------------------------------------------------------------------------------] 100.00% 36 p/s

2022/01/22 12:18:48 Attached (ignored 13)

2022/01/22 12:18:48 Listening for events..

SKB PROCESS FUNC TIMESTAMP

0xffff9afcb4519400 [ping] __ip_local_out 82857431535271 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [ping] ip_output 82857431554777 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [ping] nf_hook_slow 82857431557562 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [ping] __cgroup_bpf_run_filter_skb 82857431560338 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [ping] neigh_resolve_output 82857431562492 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [ping] __neigh_event_send 82857431564175 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [ping] eth_header 82857431566659 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [ping] skb_push 82857431568293 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [ping] dev_queue_xmit 82857431570056 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [ping] netdev_core_pick_tx 82857431572020 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [ping] netif_skb_features 82857431574133 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [ping] passthru_features_check 82857431575877 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [ping] skb_network_protocol 82857431577530 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [ping] validate_xmit_xfrm 82857431579353 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [ping] dev_hard_start_xmit 82857431580926 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [ping] skb_clone_tx_timestamp 82857431582740 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [ping] __dev_forward_skb 82857431584443 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [ping] skb_scrub_packet 82857431586016 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [ping] eth_type_trans 82857431587759 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [<empty>] netif_rx 82857431589562 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [<empty>] tcf_classify_ingress 82857431595734 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [<empty>] skb_ensure_writable 82857431617414 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [<empty>] skb_ensure_writable 82857431620039 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [<empty>] skb_ensure_writable 82857431621582 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [<empty>] skb_ensure_writable 82857431623305 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [<empty>] skb_do_redirect 82857431628125 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [<empty>] ip_rcv 82857431630359 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [<empty>] sock_wfree 82857431632663 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [<empty>] ip_route_input_noref 82857431634757 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [<empty>] ip_route_input_rcu 82857431636560 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [<empty>] fib_validate_source 82857431639406 10.0.1.180:0->10.0.1.135:0(icmp)

0xffff9afcb4519400 [<empty>] ip_local_deliver 82857431641780 10.0.1.180:0->10.0.1.135:0(icmp)

^C2022/01/22 12:19:31 Received signal, exiting program..

```

This part is relatively complex and requires good familiarity with the kernel source, so I won't go into the details here.
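Even without reading the kernel code in depth, the pwru output can be summarised mechanically. Below is a minimal Python sketch that assumes the column layout shown above: `SKB PROCESS FUNC TIMESTAMP`, plus the tuple printed by `--output-tuple`. It groups the probed functions per skb and checks whether `skb_do_redirect` is followed directly by `ip_rcv`.

In both traces above that is the case, with no second veth transmit (`__dev_forward_skb`) in between. This is consistent with the packet being handed straight to the receive path in the peer pod's namespace, which is what bpf_redirect_peer() does. The helper names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


def funcs_by_skb(pwru_text: str) -> Dict[str, List[str]]:
    """Group the FUNC column of `pwru` output by SKB address, keeping call order."""
    out: Dict[str, List[str]] = defaultdict(list)
    for line in pwru_text.splitlines():
        cols = line.split()
        # Data rows look like: <skb> [<process>] <func> <timestamp> <tuple>
        if len(cols) >= 3 and cols[0].startswith("0x"):
            out[cols[0]].append(cols[2])
    return dict(out)


def redirect_then_ip_rcv(funcs: List[str]) -> bool:
    """True if skb_do_redirect is immediately followed by ip_rcv."""
    return any(a == "skb_do_redirect" and b == "ip_rcv" for a, b in zip(funcs, funcs[1:]))


if __name__ == "__main__":
    # Rows copied from the first pwru trace above.
    trace = """\
SKB PROCESS FUNC TIMESTAMP
0xffff9afcb4519b00 [<empty>] tcf_classify_ingress 82857431422440 10.0.1.135:0->10.0.1.180:0(icmp)
0xffff9afcb4519b00 [<empty>] skb_do_redirect 82857431456393 10.0.1.135:0->10.0.1.180:0(icmp)
0xffff9afcb4519b00 [<empty>] ip_rcv 82857431461122 10.0.1.135:0->10.0.1.180:0(icmp)
"""
    funcs = funcs_by_skb(trace)["0xffff9afcb4519b00"]
    print(funcs)  # ['tcf_classify_ingress', 'skb_do_redirect', 'ip_rcv']
    print(redirect_then_ip_rcv(funcs))  # True
```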