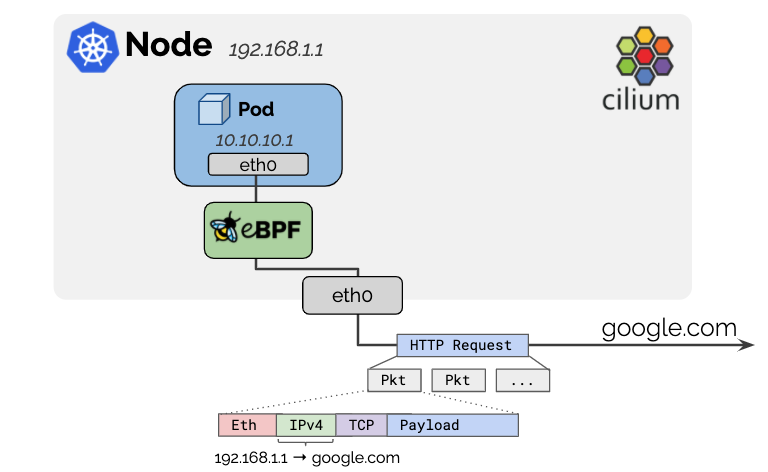

IPv4 addresses used for pods are typically allocated from RFC 1918 private address blocks and are therefore not publicly routable. Cilium automatically masquerades the source IP address of all traffic leaving the cluster to the IPv4 address of the node, because the node's IP address is already routable on the network.

root@bpf1:/home/cilium# cilium-agent -h | grep enable-ipv4-masquerade

--enable-ipv4-masquerade Masquerade IPv4 traffic from endpoints leaving the host (default true)

root@bpf1:/home/cilium#

ENV: Cilium Native Routing [Host Routing feature enabled]

root@bpf1:~# kubectl -nkube-system logs cilium-6hdtv | grep enable-ipv4-masquerade

level=info msg=" --enable-ipv4-masquerade='true'" subsys=daemon

root@bpf1:~#

Setting the routable CIDR

The default behavior is to exclude any destination within the IP allocation CIDR of the local node. If the pod IPs are routable across a wider network, that network can be specified with the option ipv4-native-routing-cidr: 10.0.0.0/8, in which case no destination within that CIDR is masqueraded.

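// By default, every destination inside the CIDR is excluded, so once the cluster is set up, cross-node traffic between the pods we create needs no NAT. To exempt a wider range of addresses from NAT, set the ipv4-native-routing-cidr option. It is not configured in our environment yet, so only destinations inside the CIDR are exempt from NAT.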

root@bpf1:~# kubectl -nkube-system logs cilium-6hdtv | grep ipv4-native-routing-cidr

level=info msg=" --ipv4-native-routing-cidr=''" subsys=daemon

root@bpf1:~#

cilium status confirms that 10.0.0.0/16 is not NATed:

Masquerading: BPF [ens33] 10.0.0.0/16 [IPv4: Enabled, IPv6: Disabled] # NAT is performed only on the ens33 interface.
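When several nodes need checking, it can help to pull the mode, the devices, and the exclusion CIDR out of that status line programmatically. The following Python sketch assumes the line has exactly the shape shown above; the function name `parse_masquerading` and the regular expression are illustrative and not part of Cilium, and other Cilium versions or the iptables mode may print a different format.

```python
import re
from ipaddress import ip_network

# Pattern assumed from the sample line above; it only covers the BPF form.
_MASQ_RE = re.compile(
    r"Masquerading:\s+(?P<mode>\S+)\s+\[(?P<devices>[^\]]*)\]\s+(?P<cidr>\S+)"
)


def parse_masquerading(line):
    """Return (mode, devices, exclusion CIDR) from a `Masquerading:` line."""
    match = _MASQ_RE.search(line)
    if match is None:
        raise ValueError("unrecognized Masquerading line: " + line)
    # Assumption: multiple devices would be separated by commas.
    devices = [d.strip() for d in match.group("devices").split(",") if d.strip()]
    return match.group("mode"), devices, ip_network(match.group("cidr"))


line = "Masquerading: BPF [ens33] 10.0.0.0/16 [IPv4: Enabled, IPv6: Disabled]"
mode, devices, cidr = parse_masquerading(line)
print(mode, devices, cidr)
```

For the sample line this yields the mode `BPF`, the single device `ens33`, and the network `10.0.0.0/16`; a line in any other shape raises `ValueError` rather than being guessed at.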

[Note:]

When eBPF-masquerading is enabled, traffic from pods to the External IP of cluster nodes will also not be masqueraded. The eBPF implementation differs from the iptables-based masquerading in that respect. This limitation is tracked at GitHub issue 17177.

- 1.eBPF-based Mode

The eBPF-based implementation is the most efficient implementation. It requires Linux kernel 4.19 or newer and can be enabled with the bpf.masquerade=true helm option. [This shows up in the agent configuration as enable-bpf-masquerade: "true".]

The current implementation depends on the BPF NodePort feature. The dependency will be removed in the future (GitHub issue 13732).

Masquerading can take place only on those devices which run the eBPF masquerading program. This means that a packet sent from a pod to an outside destination will be masqueraded (to the IPv4 address of the output device) if the output device runs the program. If not specified, the program will be automatically attached to the devices selected by the BPF NodePort device detection mechanism. To change this manually, use the devices helm option. Use cilium status to determine which devices the program is running on:

root@bpf3:/home/cilium# cilium status

KVStore: Ok Disabled

Kubernetes: Ok 1.23 (v1.23.2) [linux/amd64]

Kubernetes APIs: ["cilium/v2::CiliumClusterwideNetworkPolicy", "cilium/v2::CiliumEndpoint", "cilium/v2::CiliumNetworkPolicy", "cilium/v2::CiliumNode", "core/v1::Namespace", "core/v1::Node", "core/v1::Pods", "core/v1::Service", "discovery/v1::EndpointSlice", "networking.k8s.io/v1::NetworkPolicy"]

KubeProxyReplacement: Strict [ens33 192.168.2.63 (Direct Routing)]

Host firewall: Disabled

Cilium: Ok 1.11.0 (v1.11.0-27e0848)

NodeMonitor: Listening for events on 128 CPUs with 64x4096 of shared memory

Cilium health daemon: Ok

IPAM: IPv4: 3/254 allocated from 10.0.1.0/24,

BandwidthManager: Disabled

Host Routing: BPF

Masquerading: BPF [ens33] 10.0.0.0/16 [IPv4: Enabled, IPv6: Disabled] # NAT is performed only on the ens33 interface. [There are two modes: eBPF, and iptables in legacy mode.]

Controller Status: 24/24 healthy

Proxy Status: OK, ip 10.0.1.72, 0 redirects active on ports 10000-20000

Hubble: Ok Current/Max Flows: 4095/4095 (100.00%), Flows/s: 14.78 Metrics: Disabled

Encryption: Disabled

Cluster health: 2/3 reachable (2022-02-12T09:11:46Z)

Name IP Node Endpoints

bpf1 192.168.2.61 reachable unreachable

root@bpf3:/home/cilium#

The eBPF-based masquerading can masquerade packets of the following IPv4 L4 protocols:

- TCP
- UDP
- ICMP (only Echo request and Echo reply)

By default, all packets from a pod destined to an IP address outside of the ipv4-native-routing-cidr range are masqueraded, except for packets destined to other cluster nodes. The exclusion CIDR is shown in the above output of cilium status (10.0.0.0/16).

[Note:] In short, two kinds of destination are not NATed in eBPF mode: addresses inside the exclusion CIDR, and node IPs, including the External IP of cluster nodes. The latter differs from the iptables-based masquerading and is tracked at GitHub issue 17177: https://github.com/cilium/cilium/issues/17177
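The exclusion rules described above can be modelled in a few lines. This is a minimal Python sketch of the decision, not Cilium's datapath code: the function name `should_masquerade` is hypothetical, the CIDR and node IPs are taken from the `cilium status` output above, 10.0.2.15 is a made-up pod address inside that CIDR, and 8.8.8.8 merely stands in for an arbitrary external destination.

```python
from ipaddress import ip_address, ip_network


def should_masquerade(dst, exclusion_cidr, node_ips):
    """Model of the default rule: source-NAT unless the destination is excluded.

    Hypothetical helper for illustration only; not a Cilium API.
    """
    addr = ip_address(dst)
    if addr in ip_network(exclusion_cidr):
        return False  # inside the exclusion (native routing) CIDR
    if addr in {ip_address(ip) for ip in node_ips}:
        return False  # cluster nodes are reached without NAT
    return True       # anything else leaves with the node's IPv4 address


EXCLUSION_CIDR = "10.0.0.0/16"               # from the `cilium status` output
NODE_IPS = ["192.168.2.61", "192.168.2.63"]  # node IPs seen in that output

for dst in ("10.0.2.15", "192.168.2.61", "8.8.8.8"):
    print(dst, should_masquerade(dst, EXCLUSION_CIDR, NODE_IPS))
```

Only the last destination is masqueraded in this model; the first falls inside the exclusion CIDR and the second is a cluster node.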

- [x] **3.fine-grained control Mode**

```properties

# Enabled in Helm with ipMasqAgent.enabled=true, which shows up in the agent configuration as:

enable-ip-masq-agent: "true"

```

To allow more fine-grained control, Cilium implements ip-masq-agent in eBPF which can be enabled with the ipMasqAgent.enabled=true helm option.

The eBPF-based ip-masq-agent supports the nonMasqueradeCIDRs and masqLinkLocal options set in a configuration file. A packet sent from a pod to a destination which belongs to any CIDR from the nonMasqueradeCIDRs is not going to be masqueraded. If the configuration file is empty, the agent will provision the following non-masquerade CIDRs:

10.0.0.0/8

172.16.0.0/12

192.168.0.0/16

100.64.0.0/10

192.0.0.0/24

192.0.2.0/24

192.88.99.0/24

198.18.0.0/15

198.51.100.0/24

203.0.113.0/24

240.0.0.0/4

In addition, if the masqLinkLocal is not set or set to false, then 169.254.0.0/16 is appended to the non-masquerade CIDRs list.

The agent uses Fsnotify to track updates to the configuration file, so the original resyncInterval option is unnecessary.

After enabling ipMasqAgent, we can configure it through a ConfigMap:

$ cat agent-config/config

nonMasqueradeCIDRs:

- 10.0.0.0/8

- 172.16.0.0/12

- 192.168.0.0/16

masqLinkLocal: false

$ kubectl create configmap ip-masq-agent --from-file=agent-config --namespace=kube-system

$ # Wait ~60s until the ConfigMap is mounted into a cilium pod

$ kubectl -n kube-system exec -ti cilium-xxxxx -- cilium bpf ipmasq list

IP PREFIX/ADDRESS

10.0.0.0/8

169.254.0.0/16

172.16.0.0/12

192.168.0.0/16

- 4.NOTE

```properties
QoS Class:       BestEffort
Node-Selectors:
Tolerations:     node.kubernetes.io/disk-pressure:NoSchedule op=Exists
                 node.kubernetes.io/memory-pressure:NoSchedule op=Exists
                 node.kubernetes.io/not-ready:NoExecute op=Exists
                 node.kubernetes.io/pid-pressure:NoSchedule op=Exists
                 node.kubernetes.io/unreachable:NoExecute op=Exists
                 node.kubernetes.io/unschedulable:NoSchedule op=Exists
Events:
  Type     Reason          Age               From     Message
  Normal   SandboxChanged  32m               kubelet  Pod sandbox changed, it will be killed and re-created.
  Normal   Pulled          32m               kubelet  Container image "burlyluo/nettoolbox" already present on machine
  Normal   Created         32m               kubelet  Created container nettoolbox
  Normal   Started         32m               kubelet  Started container nettoolbox
  Normal   Killing         103s              kubelet  Stopping container nettoolbox
  Warning  FailedKillPod   7s (x8 over 73s)  kubelet  error killing pod: failed to "KillPodSandbox" for "d2efe3c4-cedc-43ed-ad3f-0e4af2f74654" with KillPodSandboxError: "rpc error: code = Unknown desc = networkPlugin cni failed to teardown pod \"cni-89wbx_default\" network: failed to find plugin \"cilium-cni\" in path [/opt/cni/bin]"
```

With Cilium, delete the application Pods first and remove the Cilium CNI afterwards: as the FailedKillPod event shows, once the cilium-cni plugin is gone from /opt/cni/bin the kubelet can no longer tear down the Pod's network. Other CNIs do not seem to have this restriction.
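Returning to the fine-grained control mode in section 3, the way the non-masquerade list is assembled can be sketched in Python. This is an illustrative model under stated assumptions, not the agent's implementation: the function name `effective_non_masq_cidrs` is hypothetical, the configuration is represented as an already parsed dict, and a missing or empty `nonMasqueradeCIDRs` key is treated the same as an empty configuration file.

```python
from ipaddress import ip_network

# Defaults provisioned when the configuration file is empty (list from section 3).
DEFAULT_NON_MASQ = [
    "10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16", "100.64.0.0/10",
    "192.0.0.0/24", "192.0.2.0/24", "192.88.99.0/24", "198.18.0.0/15",
    "198.51.100.0/24", "203.0.113.0/24", "240.0.0.0/4",
]
LINK_LOCAL = "169.254.0.0/16"


def effective_non_masq_cidrs(config):
    """Return the sorted CIDR strings that would be exempt from masquerading."""
    # Assumption: no CIDRs configured behaves like an empty configuration file.
    cidrs = list(config.get("nonMasqueradeCIDRs") or DEFAULT_NON_MASQ)
    # Link-local is appended unless masqLinkLocal is explicitly true.
    if not config.get("masqLinkLocal", False) and LINK_LOCAL not in cidrs:
        cidrs.append(LINK_LOCAL)
    return [str(net) for net in sorted(ip_network(c) for c in cidrs)]


# The ConfigMap contents shown in section 3.
config = {
    "nonMasqueradeCIDRs": ["10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16"],
    "masqLinkLocal": False,
}
for cidr in effective_non_masq_cidrs(config):
    print(cidr)
```

For the ConfigMap from section 3 the model produces the same four prefixes that `cilium bpf ipmasq list` reports there, with 169.254.0.0/16 added because masqLinkLocal is false.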