Part I Introduction to a problem

Vision is one of the senses. Having vision (or sight) means being able to see, and seeing gives animals knowledge of the world. Some simple animals can only tell light from dark, but in vertebrates the visual system is able to form images. Long ago, when the first animals evolved the ability to perceive light patterns from the outside environment and interpret them as images, it gave them a distinct evolutionary advantage: finding food and escaping predators was easier with vision. Visual perception is useful in robots as well, for navigation, manipulation and other tasks. But how can we add vision to our robot if it doesn’t have eyes?

Part II Explaining the knowledge

How do animals see the world?

In order for us to see, light enters our eyes through the black spot in the middle, called the pupil, which is basically a hole in the eye. The pupil can change size with the help of a muscular ring called the iris. By widening and narrowing the pupil, the iris controls the amount of light that enters the eye. Once light has passed through the pupil, the lens and the fluid in the eye, it lands on the back part of the eye, called the retina. The retina uses light-sensitive cells called rods and cones to detect light. The rods are extra sensitive to light and help us see when it’s dark. The cones help us see color. There are three types of cones, each most sensitive to a different color of light: red, green, or blue. When light of a suitable wavelength hits a rod or a cone, a signal is sent down the optic nerve to the brain. The optic nerve is a bundle of nerve fibres from all over the retina.

When the information from the light leaves the retina, it goes to the brain. It travels along the optic nerve, through the optic chiasm, until it reaches the visual cortex at the rear of the brain. The information is then processed to find the shapes and colors of objects. From that and from memory, the brain can tell what kind of object it is looking at. For example, it can tell a tree from a house. The pathway along which this kind of information flows is called the ventral stream.

The brain can also tell where objects are. For example, it can tell how far away an object is (this is called depth perception). This is needed for hand-eye coordination, such as when catching a ball or manipulating objects. The pathway along which this kind of information flows is called the dorsal stream.

How do machines see the world?

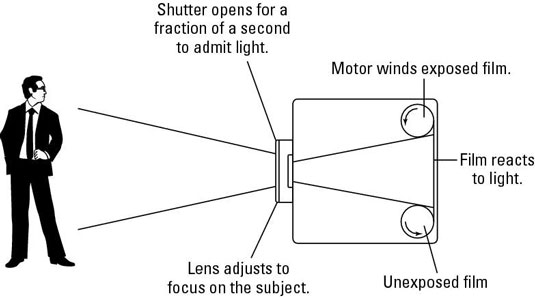

It will not come as a huge surprise to students taking a course about a bionic robot that cameras are in fact very similar to biological eyes in their working principle. In an old film camera, pressing a button (the shutter button) makes a hole (the aperture) open briefly at the front of the camera, allowing light to enter through the lens (a thick piece of glass or plastic mounted on the front). The light causes reactions in the chemicals on the film, thus recording a picture of the scene in front of you.

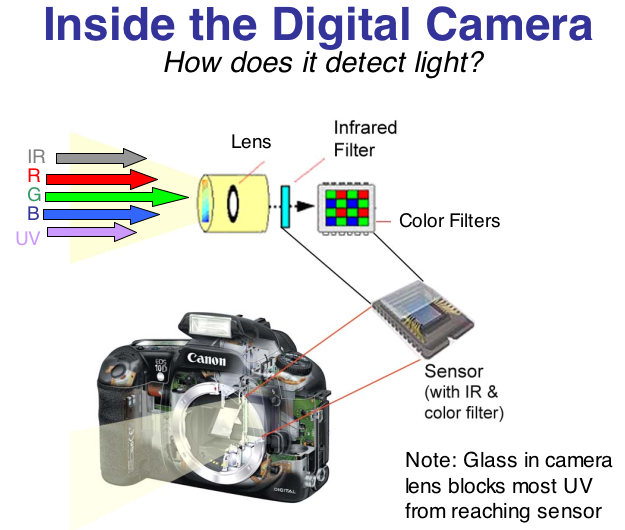

Analog cameras helped us capture memorable moments for decades before, relatively recently, they were largely replaced by digital cameras. In a digital camera, the light entering through the lens falls on an image sensor, which breaks it up into millions of pixels (a pixel is the smallest element of a digital picture). The sensor measures the color and brightness of each pixel and stores it as a number.

A digital photograph is effectively an enormously long string of numbers describing the exact details of each pixel it contains.
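To make this concrete, here is a minimal Python sketch of a tiny 2×2 color image stored as numbers. The 0 to 255 range and the red, green, blue ordering are a common convention assumed here for illustration, not the format of any particular camera, and the names `image`, `brightness` and `flat` are hypothetical.

```python
# A tiny 2x2 image: each pixel is a (red, green, blue) triple, 0-255 per channel.
# This layout is an assumed, common convention, not a specific camera's format.
image = [
    [(255, 0, 0), (0, 255, 0)],      # top row: a red pixel, a green pixel
    [(0, 0, 255), (255, 255, 255)],  # bottom row: a blue pixel, a white pixel
]


def brightness(pixel):
    """Return a simple brightness estimate: the average of the three channels."""
    red, green, blue = pixel
    return (red + green + blue) / 3


# Flatten the image into one long list of numbers, row by row, pixel by pixel.
flat = [value for row in image for pixel in row for value in pixel]

print(flat)
print(brightness(image[1][1]))
```

Even this four-pixel picture needs twelve numbers; a real photograph with millions of pixels needs millions of them.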

Part III Solving a problem

Task 1: Check environment light levels

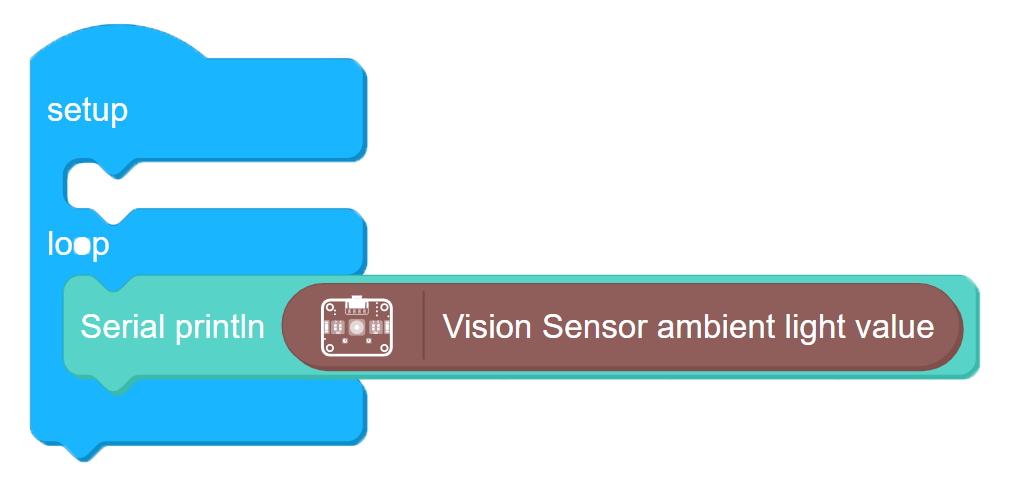

The Vision sensor module in the Bittle Sensor pack is a digital camera sensor coupled with a microcontroller. As a result, it can not only capture an image but also process it and output a result, for example proximity to an obstacle, ambient light levels, the location of certain objects and so on. For the first task, we will use the Vision sensor to measure light levels in the environment and display them on the Serial monitor. Connect the Vision sensor to the Grove I2C socket on the Bittle mainboard and upload the following code:


5-1

Task 2: Make a simple obstacle avoidance program

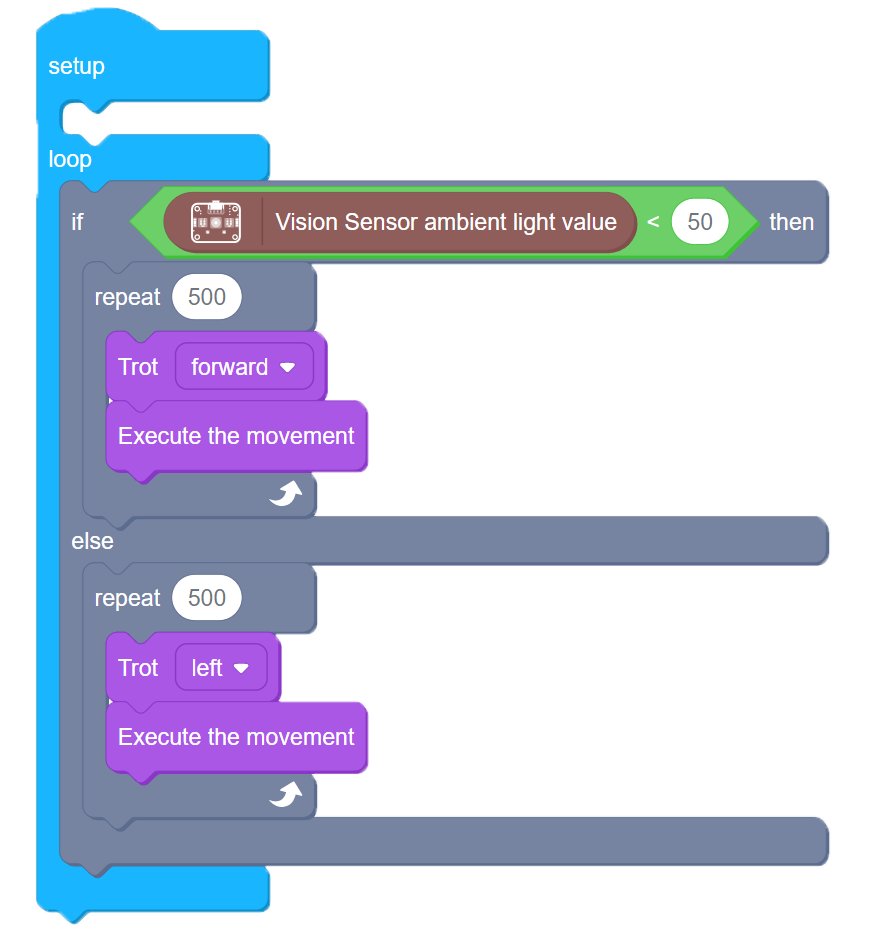

Next, we’re going to use the Vision sensor to detect obstacles in front of Bittle and steer the robot away from them. The Vision sensor operates more slowly than the Ultrasonic sensor, which is why, when running the following code, the robot is going to stop for a second to measure the distance to the nearest object in front of it

5-2

and, if it is less than the pre-set threshold, continue trotting forward. Otherwise, it will turn left.
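The decision step on its own can be sketched in a few lines of Python. This is only an illustration of the rule as worded above, not the block program itself: the function name `choose_action`, the action strings and the threshold value of 100 are all hypothetical, and the direction of the comparison is taken directly from the text, so check it against the readings your own sensor reports.

```python
def choose_action(reading, threshold):
    """Pick the next move from one sensor reading, following the rule in the text."""
    if reading < threshold:
        return "trot forward"
    return "turn left"


THRESHOLD = 100  # placeholder value; tune it by watching real sensor readings

for reading in (20, 100, 180):
    print(reading, "->", choose_action(reading, THRESHOLD))
```

Keeping the decision in one small function makes it easy to try different thresholds without touching the rest of the program.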

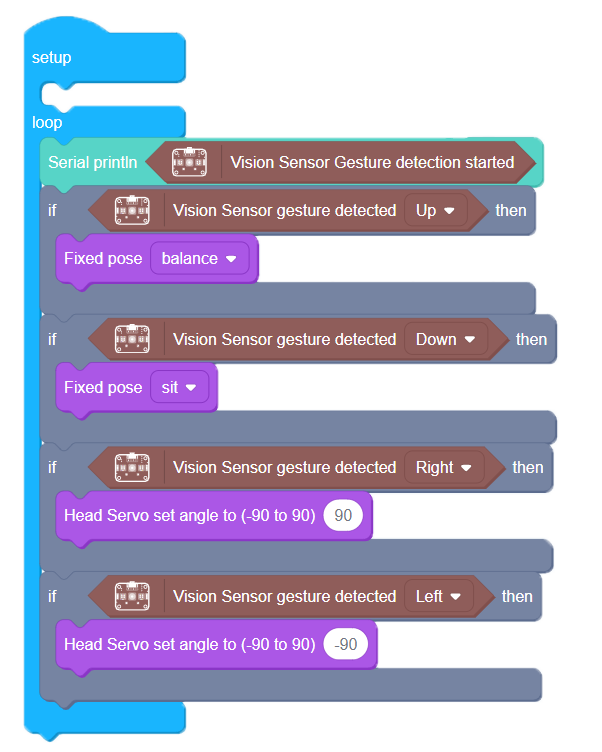

Task 3: Control Bittle with gestures

Finally, we will use the Vision sensor to detect swiping gestures and control the robot according to the detected gesture. The Vision Sensor Gesture detection started block is necessary because it receives the data from the sensor; we then use boolean blocks (e.g. Vision sensor gesture detected [Up]) to execute movement commands on Bittle.

5-3

After you upload the code, swipe right/left/up/down with an open palm in front of the Vision sensor to control Bittle. See the video below for a demonstration of the end result:

Part IV Expanding the knowledge

Measure the ambient light levels to the left and right of Bittle’s current location (using the Head servo block) and make Bittle walk in the more brightly lit direction.
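As a starting point, the comparison at the heart of this exercise might look like the Python sketch below. It assumes that a larger reading means more light and that the two readings have already been taken with the head turned left and right; the function name `brighter_direction` and the `margin` parameter are hypothetical and do not correspond to any existing block.

```python
def brighter_direction(left_level, right_level, margin=0):
    """Compare two light readings and name the brighter side.

    Assumes larger numbers mean more light. The margin ignores
    small differences that are probably just sensor noise.
    """
    if left_level > right_level + margin:
        return "left"
    if right_level > left_level + margin:
        return "right"
    return "straight"


print(brighter_direction(300, 120))            # left side is clearly brighter
print(brighter_direction(120, 300))            # right side is clearly brighter
print(brighter_direction(200, 205, margin=10)) # difference is within the margin
```

Turning the returned direction into head and walking movements is left for your block program.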