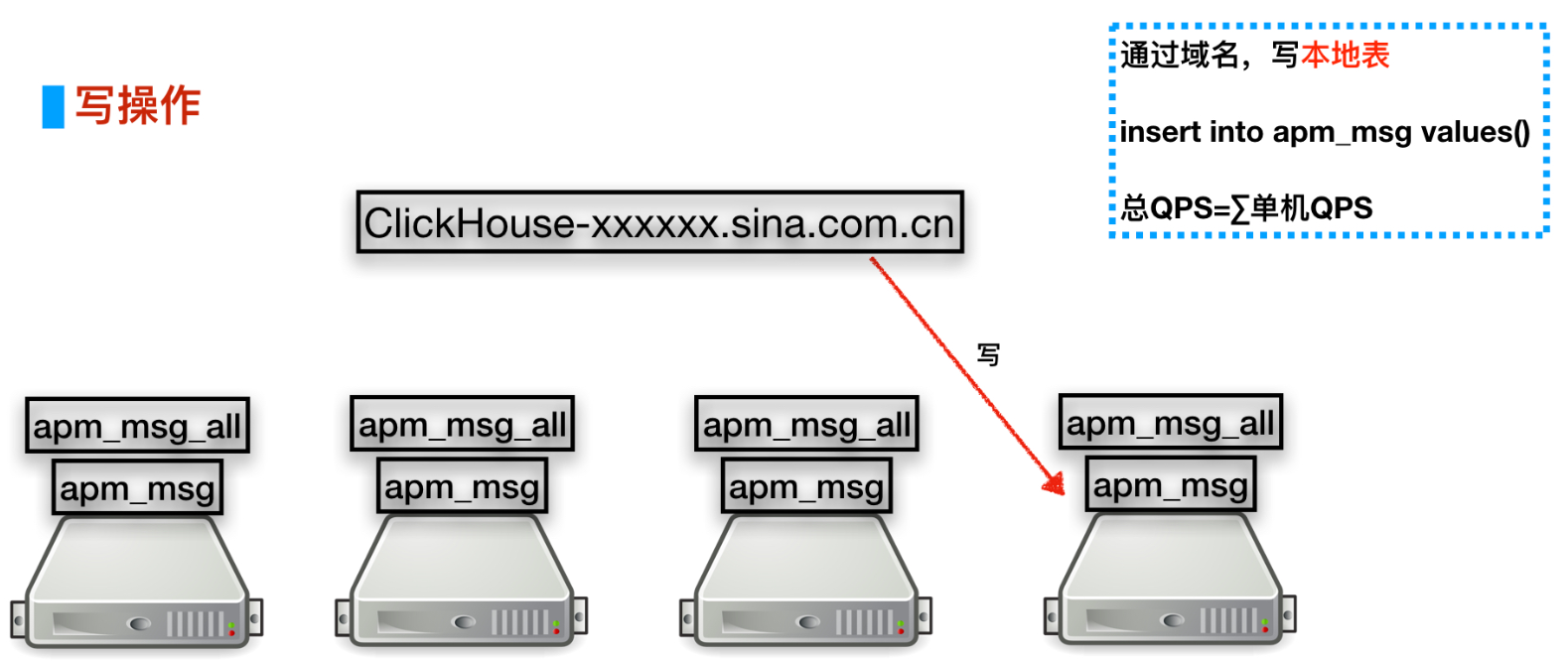

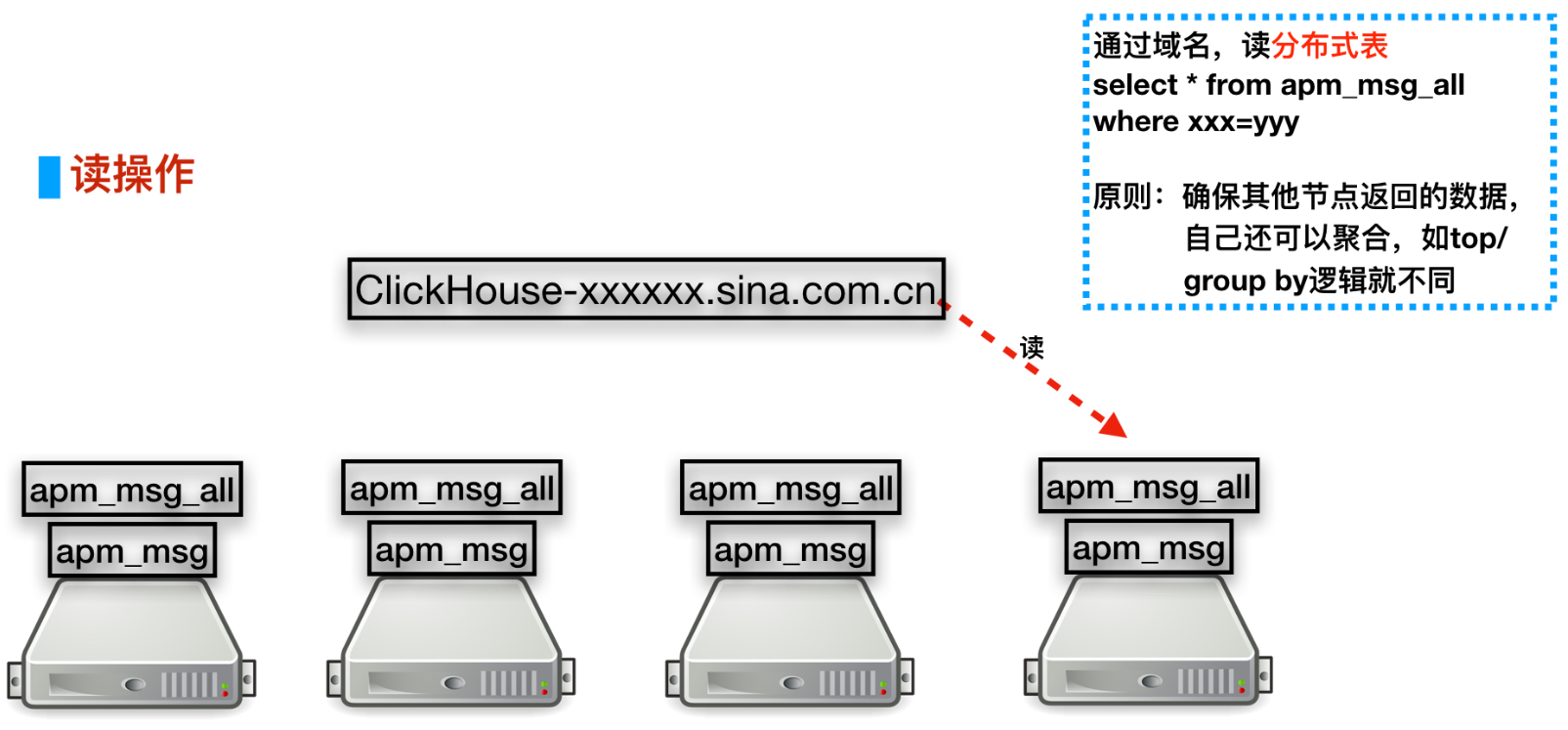

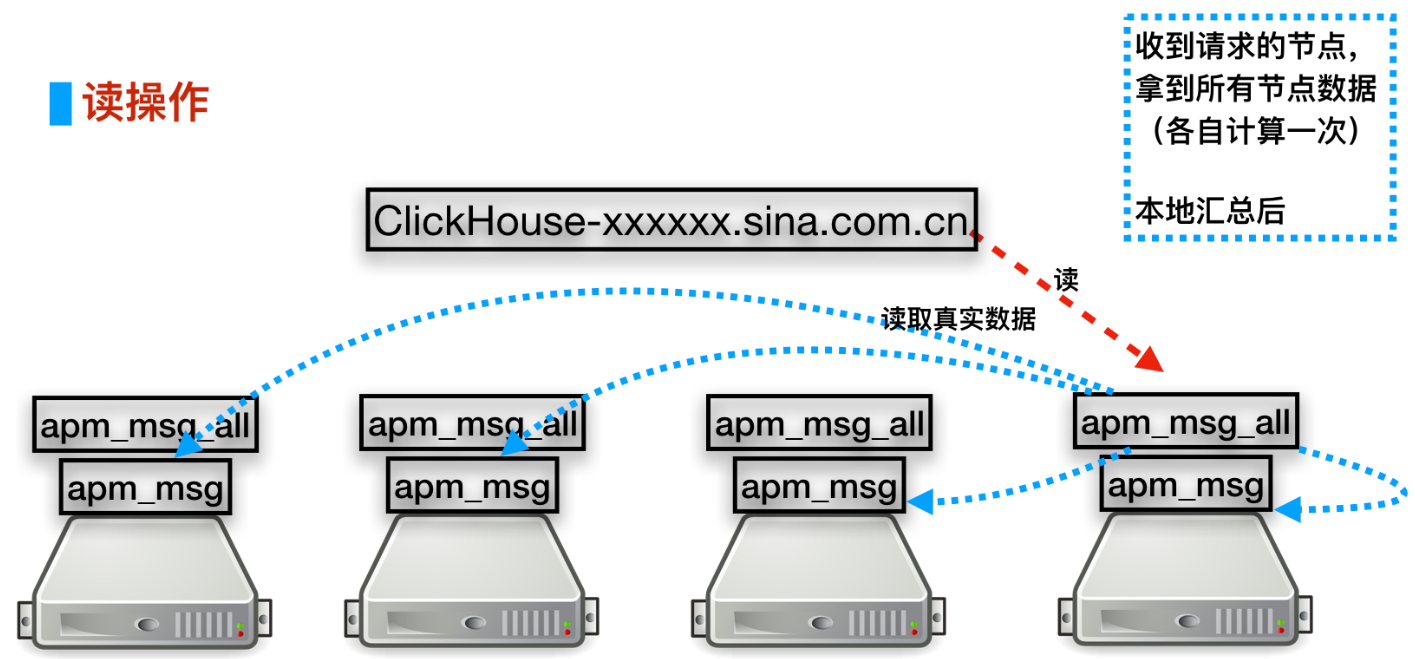

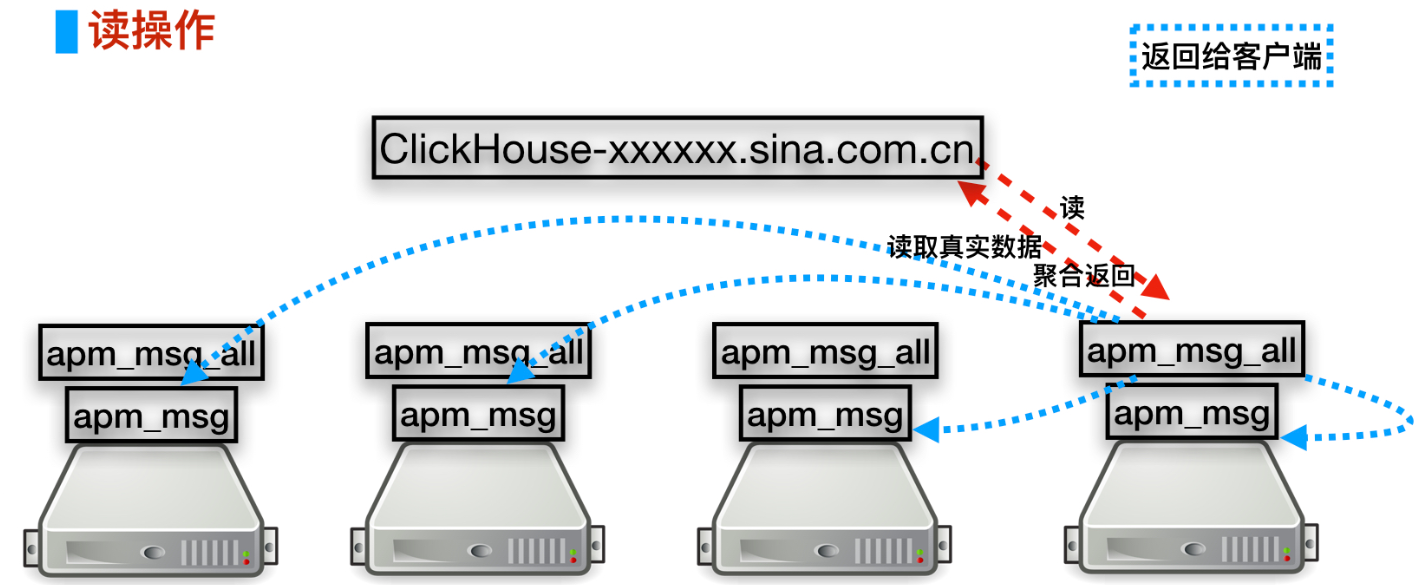

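A global configuration file makes the cluster nodes aware of one another. Each node maintains its own data, and users are responsible for writing the data themselves.

Horizontal scalability is good: query and write capacity grows linearly with the number of machines.

In other words, the more machines in the cluster, the higher its performance; ideally, cluster performance = ∑ single-node performance.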

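Known problems

Writing directly to the distributed table can leave data unevenly distributed.

When a node is added, historical data is not migrated automatically, which leaves the cluster unbalanced.

Excessive GROUP BY causes a large amount of data exchange between nodes.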
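
The second problem above can be illustrated with a small Python sketch. It is only a toy model, not ClickHouse code: the function name `add_shard_without_migration`, the row counts, and the assumption that new writes are spread evenly across all shards are hypothetical choices made for illustration.

```python
def add_shard_without_migration(existing_rows, new_rows):
    """Model adding one empty shard, then writing new_rows evenly.

    existing_rows: rows already stored on each old shard (never moved).
    new_rows: rows written after the new shard joins (assumed evenly spread).
    """
    shards = list(existing_rows) + [0]  # the new shard starts empty
    base, extra = divmod(new_rows, len(shards))
    # Every shard receives `base` rows; the first `extra` shards get one more.
    return [rows + base + (1 if i < extra else 0)
            for i, rows in enumerate(shards)]


# Two old shards with 1000 rows each, then 900 new rows after adding a shard.
print(add_shard_without_migration([1000, 1000], 900))  # [1300, 1300, 300]
```

Even with perfectly even new writes, the new shard stays far behind the old ones until the historical data is redistributed by hand.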

Single-machine deployment: single shard

    <clickhouse_remote_servers>
        <dtstack>
            <shard>
                <internal_replication>true</internal_replication>
                <replica>
                    <host>172.16.8.179</host>
                    <port>9000</port>
                </replica>
            </shard>
        </dtstack>
    </clickhouse_remote_servers>

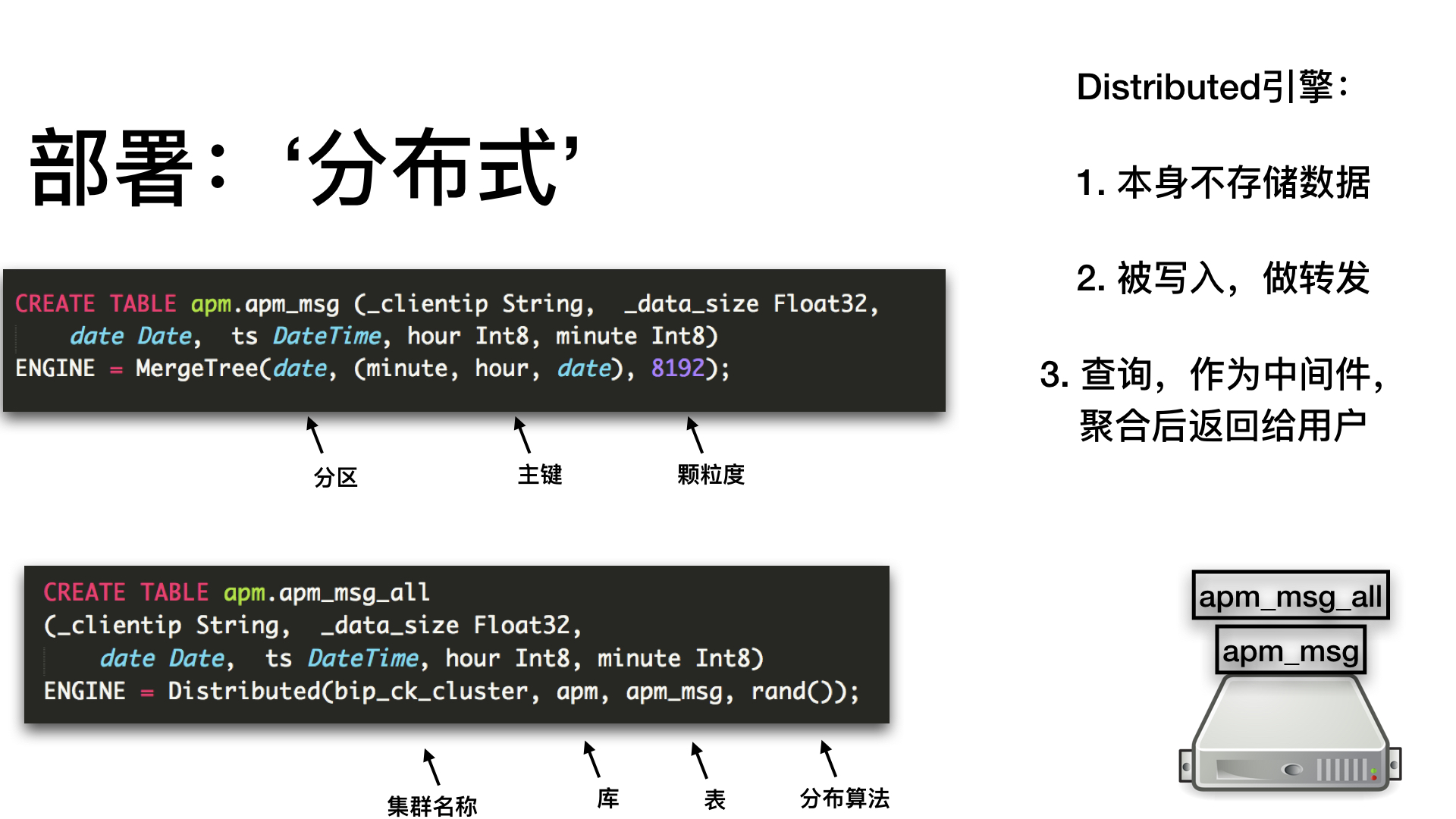

Distributed table configuration

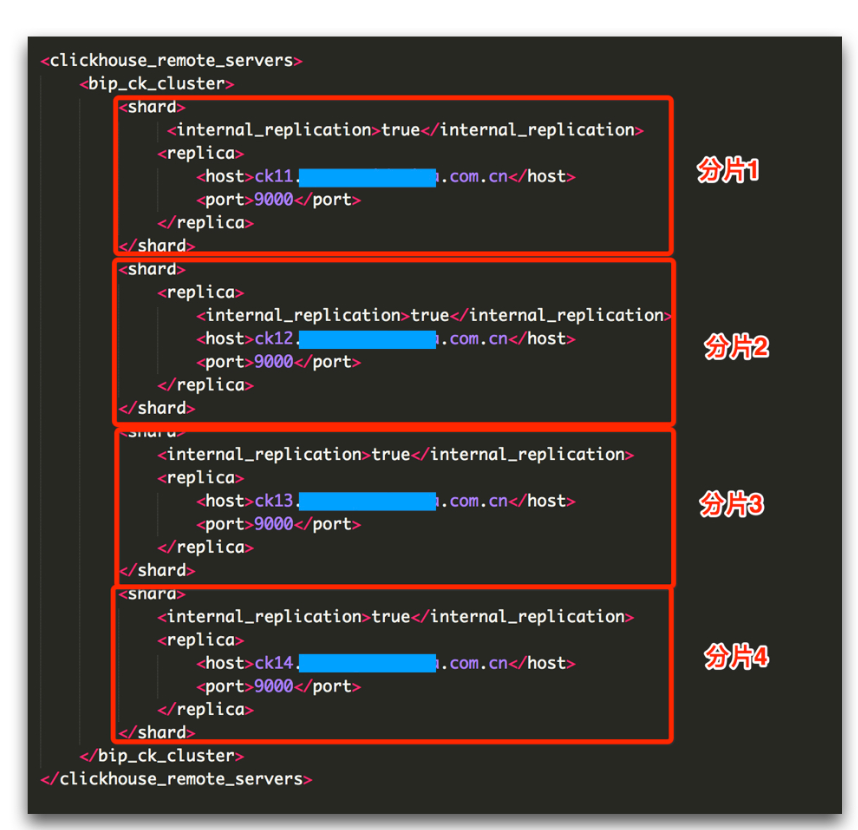

Distributed configuration

Two shards, each with two replicas

    <clickhouse_remote_servers>
        <test>
            <shard>
                <weight>1</weight>
                <internal_replication>true</internal_replication>
                <replica>
                    <host>172.16.8.68</host>
                    <port>9000</port>
                </replica>
                <replica>
                    <host>172.16.8.69</host>
                    <port>9000</port>
                </replica>
            </shard>
            <shard>
                <weight>2</weight>
                <internal_replication>true</internal_replication>
                <replica>
                    <host>172.16.8.71</host>
                    <port>9000</port>
                </replica>
                <replica>
                    <host>172.16.8.72</host>
                    <secure>1</secure>
                    <port>9440</port>
                </replica>
            </shard>
        </test>
    </clickhouse_remote_servers>
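
A configuration like the one above is easy to get wrong by hand, so it can help to summarize it before deploying. The following Python sketch parses the same XML with the standard library and lists each shard's weight and replicas. The helper name `summarize_cluster` is hypothetical, and the defaults it applies for a missing `weight` (1) and a missing `internal_replication` (false) are assumptions of this sketch.

```python
import xml.etree.ElementTree as ET

# The two-shard, two-replica cluster definition from the text.
CONFIG = """
<clickhouse_remote_servers><test>
  <shard><weight>1</weight><internal_replication>true</internal_replication>
    <replica><host>172.16.8.68</host><port>9000</port></replica>
    <replica><host>172.16.8.69</host><port>9000</port></replica>
  </shard>
  <shard><weight>2</weight><internal_replication>true</internal_replication>
    <replica><host>172.16.8.71</host><port>9000</port></replica>
    <replica><host>172.16.8.72</host><secure>1</secure><port>9440</port></replica>
  </shard>
</test></clickhouse_remote_servers>
"""


def summarize_cluster(xml_text, cluster):
    """Return one dict per shard: weight, internal_replication, replicas."""
    cluster_node = ET.fromstring(xml_text).find(cluster)
    if cluster_node is None:
        raise KeyError(f"cluster {cluster!r} not found")
    shards = []
    for shard in cluster_node.findall("shard"):
        replicas = [
            # (host, port, uses a secure connection)
            (r.findtext("host"), int(r.findtext("port")),
             r.findtext("secure") == "1")
            for r in shard.findall("replica")
        ]
        shards.append({
            "weight": int(shard.findtext("weight", default="1")),
            "internal_replication":
                shard.findtext("internal_replication", default="false") == "true",
            "replicas": replicas,
        })
    return shards


for number, shard in enumerate(summarize_cluster(CONFIG, "test"), start=1):
    print(number, shard["weight"], shard["replicas"])
```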

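- `dtstack`: the cluster name; it must match the cluster name used when creating tables.
- `shard`: defines a shard.
- `weight`: the weight of the shard, which controls the proportion of data sent to it.
- `internal_replication`: setting this to `true` is recommended for ReplicatedMergeTree tables. Data is then written to one replica and synchronized to the others through replication. If it is set to `false`, the distributed table itself inserts the data into every replica (when several replicas are configured).
- `replica`: defines a replica.
- `host`: the IP address or domain name of the host the replica runs on.
- `port`: the port used by the replica's server process.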
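
To make the effect of `weight` concrete, here is a minimal Python sketch of weight-based routing as described in the ClickHouse documentation for the Distributed engine: the sharding value is taken modulo the total weight, and the row goes to the shard whose half-open interval of remainders contains the result. The function name `pick_shard` and the zero-based shard indices are choices made for this sketch; it is a model of the rule, not the server's implementation.

```python
from bisect import bisect_right
from collections import Counter
from itertools import accumulate


def pick_shard(sharding_value, weights):
    """Return the zero-based index of the shard that receives the row."""
    remainder = sharding_value % sum(weights)
    # Running totals mark the exclusive upper bound of each shard's
    # interval, e.g. weights [1, 2] give bounds [1, 3]:
    # remainder 0 -> shard 0, remainders 1 and 2 -> shard 1.
    upper_bounds = list(accumulate(weights))
    return bisect_right(upper_bounds, remainder)


# With weights 1 and 2, as in the configuration above, the second shard
# receives two thirds of evenly spread sharding values.
counts = Counter(pick_shard(value, [1, 2]) for value in range(3000))
print(sorted(counts.items()))  # [(0, 1000), (1, 2000)]
```

With `rand()` as the sharding key, as in the test SQL, the split is only approximately 1:2 for any finite number of rows.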

Test SQL

Create the replicated table:

    CREATE TABLE local_1.test (
        at_date Date,
        at_timestamp Float64,
        appname String,
        keeptype String
    ) ENGINE = ReplicatedMergeTree('/ck/tables/1/test/{shard}/hits', '{replica}')
    PARTITION BY concat(toString(at_date), keeptype)
    ORDER BY (at_date, at_timestamp, intHash64(toInt64(at_timestamp)))
    SAMPLE BY intHash64(toInt64(at_timestamp))
    SETTINGS index_granularity = 8192;

Create the distributed table:

    CREATE TABLE distributed_1.test (
        at_date Date,
        at_timestamp Float64,
        appname String,
        keeptype String
    ) ENGINE = Distributed(dtstack, 'local_1', 'test', rand());

Insert data:

    INSERT INTO local_1.test (at_date, at_timestamp, appname, keeptype)
    VALUES ('2019-09-28', 156960000739, 'test', 'business');

References

ClickHouse Chinese community:

https://clickhouse.yandex/docs/zh/operations/table_engines/distributed/

https://clickhouse.yandex/docs/zh/operations/table_engines/replication/

ClickHouse English community:

https://clickhouse.yandex/docs/en/operations/table_engines/distributed/

https://clickhouse.yandex/docs/en/operations/table_engines/replication/