Local root certificate expired

On CentOS the error reads: The certificate issuer's certificate has expired. Check your system date and time.

sudo yum install ca-certificates

sudo update-ca-trust extract

Podman repository for CentOS 7:

https://download.opensuse.org/repositories/devel:/kubic:/libcontainers:/stable/CentOS_7/x86_64/

Internal environment:

Install Podman:

http://mirror.centos.org/centos/7/extras/x86_64/Packages/

wget http://mirror.centos.org/centos/7/os/x86_64/Packages/nftables-0.8-14.el7.x86_64.rpm

wget http://mirror.centos.org/centos/7/os/x86_64/Packages/libnftnl-1.0.8-3.el7.x86_64.rpm

yum localinstall -y libnftnl-1.0.8-3.el7.x86_64.rpm

yum localinstall -y nftables-0.8-14.el7.x86_64.rpm

wget http://mirror.centos.org/centos/7/extras/x86_64/Packages/fuse3-libs-3.6.1-4.el7.x86_64.rpm

yum localinstall -y fuse3-libs-3.6.1-4.el7.x86_64.rpm

wget http://mirror.centos.org/centos/7/extras/x86_64/Packages/fuse3-devel-3.6.1-4.el7.x86_64.rpm

yum localinstall -y fuse3-devel-3.6.1-4.el7.x86_64.rpm

wget http://mirror.centos.org/centos/7/extras/x86_64/Packages/fuse-overlayfs-0.7.2-6.el7_8.x86_64.rpm

yum localinstall -y fuse-overlayfs-0.7.2-6.el7_8.x86_64.rpm

wget https://linuxsoft.cern.ch/cern/centos/7/updates/x86_64/Packages/audit-libs-2.8.4-4.el7.x86_64.rpm

yum localinstall -y audit-libs-2.8.4-4.el7.x86_64.rpm

wget http://mirror.centos.org/centos/7/extras/x86_64/Packages/container-selinux-2.119.2-1.911c772.el7_8.noarch.rpm

yum localinstall -y container-selinux-2.119.2-1.911c772.el7_8.noarch.rpm
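The download-then-install pairs above all follow the same pattern, so they could be driven from a list of URLs. A minimal sketch, assuming a hypothetical helper named `install_rpms` whose first argument is a dry-run flag; neither the name nor the flag comes from the original notes:

```shell
# Hypothetical helper (not part of the original notes).
# Usage: install_rpms <dry_run: 1|0> <rpm-url>...
# With dry_run=1 it only prints the commands it would run.
install_rpms() {
    local dry_run="$1"
    shift
    local url rpm
    for url in "$@"; do
        # The RPM file name is the last path component of the URL.
        rpm="${url##*/}"
        if [ "$dry_run" = "1" ]; then
            echo "wget $url && yum localinstall -y $rpm"
        else
            # Stop at the first failure so later packages are not installed
            # on top of a missing dependency.
            { wget "$url" && yum localinstall -y "$rpm"; } || return 1
        fi
    done
}
```

Passing the URLs in dependency order (libnftnl before nftables, fuse3-libs before fuse3-devel and fuse-overlayfs) keeps the behaviour of the manual sequence.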

curl -L -o /etc/yum.repos.d/devel:kubic:libcontainers:stable.repo https://download.opensuse.org/repositories/devel:/kubic:/libcontainers:/stable/CentOS_7/devel:kubic:libcontainers:stable.repo

yum -y install runc

yum -y install podman

curl -s -L https://nvidia.github.io/nvidia-docker/centos7/nvidia-docker.repo | tee /etc/yum.repos.d/nvidia-docker.repo

yum install nvidia-container-toolkit

Verify the installation:

cat /usr/share/containers/oci/hooks.d/oci-nvidia-hook.json

Start a container as the internal admin account

curl -o /etc/yum.repos.d/rhel7.6-rootless-preview.repo

yum install -y shadow-utils46-newxidmap slirp4netns

echo "admin:10000:65535" > /etc/subuid

echo "admin:10000:65535" > /etc/subgid
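Note that the `>` redirect above replaces the whole file, which drops any ranges already assigned to other users. A minimal sketch of an append-if-missing variant, assuming a hypothetical helper named `ensure_subid_entry` (not part of the original notes):

```shell
# Hypothetical helper (not part of the original notes).
# Usage: ensure_subid_entry <file> <user> <start> <count>
# Adds "user:start:count" only when the user has no entry in the file yet.
ensure_subid_entry() {
    local file="$1" user="$2" start="$3" count="$4"
    touch "$file" || return 1
    # An existing line for this user is left untouched.
    if grep -q "^${user}:" "$file"; then
        return 0
    fi
    printf '%s:%s:%s\n' "$user" "$start" "$count" >> "$file"
}
```

For the setup in these notes it would be called once for `/etc/subuid` and once for `/etc/subgid` with `admin 10000 65535`, followed by `podman system migrate` as before.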

podman system migrate

export XDG_DATA_HOME=/home/admin/ray-pack/

podman run -v /proc:/proc -v /home/admin/logs:/home/admin/logs --pid=host -it --security-opt=seccomp=unconfined --net=host --cgroup-manager=cgroupfs registry.cn-hangzhou.aliyuncs.com/rayproject/dumb-init bash

seccomp=unconfined disables the default seccomp profile.

Start with sudo (internal environment):

https://qastack.cn/server/579555/cgroup-no-space-left-on-device

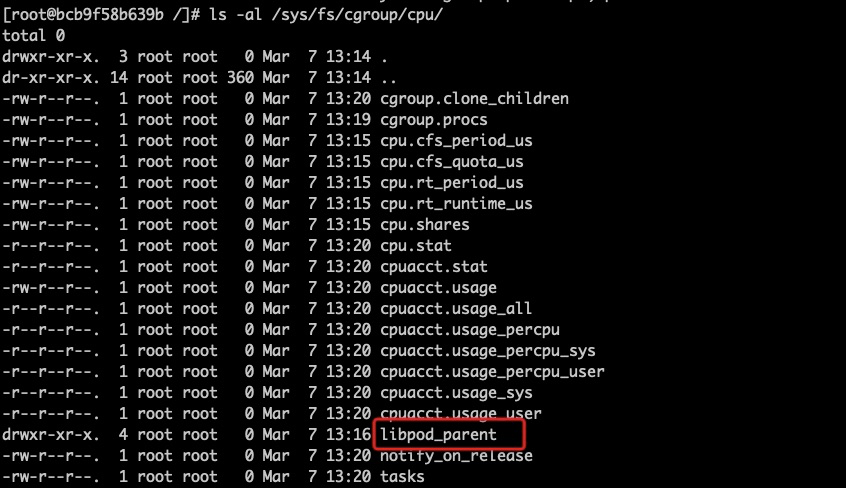

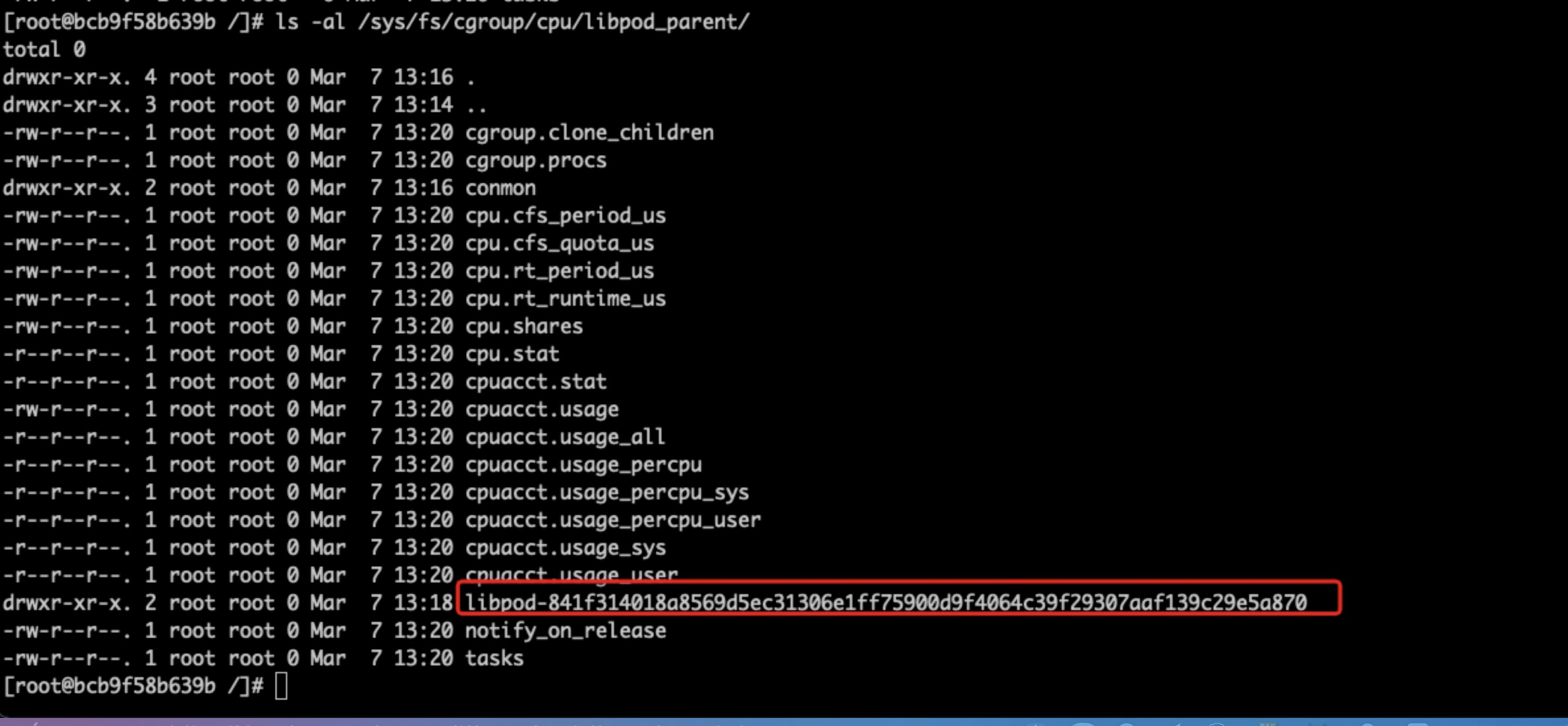

echo 0 > /sys/fs/cgroup/cpu/libpod_parent/conmon/cpuset.mems

echo "3-5,8-13,15-16,18-31" > /sys/fs/cgroup/cpu/libpod_parent/conmon/cpuset.cpus
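The two writes above hard-code the CPU and memory-node lists for one machine. A more portable variant copies the values from the parent cgroup when the child's files are empty, assuming (as the linked "no space left on device" thread suggests) that empty `cpuset.cpus` or `cpuset.mems` is the cause. A minimal sketch with a hypothetical helper named `inherit_cpuset`, which is not part of the original notes:

```shell
# Hypothetical helper (not part of the original notes).
# Usage: inherit_cpuset <parent-cgroup-dir> <child-cgroup-dir>
# Copies cpuset.cpus and cpuset.mems from the parent into the child,
# but only for files that are currently empty (or missing) in the child.
inherit_cpuset() {
    local parent="$1" child="$2" f value
    for f in cpuset.cpus cpuset.mems; do
        [ -r "$parent/$f" ] || return 1
        value=$(cat "$parent/$f")
        if [ -z "$(cat "$child/$f" 2>/dev/null)" ]; then
            printf '%s\n' "$value" > "$child/$f" || return 1
        fi
    done
}
```

With the paths used in these notes, the call would be `inherit_cpuset /sys/fs/cgroup/cpu/libpod_parent /sys/fs/cgroup/cpu/libpod_parent/conmon`, run as root.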

https://github.com/containers/podman/issues/8472#issuecomment-747421051

podman run -it --security-opt=seccomp=unconfined --net=host --cgroup-manager=cgroupfs registry.cn-hangzhou.aliyuncs.com/rayproject/dumb-init bash

Open-source environment:

Start a privileged Docker container

docker run -it -v podman_volume:/var/lib/containers --privileged --shm-size=512M ray_docker_test bash

docker_nest is a Docker image with Podman installed.

With open-source Docker, privileged mode automatically mounts cgroup as rw; otherwise it is mounted ro.

Open-source environment, non-privileged mode:

docker run -it -v /root/podman_test:/var/lib/containers --cap-add SYS_ADMIN --security-opt apparmor=unconfined --security-opt seccomp=unconfined --shm-size=512M ray_docker_test bash

--cap-add SYS_ADMIN is required.

Is --security-opt apparmor=unconfined required? Yes; without it overlayfs cannot be mounted.

Is --device /dev/fuse required?

In this mode cgroup is still mounted ro, so it has to be remounted inside the container:

umount /sys/fs/cgroup/pids

mount -t cgroup -o rw,nosuid,nodev,noexec,relatime,pids cgroup /sys/fs/cgroup/pids

declare -a arr=("pids" "cpu,cpuacct" "blkio" "freezer" "memory" "hugetlb" "net_cls,net_prio" "devices" "rdma" "cpuset" "perf_event")

Then loop through the array above and remount each controller read-write:

for i in "${arr[@]}"

do

echo "$i"

umount "/sys/fs/cgroup/$i"

mount -t cgroup -o "rw,nosuid,nodev,noexec,relatime,$i" cgroup "/sys/fs/cgroup/$i"

done
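The controller list in the array above is specific to one kernel configuration. One way to avoid hard-coding it is to read the read-only cgroup v1 mounts from `/proc/mounts`. A minimal sketch, assuming a hypothetical helper named `list_ro_cgroup_mounts` (not part of the original notes) that accepts an optional file in `/proc/mounts` format:

```shell
# Hypothetical helper (not part of the original notes).
# Usage: list_ro_cgroup_mounts [mounts-file]
# Prints the mount point of every cgroup (v1) filesystem whose option
# list contains "ro". Defaults to /proc/mounts.
list_ro_cgroup_mounts() {
    # Fields in /proc/mounts: device, mount point, fs type, options, ...
    awk '$3 == "cgroup" && $4 ~ /(^|,)ro(,|$)/ { print $2 }' "${1:-/proc/mounts}"
}
```

Each printed path ends in the controller name (for example `/sys/fs/cgroup/pids`), so its last component can be fed to the same `umount` and `mount` pair used in the loop.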

Disable the keyring in Podman

/usr/share/containers/containers.conf
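The notes only name the default config file. One way to apply the setting without editing the packaged defaults is an override file, since `/etc/containers/containers.conf` takes precedence over `/usr/share/containers/containers.conf`. A minimal sketch, assuming the installed Podman supports the `keyring` option in the `[containers]` table, and using a hypothetical helper named `write_keyring_override` (not part of the original notes):

```shell
# Hypothetical helper (not part of the original notes).
# Usage: write_keyring_override <destination-file>
# Writes a minimal containers.conf that disables kernel keyring creation.
# Refuses to overwrite an existing file so a hand-edited config is not lost.
write_keyring_override() {
    local dest="$1"
    if [ -e "$dest" ]; then
        echo "refusing to overwrite $dest" >&2
        return 1
    fi
    printf '[containers]\nkeyring = false\n' > "$dest"
}
```

A typical call would be `write_keyring_override /etc/containers/containers.conf` as root. If that file already exists, add `keyring = false` under its `[containers]` table by hand instead.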

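Start a container with Podman inside the Docker container

Use the Docker container's network, and mount directories from the Docker container into the Podman container.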

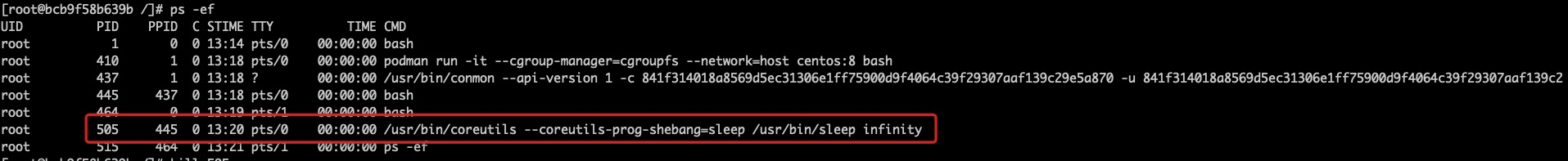

podman run -it --log-level=debug --cgroup-manager=cgroupfs --network=host centos:8 bash

GPU access:

podman run -it --cgroup-manager=cgroupfs --network=host -e NVIDIA_VISIBLE_DEVICES=0 centos:8 nvidia-smi

cgroup of the Docker container

View the Podman container's processes from inside the Docker container:

cd python/

pip install -e . --verbose

ps -ef|grep ray|grep -v bazel|awk '{print $2}'|xargs kill

export RAY_OBJECT_STORE_ALLOW_SLOW_STORAGE=1

export RAY_BACKEND_LOG_LEVEL=debug

ray start --head --port=6379

Issues:

- If -t is enabled, "Handling terminal attach" appears, and then

Performance tests:

Sequential write I/O:

fio -filename=/home/admin/ray-pack/test -direct=1 -iodepth 1 -thread -rw=write -ioengine=psync -bs=16k -size=2G -numjobs=10 -runtime=60 -group_reporting -name=mytest

Inside the container:

    fio-2.2.8
    Starting 10 threads
    Jobs: 10 (f=10): [W(10)] [100.0% done] [0KB/63952KB/0KB /s] [0/3997/0 iops] [eta 00m:00s]
    mytest: (groupid=0, jobs=10): err= 0: pid=115694: Wed Jun 9 17:16:31 2021
      write: io=3747.1MB, bw=63953KB/s, iops=3997, runt= 60011msec
        clat (usec): min=24, max=109055, avg=2499.64, stdev=14878.96
         lat (usec): min=25, max=109056, avg=2500.30, stdev=14878.94
        clat percentiles (usec):
         | 1.00th=[ 60], 5.00th=[ 76], 10.00th=[ 83], 20.00th=[ 90],
         | 30.00th=[ 95], 40.00th=[ 101], 50.00th=[ 106], 60.00th=[ 113],
         | 70.00th=[ 124], 80.00th=[ 137], 90.00th=[ 161], 95.00th=[ 247],
         | 99.00th=[98816], 99.50th=[99840], 99.90th=[100864], 99.95th=[100864],
         | 99.99th=[102912]
        bw (KB /s): min= 4896, max= 8288, per=10.01%, avg=6400.52, stdev=920.82
        lat (usec) : 50=0.32%, 100=37.45%, 250=57.31%, 500=1.88%, 750=0.31%
        lat (usec) : 1000=0.12%
        lat (msec) : 2=0.10%, 4=0.01%, 10=0.01%, 100=2.14%, 250=0.36%
      cpu : usr=0.10%, sys=0.72%, ctx=254858, majf=0, minf=0
      IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%
         submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
         complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
         issued : total=r=0/w=239866/d=0, short=r=0/w=0/d=0, drop=r=0/w=0/d=0
         latency : target=0, window=0, percentile=100.00%, depth=1
    Run status group 0 (all jobs):
      WRITE: io=3747.1MB, aggrb=63952KB/s, minb=63952KB/s, maxb=63952KB/s, mint=60011msec, maxt=60011msec
    Disk stats (read/write):
      nvme0n1: ios=2/257855, merge=0/17159, ticks=0/26128, in_queue=23601, util=4.69%

Inside the nested container:

    fio-2.2.8
    Starting 10 threads
    Jobs: 10 (f=10): [W(10)] [100.0% done] [0KB/63936KB/0KB /s] [0/3996/0 iops] [eta 00m:00s]
    mytest: (groupid=0, jobs=10): err= 0: pid=118: Wed Jun 9 17:08:55 2021
      write: io=3754.2MB, bw=63999KB/s, iops=3999, runt= 60068msec
        clat (usec): min=25, max=114379, avg=2497.62, stdev=14506.47
         lat (usec): min=25, max=114379, avg=2498.33, stdev=14506.48
        clat percentiles (usec):
         | 1.00th=[ 60], 5.00th=[ 76], 10.00th=[ 84], 20.00th=[ 93],
         | 30.00th=[ 101], 40.00th=[ 111], 50.00th=[ 122], 60.00th=[ 133],
         | 70.00th=[ 145], 80.00th=[ 177], 90.00th=[ 402], 95.00th=[ 884],
         | 99.00th=[95744], 99.50th=[98816], 99.90th=[99840], 99.95th=[100864],
         | 99.99th=[100864]
        bw (KB /s): min= 4512, max= 8608, per=10.01%, avg=6406.14, stdev=814.02
        lat (usec) : 50=0.28%, 100=27.45%, 250=57.34%, 500=6.59%, 750=2.15%
        lat (usec) : 1000=2.40%
        lat (msec) : 2=1.25%, 4=0.03%, 10=0.01%, 100=2.30%, 250=0.20%
      cpu : usr=0.12%, sys=0.82%, ctx=256956, majf=0, minf=0
      IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%
         submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
         complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
         issued : total=r=0/w=240268/d=0, short=r=0/w=0/d=0, drop=r=0/w=0/d=0
         latency : target=0, window=0, percentile=100.00%, depth=1
    Run status group 0 (all jobs):
      WRITE: io=3754.2MB, aggrb=63998KB/s, minb=63998KB/s, maxb=63998KB/s, mint=60068msec, maxt=60068msec
    Disk stats (read/write):
      nvme0n1: ios=5/258372, merge=0/17882, ticks=1/41867, in_queue=39242, util=7.13%

Sequential read I/O:

fio -filename=/home/admin/ray-pack/test -direct=1 -iodepth 1 -thread -rw=read -ioengine=psync -bs=16k -size=2G -numjobs=10 -runtime=60 -group_reporting -name=mytest

Inside the container:

    fio-2.2.8
    Starting 10 threads
    Jobs: 10 (f=10): [R(10)] [100.0% done] [32000KB/0KB/0KB /s] [2000/0/0 iops] [eta 00m:00s]
    mytest: (groupid=0, jobs=10): err= 0: pid=128573: Wed Jun 9 17:20:35 2021
      read : io=1874.4MB, bw=31982KB/s, iops=1998, runt= 60011msec
        clat (usec): min=20, max=115329, avg=5001.01, stdev=21190.01
         lat (usec): min=20, max=115329, avg=5001.17, stdev=21190.01
        clat percentiles (usec):
         | 1.00th=[ 92], 5.00th=[ 101], 10.00th=[ 106], 20.00th=[ 112],
         | 30.00th=[ 119], 40.00th=[ 125], 50.00th=[ 133], 60.00th=[ 143],
         | 70.00th=[ 157], 80.00th=[ 175], 90.00th=[ 213], 95.00th=[79360],
         | 99.00th=[101888], 99.50th=[101888], 99.90th=[101888], 99.95th=[101888],
         | 99.99th=[103936]
        bw (KB /s): min= 2240, max= 5992, per=10.00%, avg=3199.42, stdev=304.83
        lat (usec) : 50=0.07%, 100=3.92%, 250=88.88%, 500=1.89%, 750=0.13%
        lat (usec) : 1000=0.02%
        lat (msec) : 2=0.05%, 4=0.03%, 100=2.54%, 250=2.47%
      cpu : usr=0.04%, sys=0.36%, ctx=126880, majf=0, minf=40
      IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%
         submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
         complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
         issued : total=r=119955/w=0/d=0, short=r=0/w=0/d=0, drop=r=0/w=0/d=0
         latency : target=0, window=0, percentile=100.00%, depth=1
    Run status group 0 (all jobs):
      READ: io=1874.4MB, aggrb=31982KB/s, minb=31982KB/s, maxb=31982KB/s, mint=60011msec, maxt=60011msec
    Disk stats (read/write):
      nvme0n1: ios=119989/17858, merge=18/17090, ticks=15570/606, in_queue=12851, util=2.62%

Inside the nested container:

    fio-2.2.8
    Starting 10 threads
    Jobs: 10 (f=10): [R(10)] [100.0% done] [32000KB/0KB/0KB /s] [2000/0/0 iops] [eta 00m:00s]
    mytest: (groupid=0, jobs=10): err= 0: pid=99: Wed Jun 9 17:23:03 2021
      read : io=1875.2MB, bw=31995KB/s, iops=1999, runt= 60014msec
        clat (usec): min=23, max=105261, avg=4998.84, stdev=21164.41
         lat (usec): min=23, max=105261, avg=4999.00, stdev=21164.40
        clat percentiles (usec):
         | 1.00th=[ 94], 5.00th=[ 103], 10.00th=[ 108], 20.00th=[ 114],
         | 30.00th=[ 120], 40.00th=[ 127], 50.00th=[ 135], 60.00th=[ 145],
         | 70.00th=[ 159], 80.00th=[ 177], 90.00th=[ 219], 95.00th=[ 4192],
         | 99.00th=[100864], 99.50th=[101888], 99.90th=[101888], 99.95th=[102912],
         | 99.99th=[103936]
        bw (KB /s): min= 2272, max= 5714, per=10.01%, avg=3201.33, stdev=314.44
        lat (usec) : 50=0.09%, 100=2.77%, 250=89.58%, 500=2.15%, 750=0.16%
        lat (usec) : 1000=0.06%
        lat (msec) : 2=0.10%, 4=0.08%, 10=0.01%, 100=2.63%, 250=2.37%
      cpu : usr=0.05%, sys=0.39%, ctx=127310, majf=0, minf=40
      IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%
         submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
         complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
         issued : total=r=120010/w=0/d=0, short=r=0/w=0/d=0, drop=r=0/w=0/d=0
         latency : target=0, window=0, percentile=100.00%, depth=1
    Run status group 0 (all jobs):
      READ: io=1875.2MB, aggrb=31995KB/s, minb=31995KB/s, maxb=31995KB/s, mint=60014msec, maxt=60014msec
    Disk stats (read/write):
      nvme0n1: ios=119972/18498, merge=28/18130, ticks=15961/680, in_queue=13159, util=2.75%
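To compare the container and nested-container runs without reading the full reports, the headline bandwidth and IOPS can be pulled out of each saved fio log. A minimal sketch, assuming the fio 2.x output format shown above has been saved to a file, and using a hypothetical helper named `fio_summary` (not part of the original notes):

```shell
# Hypothetical helper (not part of the original notes).
# Usage: fio_summary <fio-output-file>
# Prints "bw=<value> iops=<value>" taken from the first per-job summary
# line (the "write:" or "read :" line) of fio 2.x output.
fio_summary() {
    grep -oE 'bw=[0-9.]+[KMG]?B/s, iops=[0-9]+' "$1" | head -n 1 | tr -d ','
}
```

For the runs recorded above, the summary lines give 63953KB/s at 3997 IOPS (container) against 63999KB/s at 3999 IOPS (nested) for sequential writes, and 31982KB/s at 1998 IOPS against 31995KB/s at 1999 IOPS for sequential reads, so this test shows no measurable throughput difference between the two.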