Complete code:

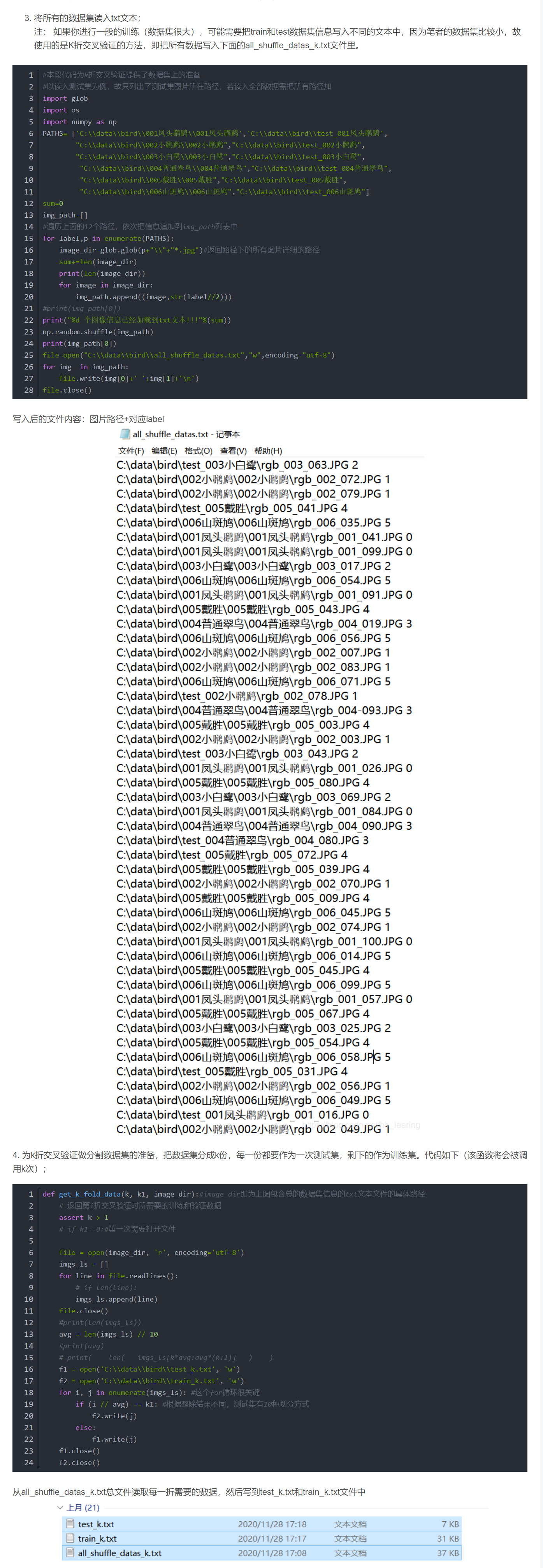

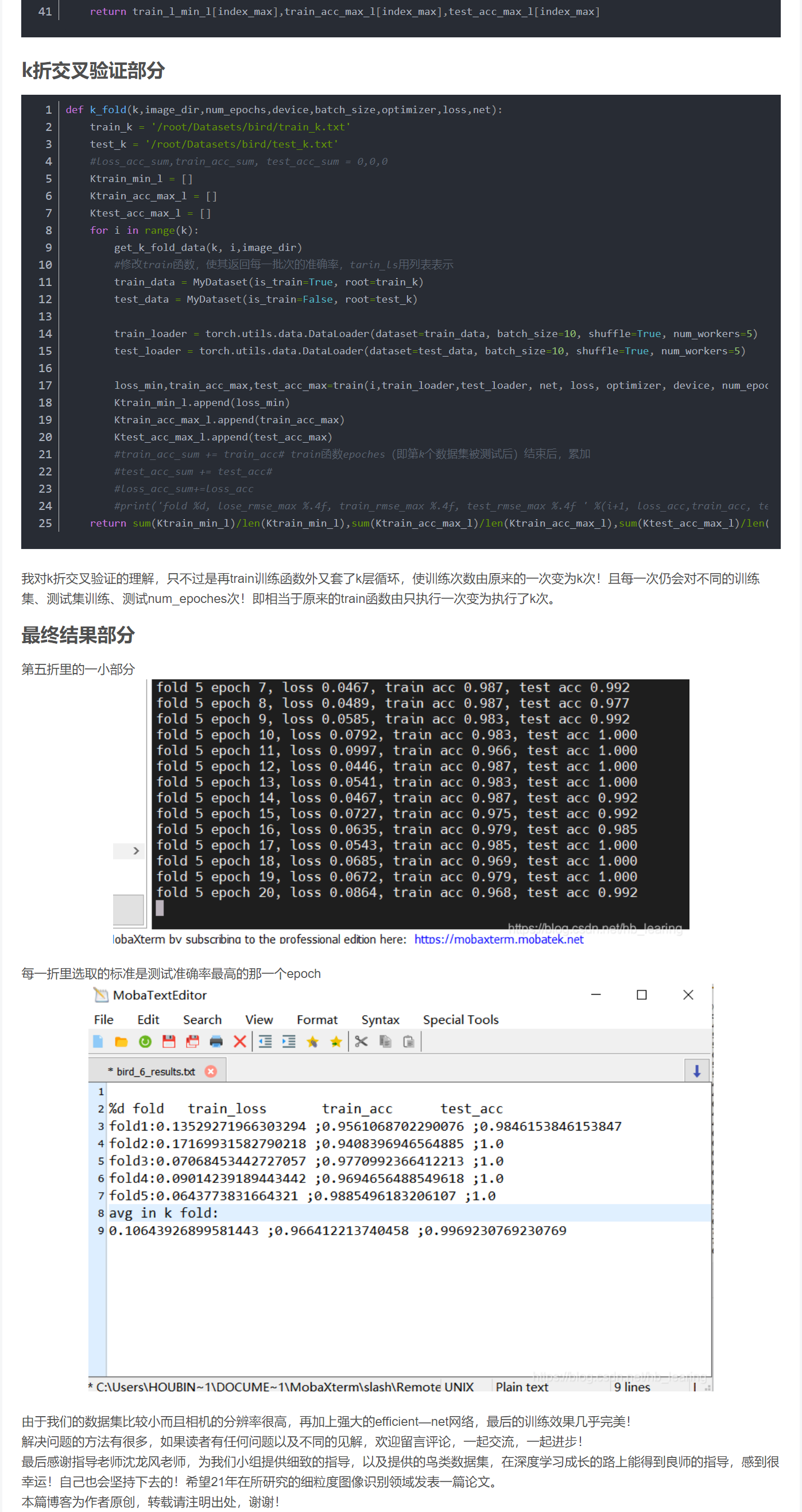

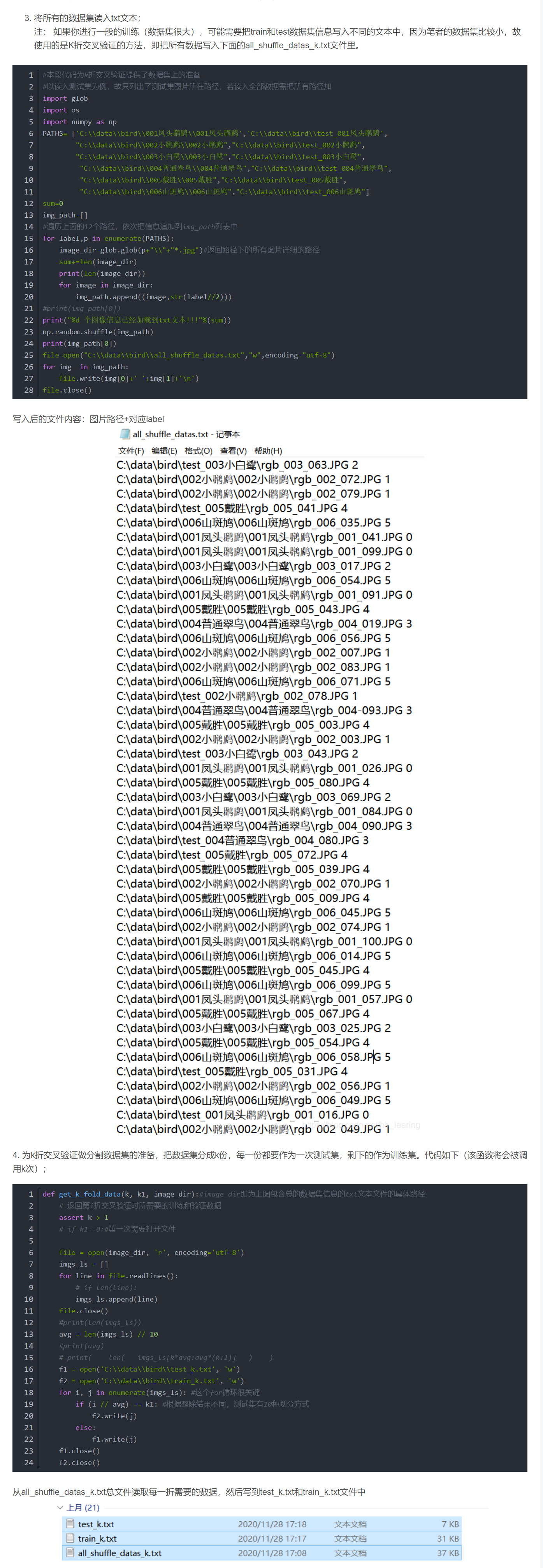

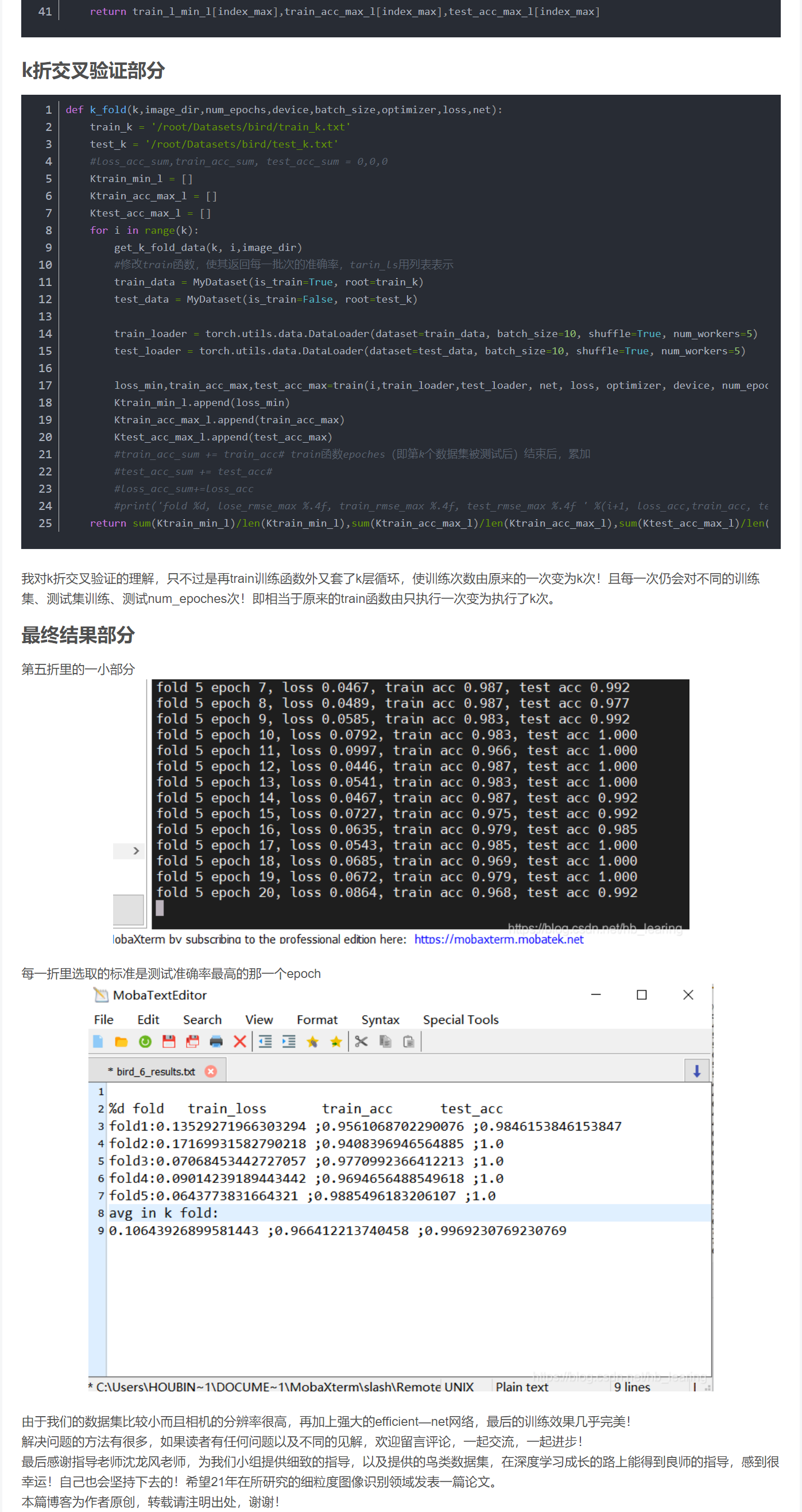

```python
import os

# Select the visible GPUs before anything initializes CUDA.
os.environ['CUDA_VISIBLE_DEVICES'] = '0,1'

import time

import torch
import torchvision
from torch import nn, optim
from PIL import Image
from efficientnet_pytorch import EfficientNet
import d2lzh_pytorch as d2l

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# get_k_fold_data() writes these split files and k_fold() reads them back,
# so both functions must use the same paths.
TRAIN_K = '/root/Datasets/bird/train_k.txt'
TEST_K = '/root/Datasets/bird/test_k.txt'
RESULTS = '/root/pythoncodes/results.txt'


class MyDataset(torch.utils.data.Dataset):
    """Dataset backed by a text file with one "<image path> <label>" pair per line."""

    def __init__(self, is_train, root):
        super().__init__()
        imgs = []
        with open(root, 'r', encoding='utf-8') as fh:
            for line in fh:
                words = line.split()  # split on any whitespace
                if len(words) < 2:
                    continue  # skip blank or malformed lines
                # Which columns to read depends on the layout of the text file.
                imgs.append((words[0], int(words[1])))
        self.imgs = imgs
        self.is_train = is_train
        if self.is_train:
            self.train_tsf = torchvision.transforms.Compose([
                torchvision.transforms.RandomResizedCrop(524, scale=(0.1, 1), ratio=(0.5, 2)),
                torchvision.transforms.ToTensor(),
            ])
        else:
            self.test_tsf = torchvision.transforms.Compose([
                torchvision.transforms.Resize(size=524),
                torchvision.transforms.CenterCrop(size=500),
                torchvision.transforms.ToTensor(),
            ])

    def __getitem__(self, index):
        # Required: return the (image tensor, label) pair stored at this index.
        path, label = self.imgs[index]
        feature = Image.open(path).convert('RGB')
        if self.is_train:
            feature = self.train_tsf(feature)
        else:
            feature = self.test_tsf(feature)
        return feature, label

    def __len__(self):
        # Required: the number of images, not the number of batches in the loader.
        return len(self.imgs)


def get_k_fold_data(k, k1, image_dir):
    """Write the training and validation lists for fold k1 of a k-fold split."""
    assert k > 1
    with open(image_dir, 'r', encoding='utf-8') as file:
        imgs_ls = [line for line in file if line.strip()]
    avg = len(imgs_ls) // k  # samples per fold; any remainder stays in training
    with open(TRAIN_K, 'w', encoding='utf-8') as f1, \
            open(TEST_K, 'w', encoding='utf-8') as f2:
        for i, row in enumerate(imgs_ls):
            # Write each line unchanged so MyDataset can split it on whitespace.
            # (csv.writer.writerow() on a string would emit one field per character.)
            out = f2 if (i // avg) == k1 else f1
            out.write(row.rstrip('\n') + '\n')


def train(i, train_iter, test_iter, net, loss, optimizer, device, num_epochs):
    """Train on fold i and return the metrics of the epoch with the best test accuracy."""
    net = net.to(device)
    print("training on ", device)
    start = time.time()
    test_acc_max_l = []
    train_acc_max_l = []
    train_l_min_l = []
    for epoch in range(num_epochs):
        batch_count = 0
        train_l_sum, train_acc_sum, n = 0.0, 0.0, 0
        for X, y in train_iter:
            X = X.to(device)
            y = y.to(device)
            y_hat = net(X)
            l = loss(y_hat, y)
            optimizer.zero_grad()
            l.backward()
            optimizer.step()
            train_l_sum += l.cpu().item()
            train_acc_sum += (y_hat.argmax(dim=1) == y).sum().cpu().item()
            n += y.shape[0]
            batch_count += 1
        # One epoch is done: evaluate on the held-out fold.
        test_acc = d2l.evaluate_accuracy(test_iter, net)
        train_l_min_l.append(train_l_sum / batch_count)
        train_acc_max_l.append(train_acc_sum / n)
        test_acc_max_l.append(test_acc)
        print('fold %d epoch %d, loss %.4f, train acc %.3f, test acc %.3f'
              % (i + 1, epoch + 1, train_l_sum / batch_count, train_acc_sum / n, test_acc))

    # Report the epoch with the highest test accuracy for this fold.
    index_max = test_acc_max_l.index(max(test_acc_max_l))
    with open(RESULTS, 'a') as f:
        if i == 0:
            f.write("fold train_loss train_acc test_acc")
        f.write('\n' + "fold" + str(i + 1) + ":" + str(train_l_min_l[index_max]) + " ;"
                + str(train_acc_max_l[index_max]) + " ;" + str(test_acc_max_l[index_max]))
    print('fold %d, train loss %.4f, train acc %.4f, test acc max %.4f, time %.1f sec'
          % (i + 1, train_l_min_l[index_max], train_acc_max_l[index_max],
             test_acc_max_l[index_max], time.time() - start))
    return train_l_min_l[index_max], train_acc_max_l[index_max], test_acc_max_l[index_max]


def k_fold(k, image_dir, num_epochs, device, batch_size, optimizer, loss, net):
    """Run k-fold cross-validation and return the metrics averaged over the folds."""
    Ktrain_min_l = []
    Ktrain_acc_max_l = []
    Ktest_acc_max_l = []
    # Note: net and optimizer are shared by all folds, so weights carry over from
    # one fold to the next. Re-create them per fold for independent estimates.
    for i in range(k):
        get_k_fold_data(k, i, image_dir)
        train_data = MyDataset(is_train=True, root=TRAIN_K)
        test_data = MyDataset(is_train=False, root=TEST_K)
        train_loader = torch.utils.data.DataLoader(
            dataset=train_data, batch_size=batch_size, shuffle=True, num_workers=5)
        test_loader = torch.utils.data.DataLoader(
            dataset=test_data, batch_size=batch_size, shuffle=False, num_workers=5)
        loss_min, train_acc_max, test_acc_max = train(
            i, train_loader, test_loader, net, loss, optimizer, device, num_epochs)
        Ktrain_min_l.append(loss_min)
        Ktrain_acc_max_l.append(train_acc_max)
        Ktest_acc_max_l.append(test_acc_max)
    return (sum(Ktrain_min_l) / len(Ktrain_min_l),
            sum(Ktrain_acc_max_l) / len(Ktrain_acc_max_l),
            sum(Ktest_acc_max_l) / len(Ktest_acc_max_l))


batch_size = 10
k = 5
image_dir = '/root/Datasets/bird/shuffle_datas.txt'
num_epochs = 100

net = EfficientNet.from_pretrained('efficientnet-b0')
net._fc = nn.Linear(1280, 6)  # replace the classifier head with a 6-class output layer
output_params = list(map(id, net._fc.parameters()))
feature_params = filter(lambda p: id(p) not in output_params, net.parameters())
lr = 0.01
# Fine-tune the pretrained backbone at lr and the new head at 10x lr.
optimizer = optim.SGD([{'params': feature_params},
                       {'params': net._fc.parameters(), 'lr': lr * 10}],
                      lr=lr, weight_decay=0.001)
net = net.to(device)
net = torch.nn.DataParallel(net)
loss = torch.nn.CrossEntropyLoss()

loss_k, train_k, valid_k = k_fold(k, image_dir, num_epochs, device, batch_size,
                                  optimizer, loss, net)
with open(RESULTS, 'a') as f:
    f.write('\n' + "avg in k fold:" + "\n" + str(loss_k) + " ;" + str(train_k)
            + " ;" + str(valid_k))
print('%d-fold validation: avg train loss %.5f, avg train acc %.5f, avg test acc %.5f'
      % (k, loss_k, train_k, valid_k))
print("Congratulations!!! hou bin")
torch.save(net.module.state_dict(), "./bird_model_k.pt")
```
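
The fold assignment rule in `get_k_fold_data` (`i // avg == k1`) can be checked without touching the dataset or the GPU. The following is a minimal sketch, not part of the original script: `split_fold` is a hypothetical helper that applies the same rule to an in-memory list, and the `img_N.jpg` lines are made-up sample data.

```python
def split_fold(lines, k, fold):
    """Return (train, test) for one fold using the same i // avg rule."""
    assert k > 1
    avg = len(lines) // k  # samples per fold; any remainder stays in training
    train, test = [], []
    for i, line in enumerate(lines):
        (test if i // avg == fold else train).append(line)
    return train, test


# 11 hypothetical "path label" lines and 5 folds: 2 samples per fold, 1 left over.
lines = ["img_%d.jpg %d" % (i, i % 6) for i in range(11)]
for fold in range(5):
    train, test = split_fold(lines, 5, fold)
    print(fold, len(train), len(test))
```

With 11 lines and 5 folds, every fold validates on 2 lines and trains on 9, and the last line never lands in a validation fold. If that matters for a given dataset, trimming the list to a multiple of `k` or assigning the remainder to the last fold are two possible adjustments.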