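1. Introduction

Requests is simple and elegant, very popular (it has a very large number of downloads), and its official documentation is thorough.

Official Requests website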

2. Sending requests

Build a request and print the response message:

    import requests

    target_url = "http://httpbin.org/get"
    resp = requests.get(target_url)
    print(resp.text)

    {
      "args": {},
      "headers": {
        "Accept": "*/*",
        "Accept-Encoding": "gzip, deflate",
        "Host": "httpbin.org",
        "User-Agent": "python-requests/2.25.1",
        "X-Amzn-Trace-Id": "Root=1-60225ec0-04d817761fdafd9459be4c66"
      },
      "origin": "47.240.65.248",
      "url": "http://httpbin.org/get"
    }

Print the response body directly as text:

    import requests

    test_url = "http://httpbin.org/get"  # test URL
    res = requests.get(test_url)
    print(res.text)

    {
      "args": {},
      "headers": {
        "Accept": "*/*",
        "Accept-Encoding": "gzip, deflate",
        "Host": "httpbin.org",
        "User-Agent": "python-requests/2.25.1",
        "X-Amzn-Trace-Id": "Root=1-6022666c-492947c37cb3a71443f517bd"
      },
      "origin": "183.223.85.232",
      "url": "http://httpbin.org/get"
    }

Testing other request methods

POST

    import requests

    # POST request with form data
    data = {"data1": "Spider", "data2": "测试爬虫"}
    test_url = "http://httpbin.org/post"  # test URL
    res = requests.post(test_url, data=data)
    print(res.text)

    {
      "args": {},
      "data": "",
      "files": {},
      "form": {
        "data1": "Spider",
        "data2": "\u6d4b\u8bd5\u722c\u866b"
      },
      "headers": {
        "Accept": "*/*",
        "Accept-Encoding": "gzip, deflate",
        "Content-Length": "55",
        "Content-Type": "application/x-www-form-urlencoded",
        "Host": "httpbin.org",
        "User-Agent": "python-requests/2.25.1",
        "X-Amzn-Trace-Id": "Root=1-60227e99-47295bf138329e21631e53d8"
      },
      "json": null,
      "origin": "47.240.65.248",
      "url": "http://httpbin.org/post"
    }

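Sending a JSON body

Pass either `json` or `data`, not both: if `data` is given, requests ignores `json`. That is why httpbin reports `"json": null` for the form POST above.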

    import requests

    # POST request with a JSON body
    # (named payload so it does not shadow the standard-library json module)
    payload = {"json_style": "json-data"}
    test_url = "http://httpbin.org/post"  # test URL
    res = requests.post(test_url, json=payload)
    print(res.text)

    {
      "args": {},
      "data": "{\"json_style\": \"json-data\"}",
      "files": {},
      "form": {},
      "headers": {
        "Accept": "*/*",
        "Accept-Encoding": "gzip, deflate",
        "Content-Length": "27",
        "Content-Type": "application/json",
        "Host": "httpbin.org",
        "User-Agent": "python-requests/2.25.1",
        "X-Amzn-Trace-Id": "Root=1-60228036-0845b88b3f85bca75085b16c"
      },
      "json": {
        "json_style": "json-data"
      },
      "origin": "47.240.65.248",
      "url": "http://httpbin.org/post"
    }

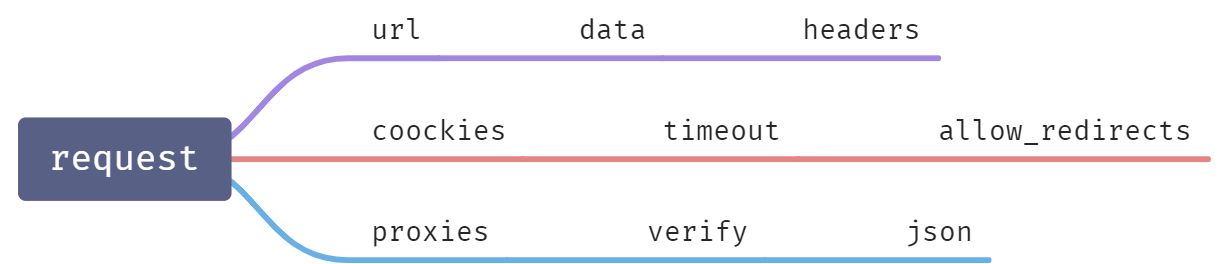
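To check the `data`/`json` rule without relying on httpbin, a minimal sketch can build the requests locally with `requests.Request(...).prepare()` and inspect what would be sent. Nothing leaves the machine; the variable names are illustrative.

```python
import requests

url = "http://httpbin.org/post"
form = {"data1": "Spider", "data2": "测试爬虫"}
payload = {"json_style": "json-data"}

# Prepare (but do not send) three POST requests, so no network access is needed.
form_req = requests.Request("POST", url, data=form).prepare()
json_req = requests.Request("POST", url, json=payload).prepare()
both_req = requests.Request("POST", url, data=form, json=payload).prepare()

# data= is form-encoded; non-ASCII values are percent-encoded as UTF-8.
print(form_req.headers["Content-Type"], form_req.body)
# json= is serialized into a JSON byte string.
print(json_req.headers["Content-Type"], json_req.body)
# When both are given, data wins and json is silently ignored.
print(both_req.headers["Content-Type"], both_req.body == form_req.body)
```

The form body is 55 characters long, which matches the `Content-Length` httpbin reported for the form POST.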

3. Testing several request parameters

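Automatic encoding

Here the GET request passes its query parameters as a dictionary through `params`. Note that the values are Chinese: requests automatically percent-encodes them (as UTF-8) in the query string. httpbin decodes them again, which is why they appear as `\u` escapes in its JSON output.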

    import requests

    test_url = "http://httpbin.org/get"  # test URL
    params = {"name1": "网络", "name2": "爬虫"}  # query parameters
    res = requests.get(test_url, params=params)
    print(res.text)

    {
      "args": {
        "name1": "\u7f51\u7edc",
        "name2": "\u722c\u866b"
      },
      "headers": {
        "Accept": "*/*",
        "Accept-Encoding": "gzip, deflate",
        "Host": "httpbin.org",
        "User-Agent": "python-requests/2.25.1",
        "X-Amzn-Trace-Id": "Root=1-602269a1-670a1cff406e773611659033"
      },
      "origin": "47.240.65.248",
      "url": "http://httpbin.org/get?name1=\u7f51\u7edc&name2=\u722c\u866b"
    }
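The encoded URL itself can be inspected offline. A minimal sketch, assuming the same parameters as above, that prepares the GET request without sending it and then decodes the query string again:

```python
from urllib.parse import parse_qs, urlsplit

import requests

params = {"name1": "网络", "name2": "爬虫"}
# Prepare the GET request locally to see the exact URL requests would send.
prepared = requests.Request("GET", "http://httpbin.org/get", params=params).prepare()
print(prepared.url)
# -> http://httpbin.org/get?name1=%E7%BD%91%E7%BB%9C&name2=%E7%88%AC%E8%99%AB

# Decoding the query string gives back the original Chinese values.
print(parse_qs(urlsplit(prepared.url).query))
# -> {'name1': ['网络'], 'name2': ['爬虫']}
```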

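Setting headers

httpbin echoes the request headers back in the response body, so you can see that `User-Agent` is now the browser string instead of `python-requests/2.25.1`.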

    import requests

    test_url = "http://httpbin.org/get"  # test URL
    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.96 Safari/537.36 Edg/88.0.705.56"
    }
    params = {"name1": "网络", "name2": "爬虫"}
    res = requests.get(test_url, params=params, headers=headers)
    print(res.text)

    {
      "args": {
        "name1": "\u7f51\u7edc",
        "name2": "\u722c\u866b"
      },
      "headers": {
        "Accept": "*/*",
        "Accept-Encoding": "gzip, deflate",
        "Host": "httpbin.org",
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.96 Safari/537.36 Edg/88.0.705.56",
        "X-Amzn-Trace-Id": "Root=1-60226c1f-7f595f34039a54485b1ace0d"
      },
      "origin": "47.240.65.248",
      "url": "http://httpbin.org/get?name1=\u7f51\u7edc&name2=\u722c\u866b"
    }
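`requests.get()` runs through a temporary `Session` internally, so per-request headers are merged on top of the session's default headers. A sketch that shows the merge offline with `Session.prepare_request()` (nothing is sent; `browser_ua` is just the string used above):

```python
import requests

browser_ua = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
              "(KHTML, like Gecko) Chrome/88.0.4324.96 Safari/537.36 Edg/88.0.705.56")

session = requests.Session()
# The default User-Agent identifies the client as python-requests/<version>.
print(session.headers["User-Agent"])

# Per-request headers override the matching defaults; the others are kept.
req = requests.Request("GET", "http://httpbin.org/get", headers={"User-Agent": browser_ua})
prepared = session.prepare_request(req)
print(prepared.headers["User-Agent"])  # the browser string
print(prepared.headers["Accept"])      # default header still present
session.close()
```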

Passing cookies directly through the `cookies` parameter

    import requests

    test_url = "http://httpbin.org/get"  # test URL
    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.96 Safari/537.36 Edg/88.0.705.56"
    }
    cookies = {"sessionid": "hashcode", "userid": "987654321"}
    params = {"name1": "网络", "name2": "爬虫"}
    res = requests.get(test_url, params=params, headers=headers, cookies=cookies)
    print(res.text)

    {
      "args": {
        "name1": "\u7f51\u7edc",
        "name2": "\u722c\u866b"
      },
      "headers": {
        "Accept": "*/*",
        "Accept-Encoding": "gzip, deflate",
        "Cookie": "sessionid=hashcode; userid=987654321",
        "Host": "httpbin.org",
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.96 Safari/537.36 Edg/88.0.705.56",
        "X-Amzn-Trace-Id": "Root=1-60226e2a-5a62cdd162da8d122627d70f"
      },
      "origin": "47.240.65.248",
      "url": "http://httpbin.org/get?name1=\u7f51\u7edc&name2=\u722c\u866b"
    }
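The `Cookie` header that httpbin echoed can also be reproduced locally. A minimal sketch, assuming the same cookie dict, that prepares the request without sending it:

```python
import requests

cookies = {"sessionid": "hashcode", "userid": "987654321"}
# Prepare the request locally; requests turns the dict into a single Cookie header.
prepared = requests.Request("GET", "http://httpbin.org/get", cookies=cookies).prepare()
print(prepared.headers["Cookie"])
```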

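Setting a timeout: with `timeout=1`, requests raises an exception if connecting, or waiting for data from the server, takes longer than 1 second (it is not a limit on the total download time).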

    import requests

    test_url = "http://httpbin.org/get"  # test URL
    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.96 Safari/537.36 Edg/88.0.705.56"
    }
    cookies = {"sessionid": "hashcode", "userid": "987654321"}
    params = {"name1": "网络", "name2": "爬虫"}
    res = requests.get(test_url, params=params, headers=headers, cookies=cookies, timeout=1)
    print(res.text)
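A timeout only helps if the resulting exception is handled. The sketch below is illustrative: `fetch_or_none` is a hypothetical helper, and the "slow server" is a local socket that never replies, so the example runs without internet access. `timeout` is passed as a `(connect, read)` tuple, which requests also accepts.

```python
import socket

import requests


def fetch_or_none(session, url, timeout):
    """Return the response body, or None if the server does not answer in time."""
    try:
        return session.get(url, timeout=timeout).text
    except requests.exceptions.Timeout:  # covers ConnectTimeout and ReadTimeout
        return None


# Hypothetical slow server: a local socket that completes the TCP handshake
# (via the listen backlog) but never sends an HTTP response.
slow_server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
slow_server.bind(("127.0.0.1", 0))
slow_server.listen(1)
port = slow_server.getsockname()[1]

session = requests.Session()
session.trust_env = False  # ignore proxy settings from environment variables

# timeout=(connect, read): up to 1 s to connect, 0.5 s to wait for the response.
result = fetch_or_none(session, f"http://127.0.0.1:{port}/", timeout=(1, 0.5))
print(result)  # None, because the read timed out

session.close()
slow_server.close()
```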

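Disabling redirects

With `allow_redirects=False`, requests does not follow redirects. For a site that redirects, such as GitHub (which sends `http://` visitors to `https://`), you get the redirect response itself, with a 3xx status code such as 302, instead of the final page.

302 (Found) means a temporary redirect.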

    import requests

    # Redirects disabled
    url = "http://github.com"
    res_gh = requests.get(url, allow_redirects=False)
    print(res_gh.text)
    print(res_gh.status_code)
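Since GitHub's behaviour may change, a self-contained sketch can show the same effect against a hypothetical local server whose `/` answers with a 302 pointing to `/final`. It compares `allow_redirects=False` with the default, where the intermediate 302 ends up in `res.history`.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

import requests


class RedirectHandler(BaseHTTPRequestHandler):
    # Hypothetical local server: "/" redirects to "/final" with a 302.
    def do_GET(self):
        if self.path == "/":
            self.send_response(302)
            self.send_header("Location", "/final")
            self.send_header("Content-Length", "0")
            self.end_headers()
        else:
            body = b"final page"
            self.send_response(200)
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the console quiet


server = HTTPServer(("127.0.0.1", 0), RedirectHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()
base = f"http://127.0.0.1:{server.server_port}"

with requests.Session() as session:
    session.trust_env = False  # ignore proxy settings from the environment
    no_follow = session.get(base + "/", allow_redirects=False)
    print(no_follow.status_code, no_follow.headers["Location"])  # 302 /final
    follow = session.get(base + "/")
    print(follow.status_code, follow.text, [r.status_code for r in follow.history])

server.shutdown()
server.server_close()
```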

Using a proxy

For demonstration only; the proxy address below is a placeholder.

    import requests

    test_url = "http://httpbin.org/get"  # test URL
    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.96 Safari/537.36 Edg/88.0.705.56"
    }
    cookies = {"sessionid": "hashcode", "userid": "987654321"}
    # Placeholder proxy; a real proxy URL usually also includes a port ("http://host:port")
    proxies = {"http": "http://123.123.12.123"}
    params = {"name1": "网络", "name2": "爬虫"}
    res = requests.get(test_url, params=params, headers=headers, cookies=cookies,
                       timeout=100, proxies=proxies)
    print(res.text)
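requests picks the proxy by the scheme of the target URL, so a dict with only an `"http"` key leaves HTTPS requests unproxied. A small sketch using `select_proxy`, an internal helper in `requests.utils` (not part of the documented API, so it may differ between versions); the proxy address and port 8080 are placeholders:

```python
from requests.utils import select_proxy

# Placeholder proxy address and port, for illustration only.
only_http = {"http": "http://123.123.12.123:8080"}
both = {"http": "http://123.123.12.123:8080", "https": "http://123.123.12.123:8080"}

print(select_proxy("http://httpbin.org/get", only_http))   # proxy is used
print(select_proxy("https://httpbin.org/get", only_http))  # None: HTTPS goes direct
print(select_proxy("https://httpbin.org/get", both))       # proxy is used
```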

Certificate verification

    import requests

    # Certificate verification (enabled by default)
    url = "https://inv-veri.chinatax.gov.cn/"
    res_ca = requests.get(url)
    print(res_ca.text)

The request fails with an `SSLError`, because the site's certificate chain contains a self-signed certificate that is not trusted:

    During handling of the above exception, another exception occurred:

    Traceback (most recent call last):
      File "C:\Users\41999\Documents\PycharmProjects\Python\TZ—Spyder\第三节Request库的使用\request_demo.py", line 40, in <module>
        res_ca = requests.get(url)
      File "C:\Users\41999\AppData\Local\Programs\Python\Python39\lib\site-packages\requests\api.py", line 76, in get
        return request('get', url, params=params, **kwargs)
      File "C:\Users\41999\AppData\Local\Programs\Python\Python39\lib\site-packages\requests\api.py", line 61, in request
        return session.request(method=method, url=url, **kwargs)
      File "C:\Users\41999\AppData\Local\Programs\Python\Python39\lib\site-packages\requests\sessions.py", line 542, in request
        resp = self.send(prep, **send_kwargs)
      File "C:\Users\41999\AppData\Local\Programs\Python\Python39\lib\site-packages\requests\sessions.py", line 655, in send
        r = adapter.send(request, **kwargs)
      File "C:\Users\41999\AppData\Local\Programs\Python\Python39\lib\site-packages\requests\adapters.py", line 514, in send
        raise SSLError(e, request=request)
    requests.exceptions.SSLError: HTTPSConnectionPool(host='inv-veri.chinatax.gov.cn', port=443): Max retries exceeded with url: / (Caused by SSLError(SSLCertVerificationError(1, '[SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: self signed certificate in certificate chain (_ssl.c:1123)')))

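For comparison: with `verify=False` (capital F) the certificate is no longer checked and the page is returned, but urllib3 still prints an `InsecureRequestWarning`: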

    import requests

    # Skip certificate verification
    url = "https://inv-veri.chinatax.gov.cn/"
    res_ca = requests.get(url, verify=False)
    print(res_ca.text)

    C:\Users\41999\AppData\Local\Programs\Python\Python39\lib\site-packages\urllib3\connectionpool.py:1013: InsecureRequestWarning: Unverified HTTPS request is being made to host 'inv-veri.chinatax.gov.cn'. Adding certificate verification is strongly advised. See: https://urllib3.readthedocs.io/en/latest/advanced-usage.html#ssl-warnings
      warnings.warn(
    <!DOCTYPE html>
    <html xmlns="http://www.w3.org/1999/xhtml">
    <head>
    <meta http-equiv="Content-Type" content="text/html; charset=utf-8" />
    <meta http-equiv="X-UA-Compatible" content="IE=edge" />
    <title>å½å®¶ç¨å¡æ»å±å ¨å½å¢å¼ç¨å票æ¥éªå¹³å°</title>
    <meta name="keywords" content="">
    <META HTTP-EQUIV="pragma" CONTENT="no-cache">

Suppressing the warning with urllib3 (`urllib3.disable_warnings()`):

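    import requests
    import urllib3

    # Skip certificate verification and silence urllib3's warnings for the whole program
    urllib3.disable_warnings()
    url = "https://inv-veri.chinatax.gov.cn/"
    res_ca = requests.get(url, verify=False)
    print(res_ca.text)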

Suppressing the warning through requests (no separate `import urllib3` needed):

    import requests

    # requests.packages.urllib3 refers to the urllib3 module that requests uses
    requests.packages.urllib3.disable_warnings()
    url = "https://inv-veri.chinatax.gov.cn/"
    res_ca = requests.get(url, verify=False)
    print(res_ca.text)

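    <!DOCTYPE html>
    <html xmlns="http://www.w3.org/1999/xhtml">
    <head>
    <meta http-equiv="Content-Type" content="text/html; charset=utf-8" />
    <meta http-equiv="X-UA-Compatible" content="IE=edge" />
    <title>å½å®¶ç¨å¡æ»å±å ¨å½å¢å¼ç¨å票æ¥éªå¹³å°</title>
    <meta name="keywords" content="">
    <META HTTP-EQUIV="pragma" CONTENT="no-cache">
    <META HTTP-EQUIV="Cache-Control" CONTENT="no-cache, must-revalidate">
    <META HTTP-EQUIV="expires" CONTENT="0">

The warning is gone. The garbled `<title>` is an encoding problem, not a certificate one: the UTF-8 page appears to have been decoded as ISO-8859-1, which setting `res.encoding = "utf-8"` (section 4) fixes.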
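`urllib3.disable_warnings()` switches the warning off for the whole program. To silence it only around one call, a minimal sketch using the standard `warnings` module could look like this; `insecure_requests_silenced` is a hypothetical helper name, and the network call is left as a comment.

```python
import warnings
from contextlib import contextmanager

from urllib3.exceptions import InsecureRequestWarning


@contextmanager
def insecure_requests_silenced():
    """Ignore InsecureRequestWarning only inside the with-block.

    The previous warning filters are restored on exit.
    Note: warnings.catch_warnings is not thread-safe.
    """
    with warnings.catch_warnings():
        warnings.simplefilter("ignore", InsecureRequestWarning)
        yield


# Usage (needs network access):
# with insecure_requests_silenced():
#     res_ca = requests.get("https://inv-veri.chinatax.gov.cn/", verify=False)
```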

4. Receiving the response

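Displaying Chinese text in the page correctly

    res.encoding = "utf-8"  # so that Chinese text in the page displays correctly

Getting the page as a string (str) rather than bytes

    print(res.text)  # decoded text, not bytes

Getting the raw bytes instead of a string; images and videos need this form

    print(res.content)  # raw bytes, use this for images and videos

Printing the status code

    print(res.status_code)  # print the status code

    print("---" * 20)

Parsing the JSON body and reading a value from it (httpbin puts the request headers it received into the body, so this is the User-Agent that was sent)

    print(res.json()["headers"]["User-Agent"])  # res.json() parses the JSON body into a dict

Getting the response headers

    print(res.headers)

    print("---" * 20)

Getting the cookies

    print(res.cookies)

Getting the URL

    print(res.url)

    print("---" * 20)

Getting the request headers: `res.request` is the request object (a `PreparedRequest`) that produced this response, not the `requests` package

    print(res.request.headers)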

    import requests

    # POST request with a JSON body
    payload = {"json_style": "json-data"}
    test_url = "http://httpbin.org/post"  # test URL
    res = requests.post(test_url, json=payload)

    res.encoding = "utf-8"  # so that Chinese text in the page displays correctly
    print(res.text)         # decoded text, not bytes
    print(res.content)      # raw bytes, use this for images and videos
    print(res.status_code)  # status code
    print("---" * 20)
    print(res.json()["headers"]["User-Agent"])  # parse the JSON body into a dict
    print(res.headers)      # response headers
    print("---" * 20)
    print(res.cookies)
    print(res.url)
    print("---" * 20)
    # Request headers: res.request is the PreparedRequest, not the requests package
    print(res.request.headers)

    {
      "args": {},
      "data": "{\"json_style\": \"json-data\"}",
      "files": {},
      "form": {},
      "headers": {
        "Accept": "*/*",
        "Accept-Encoding": "gzip, deflate",
        "Content-Length": "27",
        "Content-Type": "application/json",
        "Host": "httpbin.org",
        "User-Agent": "python-requests/2.25.1",
        "X-Amzn-Trace-Id": "Root=1-602342b5-6d003f1f348f62150ec0a2f5"
      },
      "json": {
        "json_style": "json-data"
      },
      "origin": "47.240.65.248",
      "url": "http://httpbin.org/post"
    }
    b'{\n "args": {}, \n "data": "{\\"json_style\\": \\"json-data\\"}", \n "files": {}, \n "form": {}, \n "headers": {\n "Accept": "*/*", \n "Accept-Encoding": "gzip, deflate", \n "Content-Length": "27", \n "Content-Type": "application/json", \n "Host": "httpbin.org", \n "User-Agent": "python-requests/2.25.1", \n "X-Amzn-Trace-Id": "Root=1-602342b5-6d003f1f348f62150ec0a2f5"\n }, \n "json": {\n "json_style": "json-data"\n }, \n "origin": "47.240.65.248", \n "url": "http://httpbin.org/post"\n}\n'
    200
    ------------------------------------------------------------
    python-requests/2.25.1
    {'Content-Length': '502', 'Access-Control-Allow-Credentials': 'true', 'Access-Control-Allow-Origin': '*', 'Connection': 'keep-alive', 'Content-Type': 'application/json', 'Date': 'Wed, 10 Feb 2021 02:19:33 GMT', 'Keep-Alive': 'timeout=4', 'Proxy-Connection': 'keep-alive', 'Server': 'gunicorn/19.9.0'}
    ------------------------------------------------------------
    <RequestsCookieJar[]>
    http://httpbin.org/post
    ------------------------------------------------------------
    {'User-Agent': 'python-requests/2.25.1', 'Accept-Encoding': 'gzip, deflate', 'Accept': '*/*', 'Connection': 'keep-alive', 'Content-Length': '27', 'Content-Type': 'application/json'}
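The garbled `<title>` in the certificate example comes from decoding a response with the wrong charset. A self-contained sketch reproduces it with a hypothetical local server that sends UTF-8 HTML as plain `text/html` with no charset; for such `text/*` responses requests falls back to ISO-8859-1, and setting `res.encoding` fixes the text.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

import requests

PAGE = "<title>网络爬虫</title>".encode("utf-8")


class NoCharsetHandler(BaseHTTPRequestHandler):
    # Hypothetical local server: UTF-8 HTML labelled "text/html" without a charset.
    def do_GET(self):
        self.send_response(200)
        self.send_header("Content-Type", "text/html")
        self.send_header("Content-Length", str(len(PAGE)))
        self.end_headers()
        self.wfile.write(PAGE)

    def log_message(self, *args):
        pass  # silence per-request logging


server = HTTPServer(("127.0.0.1", 0), NoCharsetHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()

with requests.Session() as session:
    session.trust_env = False  # ignore proxy settings from the environment
    res = session.get(f"http://127.0.0.1:{server.server_port}/")

fallback = res.encoding  # ISO-8859-1: fallback for text/* without a charset
garbled = res.text       # UTF-8 bytes decoded as Latin-1 -> mojibake
print(fallback, garbled)
res.encoding = "utf-8"
print(res.text)          # <title>网络爬虫</title>

server.shutdown()
server.server_close()
```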

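5. The Session object

Purpose: a `Session` keeps settings such as headers across requests, and automatically stores cookies set by the server and sends them on later requests. This is typical when logging in to an account: first visit the login page URL, then the URL the login data is submitted to.

For example, 12306 (the China Railway ticketing site).

Inspecting the request headers with `res.request.headers`; with a session they include the cookies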

    import requests

    index_url = "https://www.bing.com"
    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.96 Safari/537.36 Edg/88.0.705.56"
    }
    session = requests.Session()
    session.headers = headers  # replaces the session's default headers entirely
    res_ss = session.get(index_url)
    res_ss.encoding = "utf-8"
    # print(res_ss.text)

    # With a session, res_ss.request.headers (the headers actually sent)
    # include any cookies the session has collected from earlier responses
    print("---" * 40)
    print(res_ss.request.headers)

{'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.96 Safari/537.36 Edg/88.0.705.56','Cookie': 'SNRHOP=TS=637485229167516980&I=1; _EDGE_S=F=1&SID=05EF50E1397D6E18095B5F3B383E6F57; _EDGE_V=1; MUID=056E607057156E9C14FE6FAA56566F14'}
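The login flow described above can be sketched against a hypothetical local server: `/login` sets a `sessionid` cookie and `/profile` echoes back whatever `Cookie` header it receives. A `Session` sends the cookie automatically on the second request; a fresh session does not. The paths and cookie value are illustrative only.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

import requests


class LoginHandler(BaseHTTPRequestHandler):
    # Hypothetical login flow: /login sets a cookie, every path echoes the Cookie header.
    def do_GET(self):
        body = (self.headers.get("Cookie") or "no cookie").encode("utf-8")
        self.send_response(200)
        if self.path == "/login":
            self.send_header("Set-Cookie", "sessionid=abc123; Path=/")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # silence per-request logging


server = HTTPServer(("127.0.0.1", 0), LoginHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()
base = f"http://127.0.0.1:{server.server_port}"

with requests.Session() as session:
    session.trust_env = False                 # ignore proxy settings
    session.get(base + "/login")              # server sets sessionid
    profile = session.get(base + "/profile")  # cookie is sent automatically
    cookies_after_login = session.cookies.get_dict()

with requests.Session() as fresh_session:
    fresh_session.trust_env = False
    fresh = fresh_session.get(base + "/profile")  # never logged in

print(profile.text, cookies_after_login)
print(fresh.text)

server.shutdown()
server.server_close()
```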

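2021-02-10, the day before Lunar New Year's Eve: I couldn't yet work out how to handle the cookies in the headers, so I'll move on to the packet-capture tools covered next.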