import math

import torch
from torch import nn


class GraphConvolution(nn.Module):
    """
    Simple GCN layer, similar to https://arxiv.org/abs/1609.02907
    """

    def __init__(self, in_features, out_features, bias=True):
        super(GraphConvolution, self).__init__()
        self.in_features = in_features
        self.out_features = out_features
        self.weight = nn.Parameter(torch.FloatTensor(in_features, out_features))
        if bias:
            self.bias = nn.Parameter(torch.FloatTensor(out_features))
        else:
            self.register_parameter('bias', None)
        self.reset_parameters()

    def reset_parameters(self):
        # Uniform init in [-1/sqrt(out_features), 1/sqrt(out_features)]
        stdv = 1. / math.sqrt(self.weight.size(1))
        self.weight.data.uniform_(-stdv, stdv)
        if self.bias is not None:
            self.bias.data.uniform_(-stdv, stdv)

    def forward(self, input, adj):
        """
        :param input: node features, [N, in_features]
        :param adj: normalized sparse adjacency matrix, [N, N]
        :return: [N, out_features]
        """
        support = torch.mm(input, self.weight)
        # sparse matrix multiplication
        output = torch.spmm(adj, support)
        if self.bias is not None:
            return output + self.bias
        else:
            return output

    def __repr__(self):
        return self.__class__.__name__ + ' (' \
               + str(self.in_features) + ' -> ' \
               + str(self.out_features) + ')'
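
The `forward` method above reduces to `adj @ (input @ weight) + bias`. As a minimal sketch of that arithmetic, the block below redoes it with dense NumPy arrays instead of the sparse PyTorch ops used in the layer. The function name `graph_conv_dense` and the three-node toy graph are assumptions made for illustration only.

```python
import numpy as np


def graph_conv_dense(x, adj, weight, bias=None):
    """Dense NumPy version of the layer: adj @ (x @ weight) + bias."""
    support = x @ weight      # per-node linear transform: [N, in] -> [N, out]
    output = adj @ support    # aggregate transformed features over neighbours
    return output if bias is None else output + bias


# Toy path graph 0 - 1 - 2 with self-loops, rows normalized to sum to 1.
adj = np.array([[0.5, 0.5, 0.0],
                [1 / 3, 1 / 3, 1 / 3],
                [0.0, 0.5, 0.5]])
x = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [1.0, 1.0]])
weight = np.array([[2.0], [4.0]])   # in_features=2, out_features=1

out = graph_conv_dense(x, adj, weight)
print(out.ravel())   # each entry is the average of x @ weight over a node and its neighbours
```

With a row-normalized adjacency matrix, each output row is the mean of the transformed features of a node and its neighbours, which is why the middle node ends up with the average of all three values.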

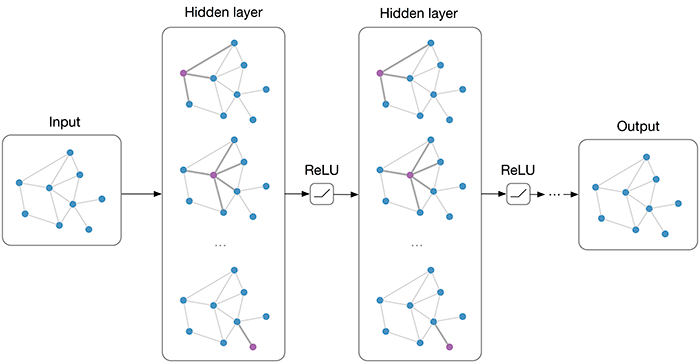

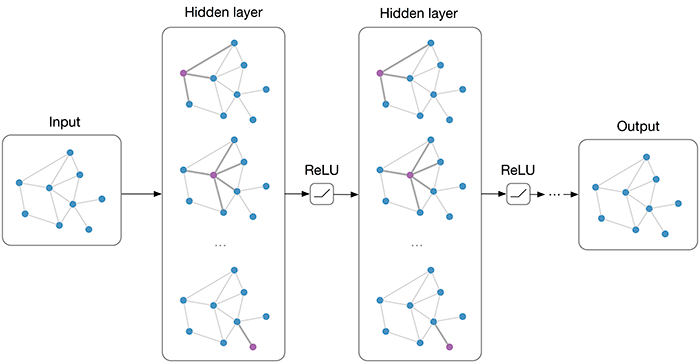

import torch.nn as nn
import torch.nn.functional as F

from layers import GraphConvolution


class GCN(nn.Module):
    def __init__(self, nfeat, nhid, nclass, dropout):
        super(GCN, self).__init__()

        self.gc1 = GraphConvolution(nfeat, nhid)
        self.gc2 = GraphConvolution(nhid, nclass)
        self.dropout = dropout

    def forward(self, x, adj):
        """
        :param x: [2708, 1433]
        :param adj: [2708, 2708]
        :return:
        """
        # print('x:', x.shape, 'adj:', adj.shape)
        # => [2708, 16]
        x = F.relu(self.gc1(x, adj))
        # print('gcn1:', x.shape)
        x = F.dropout(x, self.dropout, training=self.training)
        # => [2708, 7]
        x = self.gc2(x, adj)
        # print('gcn2:', x.shape)
        return F.log_softmax(x, dim=1)
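
To make the shape flow of the two-layer model easier to follow, here is a rough dense NumPy sketch of its evaluation-mode forward pass, where dropout does nothing. The helper names `log_softmax` and `gcn_forward_dense`, the toy sizes, and the uniform adjacency matrix are all assumptions for illustration, not part of the code above.

```python
import numpy as np


def log_softmax(z):
    """Row-wise log-softmax, shifted by the row max for numerical stability."""
    z = z - z.max(axis=1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=1, keepdims=True))


def gcn_forward_dense(x, adj, w1, b1, w2, b2):
    """Evaluation-mode forward pass: dropout is skipped, as in model.eval()."""
    h = np.maximum(adj @ (x @ w1) + b1, 0.0)   # first graph convolution + ReLU
    z = adj @ (h @ w2) + b2                    # second graph convolution
    return log_softmax(z)


rng = np.random.default_rng(0)
n, nfeat, nhid, nclass = 5, 4, 3, 2            # hypothetical toy sizes
adj = np.full((n, n), 1.0 / n)                 # toy row-normalized adjacency
x = rng.normal(size=(n, nfeat))
w1, b1 = rng.normal(size=(nfeat, nhid)), np.zeros(nhid)
w2, b2 = rng.normal(size=(nhid, nclass)), np.zeros(nclass)

log_probs = gcn_forward_dense(x, adj, w1, b1, w2, b2)
print(log_probs.shape)   # one row of class log-probabilities per node
```

On the shapes quoted in the docstring, the same pipeline maps `[2708, 1433]` features to `[2708, 16]` hidden activations and then to `[2708, 7]` log-probabilities.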

from __future__ import division
from __future__ import print_function

import time
import argparse
import numpy as np

import torch
import torch.nn.functional as F
import torch.optim as optim

from utils import load_data, accuracy
from models import GCN

# Training settings
parser = argparse.ArgumentParser()
parser.add_argument('--no-cuda', action='store_true', default=False,
                    help='Disables CUDA training.')
parser.add_argument('--fastmode', action='store_true', default=False,
                    help='Validate during training pass.')
parser.add_argument('--seed', type=int, default=42, help='Random seed.')
parser.add_argument('--epochs', type=int, default=200,
                    help='Number of epochs to train.')
parser.add_argument('--lr', type=float, default=0.01,
                    help='Initial learning rate.')
parser.add_argument('--weight_decay', type=float, default=5e-4,
                    help='Weight decay (L2 loss on parameters).')
parser.add_argument('--hidden', type=int, default=16,
                    help='Number of hidden units.')
parser.add_argument('--dropout', type=float, default=0.5,
                    help='Dropout rate (1 - keep probability).')

args = parser.parse_args()
args.cuda = not args.no_cuda and torch.cuda.is_available()

np.random.seed(args.seed)
torch.manual_seed(args.seed)
if args.cuda:
    torch.cuda.manual_seed(args.seed)

# Load data
adj, features, labels, idx_train, idx_val, idx_test = load_data()

# Model and optimizer
model = GCN(nfeat=features.shape[1],
            nhid=args.hidden,
            nclass=labels.max().item() + 1,
            dropout=args.dropout)
optimizer = optim.Adam(model.parameters(),
                       lr=args.lr, weight_decay=args.weight_decay)
print(model)

if args.cuda:
    model.cuda()
    features = features.cuda()
    adj = adj.cuda()
    labels = labels.cuda()
    idx_train = idx_train.cuda()
    idx_val = idx_val.cuda()
    idx_test = idx_test.cuda()


def train(epoch):
    t = time.time()
    model.train()
    # [N, 7]
    output = model(features, adj)
    loss_train = F.nll_loss(output[idx_train], labels[idx_train])
    acc_train = accuracy(output[idx_train], labels[idx_train])
    optimizer.zero_grad()
    loss_train.backward()
    optimizer.step()

    if not args.fastmode:
        # Evaluate validation set performance separately,
        # deactivates dropout during validation run.
        model.eval()
        output = model(features, adj)

    loss_val = F.nll_loss(output[idx_val], labels[idx_val])
    acc_val = accuracy(output[idx_val], labels[idx_val])
    print('Epoch: {:04d}'.format(epoch+1),
          'loss_train: {:.4f}'.format(loss_train.item()),
          'acc_train: {:.4f}'.format(acc_train.item()),
          'loss_val: {:.4f}'.format(loss_val.item()),
          'acc_val: {:.4f}'.format(acc_val.item()),
          'time: {:.4f}s'.format(time.time() - t))


def test():
    model.eval()
    output = model(features, adj)
    loss_test = F.nll_loss(output[idx_test], labels[idx_test])
    acc_test = accuracy(output[idx_test], labels[idx_test])
    print("Test set results:",
          "loss= {:.4f}".format(loss_test.item()),
          "accuracy= {:.4f}".format(acc_test.item()))


# Train model
t_total = time.time()
for epoch in range(args.epochs):
    train(epoch)
print("Optimization Finished!")
print("Total time elapsed: {:.4f}s".format(time.time() - t_total))

# Testing
test()
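
Note that the script above runs the model on every node of the graph in each pass and only then slices the output with `idx_train`, `idx_val`, or `idx_test` before computing the loss and accuracy. The following NumPy sketch imitates that masking on made-up numbers. The helper `nll_loss` mirrors the default mean reduction of `F.nll_loss`; the log-probabilities, labels, and index arrays are hypothetical.

```python
import numpy as np


def nll_loss(log_probs, labels):
    """Mean negative log-likelihood of the true class."""
    return -log_probs[np.arange(len(labels)), labels].mean()


def accuracy(log_probs, labels):
    """Fraction of rows whose arg-max class equals the label."""
    return (log_probs.argmax(axis=1) == labels).mean()


# Hypothetical log-probabilities for 4 nodes and 2 classes.
log_probs = np.log(np.array([[0.9, 0.1],
                             [0.2, 0.8],
                             [0.6, 0.4],
                             [0.3, 0.7]]))
labels = np.array([0, 1, 1, 0])
idx_train = np.array([0, 1])   # only these nodes contribute to the training loss
idx_val = np.array([2, 3])     # held-out nodes, scored but never trained on

loss_train = nll_loss(log_probs[idx_train], labels[idx_train])
acc_train = accuracy(log_probs[idx_train], labels[idx_train])
acc_val = accuracy(log_probs[idx_val], labels[idx_val])
print(loss_train, acc_train, acc_val)
```

Because the unlabelled nodes still take part in the graph convolutions, they influence the predictions for the training nodes even though they are excluded from the loss.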

import numpy as np
import scipy.sparse as sp
import torch


def encode_onehot(labels):
    # Sort the classes so the class-to-index mapping is the same on every run
    # (iteration order of a set of strings can change between runs).
    classes = sorted(set(labels))
    classes_dict = {c: np.identity(len(classes))[i, :] for i, c in
                    enumerate(classes)}
    labels_onehot = np.array(list(map(classes_dict.get, labels)),
                             dtype=np.int32)
    return labels_onehot


def load_data(path="./data/cora/", dataset="cora"):
    """Load citation network dataset (cora only for now)"""
    print('Loading {} dataset...'.format(dataset))

    idx_features_labels = np.genfromtxt("{}{}.content".format(path, dataset),
                                        dtype=np.dtype(str))
    features = sp.csr_matrix(idx_features_labels[:, 1:-1], dtype=np.float32)
    labels = encode_onehot(idx_features_labels[:, -1])

    # build graph
    idx = np.array(idx_features_labels[:, 0], dtype=np.int32)
    idx_map = {j: i for i, j in enumerate(idx)}
    edges_unordered = np.genfromtxt("{}{}.cites".format(path, dataset),
                                    dtype=np.int32)
    edges = np.array(list(map(idx_map.get, edges_unordered.flatten())),
                     dtype=np.int32).reshape(edges_unordered.shape)
    adj = sp.coo_matrix((np.ones(edges.shape[0]), (edges[:, 0], edges[:, 1])),
                        shape=(labels.shape[0], labels.shape[0]),
                        dtype=np.float32)

    # build symmetric adjacency matrix
    adj = adj + adj.T.multiply(adj.T > adj) - adj.multiply(adj.T > adj)

    features = normalize(features)
    adj = normalize(adj + sp.eye(adj.shape[0]))

    idx_train = range(140)
    idx_val = range(200, 500)
    idx_test = range(500, 1500)

    features = torch.FloatTensor(np.array(features.todense()))
    labels = torch.LongTensor(np.where(labels)[1])
    adj = sparse_mx_to_torch_sparse_tensor(adj)

    idx_train = torch.LongTensor(idx_train)
    idx_val = torch.LongTensor(idx_val)
    idx_test = torch.LongTensor(idx_test)

    return adj, features, labels, idx_train, idx_val, idx_test


def normalize(mx):
    """Row-normalize sparse matrix"""
    rowsum = np.array(mx.sum(1))
    r_inv = np.power(rowsum, -1).flatten()
    # rows that sum to zero would give inf; leave them as all-zero rows
    r_inv[np.isinf(r_inv)] = 0.
    r_mat_inv = sp.diags(r_inv)
    mx = r_mat_inv.dot(mx)
    return mx


def accuracy(output, labels):
    preds = output.max(1)[1].type_as(labels)
    correct = preds.eq(labels).double()
    correct = correct.sum()
    return correct / len(labels)


def sparse_mx_to_torch_sparse_tensor(sparse_mx):
    """Convert a scipy sparse matrix to a torch sparse tensor."""
    sparse_mx = sparse_mx.tocoo().astype(np.float32)
    indices = torch.from_numpy(
        np.vstack((sparse_mx.row, sparse_mx.col)).astype(np.int64))
    values = torch.from_numpy(sparse_mx.data)
    shape = torch.Size(sparse_mx.shape)
    # torch.sparse.FloatTensor is deprecated; torch.sparse_coo_tensor is the
    # current constructor for the same COO layout.
    return torch.sparse_coo_tensor(indices, values, shape)
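
The two least obvious steps in `load_data` are the symmetrization expression and the row normalization with self-loops. The sketch below replays both on a small dense NumPy array rather than a SciPy sparse matrix. The toy graph and the helper name `normalize_dense` are assumptions for illustration.

```python
import numpy as np

# Directed toy citation graph: 0 -> 1 and 1 -> 2.
adj = np.array([[0., 1., 0.],
                [0., 0., 1.],
                [0., 0., 0.]])

# Same expression as in load_data, written for dense arrays:
# wherever the transpose has a larger entry, take the transposed value.
mask = adj.T > adj
sym = adj + adj.T * mask - adj * mask


def normalize_dense(mx):
    """Row-normalize a dense matrix; all-zero rows are left as zeros."""
    rowsum = mx.sum(axis=1)
    r_inv = np.zeros_like(rowsum)
    nonzero = rowsum != 0
    r_inv[nonzero] = 1.0 / rowsum[nonzero]
    return np.diag(r_inv) @ mx


# Add self-loops, then divide each row by its sum.
norm = normalize_dense(sym + np.eye(3))
print(sym)
print(norm)
```

For a 0/1 matrix the symmetrization is equivalent to an element-wise maximum of `adj` and `adj.T`. Also worth noting: dividing each row by its sum is row normalization, whereas the paper linked in the layer docstring (arXiv 1609.02907) describes a symmetric normalization, so the two are close in spirit but not identical.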