| Author: | Neng Pan, Ruibin Zhang, Tiankai Yang, Chao Xu, and Fei Gao |
|---|---|
| Publisher: | IROS2021 |
| Publish year: | 2021 |
| Editor: | 柯西 |

Abstract

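In recent years, a number of advanced works have pushed UAV target tracking forward. One of the most representative is Fast-Tracker 1.0, which tackled many challenging tracking scenarios. However, it has two drawbacks. First, it relies on 2D-code markers for target detection. Second, following the target and perceiving the environment compete for a limited field of view (FOV). In this paper, we upgrade the target detection method and use deep learning and nonlinear regression to detect and localize human targets. We then equip the quadrotor with a rotatable gimbal to obtain a 360-degree FOV. We also refine the tracking trajectory planning method of Fast-Tracker 1.0. Comprehensive real-world tests confirm the robustness and real-time performance of the system. Compared with Fast-Tracker 1.0, the system achieves better tracking performance in more complex tracking tasks.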

Introduction

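Research on UAVs that follow moving targets has grown rapidly in both industry and academia in recent years. Advances in vision and navigation technology have made target following more agile and robust. In our previous work, we presented Fast-Tracker 1.0. It is a complete tracking system that lets a quadrotor autonomously track an agile moving target in unknown, cluttered environments, and it has been applied successfully in a variety of scenarios.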

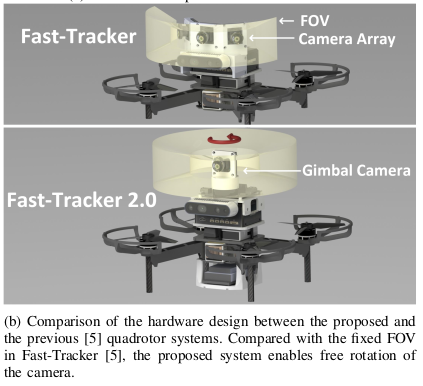

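However, Fast-Tracker 1.0 still has several shortcomings. Above all, it relies on 2D-code markers instead of genuine target detection, which limits broader application. In this paper, we upgrade the target perception algorithm. Deep learning detects the human target, and a pre-trained nonlinear regression estimates its position. In addition, Fast-Tracker 1.0 has only a limited field of view, so detecting the target and perceiving surrounding obstacles conflict with each other. To resolve this, we replace the camera-array approach of Fast-Tracker 1.0 with a lightweight onboard gimbal, which decouples visual tracking from environment perception. The controllable gimbal camera removes the conflict between observing the target and perceiving the environment, so the quadrotor can focus on safe navigation. Furthermore, we add an occlusion penalty to the path search, so the quadrotor prefers paths from which the target remains observable.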

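Overall, our solution consists of five parts: 1. human target detection and localization; 2. motion prediction for a target whose intention is unknown; 3. occlusion awareness in the tracking path search; 4. generation of safe and dynamically feasible tracking trajectories; 5. construction of an onboard system with omnidirectional vision. Together, these parts address most of the challenges of agile target tracking.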

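The above methods are integrated into a quadrotor system. Extensive real-world experiments show that the system accurately detects and localizes human targets and then tracks them efficiently and safely. Compared with Fast-Tracker 1.0, the 2.0 system achieves better tracking performance in scenarios where target detection and localization are more challenging. Our contributions are as follows: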

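1. A lightweight, intention-agnostic human target detection and localization method based on deep learning and nonlinear regression;

2. An improved version of the integrated online tracking planning module of Fast-Tracker 1.0 that adds an occlusion-aware mechanism;

3. An onboard quadrotor system that integrates the above methods and has been extensively tested and evaluated.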

Related Work

A. Omitted.

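B. Kalman-filter-based methods cannot accurately predict the target's future motion. Jeon et al. [3] incorporate collision avoidance into target prediction and solve the problem with optimization. However, collisions are evaluated with an ESDF, which is computationally expensive. Chen et al. [4] formulate polynomial regression as a QP problem. Building on this, Fast-Tracker 1.0 adds kinodynamic constraints and time-varying confidence to the optimization problem that predicts the target trajectory.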

C. Human detection and localization

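[2, 8, 9] mainly recover the target's 3D position from 2D monocular images. [2] combines MobileNet [10] and Faster-RCNN [11] for target detection. It then uses a ray-casting module to project the 2D bounding box onto a height map built from an onboard LiDAR. Such a LiDAR is too heavy a payload for onboard aerial target following. Cheng et al. [8] treat the human target as a point mass and fly the quadrotor at high altitude. They approximate the target's depth in the image by the vehicle's altitude and use it for 3D position estimation. High-altitude flight, however, is unsuitable for tracking indoors or in narrow environments. Huang et al. [9] use a seq2seq neural network to estimate 3D positions from a sequence of detected 2D skeleton keypoints, and they define a dedicated optimization loss. These methods mainly target aerial cinematography, where the shooting angle must be planned from the estimated target pose. Aerial tracking has no pressing need to estimate the target's pose. Like [9], we use a skeleton detection model. Unlike [9], we estimate the target position directly and skip pose estimation to save computation.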

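Works such as [1, 2, 8, 9, 12, 13] use onboard 3-DoF gimbal cameras to handle dynamic tracking and to improve target observation under noise. Closed-loop control or optimization keeps the target at the desired position in the image. In practice, the target rarely moves much in the vertical direction. Controlling only the horizontal direction in the image plane is therefore enough to avoid losing the target, and it keeps the quadrotor system agile. We add an electric slip ring so that the gimbal can rotate freely, which strengthens target perception.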

Overview

Problem Formulation

总体任务是控制四旋翼检测,定位,跟踪未知环境中未知意图的人类目标。使用是目标在世界坐标系下的3D位置估计。

是当前时刻

到

时刻的目标预测轨迹。对于四旋翼规划模块,我们使用占据地图

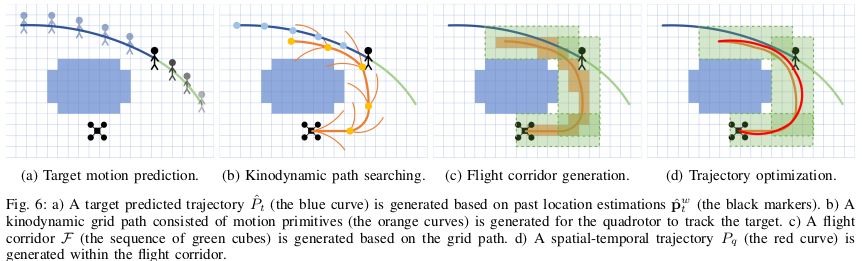

表示环境。以

为引导轨迹,前段路径搜索方法基于动力学信息生成栅格路径。利用由栅格路径点生成的飞行走廊,后端优化生成四旋翼跟踪目标的多项式轨迹

,根据跟踪场景,作了一系列如下简化假设:

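1. During tracking, the target's vertical motion is negligible.

2. The length of the target's upper body stays roughly constant.

3. The target moves smoothly, with bounded velocity and acceleration.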

Active Vision Design

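By Assumption 1, only the target's horizontal position in the camera image needs to be controlled, so a camera with a single controllable yaw degree of freedom is sufficient. A PI controller drives the camera independently of the quadrotor's flight control.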
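The following is a minimal sketch of such a yaw loop. It assumes a pinhole camera with hypothetical intrinsics `fx` and `cx` and hypothetical gains `kp` and `ki`, because the note gives no values. The loop converts the horizontal pixel offset of the target into a bearing error and feeds it to a PI law, which outputs a gimbal yaw-rate command. A simple anti-windup clamp prevents integrator buildup. The sign convention, in which a positive command turns the camera toward larger `u`, is also an assumption.

```python
import math
from dataclasses import dataclass


@dataclass
class GimbalYawPI:
    """PI loop that drives the gimbal yaw rate so the target's horizontal
    image coordinate u converges to the principal point cx.

    All numeric defaults are hypothetical, not values from the paper.
    Assumed sign convention: a positive command turns the camera toward larger u.
    """
    kp: float = 3.0          # proportional gain [1/s]
    ki: float = 1.0          # integral gain [1/s^2]
    fx: float = 600.0        # horizontal focal length [px]
    cx: float = 320.0        # principal point, u-coordinate [px]
    max_rate: float = 3.0    # gimbal yaw-rate limit [rad/s]
    integral: float = 0.0    # accumulated bearing error [rad*s]

    def bearing_error(self, u: float) -> float:
        # Angle between the optical axis and the ray through pixel column u.
        return math.atan2(u - self.cx, self.fx)

    def update(self, u: float, dt: float) -> float:
        """Return a yaw-rate command [rad/s] for target column u [px]."""
        err = self.bearing_error(u)
        candidate = self.integral + err * dt
        cmd = self.kp * err + self.ki * candidate
        if abs(cmd) <= self.max_rate:
            self.integral = candidate                 # integrate only when unsaturated
        else:
            cmd = math.copysign(self.max_rate, cmd)   # saturate and freeze the integral
        return cmd


if __name__ == "__main__":
    # Toy closed loop: static target at bearing 0.5 rad, camera starts at 0 rad.
    ctrl, yaw, target, dt = GimbalYawPI(), 0.0, 0.5, 0.02
    for _ in range(500):
        u = ctrl.cx + ctrl.fx * math.tan(target - yaw)   # pinhole projection
        yaw += ctrl.update(u, dt) * dt
    print(f"remaining bearing error: {target - yaw:.4f} rad")
```

The camera loop is kept separate from the flight controller, so the same sketch applies whatever the quadrotor is doing. The gimbal only needs the target's pixel column.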

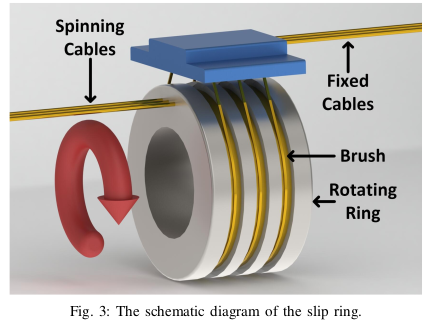

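The camera is rigidly attached to the gimbal, so its connecting cable would restrict rotation. To let the gimbal motor rotate freely, we replace the cable with a customized electric slip ring. As shown in Fig. 3, the slip ring consists of a rotating ring and annular stationary brushes. The rotating cable is connected to the rotating ring and the stationary cable to the stationary brushes, so data passes between the two cables through the ring-brush contact. The camera can therefore rotate freely while staying connected to the onboard computer.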

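Because the camera rotates independently, the quadrotor can face its direction of motion instead of the target. It can therefore observe the target and the surrounding obstacles at the same time.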

Target Detection Based on Localization Regression

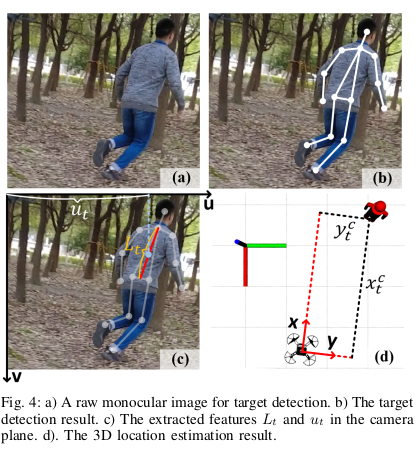

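This section describes the target detection and localization method. Given a monocular image, the module outputs the target's 3D position estimate in the world frame, as shown in Fig. 4.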

Human Target Detection

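For human target detection, we use OpenPose [14], a deep-learning-based 2D pose estimation method. It detects named 2D keypoints such as the head, shoulders, and knees. OpenPose uses a bottom-up parsing architecture that efficiently groups the detected keypoints into individual people. To ensure real-time performance on the onboard platform, we use the TensorRT inference optimizer.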

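By Assumption 2, the target's upper-body length is treated as constant. We take the distance from the hip to the neck as the upper-body length and use it for target localization.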
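As an illustration, the upper-body length can be read from the detected keypoints as the neck-to-hip pixel distance. The sketch below assumes OpenPose's BODY_25 layout, where index 1 is Neck and index 8 is MidHip, and an array of shape (25, 3) holding (u, v, confidence) for one person. The confidence threshold is hypothetical. Using the neck-hip midpoint as the "target center" is also our assumption; the note does not specify it.

```python
import numpy as np

# Keypoint indices in OpenPose's BODY_25 layout (assumed model choice).
NECK, MID_HIP = 1, 8


def upper_body_from_keypoints(kps: np.ndarray, min_conf: float = 0.3):
    """Return (length_px, center_uv) from a (25, 3) array of (u, v, conf).

    length_px: neck-to-mid-hip pixel distance, used as the upper-body length.
    center_uv: midpoint of neck and mid-hip, used here as the target center.
    Returns None if either keypoint is missing or below min_conf
    (OpenPose reports confidence 0 for undetected keypoints).
    """
    neck, hip = kps[NECK], kps[MID_HIP]
    if neck[2] < min_conf or hip[2] < min_conf:
        return None
    length = float(np.linalg.norm(neck[:2] - hip[:2]))
    center = (neck[:2] + hip[:2]) / 2.0
    return length, center
```

Returning `None` for low-confidence keypoints lets the caller skip a frame instead of regressing from an unreliable length.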

Target Localization via Nonlinear Regression

上身长度为,利用目标中心的在图像中的坐标

来定位。非线性函数是

到相机系

的映射。基于假设1,

设为定值。定义由一系列未确定参数的函数表示如下:

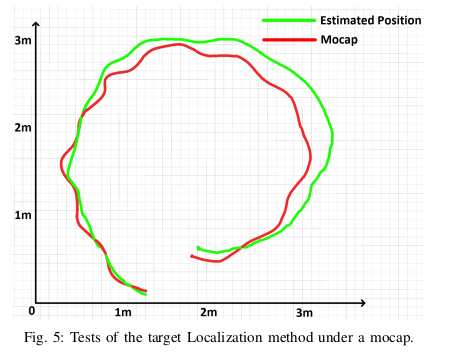

在训练中,用从运动捕获系统(mocap)获得的地面真实值制作一个数据集,训练这些参数。通作坐标变换转到world系下.
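The regression function itself appears only in the figure above. The sketch below therefore uses a **hypothetical** model that is nonlinear in $l$ but linear in its parameters: $z_c = a_0 + a_1/l$ and $x_c = b_0 + b_1 (u - c_x)/l$. The model is motivated by the pinhole relation in which pixel length shrinks with depth. With this choice, fitting to mocap ground truth becomes ordinary least squares. The sketch also assumes an OpenCV-style camera frame (x right, y down, z forward), a fixed $y_c$ by Assumption 1, and a known camera pose `R_wc`, `t_wc` for the transformation to the world frame. All function names are illustrative.

```python
import numpy as np


def design_matrices(l, u, cx=320.0):
    """Features of the assumed model (nonlinear in l, linear in parameters):
         z_c = a0 + a1 / l
         x_c = b0 + b1 * (u - cx) / l
    l: upper-body length [px], u: target-center column [px]."""
    l = np.asarray(l, dtype=float)
    u = np.asarray(u, dtype=float)
    ones = np.ones_like(l)
    Fz = np.column_stack([ones, 1.0 / l])
    Fx = np.column_stack([ones, (u - cx) / l])
    return Fz, Fx


def fit_params(l, u, x_gt, z_gt, cx=320.0):
    """Least-squares fit to mocap ground truth already expressed in the camera frame."""
    Fz, Fx = design_matrices(l, u, cx)
    a, *_ = np.linalg.lstsq(Fz, np.asarray(z_gt, dtype=float), rcond=None)
    b, *_ = np.linalg.lstsq(Fx, np.asarray(x_gt, dtype=float), rcond=None)
    return a, b


def predict_camera(l, u, a, b, y_const=0.0, cx=320.0):
    """Camera-frame positions (x_c, y_c, z_c); y_c is fixed by Assumption 1."""
    Fz, Fx = design_matrices(l, u, cx)
    x, z = Fx @ b, Fz @ a
    return np.column_stack([x, np.full_like(x, y_const), z])


def camera_to_world(p_c, R_wc, t_wc):
    """p_w = R_wc @ p_c + t_wc, applied row-wise."""
    return np.asarray(p_c, dtype=float) @ np.asarray(R_wc, dtype=float).T + np.asarray(t_wc, dtype=float)


def mean_distance_error(pred, gt):
    """Mean Euclidean error, the metric quoted for the regression below."""
    return float(np.mean(np.linalg.norm(pred - gt, axis=1)))
```

Keeping the model linear in its parameters avoids an iterative nonlinear solver. If the paper's actual function is not linear in its parameters, a nonlinear least-squares routine would be needed instead.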

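We evaluated the performance of the position regression method, as shown in the figure. The mean distance error is about 20 cm, which is acceptable for tracking. The experimental results (Sect. VI) further confirm that the method is practical and effective.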

Online Tracking Planning

This section introduces the tracking planning module of Fast-Tracker 1.0 and our improvements to it.

Target Motion Prediction

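The target trajectory is predicted from the historical target estimates of Sect. IV, and polynomial regression is used for target motion estimation. We represent the polynomial as a Bézier curve, because its convex hull and hodograph properties make kinodynamic constraints easy to impose. The predicted target trajectory is

$$\mathbf{P}(t) = \sum_{i=0}^{n} \mathbf{c}_i\, b_i^n(t), \qquad b_i^n(t) = \binom{n}{i} t^i (1-t)^{n-i},$$

where each $b_i^n(t)$ is an $n$-th degree Bernstein polynomial basis (with time normalized to $[0,1]$), and $\mathbf{c}_0, \dots, \mathbf{c}_n$ are the control points of the Bézier curve.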
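The Bézier parameterization can be made concrete with a small sketch. It evaluates $\mathbf{P}(\tau)$ through the Bernstein basis on normalized time $\tau \in [0,1]$ and computes the hodograph, which gives the control points of the derivative curve. The last helper relies on the convex-hull property: if every velocity control point lies inside the box $[-v_{max}, v_{max}]$ on each axis, the whole velocity curve does too. This check is sufficient but conservative. The box-shaped bound and the function names are our assumptions.

```python
import numpy as np
from math import comb


def bernstein(n: int, i: int, tau):
    """i-th Bernstein basis polynomial of degree n, evaluated at tau in [0, 1]."""
    tau = np.asarray(tau, dtype=float)
    return comb(n, i) * tau**i * (1.0 - tau) ** (n - i)


def bezier(ctrl: np.ndarray, tau):
    """Evaluate P(tau) = sum_i c_i * b_i^n(tau); ctrl has shape (n + 1, dim)."""
    n = len(ctrl) - 1
    basis = np.stack([bernstein(n, i, tau) for i in range(n + 1)], axis=-1)
    return basis @ ctrl


def hodograph(ctrl: np.ndarray, duration: float) -> np.ndarray:
    """Control points of dP/dt for a curve that spans `duration` seconds.

    The derivative of a degree-n Bezier curve is a degree-(n-1) Bezier curve
    with control points n * (c_{i+1} - c_i); dividing by the duration
    converts from normalized time tau to physical time t.
    """
    n = len(ctrl) - 1
    return n * np.diff(ctrl, axis=0) / duration


def velocity_within_box(ctrl: np.ndarray, duration: float, v_max: float) -> bool:
    """Sufficient check (convex hull property): all velocity control points
    inside [-v_max, v_max] per axis implies the whole velocity curve is too."""
    return bool(np.all(np.abs(hodograph(ctrl, duration)) <= v_max))
```

Applying `hodograph` twice gives the acceleration control points, so the same test bounds acceleration as well.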

The objective is formulated as a QP with a residual term $J_d$ and a regularization term $J_r$:

$$J_{pre} = J_d + J_r,$$

where $J_d$ is the minimum-distance residual between the predicted curve $\mathbf{P}(t)$ and the historical target position estimates, and $J_r$ is an acceleration regularization term that prevents overfitting.

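By Assumption 3, the predicted velocity and acceleration are constrained within predefined bounds that ensure dynamic feasibility. Time-dependent weights lower the confidence assigned to older historical position estimates. The segment of the resulting curve that covers the future time window is the predicted target trajectory. See [5] for the detailed formulation.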
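The sketch below simplifies the prediction step by fitting without constraints. It fits a degree-$n$ Bézier curve to timestamped position estimates and minimizes a time-weighted residual (playing the role of $J_d$) plus a penalty on the second differences of the control points (playing the role of $J_r$). By the hodograph property, these second differences are proportional to the acceleration control points. The weight `decay ** (t_now - t_k)`, the regularization weight `lam`, and the default degree are hypothetical. Because the velocity and acceleration bounds of Assumption 3 are dropped, the QP reduces to a single linear solve. Enforcing those bounds would require a constrained QP solver, as in [5].

```python
import numpy as np
from math import comb


def bernstein_matrix(n, tau):
    """Rows: samples tau in [0, 1]; columns: Bernstein basis b_i^n(tau)."""
    tau = np.asarray(tau, dtype=float)[:, None]
    i = np.arange(n + 1)[None, :]
    coeff = np.array([comb(n, k) for k in range(n + 1)], dtype=float)[None, :]
    return coeff * tau**i * (1.0 - tau) ** (n - i)


def predict_target(times, positions, horizon, degree=5, lam=1e-2, decay=0.9):
    """Fit a Bezier curve spanning [t_first, t_now + horizon] to past estimates.

    Minimizes  sum_k w_k ||P(tau_k) - p_k||^2 + lam * ||D C||^2,
    where w_k = decay ** (t_now - t_k) down-weights older samples and D takes
    second differences of the control points C. Bound constraints are omitted,
    so the QP reduces to one linear solve.
    """
    times = np.asarray(times, dtype=float)
    positions = np.asarray(positions, dtype=float)
    t_first, t_now = times[0], times[-1]
    span = t_now + horizon - t_first
    B = bernstein_matrix(degree, (times - t_first) / span)
    w = decay ** (t_now - times)                   # older samples -> smaller weight
    D = np.diff(np.eye(degree + 1), n=2, axis=0)   # second-difference operator
    H = B.T @ (w[:, None] * B) + lam * (D.T @ D)
    g = B.T @ (w[:, None] * positions)
    ctrl = np.linalg.solve(H, g)
    return ctrl, (t_first, span)


def evaluate(ctrl, frame, t):
    """Evaluate the fitted curve at absolute time(s) t."""
    t_first, span = frame
    tau = (np.atleast_1d(np.asarray(t, dtype=float)) - t_first) / span
    return bernstein_matrix(len(ctrl) - 1, tau) @ ctrl
```

For example, a target moving at constant velocity is fitted exactly, because a straight line has evenly spaced control points and those incur zero regularization cost. Evaluating the curve one second past the last sample then extrapolates that line.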

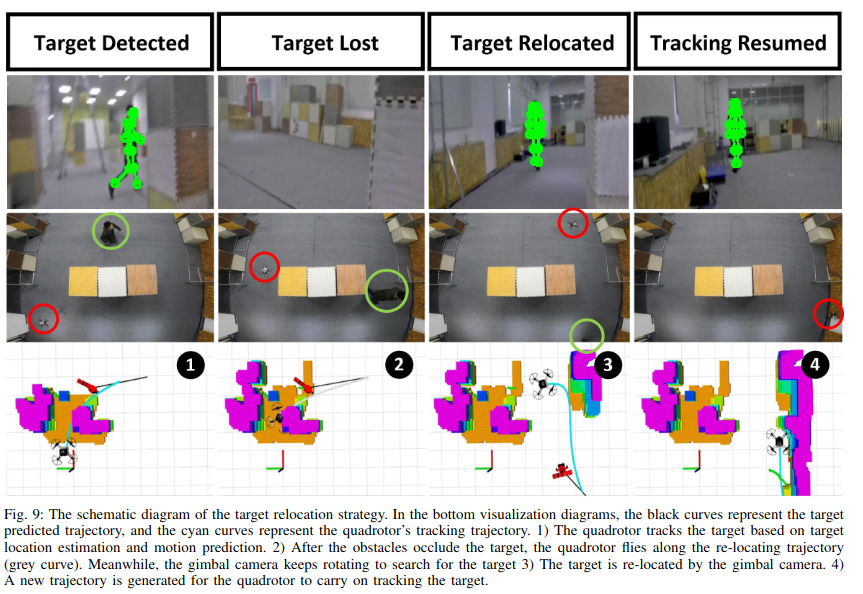

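Fast-Tracker 1.0 introduced target relocalization, which lets the quadrotor autonomously explore and rediscover the target after losing it. With active vision, the gimbal can rotate to carry out relocalization, which makes it more efficient.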

Obstacle-Aware Tracking Path Search

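The path search uses hybrid A* (see [16] for details). The quadrotor state consists of its position and velocity, $\mathbf{x} = [\mathbf{p}^T, \mathbf{v}^T]^T$, and the quadrotor acceleration $\mathbf{u}$ is the control input. Discretizing $\mathbf{u}$ generates motion primitives, whose end states serve as the nodes of hybrid A*.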

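The cost of each node is $f_c = g_c + h_c$. Here $g_c$ is the actual cost from the initial state $\mathbf{x}_0$ to the current state $\mathbf{x}_c$, and $h_c$ is the heuristic cost. $g_c$ balances the control input $\mathbf{u}(t)$ against the time $T$, so the trajectory minimizes a combined energy-time cost:

$$J = \int_0^T \|\mathbf{u}(t)\|^2\,dt + \rho T,$$

where $\rho$ is a weight. In practice, the cost is discretized as a sum over the expanded primitives, $g_c = \sum_{j} \left(\|\mathbf{u}_j\|^2 + \rho\right)\tau$, where each primitive applies a constant input $\mathbf{u}_j$ for a duration $\tau$.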
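The expansion step and its discretized cost can be sketched as follows. Several details are our assumptions, not the paper's:

- Each acceleration axis is sampled at `levels` evenly spaced values in $[-a_{max}, a_{max}]$.
- Every primitive lasts a fixed `tau`.
- Children whose velocity leaves the box $|v| \le v_{max}$ are pruned.
- The collision check against the occupancy map is omitted.

Each child adds $(\|\mathbf{u}\|^2 + \rho)\tau$ to its cost, which is exactly the integral of $\|\mathbf{u}\|^2 + \rho$ over one constant-input primitive.

```python
import itertools

import numpy as np


def expand(p, v, g, a_max=2.0, tau=0.2, rho=10.0, v_max=3.0, levels=3):
    """Generate children of a hybrid A* node using constant-acceleration primitives.

    p, v: position and velocity of the parent node (3-vectors)
    g:    accumulated cost g_c of the parent
    Returns a list of (p_child, v_child, u, g_child) tuples.
    All default parameter values are hypothetical.
    """
    p = np.asarray(p, dtype=float)
    v = np.asarray(v, dtype=float)
    samples = np.linspace(-a_max, a_max, levels)
    children = []
    for u in itertools.product(samples, repeat=3):
        u = np.array(u)
        v_next = v + u * tau
        if np.any(np.abs(v_next) > v_max):      # per-axis velocity feasibility
            continue
        p_next = p + v * tau + 0.5 * u * tau**2  # exact double-integrator update
        g_next = g + (u @ u + rho) * tau         # integral of ||u||^2 + rho over tau
        children.append((p_next, v_next, u, g_next))
    return children
```

With `levels=3`, each node has at most 27 children. Finer sampling yields smoother paths but grows the search tree cubically.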

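The heuristic cost $h_c$ estimates the cost from the current state $\mathbf{x}_c$ to the goal state $\mathbf{x}_g$. It is computed by solving the two-point optimal boundary value problem (OBVP) introduced in [17]. To make the searched path more reliable and forward-looking, the predicted target state from the predicted trajectory serves as the terminal state $\mathbf{x}_g$, and the cost between the current state and this terminal state is multiplied by a weight. In addition, a time term is added to speed up the search.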
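For reference, the sketch below shows a standard closed form for this kind of heuristic. It treats each axis as a double integrator and minimizes $\int_0^T\|\mathbf{a}\|^2dt + \rho T$ between two position-velocity states. It is our assumption that [17] uses exactly this cost. The target-state weighting and the extra time term described above are not included. For a fixed $T$, the optimal cost has the closed form given in the docstring. Setting the derivative with respect to $T$ to zero gives a quartic, and its positive real roots are the candidate durations.

```python
import numpy as np


def obvp_heuristic(p0, v0, p1, v1, rho=10.0):
    """Minimum of  int_0^T ||a(t)||^2 dt + rho * T  for a double integrator
    moving from (p0, v0) to (p1, v1). Returns (cost, T_opt).

    For fixed T, with dp = p1 - p0, the optimal control cost is
      J(T) = 12|dp|^2/T^3 - 12 dp.(v0+v1)/T^2 + 4(|v0|^2 + v0.v1 + |v1|^2)/T.
    Setting d(J + rho*T)/dT = 0 and multiplying by T^4 gives the quartic
      rho*T^4 - 4(|v0|^2 + v0.v1 + |v1|^2)*T^2 + 24 dp.(v0+v1)*T - 36|dp|^2 = 0.
    """
    p0, v0, p1, v1 = (np.asarray(x, dtype=float) for x in (p0, v0, p1, v1))
    dp = p1 - p0
    k_v = v0 @ v0 + v0 @ v1 + v1 @ v1
    k_pv = dp @ (v0 + v1)
    k_p = dp @ dp

    def total(T):
        return 12.0 * k_p / T**3 - 12.0 * k_pv / T**2 + 4.0 * k_v / T + rho * T

    roots = np.roots([rho, 0.0, -4.0 * k_v, 24.0 * k_pv, -36.0 * k_p])
    candidates = [r.real for r in roots if abs(r.imag) < 1e-8 and r.real > 1e-9]
    if not candidates:          # e.g. already at the goal state with zero velocity
        return 0.0, 0.0
    best = min(candidates, key=total)
    return float(total(best)), float(best)
```

For example, moving 1 m from rest to rest with $\rho = 1$ gives $T^* = \sqrt{6}$ s and a cost of $4\sqrt{6}/3$.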

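In this work, every node expansion also runs a collision check along the straight line from the node to the predicted target position, and the node is discarded if that line fails the check. A collision cost term is also added to the heuristic function.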
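The straight-line check can be sketched on a voxel occupancy grid. The sketch assumes a boolean grid with uniform resolution and samples the segment at half the voxel size. Sampling can miss thin corners, whereas an exact voxel-traversal algorithm such as Amanatides and Woo's would not. The same test can either discard a node or, through the hypothetical `occlusion_cost` with weight `w_occ`, add a penalty to the heuristic.

```python
import numpy as np


def segment_is_free(grid, origin, resolution, a, b, step=None):
    """Sample the segment a -> b; return False if any sample hits an occupied voxel.

    grid:       boolean array (True = occupied) indexed [ix, iy, iz]
    origin:     world coordinates of the corner of voxel (0, 0, 0)
    resolution: voxel edge length [m]
    Samples outside the map are treated as free (a hypothetical choice).
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    step = resolution / 2.0 if step is None else step
    n = max(int(np.ceil(np.linalg.norm(b - a) / step)), 1)
    for s in np.linspace(0.0, 1.0, n + 1):
        idx = np.floor((a + s * (b - a) - origin) / resolution).astype(int)
        inside_map = np.all(idx >= 0) and np.all(idx < grid.shape)
        if inside_map and grid[tuple(idx)]:
            return False
    return True


def occlusion_cost(grid, origin, resolution, node_pos, target_pos, w_occ=5.0):
    """Hypothetical penalty: w_occ if the line from the node to the predicted
    target position is blocked, otherwise 0."""
    free = segment_is_free(grid, origin, resolution, node_pos, target_pos)
    return 0.0 if free else w_occ
```

A soft penalty keeps the search complete when every candidate line of sight is blocked. Hard discarding shrinks the tree faster but can leave no valid node.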

Safe Tracking Trajectory Generation

The back-end optimization uses spatial-temporal polynomial trajectory generation.

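A flight corridor is generated from the searched path:

$$\mathcal{C} = \{\mathcal{C}_1, \mathcal{C}_2, \dots, \mathcal{C}_M\},$$

where each $\mathcal{C}_i$ is a bounded polyhedron:

$$\mathcal{C}_i = \{\mathbf{x} \in \mathbb{R}^3 \mid \mathbf{A}_i \mathbf{x} \le \mathbf{b}_i\}.$$

The start and end positions of the path lie in $\mathcal{C}_1$ and $\mathcal{C}_M$ respectively. Each intermediate waypoint lies in the intersection $\mathcal{C}_i \cap \mathcal{C}_{i+1}$ of consecutive polyhedra, which guarantees the safety of the trajectory.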
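The placement rule above can be checked with a small sketch that uses the H-representation $\{\mathbf{x} \mid \mathbf{A}\mathbf{x} \le \mathbf{b}\}$. For illustration, the polyhedra are axis-aligned boxes built by a hypothetical helper. Real corridors come from a convex decomposition of free space and generally have more faces, but the membership test is the same.

```python
import numpy as np


def box_polyhedron(lo, hi):
    """H-representation (A, b) of the axis-aligned box lo <= x <= hi (illustrative)."""
    lo = np.asarray(lo, dtype=float)
    hi = np.asarray(hi, dtype=float)
    d = len(lo)
    A = np.vstack([np.eye(d), -np.eye(d)])
    b = np.concatenate([hi, -lo])
    return A, b


def inside(poly, x, eps=1e-9):
    """True if A x <= b holds for every face (with a small tolerance)."""
    A, b = poly
    return bool(np.all(A @ np.asarray(x, dtype=float) <= b + eps))


def corridor_is_valid(corridor, start, goal, waypoints):
    """Start in C_1, goal in C_M, and the i-th intermediate waypoint in C_i ∩ C_{i+1}."""
    if not (inside(corridor[0], start) and inside(corridor[-1], goal)):
        return False
    if len(waypoints) != len(corridor) - 1:
        return False
    return all(inside(corridor[i], w) and inside(corridor[i + 1], w)
               for i, w in enumerate(waypoints))
```

A waypoint that lies in only one of two neighboring polyhedra would force the trajectory through unverified space, which is why the rule requires the intersection.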

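The objective includes a smoothness term $J_s$, a corridor constraint term $J_c$, and an efficiency term $J_t$:

$$J = J_s + J_c + J_t.$$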

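$J_s$ minimizes jerk, $J_s = \int_0^T \|\dddot{\mathbf{p}}(t)\|^2\,dt$, to make the trajectory as smooth as possible. $J_c$ is a logarithmic barrier that keeps each trajectory piece inside its polyhedron $\mathcal{C}_i = \{\mathbf{x} \mid \mathbf{A}_i \mathbf{x} \le \mathbf{b}_i\}$. Each point $\mathbf{p}$ constrained to $\mathcal{C}_i$ contributes

$$-\kappa\,\mathbf{1}^T \ln(\mathbf{b}_i - \mathbf{A}_i \mathbf{p}),$$

where $\kappa$ is a constant, $\mathbf{1}$ is the all-ones vector, and $\ln(\cdot)$ is the element-wise natural logarithm.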
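The barrier term and its gradient are sketched below for a batch of points. Which points are constrained (for example, samples or control points of each piece) is left open here, and `kappa` is a hypothetical value. The barrier grows without bound as a point approaches any face, so a gradient-based optimizer started inside the corridor stays inside. The sketch returns `inf` for infeasible points so that a line search can reject them.

```python
import numpy as np


def log_barrier(A, b, points, kappa=1.0):
    """Sum over points p of  -kappa * 1^T ln(b - A p)  for {x | A x < b}.
    Returns +inf if any point is on or outside the boundary."""
    slack = b[None, :] - np.asarray(points, dtype=float) @ A.T
    if np.any(slack <= 0.0):
        return np.inf
    return float(-kappa * np.sum(np.log(slack)))


def log_barrier_grad(A, b, points, kappa=1.0):
    """Gradient with respect to each point: kappa * A^T (1 / (b - A p)), row-wise."""
    slack = b[None, :] - np.asarray(points, dtype=float) @ A.T
    return kappa * (1.0 / slack) @ A
```

At the center of a symmetric polyhedron the gradient vanishes. Near a face it points outward, so descent pushes the point back toward the interior.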

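The last term, $J_t$, adjusts the aggressiveness of the whole trajectory. It combines two parts. A time term keeps the total duration $T$ of the trajectory from becoming too large. A penalty on velocities and accelerations that exceed the maximum velocity $v_{max}$ and the maximum acceleration $a_{max}$ keeps the tracking trajectory from becoming too aggressive.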
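For one 1-D polynomial piece, the smoothness and efficiency terms can be sketched as follows. The jerk integral is computed exactly with NumPy's `Polynomial` class. The efficiency term shown here ($\rho_T T$ plus a squared penalty on sampled excess speed and acceleration) is a **hypothetical** form consistent with the description above, not the paper's exact formula. The weights `rho_t` and `w_dyn` and the sample count are illustrative. A full trajectory would sum these terms over pieces and axes.

```python
import numpy as np
from numpy.polynomial import Polynomial


def jerk_cost(coeffs, T):
    """J_s for one 1-D piece p(t) = sum_k coeffs[k] * t^k on [0, T]:
    the exact integral of (d^3 p / dt^3)^2."""
    jerk = Polynomial(coeffs).deriv(3)
    antiderivative = (jerk * jerk).integ()
    return float(antiderivative(T) - antiderivative(0.0))


def efficiency_cost(coeffs, T, v_max, a_max, rho_t=1.0, w_dyn=100.0, samples=50):
    """Hypothetical J_t: rho_t * T plus a squared penalty on how far the
    sampled |v| and |a| exceed v_max and a_max."""
    p = Polynomial(coeffs)
    t = np.linspace(0.0, T, samples)
    v, a = p.deriv(1)(t), p.deriv(2)(t)
    over_v = np.clip(np.abs(v) - v_max, 0.0, None)
    over_a = np.clip(np.abs(a) - a_max, 0.0, None)
    return rho_t * T + w_dyn * float(np.sum(over_v**2) + np.sum(over_a**2))
```

The time term alone would shrink $T$ toward zero, and the feasibility penalty alone would stretch it. Their balance sets how aggressively the quadrotor chases the target.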

Results

Implementation Details

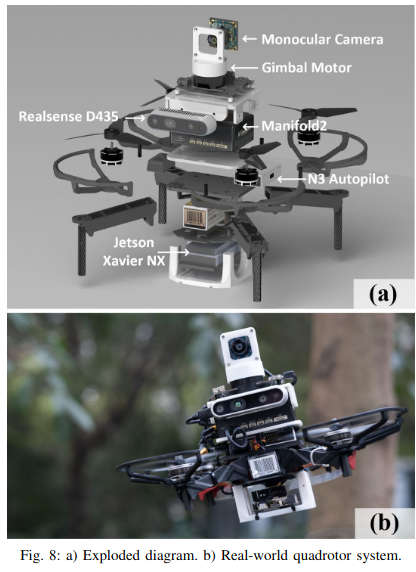

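The quadrotor used in the real-world experiments is shown in the figure. It carries a D435 depth camera for localization and mapping. Localization, mapping, and motion planning run on an i7-8550 CPU, and target detection runs on a Jetson Xavier NX with a 21 TOPS GPU. A brushless motor drives the gimbal. The quadrotor measures … mm and weighs 1.4 kg, including a 4S battery. The target detection module takes 40 ms on average and the motion planning module takes 20 ms. The replanning frequency is about 13 Hz.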

Real-World Experiments

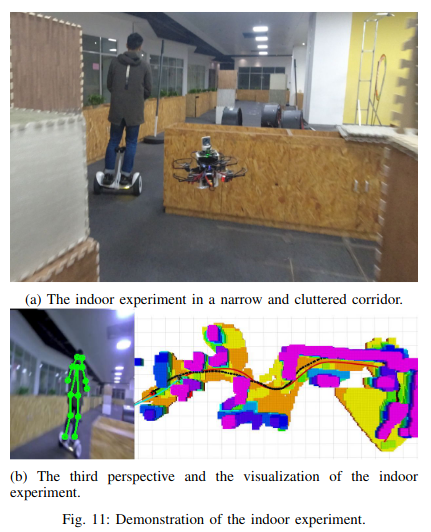

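Experiment 1 (see figure): the target rides a self-balancing scooter through a narrow, cluttered corridor. The quadrotor must react quickly and replan its trajectory whenever danger arises. It tracks the target safely and at close range in this narrow environment.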

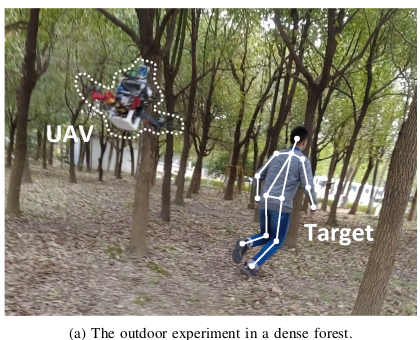

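Experiment 2 (see figure): the quadrotor loses the target after a sudden turn. The target then turns back and quickly moves off in another direction, which makes it hard to rediscover. The target is nevertheless relocalized, because the gimbal camera searches in all directions while the quadrotor is moving.

Experiment 3: in a dense outdoor forest (Fig. 1a), the target weaves between trees. Leaves and dust blown up by strong wind degrade the quadrotor's self-localization and mapping. Even so, the quadrotor reaches speeds of 3 m/s while tracking the target at close range.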

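These experiments show that the system stays robust and safe across several challenging tasks. More details are available in the accompanying video.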

Comparison

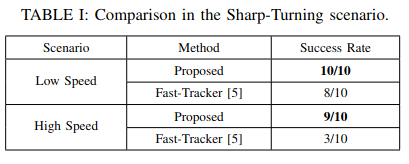

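With Fast-Tracker 1.0, a sudden turn of the target can lead to a collision with obstacles. Across 10 tests, the trackable target speed ranged from 0.85 m/s at minimum to 1.5 m/s at maximum, and the system could not always keep tracking the target. The table shows that our method detects and tracks the target better. With active vision, the quadrotor keeps detecting the target even during jerky maneuvers. In addition, the obstacle-aware path search tends to find paths from which the target is easier to see.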

Conclusion

Omitted.