Flink client logs

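If the FLINK_HOME environment variable is set, the client logs are written to the log directory under the directory that FLINK_HOME points to. Otherwise, they are written to the log directory of the installed PyFlink module. You can find that path with the following command:

```bash
$ python -c "import pyflink;import os;print(os.path.dirname(os.path.abspath(pyflink.__file__))+'/log')"
```

Here I have set FLINK_HOME, so the Flink client log is at $FLINK_HOME/log/flink-root-client-$HOSTNAME.log: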
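The lookup rule above can be written out as a small Python sketch. The function names `client_log_dir` and `client_log_file` are hypothetical. The file name pattern `flink-<user>-client-<host>.log` is inferred from the example path, not taken from Flink's own scripts. Treating an empty FLINK_HOME as unset is also an assumption.

```python
import getpass
import os
import socket
from typing import Mapping, Optional


def client_log_dir(env: Optional[Mapping[str, str]] = None,
                   pyflink_dir: Optional[str] = None) -> str:
    """Return $FLINK_HOME/log if FLINK_HOME is set, else <pyflink module dir>/log."""
    env = os.environ if env is None else env
    flink_home = env.get("FLINK_HOME")
    if flink_home:  # assumption: an empty value counts as "not set"
        return os.path.join(flink_home, "log")
    if pyflink_dir is None:
        # Same lookup as the python -c one-liner; only needed when FLINK_HOME is unset.
        import pyflink
        pyflink_dir = os.path.dirname(os.path.abspath(pyflink.__file__))
    return os.path.join(pyflink_dir, "log")


def client_log_file(log_dir: str, user: Optional[str] = None,
                    host: Optional[str] = None) -> str:
    """Build the log file path using the (assumed) flink-<user>-client-<host>.log pattern."""
    user = user or getpass.getuser()
    host = host or socket.gethostname()
    return os.path.join(log_dir, f"flink-{user}-client-{host}.log")
```

On this machine, `client_log_file(client_log_dir(), user="root", host="node01")` would resolve to $FLINK_HOME/log/flink-root-client-node01.log. That is the file tailed below.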

```bash
root@node01:/usr/local/flink# tail /usr/local/flink-1.14.3/log/flink-root-client-node01.log
2022-02-11 17:03:16,522 INFO org.apache.flink.shaded.curator4.org.apache.curator.framework.imps.CuratorFrameworkImpl [] - Starting
2022-02-11 17:03:16,522 INFO org.apache.flink.shaded.zookeeper3.org.apache.zookeeper.ZooKeeper [] - Initiating client connection, connectString=node01:2181,node02:2181,node03:2181,node04:2181,node05:2181 sessionTimeout=60000 watcher=org.apache.flink.shaded.curator4.org.apache.curator.ConnectionState@62f11ebb
2022-02-11 17:03:16,524 INFO org.apache.flink.shaded.curator4.org.apache.curator.framework.imps.CuratorFrameworkImpl [] - Default schema
2022-02-11 17:03:16,524 WARN org.apache.flink.shaded.zookeeper3.org.apache.zookeeper.ClientCnxn [] - SASL configuration failed: javax.security.auth.login.LoginException: No JAAS configuration section named 'Client' was found in specified JAAS configuration file: '/tmp/jaas-871643657761434662.conf'. Will continue connection to Zookeeper server without SASL authentication, if Zookeeper server allows it.
2022-02-11 17:03:16,524 INFO org.apache.flink.shaded.zookeeper3.org.apache.zookeeper.ClientCnxn [] - Opening socket connection to server node02/172.31.1.17:2181
2022-02-11 17:03:16,525 ERROR org.apache.flink.shaded.curator4.org.apache.curator.ConnectionState [] - Authentication failed
2022-02-11 17:03:16,525 INFO org.apache.flink.runtime.leaderretrieval.DefaultLeaderRetrievalService [] - Starting DefaultLeaderRetrievalService with ZookeeperLeaderRetrievalDriver{connectionInformationPath='/leader/rest_server/connection_info'}.
2022-02-11 17:03:16,525 INFO org.apache.flink.shaded.zookeeper3.org.apache.zookeeper.ClientCnxn [] - Socket connection established to node02/172.31.1.17:2181, initiating session
2022-02-11 17:03:16,529 INFO org.apache.flink.shaded.zookeeper3.org.apache.zookeeper.ClientCnxn [] - Session establishment complete on server node02/172.31.1.17:2181, sessionid = 0x20000f82b4b0037, negotiated timeout = 40000
2022-02-11 17:03:16,529 INFO org.apache.flink.shaded.curator4.org.apache.curator.framework.state.ConnectionStateManager [] - State change: CONNECTED
```

Logs for jobs submitted through the Flink command line are saved in the directory above.

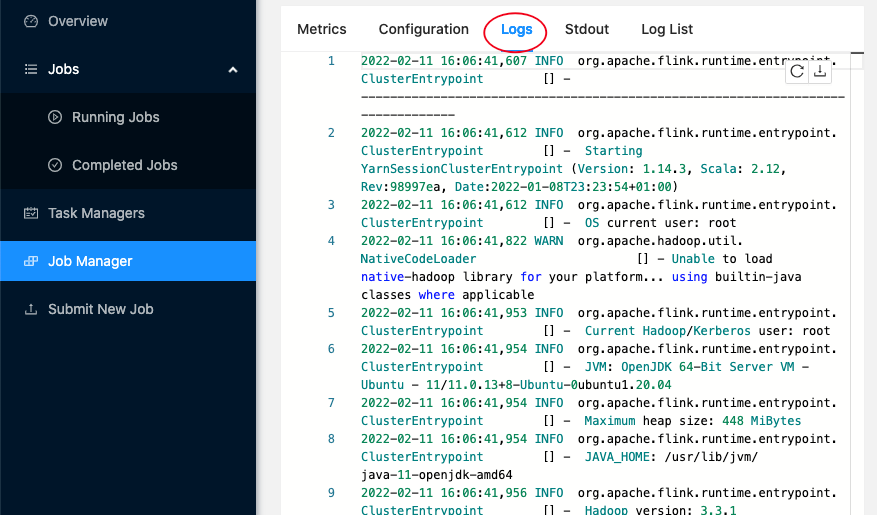
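The client log is mostly INFO lines. The sketch below pulls out only the WARN and ERROR entries; in the sample above, those are the SASL warning and the `Authentication failed` error.

The regular expression assumes the line layout seen above: timestamp, level, logger, `[...]`, `-`, message. Lines that do not match, such as stack-trace continuation lines, are skipped. `problems` and `LogEntry` are hypothetical names.

```python
import re
from typing import Iterable, Iterator, NamedTuple, Tuple

# Assumed layout, matching the client log above:
# "<date> <time> <LEVEL> <logger> [<context>] - <message>"
LINE_RE = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3})\s+"
    r"(?P<level>TRACE|DEBUG|INFO|WARN|ERROR)\s+"
    r"(?P<logger>\S+)\s+\[[^\]]*\]\s+-\s+(?P<msg>.*)$"
)


class LogEntry(NamedTuple):
    ts: str
    level: str
    logger: str
    msg: str


def problems(lines: Iterable[str],
             levels: Tuple[str, ...] = ("WARN", "ERROR")) -> Iterator[LogEntry]:
    """Yield parsed entries whose level is in `levels`; non-matching lines are skipped."""
    for line in lines:
        m = LINE_RE.match(line.rstrip("\n"))
        if m and m.group("level") in levels:
            yield LogEntry(m.group("ts"), m.group("level"),
                           m.group("logger"), m.group("msg"))
```

Pass it an open file object, e.g. `problems(open(path))`, and print the entries it yields.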

JobManager logs

First, look up the log directory configured in Hadoop's yarn-site.xml. The job's logs are under it, here /data/hadoop/yarn/log/:

```xml
<property>
  <name>yarn.nodemanager.log-dirs</name>
  <value>/data/hadoop/yarn/log</value>
</property>
```
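You can read this property from yarn-site.xml with Python's standard `xml.etree.ElementTree` instead of opening the file by hand. This is a sketch, and the helper names are hypothetical. YARN allows `yarn.nodemanager.log-dirs` to hold several comma-separated directories, so the result is a list.

```python
import xml.etree.ElementTree as ET
from typing import List, Optional


def yarn_property(xml_text: str, name: str) -> Optional[str]:
    """Return the <value> of the <property> whose <name> matches, or None if absent."""
    root = ET.fromstring(xml_text)
    # iter() also visits the root itself, so a bare <property> fragment works too.
    for prop in root.iter("property"):
        if (prop.findtext("name") or "").strip() == name:
            return (prop.findtext("value") or "").strip()
    return None


def nodemanager_log_dirs(xml_text: str) -> List[str]:
    """Split yarn.nodemanager.log-dirs into individual directories."""
    value = yarn_property(xml_text, "yarn.nodemanager.log-dirs")
    if not value:
        return []
    return [d.strip() for d in value.split(",") if d.strip()]
```

Where yarn-site.xml lives depends on your Hadoop installation. Pass its contents, e.g. `nodemanager_log_dirs(open(path).read())`.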

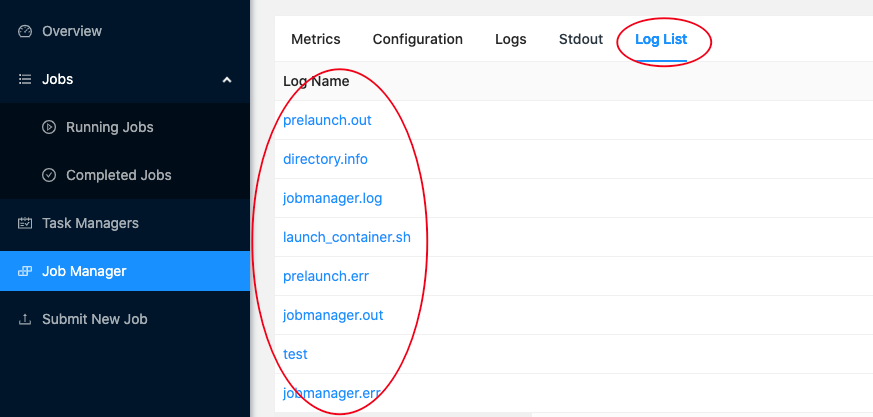

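The same files can also be viewed on the Job Manager page of the Flink Web UI. Its file list matches the container's log directory on disk; even the test file I created there by hand shows up: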

```bash
$ cd /data/hadoop/yarn/log/application_1642755490153_0047/container_1642755490153_0047_01_000001
$ ll
total 108
drwx--x--- 2 root root 4096 Feb 11 16:36 ./
drwx--x--- 3 root root 4096 Feb 11 16:36 ../
-rw-r--r-- 1 root root 5536 Feb 11 16:06 directory.info
-rw-r--r-- 1 root root 557 Feb 11 16:06 jobmanager.err
-rw-r--r-- 1 root root 68230 Feb 11 16:06 jobmanager.log
-rw-r--r-- 1 root root 0 Feb 11 16:06 jobmanager.out
-rw-r----- 1 root root 15589 Feb 11 16:06 launch_container.sh
-rw-r--r-- 1 root root 0 Feb 11 16:06 prelaunch.err
-rw-r--r-- 1 root root 100 Feb 11 16:06 prelaunch.out
-rw-r--r-- 1 root root 0 Feb 11 16:36 test
```
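The directory in this listing follows the pattern `<yarn.nodemanager.log-dirs>/<application ID>/<container ID>`. The application ID can be read off the container ID: `container_1642755490153_0047_01_000001` belongs to `application_1642755490153_0047`.

The sketch below builds the directory from a container ID. The function names are hypothetical. The optional `e<epoch>_` segment in the regex covers container IDs that some clusters produce.

```python
import os
import re

# container_[e<epoch>_]<clusterTimestamp>_<appSeq>_<attemptSeq>_<containerSeq>
CONTAINER_RE = re.compile(r"^container_(?:e\d+_)?(\d+)_(\d+)_(\d+)_(\d+)$")


def application_id(container_id: str) -> str:
    """Derive the owning application ID from a YARN container ID."""
    m = CONTAINER_RE.match(container_id)
    if not m:
        raise ValueError(f"not a YARN container ID: {container_id!r}")
    cluster_ts, app_seq = m.group(1), m.group(2)
    return f"application_{cluster_ts}_{app_seq}"


def container_log_dir(log_dir: str, container_id: str) -> str:
    """Join <log dir>/<application ID>/<container ID>."""
    return os.path.join(log_dir, application_id(container_id), container_id)
```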

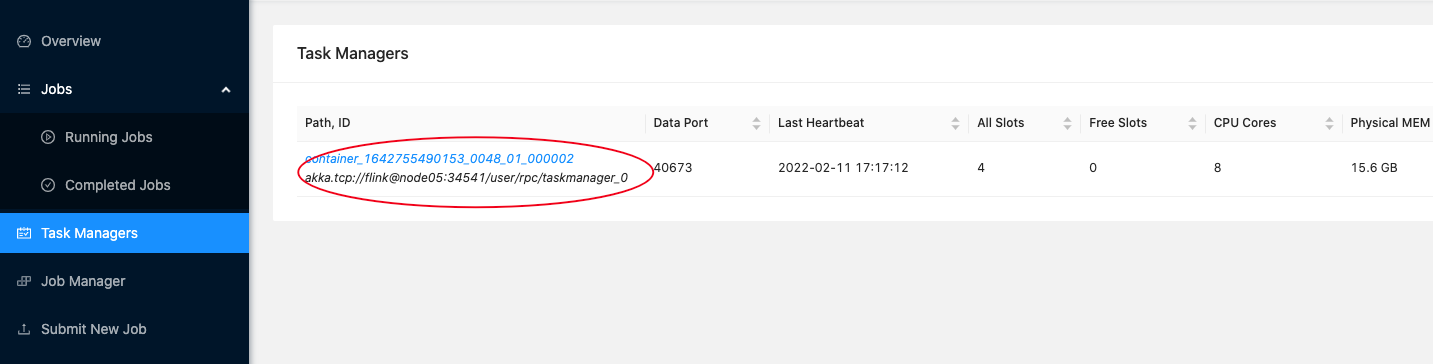

TaskManager logs

TaskManager logs live under the same path as the JobManager's, /data/hadoop/yarn/log/; only the CONTAINER_ID differs.
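All containers of one application sit side by side under the same application directory. The quickest way to tell the JobManager container from the TaskManager containers is to check which log file each one holds. The sketch below makes two assumptions:

- The JobManager container contains `jobmanager.log`, as in the listing above.
- TaskManager containers contain `taskmanager.log`.

`containers_by_role` is a hypothetical helper.

```python
import os
from typing import Dict


def containers_by_role(app_log_dir: str) -> Dict[str, str]:
    """Map each container_* directory under one application to a guessed role.

    Assumption: jobmanager.log marks the JobManager container and
    taskmanager.log marks a TaskManager container; anything else is "unknown".
    """
    roles: Dict[str, str] = {}
    for name in sorted(os.listdir(app_log_dir)):
        path = os.path.join(app_log_dir, name)
        if not (name.startswith("container_") and os.path.isdir(path)):
            continue
        files = set(os.listdir(path))
        if "jobmanager.log" in files:
            roles[name] = "jobmanager"
        elif "taskmanager.log" in files:
            roles[name] = "taskmanager"
        else:
            roles[name] = "unknown"
    return roles
```

For example, run `containers_by_role("/data/hadoop/yarn/log/application_1642755490153_0047")` on a NodeManager host. Each NodeManager only holds the logs of the containers it ran, so some TaskManager directories may be on other nodes.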

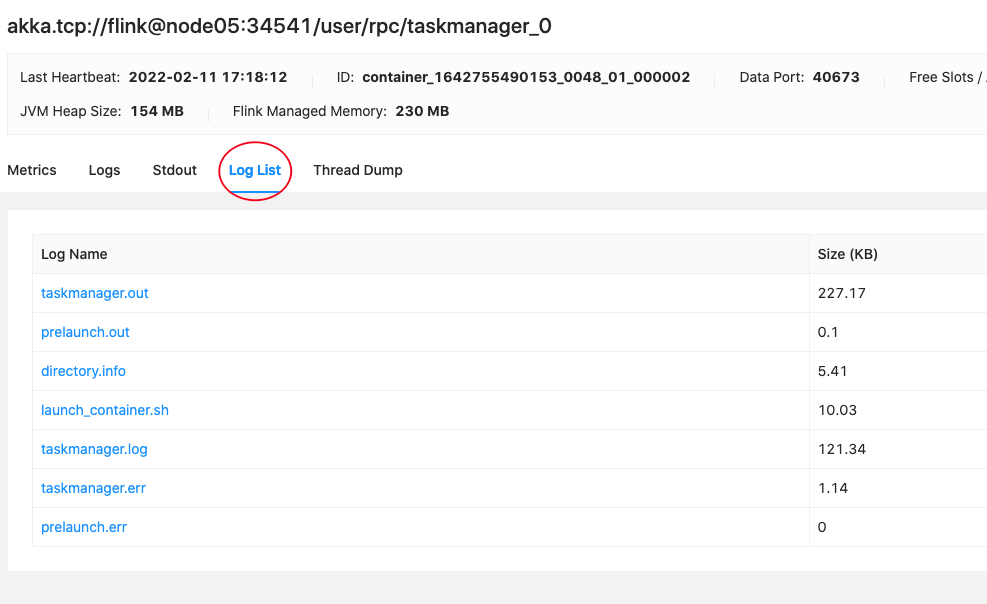

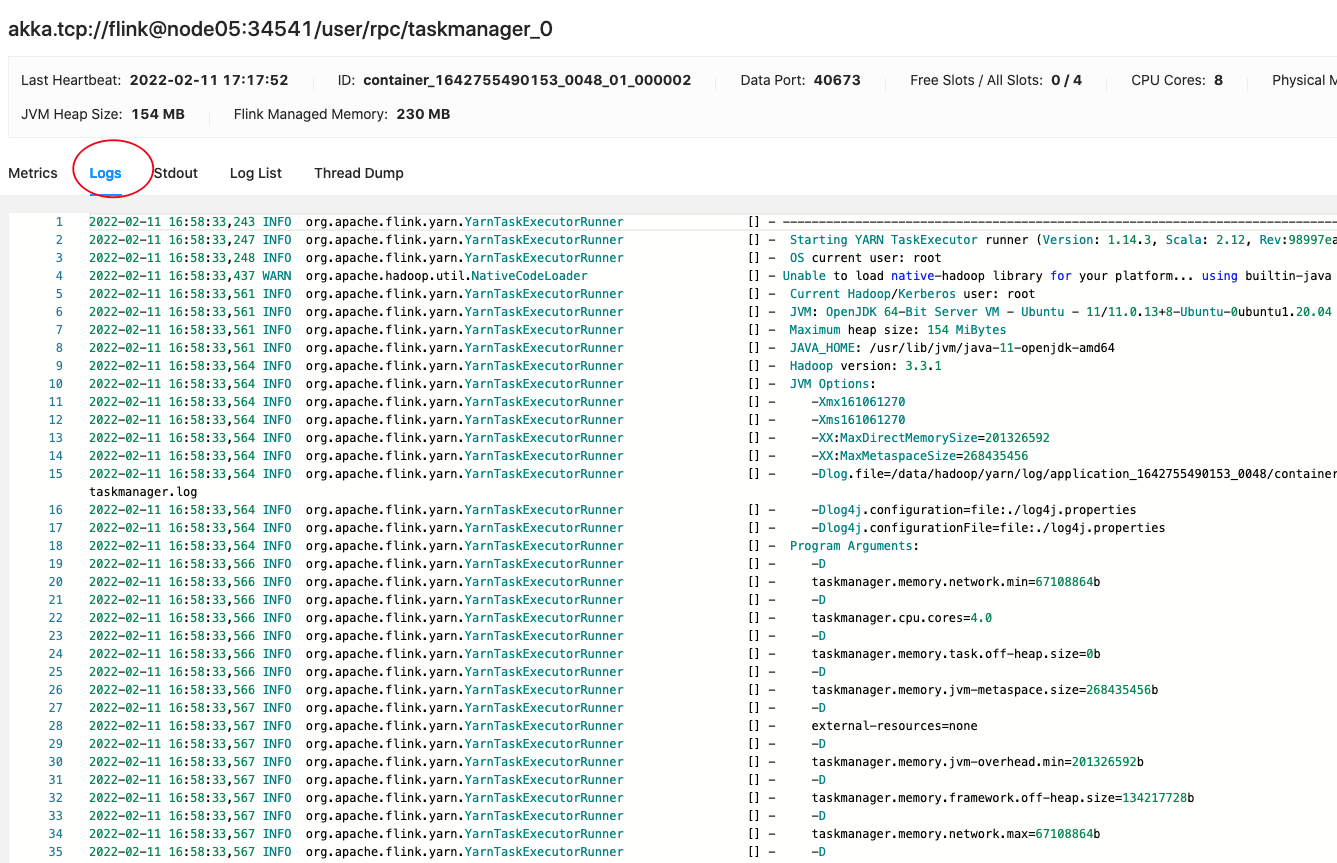

Under Log List you can see all of the TaskManager's log files; click a file to open it.