适用数据类型:标称型

使用Python进行文本分类:

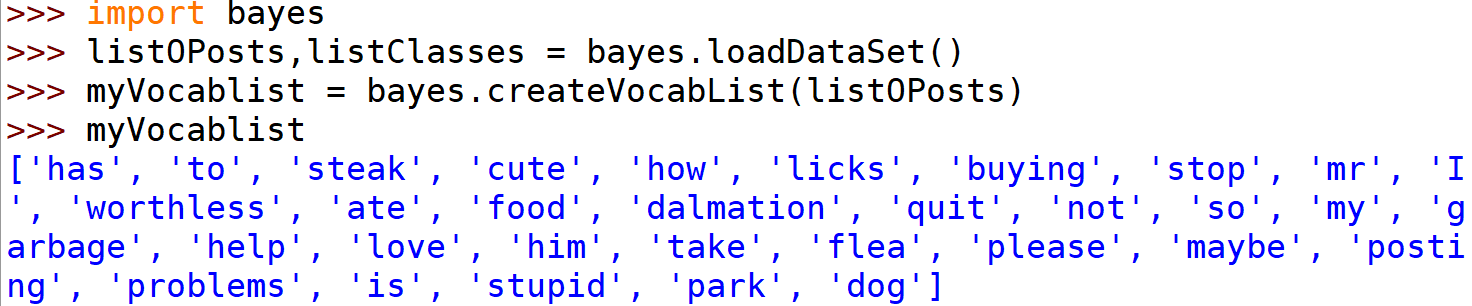

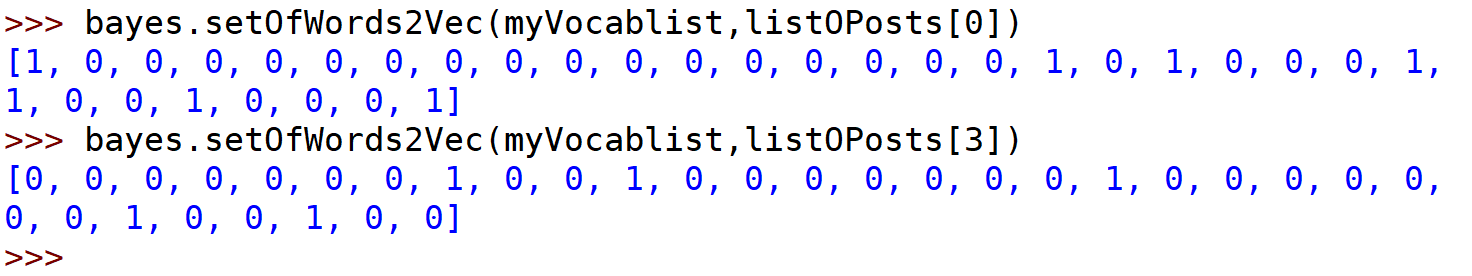

从文本中构建词向量:

def loadDataSet():postingList = [['my', 'dog', 'has', 'flea', 'problems', 'help', 'please'],['maybe', 'not', 'take', 'him', 'to', 'dog', 'park', 'stupid'],['my', 'dalmation', 'is', 'so', 'cute', 'I', 'love', 'him'],['stop', 'posting', 'stupid', 'worthless', 'garbage'],['mr', 'licks', 'ate', 'my', 'steak', 'how', 'to', 'stop', 'him'],['quit', 'buying', 'worthless', 'dog', 'food', 'stupid']]classVec = [0, 1, 0, 1, 0, 1] #1 is abusive, 0 notreturn postingList, classVec#利用集合set创造不重复的词组列表:def createVocabList(dataSet):vocabSet = set([]) #create empty setfor document in dataSet:vocabSet = vocabSet | set(document) #union of the two setsreturn list(vocabSet)def setOfWords2Vec(vocabList, inputSet):returnVec = [0]*len(vocabList)for word in inputSet:if word in vocabList:returnVec[vocabList.index(word)] = 1else: print("the word: %s is not in my Vocabulary!" % word)return returnVec

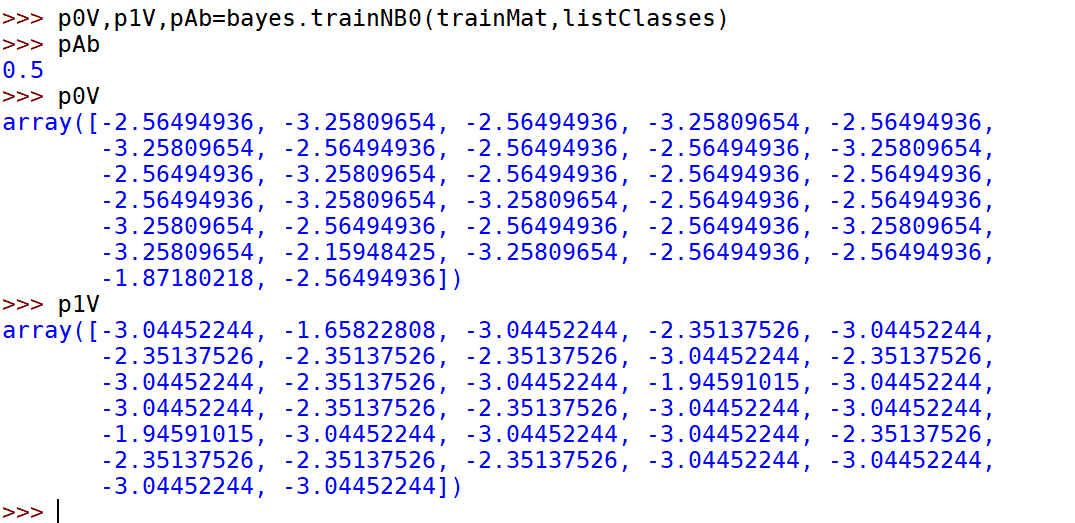

import numpy as npdef trainNB0(trainMatrix, trainCategory):numTrainDocs = len(trainMatrix)numWords = len(trainMatrix[0])pAbusive = sum(trainCategory)/float(numTrainDocs)p0Num = np.ones(numWords); p1Num = np.ones(numWords) #change to np.ones()p0Denom = 2.0; p1Denom = 2.0 #change to 2.0for i in range(numTrainDocs):if trainCategory[i] == 1:p1Num += trainMatrix[i]p1Denom += sum(trainMatrix[i])else:p0Num += trainMatrix[i]p0Denom += sum(trainMatrix[i])p1Vect = np.log(p1Num/p1Denom) #change to np.log()p0Vect = np.log(p0Num/p0Denom) #change to np.log()return p0Vect, p1Vect, pAbusive

修改分类器:

下溢出问题:使用对乘积取自然对数的方法。

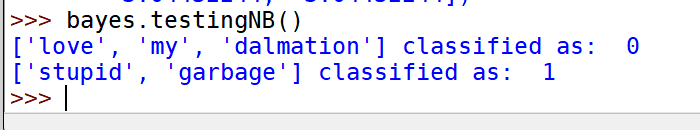

def classifyNB(vec2Classify, p0Vec, p1Vec, pClass1):p1 = sum(vec2Classify * p1Vec) + np.log(pClass1) #element-wise multp0 = sum(vec2Classify * p0Vec) + np.log(1.0 - pClass1)if p1 > p0:return 1else:return 0def testingNB():listOPosts, listClasses = loadDataSet()myVocabList = createVocabList(listOPosts)trainMat = []for postinDoc in listOPosts:trainMat.append(setOfWords2Vec(myVocabList, postinDoc))p0V, p1V, pAb = trainNB0(np.array(trainMat), np.array(listClasses))testEntry = ['love', 'my', 'dalmation']thisDoc = np.array(setOfWords2Vec(myVocabList, testEntry))print(testEntry, 'classified as: ', classifyNB(thisDoc, p0V, p1V, pAb))testEntry = ['stupid', 'garbage']thisDoc = np.array(setOfWords2Vec(myVocabList, testEntry))print(testEntry, 'classified as: ', classifyNB(thisDoc, p0V, p1V, pAb))

def bagOfWords2VecMN(vocabList, inputSet):returnVec = [0]*len(vocabList)for word in inputSet:if word in vocabList:returnVec[vocabList.index(word)] += 1return returnVec

(词集模型:每个词出现或不出现;词袋模型,每个词出现的次数多少)

示例:使用朴素贝叶斯过滤垃圾邮件

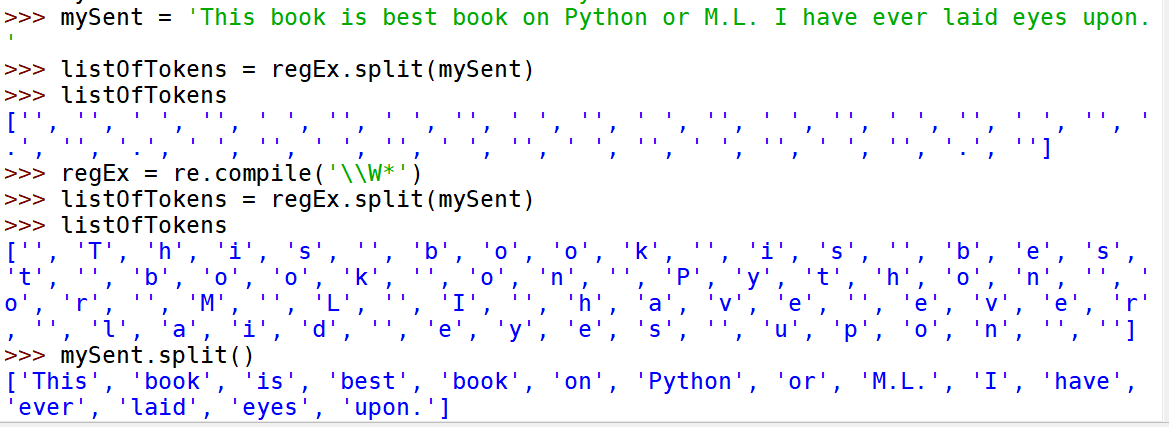

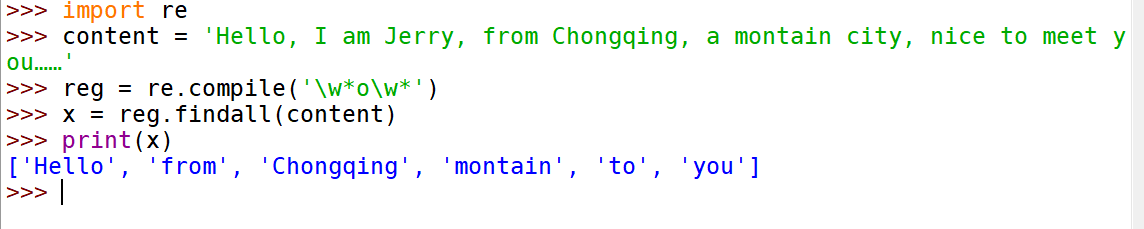

问题:为什么没办法实现字符的分隔

可以换一种方法

https://www.cnblogs.com/xiaokuangnvhai/p/11213308.html

https://www.cnblogs.com/xp1315458571/p/13720333.html

def textParse(bigString): #input is big string, #output is word listimport relistOfTokens = re.split(r'\W+', bigString)return [tok.lower() for tok in listOfTokens if len(tok) > 2]def spamTest():docList = []; classList = []; fullText = []for i in range(1, 26):wordList = textParse(open('email/spam/%d.txt' % i, encoding="ISO-8859-1").read())docList.append(wordList)fullText.extend(wordList)classList.append(1)wordList = textParse(open('email/ham/%d.txt' % i, encoding="ISO-8859-1").read())docList.append(wordList)fullText.extend(wordList)classList.append(0)vocabList = createVocabList(docList)#create vocabularytrainingSet = range(50); testSet = [] #create test setfor i in range(10):randIndex = int(np.random.uniform(0, len(trainingSet)))testSet.append(trainingSet[randIndex])del(list(trainingSet)[randIndex])trainMat = []; trainClasses = []for docIndex in trainingSet:#train the classifier (get probs) trainNB0trainMat.append(bagOfWords2VecMN(vocabList, docList[docIndex]))trainClasses.append(classList[docIndex])p0V, p1V, pSpam = trainNB0(np.array(trainMat), np.array(trainClasses))errorCount = 0for docIndex in testSet: #classify the remaining itemswordVector = bagOfWords2VecMN(vocabList, docList[docIndex])if classifyNB(np.array(wordVector), p0V, p1V, pSpam) != classList[docIndex]:errorCount += 1print("classification error", docList[docIndex])print('the error rate is: ', float(errorCount)/len(testSet))#return vocabList, fullText