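Separating Data with the Maximum Margin

N-dimensional data is separated by an (N-1)-dimensional object, called the separating hyperplane.

The farther a data point lies from the hyperplane, the more confidence we can place in its predicted class.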
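To make "distance from the hyperplane" concrete, here is a minimal sketch that computes the signed distance of a few points from a hyperplane w·x + b = 0. The names `signed_distance`, `w`, `b`, and the sample points are illustrative assumptions, not part of the original notes.

```python
import numpy as np

def signed_distance(X, w, b):
    """Signed distance of each row of X from the hyperplane w.x + b = 0."""
    X = np.asarray(X, dtype=float)
    w = np.asarray(w, dtype=float)
    return (X @ w + b) / np.linalg.norm(w)

# Hypothetical 2-D example: the separating line x1 + x2 - 2 = 0
w = np.array([1.0, 1.0])
b = -2.0
points = np.array([[3.0, 3.0], [1.0, 1.0], [0.0, 0.0]])
distances = signed_distance(points, w, b)
print(distances)
```

The sign tells which side of the hyperplane a point is on, and the magnitude is how far away it lies; a point exactly on the hyperplane has distance 0.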

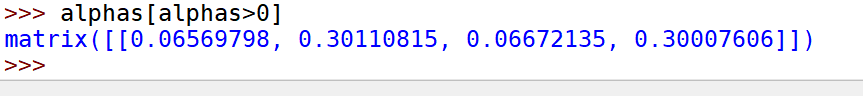

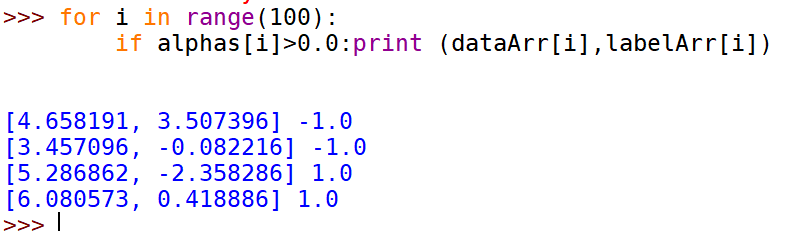

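Support vectors are the points closest to the separating hyperplane. The goal is to maximize the distance from the support vectors to the separating hyperplane.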
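Written out, this goal is usually stated as the optimization problem below. It is the standard textbook formulation rather than something spelled out in these notes; the symbols are assumptions chosen to match the code (labels $y_i \in \{-1, +1\}$, multipliers $\alpha_i$, and the constant $C$ passed to the SMO routines).

```latex
% Maximize the margin of the closest points (the support vectors):
\[
\arg\max_{w,b}\left\{\frac{1}{\lVert w\rVert}\,\min_{n}\; y_n\,(w^{T}x_n+b)\right\}
\]

% Equivalent dual problem with slack, which SMO solves:
\[
\max_{\alpha}\;\sum_{i=1}^{m}\alpha_i
-\frac{1}{2}\sum_{i=1}^{m}\sum_{j=1}^{m} y_i\,y_j\,\alpha_i\,\alpha_j\,\langle x_i,x_j\rangle
\quad\text{subject to}\quad 0\le\alpha_i\le C,\qquad \sum_{i=1}^{m}\alpha_i\,y_i=0
\]
```

Only points with $\alpha_i > 0$ contribute to the solution; those are the support vectors.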

Finding the Maximum Margin

An SVM is primarily a binary classifier; handling more than two classes requires modifying the code.
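One common way to reuse a binary classifier for several classes is to relabel the data as "one class versus everything else". The helper below is a hypothetical sketch of that relabeling step only (the name `one_vs_rest_labels` is an assumption); it does not train anything.

```python
def one_vs_rest_labels(labels, positive_class):
    """Map multi-class labels to +1 (positive_class) and -1 (every other class)."""
    return [1 if label == positive_class else -1 for label in labels]

# Hypothetical digit labels, reduced to a "9 versus the rest" problem
digits = [0, 9, 3, 9, 1]
binary = one_vs_rest_labels(digits, positive_class=9)
print(binary)
```

Training one binary SVM per class on such relabeled data, then picking the class with the largest decision value, is one possible multi-class extension.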

The SMO Algorithm for Efficient Optimization

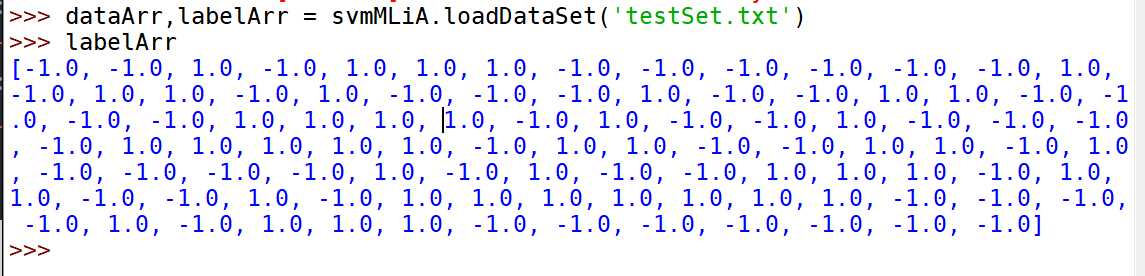

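    from numpy import *  # provides mat, zeros, shape, multiply, nonzero, exp, sign and numpy's random module

    def loadDataSet(fileName):
        # each line holds two tab-separated features followed by the class label
        dataMat = []; labelMat = []
        with open(fileName) as fr:
            for line in fr.readlines():
                lineArr = line.strip().split('\t')
                dataMat.append([float(lineArr[0]), float(lineArr[1])])
                labelMat.append(float(lineArr[2]))
        return dataMat,labelMat

    def selectJrand(i,m):
        j=i #we want to select any J not equal to i
        while (j==i):
            j = int(random.uniform(0,m))
        return j

    def clipAlpha(aj,H,L):
        # keep aj inside the interval [L, H]
        if aj > H:
            aj = H
        if L > aj:
            aj = L
        return aj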

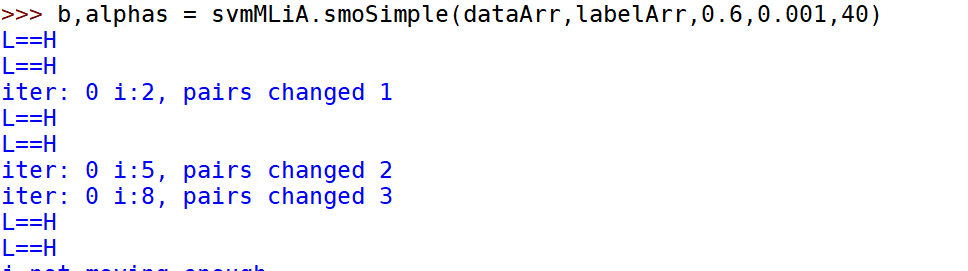

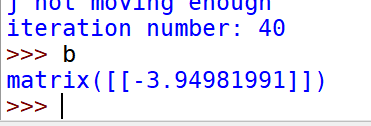

    def smoSimple(dataMatIn, classLabels, C, toler, maxIter):
        dataMatrix = mat(dataMatIn); labelMat = mat(classLabels).transpose()
        b = 0; m,n = shape(dataMatrix)
        alphas = mat(zeros((m,1)))
        iter = 0
        while (iter < maxIter):
            alphaPairsChanged = 0
            for i in range(m):
                fXi = float(multiply(alphas,labelMat).T*(dataMatrix*dataMatrix[i,:].T)) + b
                Ei = fXi - float(labelMat[i])
                #the if checks whether an example violates the KKT conditions
                if ((labelMat[i]*Ei < -toler) and (alphas[i] < C)) or ((labelMat[i]*Ei > toler) and (alphas[i] > 0)):
                    j = selectJrand(i,m)
                    fXj = float(multiply(alphas,labelMat).T*(dataMatrix*dataMatrix[j,:].T)) + b
                    Ej = fXj - float(labelMat[j])
                    alphaIold = alphas[i].copy(); alphaJold = alphas[j].copy()
                    if (labelMat[i] != labelMat[j]):
                        L = max(0, alphas[j] - alphas[i])
                        H = min(C, C + alphas[j] - alphas[i])
                    else:
                        L = max(0, alphas[j] + alphas[i] - C)
                        H = min(C, alphas[j] + alphas[i])
                    if L==H: print("L==H"); continue
                    eta = 2.0 * dataMatrix[i,:]*dataMatrix[j,:].T - dataMatrix[i,:]*dataMatrix[i,:].T - dataMatrix[j,:]*dataMatrix[j,:].T
                    if eta >= 0: print("eta>=0"); continue
                    alphas[j] -= labelMat[j]*(Ei - Ej)/eta
                    alphas[j] = clipAlpha(alphas[j],H,L)
                    if (abs(alphas[j] - alphaJold) < 0.00001): print("j not moving enough"); continue
                    #update i by the same amount as j; the update is in the opposite direction
                    alphas[i] += labelMat[j]*labelMat[i]*(alphaJold - alphas[j])
                    b1 = b - Ei- labelMat[i]*(alphas[i]-alphaIold)*dataMatrix[i,:]*dataMatrix[i,:].T - labelMat[j]*(alphas[j]-alphaJold)*dataMatrix[i,:]*dataMatrix[j,:].T
                    b2 = b - Ej- labelMat[i]*(alphas[i]-alphaIold)*dataMatrix[i,:]*dataMatrix[j,:].T - labelMat[j]*(alphas[j]-alphaJold)*dataMatrix[j,:]*dataMatrix[j,:].T
                    if (0 < alphas[i]) and (C > alphas[i]): b = b1
                    elif (0 < alphas[j]) and (C > alphas[j]): b = b2
                    else: b = (b1 + b2)/2.0
                    alphaPairsChanged += 1
                    print("iter: %d i:%d, pairs changed %d" % (iter,i,alphaPairsChanged))
            if (alphaPairsChanged == 0): iter += 1
            else: iter = 0
            print("iteration number: %d" % iter)
        return b,alphas
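The step in `smoSimple` that is easiest to lose in the flattened code is the computation of the bounds `L` and `H`, which keep the updated pair of alphas inside `[0, C]` while preserving the constraint that the label-weighted alphas sum to zero. The sketch below isolates that step with plain floats; `compute_bounds` and `clip` are hypothetical names, and the numbers are made up for illustration.

```python
def compute_bounds(alpha_i, alpha_j, y_i, y_j, C):
    """Return (low, high), the allowed range for the new alpha_j."""
    if y_i != y_j:
        low = max(0.0, alpha_j - alpha_i)
        high = min(C, C + alpha_j - alpha_i)
    else:
        low = max(0.0, alpha_j + alpha_i - C)
        high = min(C, alpha_j + alpha_i)
    return low, high

def clip(value, low, high):
    """Clamp value into [low, high], mirroring what clipAlpha does."""
    return max(low, min(value, high))

# Hypothetical values: opposite labels, C = 1.0
low, high = compute_bounds(alpha_i=0.2, alpha_j=0.5, y_i=1, y_j=-1, C=1.0)
clipped = clip(1.4, low, high)  # an unconstrained update that overshoots
print(low, high, clipped)
```

When `low` equals `high` there is no room to move the pair, which is why the training loop skips it with the "L==H" message.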

Speeding Up Optimization with the Full Platt SMO Algorithm

    class optStruct:
        def __init__(self,dataMatIn, classLabels, C, toler, kTup): # Initialize the structure with the parameters
            self.X = dataMatIn
            self.labelMat = classLabels
            self.C = C
            self.tol = toler
            self.m = shape(dataMatIn)[0]
            self.alphas = mat(zeros((self.m,1)))
            self.b = 0
            self.eCache = mat(zeros((self.m,2))) #first column is valid flag
            self.K = mat(zeros((self.m,self.m)))
            for i in range(self.m):
                self.K[:,i] = kernelTrans(self.X, self.X[i,:], kTup)

    def calcEk(oS, k):
        fXk = float(multiply(oS.alphas,oS.labelMat).T*oS.K[:,k] + oS.b)
        Ek = fXk - float(oS.labelMat[k])
        return Ek

    def selectJ(i, oS, Ei): #this is the second choice heuristic, and calcs Ej
        maxK = -1; maxDeltaE = 0; Ej = 0
        oS.eCache[i] = [1,Ei] #set valid
        #choose the alpha that gives the maximum delta E
        validEcacheList = nonzero(oS.eCache[:,0].A)[0]
        if (len(validEcacheList)) > 1:
            for k in validEcacheList: #loop through valid Ecache values and find the one that maximizes delta E
                if k == i: continue #don't calc for i, waste of time
                Ek = calcEk(oS, k)
                deltaE = abs(Ei - Ek)
                if (deltaE > maxDeltaE):
                    maxK = k; maxDeltaE = deltaE; Ej = Ek
            return maxK, Ej
        else: #in this case (first time around) we don't have any valid eCache values
            j = selectJrand(i, oS.m)
            Ej = calcEk(oS, j)
        return j, Ej

    def updateEk(oS, k): #after any alpha has changed update the new value in the cache
        Ek = calcEk(oS, k)
        oS.eCache[k] = [1,Ek]
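The heuristic in `selectJ` picks the second alpha so that the step size, which is proportional to |Ei - Ej|, is as large as possible. The sketch below shows just that selection on plain arrays; `select_second_index` and the sample error values are hypothetical and stand in for the error cache.

```python
import numpy as np

def select_second_index(errors, valid, i):
    """Return the valid index k != i maximizing |errors[i] - errors[k]|, or -1 if none."""
    best_k, best_delta = -1, 0.0
    for k in np.nonzero(valid)[0]:
        if k == i:
            continue  # never pair an alpha with itself
        delta = abs(errors[i] - errors[k])
        if delta > best_delta:
            best_k, best_delta = int(k), delta
    return best_k

# Hypothetical cached errors; index 2 has no valid cache entry yet
errors = np.array([0.5, -1.2, 0.3, 2.0])
valid = np.array([1, 1, 0, 1])
j = select_second_index(errors, valid, i=0)
print(j)
```

The original code falls back to a random choice when no other valid entry exists, which is the case on the first pass through the data.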

    def innerL(i, oS):
        Ei = calcEk(oS, i)
        if ((oS.labelMat[i]*Ei < -oS.tol) and (oS.alphas[i] < oS.C)) or ((oS.labelMat[i]*Ei > oS.tol) and (oS.alphas[i] > 0)):
            j,Ej = selectJ(i, oS, Ei) #this has been changed from selectJrand
            alphaIold = oS.alphas[i].copy(); alphaJold = oS.alphas[j].copy()
            if (oS.labelMat[i] != oS.labelMat[j]):
                L = max(0, oS.alphas[j] - oS.alphas[i])
                H = min(oS.C, oS.C + oS.alphas[j] - oS.alphas[i])
            else:
                L = max(0, oS.alphas[j] + oS.alphas[i] - oS.C)
                H = min(oS.C, oS.alphas[j] + oS.alphas[i])
            if L==H: print("L==H"); return 0
            eta = 2.0 * oS.K[i,j] - oS.K[i,i] - oS.K[j,j] #changed for kernel
            if eta >= 0: print("eta>=0"); return 0
            oS.alphas[j] -= oS.labelMat[j]*(Ei - Ej)/eta
            oS.alphas[j] = clipAlpha(oS.alphas[j],H,L)
            updateEk(oS, j) #added this for the Ecache
            if (abs(oS.alphas[j] - alphaJold) < 0.00001): print("j not moving enough"); return 0
            #update i by the same amount as j; the update is in the opposite direction
            oS.alphas[i] += oS.labelMat[j]*oS.labelMat[i]*(alphaJold - oS.alphas[j])
            updateEk(oS, i) #added this for the Ecache
            b1 = oS.b - Ei- oS.labelMat[i]*(oS.alphas[i]-alphaIold)*oS.K[i,i] - oS.labelMat[j]*(oS.alphas[j]-alphaJold)*oS.K[i,j]
            b2 = oS.b - Ej- oS.labelMat[i]*(oS.alphas[i]-alphaIold)*oS.K[i,j]- oS.labelMat[j]*(oS.alphas[j]-alphaJold)*oS.K[j,j]
            if (0 < oS.alphas[i]) and (oS.C > oS.alphas[i]): oS.b = b1
            elif (0 < oS.alphas[j]) and (oS.C > oS.alphas[j]): oS.b = b2
            else: oS.b = (b1 + b2)/2.0
            return 1
        else: return 0

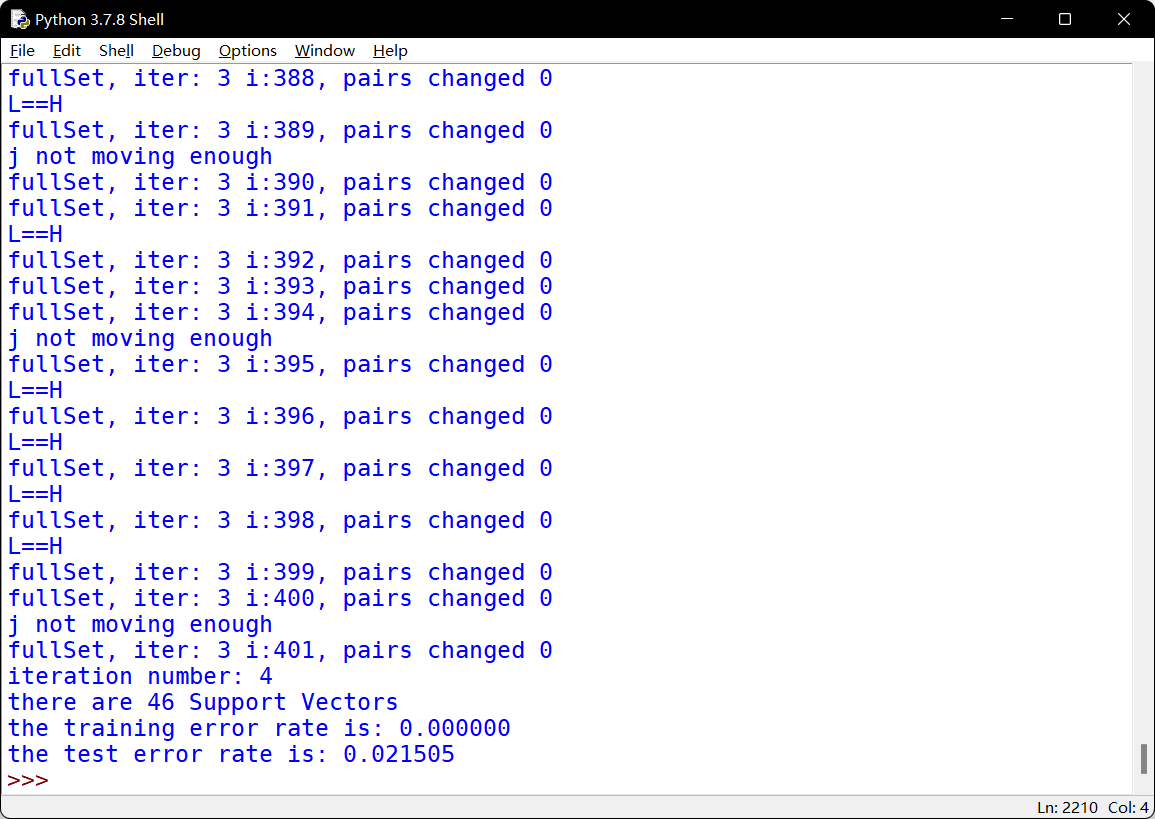

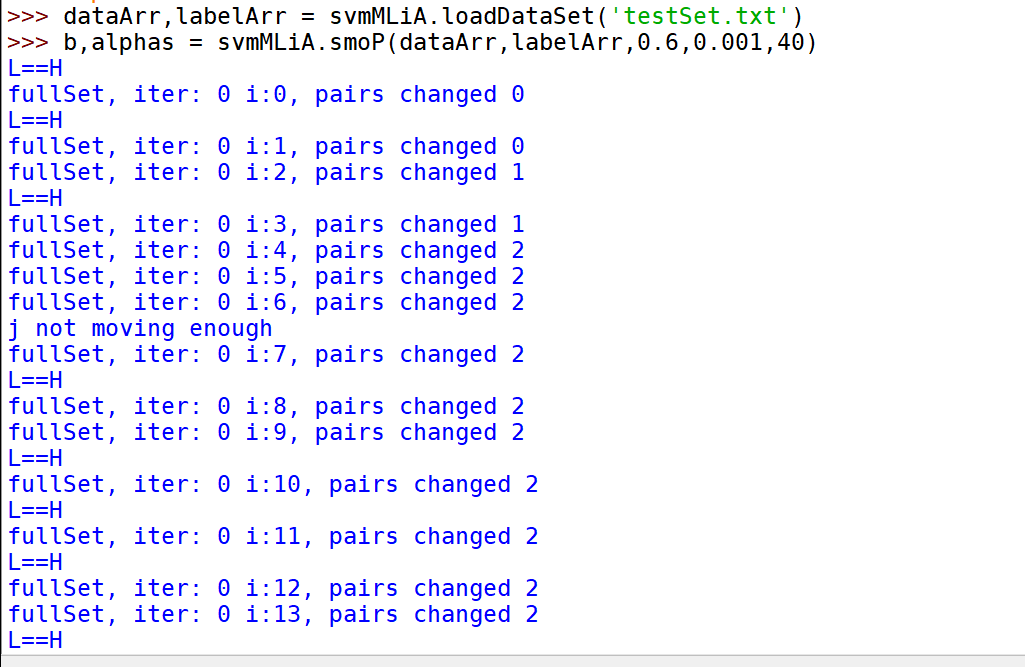

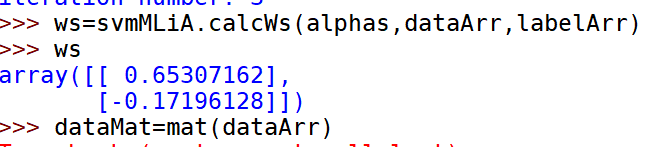

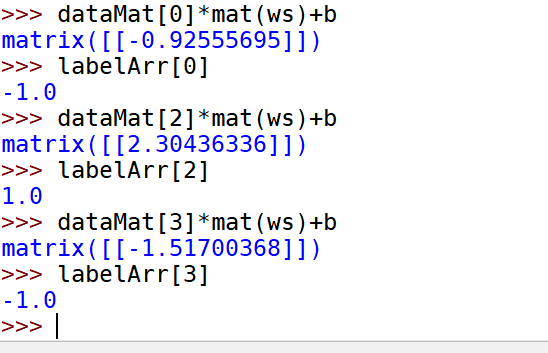

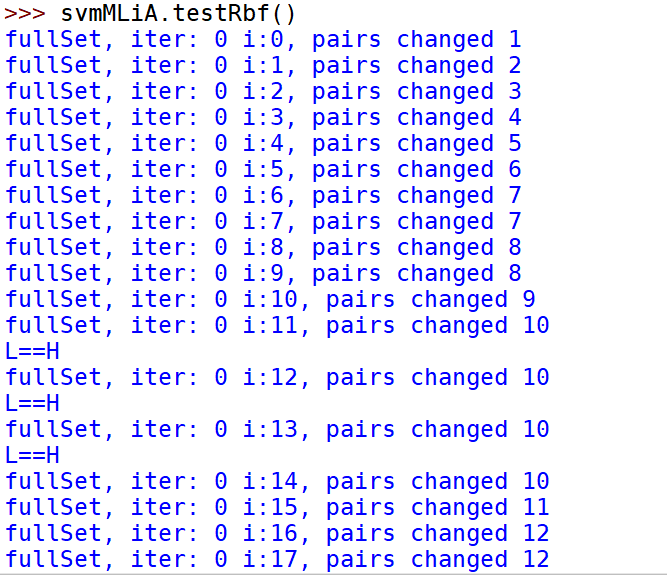

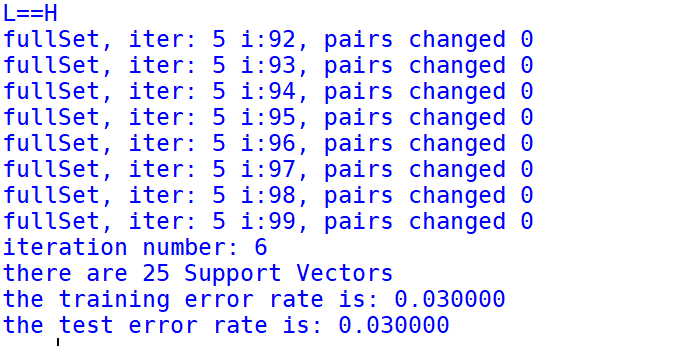

    def smoP(dataMatIn, classLabels, C, toler, maxIter,kTup=('lin', 0)): #full Platt SMO
        oS = optStruct(mat(dataMatIn),mat(classLabels).transpose(),C,toler, kTup)
        iter = 0
        entireSet = True; alphaPairsChanged = 0
        while (iter < maxIter) and ((alphaPairsChanged > 0) or (entireSet)):
            alphaPairsChanged = 0
            if entireSet: #go over all
                for i in range(oS.m):
                    alphaPairsChanged += innerL(i,oS)
                    print("fullSet, iter: %d i:%d, pairs changed %d" % (iter,i,alphaPairsChanged))
                iter += 1
            else: #go over non-bound (railed) alphas
                nonBoundIs = nonzero((oS.alphas.A > 0) * (oS.alphas.A < C))[0]
                for i in nonBoundIs:
                    alphaPairsChanged += innerL(i,oS)
                    print("non-bound, iter: %d i:%d, pairs changed %d" % (iter,i,alphaPairsChanged))
                iter += 1
            if entireSet: entireSet = False #toggle entire set loop
            elif (alphaPairsChanged == 0): entireSet = True
            print("iteration number: %d" % iter)
        return oS.b,oS.alphas
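`smoP` returns `b` and the alphas, but for a linear kernel the hyperplane itself is described by a weight vector w, the sum of alpha_i * y_i * x_i over all points. The sketch below recovers w and classifies with it. The function name `calc_weights` is an assumption, and the alphas are hand-picked values for a two-point toy problem rather than the output of a real training run.

```python
import numpy as np

def calc_weights(alphas, X, y):
    """w = sum_i alpha_i * y_i * x_i (only support vectors have alpha_i > 0)."""
    alphas = np.asarray(alphas, dtype=float).ravel()
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float).ravel()
    return (alphas * y) @ X

# Hypothetical toy problem: one point per class, both are support vectors
X = np.array([[1.0, 1.0], [-1.0, -1.0]])
y = np.array([1.0, -1.0])
alphas = np.array([0.25, 0.25])  # hand-picked, satisfies sum(alpha_i * y_i) = 0
b = 0.0

w = calc_weights(alphas, X, y)
predictions = np.sign(X @ w + b)
print(w, predictions)
```

Because most alphas end up at zero, only the support vectors actually contribute to the sum.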

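Applying Kernel Functions to Complex Data

Kernel functions (kernels)

How it works: the data is transformed by mapping it from one feature space into another.

This turns a form that is hard to work with into one that is easier to work with.

The radial basis function (RBF) is one of the most widely used kernels.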
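For reference, the Gaussian form of the radial basis function, written to match how `kernelTrans` evaluates it (with $\sigma$ taken from `kTup[1]`), is:

```latex
\[
k(x, y) = \exp\!\left(-\frac{\lVert x - y\rVert^{2}}{\sigma^{2}}\right)
\]
```

Many references put $2\sigma^{2}$ in the denominator instead; that only rescales $\sigma$. The kernel equals 1 when $x = y$ and decays toward 0 as the two points move apart, with $\sigma$ controlling how quickly.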

    def kernelTrans(X, A, kTup): #calc the kernel or transform data to a higher dimensional space
        m,n = shape(X)
        K = mat(zeros((m,1)))
        if kTup[0]=='lin': K = X * A.T #linear kernel
        elif kTup[0]=='rbf':
            for j in range(m):
                deltaRow = X[j,:] - A
                K[j] = deltaRow*deltaRow.T #squared distance between row j and A
            K = exp(K/(-1*kTup[1]**2)) #divide in NumPy is element-wise not matrix like Matlab
        else: raise NameError('Houston We Have a Problem -- That Kernel is not recognized')
        return K

The optStruct class shown earlier calls kernelTrans once per column in its constructor, so the full kernel matrix K is computed a single time and then reused by calcEk and innerL.

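For NumPy matrices, the division operator is applied element by element, whereas in MATLAB dividing by a matrix means multiplying by its inverse.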
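A small check of this difference, using made-up 2x2 arrays: `/` divides entry by entry, while the MATLAB-style right division has to be requested explicitly through a matrix inverse.

```python
import numpy as np

A = np.array([[2.0, 4.0], [6.0, 8.0]])
B = np.array([[2.0, 1.0], [1.0, 2.0]])

# NumPy: each entry of A is divided by the matching entry of B
elementwise = A / B

# MATLAB-style A / B: multiply A by the inverse of B
right_division = A @ np.linalg.inv(B)

print(elementwise)
print(right_division)
```

This is why `exp(K/(-1*kTup[1]**2))` in `kernelTrans` scales every kernel entry individually.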

Using the Kernel During Testing

    def testRbf(k1=1.3):
        dataArr,labelArr = loadDataSet('testSetRBF.txt')
        b,alphas = smoP(dataArr, labelArr, 200, 0.0001, 10000, ('rbf', k1)) #C=200 important
        datMat=mat(dataArr); labelMat = mat(labelArr).transpose()
        svInd=nonzero(alphas.A>0)[0]
        sVs=datMat[svInd] #get matrix of only support vectors
        labelSV = labelMat[svInd]
        print("there are %d Support Vectors" % shape(sVs)[0])
        m,n = shape(datMat)
        errorCount = 0
        for i in range(m):
            kernelEval = kernelTrans(sVs,datMat[i,:],('rbf', k1))
            predict=kernelEval.T * multiply(labelSV,alphas[svInd]) + b
            if sign(predict)!=sign(labelArr[i]): errorCount += 1
        print("the training error rate is: %f" % (float(errorCount)/m))
        dataArr,labelArr = loadDataSet('testSetRBF2.txt')
        errorCount = 0
        datMat=mat(dataArr); labelMat = mat(labelArr).transpose()
        m,n = shape(datMat)
        for i in range(m):
            kernelEval = kernelTrans(sVs,datMat[i,:],('rbf', k1))
            predict=kernelEval.T * multiply(labelSV,alphas[svInd]) + b
            if sign(predict)!=sign(labelArr[i]): errorCount += 1
        print("the test error rate is: %f" % (float(errorCount)/m))
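The prediction line in `testRbf` evaluates the kernel between the new point and every support vector, weights each value by alpha_i * y_i, and adds `b`. The sketch below repeats that computation with plain NumPy arrays. The helper names, the two support vectors, and their alphas are hypothetical values chosen for illustration, not the result of training.

```python
import numpy as np

def rbf_kernel(support_vectors, x, sigma):
    """RBF kernel between x and each support vector, using the same sigma**2 scaling as kernelTrans."""
    diff = support_vectors - x
    sq_dist = np.sum(diff * diff, axis=1)
    return np.exp(-sq_dist / sigma ** 2)

def decision_value(support_vectors, sv_labels, sv_alphas, b, x, sigma):
    """f(x) = sum_i alpha_i * y_i * k(x_i, x) + b; its sign is the predicted class."""
    k = rbf_kernel(support_vectors, x, sigma)
    return float(np.dot(k, sv_labels * sv_alphas) + b)

# Hypothetical model: one support vector per class
support_vectors = np.array([[1.0, 0.0], [-1.0, 0.0]])
sv_labels = np.array([1.0, -1.0])
sv_alphas = np.array([1.0, 1.0])
b = 0.0

score_right = decision_value(support_vectors, sv_labels, sv_alphas, b, np.array([0.9, 0.1]), sigma=1.3)
score_left = decision_value(support_vectors, sv_labels, sv_alphas, b, np.array([-0.9, -0.1]), sigma=1.3)
print(np.sign(score_right), np.sign(score_left))
```

Only the support vectors are needed at prediction time, which is why `testRbf` discards every row whose alpha is zero.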

Example: Revisiting Handwriting Recognition

    def loadImages(dirName):
        from os import listdir
        hwLabels = []
        trainingFileList = listdir(dirName) #load the training set
        m = len(trainingFileList)
        trainingMat = zeros((m,1024))
        for i in range(m):
            fileNameStr = trainingFileList[i]
            fileStr = fileNameStr.split('.')[0] #take off .txt
            classNumStr = int(fileStr.split('_')[0])
            #binary problem: the digit 9 is labelled -1, every other digit +1
            if classNumStr == 9: hwLabels.append(-1)
            else: hwLabels.append(1)
            #img2vector must return the 1024 pixel values of one image file
            trainingMat[i,:] = img2vector('%s/%s' % (dirName, fileNameStr))
        return trainingMat, hwLabels

    def testDigits(kTup=('rbf', 10)):
        dataArr,labelArr = loadImages('trainingDigits')
        b,alphas = smoP(dataArr, labelArr, 200, 0.0001, 10000, kTup)
        datMat=mat(dataArr); labelMat = mat(labelArr).transpose()
        svInd=nonzero(alphas.A>0)[0]
        sVs=datMat[svInd]
        labelSV = labelMat[svInd]
        print("there are %d Support Vectors" % shape(sVs)[0])
        m,n = shape(datMat)
        errorCount = 0
        for i in range(m):
            kernelEval = kernelTrans(sVs,datMat[i,:],kTup)
            predict=kernelEval.T * multiply(labelSV,alphas[svInd]) + b
            if sign(predict)!=sign(labelArr[i]): errorCount += 1
        print("the training error rate is: %f" % (float(errorCount)/m))
        dataArr,labelArr = loadImages('testDigits')
        errorCount = 0
        datMat=mat(dataArr); labelMat = mat(labelArr).transpose()
        m,n = shape(datMat)
        for i in range(m):
            kernelEval = kernelTrans(sVs,datMat[i,:],kTup)
            predict=kernelEval.T * multiply(labelSV,alphas[svInd]) + b
            if sign(predict)!=sign(labelArr[i]): errorCount += 1
        print("the test error rate is: %f" % (float(errorCount)/m))
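`loadImages` relies on an `img2vector` helper that does not appear in these notes. A minimal sketch of what it might look like follows, under the assumption that each image file holds 32 lines of 32 characters, each `0` or `1`; that file layout is an assumption, inferred only from the 1024-column training matrix.

```python
import numpy as np

def img2vector(filename):
    """Read a 32x32 text image of '0'/'1' characters into a 1x1024 row vector.

    Assumes the file has at least 32 lines with at least 32 digit characters each.
    """
    vector = np.zeros((1, 1024))
    with open(filename) as f:
        for row in range(32):
            line = f.readline()
            for col in range(32):
                vector[0, 32 * row + col] = int(line[col])
    return vector
```

Flattening the image row by row keeps every pixel as its own feature, so each file becomes one row of `trainingMat`.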