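- Change the Kubernetes version defined in cluster.yml

kubernetes_version: v1.17.2-rancher1-2

- Run rke up

rke up --config ./cluster.yml

- Restore etcd data from a snapshot

rke etcd snapshot-restore --config cluster.yml --name snapshot-test

- Rotate all service certificates, keeping the same CA

rke cert rotate --config cluster.yml

- Rotate the certificate of a single service, keeping the same CA

rke cert rotate --service kubelet --config cluster.yml

- Rotate the CA and all service certificates

rke cert rotate --rotate-ca --config cluster.yml

- If you are only adding or removing worker nodes, you can add the --update-only flag so that only worker node resources are updated

rke up --update-only --config cluster.yml

- Make the script executable

chmod +x estore-kube-config.sh

- Restore the kubeconfig file

./estore-kube-config.sh --master-ip=<IP of any master node>

- Verify the restored kubeconfig

kubectl --kubeconfig ./kubeconfig_admin.yaml get nodes

- Install the jq tool

yum -y install jq

- Restore the cluster.rkestate file from the full-cluster-state ConfigMap

kubectl --kubeconfig kube_config_cluster.yml get configmap -n kube-system \
  full-cluster-state -o json | jq -r .data.\"full-cluster-state\" | jq -r . > cluster.rkestate

- Take the node out of maintenance mode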

kubectl uncordon <node name>
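The cluster.rkestate recovery step above redirects the pipeline straight into the state file. If kubectl or jq fails, that redirect can leave an empty file, or a file containing just `null`, in place of a good one. The sketch below guards against that. It buffers the pipeline output first, refuses empty or `null` input, and keeps a timestamped backup of any existing state file. `save_rkestate` is a hypothetical helper, not an RKE or kubectl command.

```shell
#!/bin/sh
# Hypothetical helper (not part of RKE): write cluster state read from stdin
# into a state file, refusing empty or "null" input and backing up any old file.
save_rkestate() {
    out="${1:-cluster.rkestate}"
    tmp="$out.tmp.$$"
    # Buffer stdin first so that a failed kubectl/jq pipeline cannot
    # truncate the real state file.
    cat > "$tmp" || { rm -f "$tmp"; return 1; }
    # jq -r prints "null" when the requested key is missing; treat that as a failure.
    if [ ! -s "$tmp" ] || ! grep -qvx 'null' "$tmp"; then
        echo "save_rkestate: no usable state on stdin; $out left unchanged" >&2
        rm -f "$tmp"
        return 1
    fi
    # Keep a timestamped copy of the previous state file, if there is one.
    if [ -e "$out" ]; then
        cp -p "$out" "$out.bak.$(date +%Y%m%d%H%M%S)" || { rm -f "$tmp"; return 1; }
    fi
    mv "$tmp" "$out"
}
```

Usage, with the same pipeline as above:
`kubectl --kubeconfig kube_config_cluster.yml get configmap -n kube-system full-cluster-state -o json | jq -r .data.\"full-cluster-state\" | jq -r . | save_rkestate cluster.rkestate`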

1. Cluster upgrade

# List the Kubernetes versions supported by RKE

rke config --list-version --all
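Before editing cluster.yml, you can check that the intended version is one that `rke config --list-version --all` actually prints. The sketch below reads that list on stdin and compares whole version strings, so a bare `v1.17.4` does not match `v1.17.4-rancher1-1`. `is_supported_version` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the target version appears as a line
# in the version list read from stdin (e.g. from rke config --list-version --all).
is_supported_version() {
    # Compare the first whitespace-separated field of each line with the target,
    # so stray trailing spaces in the CLI output do not cause a false negative.
    awk -v t="$1" '$1 == t { found = 1 } END { exit !found }'
}
```

Usage: `rke config --list-version --all | is_supported_version v1.17.4-rancher1-1 && echo supported`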

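Change kubernetes_version in cluster.yml to the target version. The target must be one of the versions listed by the command above. In the run below, the nodes are upgraded from v1.17.2 to v1.17.4-rancher1-1:

kubernetes_version: v1.17.4-rancher1-1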
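The version change can also be scripted instead of done in vim. The sketch below assumes kubernetes_version is a top-level key written on a single line, as in the example above. It also assumes the version string contains no characters that are special in a sed replacement (`/`, `&`, `\`), which holds for RKE version strings. `set_k8s_version` is a hypothetical helper, and it keeps the previous file as `cluster.yml.bak`.

```shell
#!/bin/sh
# Hypothetical helper (not part of RKE): set the top-level kubernetes_version
# key in an RKE cluster.yml and keep the previous file as <file>.bak.
set_k8s_version() {
    file="$1"
    version="$2"
    # Refuse to edit a file that has no top-level kubernetes_version key.
    if ! grep -q '^kubernetes_version:' "$file"; then
        echo "set_k8s_version: no top-level kubernetes_version in $file" >&2
        return 1
    fi
    cp -p "$file" "$file.bak" || return 1
    # Write through a temp file and rename it, instead of sed -i (GNU and BSD differ).
    sed "s/^kubernetes_version:.*/kubernetes_version: $version/" "$file" > "$file.tmp" &&
        mv "$file.tmp" "$file"
}
```

Usage: `set_k8s_version ./cluster.yml v1.17.4-rancher1-1`, where the version is taken from the list-version output shown in the log below.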

Run rke up

rke up --config ./cluster.yml
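Once rke up finishes, a quick check is that every node is Ready and reports the new kubelet version. The sketch below parses the default table output of `kubectl get nodes` (the same layout as at the start of the log below) read on stdin. `all_nodes_at_version` is a hypothetical helper. A cordoned node shows `Ready,SchedulingDisabled` and is reported as not ready, which also flags nodes that still need uncordoning.

```shell
#!/bin/sh
# Hypothetical helper: exit 0 only if every node row in `kubectl get nodes`
# output (read on stdin) has STATUS "Ready" and VERSION equal to $1.
all_nodes_at_version() {
    awk -v want="$1" '
        NR == 1 { next }                  # skip the NAME STATUS ROLES AGE VERSION header
        {
            n++
            # Column 2 is STATUS; the last column is VERSION.
            if ($2 != "Ready" || $NF != want) {
                printf "%s: status=%s version=%s\n", $1, $2, $NF > "/dev/stderr"
                bad++
            }
        }
        END { exit (n == 0 || bad > 0) }  # no nodes at all also counts as failure
    '
}
```

Usage: `kubectl --kubeconfig kube_config_cluster.yml get nodes | all_nodes_at_version v1.17.4`. The expected value is presumably `v1.17.4` for this upgrade, in the same way the pre-upgrade nodes report `v1.17.2`.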

[liwm@rmaster01 ~]$ kubectl get nodes
NAME        STATUS   ROLES               AGE   VERSION
node01      Ready    worker              21d   v1.17.2
node02      Ready    worker              21d   v1.17.2
rmaster01   Ready    controlplane,etcd   21d   v1.17.2
rmaster02   Ready    controlplane,etcd   21d   v1.17.2
rmaster03   Ready    controlplane,etcd   21d   v1.17.2
[liwm@rmaster01 ~]$
[rancher@rmaster01 ~]$ rke config --list-version --all
v1.16.8-rancher1-1
v1.17.4-rancher1-1
v1.15.11-rancher1-1
[rancher@rmaster01 ~]$ vim cluster.yml
[rancher@rmaster01 ~]$
[rancher@rmaster01 ~]$ rke up --config cluster.yml
INFO[0000] Running RKE version: v1.0.5
INFO[0000] Initiating Kubernetes cluster
INFO[0000] [certificates] Generating admin certificates and kubeconfig
INFO[0000] Successfully Deployed state file at [./cluster.rkestate]
INFO[0000] Building Kubernetes cluster
INFO[0000] [dialer] Setup tunnel for host [192.168.31.133]
INFO[0000] [dialer] Setup tunnel for host [192.168.31.132]
INFO[0000] [dialer] Setup tunnel for host [192.168.31.131]
INFO[0000] [dialer] Setup tunnel for host [192.168.31.134]
INFO[0000] [dialer] Setup tunnel for host [192.168.31.130]
INFO[0001] [network] No hosts added existing cluster, skipping port check
INFO[0001] [certificates] Deploying kubernetes certificates to Cluster nodes
INFO[0001] Checking if container [cert-deployer] is running on host [192.168.31.133], try #1
INFO[0001] Checking if container [cert-deployer] is running on host [192.168.31.134], try #1
INFO[0001] Checking if container [cert-deployer] is running on host [192.168.31.131], try #1
INFO[0001] Checking if container [cert-deployer] is running on host [192.168.31.130], try #1
INFO[0001] Checking if container [cert-deployer] is running on host [192.168.31.132], try #1
INFO[0001] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.133]
INFO[0001] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.132]
INFO[0001] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.130]
INFO[0001] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.134]
INFO[0001] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.131]
INFO[0001] Starting container [cert-deployer] on host [192.168.31.132], try #1
INFO[0001] Starting container [cert-deployer] on host [192.168.31.134], try #1
INFO[0002] Starting container [cert-deployer] on host [192.168.31.130], try #1
INFO[0002] Starting container [cert-deployer] on host [192.168.31.133], try #1
INFO[0003] Checking if container [cert-deployer] is running on host [192.168.31.132], try #1
INFO[0004] Checking if container [cert-deployer] is running on host [192.168.31.133], try #1
INFO[0004] Starting container [cert-deployer] on host [192.168.31.131], try #1
INFO[0004] Checking if container [cert-deployer] is running on host [192.168.31.130], try #1
INFO[0004] Checking if container [cert-deployer] is running on host [192.168.31.134], try #1
INFO[0006] Checking if container [cert-deployer] is running on host [192.168.31.131], try #1
INFO[0008] Checking if container [cert-deployer] is running on host [192.168.31.132], try #1
INFO[0008] Removing container [cert-deployer] on host [192.168.31.132], try #1
INFO[0009] Checking if container [cert-deployer] is running on host [192.168.31.133], try #1
INFO[0009] Removing container [cert-deployer] on host [192.168.31.133], try #1
INFO[0009] Checking if container [cert-deployer] is running on host [192.168.31.130], try #1
INFO[0009] Removing container [cert-deployer] on host [192.168.31.130], try #1
INFO[0009] Checking if container [cert-deployer] is running on host [192.168.31.134], try #1
INFO[0009] Removing container [cert-deployer] on host [192.168.31.134], try #1
INFO[0011] Checking if container [cert-deployer] is running on host [192.168.31.131], try #1
INFO[0011] Removing container [cert-deployer] on host [192.168.31.131], try #1
INFO[0011] [reconcile] Rebuilding and updating local kube config
INFO[0011] Successfully Deployed local admin kubeconfig at [./kube_config_cluster.yml]
INFO[0011] [reconcile] host [192.168.31.130] is active master on the cluster
INFO[0011] [certificates] Successfully deployed kubernetes certificates to Cluster nodes
INFO[0011] [reconcile] Reconciling cluster state
INFO[0011] [reconcile] Check etcd hosts to be deleted
INFO[0011] [reconcile] Check etcd hosts to be added
INFO[0011] [reconcile] Rebuilding and updating local kube config
INFO[0011] Successfully Deployed local admin kubeconfig at [./kube_config_cluster.yml]
INFO[0011] [reconcile] host [192.168.31.130] is active master on the cluster
INFO[0011] [reconcile] Reconciled cluster state successfully
INFO[0011] Pre-pulling kubernetes images
INFO[0011] Pulling image [rancher/hyperkube:v1.17.4-rancher1] on host [192.168.31.133], try #1
INFO[0011] Pulling image [rancher/hyperkube:v1.17.4-rancher1] on host [192.168.31.130], try #1
INFO[0011] Pulling image [rancher/hyperkube:v1.17.4-rancher1] on host [192.168.31.131], try #1
INFO[0011] Pulling image [rancher/hyperkube:v1.17.4-rancher1] on host [192.168.31.132], try #1
INFO[0011] Pulling image [rancher/hyperkube:v1.17.4-rancher1] on host [192.168.31.134], try #1
INFO[0459] Image [rancher/hyperkube:v1.17.4-rancher1] exists on host [192.168.31.130]
INFO[0640] Image [rancher/hyperkube:v1.17.4-rancher1] exists on host [192.168.31.132]
INFO[0840] Image [rancher/hyperkube:v1.17.4-rancher1] exists on host [192.168.31.134]
INFO[0903] Image [rancher/hyperkube:v1.17.4-rancher1] exists on host [192.168.31.133]
INFO[1021] Image [rancher/hyperkube:v1.17.4-rancher1] exists on host [192.168.31.131]
INFO[1021] Kubernetes images pulled successfully
INFO[1021] [etcd] Building up etcd plane..
INFO[1021] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.130]
INFO[1023] Starting container [etcd-fix-perm] on host [192.168.31.130], try #1
INFO[1025] Successfully started [etcd-fix-perm] container on host [192.168.31.130]
INFO[1025] Waiting for [etcd-fix-perm] container to exit on host [192.168.31.130]
INFO[1025] Waiting for [etcd-fix-perm] container to exit on host [192.168.31.130]
INFO[1025] Container [etcd-fix-perm] is still running on host [192.168.31.130]
INFO[1026] Waiting for [etcd-fix-perm] container to exit on host [192.168.31.130]
INFO[1026] Removing container [etcd-fix-perm] on host [192.168.31.130], try #1
INFO[1028] [remove/etcd-fix-perm] Successfully removed container on host [192.168.31.130]
INFO[1028] [etcd] Running rolling snapshot container [etcd-snapshot-once] on host [192.168.31.130]
INFO[1028] Removing container [etcd-rolling-snapshots] on host [192.168.31.130], try #1
INFO[1029] [remove/etcd-rolling-snapshots] Successfully removed container on host [192.168.31.130]
INFO[1029] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.130]
INFO[1029] Starting container [etcd-rolling-snapshots] on host [192.168.31.130], try #1
INFO[1030] [etcd] Successfully started [etcd-rolling-snapshots] container on host [192.168.31.130]
INFO[1035] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.130]
INFO[1035] Starting container [rke-bundle-cert] on host [192.168.31.130], try #1
INFO[1036] [certificates] Successfully started [rke-bundle-cert] container on host [192.168.31.130]
INFO[1036] Waiting for [rke-bundle-cert] container to exit on host [192.168.31.130]
INFO[1036] Container [rke-bundle-cert] is still running on host [192.168.31.130]
INFO[1037] Waiting for [rke-bundle-cert] container to exit on host [192.168.31.130]
INFO[1038] [certificates] successfully saved certificate bundle [/opt/rke/etcd-snapshots//pki.bundle.tar.gz] on host [192.168.31.130]
INFO[1038] Removing container [rke-bundle-cert] on host [192.168.31.130], try #1
INFO[1038] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.130]
INFO[1038] Starting container [rke-log-linker] on host [192.168.31.130], try #1
INFO[1039] [etcd] Successfully started [rke-log-linker] container on host [192.168.31.130]
INFO[1039] Removing container [rke-log-linker] on host [192.168.31.130], try #1
INFO[1039] [remove/rke-log-linker] Successfully removed container on host [192.168.31.130]
INFO[1040] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.131]
INFO[1086] Starting container [etcd-fix-perm] on host [192.168.31.131], try #1
INFO[1135] Successfully started [etcd-fix-perm] container on host [192.168.31.131]
INFO[1135] Waiting for [etcd-fix-perm] container to exit on host [192.168.31.131]
INFO[1135] Waiting for [etcd-fix-perm] container to exit on host [192.168.31.131]
INFO[1147] Removing container [etcd-fix-perm] on host [192.168.31.131], try #1
INFO[1160] [remove/etcd-fix-perm] Successfully removed container on host [192.168.31.131]
INFO[1160] [etcd] Running rolling snapshot container [etcd-snapshot-once] on host [192.168.31.131]
INFO[1160] Removing container [etcd-rolling-snapshots] on host [192.168.31.131], try #1
INFO[1170] [remove/etcd-rolling-snapshots] Successfully removed container on host [192.168.31.131]
INFO[1170] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.131]
INFO[1173] Starting container [etcd-rolling-snapshots] on host [192.168.31.131], try #1
INFO[1176] [etcd] Successfully started [etcd-rolling-snapshots] container on host [192.168.31.131]
INFO[1184] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.131]
INFO[1189] Starting container [rke-bundle-cert] on host [192.168.31.131], try #1
INFO[1194] [certificates] Successfully started [rke-bundle-cert] container on host [192.168.31.131]
INFO[1194] Waiting for [rke-bundle-cert] container to exit on host [192.168.31.131]
INFO[1194] Container [rke-bundle-cert] is still running on host [192.168.31.131]
INFO[1195] Waiting for [rke-bundle-cert] container to exit on host [192.168.31.131]
INFO[1195] [certificates] successfully saved certificate bundle [/opt/rke/etcd-snapshots//pki.bundle.tar.gz] on host [192.168.31.131]
INFO[1195] Removing container [rke-bundle-cert] on host [192.168.31.131], try #1
INFO[1195] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.131]
INFO[1195] Starting container [rke-log-linker] on host [192.168.31.131], try #1
INFO[1196] [etcd] Successfully started [rke-log-linker] container on host [192.168.31.131]
INFO[1196] Removing container [rke-log-linker] on host [192.168.31.131], try #1
INFO[1197] [remove/rke-log-linker] Successfully removed container on host [192.168.31.131]
INFO[1197] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.132]
INFO[1197] Starting container [etcd-fix-perm] on host [192.168.31.132], try #1
INFO[1198] Successfully started [etcd-fix-perm] container on host [192.168.31.132]
INFO[1198] Waiting for [etcd-fix-perm] container to exit on host [192.168.31.132]
INFO[1198] Waiting for [etcd-fix-perm] container to exit on host [192.168.31.132]
INFO[1198] Container [etcd-fix-perm] is still running on host [192.168.31.132]
INFO[1199] Waiting for [etcd-fix-perm] container to exit on host [192.168.31.132]
INFO[1199] Removing container [etcd-fix-perm] on host [192.168.31.132], try #1
INFO[1199] [remove/etcd-fix-perm] Successfully removed container on host [192.168.31.132]
INFO[1200] [etcd] Running rolling snapshot container [etcd-snapshot-once] on host [192.168.31.132]
INFO[1200] Removing container [etcd-rolling-snapshots] on host [192.168.31.132], try #1
INFO[1200] [remove/etcd-rolling-snapshots] Successfully removed container on host [192.168.31.132]
INFO[1200] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.132]
INFO[1200] Starting container [etcd-rolling-snapshots] on host [192.168.31.132], try #1
INFO[1201] [etcd] Successfully started [etcd-rolling-snapshots] container on host [192.168.31.132]
INFO[1206] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.132]
INFO[1206] Starting container [rke-bundle-cert] on host [192.168.31.132], try #1
INFO[1206] [certificates] Successfully started [rke-bundle-cert] container on host [192.168.31.132]
INFO[1206] Waiting for [rke-bundle-cert] container to exit on host [192.168.31.132]
INFO[1206] Container [rke-bundle-cert] is still running on host [192.168.31.132]
INFO[1207] Waiting for [rke-bundle-cert] container to exit on host [192.168.31.132]
INFO[1207] [certificates] successfully saved certificate bundle [/opt/rke/etcd-snapshots//pki.bundle.tar.gz] on host [192.168.31.132]
INFO[1207] Removing container [rke-bundle-cert] on host [192.168.31.132], try #1
INFO[1207] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.132]
INFO[1208] Starting container [rke-log-linker] on host [192.168.31.132], try #1
INFO[1208] [etcd] Successfully started [rke-log-linker] container on host [192.168.31.132]
INFO[1208] Removing container [rke-log-linker] on host [192.168.31.132], try #1
INFO[1209] [remove/rke-log-linker] Successfully removed container on host [192.168.31.132]
INFO[1209] [etcd] Successfully started etcd plane.. Checking etcd cluster health
INFO[1209] [controlplane] Building up Controller Plane..
INFO[1209] Checking if container [service-sidekick] is running on host [192.168.31.130], try #1
INFO[1209] Checking if container [service-sidekick] is running on host [192.168.31.131], try #1
INFO[1209] Checking if container [service-sidekick] is running on host [192.168.31.132], try #1
INFO[1209] [sidekick] Sidekick container already created on host [192.168.31.132]
INFO[1209] [sidekick] Sidekick container already created on host [192.168.31.131]
INFO[1209] Checking if container [kube-apiserver] is running on host [192.168.31.132], try #1
INFO[1209] Checking if container [kube-apiserver] is running on host [192.168.31.131], try #1
INFO[1209] Image [rancher/hyperkube:v1.17.4-rancher1] exists on host [192.168.31.132]
INFO[1209] Checking if container [old-kube-apiserver] is running on host [192.168.31.132], try #1
INFO[1209] Image [rancher/hyperkube:v1.17.4-rancher1] exists on host [192.168.31.131]
INFO[1209] Checking if container [old-kube-apiserver] is running on host [192.168.31.131], try #1
INFO[1209] Stopping container [kube-apiserver] on host [192.168.31.132] with stopTimeoutDuration [5s], try #1
INFO[1209] Stopping container [kube-apiserver] on host [192.168.31.131] with stopTimeoutDuration [5s], try #1
INFO[1209] [sidekick] Sidekick container already created on host [192.168.31.130]
INFO[1210] Checking if container [kube-apiserver] is running on host [192.168.31.130], try #1
INFO[1210] Image [rancher/hyperkube:v1.17.4-rancher1] exists on host [192.168.31.130]
INFO[1210] Checking if container [old-kube-apiserver] is running on host [192.168.31.130], try #1
INFO[1210] Stopping container [kube-apiserver] on host [192.168.31.130] with stopTimeoutDuration [5s], try #1
INFO[1211] Waiting for [kube-apiserver] container to exit on host [192.168.31.132]
INFO[1211] Renaming container [kube-apiserver] to [old-kube-apiserver] on host [192.168.31.132], try #1
INFO[1211] Starting container [kube-apiserver] on host [192.168.31.132], try #1
INFO[1212] [controlplane] Successfully updated [kube-apiserver] container on host [192.168.31.132]
INFO[1212] Removing container [old-kube-apiserver] on host [192.168.31.132], try #1
INFO[1212] [healthcheck] Start Healthcheck on service [kube-apiserver] on host [192.168.31.132]
INFO[1216] Waiting for [kube-apiserver] container to exit on host [192.168.31.130]
INFO[1216] Renaming container [kube-apiserver] to [old-kube-apiserver] on host [192.168.31.130], try #1
INFO[1216] Starting container [kube-apiserver] on host [192.168.31.130], try #1
INFO[1217] Waiting for [kube-apiserver] container to exit on host [192.168.31.131]
INFO[1217] Renaming container [kube-apiserver] to [old-kube-apiserver] on host [192.168.31.131], try #1
INFO[1217] Starting container [kube-apiserver] on host [192.168.31.131], try #1
INFO[1217] [controlplane] Successfully updated [kube-apiserver] container on host [192.168.31.130]
INFO[1217] Removing container [old-kube-apiserver] on host [192.168.31.130], try #1
INFO[1218] [healthcheck] Start Healthcheck on service [kube-apiserver] on host [192.168.31.130]
INFO[1218] [controlplane] Successfully updated [kube-apiserver] container on host [192.168.31.131]
INFO[1218] Removing container [old-kube-apiserver] on host [192.168.31.131], try #1
INFO[1219] [healthcheck] Start Healthcheck on service [kube-apiserver] on host [192.168.31.131]
INFO[1267] [healthcheck] service [kube-apiserver] on host [192.168.31.132] is healthy
INFO[1267] [healthcheck] service [kube-apiserver] on host [192.168.31.130] is healthy
INFO[1267] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.130]
INFO[1267] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.132]
INFO[1267] Starting container [rke-log-linker] on host [192.168.31.132], try #1
INFO[1268] Starting container [rke-log-linker] on host [192.168.31.130], try #1
INFO[1268] [controlplane] Successfully started [rke-log-linker] container on host [192.168.31.132]
INFO[1268] Removing container [rke-log-linker] on host [192.168.31.132], try #1
INFO[1269] [remove/rke-log-linker] Successfully removed container on host [192.168.31.132]
INFO[1269] Checking if container [kube-controller-manager] is running on host [192.168.31.132], try #1
INFO[1269] Image [rancher/hyperkube:v1.17.4-rancher1] exists on host [192.168.31.132]
INFO[1269] Checking if container [old-kube-controller-manager] is running on host [192.168.31.132], try #1
INFO[1269] Stopping container [kube-controller-manager] on host [192.168.31.132] with stopTimeoutDuration [5s], try #1
INFO[1269] [controlplane] Successfully started [rke-log-linker] container on host [192.168.31.130]
INFO[1269] Removing container [rke-log-linker] on host [192.168.31.130], try #1
INFO[1269] Waiting for [kube-controller-manager] container to exit on host [192.168.31.132]
INFO[1269] Renaming container [kube-controller-manager] to [old-kube-controller-manager] on host [192.168.31.132], try #1
INFO[1269] Starting container [kube-controller-manager] on host [192.168.31.132], try #1
INFO[1269] [remove/rke-log-linker] Successfully removed container on host [192.168.31.130]
INFO[1269] Checking if container [kube-controller-manager] is running on host [192.168.31.130], try #1
INFO[1269] Image [rancher/hyperkube:v1.17.4-rancher1] exists on host [192.168.31.130]
INFO[1269] Checking if container [old-kube-controller-manager] is running on host [192.168.31.130], try #1
INFO[1270] Stopping container [kube-controller-manager] on host [192.168.31.130] with stopTimeoutDuration [5s], try #1
INFO[1270] [controlplane] Successfully updated [kube-controller-manager] container on host [192.168.31.132]
INFO[1270] Removing container [old-kube-controller-manager] on host [192.168.31.132], try #1
INFO[1270] [healthcheck] Start Healthcheck on service [kube-controller-manager] on host [192.168.31.132]
INFO[1270] Waiting for [kube-controller-manager] container to exit on host [192.168.31.130]
INFO[1270] Renaming container [kube-controller-manager] to [old-kube-controller-manager] on host [192.168.31.130], try #1
INFO[1270] Starting container [kube-controller-manager] on host [192.168.31.130], try #1
INFO[1270] [healthcheck] service [kube-apiserver] on host [192.168.31.131] is healthy
INFO[1270] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.131]
INFO[1270] [controlplane] Successfully updated [kube-controller-manager] container on host [192.168.31.130]
INFO[1270] Removing container [old-kube-controller-manager] on host [192.168.31.130], try #1
INFO[1271] [healthcheck] Start Healthcheck on service [kube-controller-manager] on host [192.168.31.130]
INFO[1271] Starting container [rke-log-linker] on host [192.168.31.131], try #1
INFO[1274] [controlplane] Successfully started [rke-log-linker] container on host [192.168.31.131]
INFO[1274] Removing container [rke-log-linker] on host [192.168.31.131], try #1
INFO[1274] [remove/rke-log-linker] Successfully removed container on host [192.168.31.131]
INFO[1274] Checking if container [kube-controller-manager] is running on host [192.168.31.131], try #1
INFO[1274] Image [rancher/hyperkube:v1.17.4-rancher1] exists on host [192.168.31.131]
INFO[1274] Checking if container [old-kube-controller-manager] is running on host [192.168.31.131], try #1
INFO[1274] Stopping container [kube-controller-manager] on host [192.168.31.131] with stopTimeoutDuration [5s], try #1
INFO[1275] Waiting for [kube-controller-manager] container to exit on host [192.168.31.131]
INFO[1275] Renaming container [kube-controller-manager] to [old-kube-controller-manager] on host [192.168.31.131], try #1
INFO[1275] Starting container [kube-controller-manager] on host [192.168.31.131], try #1
INFO[1275] [healthcheck] service [kube-controller-manager] on host [192.168.31.132] is healthy
INFO[1275] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.132]
INFO[1275] Starting container [rke-log-linker] on host [192.168.31.132], try #1
INFO[1275] [controlplane] Successfully updated [kube-controller-manager] container on host [192.168.31.131]
INFO[1275] Removing container [old-kube-controller-manager] on host [192.168.31.131], try #1
INFO[1276] [healthcheck] Start Healthcheck on service [kube-controller-manager] on host [192.168.31.131]
INFO[1276] [controlplane] Successfully started [rke-log-linker] container on host [192.168.31.132]
INFO[1276] Removing container [rke-log-linker] on host [192.168.31.132], try #1
INFO[1276] [remove/rke-log-linker] Successfully removed container on host [192.168.31.132]
INFO[1276] Checking if container [kube-scheduler] is running on host [192.168.31.132], try #1
INFO[1277] Image [rancher/hyperkube:v1.17.4-rancher1] exists on host [192.168.31.132]
INFO[1277] Checking if container [old-kube-scheduler] is running on host [192.168.31.132], try #1
INFO[1277] Stopping container [kube-scheduler] on host [192.168.31.132] with stopTimeoutDuration [5s], try #1
INFO[1277] Waiting for [kube-scheduler] container to exit on host [192.168.31.132]
INFO[1277] Renaming container [kube-scheduler] to [old-kube-scheduler] on host [192.168.31.132], try #1
INFO[1277] Starting container [kube-scheduler] on host [192.168.31.132], try #1
INFO[1278] [controlplane] Successfully updated [kube-scheduler] container on host [192.168.31.132]
INFO[1278] Removing container [old-kube-scheduler] on host [192.168.31.132], try #1
INFO[1278] [healthcheck] Start Healthcheck on service [kube-scheduler] on host [192.168.31.132]
INFO[1281] [healthcheck] service [kube-controller-manager] on host [192.168.31.130] is healthy
INFO[1281] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.130]
INFO[1281] Starting container [rke-log-linker] on host [192.168.31.130], try #1
INFO[1284] [healthcheck] service [kube-scheduler] on host [192.168.31.132] is healthy
INFO[1284] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.132]
INFO[1284] [controlplane] Successfully started [rke-log-linker] container on host [192.168.31.130]
INFO[1284] Removing container [rke-log-linker] on host [192.168.31.130], try #1
INFO[1284] Starting container [rke-log-linker] on host [192.168.31.132], try #1
INFO[1285] [healthcheck] service [kube-controller-manager] on host [192.168.31.131] is healthy
INFO[1285] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.131]
INFO[1285] [remove/rke-log-linker] Successfully removed container on host [192.168.31.130]
INFO[1285] Checking if container [kube-scheduler] is running on host [192.168.31.130], try #1
INFO[1285] [controlplane] Successfully started [rke-log-linker] container on host [192.168.31.132]
INFO[1285] Starting container [rke-log-linker] on host [192.168.31.131], try #1
INFO[1285] Image [rancher/hyperkube:v1.17.4-rancher1] exists on host [192.168.31.130]
INFO[1285] Checking if container [old-kube-scheduler] is running on host [192.168.31.130], try #1
INFO[1286] Removing container [rke-log-linker] on host [192.168.31.132], try #1
INFO[1286] Stopping container [kube-scheduler] on host [192.168.31.130] with stopTimeoutDuration [5s], try #1
INFO[1286] [remove/rke-log-linker] Successfully removed container on host [192.168.31.132]
INFO[1287] [controlplane] Successfully started [rke-log-linker] container on host [192.168.31.131]
INFO[1287] Removing container [rke-log-linker] on host [192.168.31.131], try #1
INFO[1287] Waiting for [kube-scheduler] container to exit on host [192.168.31.130]
INFO[1287] Renaming container [kube-scheduler] to [old-kube-scheduler] on host [192.168.31.130], try #1
INFO[1287] Starting container [kube-scheduler] on host [192.168.31.130], try #1
INFO[1287] [controlplane] Successfully updated [kube-scheduler] container on host [192.168.31.130]
INFO[1287] Removing container [old-kube-scheduler] on host [192.168.31.130], try #1
INFO[1287] [healthcheck] Start Healthcheck on service [kube-scheduler] on host [192.168.31.130]
INFO[1288] [remove/rke-log-linker] Successfully removed container on host [192.168.31.131]
INFO[1288] Checking if container [kube-scheduler] is running on host [192.168.31.131], try #1
INFO[1289] Image [rancher/hyperkube:v1.17.4-rancher1] exists on host [192.168.31.131]
INFO[1289] Checking if container [old-kube-scheduler] is running on host [192.168.31.131], try #1
INFO[1289] Stopping container [kube-scheduler] on host [192.168.31.131] with stopTimeoutDuration [5s], try #1
INFO[1290] Waiting for [kube-scheduler] container to exit on host [192.168.31.131]
INFO[1290] Renaming container [kube-scheduler] to [old-kube-scheduler] on host [192.168.31.131], try #1
INFO[1290] Starting container [kube-scheduler] on host [192.168.31.131], try #1
INFO[1291] [controlplane] Successfully updated [kube-scheduler] container on host [192.168.31.131]
INFO[1291] Removing container [old-kube-scheduler] on host [192.168.31.131], try #1
INFO[1291] [healthcheck] Start Healthcheck on service [kube-scheduler] on host [192.168.31.131]
INFO[1295] [healthcheck] service [kube-scheduler] on host [192.168.31.130] is healthy
INFO[1295] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.130]
INFO[1295] Starting container [rke-log-linker] on host [192.168.31.130], try #1
INFO[1296] [controlplane] Successfully started [rke-log-linker] container on host [192.168.31.130]
INFO[1296] Removing container [rke-log-linker] on host [192.168.31.130], try #1
INFO[1296] [remove/rke-log-linker] Successfully removed container on host [192.168.31.130]
INFO[1298] [healthcheck] service [kube-scheduler] on host [192.168.31.131] is healthy
INFO[1298] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.131]
INFO[1298] Starting container [rke-log-linker] on host [192.168.31.131], try #1
INFO[1299] [controlplane] Successfully started [rke-log-linker] container on host [192.168.31.131]
INFO[1300] Removing container [rke-log-linker] on host [192.168.31.131], try #1
INFO[1300] [remove/rke-log-linker] Successfully removed container on host [192.168.31.131]
INFO[1300] [controlplane] Successfully started Controller Plane..
INFO[1300] [authz] Creating rke-job-deployer ServiceAccount
INFO[1300] [authz] rke-job-deployer ServiceAccount created successfully
INFO[1300] [authz] Creating system:node ClusterRoleBinding
INFO[1301] [authz] system:node ClusterRoleBinding created successfully
INFO[1301] [authz] Creating kube-apiserver proxy ClusterRole and ClusterRoleBinding
INFO[1301] [authz] kube-apiserver proxy ClusterRole and ClusterRoleBinding created successfully
INFO[1302] Successfully Deployed state file at [./cluster.rkestate]
INFO[1302] [state] Saving full cluster state to Kubernetes
INFO[1302] [state] Successfully Saved full cluster state to Kubernetes ConfigMap: cluster-state
INFO[1302] [worker] Building up Worker Plane..
INFO[1302] Checking if container [service-sidekick] is running on host [192.168.31.130], try #1
INFO[1302] Checking if container [service-sidekick] is running on host [192.168.31.131], try #1
INFO[1302] Checking if container [service-sidekick] is running on host [192.168.31.132], try #1
INFO[1302] [sidekick] Sidekick container already created on host [192.168.31.130]
INFO[1302] [sidekick] Sidekick container already created on host [192.168.31.132]
INFO[1302] Checking if container [kubelet] is running on host [192.168.31.132], try #1
INFO[1302] Checking if container [kubelet] is running on host [192.168.31.130], try #1
INFO[1302] Image [rancher/hyperkube:v1.17.4-rancher1] exists on host [192.168.31.132]
INFO[1302] Checking if container [old-kubelet] is running on host [192.168.31.132], try #1
INFO[1302] [sidekick] Sidekick container already created on host [192.168.31.131]
INFO[1302] Image [rancher/hyperkube:v1.17.4-rancher1] exists on host [192.168.31.130]
INFO[1302] Checking if container [old-kubelet] is running on host [192.168.31.130], try #1
INFO[1302] Stopping container [kubelet] on host [192.168.31.132] with stopTimeoutDuration [5s], try #1
INFO[1302] Stopping container [kubelet] on host [192.168.31.130] with stopTimeoutDuration [5s], try #1
INFO[1302] Checking if container [kubelet] is running on host [192.168.31.131], try #1
INFO[1302] Image [rancher/hyperkube:v1.17.4-rancher1] exists on host [192.168.31.131]
INFO[1302] Checking if container [old-kubelet] is running on host [192.168.31.131], try #1
INFO[1302] Stopping container [kubelet] on host [192.168.31.131] with stopTimeoutDuration [5s], try #1
INFO[1302] Waiting for [kubelet] container to exit on host [192.168.31.132]
INFO[1302] Renaming container [kubelet] to [old-kubelet] on host [192.168.31.132], try #1
INFO[1302] Waiting for [kubelet] container to exit on host [192.168.31.130]
INFO[1302] Renaming container [kubelet] to [old-kubelet] on host [192.168.31.130], try #1
INFO[1302] Starting container [kubelet] on host [192.168.31.130], try #1
INFO[1302] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.133]
INFO[1303] Starting container [kubelet] on host [192.168.31.132], try #1
INFO[1303] [worker] Successfully updated [kubelet] container on host [192.168.31.130]
INFO[1303] Removing container [old-kubelet] on host [192.168.31.130], try #1
INFO[1303] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.134]
INFO[1304] Starting container [rke-log-linker] on host [192.168.31.134], try #1
INFO[1304] [worker] Successfully updated [kubelet] container on host [192.168.31.132]
INFO[1304] Removing container [old-kubelet] on host [192.168.31.132], try #1
INFO[1304] [healthcheck] Start Healthcheck on service [kubelet] on host [192.168.31.130]
INFO[1304] Starting container [rke-log-linker] on host [192.168.31.133], try #1
INFO[1304] [healthcheck] Start Healthcheck on service [kubelet] on host [192.168.31.132]
INFO[1304] Waiting for [kubelet] container to exit on host [192.168.31.131]
INFO[1304] Renaming container [kubelet] to [old-kubelet] on host [192.168.31.131], try #1
INFO[1305] Starting container [kubelet] on host [192.168.31.131], try #1
INFO[1306] [worker] Successfully updated [kubelet] container on host [192.168.31.131]
INFO[1306] Removing container [old-kubelet] on host [192.168.31.131], try #1
INFO[1306] [worker] Successfully started [rke-log-linker] container on host [192.168.31.133]
INFO[1307] Removing container [rke-log-linker] on host [192.168.31.133], try #1
INFO[1307] [remove/rke-log-linker] Successfully removed container on host [192.168.31.133]
INFO[1307] Checking if container [service-sidekick] is running on host [192.168.31.133], try #1
INFO[1307] [healthcheck] Start Healthcheck on service [kubelet] on host [192.168.31.131]
INFO[1307] [sidekick] Sidekick container already created on host [192.168.31.133]
INFO[1307] Checking if container [kubelet] is running on host [192.168.31.133], try #1
INFO[1307] Image [rancher/hyperkube:v1.17.4-rancher1] exists on host [192.168.31.133]
INFO[1307] Checking if container [old-kubelet] is running on host [192.168.31.133], try #1
INFO[1307] Stopping container [kubelet] on host [192.168.31.133] with stopTimeoutDuration [5s], try #1
INFO[1308] [worker] Successfully started [rke-log-linker] container on host [192.168.31.134]
INFO[1308] Removing container [rke-log-linker] on host [192.168.31.134], try #1
INFO[1308] Waiting for [kubelet] container to exit on host [192.168.31.133]
INFO[1308] Renaming container [kubelet] to [old-kubelet] on host [192.168.31.133], try #1
INFO[1308] Starting container [kubelet] on host [192.168.31.133], try #1
INFO[1309] [worker] Successfully updated [kubelet] container on host [192.168.31.133]
INFO[1309] Removing container [old-kubelet] on host [192.168.31.133], try #1
INFO[1309] [remove/rke-log-linker] Successfully removed container on host [192.168.31.134]
INFO[1309] Checking if container [service-sidekick] is running on host [192.168.31.134], try #1
INFO[1309] [sidekick] Sidekick container already created on host [192.168.31.134]
INFO[1309] Checking if container [kubelet] is running on host [192.168.31.134], try #1
INFO[1309] Image [rancher/hyperkube:v1.17.4-rancher1] exists on host [192.168.31.134]
INFO[1309] Checking if container [old-kubelet] is running on host [192.168.31.134], try #1
INFO[1309] Stopping container [kubelet] on host [192.168.31.134] with stopTimeoutDuration [5s], try #1
INFO[1309] [healthcheck] Start Healthcheck on service [kubelet] on host [192.168.31.133]
INFO[1309] Waiting for [kubelet] container to exit on host [192.168.31.134]
INFO[1309] Renaming container [kubelet] to [old-kubelet] on host [192.168.31.134], try #1
INFO[1309] Starting container [kubelet] on host [192.168.31.134], try #1
INFO[1310] [worker] Successfully updated [kubelet] container on host [192.168.31.134]
INFO[1310] Removing container [old-kubelet] on host [192.168.31.134], try #1
INFO[1310] [healthcheck] Start Healthcheck on service [kubelet] on host [192.168.31.134]
INFO[1331] [healthcheck] service [kubelet] on host [192.168.31.130] is healthy
INFO[1331] [healthcheck] service [kubelet] on host [192.168.31.132] is healthy
INFO[1331] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.132]
INFO[1331] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.130]
INFO[1331] Starting container [rke-log-linker] on host [192.168.31.132], try #1
INFO[1331] Starting container [rke-log-linker] on host [192.168.31.130], try #1
INFO[1332] [worker] Successfully started [rke-log-linker] container on host [192.168.31.132]
INFO[1332] Removing container [rke-log-linker] on host [192.168.31.132], try #1
INFO[1332] [worker] Successfully started [rke-log-linker] container on host [192.168.31.130]
INFO[1332] Removing container [rke-log-linker] on host [192.168.31.130], try #1
INFO[1332] [remove/rke-log-linker] Successfully removed container on host [192.168.31.132]
INFO[1332] Checking if container [kube-proxy] is running on host [192.168.31.132], try #1
INFO[1332] Image [rancher/hyperkube:v1.17.4-rancher1] exists on host [192.168.31.132]
INFO[1332] Checking if container [old-kube-proxy] is running on host [192.168.31.132], try #1
INFO[1332] Stopping container [kube-proxy] on host [192.168.31.132] with stopTimeoutDuration [5s], try #1
INFO[1333] [remove/rke-log-linker] Successfully removed container on host [192.168.31.130]
INFO[1333] Checking if container [kube-proxy] is running on host [192.168.31.130], try #1
INFO[1333] Image [rancher/hyperkube:v1.17.4-rancher1] exists on host [192.168.31.130]
INFO[1333] Checking if container [old-kube-proxy] is running on host [192.168.31.130], try #1
INFO[1333] Waiting for [kube-proxy] container to exit on host [192.168.31.132]
INFO[1333] Renaming container [kube-proxy] to [old-kube-proxy] on host [192.168.31.132], try #1
INFO[1333] Stopping container [kube-proxy] on host [192.168.31.130] with stopTimeoutDuration [5s], try #1
INFO[1333] Starting container [kube-proxy] on host [192.168.31.132], try #1
INFO[1333] [worker] Successfully updated [kube-proxy] container on host [192.168.31.132]
INFO[1333] Removing container [old-kube-proxy] on host [192.168.31.132], try #1
INFO[1333] [healthcheck] Start Healthcheck on service [kube-proxy] on host [192.168.31.132]
INFO[1334] [healthcheck] service [kubelet] on host [192.168.31.131] is healthy
INFO[1334] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.131]
INFO[1334] Starting container [rke-log-linker] on host [192.168.31.131], try #1
INFO[1335] Waiting for [kube-proxy] container to exit on host [192.168.31.130]
INFO[1335] Renaming container [kube-proxy] to [old-kube-proxy] on host [192.168.31.130], try #1
INFO[1335] Starting container [kube-proxy] on host [192.168.31.130], try #1
INFO[1335] [healthcheck] service [kubelet] on host 
[192.168.31.133] is healthyINFO[1335] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.133]INFO[1335] Starting container [rke-log-linker] on host [192.168.31.133], try #1INFO[1335] [worker] Successfully updated [kube-proxy] container on host [192.168.31.130]INFO[1335] Removing container [old-kube-proxy] on host [192.168.31.130], try #1INFO[1336] [healthcheck] Start Healthcheck on service [kube-proxy] on host [192.168.31.130]INFO[1336] [worker] Successfully started [rke-log-linker] container on host [192.168.31.131]INFO[1336] Removing container [rke-log-linker] on host [192.168.31.131], try #1INFO[1336] [remove/rke-log-linker] Successfully removed container on host [192.168.31.131]INFO[1336] Checking if container [kube-proxy] is running on host [192.168.31.131], try #1INFO[1336] Image [rancher/hyperkube:v1.17.4-rancher1] exists on host [192.168.31.131]INFO[1336] Checking if container [old-kube-proxy] is running on host [192.168.31.131], try #1INFO[1336] Stopping container [kube-proxy] on host [192.168.31.131] with stopTimeoutDuration [5s], try #1INFO[1337] Waiting for [kube-proxy] container to exit on host [192.168.31.131]INFO[1337] Renaming container [kube-proxy] to [old-kube-proxy] on host [192.168.31.131], try #1INFO[1337] Starting container [kube-proxy] on host [192.168.31.131], try #1INFO[1337] [worker] Successfully updated [kube-proxy] container on host [192.168.31.131]INFO[1337] Removing container [old-kube-proxy] on host [192.168.31.131], try #1INFO[1337] [healthcheck] Start Healthcheck on service [kube-proxy] on host [192.168.31.131]INFO[1337] [worker] Successfully started [rke-log-linker] container on host [192.168.31.133]INFO[1337] Removing container [rke-log-linker] on host [192.168.31.133], try #1INFO[1338] [remove/rke-log-linker] Successfully removed container on host [192.168.31.133]INFO[1338] [healthcheck] service [kubelet] on host [192.168.31.134] is healthyINFO[1338] Checking if container [kube-proxy] is running on host 
[192.168.31.133], try #1INFO[1338] Image [rancher/hyperkube:v1.17.4-rancher1] exists on host [192.168.31.133]INFO[1338] Checking if container [old-kube-proxy] is running on host [192.168.31.133], try #1INFO[1338] Stopping container [kube-proxy] on host [192.168.31.133] with stopTimeoutDuration [5s], try #1INFO[1338] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.134]INFO[1338] Waiting for [kube-proxy] container to exit on host [192.168.31.133]INFO[1338] Renaming container [kube-proxy] to [old-kube-proxy] on host [192.168.31.133], try #1INFO[1338] Starting container [rke-log-linker] on host [192.168.31.134], try #1INFO[1338] Starting container [kube-proxy] on host [192.168.31.133], try #1INFO[1339] [worker] Successfully updated [kube-proxy] container on host [192.168.31.133]INFO[1339] Removing container [old-kube-proxy] on host [192.168.31.133], try #1INFO[1339] [healthcheck] Start Healthcheck on service [kube-proxy] on host [192.168.31.133]INFO[1339] [healthcheck] service [kube-proxy] on host [192.168.31.132] is healthyINFO[1339] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.132]INFO[1339] Starting container [rke-log-linker] on host [192.168.31.132], try #1INFO[1340] [worker] Successfully started [rke-log-linker] container on host [192.168.31.134]INFO[1340] [worker] Successfully started [rke-log-linker] container on host [192.168.31.132]INFO[1341] Removing container [rke-log-linker] on host [192.168.31.132], try #1INFO[1341] [remove/rke-log-linker] Successfully removed container on host [192.168.31.132]INFO[1341] Removing container [rke-log-linker] on host [192.168.31.134], try #1INFO[1341] [remove/rke-log-linker] Successfully removed container on host [192.168.31.134]INFO[1341] Checking if container [kube-proxy] is running on host [192.168.31.134], try #1INFO[1341] Image [rancher/hyperkube:v1.17.4-rancher1] exists on host [192.168.31.134]INFO[1341] Checking if container [old-kube-proxy] is running on host [192.168.31.134], try 
#1INFO[1341] Stopping container [kube-proxy] on host [192.168.31.134] with stopTimeoutDuration [5s], try #1INFO[1341] [healthcheck] service [kube-proxy] on host [192.168.31.130] is healthyINFO[1341] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.130]INFO[1341] Starting container [rke-log-linker] on host [192.168.31.130], try #1INFO[1342] Waiting for [kube-proxy] container to exit on host [192.168.31.134]INFO[1342] Renaming container [kube-proxy] to [old-kube-proxy] on host [192.168.31.134], try #1INFO[1342] Starting container [kube-proxy] on host [192.168.31.134], try #1INFO[1342] [worker] Successfully updated [kube-proxy] container on host [192.168.31.134]INFO[1342] Removing container [old-kube-proxy] on host [192.168.31.134], try #1INFO[1342] [healthcheck] service [kube-proxy] on host [192.168.31.131] is healthyINFO[1343] [healthcheck] Start Healthcheck on service [kube-proxy] on host [192.168.31.134]INFO[1343] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.131]INFO[1343] Starting container [rke-log-linker] on host [192.168.31.131], try #1INFO[1343] [healthcheck] service [kube-proxy] on host [192.168.31.134] is healthyINFO[1343] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.134]INFO[1343] [worker] Successfully started [rke-log-linker] container on host [192.168.31.130]INFO[1343] Removing container [rke-log-linker] on host [192.168.31.130], try #1INFO[1343] Starting container [rke-log-linker] on host [192.168.31.134], try #1INFO[1344] [worker] Successfully started [rke-log-linker] container on host [192.168.31.134]INFO[1344] Removing container [rke-log-linker] on host [192.168.31.134], try #1INFO[1344] [worker] Successfully started [rke-log-linker] container on host [192.168.31.131]INFO[1344] Removing container [rke-log-linker] on host [192.168.31.131], try #1INFO[1344] [remove/rke-log-linker] Successfully removed container on host [192.168.31.134]INFO[1344] [healthcheck] service [kube-proxy] on host [192.168.31.133] is 
healthyINFO[1344] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.133]INFO[1344] Starting container [rke-log-linker] on host [192.168.31.133], try #1INFO[1344] [remove/rke-log-linker] Successfully removed container on host [192.168.31.131]INFO[1345] [remove/rke-log-linker] Successfully removed container on host [192.168.31.130]INFO[1345] [worker] Successfully started [rke-log-linker] container on host [192.168.31.133]INFO[1345] Removing container [rke-log-linker] on host [192.168.31.133], try #1INFO[1345] [remove/rke-log-linker] Successfully removed container on host [192.168.31.133]INFO[1345] [worker] Successfully started Worker Plane..INFO[1345] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.133]INFO[1345] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.131]INFO[1345] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.130]INFO[1346] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.132]INFO[1346] Image [rancher/rke-tools:v0.1.52] exists on host [192.168.31.134]INFO[1346] Starting container [rke-log-cleaner] on host [192.168.31.133], try #1INFO[1346] Starting container [rke-log-cleaner] on host [192.168.31.132], try #1INFO[1346] Starting container [rke-log-cleaner] on host [192.168.31.130], try #1INFO[1346] Starting container [rke-log-cleaner] on host [192.168.31.131], try #1INFO[1346] Starting container [rke-log-cleaner] on host [192.168.31.134], try #1INFO[1346] [cleanup] Successfully started [rke-log-cleaner] container on host [192.168.31.133]INFO[1346] Removing container [rke-log-cleaner] on host [192.168.31.133], try #1INFO[1347] [remove/rke-log-cleaner] Successfully removed container on host [192.168.31.133]INFO[1347] [cleanup] Successfully started [rke-log-cleaner] container on host [192.168.31.131]INFO[1347] Removing container [rke-log-cleaner] on host [192.168.31.131], try #1INFO[1347] [cleanup] Successfully started [rke-log-cleaner] container on host [192.168.31.132]INFO[1347] Removing 
container [rke-log-cleaner] on host [192.168.31.132], try #1INFO[1348] [cleanup] Successfully started [rke-log-cleaner] container on host [192.168.31.130]INFO[1348] Removing container [rke-log-cleaner] on host [192.168.31.130], try #1INFO[1348] [remove/rke-log-cleaner] Successfully removed container on host [192.168.31.130]INFO[1349] [remove/rke-log-cleaner] Successfully removed container on host [192.168.31.131]INFO[1349] [remove/rke-log-cleaner] Successfully removed container on host [192.168.31.132]INFO[1350] [cleanup] Successfully started [rke-log-cleaner] container on host [192.168.31.134]INFO[1350] Removing container [rke-log-cleaner] on host [192.168.31.134], try #1INFO[1353] [remove/rke-log-cleaner] Successfully removed container on host [192.168.31.134]INFO[1353] [sync] Syncing nodes Labels and TaintsINFO[1354] [sync] Successfully synced nodes Labels and TaintsINFO[1354] [network] Setting up network plugin: canalINFO[1354] [addons] Saving ConfigMap for addon rke-network-plugin to KubernetesINFO[1354] [addons] Successfully saved ConfigMap for addon rke-network-plugin to KubernetesINFO[1354] [addons] Executing deploy job rke-network-pluginINFO[1383] [addons] Setting up corednsINFO[1383] [addons] Saving ConfigMap for addon rke-coredns-addon to KubernetesINFO[1383] [addons] Successfully saved ConfigMap for addon rke-coredns-addon to KubernetesINFO[1383] [addons] Executing deploy job rke-coredns-addonINFO[1383] [addons] CoreDNS deployed successfully..INFO[1383] [dns] DNS provider coredns deployed successfullyINFO[1383] [addons] Setting up Metrics ServerINFO[1383] [addons] Saving ConfigMap for addon rke-metrics-addon to KubernetesINFO[1383] [addons] Successfully saved ConfigMap for addon rke-metrics-addon to KubernetesINFO[1383] [addons] Executing deploy job rke-metrics-addonINFO[1383] [addons] Metrics Server deployed successfullyINFO[1383] [ingress] Setting up nginx ingress controllerINFO[1383] [addons] Saving ConfigMap for addon rke-ingress-controller to 
Kubernetes
INFO[1383] [addons] Successfully saved ConfigMap for addon rke-ingress-controller to Kubernetes
INFO[1383] [addons] Executing deploy job rke-ingress-controller
INFO[1383] [ingress] ingress controller nginx deployed successfully
INFO[1383] [addons] Setting up user addons
INFO[1383] [addons] no user addons defined
INFO[1383] Finished building Kubernetes cluster successfully
[rancher@rmaster01 ~]$
[rancher@rmaster01 ~]$ kubectl get nodes
NAME        STATUS   ROLES               AGE   VERSION
node01      Ready    worker              21d   v1.17.4
node02      Ready    worker              21d   v1.17.4
rmaster01   Ready    controlplane,etcd   21d   v1.17.4
rmaster02   Ready    controlplane,etcd   21d   v1.17.4
rmaster03   Ready    controlplane,etcd   21d   v1.17.4
[rancher@rmaster01 ~]$
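You can check the VERSION column of `kubectl get nodes` automatically instead of reading it, to confirm that the upgrade reached every node. The following is a minimal sketch. It assumes the default five-column output shown above (not `-o wide`). `check_node_versions` is a hypothetical helper name and is not part of RKE or kubectl.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of RKE or kubectl): reads `kubectl get nodes`
# output on stdin and checks that the VERSION column (5th field) of every
# node equals the expected version. Prints each mismatching node and
# returns non-zero if any node reports a different version.
check_node_versions() {
  local expected="$1"
  awk -v want="$expected" '
    BEGIN { bad = 0 }
    NF == 0 { next }          # ignore blank lines
    $1 == "NAME" { next }     # skip the header line
    $5 != want { print "mismatch: " $1 " is on " $5; bad = 1 }
    END { exit bad }
  '
}

# Usage (on a machine with cluster access):
#   kubectl get nodes | check_node_versions v1.17.4
```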

2. Cluster backup and restore

```shell
# Back up etcd data; snapshot files are saved in /opt/rke/etcd-snapshots
rke etcd snapshot-save --config cluster.yml --name snapshot-test

# Restore etcd data
rke etcd snapshot-restore --config cluster.yml --name snapshot-test
```
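The `--name` passed to `snapshot-restore` must match a snapshot that actually exists under `/opt/rke/etcd-snapshots` on the etcd nodes. The following sketch prints the newest entry in that directory. It is meant to run on an etcd node; in the session above, `rke` is run from rmaster01, which is also an etcd node. `latest_snapshot` is a hypothetical helper. The sketch also assumes that some RKE releases store snapshots as `<name>.zip` while `--name` takes the bare name, so it strips that suffix when present. Verify this against your RKE version.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the name of the most recently modified snapshot
# in an RKE snapshot directory (default /opt/rke/etcd-snapshots).
# Assumption: snapshots may be stored as <name>.zip; the suffix is stripped
# so the result can be passed to --name. Check this on your RKE version.
latest_snapshot() {
  local dir="${1:-/opt/rke/etcd-snapshots}"
  local newest
  # ls -t sorts by modification time, newest first
  newest=$(ls -1t -- "$dir" 2>/dev/null | head -n 1)
  [ -n "$newest" ] || return 1
  printf '%s\n' "${newest%.zip}"
}

# Usage on an etcd node:
#   rke etcd snapshot-restore --config cluster.yml --name "$(latest_snapshot)"
```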

- Scheduled backups

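When recurring etcd snapshots are enabled for the cluster, check the logs of the `etcd-rolling-snapshots` container to confirm that backups are being created automatically:

```shell
docker logs etcd-rolling-snapshots
```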
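Besides reading the container logs, you can check that the snapshot directory received a new file within the expected interval. The following is a sketch. It assumes snapshots land in `/opt/rke/etcd-snapshots`, as noted above, and treats "recent" as "modified within the last N hours". `recent_snapshot_exists` is a hypothetical name, and the 24-hour default is only illustrative.

```shell
#!/usr/bin/env bash
# Hypothetical check: succeed if at least one regular file in the snapshot
# directory was modified within the last <max_hours> hours.
recent_snapshot_exists() {
  local dir="${1:-/opt/rke/etcd-snapshots}"
  local max_hours="${2:-24}"
  local hit
  # -mmin -N matches files modified less than N minutes ago;
  # -print -quit stops at the first match
  hit=$(find "$dir" -maxdepth 1 -type f -mmin "-$((max_hours * 60))" -print -quit 2>/dev/null)
  [ -n "$hit" ]
}

# Usage, e.g. from cron on an etcd node:
#   recent_snapshot_exists /opt/rke/etcd-snapshots 12 || echo "no etcd snapshot in the last 12h"
```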

3. Certificate management

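Kubernetes clusters require certificates, and by default RKE generates them for the cluster automatically. It is important to rotate these certificates before they expire, and whenever they may have been compromised.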

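After the certificates are rotated, the Kubernetes components restart automatically. Certificate rotation is supported for the following services: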

- etcd

- kubelet

- kube-apiserver

- kube-proxy

- kube-scheduler

- kube-controller-manager

RKE can rotate the auto-generated certificates with a few simple commands:

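- Rotate all service certificates while keeping the same CA

- Rotate the certificate of a single service while keeping the same CA

- Rotate the CA and all service certificates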

The RKE configuration file cluster.yml is required for certificate rotation. When running `rke cert rotate`, pass its path with `--config`.

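```shell
# Rotate all service certificates, keeping the same CA
rke cert rotate --config cluster.yml

# Rotate the certificate of a single service, keeping the same CA
rke cert rotate --service kubelet --config cluster.yml

# Rotate the CA and all service certificates
rke cert rotate --rotate-ca --config cluster.yml
```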
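When rotating one service at a time, it is easy to mistype the `--service` value. The following sketch is a wrapper that accepts only the six services listed above before it calls RKE. `rotate_service_cert` and the `RKE_BIN` override are hypothetical. The override exists only so that the command can be dry-run, for example with `RKE_BIN=echo`.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around `rke cert rotate --service`.
# Only the services listed in this section are accepted.
# RKE_BIN defaults to "rke"; set RKE_BIN=echo to print the arguments instead.
rotate_service_cert() {
  local svc="$1" cfg="${2:-cluster.yml}"
  case "$svc" in
    etcd|kubelet|kube-apiserver|kube-proxy|kube-scheduler|kube-controller-manager) ;;
    *) echo "unsupported service: $svc" >&2; return 2 ;;
  esac
  "${RKE_BIN:-rke}" cert rotate --service "$svc" --config "$cfg"
}

# Usage:
#   RKE_BIN=echo rotate_service_cert kubelet     # dry run
#   rotate_service_cert kubelet cluster.yml      # real rotation
```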

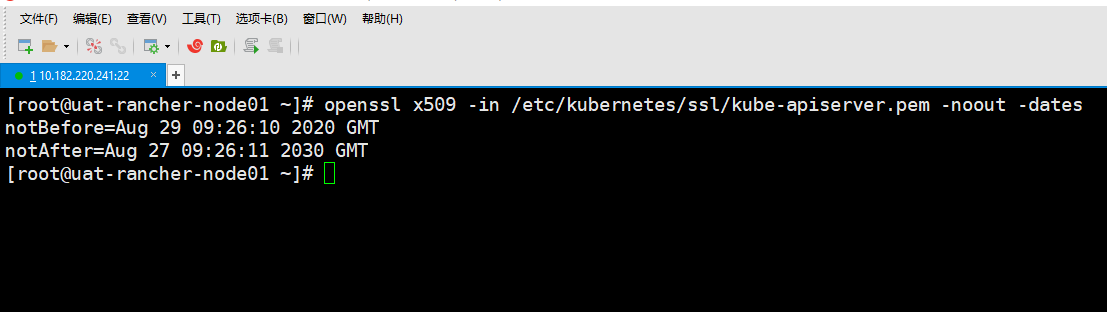

By default, certificates deployed by RKE are valid for 10 years:

```shell
[root@uat-rancher-node01 ~]# openssl x509 -in /etc/kubernetes/ssl/kube-apiserver.pem -noout -dates
notBefore=Aug 29 09:26:10 2020 GMT
notAfter=Aug 27 09:26:11 2030 GMT
[root@uat-rancher-node01 ~]#
```
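You don't have to read `notAfter` by eye. `openssl x509 -checkend <seconds>` answers "is this certificate still valid N seconds from now?" through its exit status. The following sketch loops over the certificates in `/etc/kubernetes/ssl`, the path used above, and skips the `*-key.pem` private keys. The function names and the 30-day default threshold are illustrative assumptions.

```shell
#!/usr/bin/env bash
# Returns 0 if the certificate expires within <days> days, 1 if it is still
# valid after that, 2 if the file cannot be parsed as a certificate.
# `openssl x509 -checkend N` exits 0 when the cert is still valid N seconds from now.
cert_expires_within_days() {
  local cert="$1" days="$2"
  openssl x509 -in "$cert" -noout >/dev/null 2>&1 || return 2
  if openssl x509 -in "$cert" -noout -checkend "$((days * 86400))" >/dev/null; then
    return 1
  fi
  return 0
}

# Hypothetical report: list certificates in a directory that expire soon.
report_expiring_certs() {
  local dir="${1:-/etc/kubernetes/ssl}" days="${2:-30}" cert rc
  for cert in "$dir"/*.pem; do
    case "$cert" in *-key.pem) continue ;; esac   # skip private keys
    [ -f "$cert" ] || continue
    rc=0
    cert_expires_within_days "$cert" "$days" || rc=$?
    case "$rc" in
      0) echo "expiring within ${days}d: $cert" ;;
      2) echo "not a certificate: $cert" >&2 ;;
    esac
  done
}

# Usage on a cluster node:
#   report_expiring_certs /etc/kubernetes/ssl 30
```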

- Cluster node management

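To add or remove nodes, edit cluster.yml and run `rke up` again. If you are only adding or removing worker nodes, you can add the `--update-only` flag so that only worker-node resources are updated:

```shell
rke up --update-only --config cluster.yml
```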

4. Removing the Kubernetes cluster

4.1 Remove the Kubernetes cluster

```shell
rke remove --config cluster.yml
```

4.2 Run the following script to clean up leftover state. Note: run it as the root user.

```shell
#!/bin/bash
# Stop and disable services
systemctl disable kubelet.service
systemctl disable kube-scheduler.service
systemctl disable kube-proxy.service
systemctl disable kube-controller-manager.service
systemctl disable kube-apiserver.service
systemctl stop kubelet.service
systemctl stop kube-scheduler.service
systemctl stop kube-proxy.service
systemctl stop kube-controller-manager.service
systemctl stop kube-apiserver.service

# Remove all containers
docker rm -f $(docker ps -qa)

# Remove all container volumes
docker volume rm $(docker volume ls -q)

# Unmount kubelet/rancher mount points
for mount in $(mount | grep tmpfs | grep '/var/lib/kubelet' | awk '{ print $3 }') /var/lib/kubelet /var/lib/rancher; do
  umount $mount
done

# Back up directories
mv /etc/kubernetes /etc/kubernetes-bak-$(date +"%Y%m%d%H%M")
mv /var/lib/etcd /var/lib/etcd-bak-$(date +"%Y%m%d%H%M")
mv /var/lib/rancher /var/lib/rancher-bak-$(date +"%Y%m%d%H%M")
mv /opt/rke /opt/rke-bak-$(date +"%Y%m%d%H%M")

# Remove leftover paths
rm -rf /etc/ceph \
       /etc/cni \
       /opt/cni \
       /run/secrets/kubernetes.io \
       /run/calico \
       /run/flannel \
       /var/lib/calico \
       /var/lib/cni \
       /var/lib/kubelet \
       /var/log/containers \
       /var/log/pods \
       /var/run/calico

# Clean up network interfaces
# The pattern is an unanchored, case-insensitive regex: interfaces whose names
# contain "lo", "docker0", "et" or "en" are kept (eth0, ens33, enp0s3, eno1);
# all others are deleted, so adjust it if the host has e.g. bond0.
network_interface=$(ls /sys/class/net)
for net_inter in $network_interface; do
  if ! echo $net_inter | grep -qiE 'lo|docker0|eth*|ens*'; then
    ip link delete $net_inter
  fi
done

# Kill leftover processes bound to Kubernetes-related ports
port_list='80 443 6443 2376 2379 2380 8472 9099 10250 10254'
for port in $port_list; do
  # Match only the Local Address column (field 4) ending in ":<port>", so
  # PIDs or other ports that merely contain the same digits are not killed
  pid=$(netstat -atlnup | awk -v p=":${port}" '$4 ~ (p "$") { split($NF, a, "/"); if (a[1] != "-") print a[1] }' | sort -u)
  if [[ -n $pid ]]; then
    kill -9 $pid
  fi
done

pro_pid=$(ps -ef | grep -v grep | grep kube | awk '{print $2}')
if [[ -n $pro_pid ]]; then
  kill -9 $pro_pid
fi

# Flush iptables
## Note: if this node has custom iptables rules, run the commands below with care
sudo iptables --flush
sudo iptables --flush --table nat
sudo iptables --flush --table filter
sudo iptables --table nat --delete-chain
sudo iptables --table filter --delete-chain
systemctl restart docker
### Note: reboot the machine after the cleanup
```

5. Recovering the kubectl configuration file

Create the script restore-kube-config.sh:

```shell
#!/bin/bash
help () {
  echo ' ================================================================ '
  echo ' --master-ip: IP of a Master node; any K8S Master node IP will do.'
  echo ' Example: bash restore-kube-config.sh --master-ip=1.1.1.1 '
  echo ' ================================================================'
}

case "$1" in
  -h|--help) help; exit;;
esac

if [[ $1 == '' ]]; then
  help
  exit
fi

CMDOPTS="$*"
for OPTS in $CMDOPTS; do
  key=$(echo ${OPTS} | awk -F"=" '{print $1}' )
  value=$(echo ${OPTS} | awk -F"=" '{print $2}' )
  case "$key" in
    --master-ip) K8S_MASTER_NODE_IP=$value ;;
  esac
done

# Find the Rancher Agent image
RANCHER_IMAGE=$( docker images --filter=label=io.cattle.agent=true | grep 'v2.' | \
  grep -v -E 'rc|alpha|<none>' | head -n 1 | awk '{print $3}' )

if [ -d /opt/rke/etc/kubernetes/ssl ]; then
  K8S_SSLDIR=/opt/rke/etc/kubernetes/ssl
else
  K8S_SSLDIR=/etc/kubernetes/ssl
fi

CHECK_CLUSTER_STATE_CONFIGMAP=$( docker run --rm --entrypoint bash --net=host \
  -v $K8S_SSLDIR:/etc/kubernetes/ssl:ro $RANCHER_IMAGE -c '\
  if kubectl --kubeconfig /etc/kubernetes/ssl/kubecfg-kube-node.yaml \
  -n kube-system get configmap full-cluster-state | grep full-cluster-state > /dev/null; then \
  echo yes; else echo no; fi' )

# Quoted so the test does not break if the check produced no output
if [ "$CHECK_CLUSTER_STATE_CONFIGMAP" != 'yes' ]; then
  docker run --rm --net=host \
    --entrypoint bash \
    -e K8S_MASTER_NODE_IP=$K8S_MASTER_NODE_IP \
    -v $K8S_SSLDIR:/etc/kubernetes/ssl:ro \
    $RANCHER_IMAGE \
    -c '\
    kubectl --kubeconfig /etc/kubernetes/ssl/kubecfg-kube-node.yaml \
    -n kube-system \
    get secret kube-admin -o jsonpath={.data.Config} | base64 --decode | \
    sed -e "/^[[:space:]]*server:/ s_:.*_: \"https://${K8S_MASTER_NODE_IP}:6443\"_"' > kubeconfig_admin.yaml

  if [ -s kubeconfig_admin.yaml ]; then
    echo 'Restore succeeded; test it with:'
    echo ''
    echo "kubectl --kubeconfig kubeconfig_admin.yaml get nodes"
  else
    echo "kubeconfig restore failed."
  fi
else
  docker run --rm --entrypoint bash --net=host \
    -e K8S_MASTER_NODE_IP=$K8S_MASTER_NODE_IP \
    -v $K8S_SSLDIR:/etc/kubernetes/ssl:ro \
    $RANCHER_IMAGE \
    -c '\
    kubectl --kubeconfig /etc/kubernetes/ssl/kubecfg-kube-node.yaml \
    -n kube-system \
    get configmap full-cluster-state -o json | \
    jq -r .data.\"full-cluster-state\" | \
    jq -r .currentState.certificatesBundle.\"kube-admin\".config | \
    sed -e "/^[[:space:]]*server:/ s_:.*_: \"https://${K8S_MASTER_NODE_IP}:6443\"_"' > kubeconfig_admin.yaml

  if [ -s kubeconfig_admin.yaml ]; then
    echo 'Restore succeeded; test it with:'
    echo ''
    echo "kubectl --kubeconfig kubeconfig_admin.yaml get nodes"
  else
    echo "kubeconfig restore failed."
  fi
fi
```
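The `sed` expression in the script rewrites only the `server:` line of the recovered kubeconfig, so that it points at the chosen master's API server on port 6443. The following standalone sketch applies the same substitution to a sample line. The sample content and the `rewrite_server` name are illustrative only.

```shell
#!/usr/bin/env bash
# Same substitution as in the restore script: on lines that start with
# optional whitespace followed by "server:", replace everything from the
# first ":" to the end of the line with ': "https://<ip>:6443"'.
rewrite_server() {
  local ip="$1"
  sed -e "/^[[:space:]]*server:/ s_:.*_: \"https://${ip}:6443\"_"
}

# Example:
#   printf '    server: "https://127.0.0.1:6443"\n' | rewrite_server 192.168.31.130
#   prints:     server: "https://192.168.31.130:6443"
```

Using `_` as the `s` delimiter avoids escaping the slashes in the URL. Lines without `server:` pass through unchanged.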

```shell
# Make the script executable
chmod +x restore-kube-config.sh

# Restore the file
./restore-kube-config.sh --master-ip=<IP of any master node>

# Test
kubectl --kubeconfig ./kubeconfig_admin.yaml get nodes
```

6. Recovering the rkestate state file

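RKE creates the cluster.rkestate file in the same directory as the cluster configuration file cluster.yml. The .rkestate file contains the current state of the cluster, including the RKE configuration and the certificates. You must keep this file to update the cluster or to perform any other operation on it through RKE.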

```shell
# Install the jq tool
yum -y install jq

# Restore the file
kubectl --kubeconfig kube_config_cluster.yml get configmap -n kube-system \
  full-cluster-state -o json | jq -r .data.\"full-cluster-state\" | jq -r . > cluster.rkestate
```
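The `> cluster.rkestate` redirect overwrites any existing state file without asking, so you may want to keep a copy first. The following sketch follows the timestamp-suffix convention of the cleanup script in section 4.2, with seconds added so that two runs in the same minute do not collide. `backup_if_exists` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy <file> to <file>.bak-<timestamp> if it exists;
# does nothing (and succeeds) if the file is absent.
backup_if_exists() {
  local f="$1"
  [ -e "$f" ] || return 0
  cp -p -- "$f" "$f.bak-$(date +"%Y%m%d%H%M%S")"
}

# Usage, right before regenerating the state file:
#   backup_if_exists cluster.rkestate
```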

7. Node maintenance

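```shell
# Enter maintenance mode (mark the node unschedulable)
kubectl cordon <node-name>

# Leave maintenance mode
kubectl uncordon <node-name>
```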

8. Migrating applications off a node

```shell
kubectl drain <node-name>
```
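Cordon, drain and uncordon are usually used together. The following sketch runs the sequence for one node and assumes the workloads tolerate eviction. `--ignore-daemonsets` is a real `kubectl drain` flag. Drain refuses to proceed while DaemonSet pods (such as canal on RKE clusters) are present unless this flag is set. Pods that use emptyDir volumes may also need `--delete-local-data` on kubectl 1.17; later releases renamed it `--delete-emptydir-data`. `maintain_node` and the `KUBECTL` dry-run override are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: drain a node, wait for the operator to finish
# maintenance, then uncordon it.
# KUBECTL defaults to "kubectl"; set KUBECTL=echo for a dry run.
maintain_node() {
  local node="$1"
  local k="${KUBECTL:-kubectl}"
  [ -n "$node" ] || { echo "usage: maintain_node <node-name>" >&2; return 2; }
  # drain cordons the node first, so a separate cordon is not needed
  "$k" drain "$node" --ignore-daemonsets || return 1
  # the prompt is shown only when stdin is a terminal
  read -r -p "Node $node drained. Press Enter when maintenance is done..." _ || true
  "$k" uncordon "$node"
}

# Usage:
#   maintain_node node01
```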