Offline image packaging script

docker pull rancher/coreos-etcd:v3.4.3-rancher1
docker pull rancher/rke-tools:v0.1.59
docker pull rancher/k8s-dns-kube-dns:1.15.2
docker pull rancher/k8s-dns-dnsmasq-nanny:1.15.2
docker pull rancher/k8s-dns-sidecar:1.15.2
docker pull rancher/cluster-proportional-autoscaler:1.7.1
docker pull rancher/coredns-coredns:1.6.9
docker pull rancher/k8s-dns-node-cache:1.15.7
docker pull rancher/hyperkube:v1.18.6-rancher1
docker pull rancher/coreos-flannel:v0.12.0
docker pull rancher/flannel-cni:v0.3.0-rancher6
docker pull rancher/calico-node:v3.13.4
docker pull rancher/calico-cni:v3.13.4
docker pull rancher/calico-kube-controllers:v3.13.4
docker pull rancher/calico-ctl:v3.13.4
docker pull rancher/calico-pod2daemon-flexvol:v3.13.4
docker pull weaveworks/weave-kube:2.6.4
docker pull weaveworks/weave-npc:2.6.4
docker pull rancher/pause:3.1
docker pull rancher/nginx-ingress-controller:nginx-0.32.0-rancher1
docker pull rancher/nginx-ingress-controller-defaultbackend:1.5-rancher1
docker pull rancher/metrics-server:v0.3.6
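The commands above only pull the images; the `docker save` step that produced the tar archives is not reproduced in these notes. The following is a minimal sketch of that step, assuming each archive is named after the last path component of the repository with the tag dropped (which matches names such as calico-cni.tar). The helper names `tar_name_for_image`, `run` and `pack_image` and the `DRY_RUN` switch are illustrative and not part of the original notes. By default the commands are only printed, not executed.

```shell
#!/bin/sh
# Sketch only: pull each image and save it to <name>.tar.
# Assumption: archives are named after the repository basename without the tag,
# e.g. rancher/calico-cni:v3.13.4 -> calico-cni.tar.

# Derive the archive name from an image reference.
tar_name_for_image() (
  repo=${1%:*}            # drop the tag  -> rancher/calico-cni
  echo "${repo##*/}.tar"  # drop the path -> calico-cni.tar
)

# Print the command when DRY_RUN=1 (the default here), otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Pull one image and save it next to the script.
pack_image() {
  run docker pull "$1"
  run docker save -o "$(tar_name_for_image "$1")" "$1"
}

# Extend this list with the remaining images from the pull list.
for image in rancher/pause:3.1 rancher/calico-cni:v3.13.4; do
  pack_image "$image"
done
```

Setting `DRY_RUN=0` before running the script executes the commands instead of printing them.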

[root@uat-rancher-node01 rancher]# ll *.tar
-rw-rw-r-- 1 root root 226441216 Aug 29 20:53 calico-cni.tar
-rw-rw-r-- 1 root root 48206848 Aug 29 20:51 calico-ctl.tar
-rw-rw-r-- 1 root root 56617984 Aug 29 20:50 calico-kube-controllers.tar
-rw-rw-r-- 1 root root 265684992 Aug 29 20:55 calico-node.tar
-rw-rw-r-- 1 root root 114141696 Aug 29 20:54 calico-pod2daemon-flexvol.tar
-rw-rw-r-- 1 root root 41323520 Aug 29 20:42 cluster-proportional-autoscaler.tar
-rw-rw-r-- 1 root root 43297792 Aug 29 20:47 coredns-coredns.tar
-rw-rw-r-- 1 root root 85169664 Aug 29 20:46 coreos-etcd.tar
-rw-rw-r-- 1 root root 1552846336 Aug 29 20:59 hyperkube.tar
-rw-rw-r-- 1 root root 40140288 Aug 29 20:34 k8s-dns-dnsmasq-nanny.tar
-rw-rw-r-- 1 root root 88547328 Aug 29 20:38 k8s-dns-kube-dns.tar
-rw-rw-r-- 1 root root 92754944 Aug 29 20:43 k8s-dns-node-cache.tar
-rw-rw-r-- 1 root root 80885760 Aug 29 20:39 k8s-dns-sidecar.tar
-rw-rw-r-- 1 root root 41199616 Aug 29 20:44 metrics-server.tar
-rw-rw-r-- 1 root root 5144064 Aug 29 20:34 nginx-ingress-controller-defaultbackend.tar
-rw-rw-r-- 1 root root 331629056 Aug 29 20:48 nginx-ingress-controller.tar
-rw-rw-r-- 1 root root 754176 Aug 29 20:34 pause.tar
-rw-rw-r-- 1 root root 135227392 Aug 29 20:56 rke-tools.tar

[root@uat-rancher-node01 rancher]# ll *.tar|awk '{print $NF}'
calico-cni.tar
calico-ctl.tar
calico-kube-controllers.tar
calico-node.tar
calico-pod2daemon-flexvol.tar
cluster-proportional-autoscaler.tar
coredns-coredns.tar
coreos-etcd.tar
hyperkube.tar
k8s-dns-dnsmasq-nanny.tar
k8s-dns-kube-dns.tar
k8s-dns-node-cache.tar
k8s-dns-sidecar.tar
metrics-server.tar
nginx-ingress-controller-defaultbackend.tar
nginx-ingress-controller.tar
pause.tar
rke-tools.tar
[root@uat-rancher-node01 rancher]#
[root@uat-rancher-node01 rancher]# ll *.tar|awk '{print $NF}'|sed -r 's#(.*)#docker load -i \1#' |bash
b76aa58f4c23: Loading layer [==================================================>] 107.4MB/107.4MB
566d20c1ccdf: Loading layer [==================================================>] 20.48kB/20.48kB
f3e35332f964: Loading layer [==================================================>] 101.2MB/101.2MB
109a0c66209a: Loading layer [==================================================>] 10.24kB/10.24kB
6ac59be9ed64: Loading layer [==================================================>] 2.56kB/2.56kB
3b481276ac88: Loading layer [==================================================>] 17.79MB/17.79MB
11703ffeceb1: Loading layer [==================================================>] 13.82kB/13.82kB
Loaded image: rancher/calico-cni:v3.13.4
1b3ee35aacca: Loading layer [==================================================>] 5.84MB/5.84MB
419eaad88244: Loading layer [==================================================>] 42.35MB/42.35MB
Loaded image: rancher/calico-ctl:v3.13.4
7bd4affc29eb: Loading layer [==================================================>] 13.82kB/13.82kB
523c4550fd32: Loading layer [==================================================>] 53.52MB/53.52MB
b5dadf89acf5: Loading layer [==================================================>] 3.07MB/3.07MB
Loaded image: rancher/calico-kube-controllers:v3.13.4
f80c95f61fff: Loading layer [==================================================>] 108.5MB/108.5MB
eddba477a8ae: Loading layer [==================================================>] 20.48kB/20.48kB
76224ad063b6: Loading layer [==================================================>] 2.781MB/2.781MB
2ca638bbce84: Loading layer [==================================================>] 3.298MB/3.298MB
62b8adc82952: Loading layer [==================================================>] 3.072kB/3.072kB
2e1e28e9c135: Loading layer [==================================================>] 75.24MB/75.24MB
1fa8cad5d0e3: Loading layer [==================================================>] 4.096kB/4.096kB
af19fd058de9: Loading layer [==================================================>] 7.185MB/7.185MB
4ba854688443: Loading layer [==================================================>] 6.052MB/6.052MB
db5b88cbf87f: Loading layer [==================================================>] 2.048kB/2.048kB
9fcde00c5e9a: Loading layer [==================================================>] 13.82kB/13.82kB
5f956aaa317c: Loading layer [==================================================>] 61.32MB/61.32MB
151cbe937db5: Loading layer [==================================================>] 1.251MB/1.251MB
Loaded image: rancher/calico-node:v3.13.4
724362325411: Loading layer [==================================================>] 2.048kB/2.048kB
689becca0610: Loading layer [==================================================>] 13.82kB/13.82kB
1140aa2f2fa9: Loading layer [==================================================>] 5.12kB/5.12kB
6c83a0e86620: Loading layer [==================================================>] 5.606MB/5.606MB
Loaded image: rancher/calico-pod2daemon-flexvol:v3.13.4
932da5156413: Loading layer [==================================================>] 3.062MB/3.062MB
7b00adda7217: Loading layer [==================================================>] 38.25MB/38.25MB
Loaded image: rancher/cluster-proportional-autoscaler:1.7.1
225df95e717c: Loading layer [==================================================>] 336.4kB/336.4kB
8762ba1e4767: Loading layer [==================================================>] 42.95MB/42.95MB
Loaded image: rancher/coredns-coredns:1.6.9
fe9a8b4f1dcc: Loading layer [==================================================>] 43.87MB/43.87MB
816dcf8208f7: Loading layer [==================================================>] 23.72MB/23.72MB
4da29af72f7f: Loading layer [==================================================>] 17.55MB/17.55MB
e94602b7c460: Loading layer [==================================================>] 2.56kB/2.56kB
e74140cc410f: Loading layer [==================================================>] 3.072kB/3.072kB
0c356e885c8a: Loading layer [==================================================>] 3.072kB/3.072kB
Loaded image: rancher/coreos-etcd:v3.4.3-rancher1
82a5cde9d9a9: Loading layer [==================================================>] 53.87MB/53.87MB
a2b38eae1b39: Loading layer [==================================================>] 21.62MB/21.62MB
f378e9487360: Loading layer [==================================================>] 5.168MB/5.168MB
a35a0b8b55f5: Loading layer [==================================================>] 4.608kB/4.608kB
dea351e760ec: Loading layer [==================================================>] 8.192kB/8.192kB
d57a645c2b0c: Loading layer [==================================================>] 8.704kB/8.704kB
80c8b272a31c: Loading layer [==================================================>] 9.728kB/9.728kB
588868021aa0: Loading layer [==================================================>] 1.824MB/1.824MB
0a514e5f8343: Loading layer [==================================================>] 5.12kB/5.12kB
e46a9dd0cf8f: Loading layer [==================================================>] 23.04kB/23.04kB
ea3e975f63d4: Loading layer [==================================================>] 349.1MB/349.1MB
488428ef6ca2: Loading layer [==================================================>] 72.17MB/72.17MB
8cff9f524c8b: Loading layer [==================================================>] 469.4MB/469.4MB
9105b0079c7f: Loading layer [==================================================>] 3.584kB/3.584kB
03a3faf297ee: Loading layer [==================================================>] 579.6MB/579.6MB
Loaded image: rancher/hyperkube:v1.18.6-rancher1
d9ff549177a9: Loading layer [==================================================>] 4.671MB/4.671MB
257e31c9cb28: Loading layer [==================================================>] 2.56kB/2.56kB
e3418a4c0703: Loading layer [==================================================>] 362kB/362kB
39fe80c5a89b: Loading layer [==================================================>] 3.584kB/3.584kB
295a83faf517: Loading layer [==================================================>] 35.08MB/35.08MB
Loaded image: rancher/k8s-dns-dnsmasq-nanny:1.15.2
47d8bc5560bb: Loading layer [==================================================>] 44.66MB/44.66MB
Loaded image: rancher/k8s-dns-kube-dns:1.15.2
35c35a973795: Loading layer [==================================================>] 2.276MB/2.276MB
d71bb3de1e76: Loading layer [==================================================>] 46.59MB/46.59MB
Loaded image: rancher/k8s-dns-node-cache:1.15.7
e3b0318787d0: Loading layer [==================================================>] 37MB/37MB
Loaded image: rancher/k8s-dns-sidecar:1.15.2
7bf3709d22bb: Loading layer [==================================================>] 38.13MB/38.13MB
Loaded image: rancher/metrics-server:v0.3.6
b108d4968233: Loading layer [==================================================>] 5.134MB/5.134MB
Loaded image: rancher/nginx-ingress-controller-defaultbackend:1.5-rancher1
beee9f30bc1f: Loading layer [==================================================>] 5.862MB/5.862MB
378129e7fefc: Loading layer [==================================================>] 161.4MB/161.4MB
2c4876c55341: Loading layer [==================================================>] 6.144kB/6.144kB
a5cd644ea51c: Loading layer [==================================================>] 30.43MB/30.43MB
f99824ffc859: Loading layer [==================================================>] 16.91MB/16.91MB
e816d879e6f5: Loading layer [==================================================>] 4.096kB/4.096kB
d43abedb29d5: Loading layer [==================================================>] 8.042MB/8.042MB
bfa0001530d1: Loading layer [==================================================>] 50.41MB/50.41MB
4becb331f71a: Loading layer [==================================================>] 6.656kB/6.656kB
b2a014433f54: Loading layer [==================================================>] 37.57MB/37.57MB
7863f1fb0c1e: Loading layer [==================================================>] 20.9MB/20.9MB
909651a2178f: Loading layer [==================================================>] 6.656kB/6.656kB
Loaded image: rancher/nginx-ingress-controller:nginx-0.32.0-rancher1
e17133b79956: Loading layer [==================================================>] 744.4kB/744.4kB
Loaded image: rancher/pause:3.1
f1b5933fe4b5: Loading layer [==================================================>] 5.796MB/5.796MB
2bdf88b2699d: Loading layer [==================================================>] 17.72MB/17.72MB
519b820c8bb3: Loading layer [==================================================>] 1.603MB/1.603MB
eed905e10fc1: Loading layer [==================================================>] 24.45MB/24.45MB
126480a1531c: Loading layer [==================================================>] 3.072kB/3.072kB
021ed1072f21: Loading layer [==================================================>] 56.38MB/56.38MB
25f3c3b9821e: Loading layer [==================================================>] 3.509MB/3.509MB
b5449afc16e1: Loading layer [==================================================>] 16.08MB/16.08MB
8122015cea19: Loading layer [==================================================>] 4.096kB/4.096kB
06002c2e3403: Loading layer [==================================================>] 4.096kB/4.096kB
3f81d91d9bbb: Loading layer [==================================================>] 5.632kB/5.632kB
c6382345d3ee: Loading layer [==================================================>] 12.8kB/12.8kB
4ab3470e2725: Loading layer [==================================================>] 9.601MB/9.601MB
Loaded image: rancher/rke-tools:v0.1.59
[root@uat-rancher-node01 rancher]# docker image ls
REPOSITORY                                        TAG                     IMAGE ID       CREATED         SIZE
rancher/hyperkube                                 v1.18.6-rancher1        5a1e9f24e782   6 weeks ago     1.51GB
rancher/rke-tools                                 v0.1.59                 904d2afa34c8   7 weeks ago     132MB
rancher/calico-node                               v3.13.4                 c91d49e6f044   3 months ago    261MB
rancher/calico-pod2daemon-flexvol                 v3.13.4                 c5dca18c0346   3 months ago    112MB
rancher/calico-cni                                v3.13.4                 9e1176a74e85   3 months ago    225MB
rancher/calico-ctl                                v3.13.4                 cbd105686d60   3 months ago    47.9MB
rancher/calico-kube-controllers                   v3.13.4                 f9f70a2e922f   3 months ago    56.6MB
rancher/nginx-ingress-controller                  nginx-0.32.0-rancher1   eda78cfd6f9d   3 months ago    328MB
rancher/coredns-coredns                           1.6.9                   4e797b323460   5 months ago    43.2MB
rancher/coreos-etcd                               v3.4.3-rancher1         a0b920cf970d   10 months ago   83.6MB
rancher/metrics-server                            v0.3.6                  9dd718864ce6   10 months ago   39.9MB
rancher/k8s-dns-node-cache                        1.15.7                  ce4f91502e1b   10 months ago   91MB
rancher/cluster-proportional-autoscaler           1.7.1                   14afc47fd5af   12 months ago   40.1MB
rancher/k8s-dns-sidecar                           1.15.2                  ffc7ccc8fded   16 months ago   79.3MB
rancher/k8s-dns-kube-dns                          1.15.2                  4ad5e24b1ad2   16 months ago   87MB
rancher/k8s-dns-dnsmasq-nanny                     1.15.2                  c4d9bb9e5ff0   16 months ago   39.8MB
rancher/nginx-ingress-controller-defaultbackend   1.5-rancher1            b5af743e5984   23 months ago   5.13MB
rancher/pause                                     3.1                     da86e6ba6ca1   2 years ago     742kB
[root@uat-rancher-node01 rancher]#
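The one-liner used for loading builds its `docker load` commands by parsing `ll` output, which depends on the `ll` alias being defined and breaks on file names that contain spaces. A glob-based loop is a common alternative. The sketch below is only an illustration; the names `load_archives` and `run` and the `DRY_RUN` switch are hypothetical, and by default the commands are printed rather than executed.

```shell
#!/bin/sh
# Sketch only: load every *.tar archive in a directory without parsing ls output.

# Print the command when DRY_RUN=1 (the default here), otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Load all image archives found in the given directory (default: current directory).
load_archives() (
  dir=${1:-.}
  for archive in "$dir"/*.tar; do
    [ -e "$archive" ] || continue   # the glob matched nothing
    run docker load -i "$archive"
  done
)

load_archives .
```

Run it with `DRY_RUN=0` in the directory that holds the archives to perform the actual load.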

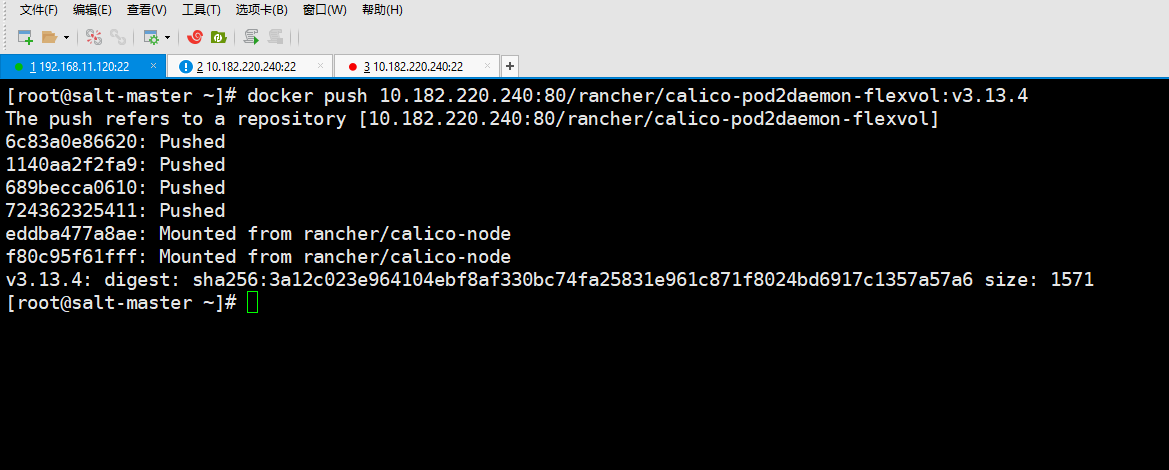

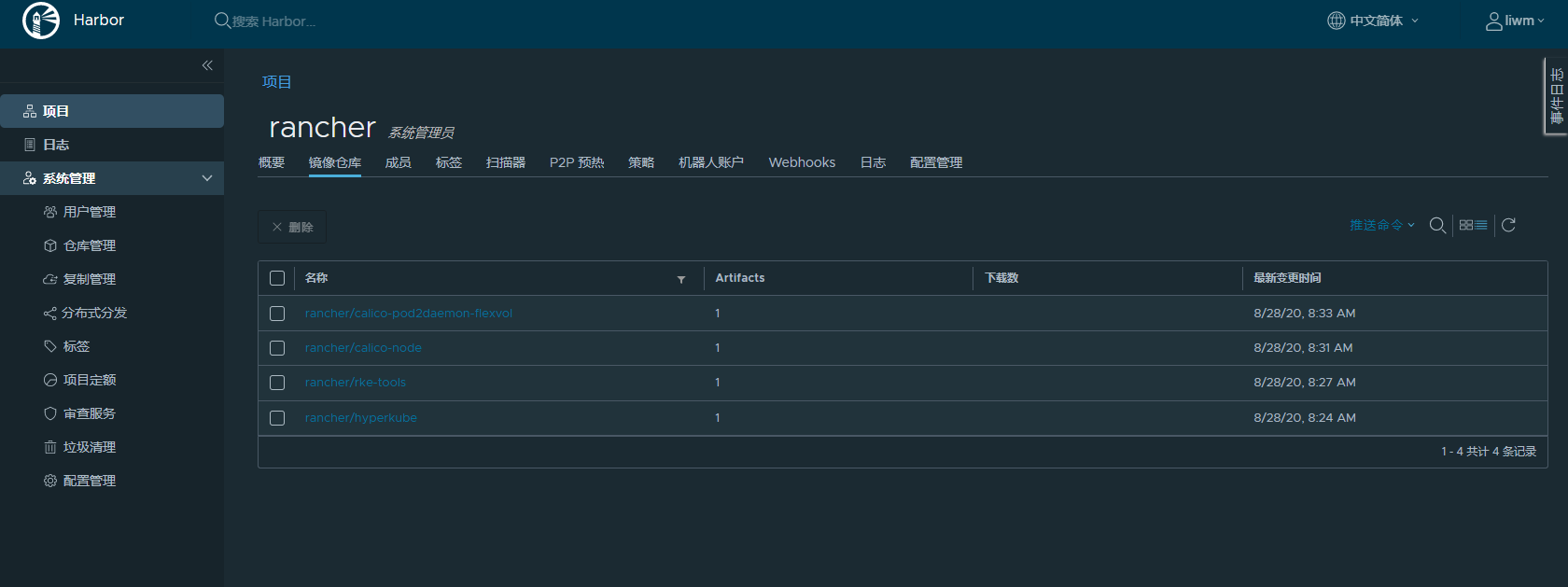

Batch-download the images, package them, and push them to the Harbor image registry

[root@salt-master ~]# docker push 10.182.220.240:80/rancher/calico-pod2daemon-flexvol:v3.13.4
The push refers to a repository [10.182.220.240:80/rancher/calico-pod2daemon-flexvol]
6c83a0e86620: Pushed
1140aa2f2fa9: Pushed
689becca0610: Pushed
724362325411: Pushed
eddba477a8ae: Mounted from rancher/calico-node
f80c95f61fff: Mounted from rancher/calico-node
v3.13.4: digest: sha256:3a12c023e964104ebf8af330bc74fa25831e961c871f8024bd6917c1357a57a6 size: 1571
[root@salt-master ~]#
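Only a single push is shown above. A batch version would retag each local image with the registry prefix and then push it. The sketch below assumes the registry address from the push above (10.182.220.240:80) and that the target project (here `rancher`) already exists in Harbor. The helper names `harbor_ref`, `run` and `push_to_harbor` and the `DRY_RUN` switch are illustrative, not from the original notes.

```shell
#!/bin/sh
# Sketch only: retag local images for the Harbor registry and push them.
# Assumption: registry address as used in the push shown above.
REGISTRY=10.182.220.240:80

# Prefix an image reference with the registry address.
harbor_ref() {
  echo "${REGISTRY}/$1"
}

# Print the command when DRY_RUN=1 (the default here), otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Tag one image for the registry, then push the new tag.
push_to_harbor() (
  target=$(harbor_ref "$1")
  run docker tag "$1" "$target"
  run docker push "$target"
)

# Extend this list with the remaining images.
for image in rancher/calico-pod2daemon-flexvol:v3.13.4 rancher/calico-node:v3.13.4; do
  push_to_harbor "$image"
done
```

Images from other namespaces, such as weaveworks/weave-kube, would need a matching project in Harbor or a different target path.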

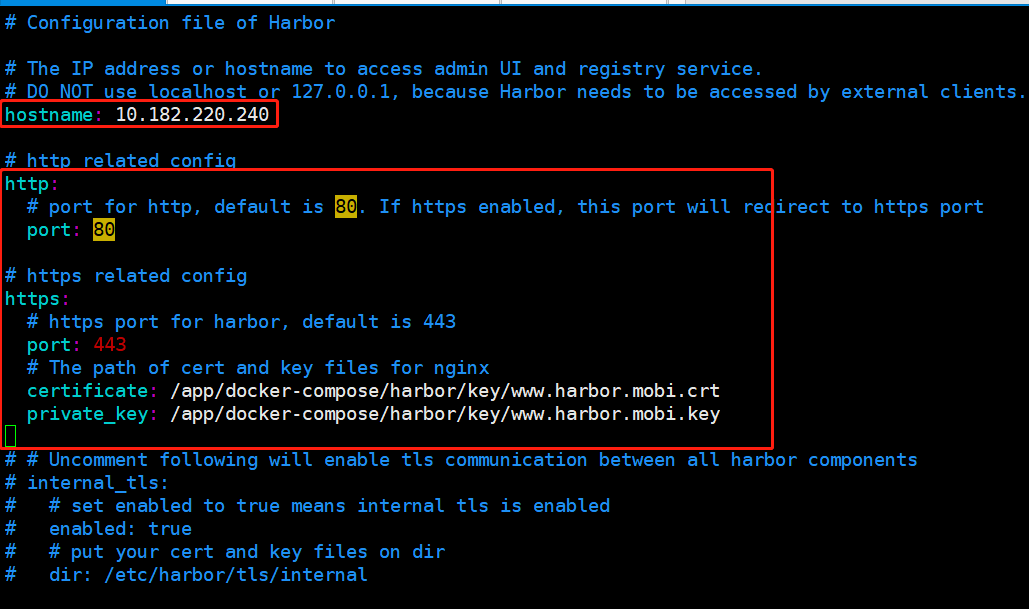

Deploying the Harbor private image registry

[root@harbor harbor]# vim harbor.yml

Harbor certificate configuration

[root@harbor harbor]# cat key.sh
#!/bin/bash
# Generate the certificates in this directory so that harbor.yml can use them directly
#mkdir -p /data/cert
cd /app/docker-compose/harbor/key
openssl genrsa -out ca.key 4096
openssl req -x509 -new -nodes -sha512 -days 3650 -subj "/C=CN/ST=Beijing/L=Beijing/O=example/OU=Personal/CN=www.harbor.mobi" -key ca.key -out ca.crt
openssl genrsa -out www.harbor.mobi.key 4096
openssl req -sha512 -new -subj "/C=CN/ST=Beijing/L=Beijing/O=example/OU=Personal/CN=www.harbor.mobi" -key www.harbor.mobi.key -out www.harbor.mobi.csr
cat > v3.ext <<-EOF
authorityKeyIdentifier=keyid,issuer
basicConstraints=CA:FALSE
keyUsage = digitalSignature, nonRepudiation, keyEncipherment, dataEncipherment
extendedKeyUsage = serverAuth
subjectAltName = @alt_names

[alt_names]
DNS.1=www.harbor.mobi
DNS.2=harbor
DNS.3=ks-allinone
EOF
openssl x509 -req -sha512 -days 3650 -extfile v3.ext -CA ca.crt -CAkey ca.key -CAcreateserial -in www.harbor.mobi.csr -out www.harbor.mobi.crt
openssl x509 -inform PEM -in www.harbor.mobi.crt -out www.harbor.mobi.cert
cp www.harbor.mobi.crt /etc/pki/ca-trust/source/anchors/www.harbor.mobi.crt
update-ca-trust
[root@harbor harbor]#

[root@harbor harbor]# tree /app/docker-compose/harbor/key/
/app/docker-compose/harbor/key/
├── ca.crt
├── ca.key
├── ca.srl
├── v3.ext
├── www.harbor.mobi.cert
├── www.harbor.mobi.crt
├── www.harbor.mobi.csr
└── www.harbor.mobi.key

0 directories, 8 files
[root@harbor harbor]#

Create a new directory named www.harbor.mobi under /etc/docker/certs.d/ on each Docker node, then copy the certificate files into it:

[rancher@uat-rancher-node01 ~]$ tree /etc/docker/certs.d/www.harbor.mobi/
/etc/docker/certs.d/www.harbor.mobi/
├── ca.crt
├── www.harbor.mobi.cert
└── www.harbor.mobi.key

0 directories, 3 files
[rancher@uat-rancher-node01 ~]$
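Copying the three files to every node can be scripted. The sketch below assumes root SSH access from the Harbor host to each node, takes the source and destination paths from the listings above, and uses the node addresses that appear in cluster.yml. The function names `copy_certs_to` and `run` and the `DRY_RUN` switch are hypothetical; by default the commands are printed rather than executed.

```shell
#!/bin/sh
# Sketch only: copy the Harbor certificate files to each Docker node.
# Assumptions: SSH access as root; paths taken from the tree listings.
SRC_DIR=/app/docker-compose/harbor/key
DEST_DIR=/etc/docker/certs.d/www.harbor.mobi

# Print the command when DRY_RUN=1 (the default here), otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Create the target directory on one node and copy the three files into it.
copy_certs_to() (
  node=$1
  run ssh "root@$node" mkdir -p "$DEST_DIR"
  for f in ca.crt www.harbor.mobi.cert www.harbor.mobi.key; do
    run scp "$SRC_DIR/$f" "root@$node:$DEST_DIR/$f"
  done
)

# Node addresses as listed in cluster.yml.
for node in 10.182.220.241 10.182.220.242 10.182.220.243 10.182.220.244 10.182.220.245; do
  copy_certs_to "$node"
done
```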

//"registry-mirrors": ["https://10.182.220.240"],

"insecure-registries": ["https://10.182.220.240"],

[root@uat-rancher-node04 ~]# docker login https://10.182.220.240
Username: liwm
Password:
WARNING! Your password will be stored unencrypted in /root/.docker/config.json.
Configure a credential helper to remove this warning. See
https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded
[root@uat-rancher-node04 ~]# docker login 10.182.220.242
Username: admin
Password:
WARNING! Your password will be stored unencrypted in /root/.docker/config.json.
Configure a credential helper to remove this warning. See
https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded
[root@uat-rancher-node04 ~]# cat /etc/docker/daemon.json
{
  "graph": "/app/docker",
  "max-concurrent-downloads": 3,
  "max-concurrent-uploads": 5,
  "insecure-registries": ["https://10.182.220.240","http://10.182.220.242"],
  "storage-driver": "overlay2",
  "storage-opts": ["overlay2.override_kernel_check=true"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m",
    "max-file": "3"
  }
}
[root@uat-rancher-node04 ~]#

Configuring multiple private registries

[rancher@uat-rancher-node01 ~]$ cat /etc/docker/daemon.json
{
  "graph": "/app/docker",
  "max-concurrent-downloads": 3,
  "max-concurrent-uploads": 5,
  "insecure-registries": ["https://10.182.220.240"],
  "storage-driver": "overlay2",
  "storage-opts": ["overlay2.override_kernel_check=true"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m",
    "max-file": "3"
  }
}
[rancher@uat-rancher-node01 ~]$

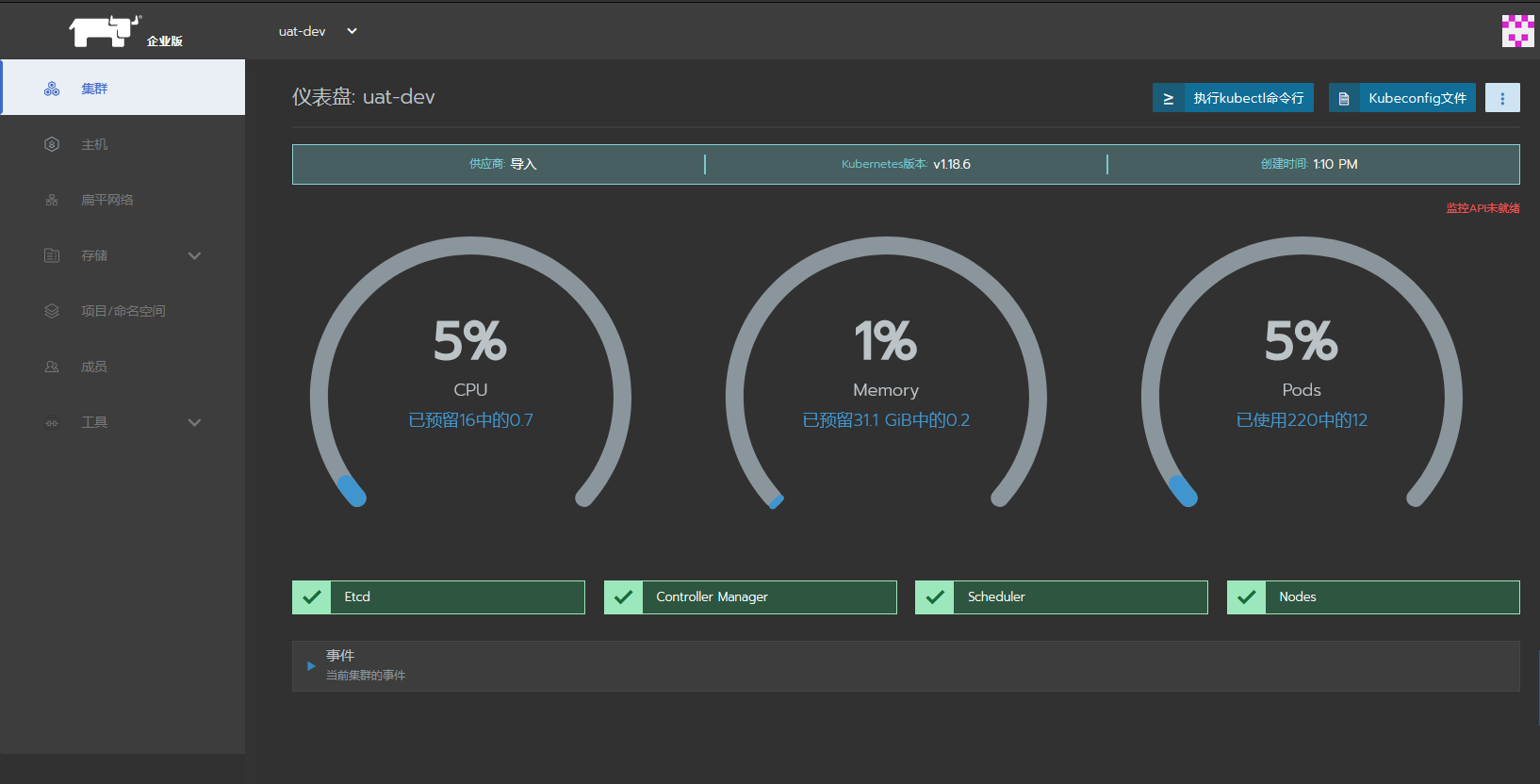

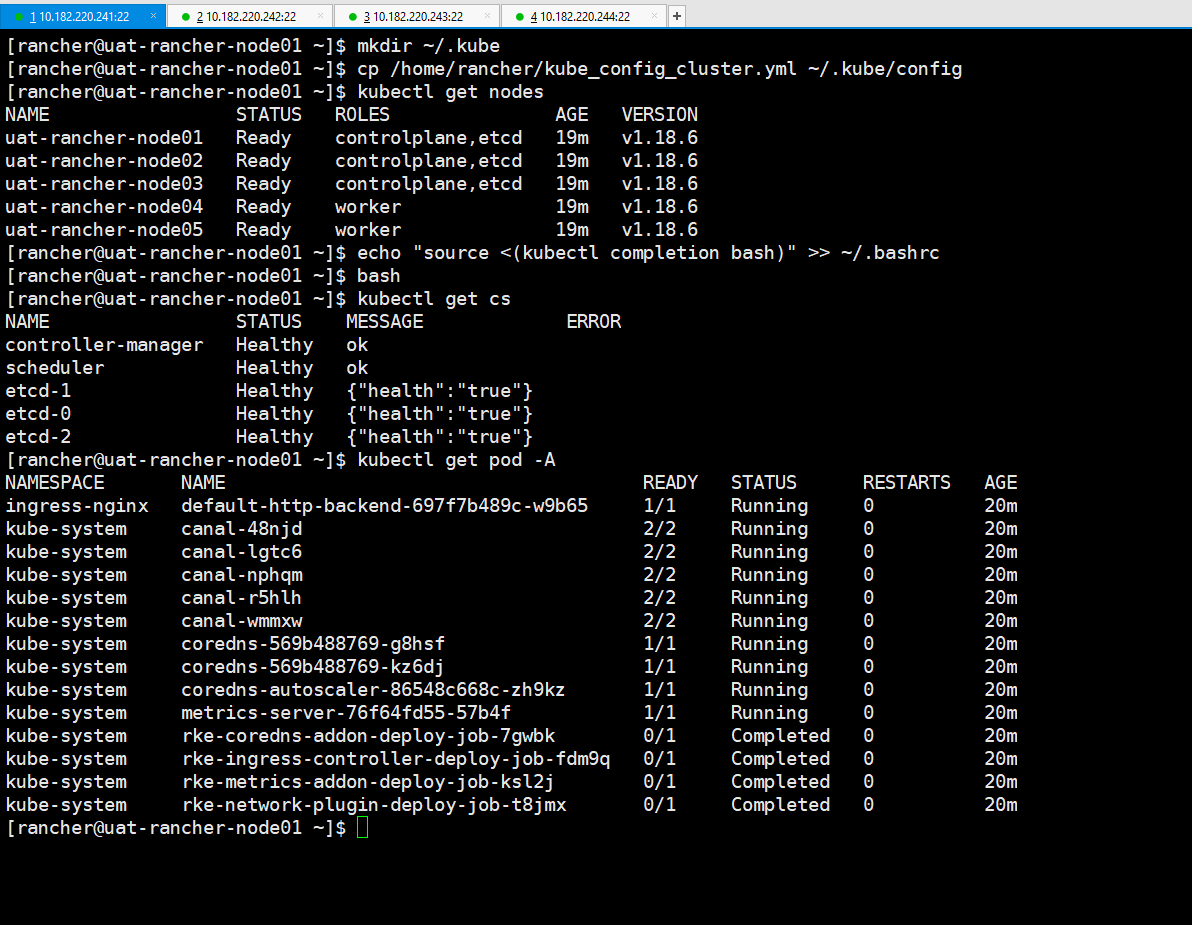

Deploying the Kubernetes cluster with RKE

##Configure the private registry address (certificates must be configured)

Edit the RKE deployment configuration file, cluster.yml

[rancher@uat-rancher-node01 ~]$ cat cluster.yml
nodes:
  - address: 10.182.220.241
    hostname_override: uat-rancher-node01
    internal_address:
    user: rancher
    role: [controlplane,etcd]
  - address: 10.182.220.242
    hostname_override: uat-rancher-node02
    internal_address:
    user: rancher
    role: [controlplane,etcd]
  - address: 10.182.220.243
    hostname_override: uat-rancher-node03
    internal_address:
    user: rancher
    role: [controlplane,etcd]
  - address: 10.182.220.244
    hostname_override: uat-rancher-node04
    internal_address:
    user: rancher
    role: [worker]
  - address: 10.182.220.245
    hostname_override: uat-rancher-node05
    internal_address:
    user: rancher
    role: [worker]
# Define the Kubernetes version
kubernetes_version: v1.18.6-rancher1-2
# To use images from a private registry, set the following parameters to specify the default private registry address. Certificates must be enabled.
private_registries:
  - url: 10.182.220.240
    user: liwm
    # Quoted because a leading ! would otherwise be parsed as a YAML tag
    password: '!Q2w3e4r'
    is_default: true
services:
  etcd:
    # Extra arguments
    extra_args:
      # Keep 240 hours of history and compact older revisions automatically. After history is compacted via auto-compaction-retention, the backend database may show internal fragmentation. Internal fragmentation is free space that the backend can reuse but that still consumes storage; defragmentation releases this space back to the file system
      auto-compaction-retention: 240 # (unit: hours)
      # Raise the space quota to 6442450944 bytes; default 2G, maximum 8G
      quota-backend-bytes: '6442450944'
    # Automatic backup
    snapshot: true
    creation: 5m0s
    retention: 24h
  kubelet:
    extra_args:
      # Enable static Pods. Create a manifest directory under /etc/kubernetes/ on the host and place the Pod YAML files in /etc/kubernetes/manifest/
      pod-manifest-path: "/etc/kubernetes/manifest/"
# Several network plugins are available: flannel, canal, calico; Rancher2 defaults to canal
network:
  plugin: canal
  options:
    flannel_backend_type: "vxlan"
# Set provider: none to disable the ingress controller
ingress:
  provider: nginx
  node_selector:
    app: ingress
[rancher@uat-rancher-node01 ~]$

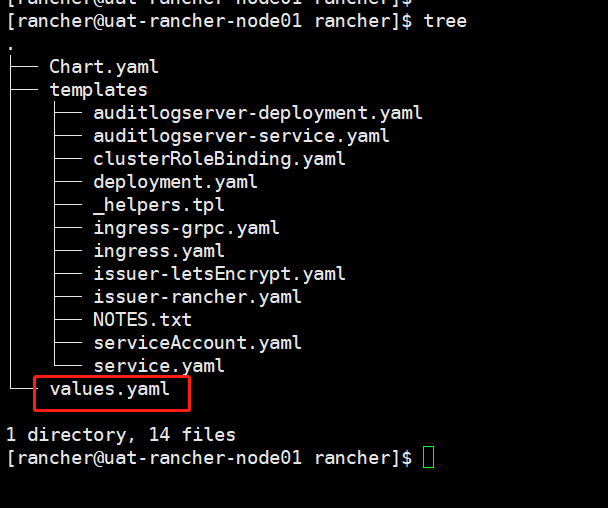

Deploying Rancher with Helm

Certificate configuration for Helm

[rancher@uat-rancher-node01 ~]$ cat helm.sh
#!/bin/bash -e

# Items marked with * must be changed
# * Server FQDN or issuer name (replace with your own domain)
CN='rancher'

# Additional trusted IPs or domain names
## An SSL certificate normally trusts only requests made by domain name. To reach the server by IP, add the IPs to the certificate as extensions, separated by commas. List the node IPs and the load balancer IP.
SSL_IP='10.182.220.241,10.182.220.242,10.182.220.243,10.182.220.244,10.182.220.245,10.182.220.246'
SSL_DNS=''

# Country name (two-letter code)
C=CN

# Key size in bits
SSL_SIZE=2048

# Certificate validity period (days)
DATE=${DATE:-3650}

# Configuration file
SSL_CONFIG='openssl.cnf'

if [[ -z $SILENT ]]; then
echo "----------------------------"
echo "| SSL Cert Generator |"
echo "----------------------------"
echo
fi

export CA_KEY=${CA_KEY-"cakey.pem"}
export CA_CERT=${CA_CERT-"cacerts.pem"}
export CA_SUBJECT=ca-$CN
export CA_EXPIRE=${DATE}

export SSL_CONFIG=${SSL_CONFIG}
export SSL_KEY=$CN.key
export SSL_CSR=$CN.csr
export SSL_CERT=$CN.crt
export SSL_EXPIRE=${DATE}

export SSL_SUBJECT=${CN}
export SSL_DNS=${SSL_DNS}
export SSL_IP=${SSL_IP}

export K8S_SECRET_COMBINE_CA=${K8S_SECRET_COMBINE_CA:-'true'}

[[ -z $SILENT ]] && echo "--> Certificate Authority"

if [[ -e ./${CA_KEY} ]]; then
    [[ -z $SILENT ]] && echo "====> Using existing CA Key ${CA_KEY}"
else
    [[ -z $SILENT ]] && echo "====> Generating new CA key ${CA_KEY}"
    openssl genrsa -out ${CA_KEY} ${SSL_SIZE} > /dev/null
fi

if [[ -e ./${CA_CERT} ]]; then
    [[ -z $SILENT ]] && echo "====> Using existing CA Certificate ${CA_CERT}"
else
    [[ -z $SILENT ]] && echo "====> Generating new CA Certificate ${CA_CERT}"
    openssl req -x509 -sha256 -new -nodes -key ${CA_KEY} -days ${CA_EXPIRE} -out ${CA_CERT} -subj "/CN=${CA_SUBJECT}" > /dev/null || exit 1
fi

echo "====> Generating new config file ${SSL_CONFIG}"
cat > ${SSL_CONFIG} <<EOM
[req]
req_extensions = v3_req
distinguished_name = req_distinguished_name
[req_distinguished_name]
[ v3_req ]
basicConstraints = CA:FALSE
keyUsage = nonRepudiation, digitalSignature, keyEncipherment
extendedKeyUsage = clientAuth, serverAuth
EOM

if [[ -n ${SSL_DNS} || -n ${SSL_IP} ]]; then
    cat >> ${SSL_CONFIG} <<EOM
subjectAltName = @alt_names
[alt_names]
EOM
    IFS=","
    dns=(${SSL_DNS})
    dns+=(${SSL_SUBJECT})
    for i in "${!dns[@]}"; do
      echo DNS.$((i+1)) = ${dns[$i]} >> ${SSL_CONFIG}
    done

    if [[ -n ${SSL_IP} ]]; then
        ip=(${SSL_IP})
        for i in "${!ip[@]}"; do
          echo IP.$((i+1)) = ${ip[$i]} >> ${SSL_CONFIG}
        done
    fi
fi

[[ -z $SILENT ]] && echo "====> Generating new SSL KEY ${SSL_KEY}"
openssl genrsa -out ${SSL_KEY} ${SSL_SIZE} > /dev/null || exit 1

[[ -z $SILENT ]] && echo "====> Generating new SSL CSR ${SSL_CSR}"
openssl req -sha256 -new -key ${SSL_KEY} -out ${SSL_CSR} -subj "/CN=${SSL_SUBJECT}" -config ${SSL_CONFIG} > /dev/null || exit 1

[[ -z $SILENT ]] && echo "====> Generating new SSL CERT ${SSL_CERT}"
openssl x509 -sha256 -req -in ${SSL_CSR} -CA ${CA_CERT} -CAkey ${CA_KEY} -CAcreateserial -out ${SSL_CERT} \
    -days ${SSL_EXPIRE} -extensions v3_req -extfile ${SSL_CONFIG} > /dev/null || exit 1

if [[ -z $SILENT ]]; then
echo "====> Complete"
echo "keys can be found in volume mapped to $(pwd)"
echo
echo "====> Output results as YAML"
echo "---"
echo "ca_key: |"
cat $CA_KEY | sed 's/^/ /'
echo
echo "ca_cert: |"
cat $CA_CERT | sed 's/^/ /'
echo
echo "ssl_key: |"
cat $SSL_KEY | sed 's/^/ /'
echo
echo "ssl_csr: |"
cat $SSL_CSR | sed 's/^/ /'
echo
echo "ssl_cert: |"
cat $SSL_CERT | sed 's/^/ /'
echo
fi

if [[ -n $K8S_SECRET_NAME ]]; then

  if [[ -n $K8S_SECRET_COMBINE_CA ]]; then
    [[ -z $SILENT ]] && echo "====> Adding CA to Cert file"
    cat ${CA_CERT} >> ${SSL_CERT}
  fi

  [[ -z $SILENT ]] && echo "====> Creating Kubernetes secret: $K8S_SECRET_NAME"
  kubectl delete secret $K8S_SECRET_NAME --ignore-not-found

  if [[ -n $K8S_SECRET_SEPARATE_CA ]]; then
    kubectl create secret generic \
    $K8S_SECRET_NAME \
    --from-file="tls.crt=${SSL_CERT}" \
    --from-file="tls.key=${SSL_KEY}" \
    --from-file="ca.crt=${CA_CERT}"
  else
    kubectl create secret tls \
    $K8S_SECRET_NAME \
    --cert=${SSL_CERT} \
    --key=${SSL_KEY}
  fi

  if [[ -n $K8S_SECRET_LABELS ]]; then
    [[ -z $SILENT ]] && echo "====> Labeling Kubernetes secret"
    IFS=$' \n\t' # We have to reset IFS or label secret will misbehave on some systems
    kubectl label secret \
      $K8S_SECRET_NAME \
      $K8S_SECRET_LABELS
  fi
fi

echo "4. Rename the service certificate"
mv ${CN}.key tls.key
mv ${CN}.crt tls.crt

# Import the generated certificates into Kubernetes as secrets
## * Path to the Kubernetes kubeconfig file
kubeconfig=/home/rancher/.kube/config

kubectl --kubeconfig=$kubeconfig create namespace cattle-system
kubectl --kubeconfig=$kubeconfig -n cattle-system create secret tls tls-rancher-ingress --cert=./tls.crt --key=./tls.key
kubectl --kubeconfig=$kubeconfig -n cattle-system create secret generic tls-ca --from-file=cacerts.pem
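The script builds the [alt_names] section by splitting the comma-separated SSL_DNS and SSL_IP values on IFS and numbering the entries. That step can be isolated as in the sketch below. The function name `alt_names` is hypothetical, and unlike the original, which sets IFS for the rest of the script and appends the CN to the DNS list itself, this version keeps the IFS change inside a subshell and expects the caller to pass every DNS name.

```shell
#!/bin/sh
# Sketch only: emit the [alt_names] entries for comma-separated DNS names and IPs.
alt_names() (
  IFS=','   # split on commas; the subshell keeps this change local
  set -f    # disable globbing while the unquoted variables are split
  i=0
  for name in $1; do
    i=$((i + 1))
    echo "DNS.$i = $name"
  done
  i=0
  for addr in $2; do
    i=$((i + 1))
    echo "IP.$i = $addr"
  done
)

alt_names 'rancher' '10.182.220.241,10.182.220.242'
```

With the arguments shown, the function prints one DNS entry followed by two IP entries, which is the shape of the block the script appends to openssl.cnf.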

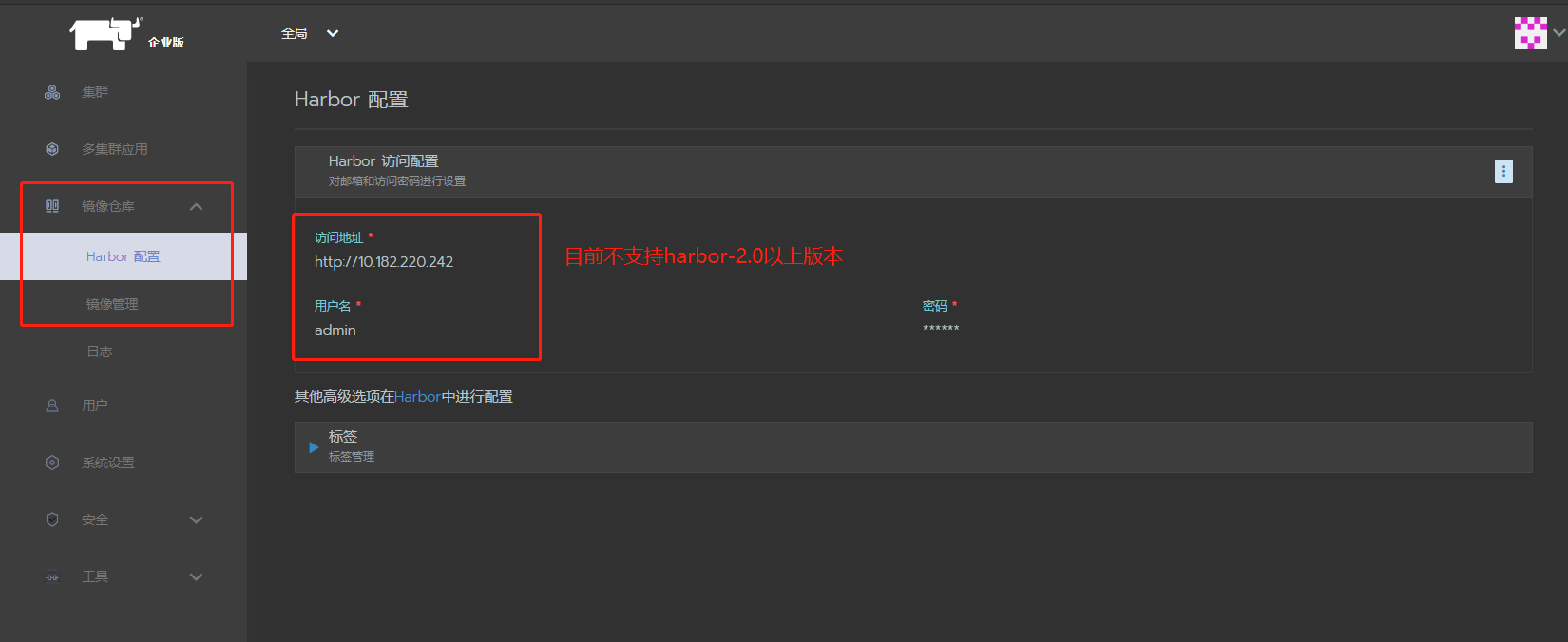

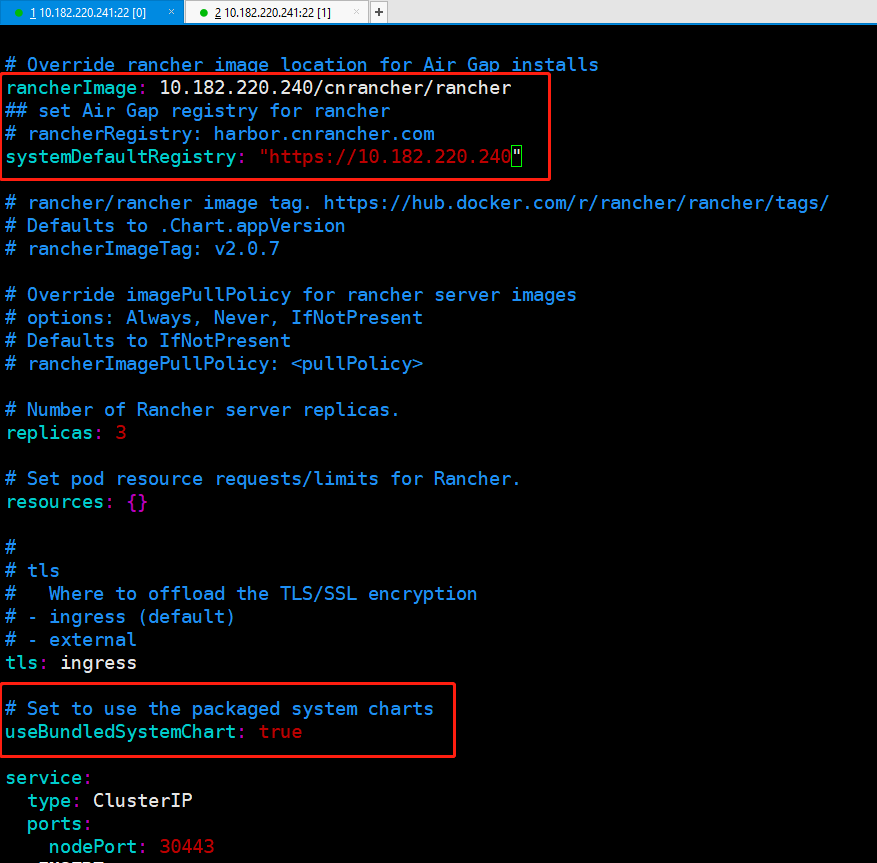

Configure the registry address

[rancher@uat-rancher-node01 rancher]$ cat values.yaml
# Additional Trusted CAs.
# Enable this flag and add your CA certs as a secret named tls-ca-additional in the namespace.
# See README.md for details.
additionalTrustedCAs: false

antiAffinity: preferred

# Audit Logs https://rancher.com/docs/rancher/v2.x/en/installation/api-auditing/
# The audit log is piped to the console of the rancher-audit-log container in the rancher pod.
# https://rancher.com/docs/rancher/v2.x/en/installation/api-auditing/
# destination stream to sidecar container console or hostPath volume
# level: Verbosity of logs, 0 to 3. 0 is off 3 is a lot.
auditLog:
  destination: sidecar
  hostPath: /var/log/rancher/audit/
  level: 0
  maxAge: 1
  maxBackup: 1
  maxSize: 100
  fluentbitImage: 10.182.220.240/cnrancher/rancher-auditlog-fluentbit
  fluentbitImageTag: v1.0.0

# The Mysql should be deployed manually and create the user and database schema for auditlog server.
# You should use nonadministrative account and a high strength password to connect to the Mysql.
auditLogServer:
  image: 10.182.220.240/cnrancher/rancher-auditlog-server
  imageTag: v1.0.0
  replicas: 1
  antiAffinity: preferred
  serverPort: 9000
  DBHost: localhost
  DBPort: 3306
  DBUser: root
  DBPassword: password
  DBName: rancher

# Have Rancher detect and import the "local" Rancher server cluster
# Adding the "local" cluster available in the GUI can be convenient, but any user with access to this cluster has "root" on any of the clusters that Rancher manages.
# options; "auto", "false". (auto pretty much means true)
addLocal: "auto"

# Image for collecting rancher audit logs.
# Important: update pkg/image/export/main.go when this default image is changed, so that it's reflected accordingly in rancher-images.txt generated for air-gapped setups.
busyboxImage: 10.182.220.240/dev/busybox

# Add debug flag to Rancher server
debug: false

# Extra environment variables passed to the rancher pods.
# extraEnv:
# - name: CATTLE_TLS_MIN_VERSION
#   value: "1.0"

# Fully qualified name to reach your Rancher server
# hostname: rancher.my.org

## Optional array of imagePullSecrets containing private registry credentials
## Ref: https://kubernetes.io/docs/tasks/configure-pod-container/pull-image-private-registry/
imagePullSecrets: []
# - name: secretName

### ingress ###
# Readme for details and instruction on adding tls secrets.
ingress:
  extraAnnotations:
    nginx.ingress.kubernetes.io/proxy-connect-timeout: "30"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "1800"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "1800"
  # configurationSnippet - Add additional Nginx configuration. This example statically sets a header on the ingress.
  # configurationSnippet: |
  #   more_set_input_headers "X-Forwarded-Host: {{ .Values.hostname }}";
  configurationSnippet: |
    more_clear_headers Server;
  tls:
    # options: rancher, letsEncrypt, secret
    source: rancher

### LetsEncrypt config ###
# ProTip: The production environment only allows you to register a name 5 times a week.
# Use staging until you have your config right.
letsEncrypt:
  # email: none@example.com
  environment: production

# If you are using certs signed by a private CA set to 'true' and set the 'tls-ca'
# in the 'rancher-system' namespace. See the README.md for details
privateCA: false

# http[s] proxy server passed into rancher server.
# proxy: http://<username>@<password>:<url>:<port>

# comma separated list of domains or ip addresses that will not use the proxy
noProxy: 127.0.0.0/8,10.0.0.0/8,172.16.0.0/12,192.168.0.0/16

# Override rancher image location for Air Gap installs
rancherImage: 10.182.220.240/cnrancher/rancher
## set Air Gap registry for rancher
# rancherRegistry: harbor.cnrancher.com
systemDefaultRegistry: ""
# rancher/rancher image tag. https://hub.docker.com/r/rancher/rancher/tags/
# Defaults to .Chart.appVersion
# rancherImageTag: v2.0.7

# Override imagePullPolicy for rancher server images
# options: Always, Never, IfNotPresent
# Defaults to IfNotPresent
# rancherImagePullPolicy: <pullPolicy>

# Number of Rancher server replicas.
replicas: 3

# Set pod resource requests/limits for Rancher.
resources: {}

## tls
# Where to offload the TLS/SSL encryption
# - ingress (default)
# - external
tls: ingress

# Set to use the packaged system charts
useBundledSystemChart: false

service:
  type: ClusterIP
  ports:
    nodePort: 30443

# Certmanager version compatibility
certmanager:
  version: ""
[rancher@uat-rancher-node01 rancher]$

helm install rancher rancher/ --namespace cattle-system --set rancherImage=cnrancher/rancher --set service.type=NodePort --set service.ports.nodePort=30001 --set tls=internal --set privateCA=true