- PV and PVC concepts

- PV lifecycle

- CSI storage interface

- Create a pod that uses a PVC

- Operate on the master node


- https://github.com/kubernetes-incubator/external-storage

- Install the NFS server


- Deploy the StorageClass plugin

- Modify the YAML

- Test 1: creating a PVC automatically creates a PV and binds it

- Test 2: creating a Pod automatically creates the PVC and PV

- Test 3: make the NFS StorageClass the default, create a Pod without specifying a StorageClass, and check whether the PVC request succeeds

- Test: write a YAML file that does not specify a StorageClass

- Use ConfigMap environment variables on the Pod command line

- Create the Secret YAML

- Use a Secret in Pod env

- Custom environment variables: name: SECRET_USERNAME, name: SECRET_PASSWORD

- Mount a Secret as a volume

- Download the offline installer package

wget https://github.com/goharbor/harbor/releases/download/v1.10.0/harbor-offline-installer-v1.10.0.tgz

- Download docker-compose

wget https://docs.rancher.cn/download/compose/v1.25.4-docker-compose-Linux-x86_64

chmod +x v1.25.4-docker-compose-Linux-x86_64 && mv v1.25.4-docker-compose-Linux-x86_64 /usr/local/bin/docker-compose

- Edit Docker's daemon.json to add the private registry as a trusted registry
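The following is a minimal sketch of the daemon.json step. It assumes Harbor at 192.168.31.131:8080 is served over plain HTTP, since the docker login and push commands in this section use that address without https. In that case Docker has to list the registry under `insecure-registries`. The sketch writes a local daemon.json. On each Docker host, merge the key into /etc/docker/daemon.json alongside any keys already there, then restart Docker.

```shell
#!/bin/sh
# Sketch: daemon.json content for an HTTP-only Harbor registry.
# Assumption: Harbor listens on plain HTTP at 192.168.31.131:8080.
set -eu

cat << 'EOF' > daemon.json
{
  "insecure-registries": ["192.168.31.131:8080"]
}
EOF

# On the Docker host: merge this into /etc/docker/daemon.json, then
#   systemctl restart docker
cat daemon.json
```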

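- Before installing Harbor, edit the harbor.yml file in the Harbor installation directory

./install.sh

Or, to also install the Trivy and Clair vulnerability scanners:

./install.sh --with-trivy --with-clair

- Log in to the web UI, create a user, set its password, and create a project

user: liwm

password: AAbb0101

- Log in to the private registry with docker login

docker login 192.168.31.131:8080

- Push the image to the damon user's private repository

docker tag nginx:latest 192.168.31.131:8080/test/nginx:latest

docker push 192.168.31.131:8080/test/nginx:latest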

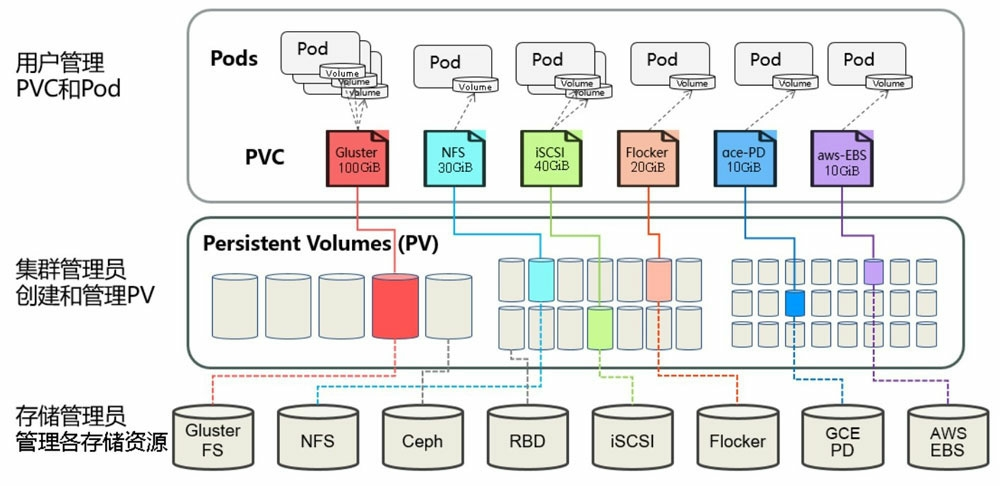

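PV and PVC concepts

A PersistentVolume (PV) is an administrator-facing resource tied directly to the underlying storage. Its lifecycle is independent of any individual Pod that uses the PV.

A PersistentVolumeClaim (PVC) is how a user requests storage. It states the required capacity and access mode.

PV as a storage resource

A PV mainly defines key settings such as storage capacity, access modes, storage type, reclaim policy, and backend storage type.

PVC as a user's request for storage

A PVC mainly defines the requested storage size, access modes, PV selection criteria, and storage class.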
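A PVC expresses its PV selection criteria as a label selector. This minimal sketch assumes a PV labelled `type: local` in the `manual` class, like the `task-pv-volume` PV created later in this document. The claim name `selected-claim` is hypothetical. A claim with a selector is only matched against existing PVs, and Kubernetes does not dynamically provision a volume for it.

```shell
#!/bin/sh
# Sketch: a PVC that only binds to PVs labelled type=local in class "manual".
# The claim name "selected-claim" is hypothetical.
set -eu

cat << 'EOF' > pvc-selector.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: selected-claim
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 3Gi
  selector:
    matchLabels:
      type: local       # must match the PV's metadata.labels
EOF
# Apply with: kubectl apply -f pvc-selector.yaml
```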

Kubernetes 1.0

Introduced the PersistentVolume (PV) and PersistentVolumeClaim (PVC) resource objects, which form the storage management subsystem.

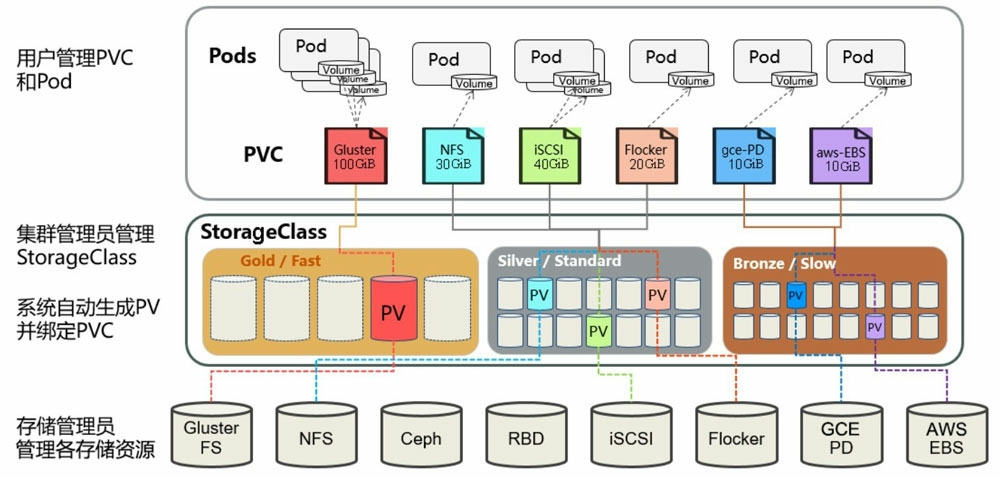

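Kubernetes 1.4

Introduced a new resource object, StorageClass, which describes the characteristics and performance of storage resources.

Kubernetes 1.7

Local volume management: every partition other than the primary one can be exposed as a local persistent volume for Kubernetes to schedule, following the Kubernetes PV/PVC model.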
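This sketch shows how such a local volume might be declared. It assumes a spare partition is already formatted and mounted at /mnt/disks/vol1 on node01. Both the path and the resource names are hypothetical. A local PV must carry `nodeAffinity` so that Pods using it are scheduled onto that node. The `kubernetes.io/no-provisioner` StorageClass with `WaitForFirstConsumer` delays binding until a Pod is scheduled.

```shell
#!/bin/sh
# Sketch: a statically created local PV pinned to one node.
# Assumptions: node "node01" and mount point /mnt/disks/vol1 are hypothetical.
set -eu

cat << 'EOF' > local-pv.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-storage
provisioner: kubernetes.io/no-provisioner   # local PVs are created by hand
volumeBindingMode: WaitForFirstConsumer     # bind only once a Pod is scheduled
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: local-pv-node01
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /mnt/disks/vol1
  nodeAffinity:               # required for local volumes
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - node01
EOF
```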

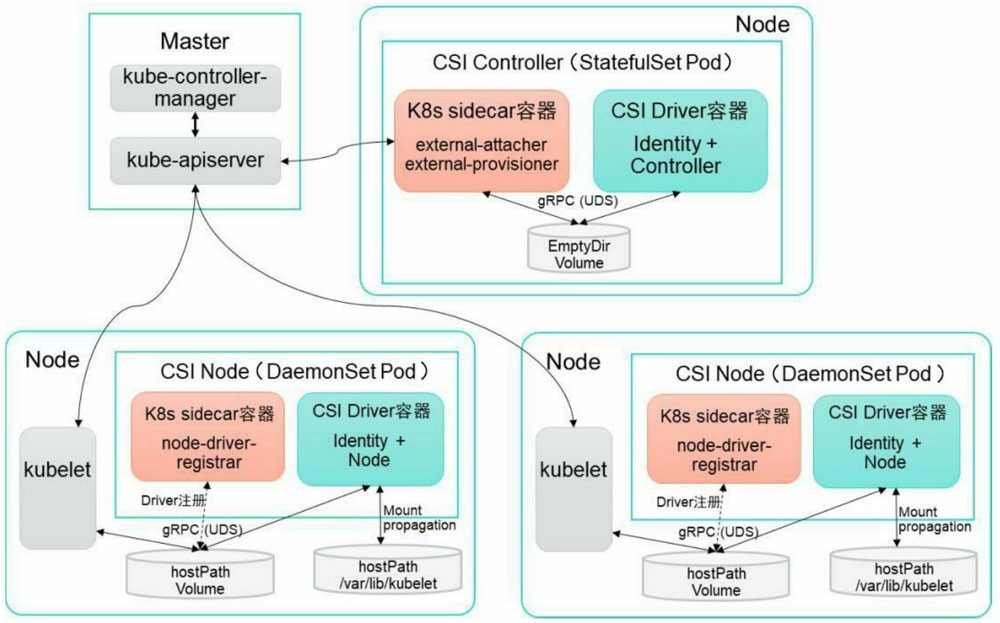

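Kubernetes 1.9

Introduced the Container Storage Interface (CSI) mechanism.

Its goal is a standard storage management interface between Kubernetes and external storage systems, through which storage is provided to containers, similar to the CRI (Container Runtime Interface) and CNI (Container Network Interface).

Kubernetes 1.13

Introduced the volume mode setting (volumeMode=xxx), with the options Filesystem and Block (raw block device). The default is Filesystem. Block mode is used with block storage such as RBD (Ceph Block Device).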
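This sketch shows a Block-mode claim and a Pod that consumes it. It assumes a StorageClass that can provision raw block volumes. The names `block-class`, `block-pvc`, and `block-pod` are hypothetical. The container sees a Block volume as a device listed under `volumeDevices`, not as a directory listed under `volumeMounts`.

```shell
#!/bin/sh
# Sketch: request a raw block volume and expose it to a container as a device.
# Assumption: "block-class" is a hypothetical block-capable StorageClass.
set -eu

cat << 'EOF' > block-pvc-pod.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: block-pvc
spec:
  storageClassName: block-class
  volumeMode: Block            # the default would be Filesystem
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: block-pod
spec:
  containers:
    - name: app
      image: busybox
      command: ["sh", "-c", "sleep 3600"]
      volumeDevices:           # block volumes use volumeDevices, not volumeMounts
        - name: data
          devicePath: /dev/xvda
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: block-pvc
EOF
```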

PV access modes:

- ReadWriteOnce (RWO): the volume can be mounted read-write by a single node.

- ReadOnlyMany (ROX): the volume can be mounted read-only by many nodes.

- ReadWriteMany (RWX): the volume can be mounted read-write by many nodes.

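A PV can have one of three reclaim policies: Retain, Recycle, and Delete.

- Retain: the data is kept for manual cleanup. This is the default for manually created PVs. Dynamically provisioned PVs take the reclaimPolicy of their StorageClass, which defaults to Delete.

- Recycle: the volume is scrubbed and can then be claimed by a new PVC. Note: the Recycle policy is deprecated, and dynamic provisioning is the recommended approach.

- Delete: the PV and the associated external storage asset are deleted. This requires support from the volume plugin.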

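PV phases:

- Available: the volume is not yet bound to a claim.

- Bound: the volume is bound to a claim.

- Released: the claim has been deleted, but the cluster has not yet reclaimed the volume.

- Failed: automatic reclamation of the volume failed.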

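PVs and PVCs can be used in two ways:

Static

The cluster administrator creates a number of PVs, and users submit PVCs that bind to them. If none of the administrator's PVs matches a user's PVC, the PVC stays Pending.

Dynamic

When none of the administrator's static PVs matches a user's PVC, a PV is created automatically from a StorageClass and bound to the claim. This requires the administrator to have created and configured the StorageClass.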
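In the static case, a claim can opt out of dynamic provisioning entirely by setting `storageClassName` to an empty string. It then binds only to PVs that have no class, and it stays Pending if none matches. This is a minimal sketch, and the claim name `static-only-claim` is hypothetical.

```shell
#!/bin/sh
# Sketch: a claim that never triggers dynamic provisioning.
# storageClassName: "" means "bind only to PVs without a class".
# The claim name "static-only-claim" is hypothetical.
set -eu

cat << 'EOF' > pvc-static-only.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: static-only-claim
spec:
  storageClassName: ""
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
EOF
```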

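PV lifecycle

CSI storage interface

Shared storage for the cluster

The first approach is to integrate the Kubernetes cluster with traditional storage through Samba, NFS, or GlusterFS. This approach extends easily to cloud-based shared file systems such as Amazon EFS, Azure Files, and Google Cloud Filestore.

In this architecture, the storage layer is completely decoupled from the compute layer that Kubernetes manages. Pods can consume shared storage in two ways:

Native provisioning: most shared file systems either have a volume plugin built into upstream Kubernetes or provide a Container Storage Interface (CSI) driver. This lets cluster administrators define Persistent Volumes declaratively, using parameters specific to the shared file system or managed service.

Host-based provisioning: a startup script runs on every node responsible for mounting the shared storage. Each node in the cluster therefore exposes the same well-known mount point to workloads, and Persistent Volumes point to that host directory through hostPath or Local PV.

The underlying storage handles durability and persistence, so workloads are fully decoupled from it. Pods can be scheduled on any node without node affinity rules to pin them to selected nodes.

However, this approach is a poor fit for stateful workloads that need high I/O throughput. Shared file systems are not designed to deliver the IOPS that relational databases, NoSQL databases, and other write-intensive workloads require.

Storage options: GlusterFS, Samba, NFS, Amazon EFS, Azure Files, Google Cloud Filestore.

Typical workloads: content management systems, machine learning training/inference jobs, and digital asset management systems.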

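Kubernetes maintains the desired configuration state through controllers. Deployment, ReplicaSet, DaemonSet, and StatefulSet are some commonly used controllers.

A StatefulSet is a special kind of controller that makes it easy to run clustered workloads on Kubernetes. Clustered workloads usually have one or more masters and several slaves. Most databases are designed to run in cluster mode to provide high availability and fault tolerance.

Stateful clustered workloads continuously replicate data between masters and slaves. To do this, the cluster infrastructure expects the participating entities (masters and slaves) to have consistent, well-known endpoints so they can reliably synchronize state. In Kubernetes, however, Pods are designed to be short-lived and are not guaranteed to keep the same name or IP address.

Stateful clustered workloads also need durable backend storage that is fault tolerant and can handle high IOPS.

StatefulSets were introduced to make it easier to run stateful clustered workloads on Kubernetes. Pods in a StatefulSet are guaranteed stable, unique identifiers. They follow a predictable naming convention and support ordered, graceful deployment and scaling.

Each Pod in a StatefulSet has a corresponding PersistentVolumeClaim (PVC) that follows a similar naming convention. When a Pod is terminated and rescheduled on a different node, the Kubernetes controller associates it with the same PVC, so its state stays intact.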
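The naming convention works as follows. Pods are named `<statefulset>-<ordinal>`. Each PVC created from a volumeClaimTemplate is named `<template>-<statefulset>-<ordinal>`. The values in this sketch match the `web` StatefulSet with the `www` claim template used later in this document, whose PVCs appear as www-web-0, www-web-1, and www-web-2.

```shell
#!/bin/sh
# Sketch of StatefulSet naming:
#   Pod: <statefulset-name>-<ordinal>
#   PVC: <volumeClaimTemplate-name>-<statefulset-name>-<ordinal>
set -eu

sts=web        # StatefulSet name
template=www   # volumeClaimTemplates[].metadata.name
replicas=3

pvcs=""
i=0
while [ "$i" -lt "$replicas" ]; do
  pod="${sts}-${i}"
  pvc="${template}-${sts}-${i}"
  echo "pod=${pod} pvc=${pvc}"
  pvcs="${pvcs:+$pvcs }${pvc}"   # space-separated list of claim names
  i=$((i + 1))
done
```

Because the names are deterministic, a rescheduled web-0 reattaches to www-web-0, and scaling the StatefulSet down does not delete the claims of the removed replicas.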

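Each Pod in a StatefulSet has its own dedicated PVC and PV, so shared storage is not a hard requirement. A StatefulSet is still expected to be backed by a fast, reliable, durable storage layer, such as SSD-based block storage. Regular backups and snapshots can be taken on the block storage device once writes have been fully committed to disk.

Storage options: SSDs and block storage devices such as Amazon EBS, Azure Disks, and GCE PD.

Typical workloads: Apache ZooKeeper, Apache Kafka, Percona Server for MySQL, PostgreSQL Automatic Failover, and JupyterHub.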

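Data stored in a container is ephemeral, which causes problems for applications running in containers. First, when a container crashes, the kubelet restarts it, but its files are lost because the container starts in a clean state. Second, when a Pod runs several containers, those containers often need to share files. Kubernetes Volumes solve both problems.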
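This minimal sketch covers the second case, where two containers must share files. An emptyDir volume is created when the Pod starts and is mounted into both containers. Its contents survive container restarts, but it is removed together with the Pod. The Pod name `shared-volume-demo` and the writer loop are hypothetical.

```shell
#!/bin/sh
# Sketch: two containers in one Pod sharing files through an emptyDir volume.
# The Pod name and the writer command are hypothetical.
set -eu

cat << 'EOF' > shared-volume.yaml
apiVersion: v1
kind: Pod
metadata:
  name: shared-volume-demo
spec:
  volumes:
    - name: shared-html
      emptyDir: {}             # lives as long as the Pod
  containers:
    - name: nginx
      image: nginx
      imagePullPolicy: IfNotPresent
      volumeMounts:
        - name: shared-html
          mountPath: /usr/share/nginx/html
    - name: writer
      image: busybox
      imagePullPolicy: IfNotPresent
      command: ["sh", "-c", "while true; do date > /html/index.html; sleep 5; done"]
      volumeMounts:
        - name: shared-html
          mountPath: /html
EOF
```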

Create a Pod that uses a Volume

```shell
cat << EOF > test-volume.yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-volume
spec:
  containers:
  - image: nginx
    imagePullPolicy: IfNotPresent
    name: nginx-web
    volumeMounts:
    - mountPath: /usr/share/nginx/html
      name: test-volume
  volumes:
  - name: test-volume
    hostPath:
      path: /data
EOF
```

```text
[liwm@rmaster01 liwm]$ kubectl create -f test-volume.yaml
pod/test-volume created
[liwm@rmaster01 liwm]$ kubectl get pod
NAME          READY   STATUS    RESTARTS   AGE
test-volume   1/1     Running   0          2m46s
[liwm@rmaster01 liwm]$ kubectl get pod -o wide
NAME          READY   STATUS    RESTARTS   AGE     IP           NODE     NOMINATED NODE   READINESS GATES
test-volume   1/1     Running   0          2m51s   10.42.4.37   node01   <none>           <none>

[root@node01 data]# echo nginx-404 > 50x.html
[root@node01 data]# echo nginx-webserver > index.html

[liwm@rmaster01 liwm]$ curl 10.42.4.37
nginx-webserver
[liwm@rmaster01 liwm]$ kubectl exec -it test-volume bash
root@test-volume:/# cat /usr/share/nginx/html/50x.html
nginx-404
root@test-volume:/#
[liwm@rmaster01 liwm]$ kubectl describe pod test-volume
.....
Volumes:
  test-volume:
    Type:          HostPath (bare host directory volume)
    Path:          /data
    HostPathType:
```
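The empty `HostPathType:` field in the describe output means Kubernetes performs no check on /data before mounting it. This sketch shows the same Pod with an explicit type. `DirectoryOrCreate` creates the directory on the node if it does not exist. The Pod name `test-volume-typed` is hypothetical.

```shell
#!/bin/sh
# Sketch: hostPath volume with an explicit type check.
# DirectoryOrCreate creates /data on the node if it is missing.
set -eu

cat << 'EOF' > test-volume-typed.yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-volume-typed
spec:
  containers:
  - image: nginx
    imagePullPolicy: IfNotPresent
    name: nginx-web
    volumeMounts:
    - mountPath: /usr/share/nginx/html
      name: test-volume
  volumes:
  - name: test-volume
    hostPath:
      path: /data
      type: DirectoryOrCreate
EOF
```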

PV and PVC

Static provisioning

The StorageClass name manual is a custom name. It is used to bind PersistentVolumeClaim requests to this PV.

```shell
cat << EOF > pv.yaml
kind: PersistentVolume
apiVersion: v1
metadata:
  name: task-pv-volume
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteOnce
  hostPath:
    path: "/storage/pv1"
EOF
```

kubectl apply -f pv.yaml

```text
[root@master01 ~]# kubectl get pv
NAME             CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
task-pv-volume   10Gi       RWO            Retain           Available           manual                  7s
```

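The fields above describe the specific capabilities of the PV.

Access modes:

- ReadWriteOnce (RWO): the volume can be mounted read-write by a single node.

- ReadOnlyMany (ROX): the volume can be mounted read-only by many nodes.

- ReadWriteMany (RWX): the volume can be mounted read-write by many nodes.

A PV can have one of three reclaim policies: Retain, Recycle, and Delete.

- Retain: the data is kept for manual cleanup (the default for manually created PVs).

- Recycle: the volume is scrubbed and can then be claimed by a new PVC.

- Delete: the PV and the associated external storage asset are deleted. This requires plugin support.

PV phases:

- Available: the volume is not yet bound to a claim.

- Bound: the volume is bound to a claim.

- Released: the claim has been deleted, but the cluster has not yet reclaimed the volume.

- Failed: automatic reclamation of the volume failed.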

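PVC

The PV provides 10Gi.

The PVC requests 3Gi. Once bound, it receives the whole PV, so its capacity is reported as 10Gi.

PVs do not belong to any namespace because they are cluster-scoped. PVCs, by contrast, are namespaced.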

```shell
cat << EOF > pvc.yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: task-pv-claim
spec:
  storageClassName: manual
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 3Gi
EOF
```

Create a Pod that uses the PVC

```shell
cat << EOF > pod-pvc.yaml
kind: Pod
apiVersion: v1
metadata:
  name: task-pvc-pod
spec:
  volumes:
  - name: task-pv-volume
    persistentVolumeClaim:
      claimName: task-pv-claim
  containers:
  - name: task-pvc-container
    image: nginx
    imagePullPolicy: IfNotPresent
    ports:
    - containerPort: 80
      name: "http-server"
    volumeMounts:
    - mountPath: "/usr/share/nginx/html"
      name: task-pv-volume
EOF
```

```text
[liwm@rmaster01 liwm]$ kubectl create -f pv.yaml
persistentvolume/task-pv-volume created
[liwm@rmaster01 liwm]$ kubectl get pv
NAME             CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
task-pv-volume   10Gi       RWO            Retain           Available           manual                  4s
[liwm@rmaster01 liwm]$ kubectl create -f pvc.yaml
persistentvolumeclaim/task-pv-claim created
[liwm@rmaster01 liwm]$ kubectl get pvc
NAME            STATUS   VOLUME           CAPACITY   ACCESS MODES   STORAGECLASS   AGE
task-pv-claim   Bound    task-pv-volume   10Gi       RWO            manual         3s
[liwm@rmaster01 liwm]$ kubectl get pv
NAME             CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                   STORAGECLASS   REASON   AGE
task-pv-volume   10Gi       RWO            Retain           Bound    default/task-pv-claim   manual                  26s
[liwm@rmaster01 liwm]$ kubectl create -f pod-pvc.yaml
pod/task-pvc-pod created
[liwm@rmaster01 liwm]$ kubectl get pod
NAME           READY   STATUS    RESTARTS   AGE
task-pvc-pod   1/1     Running   0          3s
[liwm@rmaster01 liwm]$ kubectl get pod -o wide
NAME           READY   STATUS    RESTARTS   AGE   IP           NODE     NOMINATED NODE   READINESS GATES
task-pvc-pod   1/1     Running   0          10s   10.42.4.41   node01   <none>           <none>
[liwm@rmaster01 liwm]$ kubectl exec -it task-pvc-pod bash
root@task-pvc-pod:/# cd /usr/share/nginx/html/
root@task-pvc-pod:/usr/share/nginx/html# ls
index.html
root@task-pvc-pod:/usr/share/nginx/html# cat index.html
111

[root@node01 /]# cd storage/
[root@node01 storage]# ll
total 0
drwxr-xr-x 2 root root 24 Mar 14 13:59 pv1
[root@node01 storage]# cd pv1/
[root@node01 pv1]# ls
index.html
[root@node01 pv1]# echo 222 > index.html
[root@node01 pv1]# cat index.html
222

root@task-pvc-pod:/usr/share/nginx/html# cat index.html
222
root@task-pvc-pod:/usr/share/nginx/html#
```

Operate on the master node

[root@master01 pv1]# kubectl exec -it task-pv-pod bash

root@task-pv-pod:/# cd /usr/share/nginx/html/

root@task-pv-pod:/usr/share/nginx/html# ls

root@task-pv-pod:/usr/share/nginx/html# touch index.html

root@task-pv-pod:/usr/share/nginx/html# echo 11 > index.html

root@task-pv-pod:/usr/share/nginx/html# exit

exit

[root@master01 pv1]# curl 192.168.1.41

11

The Pod runs on node01, so check the hostPath directory on node01:

[root@node01 ~]# cd /storage/

[root@node01 storage]# ls

pv1

[root@node01 storage]# cd pv1/

[root@node01 pv1]# ls

index.html

[root@node01 pv1]

Reclaim policy

A PV's reclaim policy can be Retain, Recycle, or Delete. The PV below uses Recycle.

```shell
cat << EOF > pv.yaml
kind: PersistentVolume
apiVersion: v1
metadata:
  name: task-pv-volume
  labels:
    type: local
spec:
  storageClassName: manual
  persistentVolumeReclaimPolicy: Recycle
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteOnce
  hostPath:
    path: "/storage/pv1"
EOF
```

```text
[liwm@rmaster01 liwm]$ kubectl get pod
NAME           READY   STATUS    RESTARTS   AGE
task-pvc-pod   1/1     Running   0          5s
[liwm@rmaster01 liwm]$ kubectl get pv
NAME             CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                   STORAGECLASS   REASON   AGE
task-pv-volume   10Gi       RWO            Recycle          Bound    default/task-pv-claim   manual                  54s
[liwm@rmaster01 liwm]$ kubectl get pvc
NAME            STATUS   VOLUME           CAPACITY   ACCESS MODES   STORAGECLASS   AGE
task-pv-claim   Bound    task-pv-volume   10Gi       RWO            manual         33s
[liwm@rmaster01 liwm]$ kubectl delete pod task-pvc-pod
pod "task-pvc-pod" deleted
[liwm@rmaster01 liwm]$ kubectl delete pvc task-pv-claim
persistentvolumeclaim "task-pv-claim" deleted
[liwm@rmaster01 liwm]$ kubectl get pvc
No resources found in default namespace.
[liwm@rmaster01 liwm]$ kubectl get pv
NAME             CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
task-pv-volume   10Gi       RWO            Recycle          Available           manual                  3m7s
[liwm@rmaster01 liwm]$
```

StorageClass

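Preparing the NFS environment

Install the NFS server

```shell
yum -y install nfs-utils

# Start the service and enable it at boot
systemctl enable --now nfs

# Create the shared directory
mkdir /storage

# Edit the NFS exports file and add the following line
# (the * makes the export to all hosts explicit)
vim /etc/exports
/storage *(rw,sync,no_root_squash)

# Restart the service
systemctl restart nfs

# On the Kubernetes compute nodes
yum -y install nfs-utils

# Test from a compute node
mkdir /test
mount.nfs 172.17.224.182:/storage /test
touch /test/123
```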

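StorageClass plugin deployment

```shell
# Download the provisioner plugin
yum -y install git
# https://github.com/kubernetes-incubator/external-storage
# (this repository has since been archived; the nfs-client provisioner now
#  lives in kubernetes-sigs/nfs-subdir-external-provisioner)
git clone https://github.com/kubernetes-incubator/external-storage.git
```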

Modify the YAML

```shell
[liwm@rmaster01 deploy]$ pwd
/home/liwm/yaml/nfs-client/deploy
vim deployment.yaml
```

Set the NFS server address and export path in deployment.yaml:

```yaml
# container env
env:
  - name: PROVISIONER_NAME
    value: fuseim.pri/ifs
  - name: NFS_SERVER
    value: 192.168.31.130
  - name: NFS_PATH
    value: /storage
# Pod volumes
volumes:
  - name: nfs-client-root
    nfs:
      server: 192.168.31.130
      path: /storage
```

```text
[liwm@rmaster01 deploy]$ cat deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: jmgao1983/nfs-client-provisioner
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs
            - name: NFS_SERVER
              value: 192.168.31.130
            - name: NFS_PATH
              value: /storage
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.31.130
            path: /storage
[liwm@rmaster01 deploy]$ cat rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
[liwm@rmaster01 deploy]$ cat class.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: fuseim.pri/ifs # or choose another name, must match deployment's env PROVISIONER_NAME'
parameters:
  archiveOnDelete: "false"
[liwm@rmaster01 deploy]$
```

reclaimPolicy: two policies are available, Delete and Retain. The default is Delete.

fuseim.pri/ifs is the PROVISIONER_NAME set in the deployment above.
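To keep dynamically provisioned volumes after their claims are deleted, set reclaimPolicy on the StorageClass. This sketch assumes a second class alongside managed-nfs-storage. The name `managed-nfs-storage-retain` is hypothetical, and `provisioner` must still equal PROVISIONER_NAME (fuseim.pri/ifs).

```shell
#!/bin/sh
# Sketch: an NFS StorageClass whose PVs are kept (Retain), not deleted,
# when their PVC goes away. The class name is hypothetical.
set -eu

cat << 'EOF' > class-retain.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage-retain
provisioner: fuseim.pri/ifs   # must match PROVISIONER_NAME in deployment.yaml
reclaimPolicy: Retain         # omitted => Delete
parameters:
  archiveOnDelete: "false"
EOF
```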

Deploy the plugin:

```text
[liwm@rmaster01 liwm]$ kubectl describe storageclasses.storage.k8s.io managed-nfs-storage
Name:            managed-nfs-storage
IsDefaultClass:  No
Annotations:     kubectl.kubernetes.io/last-applied-configuration={"apiVersion":"storage.k8s.io/v1","kind":"StorageClass","metadata":{"annotations":{},"name":"managed-nfs-storage"},"parameters":{"archiveOnDelete":"false"},"provisioner":"fuseim.pri/ifs"}

Provisioner:           fuseim.pri/ifs
Parameters:            archiveOnDelete=false
AllowVolumeExpansion:  <unset>
MountOptions:          <none>
ReclaimPolicy:         Delete
VolumeBindingMode:     Immediate
Events:                <none>
[liwm@rmaster01 liwm]$
```

```text
kubectl apply -f rbac.yaml
kubectl apply -f deployment.yaml
kubectl apply -f class.yaml

[liwm@rmaster01 deploy]$ kubectl get pod
NAME                                      READY   STATUS    RESTARTS   AGE
nfs-client-provisioner-7757d98f8c-m66g5   1/1     Running   0          25s
[liwm@rmaster01 deploy]$ kubectl get storageclasses.storage.k8s.io
NAME                  PROVISIONER      RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
managed-nfs-storage   fuseim.pri/ifs   Delete          Immediate           false                  13d
[liwm@rmaster01 deploy]$
```

mount.nfs 192.168.31.130:/storage /test

Tests

Test 1: creating a PVC automatically creates a PV and binds it

```shell
cat << EOF > pvc-nfs.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nginx-test
spec:
  accessModes:
  - ReadWriteMany
  storageClassName: managed-nfs-storage
  resources:
    requests:
      storage: 1Gi
EOF
```

```text
[liwm@rmaster01 liwm]$ kubectl create -f pvc-nfs.yaml
persistentvolumeclaim/nginx-test created
[liwm@rmaster01 liwm]$ kubectl get pvc
NAME         STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          AGE
nginx-test   Bound    pvc-aa1b584b-850c-49a7-834d-77cb56a2f6e1   1Gi        RWX            managed-nfs-storage   7s
[liwm@rmaster01 liwm]$ kubectl get pv
NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                STORAGECLASS          REASON   AGE
pvc-aa1b584b-850c-49a7-834d-77cb56a2f6e1   1Gi        RWX            Delete           Bound    default/nginx-test   managed-nfs-storage            9s
[liwm@rmaster01 liwm]$
```

Test 2: creating the Pods automatically creates the PVCs and PVs

```shell
cat << EOF > statefulset-pvc-nfs.yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  selector:
    matchLabels:
      app: nginx # has to match .spec.template.metadata.labels
  serviceName: "nginx"
  replicas: 3 # by default is 1
  template:
    metadata:
      labels:
        app: nginx # has to match .spec.selector.matchLabels
    spec:
      terminationGracePeriodSeconds: 10
      containers:
      - name: nginx
        image: nginx
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
          name: web
        volumeMounts:
        - name: www
          mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
  - metadata:
      name: www
    spec:
      accessModes: [ "ReadWriteMany" ]
      storageClassName: "managed-nfs-storage"
      resources:
        requests:
          storage: 1Gi
EOF
```
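The StatefulSet sets `serviceName: "nginx"`, which refers to a headless Service that governs the Pods' network identity. The transcript does not show that Service being created. This minimal sketch follows the StatefulSet's labels and port name. With the Service in place, each Pod gets a stable DNS name such as web-0.nginx.

```shell
#!/bin/sh
# Sketch: headless Service matching serviceName "nginx" and label app=nginx.
set -eu

cat << 'EOF' > nginx-headless-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  clusterIP: None      # headless: no virtual IP, per-Pod DNS records instead
  selector:
    app: nginx
  ports:
  - port: 80
    name: web
EOF
```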

Access mode

ReadWriteMany: the volume can be mounted by multiple nodes.

```text
[liwm@rmaster01 liwm]$ kubectl create -f statefulset-pvc-nfs.yaml
[liwm@rmaster01 liwm]$ kubectl get pod
NAME                                      READY   STATUS    RESTARTS   AGE
nfs-client-provisioner-7757d98f8c-m66g5   1/1     Running   0          6m
web-0                                     1/1     Running   0          73s
web-1                                     1/1     Running   0          29s
web-2                                     1/1     Running   0          14s
[liwm@rmaster01 liwm]$ kubectl get pvc
NAME        STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          AGE
www-web-0   Bound    pvc-172176c7-e498-4e12-8e59-d5e78f5b1ef3   1Gi        RWX            managed-nfs-storage   79s
www-web-1   Bound    pvc-0da53d10-6b52-41b0-b11c-bbb0f440e924   1Gi        RWX            managed-nfs-storage   35s
www-web-2   Bound    pvc-c7380539-7aa9-49c5-9cc5-69c0b9ee8970   1Gi        RWX            managed-nfs-storage   20s
[liwm@rmaster01 liwm]$ kubectl get pv
NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM               STORAGECLASS          REASON   AGE
pvc-0da53d10-6b52-41b0-b11c-bbb0f440e924   1Gi        RWX            Delete           Bound    default/www-web-1   managed-nfs-storage            36s
pvc-172176c7-e498-4e12-8e59-d5e78f5b1ef3   1Gi        RWX            Delete           Bound    default/www-web-0   managed-nfs-storage            79s
pvc-c7380539-7aa9-49c5-9cc5-69c0b9ee8970   1Gi        RWX            Delete           Bound    default/www-web-2   managed-nfs-storage            21s
[liwm@rmaster01 liwm]$ kubectl scale statefulset --replicas=1 web
statefulset.apps/web scaled
[liwm@rmaster01 liwm]$ kubectl get pod
NAME                                      READY   STATUS    RESTARTS   AGE
nfs-client-provisioner-7757d98f8c-m66g5   1/1     Running   0          6m56s
web-0                                     1/1     Running   0          2m9s
[liwm@rmaster01 liwm]$ kubectl get pvc
NAME        STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          AGE
www-web-0   Bound    pvc-172176c7-e498-4e12-8e59-d5e78f5b1ef3   1Gi        RWX            managed-nfs-storage   2m15s
www-web-1   Bound    pvc-0da53d10-6b52-41b0-b11c-bbb0f440e924   1Gi        RWX            managed-nfs-storage   91s
www-web-2   Bound    pvc-c7380539-7aa9-49c5-9cc5-69c0b9ee8970   1Gi        RWX            managed-nfs-storage   76s
[liwm@rmaster01 liwm]$ kubectl get pv
NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM               STORAGECLASS          REASON   AGE
pvc-0da53d10-6b52-41b0-b11c-bbb0f440e924   1Gi        RWX            Delete           Bound    default/www-web-1   managed-nfs-storage            93s
pvc-172176c7-e498-4e12-8e59-d5e78f5b1ef3   1Gi        RWX            Delete           Bound    default/www-web-0   managed-nfs-storage            2m16s
pvc-c7380539-7aa9-49c5-9cc5-69c0b9ee8970   1Gi        RWX            Delete           Bound    default/www-web-2   managed-nfs-storage            78s
[liwm@rmaster01 liwm]$ kubectl get pod -o wide
NAME                                      READY   STATUS    RESTARTS   AGE     IP           NODE     NOMINATED NODE   READINESS GATES
nfs-client-provisioner-7757d98f8c-m66g5   1/1     Running   0          9m2s    10.42.4.44   node01   <none>           <none>
web-0                                     1/1     Running   0          4m15s   10.42.4.46   node01   <none>           <none>
[liwm@rmaster01 liwm]$ kubectl get pvc
NAME        STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          AGE
www-web-0   Bound    pvc-172176c7-e498-4e12-8e59-d5e78f5b1ef3   1Gi        RWX            managed-nfs-storage   5m16s
www-web-1   Bound    pvc-0da53d10-6b52-41b0-b11c-bbb0f440e924   1Gi        RWX            managed-nfs-storage   4m32s
www-web-2   Bound    pvc-c7380539-7aa9-49c5-9cc5-69c0b9ee8970   1Gi        RWX            managed-nfs-storage   4m17s
[liwm@rmaster01 liwm]$ kubectl get pod
NAME                                      READY   STATUS    RESTARTS   AGE
nfs-client-provisioner-7757d98f8c-m66g5   1/1     Running   0          10m
web-0                                     1/1     Running   0          5m26s
[liwm@rmaster01 liwm]$ kubectl delete pvc www-web-1
persistentvolumeclaim "www-web-1" deleted
[liwm@rmaster01 liwm]$ kubectl delete pvc www-web-2
persistentvolumeclaim "www-web-2" deleted
[liwm@rmaster01 liwm]$ kubectl get pvc
NAME        STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          AGE
www-web-0   Bound    pvc-172176c7-e498-4e12-8e59-d5e78f5b1ef3   1Gi        RWX            managed-nfs-storage   6m9s
[liwm@rmaster01 liwm]$ kubectl exec -it web-0 bash
root@web-0:/# cd /usr/share/nginx/html/
root@web-0:/usr/share/nginx/html# ls
root@web-0:/usr/share/nginx/html# echo nfs-server > index.html
root@web-0:/usr/share/nginx/html# exit
exit
[liwm@rmaster01 liwm]$ cd /storage/
[liwm@rmaster01 storage]$ ls
archived-default-nginx-test-pvc-aa1b584b-850c-49a7-834d-77cb56a2f6e1  default-www-web-0-pvc-172176c7-e498-4e12-8e59-d5e78f5b1ef3
archived-default-www-web-1-pvc-0da53d10-6b52-41b0-b11c-bbb0f440e924   pvc-nfs.yaml
archived-default-www-web-2-pvc-c7380539-7aa9-49c5-9cc5-69c0b9ee8970
[liwm@rmaster01 storage]$ cd default-www-web-0-pvc-172176c7-e498-4e12-8e59-d5e78f5b1ef3
[liwm@rmaster01 default-www-web-0-pvc-172176c7-e498-4e12-8e59-d5e78f5b1ef3]$ ls
index.html
[liwm@rmaster01 default-www-web-0-pvc-172176c7-e498-4e12-8e59-d5e78f5b1ef3]$ cat index.html
nfs-server
[liwm@rmaster01 default-www-web-0-pvc-172176c7-e498-4e12-8e59-d5e78f5b1ef3]$
```

```text
[liwm@rmaster01 ~]$ kubectl get pod
NAME                                      READY   STATUS    RESTARTS   AGE
nfs-client-provisioner-7757d98f8c-m66g5   1/1     Running   0          15m
web-0                                     1/1     Running   0          11m
[liwm@rmaster01 ~]$ kubectl delete -f statefulset.yaml
statefulset.apps "web" deleted
[liwm@rmaster01 ~]$ kubectl get pod
NAME                                      READY   STATUS        RESTARTS   AGE
nfs-client-provisioner-7757d98f8c-m66g5   1/1     Running       0          16m
web-0                                     0/1     Terminating   0          11m
[liwm@rmaster01 ~]$ kubectl get pvc
No resources found in default namespace.
[liwm@rmaster01 ~]$ kubectl get pv
No resources found in default namespace.
[liwm@rmaster01 ~]$ cd /storage/
[liwm@rmaster01 storage]$ ls
archived-default-nginx-test-pvc-aa1b584b-850c-49a7-834d-77cb56a2f6e1
archived-default-www-web-0-pvc-172176c7-e498-4e12-8e59-d5e78f5b1ef3
archived-default-www-web-1-pvc-0da53d10-6b52-41b0-b11c-bbb0f440e924
archived-default-www-web-2-pvc-c7380539-7aa9-49c5-9cc5-69c0b9ee8970
pvc-nfs.yaml
[liwm@rmaster01 storage]$ cd archived-default-www-web-0-pvc-172176c7-e498-4e12-8e59-d5e78f5b1ef3
[liwm@rmaster01 archived-default-www-web-0-pvc-172176c7-e498-4e12-8e59-d5e78f5b1ef3]$ ls
index.html
[liwm@rmaster01 archived-default-www-web-0-pvc-172176c7-e498-4e12-8e59-d5e78f5b1ef3]$ cat index.html
nfs-server
[liwm@rmaster01 archived-default-www-web-0-pvc-172176c7-e498-4e12-8e59-d5e78f5b1ef3]$
```

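Test 3: make the NFS StorageClass the default, then create a Pod without specifying a StorageClass and check whether its PVC request succeeds

```shell
# Make managed-nfs-storage the default StorageClass
kubectl patch storageclass managed-nfs-storage -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'
```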
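The default can also be declared in the manifest instead of patched in afterwards. This sketch is based on class.yaml with the default-class annotation added. The file name `class-default.yaml` is hypothetical. After applying it, `kubectl get storageclass` marks the class with "(default)". Only one StorageClass should carry this annotation.

```shell
#!/bin/sh
# Sketch: class.yaml with the default-class annotation set at creation time.
set -eu

cat << 'EOF' > class-default.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"   # value must be the string "true"
provisioner: fuseim.pri/ifs
parameters:
  archiveOnDelete: "false"
EOF
```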

Test: write a YAML file that does not specify a StorageClass

```shell
cat << EOF > statefulset2.yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  selector:
    matchLabels:
      app: nginx # has to match .spec.template.metadata.labels
  serviceName: "nginx"
  replicas: 2 # by default is 1
  template:
    metadata:
      labels:
        app: nginx # has to match .spec.selector.matchLabels
    spec:
      terminationGracePeriodSeconds: 10
      containers:
      - name: nginx
        image: nginx
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
          name: web
        volumeMounts:
        - name: html
          mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
  - metadata:
      name: html
    spec:
      accessModes: [ "ReadWriteOnce" ]
      resources:
        requests:
          storage: 1Gi
EOF
```

kubectl apply -f statefulset2.yaml

ConfigMap

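A ConfigMap provides a way to inject configuration data into Pods while keeping the configuration separate from the image, which keeps containerized applications portable.

Decoupling images from configuration files makes the images portable and reusable.

A ConfigMap is simply a collection of configuration data that can be injected directly into the containers of a Pod.

There are two ways to inject it:

1. Mount the ConfigMap as a volume

2. Inject it into the container through env (configMapKeyRef, or envFrom with configMapRef)

A ConfigMap stores its data as key-value pairs.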
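This sketch shows the key-value layout. Each key maps to a string value, which can be a single word or the full content of a file. The name `test-config-demo` and the `app.properties` key are hypothetical. When such a ConfigMap is mounted as a volume, each key becomes a file named after the key.

```shell
#!/bin/sh
# Sketch: ConfigMap data is a flat map of string keys to string values.
# Names and keys here are hypothetical.
set -eu

cat << 'EOF' > configmap-demo.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: test-config-demo
data:
  username: damon          # simple key-value pair
  app.properties: |        # multi-line value, becomes a file when mounted
    log.level=info
    cache.size=128
EOF
```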

Using a ConfigMap as environment variables

```shell
# Create the ConfigMap for environment variables from a YAML file
cat << EOF > configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: test-config
data:
  username: damon
  password: redhat
EOF
```


```text
[liwm@rmaster01 liwm]$ kubectl create -f configmap.yaml
configmap/test-config created
[liwm@rmaster01 liwm]$ kubectl get configmaps test-config
NAME          DATA   AGE
test-config   2      17s
[liwm@rmaster01 liwm]$ kubectl describe configmaps test-config
Name:         test-config
Namespace:    default
Labels:       <none>
Annotations:  <none>

Data
====
password:
----
redhat
username:
----
damon
Events:  <none>
[liwm@rmaster01 liwm]$
```

```shell
# Pod that loads every key of the ConfigMap as an environment variable
cat << EOF > config-pod-env1.yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-configmap-env-pod
spec:
  containers:
  - name: test-container
    image: radial/busyboxplus
    imagePullPolicy: IfNotPresent
    command: [ "/bin/sh", "-c", "sleep 1000000" ]
    envFrom:
    - configMapRef:
        name: test-config
EOF
```

```text
[liwm@rmaster01 liwm]$ kubectl get pod
NAME                                      READY   STATUS    RESTARTS   AGE
nfs-client-provisioner-7757d98f8c-m66g5   1/1     Running   0          84m
test-configmap-env-pod                    1/1     Running   0          89s
[liwm@rmaster01 liwm]$ kubectl exec -it test-configmap-env-pod -- env
PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin
HOSTNAME=test-configmap-env-pod
TERM=xterm
username=damon
password=redhat
TEST1_PORT_8081_TCP_PROTO=tcp
TEST1_PORT_8081_TCP_PORT=8081
TEST2_SERVICE_PORT=8081
TEST2_PORT_8081_TCP_ADDR=10.43.34.138
KUBERNETES_PORT_443_TCP=tcp://10.43.0.1:443
TEST1_SERVICE_HOST=10.43.67.146
TEST2_SERVICE_HOST=10.43.34.138
TEST2_PORT=tcp://10.43.34.138:8081
TEST2_PORT_8081_TCP=tcp://10.43.34.138:8081
KUBERNETES_SERVICE_PORT=443
KUBERNETES_SERVICE_PORT_HTTPS=443
KUBERNETES_PORT_443_TCP_PROTO=tcp
KUBERNETES_PORT_443_TCP_PORT=443
TEST1_SERVICE_PORT=8081
TEST1_PORT_8081_TCP=tcp://10.43.67.146:8081
TEST2_PORT_8081_TCP_PORT=8081
KUBERNETES_SERVICE_HOST=10.43.0.1
KUBERNETES_PORT=tcp://10.43.0.1:443
KUBERNETES_PORT_443_TCP_ADDR=10.43.0.1
TEST1_PORT=tcp://10.43.67.146:8081
TEST1_PORT_8081_TCP_ADDR=10.43.67.146
TEST2_PORT_8081_TCP_PROTO=tcp
HOME=/
[liwm@rmaster01 liwm]$
```

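Using ConfigMap environment variables on the Pod command line

```shell
cat << EOF > config-pod-env2.yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-configmap-command-env-pod
spec:
  containers:
  - name: test-container
    image: radial/busyboxplus
    imagePullPolicy: IfNotPresent
    command: [ "/bin/sh", "-c", "echo \$(MYSQLUSER) \$(MYSQLPASSWD); sleep 1000000" ]
    env:
    - name: MYSQLUSER
      valueFrom:
        configMapKeyRef:
          name: test-config
          key: username
    - name: MYSQLPASSWD
      valueFrom:
        configMapKeyRef:
          name: test-config
          key: password
EOF
```

The \$ escapes stop the shell from treating $(MYSQLUSER) as command substitution while writing the file. Kubernetes then expands the $(VAR_NAME) references in command from the container's environment variables.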

```text
[liwm@rmaster01 liwm]$ kubectl create -f config-pod-env2.yaml
pod/test-configmap-command-env-pod created
[liwm@rmaster01 liwm]$ kubectl get pod
NAME                                      READY   STATUS    RESTARTS   AGE
nfs-client-provisioner-7757d98f8c-m66g5   1/1     Running   0          92m
test-configmap-command-env-pod            1/1     Running   0          9s
[liwm@rmaster01 liwm]$ kubectl exec -it test-configmap-command-env-pod -- env
PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin
HOSTNAME=test-configmap-command-env-pod
TERM=xterm
MYSQLUSER=damon
MYSQLPASSWD=redhat
KUBERNETES_PORT_443_TCP=tcp://10.43.0.1:443
TEST1_PORT=tcp://10.43.67.146:8081
TEST2_SERVICE_PORT=8081
TEST2_PORT_8081_TCP_PORT=8081
KUBERNETES_PORT_443_TCP_PROTO=tcp
KUBERNETES_PORT_443_TCP_ADDR=10.43.0.1
TEST1_SERVICE_PORT=8081
TEST1_PORT_8081_TCP_PORT=8081
TEST1_PORT_8081_TCP_ADDR=10.43.67.146
TEST2_PORT_8081_TCP_PROTO=tcp
TEST2_PORT_8081_TCP_ADDR=10.43.34.138
KUBERNETES_SERVICE_HOST=10.43.0.1
KUBERNETES_PORT=tcp://10.43.0.1:443
TEST1_SERVICE_HOST=10.43.67.146
TEST1_PORT_8081_TCP=tcp://10.43.67.146:8081
TEST2_SERVICE_HOST=10.43.34.138
TEST2_PORT=tcp://10.43.34.138:8081
TEST2_PORT_8081_TCP=tcp://10.43.34.138:8081
KUBERNETES_SERVICE_PORT=443
KUBERNETES_SERVICE_PORT_HTTPS=443
KUBERNETES_PORT_443_TCP_PORT=443
TEST1_PORT_8081_TCP_PROTO=tcp
HOME=/
[liwm@rmaster01 liwm]$
```

Using a ConfigMap as a volume

```shell
# Create a ConfigMap from a configuration file
echo 123 > index.html
kubectl create configmap web-config --from-file=index.html
```

Mount it into a Pod as a volume:

```text
[liwm@rmaster01 liwm]$ echo 123 > index.html
[liwm@rmaster01 liwm]$ kubectl create configmap web-config --from-file=index.html
configmap/web-config created
[liwm@rmaster01 liwm]$ kubectl describe configmaps web-config
Name:         web-config
Namespace:    default
Labels:       <none>
Annotations:  <none>

Data
====
index.html:
----
123

Events:  <none>
[liwm@rmaster01 liwm]$
```

```shell
cat << EOF > test-configmap-volume-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-configmap-volume-pod
spec:
  volumes:
  - name: config-volume
    configMap:
      name: web-config
  containers:
  - name: test-container
    image: nginx
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: config-volume
      mountPath: /usr/share/nginx/html
EOF
```

```text
[liwm@rmaster01 liwm]$ kubectl create -f test-configmap-volume-pod.yaml
pod/test-configmap-volume-pod created
[liwm@rmaster01 liwm]$ kubectl get pod
NAME                                      READY   STATUS    RESTARTS   AGE
nfs-client-provisioner-7757d98f8c-m66g5   1/1     Running   0          98m
test-configmap-volume-pod                 1/1     Running   0          8s
[liwm@rmaster01 liwm]$ kubectl exec -it test-configmap-volume-pod bash
root@test-configmap-volume-pod:/# cd /usr/share/nginx/html/
root@test-configmap-volume-pod:/usr/share/nginx/html# cat index.html
123
root@test-configmap-volume-pod:/usr/share/nginx/html#
```

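Using subPath

With subPath, only the index.html key is mounted, as a single file. The other files in /usr/share/nginx/html, such as the image's 50x.html, remain visible. Mounting the whole ConfigMap on the directory, by contrast, replaces the directory's contents.

```shell
cat << EOF > test-configmap-subpath.yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-configmap-pod01
spec:
  volumes:
  - name: config-volume
    configMap:
      name: web-config
  containers:
  - name: test-container
    image: nginx
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: config-volume
      mountPath: /usr/share/nginx/html/index.html
      subPath: index.html
EOF
```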

```text
[liwm@rmaster01 liwm]$ kubectl create -f test-configmap-subpath.yaml
pod/test-configmap-volume-pod created
[liwm@rmaster01 liwm]$ kubectl get pod
NAME                                      READY   STATUS    RESTARTS   AGE
nfs-client-provisioner-7757d98f8c-m66g5   1/1     Running   0          102m
test-configmap-volume-pod                 1/1     Running   0          4s
[liwm@rmaster01 liwm]$ kubectl exec -it test-configmap-volume-pod bash
root@test-configmap-volume-pod:/# cd /usr/share/nginx/html/
root@test-configmap-volume-pod:/usr/share/nginx/html# ls
50x.html  index.html
root@test-configmap-volume-pod:/usr/share/nginx/html# cat index.html
123
root@test-configmap-volume-pod:/usr/share/nginx/html#
```

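Automatic updates with mountPath

When the whole ConfigMap is mounted as a directory, the kubelet updates the mounted files after the ConfigMap changes, with a short delay. A ConfigMap mounted through subPath is not updated. In the transcript below, test-configmap-pod01 (subPath) still serves test after the edit, while test-configmap-pod02 (directory mount) serves uat.

```shell
cat << EOF > test-configmap-mountpath.yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-configmap-pod02
spec:
  volumes:
  - name: config-volume
    configMap:
      name: web-config
  containers:
  - name: test-container
    image: nginx
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: config-volume
      mountPath: /usr/share/nginx/html
EOF
```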

```text
[liwm@rmaster01 liwm]$ kubectl create -f .
pod/test-configmap-pod02 created
pod/test-configmap-pod01 created
[liwm@rmaster01 liwm]$ kubectl get pod -o wide
NAME                                      READY   STATUS    RESTARTS   AGE    IP           NODE     NOMINATED NODE   READINESS GATES
nfs-client-provisioner-7757d98f8c-m66g5   1/1     Running   0          135m   10.42.4.44   node01   <none>           <none>
test-configmap-pod01                      1/1     Running   0          21s    10.42.4.68   node01   <none>           <none>
test-configmap-pod02                      1/1     Running   0          21s    10.42.4.67   node01   <none>           <none>
[liwm@rmaster01 liwm]$ curl 10.42.4.68
test
[liwm@rmaster01 liwm]$ curl 10.42.4.67
test
[liwm@rmaster01 liwm]$ kubectl edit configmaps web-config
configmap/web-config edited
[liwm@rmaster01 liwm]$ curl 10.42.4.68
test
[liwm@rmaster01 liwm]$ curl 10.42.4.67
uat
[liwm@rmaster01 liwm]$
```

Secret

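A Secret passes sensitive data such as passwords, tokens, and keys to a Pod. Secret volumes are backed by tmpfs (a RAM-based filesystem), so Secret data mounted on a node is never written to the node's persistent storage. Note that the values are only base64-encoded, not encrypted, and by default the API server stores them unencrypted in etcd unless encryption at rest is configured.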

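kubectl create secret supports three Secret types:

generic: general-purpose (type Opaque), created from literal values, files, or env files; typically used for passwords and other text data.

tls: only for storing a private key and its certificate (type kubernetes.io/tls).

docker-registry: the type you must use to store Docker registry credentials (type kubernetes.io/dockerconfigjson).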


[liwm@rmaster01 liwm]$ kubectl create secret
docker-registry generic tls
[liwm@rmaster01 liwm]$

Using a Secret as environment variables

# Encode manually (base64 is an encoding, not encryption)

echo -n 'admin' | base64

YWRtaW4=

echo -n 'redhat' | base64

cmVkaGF0

# Decode

echo 'YWRtaW4=' | base64 --decode

# Output: admin

echo 'cmVkaGF0' | base64 --decode

# Output: redhat
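The `-n` flag matters. Without it, `echo` appends a newline, which becomes part of the encoded value and therefore part of the password the container sees. The short check below compares both encodings and then decodes one of them. It uses `printf` in place of `echo -n` because `printf` behaves the same way in every shell.

```shell
#!/bin/sh
# printf '%s' writes the value exactly like echo -n; printf '%s\n' adds the
# newline that a plain echo would add.
with_n=$(printf '%s' 'admin' | base64)        # encodes the 5 bytes "admin"
without_n=$(printf '%s\n' 'admin' | base64)   # encodes "admin" plus a newline
echo "no newline:   $with_n"                  # YWRtaW4=
echo "with newline: $without_n"               # YWRtaW4K

# Round trip: decoding returns exactly the bytes that were encoded
# (-d is the short form of --decode).
decoded=$(printf '%s' "$with_n" | base64 -d)
echo "decoded: $decoded"                      # admin
```

If a Secret value was encoded as `YWRtaW4K`, the application receives "admin" followed by a newline. This is a common cause of failed logins that are otherwise hard to spot.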

Create the Secret manifest

cat << EOF > secret-env.yaml
apiVersion: v1
kind: Secret
metadata:
  name: mysecret-env
type: Opaque
data:
  username: YWRtaW4=
  password: cmVkaGF0
EOF

[liwm@rmaster01 liwm]$ kubectl create -f secret-env.yaml
secret/mysecret-env created
[liwm@rmaster01 liwm]$ kubectl get configmaps
No resources found in default namespace.
[liwm@rmaster01 liwm]$ kubectl get secrets
NAME TYPE DATA AGE
default-token-qxwg5 kubernetes.io/service-account-token 3 20d
istio.default istio.io/key-and-cert 3 15d
mysecret-env Opaque 2 24s
nfs-client-provisioner-token-797f6 kubernetes.io/service-account-token 3 13d
[liwm@rmaster01 liwm]$
[liwm@rmaster01 liwm]$ kubectl describe secrets mysecret-env
Name: mysecret-env
Namespace: default
Labels: <none>
Annotations: <none>
Type: Opaque
Data
====
password: 6 bytes
username: 5 bytes
[liwm@rmaster01 liwm]$
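Encoding values by hand is optional. A Secret manifest may also carry plain-text values under `stringData`, and the API server stores them base64-encoded under `data`. The sketch below writes the same credentials that way. The Secret name `mysecret-stringdata` is hypothetical, and the script only writes the file; applying it is left to `kubectl create -f`.

```shell
#!/bin/sh
# Write a Secret manifest that uses stringData instead of pre-encoded data.
# The Secret name mysecret-stringdata is hypothetical.
cat << EOF > secret-stringdata.yaml
apiVersion: v1
kind: Secret
metadata:
  name: mysecret-stringdata
type: Opaque
stringData:
  username: admin
  password: redhat
EOF
cat secret-stringdata.yaml
# On a cluster: kubectl create -f secret-stringdata.yaml
```

To read a stored value back from the cluster, run `kubectl get secret mysecret-env -o jsonpath='{.data.password}' | base64 --decode`. It should print `redhat`.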

Using the Secret as Pod environment variables (envFrom)

cat << EOF > secret-pod-env1.yaml
apiVersion: v1
kind: Pod
metadata:
  name: envfrom-secret
spec:
  containers:
  - name: envars-test-container
    image: nginx
    imagePullPolicy: IfNotPresent
    envFrom:
    - secretRef:
        name: mysecret-env
EOF

[liwm@rmaster01 liwm]$ kubectl create -f secret-pod-env1.yaml
pod/envfrom-secret created
[liwm@rmaster01 liwm]$
[liwm@rmaster01 liwm]$ kubectl get pod
NAME READY STATUS RESTARTS AGE
envfrom-secret 1/1 Running 0 111s
nfs-client-provisioner-7757d98f8c-m66g5 1/1 Running 0 176m
[liwm@rmaster01 liwm]$ kubectl exec -it envfrom-secret -- env
PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin
HOSTNAME=envfrom-secret
TERM=xterm
password=redhat
username=admin
KUBERNETES_PORT_443_TCP_PORT=443
TEST1_PORT_8081_TCP=tcp://10.43.67.146:8081
TEST2_PORT_8081_TCP=tcp://10.43.34.138:8081
TEST2_PORT_8081_TCP_ADDR=10.43.34.138
KUBERNETES_SERVICE_PORT=443
TEST2_PORT_8081_TCP_PROTO=tcp
KUBERNETES_SERVICE_HOST=10.43.0.1
TEST1_PORT=tcp://10.43.67.146:8081
TEST1_PORT_8081_TCP_ADDR=10.43.67.146
TEST2_SERVICE_PORT=8081
TEST2_PORT=tcp://10.43.34.138:8081
KUBERNETES_PORT_443_TCP_PROTO=tcp
TEST1_SERVICE_PORT=8081
TEST1_PORT_8081_TCP_PROTO=tcp
TEST1_PORT_8081_TCP_PORT=8081
KUBERNETES_SERVICE_PORT_HTTPS=443
KUBERNETES_PORT_443_TCP=tcp://10.43.0.1:443
KUBERNETES_PORT_443_TCP_ADDR=10.43.0.1
TEST1_SERVICE_HOST=10.43.67.146
TEST2_SERVICE_HOST=10.43.34.138
TEST2_PORT_8081_TCP_PORT=8081
KUBERNETES_PORT=tcp://10.43.0.1:443
NGINX_VERSION=1.17.9
NJS_VERSION=0.3.9
PKG_RELEASE=1~buster
HOME=/root
[liwm@rmaster01 liwm]$

Custom environment variable names via secretKeyRef: SECRET_USERNAME and SECRET_PASSWORD

cat << EOF > secret-pod-env2.yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-env-secret
spec:
  containers:
  - name: mycontainer
    image: radial/busyboxplus
    imagePullPolicy: IfNotPresent
    command: [ "/bin/sh", "-c", "echo \$(SECRET_USERNAME) \$(SECRET_PASSWORD); sleep 1000000" ]
    env:
    - name: SECRET_USERNAME
      valueFrom:
        secretKeyRef:
          name: mysecret-env
          key: username
    - name: SECRET_PASSWORD
      valueFrom:
        secretKeyRef:
          name: mysecret-env
          key: password
EOF
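In the heredoc above, `\$(SECRET_USERNAME)` is escaped for a reason. With an unquoted `EOF` delimiter, the local shell would treat `$(...)` as command substitution while writing the file. The manifest has to keep the literal `$(SECRET_USERNAME)` so that Kubernetes can substitute the environment variable into `command`. The check below writes the line both ways. The scratch file names `demo-escaped.txt` and `demo-unescaped.txt` are made up for this example.

```shell
#!/bin/sh
# Show why the heredoc in secret-pod-env2.yaml escapes \$(...).
# demo-escaped.txt and demo-unescaped.txt are scratch files for this check.
cat << EOF > demo-escaped.txt
command: [ "/bin/sh", "-c", "echo \$(SECRET_USERNAME)" ]
EOF

# Without the backslash, the local shell tries to run a command named
# SECRET_USERNAME while writing the file; it fails (error hidden) and
# leaves an empty string behind.
{
cat << EOF > demo-unescaped.txt
command: [ "/bin/sh", "-c", "echo $(SECRET_USERNAME)" ]
EOF
} 2>/dev/null

cat demo-escaped.txt     # keeps $(SECRET_USERNAME) for Kubernetes to expand
cat demo-unescaped.txt   # the variable reference is gone
```

Quoting the delimiter (`cat << 'EOF'`) would also keep `$(...)` literal, because it turns off all expansion inside the heredoc.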

# Mounting a Secret as a volume

# Create a Secret from a configuration file (index.html in the current directory)

kubectl create secret generic web-secret --from-file=index.html

Mount the Secret as a volume

cat << EOF > pod-volume-secret.yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-volume-secret
spec:
  containers:
  - name: pod-volume-secret
    image: nginx
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: test-web
      mountPath: "/usr/share/nginx/html"
      readOnly: true
  volumes:
  - name: test-web
    secret:
      secretName: web-secret
EOF
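By default every key in the Secret becomes a file in the mount directory. The `items` and `defaultMode` fields of a secret volume narrow that down. The sketch below projects only the `index.html` key and sets the file mode to 0444, so the file stays readable by the nginx worker processes and is read-only for everyone. The Pod name `pod-volume-secret-items` and the container name are hypothetical. Like the other manifests, the script only writes the YAML.

```shell
#!/bin/sh
# Write a Pod manifest that mounts only selected keys of web-secret.
# The Pod name pod-volume-secret-items is hypothetical.
cat << EOF > pod-volume-secret-items.yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-volume-secret-items
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: test-web
      mountPath: "/usr/share/nginx/html"
      readOnly: true
  volumes:
  - name: test-web
    secret:
      secretName: web-secret
      defaultMode: 0444        # read-only for owner, group and others
      items:
      - key: index.html        # key created by --from-file=index.html
        path: index.html       # file name inside mountPath
EOF
cat pod-volume-secret-items.yaml
```

A stricter mode such as 0400 would make the file unreadable to nginx's non-root workers, so the mode has to match the user the application runs as.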

Use case:

docker-registry Secret: deploying a Harbor private registry

http://192.168.31.131:8080/

Download the offline installer

wget https://github.com/goharbor/harbor/releases/download/v1.10.0/harbor-offline-installer-v1.10.0.tgz

Download docker-compose

wget https://docs.rancher.cn/download/compose/v1.25.4-docker-compose-Linux-x86_64

chmod +x v1.25.4-docker-compose-Linux-x86_64 && mv v1.25.4-docker-compose-Linux-x86_64 /usr/local/bin/docker-compose

Edit the Docker daemon.json and add the private registry under insecure-registries (Harbor is served over plain HTTP here)

[root@rmaster02 harbor]# cat /etc/docker/daemon.json
{
  "insecure-registries": ["192.168.31.131:8080"],
  "max-concurrent-downloads": 3,
  "max-concurrent-uploads": 5,
  "registry-mirrors": ["https://0bb06s1q.mirror.aliyuncs.com"],
  "storage-driver": "overlay2",
  "storage-opts": ["overlay2.override_kernel_check=true"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m",
    "max-file": "3"
  }
}

[root@rmaster01 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.31.130 rmaster01

192.168.31.131 rmaster02

192.168.31.132 rmaster03

192.168.31.133 node01

192.168.31.134 node02

192.168.31.133 node01 riyimei.cn

[root@rmaster01 ~]#

[root@rmaster01 ~]# scp /etc/docker/daemon.json node01:/etc/docker/

[root@rmaster01 ~]# scp /etc/docker/daemon.json node02:/etc/docker/

systemctl restart docker    # on every node that received the new daemon.json

Before installing Harbor, edit harbor.yml in the Harbor installation directory.

./install.sh

# or, to also install the Trivy and Clair vulnerability scanners:

./install.sh --with-trivy --with-clair

Log in to the web UI, create a user, set its password, and create a project

user: liwm

password: AAbb0101

Log in to the private registry with docker login

docker login 192.168.31.131:8080

Tag the image and push it into the private project named test

docker tag nginx:latest 192.168.31.131:8080/test/nginx:latest

docker push 192.168.31.131:8080/test/nginx:latest

Create the Secret

kubectl create secret docker-registry harbor-secret --docker-server=192.168.31.131:8080 --docker-username=liwm --docker-password=AAbb0101 --docker-email=liweiming0611@163.com
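`kubectl create secret docker-registry` produces a Secret of type `kubernetes.io/dockerconfigjson`. Its `.dockerconfigjson` key holds a Docker config file: an `auths` map from registry address to credentials, where `auth` is the base64 of `username:password`. The script below builds that JSON locally from the same values, only to show the layout. The exact set of fields kubectl writes (for example, whether `email` is included) may differ between versions.

```shell
#!/bin/sh
# Build a .dockerconfigjson payload by hand to show its structure.
# Values are the ones passed to kubectl above; nothing is sent anywhere.
server=192.168.31.131:8080
user=liwm
pass=AAbb0101

# "auth" is base64("username:password"); printf avoids a trailing newline.
auth=$(printf '%s:%s' "$user" "$pass" | base64)

config=$(printf '{"auths":{"%s":{"username":"%s","password":"%s","auth":"%s"}}}' \
  "$server" "$user" "$pass" "$auth")
echo "$config"

# The Secret stores this JSON base64-encoded once more, under data[.dockerconfigjson].
printf '%s' "$config" | base64 | tr -d '\n'
echo
```

To compare this with what is stored on the cluster, run `kubectl get secret harbor-secret --output="jsonpath={.data.\.dockerconfigjson}" | base64 --decode`.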

Create a Pod that pulls its image using imagePullSecrets

cat << EOF > harbor-sc.yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
  - name: nginx-demo
    image: 192.168.31.131:8080/test/nginx:latest
  imagePullSecrets:
  - name: harbor-secret
EOF

kubectl create -f harbor-sc.yaml

In the run below, the Pod was created before harbor-secret existed, so the image pull was denied and the Pod went into ImagePullBackOff. After the Secret was created, the kubelet's next pull attempt succeeded. The events at the end of the describe output show this sequence.

[liwm@rmaster01 liwm]$ kubectl create -f harbor-sc.yaml
pod/nginx created
[liwm@rmaster01 liwm]$ kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nfs-client-provisioner-7757d98f8c-97cjt 1/1 Running 0 4m42s 10.42.4.85 node01 <none> <none>
nginx 0/1 ImagePullBackOff 0 5s 10.42.4.87 node01 <none> <none>
[liwm@rmaster01 liwm]$ kubectl create secret docker-registry harbor-secret --docker-server=192.168.31.131:8080 --docker-username=liwm --docker-password=AAbb0101 --docker-email=liweiming0611@163.com
secret/harbor-secret created
[liwm@rmaster01 liwm]$ kubectl apply -f harbor-sc.yaml
Warning: kubectl apply should be used on resource created by either kubectl create --save-config or kubectl apply
pod/nginx configured
[liwm@rmaster01 liwm]$ kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
nfs-client-provisioner-7757d98f8c-97cjt 1/1 Running 0 5m28s 10.42.4.85 node01 <none> <none>
nginx 1/1 Running 0 51s 10.42.4.87 node01 <none> <none>
[liwm@rmaster01 liwm]$ kubectl describe pod nginx
Name: nginx
Namespace: default
Priority: 0
Node: node01/192.168.31.133
Start Time: Sat, 28 Mar 2020 00:13:43 +0800
Labels: <none>
Annotations: cni.projectcalico.org/podIP: 10.42.4.87/32
             kubectl.kubernetes.io/last-applied-configuration:
               {"apiVersion":"v1","kind":"Pod","metadata":{"annotations":{},"name":"nginx","namespace":"default"},"spec":{"containers":[{"image":"192.168...
Status: Running
IP: 10.42.4.87
IPs:
  IP: 10.42.4.87
Containers:
  secret-pod:
    Container ID: docker://53700ed416c3d549c69e6269af09549ac81ef286ae0d02a765e6e1ba3444fc77
    Image: 192.168.31.131:8080/test/nginx:latest
    Image ID: docker-pullable://192.168.31.131:8080/test/nginx@sha256:3936fb3946790d711a68c58be93628e43cbca72439079e16d154b5db216b58da
    Port: <none>
    Host Port: <none>
    State: Running
      Started: Sat, 28 Mar 2020 00:14:25 +0800
    Ready: True
    Restart Count: 0
    Environment: <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-qxwg5 (ro)
Conditions:
  Type Status
  Initialized True
  Ready True
  ContainersReady True
  PodScheduled True
Volumes:
  default-token-qxwg5:
    Type: Secret (a volume populated by a Secret)
    SecretName: default-token-qxwg5
    Optional: false
QoS Class: BestEffort
Node-Selectors: <none>
Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s
             node.kubernetes.io/unreachable:NoExecute for 300s
Events:
  Type Reason Age From Message
  ---- ------ ---- ---- -------
  Normal Scheduled <unknown> default-scheduler Successfully assigned default/nginx to node01
  Warning Failed 44s (x2 over 58s) kubelet, node01 Failed to pull image "192.168.31.131:8080/test/nginx:latest": rpc error: code = Unknown desc = Error response from daemon: pull access denied for 192.168.31.131:8080/test/nginx, repository does not exist or may require 'docker login'
  Warning Failed 44s (x2 over 58s) kubelet, node01 Error: ErrImagePull
  Normal BackOff 33s (x3 over 58s) kubelet, node01 Back-off pulling image "192.168.31.131:8080/test/nginx:latest"
  Warning Failed 33s (x3 over 58s) kubelet, node01 Error: ImagePullBackOff
  Normal Pulling 19s (x3 over 59s) kubelet, node01 Pulling image "192.168.31.131:8080/test/nginx:latest"
  Normal Pulled 19s kubelet, node01 Successfully pulled image "192.168.31.131:8080/test/nginx:latest"
  Normal Created 19s kubelet, node01 Created container secret-pod
  Normal Started 18s kubelet, node01 Started container secret-pod
[liwm@rmaster01 liwm]$

emptyDir

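An emptyDir volume is created when a Pod is assigned to a node, and it exists for as long as the Pod keeps running on that node. All containers in the Pod can read and write the same files in the emptyDir volume, even though each container may mount it at the same path or at a different one. When the Pod is removed from the node for any reason, the data in the emptyDir is deleted permanently.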

Note: a container crash does not remove the Pod, so the data in the emptyDir survives the crash.
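By default an emptyDir lives on the node's disk. Setting `medium: Memory` puts it on tmpfs instead, and `sizeLimit` puts an upper bound on its size. Files written to a memory-backed emptyDir count against the container's memory limit. The sketch below shows only that volume definition. The Pod name `emptydir-memory-pod` and the 64Mi limit are arbitrary values for illustration, and the script only writes the manifest.

```shell
#!/bin/sh
# Write a Pod manifest with a RAM-backed (tmpfs) emptyDir.
# Pod name emptydir-memory-pod and the 64Mi limit are illustrative values.
cat << EOF > emptydir-memory.yaml
apiVersion: v1
kind: Pod
metadata:
  name: emptydir-memory-pod
spec:
  volumes:
  - name: cache
    emptyDir:
      medium: Memory     # tmpfs instead of the node's disk
      sizeLimit: 64Mi    # upper bound on the volume's size
  containers:
  - name: app
    image: radial/busyboxplus
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: cache
      mountPath: /cache
    command: ['sh', '-c', 'df -h /cache; sleep 3600']
EOF
cat emptydir-memory.yaml
```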

cat << EOF > emptydir.yaml
apiVersion: v1
kind: Pod
metadata:
  name: emptydir-pod
  labels:
    app: myapp
spec:
  volumes:
  - name: storage
    emptyDir: {}
  containers:
  - name: myapp1
    image: radial/busyboxplus
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: storage
      mountPath: /storage
    command: ['sh', '-c', 'sleep 3600000']
  - name: myapp2
    image: radial/busyboxplus
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: storage
      mountPath: /storage
    command: ['sh', '-c', 'sleep 10000000']
EOF

kubectl apply -f emptydir.yaml

Demo: the kubelet maintains the Pod's containers, and the emptyDir data outlives them

[liwm@rmaster01 liwm]$ kubectl create -f emptydir.yaml

pod/emptydir-pod created

[liwm@rmaster01 liwm]$ kubectl get pod

NAME READY STATUS RESTARTS AGE

emptydir-pod 0/2 ContainerCreating 0 4s

nfs-client-provisioner-7757d98f8c-qwjrl 1/1 Running 0 4m6s

[liwm@rmaster01 liwm]$ kubectl get pod

NAME READY STATUS RESTARTS AGE

emptydir-pod 2/2 Running 0 15s

nfs-client-provisioner-7757d98f8c-qwjrl 1/1 Running 0 4m17s

[liwm@rmaster01 liwm]$ kubectl exec -it emptydir-pod -c myapp1 sh

/ # cd /storage/

/storage # touch 222

/storage # exit

[liwm@rmaster01 liwm]$ kubectl exec -it emptydir-pod -c myapp2 sh

/ #

/ # cd /storage/

/storage # ls

222

/storage # exit

[liwm@rmaster01 liwm]$

[liwm@rmaster01 liwm]$ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

emptydir-pod 2/2 Running 0 2m10s 10.42.4.90 node01 <none> <none>

nfs-client-provisioner-7757d98f8c-qwjrl 1/1 Running 0 6m12s 10.42.4.89 node01 <none> <none>

[root@node01 ~]# docker ps |grep emptydir-pod

6b3623beca2c fffcfdfce622 "sh -c 'sleep 100000…" 4 minutes ago Up 4 minutes k8s_myapp2_emptydir-pod_default_1b5bdd0d-346c-42eb-9151-025a1366b4e5_0

d07d098747a2 fffcfdfce622 "sh -c 'sleep 360000…" 4 minutes ago Up 4 minutes k8s_myapp1_emptydir-pod_default_1b5bdd0d-346c-42eb-9151-025a1366b4e5_0

c0aef4d253fb rancher/pause:3.1 "/pause" 4 minutes ago Up 4 minutes k8s_POD_emptydir-pod_default_1b5bdd0d-346c-42eb-9151-025a1366b4e5_0

[root@node01 ~]# docker inspect 6b3623beca2c|grep storage

"/var/lib/kubelet/pods/1b5bdd0d-346c-42eb-9151-025a1366b4e5/volumes/kubernetes.io~empty-dir/storage:/storage",

"Source": "/var/lib/kubelet/pods/1b5bdd0d-346c-42eb-9151-025a1366b4e5/volumes/kubernetes.io~empty-dir/storage",

"Destination": "/storage",

[root@node01 ~]# cd /var/lib/kubelet/pods/1b5bdd0d-346c-42eb-9151-025a1366b4e5/volumes/kubernetes.io~empty-dir/storage

[root@node01 storage]# ls

222

[root@node01 storage]#

[root@node01 storage]# docker rm -f 6b3623beca2c d07d098747a2

6b3623beca2c

d07d098747a2

[root@node01 storage]# ls

222

[root@node01 storage]#

[liwm@rmaster01 liwm]$ kubectl get pod

NAME READY STATUS RESTARTS AGE

emptydir-pod 2/2 Running 0 11m

nfs-client-provisioner-7757d98f8c-qwjrl 1/1 Running 0 15m

[liwm@rmaster01 liwm]$ kubectl exec -it emptydir-pod -c myapp1 sh

/ # cd /storage/

/storage # ls

222

/storage # exit

[liwm@rmaster01 liwm]$ kubectl delete pod emptydir-pod

pod "emptydir-pod" deleted

[liwm@rmaster01 liwm]$

[root@node01 storage]# ls

222

[root@node01 storage]# ls

[root@node01 storage]#

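emptyDir + init-containers

An init container runs to completion before the app container starts. Here it creates /storage/testfile in the shared emptyDir. The app container keeps running only if it finds that file; otherwise its command exits immediately.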

cat << EOF > initcontainers.yaml
apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod
  labels:
    app: myapp
spec:
  volumes:
  - name: storage
    emptyDir: {}
  containers:
  - name: myapp-containers
    image: radial/busyboxplus
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: storage
      mountPath: /storage
    command: ['sh', '-c', 'if [ -f /storage/testfile ] ; then sleep 3600000 ; fi']
  initContainers:
  - name: init-containers
    image: radial/busyboxplus
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: storage
      mountPath: /storage
    command: ['sh', '-c', 'touch /storage/testfile && sleep 10']
EOF

[liwm@rmaster01 liwm]$ kubectl create -f initcontainers.yaml

pod/myapp-pod created

[liwm@rmaster01 liwm]$ kubectl get pod

NAME READY STATUS RESTARTS AGE

myapp-pod 0/1 Init:0/1 0 4s

nfs-client-provisioner-7757d98f8c-qwjrl 1/1 Running 0 20m

[liwm@rmaster01 liwm]$ kubectl get pod

NAME READY STATUS RESTARTS AGE

myapp-pod 1/1 Running 0 36s

nfs-client-provisioner-7757d98f8c-qwjrl 1/1 Running 0 20m

[liwm@rmaster01 liwm]$ kubectl exec -it myapp-pod -- ls /storage/

testfile

[liwm@rmaster01 liwm]$
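The handoff that makes this Pod work can be simulated locally. A temporary directory stands in for the emptyDir, and one shell function stands in for each container. This is only a sketch of the logic: the function names `init_step` and `app_step` are hypothetical, and on the cluster it is Kubernetes that guarantees the init container finishes first.

```shell
#!/bin/sh
# Local simulation of the init-container handoff through a shared volume.
# init_step and app_step are hypothetical names mirroring the two containers.
storage=$(mktemp -d)          # stands in for the emptyDir mounted at /storage

init_step() {                 # like: touch /storage/testfile && sleep 10
  touch "$storage/testfile"
}

app_step() {                  # like: if [ -f /storage/testfile ] ; then sleep ... ; fi
  if [ -f "$storage/testfile" ]; then
    echo "testfile found: app keeps running"
  else
    echo "testfile missing: app command exits"
  fi
}

before=$(app_step)            # what the app would see without the init step
init_step                     # Kubernetes always runs this first
after=$(app_step)
echo "$before"
echo "$after"
```

Without the init container, the app's command would exit at once, and the Pod would keep restarting instead of reaching the steady Running state shown above.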