1. Integrating Kafka with Spring Boot

Now that we have a basic understanding of Kafka, let's integrate it with Spring Boot and run a small test.

First, add the dependencies we need:

pom.xml

```xml
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-web</artifactId>
</dependency>
<dependency>
    <groupId>org.springframework.kafka</groupId>
    <artifactId>spring-kafka</artifactId>
</dependency>
<dependency>
    <groupId>com.alibaba</groupId>
    <artifactId>fastjson</artifactId>
    <version>1.2.73</version>
</dependency>
<dependency>
    <groupId>org.projectlombok</groupId>
    <artifactId>lombok</artifactId>
    <optional>true</optional>
</dependency>
```

Configuration file (application.properties):

```properties
spring.application.name=springboot-kafka
server.port=8082

#==============kafka==============#
spring.kafka.bootstrap-servers=localhost:9092

#==============producer===========#
# number of retries for a failed send (0 = do not retry)
spring.kafka.producer.retries=0
# upper bound, in bytes (not a message count), of each batch of records sent to a partition
spring.kafka.producer.batch-size=16384
# total memory, in bytes, the producer may use to buffer records waiting to be sent
spring.kafka.producer.buffer-memory=33554432
# serializers for the message key and value
spring.kafka.producer.key-serializer=org.apache.kafka.common.serialization.StringSerializer
spring.kafka.producer.value-serializer=org.apache.kafka.common.serialization.StringSerializer

#==============consumer===========#
# default consumer group id
spring.kafka.consumer.group-id=test-log-group
# where to start reading when the group has no committed offset
spring.kafka.consumer.auto-offset-reset=earliest
spring.kafka.consumer.enable-auto-commit=true
# offset auto-commit interval (milliseconds)
spring.kafka.consumer.auto-commit-interval=100
# deserializers for the message key and value
spring.kafka.consumer.key-deserializer=org.apache.kafka.common.serialization.StringDeserializer
spring.kafka.consumer.value-deserializer=org.apache.kafka.common.serialization.StringDeserializer
```
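The auto-offset-reset setting only matters when the consumer group has no committed offset for a partition (Kafka also applies it when the committed offset no longer exists on the broker, a case this sketch ignores). The Java code below is a simplified, hypothetical model of that decision, not Kafka's own code; the class and method names are made up for illustration. It also suggests why the demo in this article likely works: the ten messages are sent during startup, possibly before the listener has joined the group, and with earliest a brand-new group still reads them from the beginning.

```java
import java.util.OptionalLong;

// Simplified, hypothetical model (not Kafka source code) of where a consumer
// in a group starts reading one partition.
final class OffsetResetDemo {

    static long startingOffset(OptionalLong committed, String autoOffsetReset,
                               long logStartOffset, long logEndOffset) {
        if (committed.isPresent()) {
            // The group already committed an offset: resume from it.
            return committed.getAsLong();
        }
        switch (autoOffsetReset) {
            case "earliest":
                return logStartOffset; // oldest record still retained
            case "latest":
                return logEndOffset;   // only records produced from now on
            default:
                // "none" (or any other value): no position to fall back to
                throw new IllegalStateException(
                        "No committed offset and auto.offset.reset=" + autoOffsetReset);
        }
    }

    public static void main(String[] args) {
        // A partition holding offsets 0..9, e.g. the ten messages sent at startup.
        System.out.println(startingOffset(OptionalLong.empty(), "earliest", 0, 10)); // 0
        System.out.println(startingOffset(OptionalLong.empty(), "latest", 0, 10));   // 10
        System.out.println(startingOffset(OptionalLong.of(7), "earliest", 0, 10));  // 7
    }
}
```

With latest instead of earliest, a new group in this model would start at offset 10 and skip every message produced before it was assigned the partition.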

User.java

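```java
package com.zym.kafka.entity;

import lombok.Data;
import lombok.experimental.Accessors;

// @Data generates getters, setters, equals/hashCode and toString;
// chain = true makes the setters return this so calls can be chained
@Data
@Accessors(chain = true)
public class User {
    private String username;
    private String userid;
    private String state;
}
```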
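For readers unfamiliar with Lombok, the hand-written class below approximates what @Data and @Accessors(chain = true) generate for User. It is an illustration, not Lombok's exact output (equals(), hashCode() and canEqual() are left out); the point is that chain = true makes every setter return this, which is what allows the chained setUsername(...).setUserid(...).setState(...) call in UserProducer.

```java
// Hand-written approximation of what Lombok generates for User from
// @Data + @Accessors(chain = true); equals()/hashCode() are omitted for brevity.
final class User {
    private String username;
    private String userid;
    private String state;

    public String getUsername() { return username; }
    public String getUserid() { return userid; }
    public String getState() { return state; }

    // chain = true: each setter returns this instead of void, enabling chained calls
    public User setUsername(String username) { this.username = username; return this; }
    public User setUserid(String userid) { this.userid = userid; return this; }
    public User setState(String state) { this.state = state; return this; }

    // Same format as Lombok's generated toString(): ClassName(field=value, ...)
    @Override
    public String toString() {
        return "User(username=" + username + ", userid=" + userid + ", state=" + state + ")";
    }
}
```

With this class, `new User().setUsername("zym").setUserid("0").setState("正常")` prints as `User(username=zym, userid=0, state=正常)`, the same format as the line UserProducer prints before each send.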

UserProducer.java

```java
package com.zym.kafka.producer;

import com.alibaba.fastjson.JSON;
import com.zym.kafka.entity.User;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.stereotype.Component;

@Component
public class UserProducer {

    // Key and value are both Strings, matching the StringSerializer settings
    @Autowired
    private KafkaTemplate<String, String> kafkaTemplate;

    public void sendMessage(String userId) {
        User user = new User();
        // chained setters come from @Accessors(chain = true); "正常" means "normal"
        user.setUsername("zym").setUserid(userId).setState("正常");
        System.out.println(user.toString());
        // serialize the User to JSON and publish it to the "test" topic
        kafkaTemplate.send("test", JSON.toJSONString(user));
    }
}
```
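kafkaTemplate.send() is asynchronous: the underlying Kafka producer appends each record to an in-memory batch for its partition and sends batches in the background, which is what the batch-size (bytes per batch) and buffer-memory settings govern. The sketch below is a deliberately simplified, hypothetical model of the size limit alone: it greedily packs record sizes into batches of at most batchSize bytes, ignoring per-record overhead, compression and linger.ms (how long the producer waits before sending a batch that is not full). The class and method names are illustrative only.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified, hypothetical model of producer batching for ONE partition:
// record sizes (bytes) are packed into batches of at most batchSize bytes.
// Real Kafka also counts record/batch overhead and honours linger.ms.
final class BatchingDemo {

    static List<List<Integer>> packIntoBatches(List<Integer> recordSizes, int batchSize) {
        List<List<Integer>> batches = new ArrayList<>();
        List<Integer> current = new ArrayList<>();
        int currentBytes = 0;
        for (int size : recordSizes) {
            // Close the open batch if this record would push it past the limit.
            if (!current.isEmpty() && currentBytes + size > batchSize) {
                batches.add(current);
                current = new ArrayList<>();
                currentBytes = 0;
            }
            // A record larger than batchSize is still sent, alone in its own batch.
            current.add(size);
            currentBytes += size;
        }
        if (!current.isEmpty()) {
            batches.add(current);
        }
        return batches;
    }
}
```

For example, with batchSize = 16384 the sizes 10000, 5000, 3000, 20000, 100 are packed as [10000, 5000], [3000], [20000], [100]. The ten small JSON messages in this demo would all fit into a single 16384-byte batch in this model.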

UserConsumer.java

```java
package com.zym.kafka.consumer;

import lombok.extern.slf4j.Slf4j;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.stereotype.Component;

import java.util.Optional;

@Component
@Slf4j
public class UserConsumer {

    // Uses the default group id (test-log-group) from the configuration
    @KafkaListener(topics = {"test"})
    public void consumer(ConsumerRecord<String, String> consumerRecord) {
        // the record value may be null, so wrap it in an Optional
        Optional<String> kafkaMessage = Optional.ofNullable(consumerRecord.value());
        log.info(">>>>>>>>record<<<<<<<<" + kafkaMessage);
        if (kafkaMessage.isPresent()) {
            String message = kafkaMessage.get();
            System.out.println("Consumed message: " + message);
        }
    }
}
```
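With the StringSerializer/StringDeserializer pair configured earlier, the JSON string built by UserProducer travels as UTF-8 bytes and comes back as the String returned by consumerRecord.value(). The JDK-only sketch below mirrors that default behaviour (both Kafka classes use UTF-8 unless an encoding is configured, and both pass null through unchanged); it is a stand-in for illustration with made-up names, not the Kafka classes themselves. Because null passes through, a record with a null value reaches the listener as null, which the Optional.ofNullable check in UserConsumer guards against.

```java
import java.nio.charset.StandardCharsets;

// JDK-only stand-in for the default behaviour of Kafka's StringSerializer and
// StringDeserializer: UTF-8 encoding, with null passed through unchanged.
final class StringSerdeDemo {

    // Producer side: the JSON text becomes the bytes written to the topic
    static byte[] serialize(String value) {
        return value == null ? null : value.getBytes(StandardCharsets.UTF_8);
    }

    // Consumer side: the bytes become the String returned by ConsumerRecord.value()
    static String deserialize(byte[] data) {
        return data == null ? null : new String(data, StandardCharsets.UTF_8);
    }
}
```

Each Chinese character in the state value takes three bytes in UTF-8, so the two-character state "正常" occupies six bytes on the wire.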

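Next, modify the startup class so that, once the beans have been injected, it produces ten messages to Kafka (you could also write a new endpoint to test this; I took the lazy route here).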

SpringbootKafkaApplication.java

```java
package com.zym.kafka;

import com.zym.kafka.producer.UserProducer;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;

// Spring Boot 2.x; on Spring Boot 3 use jakarta.annotation.PostConstruct
import javax.annotation.PostConstruct;

@SpringBootApplication
public class SpringbootKafkaApplication {

    @Autowired
    private UserProducer userProducer;

    // Runs once after dependency injection: send ten test messages (user ids 0-9)
    @PostConstruct
    public void init() {
        for (int i = 0; i < 10; i++) {
            userProducer.sendMessage(String.valueOf(i));
        }
    }

    public static void main(String[] args) {
        SpringApplication.run(SpringbootKafkaApplication.class, args);
    }
}
```

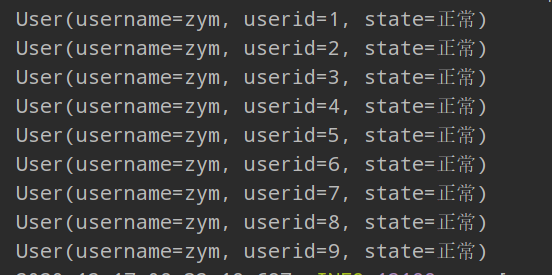

Then start the project to check whether the integration works:

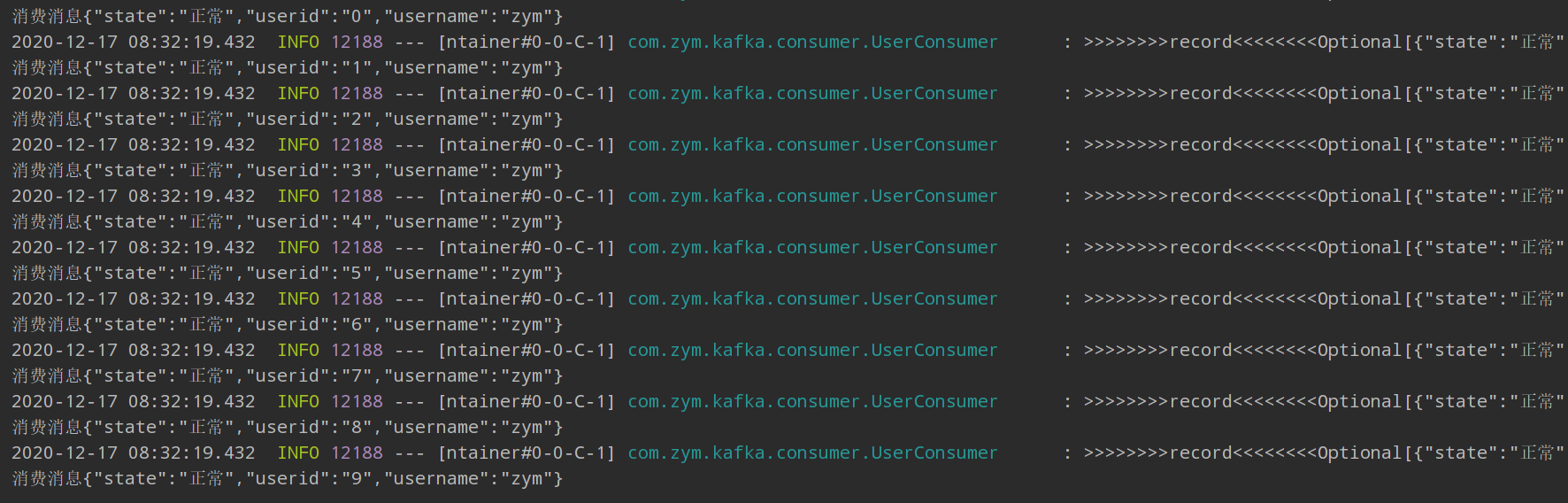

Check the console:

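Because the console output is interleaved, my screenshot only shows the last nine messages, but ten were actually printed, which shows that ten messages were produced to Kafka. Next, let's find the consumer's print statements.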

We can see that the messages have also been consumed.

2. Problems encountered:

1. The console prints: Connection to node 1 (localhost/127.0.0.1:9092) could not be established.

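Cause: when Kafka starts, the broker registers its listener information, including the IP address and port, under /brokers/ids in ZooKeeper. When a client connects, it retrieves this IP address and port and then connects to the broker at that address.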

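After checking the Kafka configuration, I realized I had overlooked the difference between listeners and advertised.listeners: listeners is the address the broker binds to, while advertised.listeners is the address actually exposed to external clients, and it is what gets written to the ZooKeeper node. After I put the IP address in advertised.listeners, the problem was resolved.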

Solution: change the following settings in Kafka's server.properties:

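```properties
# bind on all network interfaces so external connections are accepted
listeners=PLAINTEXT://0.0.0.0:9092
# externally reachable broker address published to clients
advertised.listeners=PLAINTEXT://xx.xx.xx.xx:9092
```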
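The rule behind this fix can be sketched as follows. According to the Kafka broker configuration documentation, advertised.listeners falls back to listeners when it is not set and, unlike listeners, may not advertise the 0.0.0.0 meta-address. The Java model below (hypothetical names, a single listener only, not Kafka's code) computes the address that would be registered under /brokers/ids; clients connect to that address after bootstrapping. If it resolves to localhost, a client on another machine ends up dialling its own localhost:9092, which is one way to get the "could not be established" error above.

```java
// Hypothetical, simplified model (single listener, not Kafka source code) of which
// address a broker registers under /brokers/ids in ZooKeeper; after bootstrapping,
// clients connect to this address rather than to bootstrap-servers.
final class AdvertisedListenerDemo {

    static String publishedListener(String listeners, String advertisedListeners) {
        // advertised.listeners falls back to listeners when it is not set
        String published = (advertisedListeners == null || advertisedListeners.isEmpty())
                ? listeners
                : advertisedListeners;
        // the wildcard bind address is not routable, so it may not be advertised
        if (published.contains("://0.0.0.0:")) {
            throw new IllegalArgumentException("Cannot advertise 0.0.0.0: " + published);
        }
        return published;
    }

    public static void main(String[] args) {
        // Broker bound to localhost only: remote clients are told to dial localhost:9092
        System.out.println(publishedListener("PLAINTEXT://localhost:9092", null));
        // The fix from server.properties: bind everywhere, advertise a reachable IP
        System.out.println(publishedListener("PLAINTEXT://0.0.0.0:9092", "PLAINTEXT://xx.xx.xx.xx:9092"));
    }
}
```

Keeping the bind address (listeners) separate from the published address (advertised.listeners) is what lets the broker listen on every interface while still telling clients one concrete, reachable IP.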

3. Further study

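In everyday use, Kafka is much like the commonly used RabbitMQ. To understand how the two actually differ, I need to study Kafka in more depth, and I will cover that in upcoming articles.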

Reference: https://blog.csdn.net/qq_18603599/article/details/81169488