1 Feeding arbitrary images into the trained network for testing

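The test inputs are images of digits drawn in black on a white background. Two preprocessing steps are needed before testing:

(1) Resize the image to 28×28 so that it matches the network input (28 × 28 = 784 input nodes).

(2) Convert the image into a binary black-and-white image with a white digit on a black background.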

mnist_app.py

```python
import tensorflow as tf
import numpy as np
from PIL import Image
import mnist_backward
import mnist_forward


def restore_model(testPicArr):
    # Use tf.Graph() to rebuild the previously defined computation graph
    with tf.Graph().as_default() as tg:
        x = tf.placeholder(tf.float32, [None, mnist_forward.INPUT_NODE])
        # Build the forward pass with forward() from mnist_forward
        y = mnist_forward.forward(x, None)
        # Index of the largest output, i.e. the predicted digit
        preValue = tf.argmax(y, 1)

        # Saver that restores the moving-average (shadow) values of the variables
        variable_averages = tf.train.ExponentialMovingAverage(mnist_backward.MOVING_AVERAGE_DECAY)
        variables_to_restore = variable_averages.variables_to_restore()
        saver = tf.train.Saver(variables_to_restore)

        with tf.Session() as sess:
            # Look up the most recently saved checkpoint
            ckpt = tf.train.get_checkpoint_state(mnist_backward.MODEL_SAVE_PATH)
            if ckpt and ckpt.model_checkpoint_path:
                saver.restore(sess, ckpt.model_checkpoint_path)
                preValue = sess.run(preValue, feed_dict={x: testPicArr})
                return preValue
            else:
                print("No checkpoint file found")
                return -1


# Preprocessing: resize, convert to grayscale, binarize
def pre_pic(picName):
    img = Image.open(picName)
    # Image.LANCZOS is the filter formerly aliased as Image.ANTIALIAS (removed in Pillow 10)
    reIm = img.resize((28, 28), Image.LANCZOS)
    # Convert the image to grayscale
    im_arr = np.array(reIm.convert('L'))
    # Binarization threshold (filters out noise; tune it while debugging)
    threshold = 50
    # The model expects a white digit on a black background, but the input is black on white,
    # so each pixel is replaced by 255 minus its value to get the inverted image.
    for i in range(28):
        for j in range(28):
            im_arr[i][j] = 255 - im_arr[i][j]
            if im_arr[i][j] < threshold:
                im_arr[i][j] = 0
            else:
                im_arr[i][j] = 255
    # Flatten to 1 row x 784 columns and convert to float
    # (the network expects pixel values as floats between 0 and 1)
    nm_arr = im_arr.reshape([1, 784])
    nm_arr = nm_arr.astype(np.float32)
    # Scale the grayscale values from 0-255 to floats in [0, 1]
    img_ready = np.multiply(nm_arr, 1.0 / 255.0)
    return img_ready


def application():
    # Number of images to recognize
    testNum = int(input("input the number of test pictures:"))
    for i in range(testNum):
        # Path and file name of the image to recognize
        testPic = input("the path of test picture:")
        # Preprocess the image
        testPicArr = pre_pic(testPic)
        # Get the prediction
        preValue = restore_model(testPicArr)
        print("The prediction number is:", preValue)


def main():
    application()


if __name__ == '__main__':
    main()
```
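The double loop in pre_pic visits all 784 pixels one at a time. A minimal vectorized sketch of the same invert-then-threshold rule in NumPy is shown below. The helper name invert_and_binarize is hypothetical, and the sketch assumes its input is the 28×28 grayscale array produced by reIm.convert('L').

```python
import numpy as np


def invert_and_binarize(gray, threshold=50):
    """Hypothetical vectorized equivalent of the pixel loop in pre_pic.

    gray: 28x28 uint8 array of a black-on-white digit (0 = black, 255 = white).
    Returns a (1, 784) float32 array of a white-on-black digit with values 0.0 or 1.0.
    """
    # Invert so the digit becomes bright and the background dark
    # (a signed type is used so 255 - x can never wrap around)
    inverted = 255 - gray.astype(np.int32)
    # Pixels below the threshold become 0, all others 255 (same rule as pre_pic)
    binary = np.where(inverted < threshold, 0, 255)
    # Flatten to one row of 784 values and scale to [0, 1]
    return (binary.reshape(1, 784) / 255.0).astype(np.float32)


# Usage: a blank white image with an 8x4 black stroke
demo = np.full((28, 28), 255, dtype=np.uint8)
demo[10:18, 12:16] = 0
x = invert_and_binarize(demo)  # x.shape == (1, 784); the 32 stroke pixels are 1.0
```

Both versions apply 255 - x followed by the same threshold of 50, so swapping this in for the loop should not change the preprocessed image; it only removes the per-pixel Python work.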

2 Building a custom dataset

2.1 Overview

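- A dataset can be stored as binary tfrecords files. Writing the images and labels into this format first and then reading the data back through tfrecords improves memory utilization.

- Each training sample is stored using the tf.train.Example protocol buffer, with the features of the sample expressed as key-value pairs.

- SerializeToString() serializes each Example into a string for storage.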

2.2 Generating a tfrecords file

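```python
import tensorflow as tf

# tfRecordName (output path), img_raw (raw image bytes) and labels (one-hot list of 10 ints)
# are supplied by the surrounding code; see write_tfRecord in 2.4.
writer = tf.python_io.TFRecordWriter(tfRecordName)
# Wrap each image and its label in an Example
example = tf.train.Example(features=tf.train.Features(feature={
    'img_raw': tf.train.Feature(bytes_list=tf.train.BytesList(value=[img_raw])),  # raw image bytes
    'label': tf.train.Feature(int64_list=tf.train.Int64List(value=labels))        # label of the image
}))
# Serialize the example and write it to the file
writer.write(example.SerializeToString())
# Close the writer
writer.close()
```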
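To see what writer.write() actually puts on disk, the pure-Python sketch below frames serialized strings the way TensorFlow's TFRecord format description specifies: a little-endian uint64 length, a masked CRC-32C of that length field, the data bytes, and a masked CRC-32C of the data. It is illustrative only; crc32c, masked_crc, frame_record and read_records are hypothetical helpers, and in practice TFRecordWriter and TFRecordReader handle this for you.

```python
import struct


def crc32c(data: bytes) -> int:
    """Bitwise CRC-32C (Castagnoli), reflected polynomial 0x82F63B78."""
    crc = 0xFFFFFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            crc = (crc >> 1) ^ 0x82F63B78 if crc & 1 else crc >> 1
    return crc ^ 0xFFFFFFFF


def masked_crc(data: bytes) -> int:
    """Rotate the CRC right by 15 bits and add a constant (TFRecord's masking)."""
    crc = crc32c(data)
    return (((crc >> 15) | (crc << 17)) + 0xA282EAD8) & 0xFFFFFFFF


def frame_record(payload: bytes) -> bytes:
    """Frame one serialized string as a single TFRecord entry."""
    header = struct.pack("<Q", len(payload))              # uint64 length, little-endian
    return (header
            + struct.pack("<I", masked_crc(header))       # masked CRC of the length field
            + payload
            + struct.pack("<I", masked_crc(payload)))     # masked CRC of the payload


def read_records(blob: bytes):
    """Yield payloads from concatenated entries, verifying both CRCs."""
    pos = 0
    while pos < len(blob):
        header = blob[pos:pos + 8]
        (length,) = struct.unpack("<Q", header)
        (len_crc,) = struct.unpack("<I", blob[pos + 8:pos + 12])
        if len_crc != masked_crc(header):
            raise ValueError("corrupted length field")
        payload = blob[pos + 12:pos + 12 + length]
        (data_crc,) = struct.unpack("<I", blob[pos + 12 + length:pos + 16 + length])
        if data_crc != masked_crc(payload):
            raise ValueError("corrupted payload")
        yield payload
        pos += 16 + length


# Usage: two "serialized examples" round-trip through the framing
blob = frame_record(b"first example") + frame_record(b"second")
records = list(read_records(blob))
```

Each entry costs 16 bytes of framing on top of the serialized Example, and the CRCs let a reader detect corrupted records.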

2.3 Parsing a tfrecords file

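```python
import tensorflow as tf

# tfRecord_path: path of the .tfrecords file to read (supplied by the caller)
# Create a first-in-first-out queue of file names; the file reader takes its input from it
filename_queue = tf.train.string_input_producer([tfRecord_path], shuffle=True)
# Create a reader
reader = tf.TFRecordReader()
# Each sample read is stored in serialized_example for deserialization
_, serialized_example = reader.read(filename_queue)
# Parse the tf.train.Example protocol buffer into tensors. The label and image keys must
# match the keys used when writing the tfrecords file; [10] gives the number of classes.
features = tf.parse_single_example(serialized_example,
                                   features={
                                       'label': tf.FixedLenFeature([10], tf.int64),
                                       'img_raw': tf.FixedLenFeature([], tf.string)
                                   })
# Convert the img_raw string to 8-bit unsigned integers
img = tf.decode_raw(features['img_raw'], tf.uint8)
# Give the image the shape of one row with 784 columns
img.set_shape([784])
# Convert to floats between 0 and 1
img = tf.cast(img, tf.float32) * (1. / 255)
label = tf.cast(features['label'], tf.float32)
```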

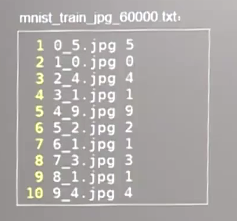
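The parsing steps above can be mirrored with NumPy on a single record, which makes the decode-and-scale arithmetic easy to check outside a TensorFlow session. decode_record is a hypothetical helper; it assumes img_raw holds the 784 raw bytes of a 28×28 grayscale image and that the label is the 10-element one-hot list written in 2.2.

```python
import numpy as np


def decode_record(img_raw: bytes, label_int64):
    """Hypothetical NumPy mirror of the decode/cast steps in read_tfRecord."""
    # tf.decode_raw(..., tf.uint8): reinterpret the bytes as uint8 pixel values
    img = np.frombuffer(img_raw, dtype=np.uint8)
    # img.set_shape([784]) declares 784 pixels; here the length is checked explicitly
    if img.shape != (784,):
        raise ValueError("expected 784 bytes, got %d" % img.size)
    # tf.cast(img, tf.float32) * (1. / 255): scale to [0, 1]
    img = img.astype(np.float32) * (1.0 / 255)
    # tf.cast(features['label'], tf.float32)
    label = np.asarray(label_int64, dtype=np.float32)
    return img, label


# Usage with made-up bytes (784 in total) and a one-hot label for the digit 3
img, label = decode_record(bytes(range(256)) * 3 + bytes(16),
                           [0, 0, 0, 1, 0, 0, 0, 0, 0, 0])
```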

2.4 Complete code for generating the custom dataset

The label file read by the script has one line per image: the image file name, a space, and the label.

mnist_generateds.py

```python
# mnist_generateds.py
# coding:utf-8
import tensorflow as tf
import numpy as np
from PIL import Image
import os

image_train_path = './mnist_data_jpg/mnist_train_jpg_60000/'
label_train_path = './mnist_data_jpg/mnist_train_jpg_60000.txt'
tfRecord_train = './data/mnist_train.tfrecords'
image_test_path = './mnist_data_jpg/mnist_test_jpg_10000/'
label_test_path = './mnist_data_jpg/mnist_test_jpg_10000.txt'
tfRecord_test = './data/mnist_test.tfrecords'
data_path = './data'
resize_height = 28
resize_width = 28


# Generate a tfrecords file
def write_tfRecord(tfRecordName, image_path, label_path):
    # Create a writer
    writer = tf.python_io.TFRecordWriter(tfRecordName)
    num_pic = 0
    with open(label_path, 'r') as f:
        contents = f.readlines()
    # Loop over every image and its label
    for content in contents:
        value = content.split()
        img_path = image_path + value[0]
        img = Image.open(img_path)
        # Raw image bytes (784 bytes for a 28x28 grayscale JPG, which read_tfRecord expects)
        img_raw = img.tobytes()
        # One-hot label
        labels = [0] * 10
        labels[int(value[1])] = 1
        # Wrap each image and its label in an Example
        example = tf.train.Example(features=tf.train.Features(feature={
            'img_raw': tf.train.Feature(bytes_list=tf.train.BytesList(value=[img_raw])),
            'label': tf.train.Feature(int64_list=tf.train.Int64List(value=labels))
        }))
        # Serialize the example and write it
        writer.write(example.SerializeToString())
        num_pic += 1  # one more image done
        print("the number of picture:", num_pic)
    # Close the writer
    writer.close()
    print("write tfrecord successful")


def generate_tfRecord():
    isExists = os.path.exists(data_path)
    if not isExists:
        os.makedirs(data_path)
        print('The directory was created successfully')
    else:
        print('directory already exists')
    write_tfRecord(tfRecord_train, image_train_path, label_train_path)
    write_tfRecord(tfRecord_test, image_test_path, label_test_path)


# Parse a tfrecords file
def read_tfRecord(tfRecord_path):
    # Create a first-in-first-out queue of file names; the file reader takes its input from it
    filename_queue = tf.train.string_input_producer([tfRecord_path], shuffle=True)
    # Create a reader
    reader = tf.TFRecordReader()
    # Each sample read is stored in serialized_example for deserialization
    _, serialized_example = reader.read(filename_queue)
    # Parse the tf.train.Example protocol buffer into tensors; the label and image keys
    # must match the keys used when writing the tfrecords file
    features = tf.parse_single_example(serialized_example,
                                       features={
                                           'label': tf.FixedLenFeature([10], tf.int64),  # 10 = number of classes
                                           'img_raw': tf.FixedLenFeature([], tf.string)
                                       })
    # Convert the img_raw string to 8-bit unsigned integers
    img = tf.decode_raw(features['img_raw'], tf.uint8)
    # Give the image the shape of one row with 784 columns
    img.set_shape([784])
    # Convert to floats between 0 and 1
    img = tf.cast(img, tf.float32) * (1. / 255)
    label = tf.cast(features['label'], tf.float32)
    # Return the image and the label
    return img, label


def get_tfrecord(num, isTrain=True):
    if isTrain:
        tfRecord_path = tfRecord_train
    else:
        tfRecord_path = tfRecord_test
    img, label = read_tfRecord(tfRecord_path)
    # Read a randomly shuffled batch of data
    img_batch, label_batch = tf.train.shuffle_batch([img, label],
                                                    batch_size=num,
                                                    num_threads=2,  # number of threads
                                                    capacity=1000,
                                                    min_after_dequeue=700)
    # Return batch_size randomly drawn images and labels
    return img_batch, label_batch


def main():
    generate_tfRecord()


if __name__ == '__main__':
    main()
```
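The label-file format (file name, a space, the digit) and the one-hot encoding inside write_tfRecord can be pulled out into a small function that is easy to test on its own. parse_label_lines and the sample file names are hypothetical; unlike the loop in write_tfRecord, this sketch skips blank lines instead of failing on them.

```python
def parse_label_lines(lines, num_classes=10):
    """Turn lines such as '0_5.jpg 5' into (filename, one-hot label) pairs."""
    samples = []
    for line in lines:
        parts = line.split()
        if not parts:  # skip blank lines (e.g. a trailing newline at end of file)
            continue
        filename, digit = parts[0], int(parts[1])
        labels = [0] * num_classes  # same one-hot encoding as write_tfRecord
        labels[digit] = 1
        samples.append((filename, labels))
    return samples


# Usage with hypothetical lines from a label file
samples = parse_label_lines(["0_5.jpg 5\n", "1_0.jpg 0\n", "\n"])
```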

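In the backpropagation script mnist_backward.py and the test script mnist_test.py, change the interface that supplies images and labels. A thread coordinator is used as follows: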

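```python
# Inside a tf.Session() as sess:
coord = tf.train.Coordinator()
threads = tf.train.start_queue_runners(sess=sess, coord=coord)
# Fetch batches of images and labels here, e.g. sess.run([img_batch, label_batch])
coord.request_stop()
coord.join(threads)
```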
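As an analogy only (this is not how TensorFlow implements queue runners), the same lifecycle of starting producer threads, consuming batches, requesting a stop and joining the threads can be reproduced with Python's standard threading and queue modules. run_pipeline and its parameters are hypothetical names chosen for the sketch.

```python
import queue
import threading


def run_pipeline(samples, batch_size, num_batches, num_threads=2):
    """Producer threads fill a bounded queue; the main thread pulls batches,
    then signals a stop and joins the producers."""
    q = queue.Queue(maxsize=100)   # bounded, like a queue's capacity
    stop = threading.Event()       # plays the role of coord.should_stop()

    def producer():
        i = 0
        while not stop.is_set():
            try:
                q.put(samples[i % len(samples)], timeout=0.1)
                i += 1
            except queue.Full:
                continue           # queue is full: loop back and re-check the stop flag

    threads = [threading.Thread(target=producer) for _ in range(num_threads)]
    for t in threads:
        t.start()                  # analogous to tf.train.start_queue_runners
    batches = [[q.get() for _ in range(batch_size)] for _ in range(num_batches)]
    stop.set()                     # analogous to coord.request_stop()
    for t in threads:
        t.join()                   # analogous to coord.join(threads)
    return batches


# Usage: three batches of four items drawn from ten samples
batches = run_pipeline(list(range(10)), batch_size=4, num_batches=3)
```

The put timeout matters: without it, a producer blocked on a full queue would never notice the stop signal and join would hang.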

mnist_backward.py

The thread-coordinator code is enclosed between lines of ################################################

```python
# mnist_backward.py
import tensorflow as tf
import mnist_forward
import os
import mnist_generateds  # reads the data from the tfrecords files

BATCH_SIZE = 200
LEARNING_RATE_BASE = 0.1
LEARNING_RATE_DECAY = 0.99
REGULARIZER = 0.0001
STEPS = 50000
MOVING_AVERAGE_DECAY = 0.99
MODEL_SAVE_PATH = "./model/"
MODEL_NAME = "mnist_model"
# Total number of training samples (60,000), given by hand
train_num_examples = 60000


def backward():
    x = tf.placeholder(tf.float32, [None, mnist_forward.INPUT_NODE])
    y_ = tf.placeholder(tf.float32, [None, mnist_forward.OUTPUT_NODE])
    y = mnist_forward.forward(x, REGULARIZER)
    global_step = tf.Variable(0, trainable=False)

    ce = tf.nn.sparse_softmax_cross_entropy_with_logits(logits=y, labels=tf.argmax(y_, 1))
    cem = tf.reduce_mean(ce)
    loss = cem + tf.add_n(tf.get_collection('losses'))

    learning_rate = tf.train.exponential_decay(
        LEARNING_RATE_BASE,
        global_step,
        train_num_examples / BATCH_SIZE,
        LEARNING_RATE_DECAY,
        staircase=True)

    train_step = tf.train.GradientDescentOptimizer(learning_rate).minimize(loss, global_step=global_step)

    ema = tf.train.ExponentialMovingAverage(MOVING_AVERAGE_DECAY, global_step)
    ema_op = ema.apply(tf.trainable_variables())
    with tf.control_dependencies([train_step, ema_op]):
        train_op = tf.no_op(name='train')

    saver = tf.train.Saver()
    # Fetch batch_size images and labels per batch
    ################################################
    img_batch, label_batch = mnist_generateds.get_tfrecord(BATCH_SIZE, isTrain=True)
    ################################################

    with tf.Session() as sess:
        init_op = tf.global_variables_initializer()
        sess.run(init_op)

        ckpt = tf.train.get_checkpoint_state(MODEL_SAVE_PATH)
        if ckpt and ckpt.model_checkpoint_path:
            saver.restore(sess, ckpt.model_checkpoint_path)

        ################################################
        # Use multiple threads to speed up fetching batches of images and labels
        coord = tf.train.Coordinator()
        # Start the input-queue threads
        threads = tf.train.start_queue_runners(sess=sess, coord=coord)
        ################################################

        for i in range(STEPS):
            ################################################
            # Fetch a batch of images and labels
            xs, ys = sess.run([img_batch, label_batch])
            ################################################
            _, loss_value, step = sess.run([train_op, loss, global_step], feed_dict={x: xs, y_: ys})
            if i % 1000 == 0:
                print("After %d training step(s), loss on training batch is %g." % (step, loss_value))
                saver.save(sess, os.path.join(MODEL_SAVE_PATH, MODEL_NAME), global_step=global_step)

        ################################################
        # Stop the thread coordinator
        coord.request_stop()
        coord.join(threads)
        ################################################


def main():
    backward()


if __name__ == '__main__':
    main()
```
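Because train_num_examples is now supplied by hand, it helps to see where it is used: it only sets decay_steps = train_num_examples / BATCH_SIZE in the learning-rate schedule. The sketch below evaluates the documented formula of tf.train.exponential_decay with staircase=True, learning_rate * decay_rate ^ floor(global_step / decay_steps), using this file's constants; the function name staircase_exponential_decay is hypothetical.

```python
import math


def staircase_exponential_decay(step, base=0.1, decay=0.99,
                                num_examples=60000, batch_size=200):
    """Learning rate at a given global step under staircase exponential decay."""
    decay_steps = num_examples / batch_size  # 300 steps = one pass over the training set
    return base * decay ** math.floor(step / decay_steps)


# Usage: the rate is constant within each block of 300 steps
rates = [staircase_exponential_decay(s) for s in (0, 299, 300, 600)]
```

With these values the rate drops by 1% every 300 steps, i.e. once per pass over the 60,000 training images; a wrong train_num_examples would only stretch or shrink those steps.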

mnist_test.py

The thread-coordinator code is enclosed between lines of ################################################

```python
# mnist_test.py
import time
import tensorflow as tf
import mnist_forward
import mnist_backward
import mnist_generateds

TEST_INTERVAL_SECS = 5
# Total number of test samples (10,000), given by hand
TEST_NUM = 10000


def test():
    with tf.Graph().as_default() as g:
        x = tf.placeholder(tf.float32, [None, mnist_forward.INPUT_NODE])
        y_ = tf.placeholder(tf.float32, [None, mnist_forward.OUTPUT_NODE])
        y = mnist_forward.forward(x, None)

        ema = tf.train.ExponentialMovingAverage(mnist_backward.MOVING_AVERAGE_DECAY)
        ema_restore = ema.variables_to_restore()
        saver = tf.train.Saver(ema_restore)

        correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_, 1))
        accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

        ################################################
        # Read all 10,000 test images with get_tfrecord
        img_batch, label_batch = mnist_generateds.get_tfrecord(TEST_NUM, isTrain=False)
        ################################################

        while True:
            with tf.Session() as sess:
                ckpt = tf.train.get_checkpoint_state(mnist_backward.MODEL_SAVE_PATH)
                if ckpt and ckpt.model_checkpoint_path:
                    saver.restore(sess, ckpt.model_checkpoint_path)
                    global_step = ckpt.model_checkpoint_path.split('/')[-1].split('-')[-1]

                    ################################################
                    # Use multiple threads to speed up fetching batches of images and labels
                    coord = tf.train.Coordinator()
                    # Start the input-queue threads
                    threads = tf.train.start_queue_runners(sess=sess, coord=coord)
                    # Fetch a batch of images and labels
                    xs, ys = sess.run([img_batch, label_batch])
                    ################################################

                    accuracy_score = sess.run(accuracy, feed_dict={x: xs, y_: ys})
                    print("After %s training step(s), test accuracy = %g" % (global_step, accuracy_score))

                    ################################################
                    # Stop the thread coordinator
                    coord.request_stop()
                    coord.join(threads)
                    ################################################
                else:
                    print('No checkpoint file found')
                    return
            time.sleep(TEST_INTERVAL_SECS)


def main():
    test()


if __name__ == '__main__':
    main()
```
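Two small pieces of test() can be checked without a trained model: extracting the step number from the checkpoint path, and the accuracy computation (argmax of the network output compared with argmax of the one-hot label). The NumPy sketch below mirrors both; the function names step_from_checkpoint_path and accuracy are hypothetical.

```python
import numpy as np


def step_from_checkpoint_path(path):
    """Same string handling as test(): './model/mnist_model-49001' -> '49001'."""
    return path.split('/')[-1].split('-')[-1]


def accuracy(logits, one_hot_labels):
    """Fraction of rows whose predicted class matches the labelled class."""
    correct = np.argmax(logits, axis=1) == np.argmax(one_hot_labels, axis=1)
    return float(np.mean(correct.astype(np.float32)))


# Usage: four made-up predictions, three of them correct
y = np.array([[0.1, 2.0, 0.3],
              [1.5, 0.2, 0.1],
              [0.0, 0.1, 3.0],
              [0.9, 0.8, 0.7]])
y_ = np.eye(3)[[1, 0, 1, 0]]  # one-hot labels for classes 1, 0, 1, 0
score = accuracy(y, y_)
```

Splitting on '/' matches what test() does; for paths that may contain backslashes (e.g. on Windows), os.path.basename would likely be the more robust choice.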