- 数据预处理

- ">—————-数据预处理—————-

question = [“你是谁?”,”电话号码是多少?”,”叫什么名字?”] # 训练问题

answer = [“我是机器人”,”没有电话”,”智能客服”] # 用于训练的问题的回答

# 分词

fcqs = [jieba.lcut(q) for q in question] # 对问题语句进行分词。一维数组—>二维数组

fcas = [jieba.lcut(a) for a in answer] # 对回答语句进行分词

#

words = []

alls = fcqs + fcas # 合并分词后的问题和回答

for k in alls:

for word in k:

if word not in words:

words.append(word)

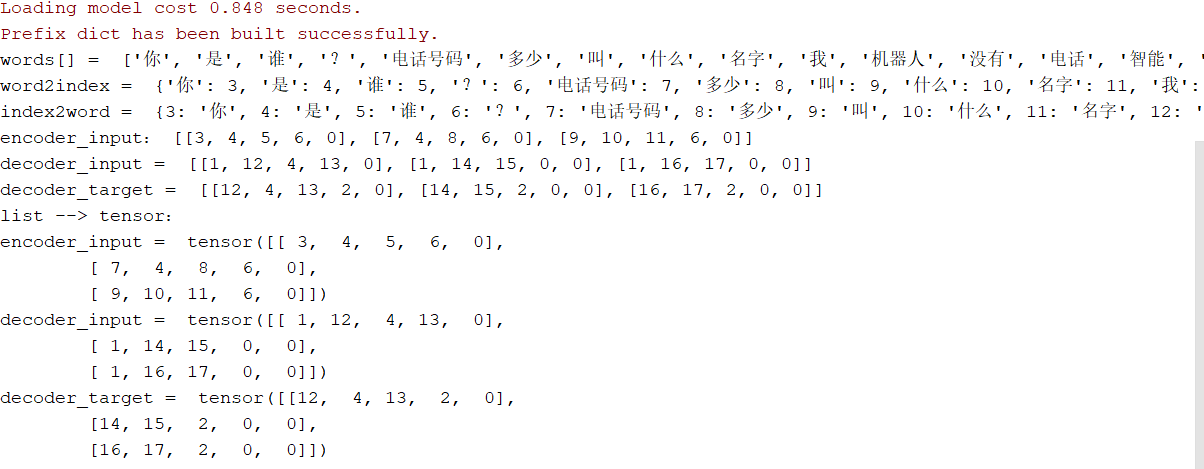

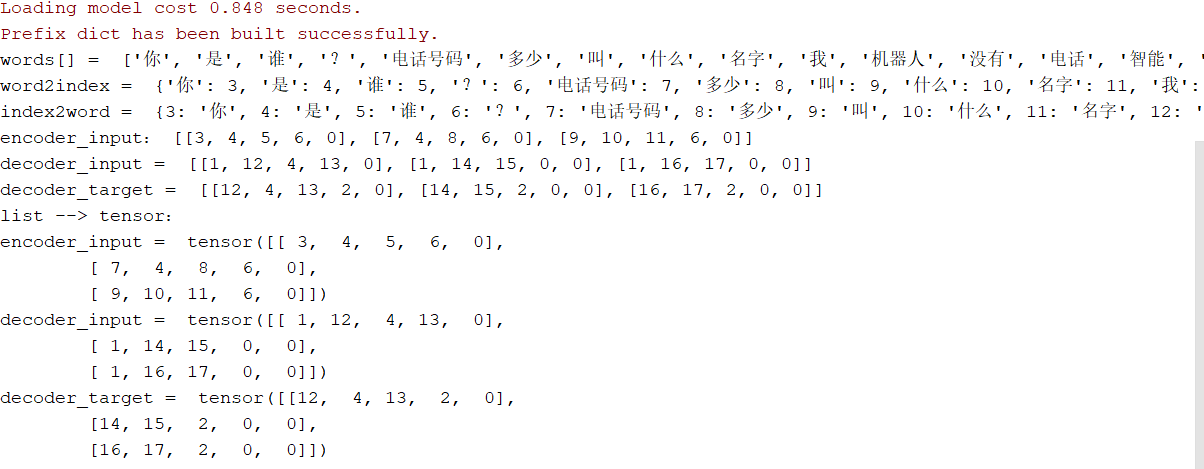

print(“words[] = “,words)

# 创建字典类型索引库,给words中的每个词创建索引。{词:索引}

word2index = {w:i+3 for i,w in enumerate(words)}

print(“word2index = “,word2index)

word2index[“PAD”] = 0 # 补全句子长度

word2index[“SOS”] = 1 # 句子开头

word2index[“EOS”] = 2 # 句子结束

# 索引—>词 {索引:词}

index2word = {v:k for k,v in word2index.items()}

print(“index2word = “,index2word)

# 词表向量化

seq_length = max([len(i) for i in alls]) + 1 # 输入序列(问题语句)的最大长度

def make_data(seq_list):

rs = []

for word in seq_list:

seq_index = [word2index[i] for i in word] # 词在词表中的索引

if len(seq_index) < seq_length:

seq_index += [0]*(seq_length - len(seq_index))

rs.append(seq_index)

return rs

encoder_input = make_data(fcqs)

print(“encoder_input:”,encoder_input)

# 对每个回答标记开始和结尾

decoder_input = make_data([[“SOS”]+i for i in fcas])

print(“decoder_input = “,decoder_input)

decoder_target = make_data([i+[“EOS”] for i in fcas])

print(“decoder_target = “,decoder_target)

# 将 list —> tensor

encoder_input= torch.LongTensor(encoder_input)

decoder_input = torch.LongTensor(decoder_input)

decoder_target = torch.LongTensor(decoder_target)

print(“list —> tensor:”)

print(“encoder_input = “,encoder_input)

print(“decoder_input = “,decoder_input)

print(“decoder_target = “,decoder_target)`

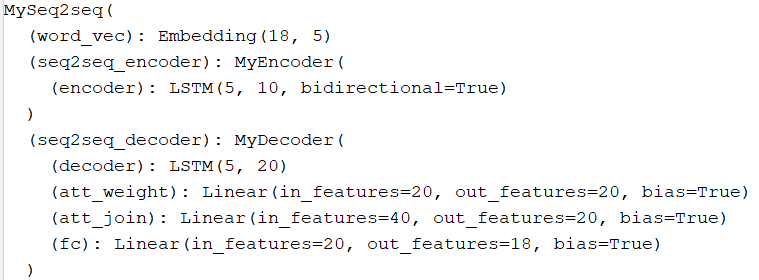

- 解码器神经网络

class MyDecoder(torch.nn.Module):

def init(self):

super(MyDecoder,self).init()

# 循环神经网络层

self.decoder = torch.nn.LSTM(embedding_size,n_hidder2,1)

# 注意力参数

self.att_weight = torch.nn.Linear(n_hidder 2,n_hidder 2)

self.att_join = torch.nn.Linear(n_hidder 4,n_hidder2)

self.fc = torch.nn.Linear(n_hidder 2,vocab_size)

def forward(self, x,encoder_out,hn,cn):

decoder_out,(decoder_h_n,decoder_c_n) = self.decoder(x,(hn,cn))

decoder_out = decoder_out.permute(1,0,2)

encoder_out = encoder_out.permute(1,0,2)

decoder_out_att = self.att_weight(encoder_out)

decoder_out_att = decoder_out_att.permute(0,2,1)

# 计算分数

decoder_out_score = decoder_out.bmm(decoder_out_att)

# 计算权重

at = torch.nn.functional.log_softmax(decoder_out_score,dim=2)

# 新上下文

ct = at.bmm(encoder_out)

ht_join = torch.cat((ct,decoder_out),dim=2)

fc_join = torch.tanh(self.att_join(ht_join))

fc_out = self.fc(fc_join)

return fc_out,decoder_h_n,decoder_c_n - ">seq2seq

class MySeq2seq(torch.nn.Module):

def init(self):

super(MySeq2seq, self).init()

# 文本张量化

self.word_vec = torch.nn.Embedding(vocab_size,embedding_size)

self.seq2seq_encoder = MyEncoder() # 编码器

self.seq2seq_decoder = MyDecoder() # 解码器

def forward(self,encoder_input,decoder_input,inference_threshold=0):

embedding_encoder_input = self.word_vec(encoder_input)

embedding_decoder_input = self.word_vec(decoder_input)

# 调换第一维度和第二维度:转置

embedding_encoder_input = embedding_encoder_input.permute(1,0,2)

embedding_decoder_input = embedding_decoder_input.permute(1,0,2)

# 编码

encoder_out,h_n,c_n = self.seq2seq_encoder(embedding_encoder_input)

# 解码

if inference_threshold:

decoder_out,decoder_h_n,decoder_c_n = self.seq2seq_decoder(

embedding_encoder_input,

encoder_out,

h_n,

c_n

)

return decoder_out

else:

outs = []

for i in range(seq_length):

decoder_out,decoder_h_n, decoder_c_n = self.seq2seq_decoder(

embedding_encoder_input,encoder_out,h_n,c_n

)

decoder_x = torch.max(decoder_out.reshape(-1,25),dim=1)[1].item()

if decoder_x in [0,2]:

return outs

outs.append(decoder_x)

embedding_encoder_input = self.word_vec(torch.LongTensor([[decoder_x]]))

embedding_encoder_input = embedding_encoder_input.permute(1,0,2)

return outs<br />model = MySeq2seq()

print(model)`

数据预处理

`# 中文智能客服

import jieba

import torch

—————-数据预处理—————-

question = [“你是谁?”,”电话号码是多少?”,”叫什么名字?”] # 训练问题

answer = [“我是机器人”,”没有电话”,”智能客服”] # 用于训练的问题的回答

# 分词

fcqs = [jieba.lcut(q) for q in question] # 对问题语句进行分词。一维数组—>二维数组

fcas = [jieba.lcut(a) for a in answer] # 对回答语句进行分词

#

words = []

alls = fcqs + fcas # 合并分词后的问题和回答

for k in alls:

for word in k:

if word not in words:

words.append(word)

print(“words[] = “,words)

# 创建字典类型索引库,给words中的每个词创建索引。{词:索引}

word2index = {w:i+3 for i,w in enumerate(words)}

print(“word2index = “,word2index)

word2index[“PAD”] = 0 # 补全句子长度

word2index[“SOS”] = 1 # 句子开头

word2index[“EOS”] = 2 # 句子结束

# 索引—>词 {索引:词}

index2word = {v:k for k,v in word2index.items()}

print(“index2word = “,index2word)

# 词表向量化

seq_length = max([len(i) for i in alls]) + 1 # 输入序列(问题语句)的最大长度

def make_data(seq_list):

rs = []

for word in seq_list:

seq_index = [word2index[i] for i in word] # 词在词表中的索引

if len(seq_index) < seq_length:

seq_index += [0]*(seq_length - len(seq_index))

rs.append(seq_index)

return rs

encoder_input = make_data(fcqs)

print(“encoder_input:”,encoder_input)

# 对每个回答标记开始和结尾

decoder_input = make_data([[“SOS”]+i for i in fcas])

print(“decoder_input = “,decoder_input)

decoder_target = make_data([i+[“EOS”] for i in fcas])

print(“decoder_target = “,decoder_target)

# 将 list —> tensor

encoder_input= torch.LongTensor(encoder_input)

decoder_input = torch.LongTensor(decoder_input)

decoder_target = torch.LongTensor(decoder_target)

print(“list —> tensor:”)

print(“encoder_input = “,encoder_input)

print(“decoder_input = “,decoder_input)

print(“decoder_target = “,decoder_target)`

构建模型

`# ——————建模———————

embeddingsize = 5

nhidder = 10

vocabsize = len(word2index)

classes = vocabsize

# 编码器神经网络

class MyEncoder(torch.nn.Module):

def __init(self):

super(MyEncoder, self).__init()

# 创建循环神经网络层 LSTM:循环神经网络

self.encoder = torch.nn.LSTM(embedding_size,n_hidder,1,bidirectional=True)

def forward(self,embedding_input):

encoder_output,(encoder_h_n,encoder_c_n) = self.encoder(embedding_input)

encoder_h_n = torch.cat([encoder_h_n[0],encoder_h_n[1]],dim=1)

encoder_c_n = torch.cat([encoder_h_n[0], encoder_c_n[1]],dim=1)

return encoder_output,encoder_h_n.unsqueeze(0),encoder_c_n.unsqueeze(0)