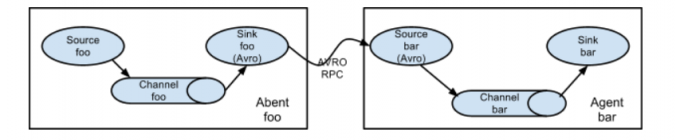

Chained Agents pass data to each other through an Avro Sink on the upstream Agent and an Avro Source on the downstream Agent.

1. Configuration file a.conf on Hadoop02

Collect a file on Hadoop02 and send it to Hadoop04.

    # Name the components on this agent
    a1.sources = r1
    a1.sinks = k1
    a1.channels = c1

    # Describe/configure the source
    a1.sources.r1.type = exec
    a1.sources.r1.command = cat /home/hadoop/second.txt

    # Describe the sink
    a1.sinks.k1.type = avro
    a1.sinks.k1.hostname = hadoop04
    a1.sinks.k1.port = 44444

    # Use a channel which buffers events in memory
    a1.channels.c1.type = memory
    a1.channels.c1.capacity = 1000
    a1.channels.c1.transactionCapacity = 100

    # Bind the source and sink to the channel
    a1.sources.r1.channels = c1
    a1.sinks.k1.channel = c1

2. Configuration file b.conf on Hadoop04

Receive the events on Hadoop04 through the Avro Source and write them to HDFS.

    # Name the components on this agent
    a1.sources = r1
    a1.sinks = k1
    a1.channels = c1

    # Describe/configure the source
    a1.sources.r1.type = avro
    a1.sources.r1.bind = hadoop04
    a1.sources.r1.port = 44444

    # Describe the sink
    a1.sinks.k1.type = hdfs
    a1.sinks.k1.hdfs.path = /a/flume/test_01

    # Use a channel which buffers events in memory
    a1.channels.c1.type = memory
    a1.channels.c1.capacity = 1000
    a1.channels.c1.transactionCapacity = 100

    # Bind the source and sink to the channel
    a1.sources.r1.channels = c1
    a1.sinks.k1.channel = c1
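The two files only work together if the Avro Sink in a.conf points at the host and port where the Avro Source in b.conf listens. As a rough sketch, the check below compares those properties before either Agent is started. The helper names `get_prop` and `check_wiring` are hypothetical, not part of Flume, and the property keys assume the agent, source, and sink are named `a1`, `r1`, and `k1` as in the configs above.

```shell
#!/bin/sh
# get_prop FILE KEY: print the value of KEY from a Flume properties file.
get_prop() {
    awk -F'=' -v key="$2" '
        /^[ \t]*#/ { next }                      # skip comment lines
        {
            k = $1
            gsub(/^[ \t]+|[ \t]+$/, "", k)       # trim the key
            if (k == key) {
                v = substr($0, index($0, "=") + 1)
                gsub(/^[ \t]+|[ \t]+$/, "", v)   # trim the value
                print v
            }
        }
    ' "$1"
}

# check_wiring UPSTREAM_CONF DOWNSTREAM_CONF
# Returns 0 if the upstream Avro sink targets the downstream Avro source.
check_wiring() {
    sink_host=$(get_prop "$1" a1.sinks.k1.hostname)
    sink_port=$(get_prop "$1" a1.sinks.k1.port)
    src_bind=$(get_prop "$2" a1.sources.r1.bind)
    src_port=$(get_prop "$2" a1.sources.r1.port)

    if [ -z "$sink_port" ] || [ "$sink_port" != "$src_port" ]; then
        echo "MISMATCH: sink port '$sink_port' vs source port '$src_port'"
        return 1
    fi
    # A source bound to 0.0.0.0 accepts connections on any interface.
    if [ "$src_bind" != "0.0.0.0" ] && [ "$sink_host" != "$src_bind" ]; then
        echo "MISMATCH: sink host '$sink_host' vs source bind '$src_bind'"
        return 1
    fi
    echo "OK: $sink_host:$sink_port"
}
```

With both files copied to one machine, it could be called as `check_wiring a.conf b.conf`. The host comparison is a plain string match, so it would report a mismatch if one file used a hostname and the other an IP address for the same machine.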

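3. Start the Agent on Hadoop04 (the downstream Agent goes first, so that its Avro Source is already listening when Hadoop02 connects)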

flume-ng agent --conf conf --conf-file /home/hadoop/kylin/b.conf --name a1

4. Start the Agent on Hadoop02

flume-ng agent --conf conf --conf-file /home/hadoop/kylin/a.conf --name a1
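Once both Agents are running, one way to confirm that events reached HDFS is to inspect the sink's output directory. The sketch below assumes the `hdfs` client is on the PATH and that the sink keeps its default file prefix `FlumeData`; the function names are hypothetical wrappers added for illustration.

```shell
#!/bin/sh
# list_flume_output DIR: list the files the HDFS sink has written under DIR.
list_flume_output() {
    hdfs dfs -ls "$1"
}

# show_flume_output DIR: decode and print the sink's files under DIR.
# -text is used instead of -cat because the HDFS sink writes SequenceFiles
# unless hdfs.fileType is configured otherwise. The glob is quoted so that
# HDFS expands it, not the local shell.
show_flume_output() {
    hdfs dfs -text "$1/FlumeData.*"
}
```

For the configuration above, the directory to pass would be `/a/flume/test_01`. Files still being written carry a `.tmp` suffix until the sink rolls them.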