Flume agents can be chained together to build a tiered (multi-hop) topology.

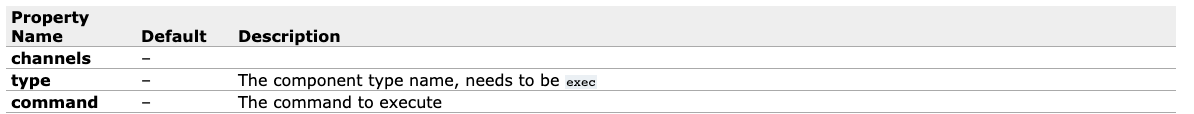
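A minimal sketch of a two-tier setup, assuming the tiers are linked by an Avro sink on the first agent and an Avro source on the second. The agent names a1 and a2, the host localhost, port 44445, and the file path are illustrative assumptions; each agent's block would normally live in its own config file.

```properties
# Tier 1 (agent a1): read a file and forward its lines to the next tier
a1.sources = r1
a1.sinks = k1
a1.channels = c1

a1.sources.r1.type = exec
a1.sources.r1.command = cat /home/hadoop/pikachu.txt

# The Avro sink sends events to the Avro source of agent a2 (assumed host/port)
a1.sinks.k1.type = avro
a1.sinks.k1.hostname = localhost
a1.sinks.k1.port = 44445

a1.channels.c1.type = memory
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1

# Tier 2 (agent a2): receive events over Avro and log them
a2.sources = r1
a2.sinks = k1
a2.channels = c1

a2.sources.r1.type = avro
a2.sources.r1.bind = localhost
a2.sources.r1.port = 44445

a2.sinks.k1.type = logger

a2.channels.c1.type = memory
a2.sources.r1.channels = c1
a2.sinks.k1.channel = c1
```

Starting a2 before a1 means the Avro source is already listening when the first tier's sink tries to connect.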

Exec Source

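Batch collection that handles one file at a time: the source runs the configured command and turns each line of its output into an event.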

command:

cat [named pipe]: reads the entire contents of the file (or named pipe) once

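tail -F [file]: follows the file and outputs new lines as they are appended, and keeps following by name if the file is rotated or recreated (it does not print the file in reverse order)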

# Name the components on this agent

a1.sources = r1

a1.sinks = k1

a1.channels = c1

# Describe/configure the source

a1.sources.r1.type = exec

a1.sources.r1.command = cat /home/hadoop/pikachu.txt

# Describe the sink

a1.sinks.k1.type = logger

# Use a channel which buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

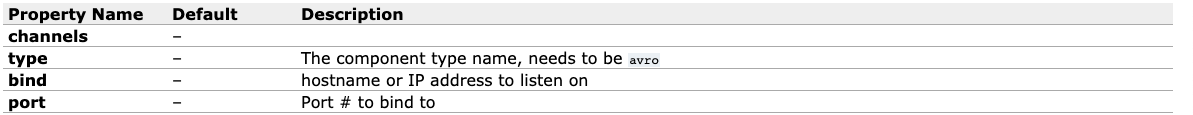
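For continuous collection rather than a one-off read, the same agent could use tail -F as its command. The following is a sketch under that assumption, reusing the agent name and file path from the example above; only the command line differs.

```properties
# Same agent layout as the cat example; only the source command changes
a1.sources = r1
a1.sinks = k1
a1.channels = c1

# tail -F keeps running, so lines appended to the file later are also delivered
a1.sources.r1.type = exec
a1.sources.r1.command = tail -F /home/hadoop/pikachu.txt

a1.sinks.k1.type = logger

a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

With cat the source has nothing more to deliver once the file has been read, whereas tail -F keeps the command alive. Note that the Exec Source cannot tell whether events were delivered, so lines may be lost if the agent or channel fails.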

Avro Source

# Name the components on this agent

a1.sources = r1

a1.sinks = k1

a1.channels = c1

# Describe/configure the source

a1.sources.r1.type = avro

a1.sources.r1.bind = localhost

a1.sources.r1.port = 44445

# Describe the sink

a1.sinks.k1.type = logger

# Use a channel which buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

Start the agent (the receiving side): flume-ng agent --conf conf --conf-file /home/hadoop/kylin/c.conf --name a1 -Dflume.root.logger=INFO,console

In a new window, send a file with the Avro client: flume-ng avro-client -H localhost -p 44445 --filename /home/hadoop/pikachu.txt

PS:

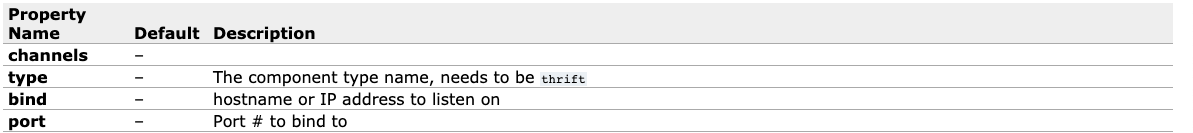

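- Thrift Source: requires a port number to listen on (along with a bind address)

- NetCat Source: listens on a specified port and receives data over TCP or UDP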
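As an illustration of these port-based sources, a NetCat TCP source might be configured as in the sketch below. The bind address and port 44444 are assumed values, and the rest of the agent mirrors the earlier examples.

```properties
a1.sources = r1
a1.sinks = k1
a1.channels = c1

# NetCat TCP source: each line of text received on the port becomes one event
a1.sources.r1.type = netcat
a1.sources.r1.bind = localhost
# Assumed port; any free port works
a1.sources.r1.port = 44444

a1.sinks.k1.type = logger

a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

Once the agent is running, a tool such as nc or telnet connected to that port can be used to send lines of text to it.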

Spooling Directory Source

Bulk data movement: collects files that are newly added to a directory.

# Name the components on this agent

a1.sources = r1

a1.sinks = k1

a1.channels = c1

# Describe/configure the source

a1.sources.r1.type = spooldir

a1.sources.r1.spoolDir = /home/hadoop/tmppkq

# Describe the sink

a1.sinks.k1.type = logger

# Use a channel which buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1
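The spooling source also has optional settings that control what happens to a file once it has been fully ingested. The fragment below is a sketch meant to be appended to the configuration above; the values for fileSuffix and deletePolicy are, to my knowledge, the defaults, and fileHeader = true is an optional choice made here for illustration.

```properties
# Suffix appended to a file after it has been completely ingested
a1.sources.r1.fileSuffix = .COMPLETED

# never: keep ingested files (renamed with the suffix); immediate: delete them
a1.sources.r1.deletePolicy = never

# Add a header holding the absolute path of the originating file to each event
a1.sources.r1.fileHeader = true
```

Files dropped into the spooling directory should be complete and should not be modified afterwards, and file names should not be reused, since the source treats either case as an error.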