1. Introduction

The previous two articles covered:

- Building a CentOS cluster with VirtualBox
- Installing Docker

Now we can begin the actual Kubernetes (K8S) journey.

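We call the existing virtual machine node1 and use it as the master node. To save work, once Kubernetes is installed on node1, we use VirtualBox's clone feature to create two identical VMs as worker nodes. The three nodes have these roles: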

| Node name | Role |
|---|---|
| node1 | master |
| node2 | worker |
| node3 | worker |

2. Installing the Kubernetes master node

Official documentation: https://kubernetes.io/docs/setup/independent/create-cluster-kubeadm/

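Use it only as a reference, because many readers cannot reach Google's sites without a proxy. The following sections describe installing Kubernetes on node1 in detail. After that is done, clone the VM to create node2 and node3.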

2.1 Basic setup

Switch CentOS to the Aliyun mirror:

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
yum makecache

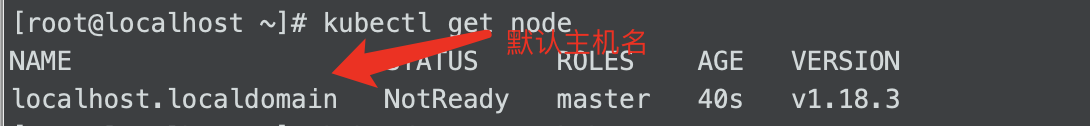

Set the hostname:

hostnamectl set-hostname master01
reboot

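If you skip this step, kubectl get node lists the node under the machine's default hostname (localhost.localdomain on a stock CentOS 7 install). That makes it hard to tell nodes apart.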

Configure /etc/hosts:

vi /etc/hosts

192.168.56.3 master01.ratels.com master01

Each line has three fields: the IP address, the hostname or domain name, and an alias for the hostname.
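In a three-node cluster, every machine should be able to resolve every other node's name. The sketch below is a minimal idempotent helper for that. The function name `add_hosts_entries` is hypothetical. The worker addresses in the usage line (192.168.56.4 and 192.168.56.5) are also hypothetical; only 192.168.56.3 comes from this article.

```shell
# Append "IP NAME [ALIAS...]" entries to a hosts file, skipping any entry
# whose hostname (second field) is already listed, so re-running is harmless.
# Usage: add_hosts_entries FILE "IP NAME [ALIAS...]"...
add_hosts_entries() {
  hosts_file=$1
  shift
  touch "$hosts_file"
  for entry in "$@"; do
    # The second whitespace-separated field is the canonical hostname.
    name=$(printf '%s\n' "$entry" | awk '{ print $2 }')
    # Exit status 0 means the name already appears as a hostname or alias
    # on some non-comment line.
    if awk -v n="$name" '
        !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit (found ? 0 : 1) }' "$hosts_file"; then
      continue
    fi
    printf '%s\n' "$entry" >> "$hosts_file"
  done
}
```

For example, run `add_hosts_entries /etc/hosts "192.168.56.3 master01.ratels.com master01" "192.168.56.4 node01" "192.168.56.5 node02"` on each node. Because names that are already present are skipped, you can paste the same command on every node, including one whose hosts file was cloned.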

2.2 Configure the Kubernetes yum repository

The official repository is unreachable, so use the Aliyun mirror instead. Run the following to add the kubernetes.repo repository:

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

2.3 Disable the firewall, swap, and SELinux

Disable the firewall:

systemctl stop firewalld && systemctl disable firewalld

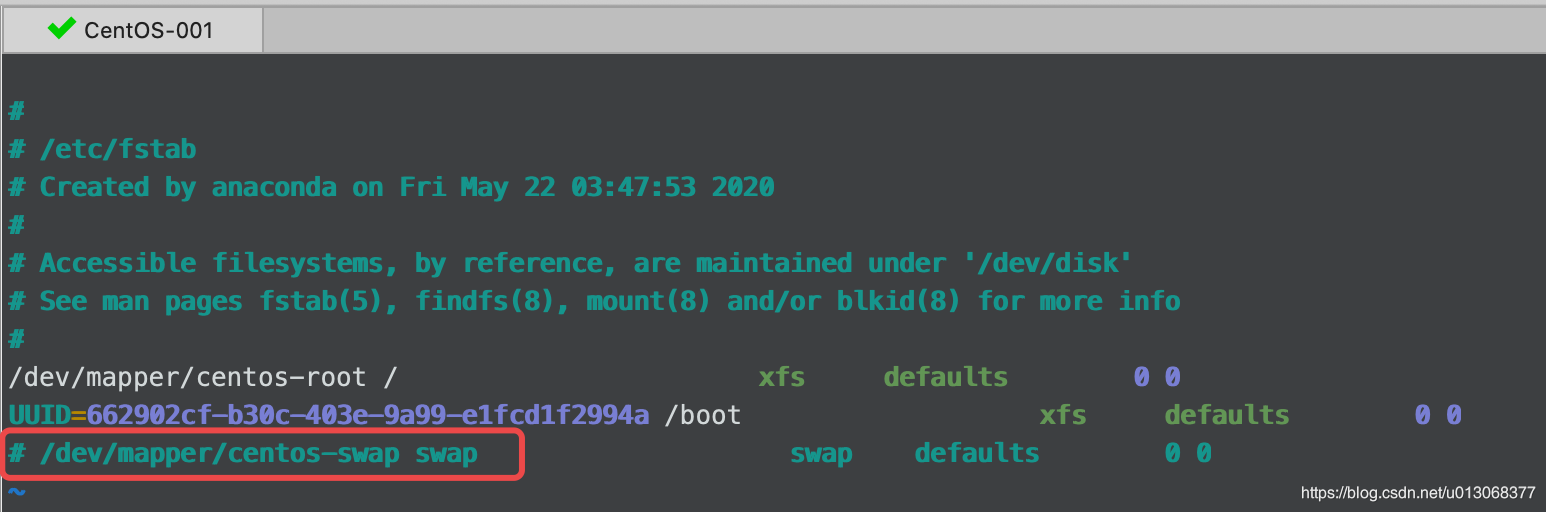

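Disable swap:

Swap must be turned off before you install a Kubernetes cluster. Swapping hurts performance and stability, and by default the kubelet refuses to start while swap is enabled. Set this up in advance:

Running swapoff -a turns swap off immediately, but swap comes back after a reboot.

To disable swap permanently, edit /etc/fstab and comment out the line that contains swap. The change takes effect from the next reboot.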
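Below is a minimal sketch of the fstab edit. It assumes the usual CentOS 7 layout, where the swap entry's third field (the filesystem type) is `swap`. Matching on that field, rather than on any line that contains "swap", avoids touching unrelated lines. `disable_swap_in_fstab` is a hypothetical helper name, and the function keeps a `.bak` copy of the file.

```shell
# Comment out every active swap entry in an fstab-style file so swap stays
# off after reboot. The original is copied to FILE.bak first.
# Usage: disable_swap_in_fstab [FILE]   (default: /etc/fstab)
disable_swap_in_fstab() {
  fstab=${1:-/etc/fstab}
  cp "$fstab" "$fstab.bak"
  # Prefix "#" to non-comment lines whose third field is "swap";
  # print every other line unchanged.
  awk '!/^[[:space:]]*#/ && $3 == "swap" { $0 = "#" $0 } { print }' \
    "$fstab.bak" > "$fstab"
}
```

Run `swapoff -a` followed by `disable_swap_in_fstab`. After that, `free -m` should report 0 in the Swap row.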

Disable SELinux (this command puts SELinux in permissive mode only until the next reboot):

setenforce 0

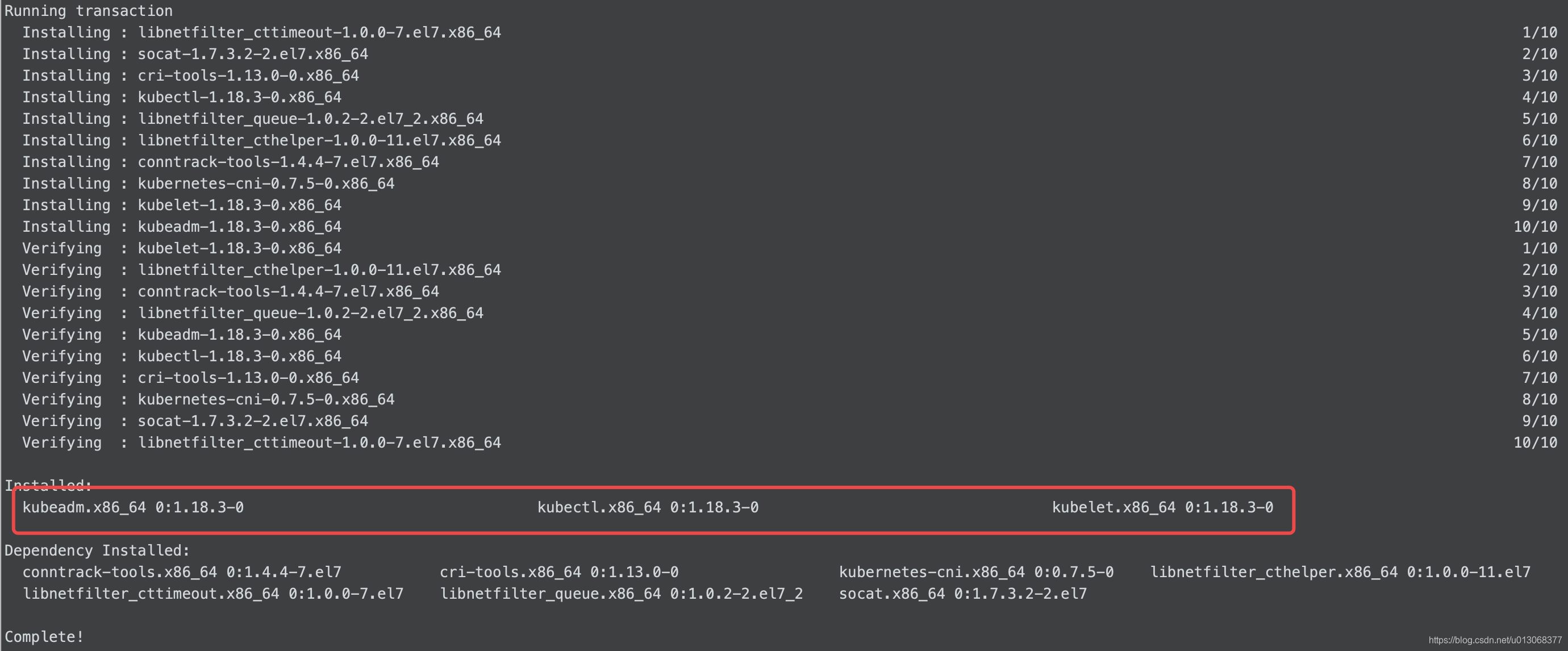
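Because `setenforce 0` is lost on reboot, the kubeadm installation guide makes the change permanent by editing /etc/selinux/config with sed. The sketch below wraps that edit. `set_selinux_permissive` is a hypothetical name, and the optional file argument only exists so you can try the function on a copy first.

```shell
# Keep SELinux permissive across reboots by rewriting the SELINUX= line.
# SELINUXTYPE= is untouched because the pattern needs "=" right after "SELINUX".
# Usage: set_selinux_permissive [FILE]   (default: /etc/selinux/config)
set_selinux_permissive() {
  selinux_cfg=${1:-/etc/selinux/config}
  sed -i 's/^SELINUX=.*/SELINUX=permissive/' "$selinux_cfg"
}
```

The `getenforce` command shows the current mode; after a reboot it should print Permissive.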

2.4 Install the Kubernetes components

Run the following to install kubelet, kubeadm, and kubectl:

yum install -y kubelet kubeadm kubectl

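Without a version, yum installs the newest kubelet, kubeadm, and kubectl in the repository. Those may not match the `--kubernetes-version=1.18.3` passed to kubeadm init later. The small sketch below builds pinned package names using yum's NAME-VERSION form. `k8s_packages` is a hypothetical helper.

```shell
# Print kubelet, kubeadm and kubectl package names pinned to one version,
# in the NAME-VERSION form that yum accepts.
# Usage: k8s_packages VERSION
k8s_packages() {
  if [ -z "$1" ]; then
    echo "usage: k8s_packages VERSION" >&2
    return 1
  fi
  echo "kubelet-$1 kubeadm-$1 kubectl-$1"
}
```

For example, run `yum install -y $(k8s_packages 1.18.3)`. To see which versions the mirror actually provides, run `yum list --showduplicates kubeadm`.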

2.5 Change the cgroup driver

Change either Docker's cgroup driver or the kubelet's so that both use the same driver. The official documentation recommends the systemd driver.

2.5.1 Change Docker's cgroup driver

Method 1:

Create /etc/docker

mkdir -p /etc/docker

Set up the Docker daemon

cat > /etc/docker/daemon.json <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ]
}
EOF

mkdir -p /etc/systemd/system/docker.service.d

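Method 2 (use one method or the other, not both: Docker refuses to start when the same option is set both as a command-line flag and in daemon.json). Edit the unit file:

vi /usr/lib/systemd/system/docker.service

Change the ExecStart line to:

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --exec-opt native.cgroupdriver=systemd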

Restart Docker

systemctl daemon-reload
systemctl restart docker

Check Docker's cgroup driver:

docker info | grep -i cgroup

2.5.2 Change the kubelet's cgroup driver

Edit /etc/systemd/system/kubelet.service.d/10-kubeadm.conf and add --cgroup-driver=systemd to the kubelet arguments so that the kubelet matches Docker. If that file does not exist, edit /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf instead:

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --cgroup-driver=systemd"

Restart the kubelet:

systemctl daemon-reload
systemctl restart kubelet
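To confirm that both sides now agree, the sketch below compares `docker info --format '{{.CgroupDriver}}'` with the `--cgroup-driver` flag in the drop-in file. `check_cgroup_drivers` is a hypothetical helper. It only inspects the drop-in. kubeadm also writes /var/lib/kubelet/kubeadm-flags.env (it appears in the join log in section 3), and that file can carry kubelet flags too. Treat a match here as a first check rather than proof.

```shell
# Compare Docker's cgroup driver with the --cgroup-driver flag in the
# kubelet's 10-kubeadm.conf drop-in; returns non-zero if they differ.
# Usage: check_cgroup_drivers [DROPIN_FILE]
check_cgroup_drivers() {
  dropin=$1
  if [ -z "$dropin" ]; then
    # Same lookup order as the text: /etc first, then /usr/lib.
    for candidate in /etc/systemd/system/kubelet.service.d/10-kubeadm.conf \
                     /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf; do
      if [ -f "$candidate" ]; then dropin=$candidate; break; fi
    done
  fi
  docker_driver=$(docker info --format '{{.CgroupDriver}}')
  # Take the value of the first --cgroup-driver=... flag in the file.
  kubelet_driver=$(grep -o -- '--cgroup-driver=[a-z]*' "$dropin" 2>/dev/null | head -n 1 | cut -d= -f2)
  echo "docker: ${docker_driver:-unknown}, kubelet drop-in: ${kubelet_driver:-not set}"
  [ -n "$docker_driver" ] && [ "$docker_driver" = "$kubelet_driver" ]
}
```

When both drivers are systemd, the function prints `docker: systemd, kubelet drop-in: systemd` and returns 0.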

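2.6 Start the kubelet

As the official documentation describes, enable and start the kubelet once kubelet, kubeadm, and kubectl are installed. Until kubeadm init (or kubeadm join) gives the kubelet a configuration, it restarts every few seconds. This is expected.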

systemctl enable kubelet && systemctl start kubelet

2.7 Initialize the Kubernetes cluster

Note: be sure to save the output. It contains the token and the CA certificate hash that worker nodes need in order to join.

kubeadm init --kubernetes-version=1.18.3 \
  --apiserver-advertise-address=192.168.56.3 \
  --image-repository registry.aliyuncs.com/google_containers \
  --service-cidr=10.10.0.0/16 \
  --pod-network-cidr=10.20.0.0/16 \
  --ignore-preflight-errors=Swap > kubeadm-init-res.txt

Parameter reference:

- --apiserver-advertise-address: the IP address the API server advertises as its listening address. 0.0.0.0 means the address of the default network interface. Use an internal IP, never a public one; on a host with several NICs, this option selects which NIC to use.
- --apiserver-bind-port: the port the API server binds to (default 6443).
- --cert-dir: the directory where certificates are stored (default /etc/kubernetes/pki).
- --control-plane-endpoint: a stable IP address or DNS name for the control plane, for example a name such as kuber4s.api that /etc/hosts resolves to the local IP.
- --image-repository: the registry from which control-plane images are pulled (default k8s.gcr.io). Google's registry is blocked in mainland China, so a domestic mirror is used instead.
- --kubernetes-version: a specific Kubernetes version for the control plane (default stable-1).
- --node-name: the node's name. It defaults to the hostname, so you can usually omit it.
- --pod-network-cidr: the IP address range for Pods.
- --service-cidr: the virtual IP (VIP) range for Services (default 10.96.0.0/12).
- --service-dns-domain: an alternative domain name for Services (default cluster.local).
- --upload-certs: upload the control-plane certificates to the kubeadm-certs Secret.
- --ignore-preflight-errors: preflight checks whose errors are reported as warnings instead. The command above passes Swap so that swap being enabled does not abort init.
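The same settings can also go in a kubeadm configuration file (see the kubeadm-config reference in section 5). The sketch below assumes that kubeadm 1.18 accepts the `kubeadm.k8s.io/v1beta2` API; its values are copied from the command above. It also sets `cgroupDriver: systemd` in a `KubeletConfiguration` document, which is an alternative to editing 10-kubeadm.conf as in 2.5.2. `write_kubeadm_config` is a hypothetical helper.

```shell
# Write a kubeadm config file equivalent to the kubeadm init flags above.
# Assumes kubeadm 1.18, which reads the kubeadm.k8s.io/v1beta2 API.
# Usage: write_kubeadm_config FILE
write_kubeadm_config() {
  # Quoted 'EOF' so the shell does not expand anything inside the YAML.
  cat > "$1" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.56.3
  bindPort: 6443
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.18.3
imageRepository: registry.aliyuncs.com/google_containers
networking:
  serviceSubnet: 10.10.0.0/16
  podSubnet: 10.20.0.0/16
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd
EOF
}
```

A possible sequence:

1. Run `write_kubeadm_config kubeadm-config.yaml`.
2. Optionally, run `kubeadm config images pull --config kubeadm-config.yaml` to pre-pull the images from the mirror.
3. Run `kubeadm init --config kubeadm-config.yaml --ignore-preflight-errors=Swap | tee kubeadm-init-res.txt`.

Using tee instead of > keeps the output on screen while still saving it.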

Then, as the output instructs, run:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

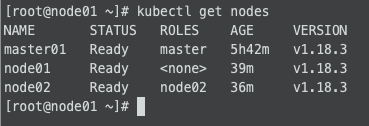

Check the nodes and Pods:

[root@localhost ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master01.paas.com NotReady master 2m29s v1.18.0

$ kubectl get pod --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-7ff77c879f-fsj9l 0/1 Pending 0 2m12s

kube-system coredns-7ff77c879f-q5ll2 0/1 Pending 0 2m12s

kube-system etcd-master01.paas.com 1/1 Running 0 2m22s

kube-system kube-apiserver-master01.paas.com 1/1 Running 0 2m22s

kube-system kube-controller-manager-master01.paas.com 1/1 Running 0 2m22s

kube-system kube-proxy-th472 1/1 Running 0 2m12s

kube-system kube-scheduler-master01.paas.com 1/1 Running 0 2m22s

The node is NotReady and the coredns Pods are stuck in Pending because no Pod network add-on has been installed yet.

2.8 Install the Calico network add-on

[root@master01 ~]# kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

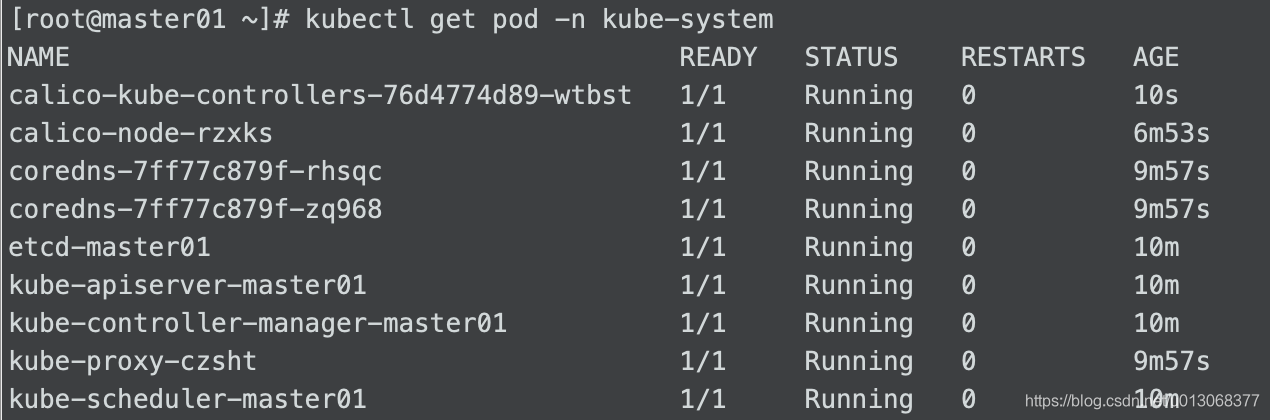

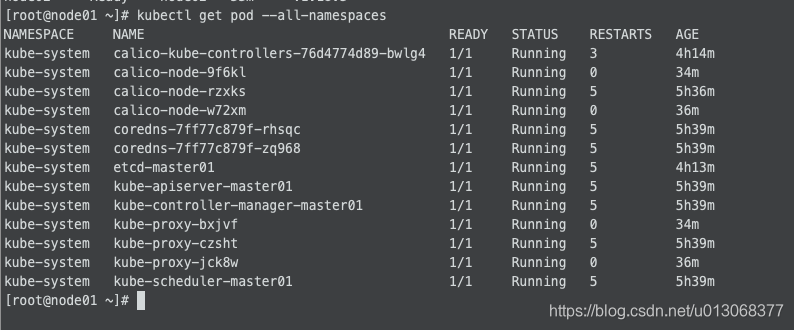

Wait a few minutes for the calico and coredns Pods to reach Running. The node then changes to Ready.
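Instead of checking by hand, you can use a small polling helper that reports which nodes are still not Ready. The sketch below assumes kubectl is configured as in 2.7. `not_ready_nodes` is a hypothetical name. It treats any STATUS other than exactly `Ready`, such as `Ready,SchedulingDisabled`, as not ready.

```shell
# Print the names of nodes whose STATUS is not exactly "Ready" and return
# non-zero while any remain. Parses `kubectl get nodes --no-headers`.
# Usage: not_ready_nodes
not_ready_nodes() {
  kubectl get nodes --no-headers |
    awk '$2 != "Ready" { print $1; bad = 1 } END { exit (bad ? 1 : 0) }'
}
```

`until not_ready_nodes; do sleep 10; done` returns once every node is Ready. kubectl also has a built-in equivalent: `kubectl wait --for=condition=Ready nodes --all --timeout=300s`.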

The master node is now installed.

3. Installing the worker nodes

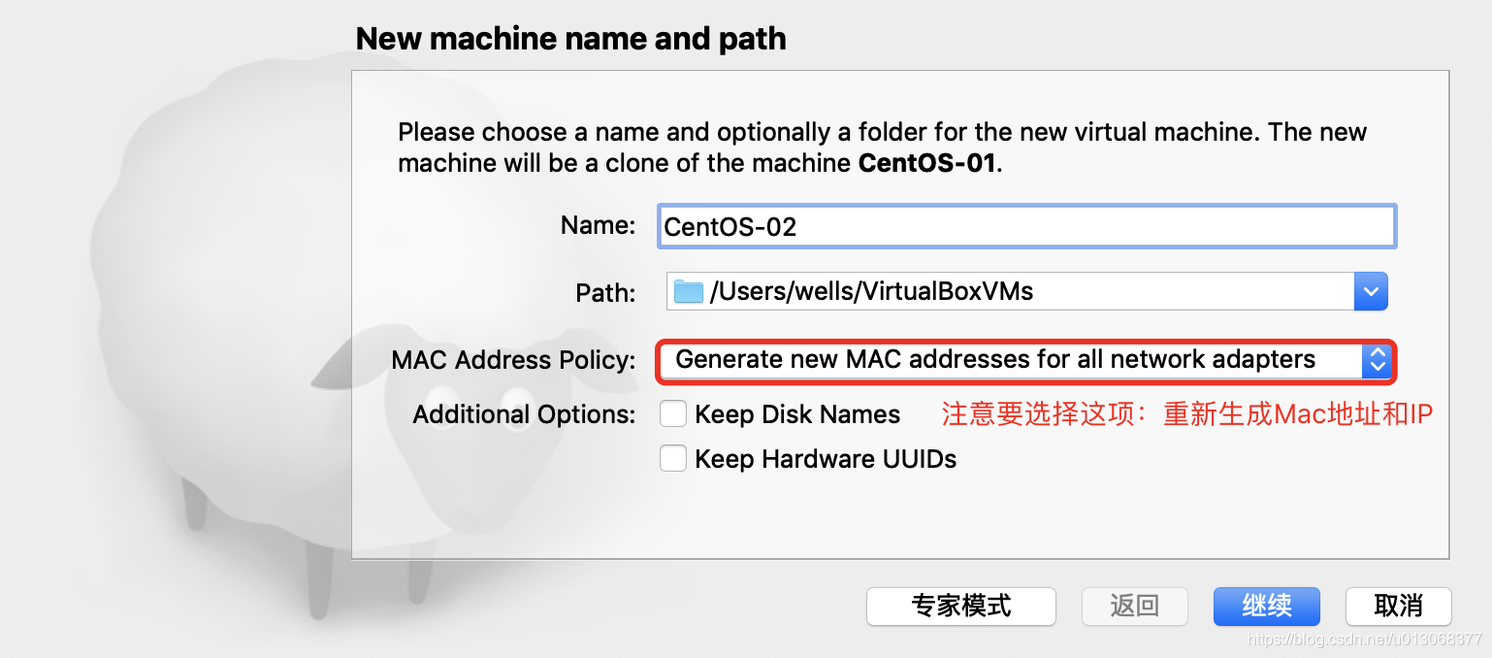

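3.1 Method 1: clone the master VM to create the worker nodes

3.1.1 Clone two server nodes from the Kubernetes master VM

Each clone needs its own IP address. kubeadm also requires a unique hostname, MAC address, and product_uuid on every node.

3.1.2 Join the nodes to the Kubernetes cluster

When kubeadm init ran in section 2, its output was saved to kubeadm-init-res.txt. The end of that file contains a command like this: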

kubeadm join 192.168.56.3:6443 --token oc52pv.cf3ho1y31xd9emi7 \
    --discovery-token-ca-cert-hash sha256:ff1f49f651cf59d5c60de9b254cff9118790ccff7bee75e9e68437a8f17bf951
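Join tokens expire after 24 hours by default. After that, running `kubeadm token create --print-join-command` on the master prints a fresh command. While the token is still valid, you can pull the command out of the saved file, as in the minimal sketch below. `join_command_from` is a hypothetical helper. It assumes the file contains only the worker join command. With `--control-plane-endpoint`, kubeadm also prints a control-plane join command, and the helper would return whichever command appears first.

```shell
# Rebuild the one-line "kubeadm join ..." command from saved kubeadm init
# output, joining backslash-continued lines and skipping blank lines.
# Usage: join_command_from FILE
join_command_from() {
  awk '
    /kubeadm join/ { grab = 1 }
    grab {
      line = $0
      cont = sub(/\\[[:space:]]*$/, "", line)   # 1 if a trailing backslash was removed
      gsub(/^[[:space:]]+|[[:space:]]+$/, "", line)
      if (line != "") out = (out == "" ? line : out " " line)
      if (!cont && line != "") { print out; exit }
    }' "$1"
}
```

`join_command_from kubeadm-init-res.txt` prints the command on a single line, ready to paste on node2 and node3.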

Run that command on each cloned node, for example:

[root@node01 ~]# kubeadm join 192.168.56.3:6443 --token oc52pv.cf3ho1y31xd9emi7 --discovery-token-ca-cert-hash sha256:ff1f49f651cf59d5c60de9b254cff9118790ccff7bee75e9e68437a8f17bf951

W0610 13:14:31.557179 11071 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane settings will be ignored when control-plane flag is not set.

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.18" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

You may get this error instead:

[root@node02 ~]# kubeadm join 192.168.56.3:6443 --token oc52pv.cf3ho1y31xd9emi7 --discovery-token-ca-cert-hash sha256:ff1f49f651cf59d5c60de9b254cff9118790ccff7bee75e9e68437a8f17bf951

W0610 13:15:32.765942 12138 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane settings will be ignored when control-plane flag is not set.

[preflight] Running pre-flight checks

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR DirAvailable--etc-kubernetes-manifests]: /etc/kubernetes/manifests is not empty

[ERROR FileAvailable--etc-kubernetes-kubelet.conf]: /etc/kubernetes/kubelet.conf already exists

[ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables contents are not set to 1

[ERROR Port-10250]: Port 10250 is in use

[ERROR FileAvailable--etc-kubernetes-pki-ca.crt]: /etc/kubernetes/pki/ca.crt already exists

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

To see the stack trace of this error execute with --v=5 or higher

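This happens because the node is a complete copy of the master's CentOS VM. These files and directories already exist, and the copied kubelet is already listening on port 10250. To fix it:

- Run kubeadm reset on the cloned node to remove the master state it inherited, then run kubeadm join again. The bridge-nf-call-iptables error needs a separate fix, described under Problem 2 in section 4.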
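You can wrap the cleanup for a cloned node as shown below. `reset_cloned_node` and its hostname argument are hypothetical. `kubeadm reset -f` skips the confirmation prompt. Removing ~/.kube discards the admin kubeconfig copied from the master, which a worker does not need. kubeadm reset does not clean up CNI configuration under /etc/cni/net.d or iptables rules, and its own output points this out.

```shell
# Clean up a worker VM cloned from the master before running kubeadm join.
# Usage: reset_cloned_node NEW_HOSTNAME
reset_cloned_node() {
  new_name=$1
  [ -n "$new_name" ] || { echo "usage: reset_cloned_node NEW_HOSTNAME" >&2; return 1; }
  # Each node needs its own hostname; the clone still has the master's.
  hostnamectl set-hostname "$new_name" || return 1
  # Remove the state kubeadm init created on the original master
  # (static Pod manifests, kubelet.conf, certificates, local etcd data).
  kubeadm reset -f || return 1
  # The admin kubeconfig copied from the master is not needed on a worker.
  rm -rf "$HOME/.kube"
}
```

Run `reset_cloned_node node01` on the first clone and use a different name on the second. Then run the kubeadm join command again.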

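3.2 Method 2: build the two worker nodes from fresh servers

Once the servers are ready, install Docker, kubectl, kubeadm, kubelet, and the other components. Follow section 2 up to, but not including, "2.7 Initialize the Kubernetes cluster". Then join each node to the cluster: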

[root@node01 ~]# kubeadm join 192.168.56.3:6443 --token oc52pv.cf3ho1y31xd9emi7 --discovery-token-ca-cert-hash sha256:ff1f49f651cf59d5c60de9b254cff9118790ccff7bee75e9e68437a8f17bf951

W0610 13:14:31.557179 11071 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane settings will be ignored when control-plane flag is not set.

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.18" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

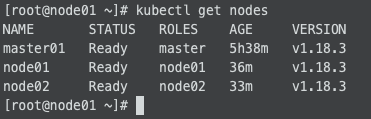

Installation result:

4. Problems encountered

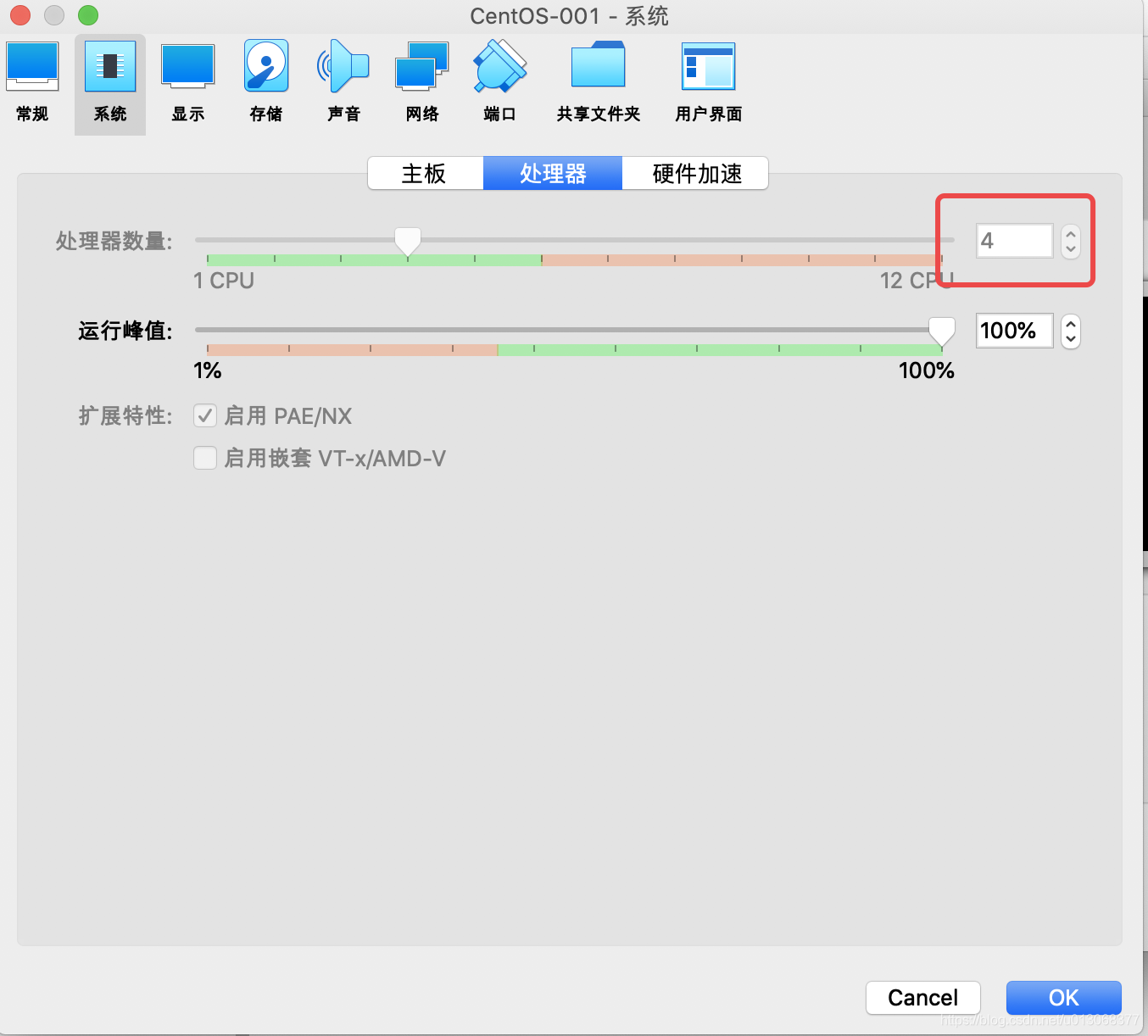

Problem 1: [ERROR NumCPU]: the number of available CPUs 1 is less than the required 2

Cause: the physical machine or VM does not meet Kubernetes's minimum requirements of at least 2 CPU cores and 2 GB of RAM. To fix it, increase the VM's processor count.
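A quick pre-check before kubeadm init can catch this earlier. In the sketch below, `check_node_resources` is a hypothetical helper. It enforces the 2-CPU minimum that triggers the NumCPU error. It only prints total memory, because the memory reported inside a VM is usually a little below what was assigned, so a strict 2 GB comparison could misfire.

```shell
# Fail if fewer than 2 CPUs are available (the NumCPU preflight limit) and
# print total memory from /proc/meminfo for a manual check.
# Usage: check_node_resources [CPUS]   (defaults to the output of nproc)
check_node_resources() {
  cpus=${1:-$(nproc)}
  mem_mb=$(awk '/^MemTotal:/ { printf "%d", $2 / 1024 }' /proc/meminfo 2>/dev/null)
  echo "CPUs: $cpus, memory: ${mem_mb:-unknown} MB"
  if [ "$cpus" -lt 2 ]; then
    echo "kubeadm init needs at least 2 CPUs; raise the VM's processor count" >&2
    return 1
  fi
}
```

Run it with no arguments on the VM before kubeadm init. A non-zero exit status means the processor count is still too low.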

Problem 2: kubeadm init reports that /proc/sys/net/bridge/bridge-nf-call-iptables contents are not set to 1

Solution:

$ echo 1 > /proc/sys/net/bridge/bridge-nf-call-iptables

$ echo 1 > /proc/sys/net/bridge/bridge-nf-call-ip6tables
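The echo commands above only last until the next reboot. The files under /proc/sys/net/bridge also exist only while the br_netfilter kernel module is loaded. The kubeadm installation guide persists both settings with drop-in files, and the sketch below follows that approach. `persist_bridge_sysctls` is a hypothetical helper name, and k8s.conf is an arbitrary file name.

```shell
# Persist the bridge netfilter settings: load br_netfilter at boot and set
# both sysctls from a drop-in file.
# Usage: persist_bridge_sysctls [MODULES_DIR] [SYSCTL_DIR]
persist_bridge_sysctls() {
  modules_dir=${1:-/etc/modules-load.d}
  sysctl_dir=${2:-/etc/sysctl.d}
  # systemd-modules-load reads one module name per line from this directory.
  printf 'br_netfilter\n' > "$modules_dir/k8s.conf"
  cat > "$sysctl_dir/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}
```

On each node, run `modprobe br_netfilter`, then `persist_bridge_sysctls`, then `sysctl --system` to apply the settings without rebooting.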

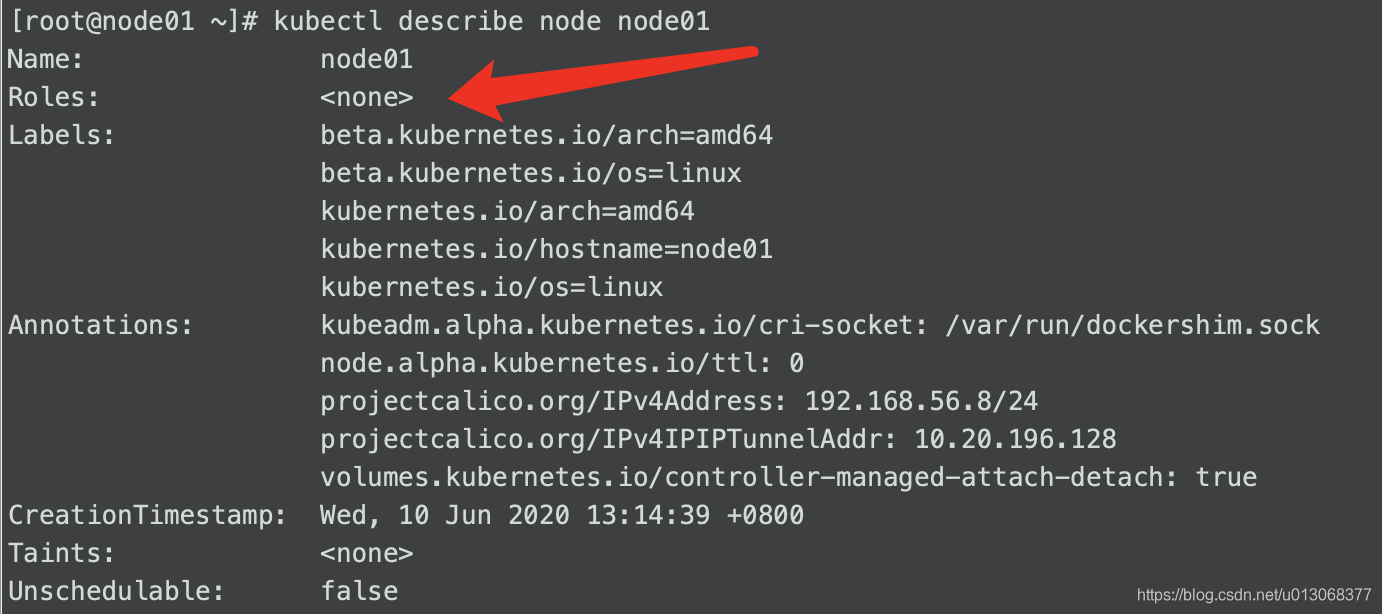

问题三:kubectl get node 发现标签为 none,kubernetes修改node的role标签

查看节点标签描述:

kubectl describe node 节点名字

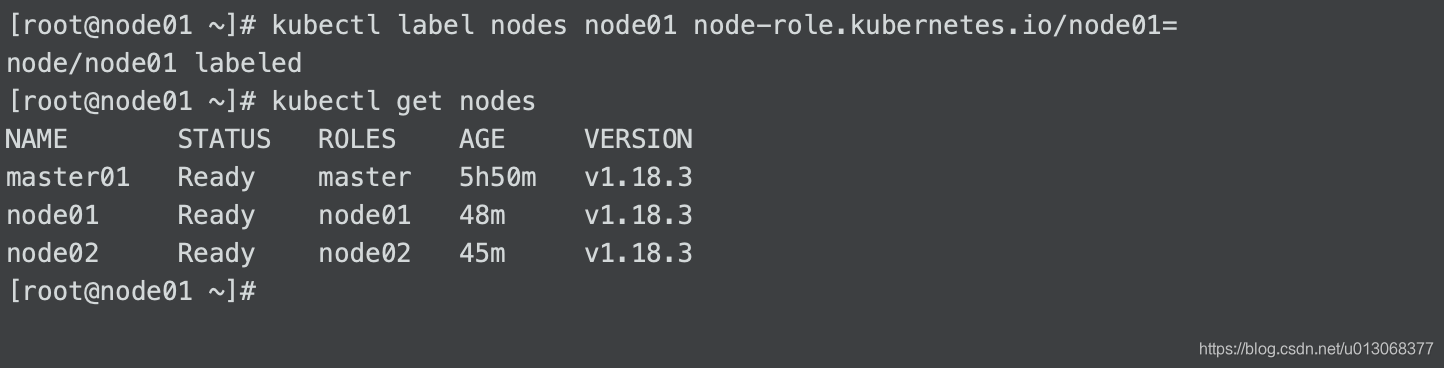

如果需要给 node 增加标签,则要执行:

kubectl label nodes 节点名字 node-role.kubernetes.io/标签名字=

如果要去掉 node 标签,需要执行命令

kubectl label node 节点名字 node-role.kubernetes.io/标签名字-

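For this cluster, the sketch below gives both workers the `worker` role. `label_workers` is hypothetical. The node names node01 and node02 are the hostnames that appear in the join output earlier, so substitute your own. `--overwrite` lets you re-run the command without an error.

```shell
# Add the "worker" role label to each node given, so the ROLES column shows
# "worker" instead of <none>. Stops at the first kubectl failure.
# Usage: label_workers NODE...
label_workers() {
  for node in "$@"; do
    kubectl label node "$node" node-role.kubernetes.io/worker= --overwrite || return 1
  done
}
```

Run `label_workers node01 node02` on the master, then run `kubectl get node` to see the new roles.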

5. References

https://www.tecmint.com/install-kubernetes-cluster-on-centos-7/

https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm/

https://www.jianshu.com/p/e43f5e848da1

https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm-config/