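This article walks through the design and implementation of the Hadoop RPC Server step by step, using ProtobufRpcEngine as the running example.

RPC hides the details of network programming, so calling a remote method feels the same as calling a local one (a method in the same project). The caller does not have to write large amounts of code unrelated to the business logic just because the method runs remotely.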

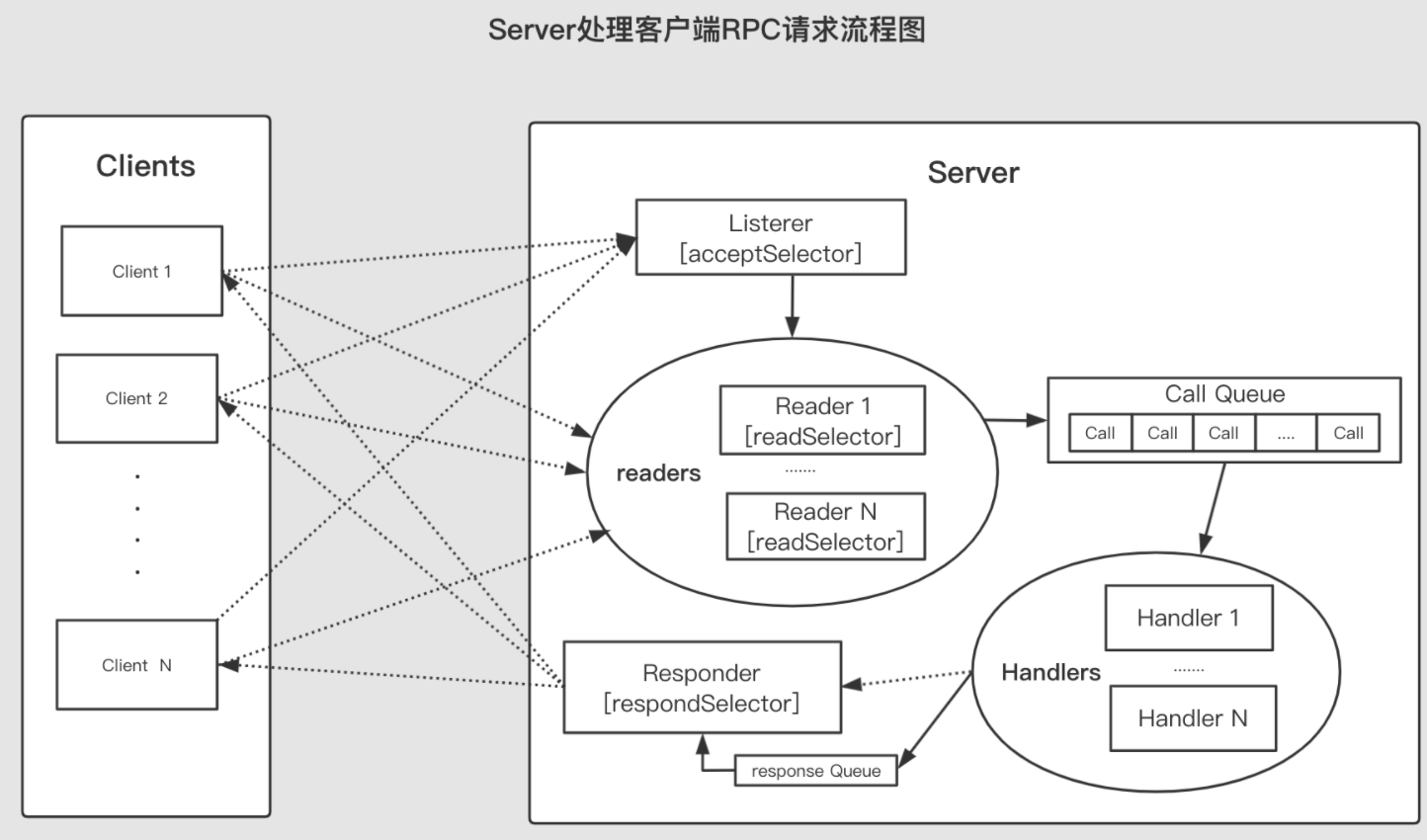

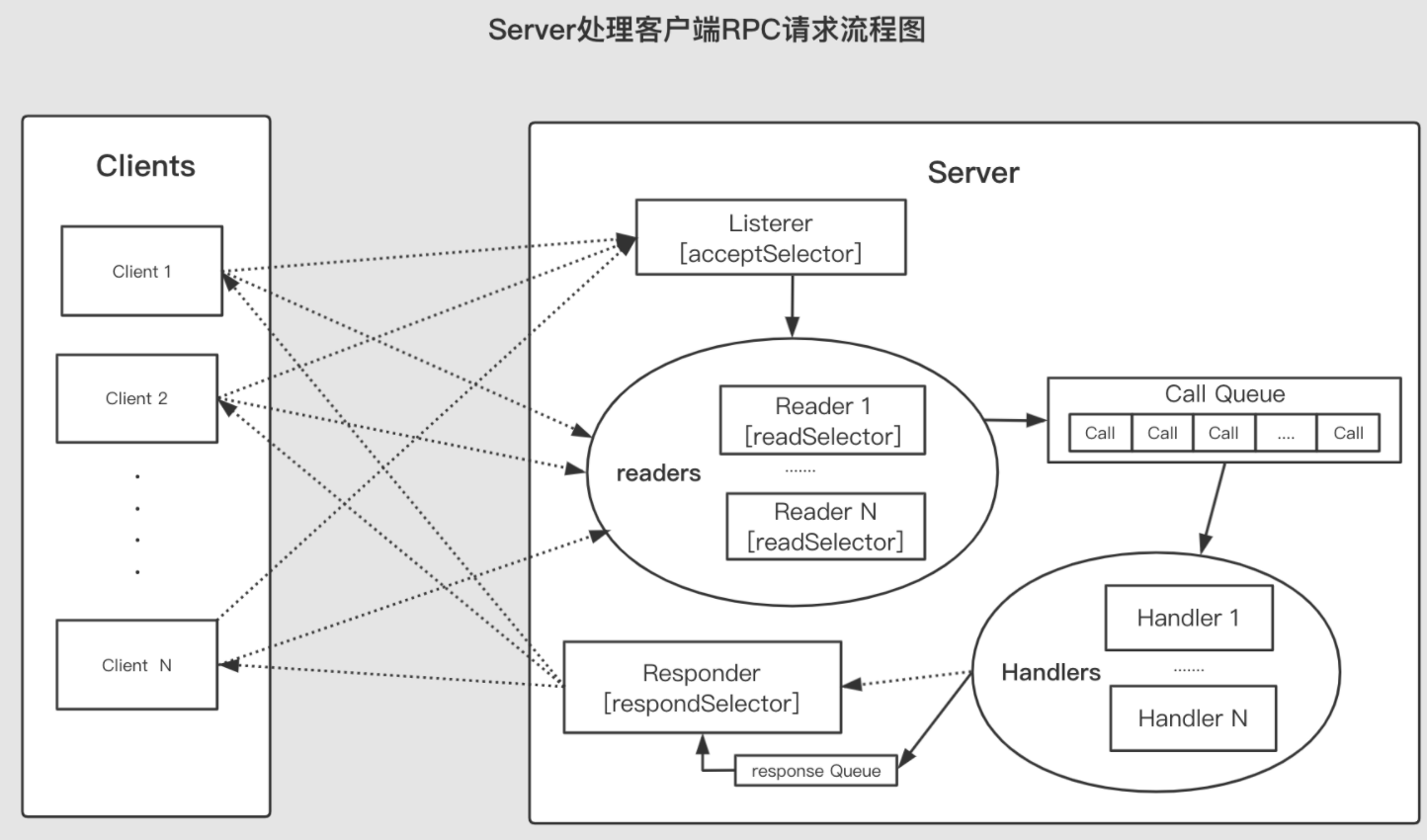

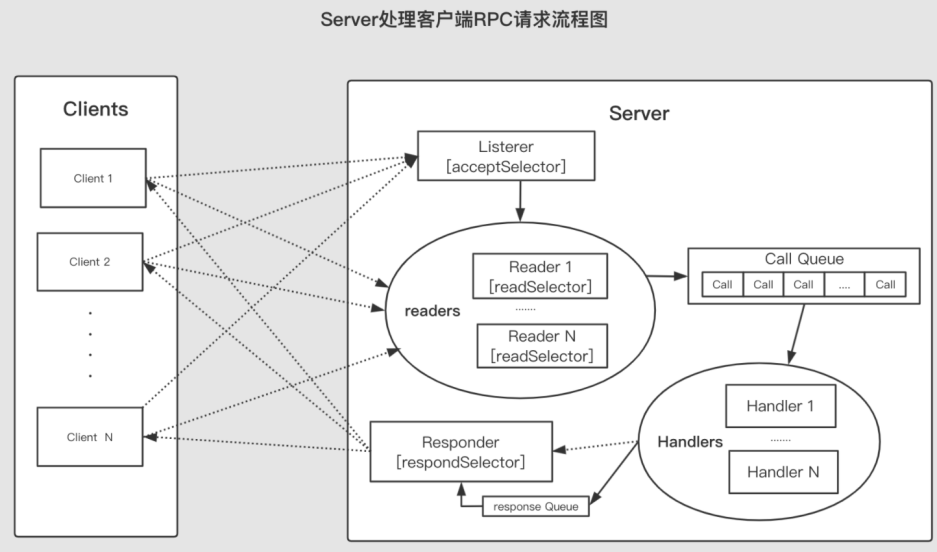

Server-side architecture

The Server side uses a Reactor architecture.

The Listener listens for connections. When a request comes in, it picks a Reader from the reader pool to read it.

The Reader reads the Client's request and puts the data it read, wrapped as a Call, into the queue callQueue.

A Handler takes Calls from callQueue, processes them, and hands the result to the Responder.

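The Server handles a request as follows:

The whole Server has exactly one Listener thread. The Selector held by the Listener, acceptSelector, listens for socket connection requests from clients. acceptSelector registers OP_ACCEPT on the ServerSocketChannel and waits until getConnection inside the client's Client.call() triggers that event and wakes the Listener thread, which then accepts a new SocketChannel. The Listener picks one thread from the readers pool (the pool itself is created in the Listener constructor, not per connection), and OP_READ is registered on that Reader's readSelector.

readSelector listens for OP_READ. When the client sends an RPC request, readSelector wakes the Reader thread. The Reader reads the data from the SocketChannel, wraps it in a Call object, and puts it into the shared queue callQueue.

Initially every thread in the handlers pool is blocked on callQueue (BlockingQueue.take()). When a Call object is enqueued, one Handler thread wakes up. Based on the information in the Call, it invokes Server.call() (the counterpart of Client.call()), which deserializes the request and executes the local method the RPC maps to, then writes the response back to the SocketChannel.

The Responder thread acts as a buffer. When there are many responses or the network is slow, a Handler may be unable to write the complete response to the client. In that case it registers OP_WRITE on the Responder's respondSelector, and once the channel becomes writable the Responder wakes up and sends the rest of the response.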
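The hand-off between Reader, callQueue and Handler described above can be sketched with plain JDK classes. This is a minimal illustration rather than Hadoop code: the class ReactorPipelineSketch, the string-based calls and the upper-casing that stands in for the real method invocation are all hypothetical.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;

public class ReactorPipelineSketch {
    /**
     * Pushes each request through a bounded queue to a pool of handler threads
     * and returns the responses in completion order (not request order).
     */
    public static List<String> process(List<String> requests, int handlerCount)
            throws InterruptedException {
        BlockingQueue<String> callQueue = new LinkedBlockingQueue<>(100);
        List<String> responses = new CopyOnWriteArrayList<>();
        CountDownLatch done = new CountDownLatch(requests.size());

        // Handlers block on take() until the "reader" enqueues a call.
        for (int i = 0; i < handlerCount; i++) {
            Thread handler = new Thread(() -> {
                try {
                    while (true) {
                        String call = callQueue.take();
                        responses.add(call.toUpperCase()); // stand-in for the real method call
                        done.countDown();
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt(); // exit quietly
                }
            }, "handler-" + i);
            handler.setDaemon(true);
            handler.start();
        }

        // The calling thread plays the Reader: it only enqueues, it never executes calls.
        for (String request : requests) {
            callQueue.put(request);
        }
        done.await();
        return responses;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(process(Arrays.asList("getMeta", "listFiles", "ping"), 2));
    }
}
```

Separating the thread that reads from the threads that execute is the point of the design: a slow call occupies one handler, while the reader stays free to keep draining the network.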

Creating the Server

In the code below the protocol is defined with proto, so the resulting RpcEngine is ProtobufRpcEngine.

```java
public static void main(String[] args) throws Exception {
  // 1. Build the configuration object
  Configuration conf = new Configuration();

  // 2. Create the protocol implementation instance
  MetaInfoServer serverImpl = new MetaInfoServer();
  BlockingService blockingService =
      CustomProtos.MetaInfo.newReflectiveBlockingService(serverImpl);

  // 3. Set ProtobufRpcEngine as the RpcEngine for the protocol
  RPC.setProtocolEngine(conf, MetaInfoProtocol.class, ProtobufRpcEngine.class);

  // 4. Create the RPC builder
  RPC.Builder builder = new RPC.Builder(conf);
  // 5. Bind the address
  builder.setBindAddress("localhost");
  // 6. Bind the port
  builder.setPort(7777);
  // 7. Bind the protocol
  builder.setProtocol(MetaInfoProtocol.class);
  // 8. Bind the protocol implementation
  builder.setInstance(blockingService);
  // 9. Create the server
  RPC.Server server = builder.build();
  // 10. Start the server
  server.start();
}
```

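The code has three parts:

1. Define the interface and its implementation.
2. Set the service parameters, such as the RpcEngine type used by the protocol and the IP and port the Server is published on, and bind the protocol and its implementation object.
3. Build the RpcEngine according to the parameters set in step 2 and start the service.

Parts one and two simply define a protocol with proto and bind it to the RPC.Builder. The core is: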

RPC.Server server = builder.build(); // 创建服务

Let's look at how build() obtains the RpcEngine and creates the Server.

```java
/**
 * Build the RPC Server.
 * @throws IOException on error
 * @throws HadoopIllegalArgumentException when mandatory fields are not set
 */
public Server build() throws IOException, HadoopIllegalArgumentException {
  if (this.conf == null) {
    throw new HadoopIllegalArgumentException("conf is not set");
  }
  if (this.protocol == null) {
    throw new HadoopIllegalArgumentException("protocol is not set");
  }
  if (this.instance == null) {
    throw new HadoopIllegalArgumentException("instance is not set");
  }
  // getProtocolEngine() returns the RpcEngine configured for this RPC class.
  // NameNodeRpcServer's constructor has already set that engine to ProtobufRpcEngine.
  // With the ProtobufRpcEngine in hand, build() calls getServer() on it
  // to obtain a reference to an RPC Server object.
  return getProtocolEngine(this.protocol, this.conf).getServer(
      this.protocol, this.instance, this.bindAddress, this.port,
      this.numHandlers, this.numReaders, this.queueSizePerHandler,
      this.verbose, this.conf, this.secretManager, this.portRangeConfig,
      this.alignmentContext);
}
```

Two methods matter here: getProtocolEngine and getServer.

The order is: first obtain the RpcEngine for the protocol, then use that RpcEngine to create a Server.

Start with getProtocolEngine:

```java
// return the RpcEngine configured to handle a protocol
static synchronized RpcEngine getProtocolEngine(Class<?> protocol,
    Configuration conf) {
  // Look up the RpcEngine in the cache. The engine class was configured earlier via
  // RPC.setProtocolEngine(conf, MetaInfoProtocol.class, ProtobufRpcEngine.class);
  RpcEngine engine = PROTOCOL_ENGINES.get(protocol);
  if (engine == null) {
    // Resolve the RpcEngine implementation class; here it is ProtobufRpcEngine.class
    Class<?> impl = conf.getClass(ENGINE_PROP + "." + protocol.getName(),
        WritableRpcEngine.class);
    // impl : org.apache.hadoop.ipc.ProtobufRpcEngine
    engine = (RpcEngine) ReflectionUtils.newInstance(impl, conf);
    PROTOCOL_ENGINES.put(protocol, engine);
  }
  return engine;
}
```

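The method first looks in the cache:

RpcEngine engine = PROTOCOL_ENGINES.get(protocol);

On a cache miss it reads the engine class configured for the protocol from conf (falling back to WritableRpcEngine when nothing is configured) and instantiates it reflectively:

engine = (RpcEngine)ReflectionUtils.newInstance(impl, conf);

The new instance is cached for later calls. In this example the RpcEngine instance we end up with is a ProtobufRpcEngine.

With the ProtobufRpcEngine in hand, build() calls its getServer method to obtain the Server instance.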
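The lookup-then-instantiate pattern in getProtocolEngine can be reproduced with the JDK alone. The following is a minimal sketch under stated assumptions: Engine, DefaultEngine, ProtoEngine and the Map-based configuration are hypothetical stand-ins for RpcEngine, WritableRpcEngine, ProtobufRpcEngine and Configuration, not the Hadoop classes themselves.

```java
import java.util.HashMap;
import java.util.Map;

public class EngineCacheSketch {
    /** Hypothetical stand-in for RpcEngine. */
    public interface Engine { }
    /** Fallback engine, used when nothing is configured for a protocol. */
    public static class DefaultEngine implements Engine { }
    /** Engine a caller can opt into through configuration. */
    public static class ProtoEngine implements Engine { }

    private static final String ENGINE_PROP = "rpc.engine";
    private static final Map<Class<?>, Engine> ENGINES = new HashMap<>();

    /** Records the engine class name under "rpc.engine.<protocol class name>". */
    public static void setEngine(Map<String, String> conf, Class<?> protocol,
                                 Class<? extends Engine> engine) {
        conf.put(ENGINE_PROP + "." + protocol.getName(), engine.getName());
    }

    /** Returns the cached engine, creating it reflectively on first use. */
    public static synchronized Engine getEngine(Class<?> protocol, Map<String, String> conf)
            throws ReflectiveOperationException {
        Engine engine = ENGINES.get(protocol);
        if (engine == null) {
            String implName = conf.getOrDefault(
                    ENGINE_PROP + "." + protocol.getName(), DefaultEngine.class.getName());
            engine = (Engine) Class.forName(implName).getDeclaredConstructor().newInstance();
            ENGINES.put(protocol, engine);
        }
        return engine;
    }

    public static void main(String[] args) throws Exception {
        Map<String, String> conf = new HashMap<>();
        setEngine(conf, Runnable.class, ProtoEngine.class);
        Engine first = getEngine(Runnable.class, conf);
        Engine second = getEngine(Runnable.class, conf);
        // prints "ProtoEngine, cached: true"
        System.out.println(first.getClass().getSimpleName() + ", cached: " + (first == second));
    }
}
```

Because the cache is keyed by protocol class, changing the configuration after the first lookup has no effect on that protocol, which is why the engine has to be set before build() is called.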

```java
@Override
public RPC.Server getServer(Class<?> protocol, Object protocolImpl,
    String bindAddress, int port, int numHandlers, int numReaders,
    int queueSizePerHandler, boolean verbose, Configuration conf,
    SecretManager<? extends TokenIdentifier> secretManager,
    String portRangeConfig, AlignmentContext alignmentContext)
    throws IOException {
  return new Server(protocol, protocolImpl, conf, bindAddress, port,
      numHandlers, numReaders, queueSizePerHandler, verbose, secretManager,
      portRangeConfig, alignmentContext);
}
```

Throughout this flow, getServer simply calls the Server constructor to create the Server.

- protocolClass: the class of the protocol
- protocolImpl: the protocol implementation
- conf: the configuration
- bindAddress: the IP address the Server binds to
- port: the port the Server binds to
- numHandlers: the number of handler threads, default 1
- verbose: whether every request should be logged
- portRangeConfig: A config parameter that can be used to restrict
- alignmentContext: provides server state info on client responses

The Server constructor first calls the parent class constructor, then calls registerProtocolAndImpl to register the interface class together with its implementation.

```java
/**
 * Construct an RPC server.
 *
 * @param protocolClass the class of protocol
 * @param protocolImpl the protocolImpl whose methods will be called
 * @param conf the configuration to use
 * @param bindAddress the address to bind on to listen for connection
 * @param port the port to listen for connections on
 * @param numHandlers the number of method handler threads to run
 * @param verbose whether each call should be logged
 * @param portRangeConfig A config parameter that can be used to restrict
 * @param alignmentContext provides server state info on client responses
 */
public Server(Class<?> protocolClass, Object protocolImpl,
    Configuration conf, String bindAddress, int port, int numHandlers,
    int numReaders, int queueSizePerHandler, boolean verbose,
    SecretManager<? extends TokenIdentifier> secretManager,
    String portRangeConfig, AlignmentContext alignmentContext)
    throws IOException {
  super(bindAddress, port, null, numHandlers,
      numReaders, queueSizePerHandler, conf,
      serverNameFromClass(protocolImpl.getClass()), secretManager,
      portRangeConfig);
  setAlignmentContext(alignmentContext);
  this.verbose = verbose;
  // Call registerProtocolAndImpl() to register the mapping between
  // the interface class protocolClass and the implementation protocolImpl
  registerProtocolAndImpl(RPC.RpcKind.RPC_PROTOCOL_BUFFER, protocolClass,
      protocolImpl);
}
```

The important part is the call to the parent constructor, so let's see what it does.

RPC.Server

```java
protected Server(String bindAddress, int port,
    Class<? extends Writable> paramClass, int handlerCount,
    int numReaders, int queueSizePerHandler,
    Configuration conf, String serverName,
    SecretManager<? extends TokenIdentifier> secretManager,
    String portRangeConfig) throws IOException {
  // Call the parent class to initialize the Server
  super(bindAddress, port, paramClass, handlerCount, numReaders,
      queueSizePerHandler, conf, serverName, secretManager, portRangeConfig);
  // Register the protocol for metadata queries and the RpcEngine that serves it
  initProtocolMetaInfo(conf);
}
```

There are two steps here. First, this Server keeps delegating to the constructor of its parent class [org.apache.hadoop.ipc.Server]. Second, it registers the metadata protocol and its implementation.

Again, the parent constructor [org.apache.hadoop.ipc.Server] is the only part we need to read.

org.apache.hadoop.ipc.Server

This is where the Server is actually built.

```java
protected Server(String bindAddress, int port,
    Class<? extends Writable> rpcRequestClass, int handlerCount,
    int numReaders, int queueSizePerHandler, Configuration conf,
    String serverName, SecretManager<? extends TokenIdentifier> secretManager,
    String portRangeConfig)
    throws IOException {
  // Bind address (required)
  this.bindAddress = bindAddress;
  // Configuration
  this.conf = conf;
  this.portRangeConfig = portRangeConfig;
  // Bind port (required)
  this.port = port;
  // Expected to be null here
  this.rpcRequestClass = rpcRequestClass;
  // Number of handler threads
  this.handlerCount = handlerCount;
  this.socketSendBufferSize = 0;
  // Server name
  this.serverName = serverName;
  this.auxiliaryListenerMap = null;
  // Maximum request length the server accepts
  // ipc.maximum.data.length, default: 64 * 1024 * 1024 = 64 MB
  this.maxDataLength = conf.getInt(
      CommonConfigurationKeys.IPC_MAXIMUM_DATA_LENGTH,
      CommonConfigurationKeys.IPC_MAXIMUM_DATA_LENGTH_DEFAULT);
  // queueSizePerHandler defaults to -1. In that case the capacity is
  // handler threads * per-handler queue size = 1 * 100 = 100
  if (queueSizePerHandler != -1) {
    // Explicit value: handler threads * queueSizePerHandler
    this.maxQueueSize = handlerCount * queueSizePerHandler;
  } else {
    // Default: the per-handler queue size is 100,
    // so the capacity is handler threads * 100
    this.maxQueueSize = handlerCount * conf.getInt(
        CommonConfigurationKeys.IPC_SERVER_HANDLER_QUEUE_SIZE_KEY,
        CommonConfigurationKeys.IPC_SERVER_HANDLER_QUEUE_SIZE_DEFAULT);
  }
  // A response larger than 1024 * 1024 = 1 MB produces a WARN-level log entry
  this.maxRespSize = conf.getInt(
      CommonConfigurationKeys.IPC_SERVER_RPC_MAX_RESPONSE_SIZE_KEY,
      CommonConfigurationKeys.IPC_SERVER_RPC_MAX_RESPONSE_SIZE_DEFAULT);
  // Number of reader threads, default 1
  if (numReaders != -1) {
    this.readThreads = numReaders;
  } else {
    this.readThreads = conf.getInt(
        CommonConfigurationKeys.IPC_SERVER_RPC_READ_THREADS_KEY,
        CommonConfigurationKeys.IPC_SERVER_RPC_READ_THREADS_DEFAULT);
  }
  // Length of each reader's pending-connection queue, default 100
  this.readerPendingConnectionQueue = conf.getInt(
      CommonConfigurationKeys.IPC_SERVER_RPC_READ_CONNECTION_QUEUE_SIZE_KEY,
      CommonConfigurationKeys.IPC_SERVER_RPC_READ_CONNECTION_QUEUE_SIZE_DEFAULT);

  // Setup appropriate callqueue
  final String prefix = getQueueClassPrefix();
  // callQueue: a reader puts each call read from a client here,
  // where it waits for a handler
  // Queue type: LinkedBlockingQueue<Call>; default scheduler: DefaultRpcScheduler
  this.callQueue = new CallQueueManager<Call>(getQueueClass(prefix, conf),
      getSchedulerClass(prefix, conf),
      getClientBackoffEnable(prefix, conf), maxQueueSize, prefix, conf);
  // Security
  this.secretManager = (SecretManager<TokenIdentifier>) secretManager;
  this.authorize = conf.getBoolean(
      CommonConfigurationKeys.HADOOP_SECURITY_AUTHORIZATION, false);
  // configure supported authentications
  this.enabledAuthMethods = getAuthMethods(secretManager, conf);
  this.negotiateResponse = buildNegotiateResponse(enabledAuthMethods);

  // Start the listener here and let it bind to the port
  // Create the Listener and bind the listening port;
  // every client request enters through it
  listener = new Listener(port);
  // set the server port to the default listener port.
  this.port = listener.getAddress().getPort();
  connectionManager = new ConnectionManager();
  this.rpcMetrics = RpcMetrics.create(this, conf);
  this.rpcDetailedMetrics = RpcDetailedMetrics.create(this.port);
  // Turns Nagle's algorithm on or off for the server's TCP sockets, default true.
  // true disables the algorithm, which may lower latency
  // at the cost of more, smaller packets
  this.tcpNoDelay = conf.getBoolean(
      CommonConfigurationKeysPublic.IPC_SERVER_TCPNODELAY_KEY,
      CommonConfigurationKeysPublic.IPC_SERVER_TCPNODELAY_DEFAULT);
  // Log an RPC that is slow compared with other RPCs, default false
  this.setLogSlowRPC(conf.getBoolean(
      CommonConfigurationKeysPublic.IPC_SERVER_LOG_SLOW_RPC,
      CommonConfigurationKeysPublic.IPC_SERVER_LOG_SLOW_RPC_DEFAULT));

  // Create the responder here
  responder = new Responder();
  // Security
  if (secretManager != null || UserGroupInformation.isSecurityEnabled()) {
    SaslRpcServer.init(conf);
    saslPropsResolver = SaslPropertiesResolver.getInstance(conf);
  }
  // Set up handling of StandbyException
  this.exceptionsHandler.addTerseLoggingExceptions(StandbyException.class);
}
```
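The capacity rule for callQueue in the constructor comes down to one multiplication. The small sketch below works it through; QueueSizeSketch is a hypothetical helper, and the per-handler default of 100 is taken from the comment in the constructor above rather than read from a real Configuration.

```java
public class QueueSizeSketch {
    /** Assumed default per-handler queue size, as stated in the constructor comment. */
    static final int DEFAULT_QUEUE_SIZE_PER_HANDLER = 100;

    /** Mirrors the branch in the constructor: -1 means "use the configured default". */
    public static int maxQueueSize(int handlerCount, int queueSizePerHandler) {
        int perHandler = (queueSizePerHandler != -1)
                ? queueSizePerHandler
                : DEFAULT_QUEUE_SIZE_PER_HANDLER;
        return handlerCount * perHandler;
    }

    public static void main(String[] args) {
        System.out.println(maxQueueSize(1, -1));   // 1 handler, default size   -> 100
        System.out.println(maxQueueSize(10, -1));  // 10 handlers, default size -> 1000
        System.out.println(maxQueueSize(10, 50));  // 10 handlers, 50 each      -> 500
    }
}
```

The queue is shared by all handlers, so raising the handler count also raises the number of calls that may wait in memory.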

Once the Server has been built, the caller invokes

server.start();

The components start in this order: the Responder, the Listener (plus any auxiliary listeners), then the Handler threads.

```java
public synchronized void start() {
  responder.start();
  listener.start();
  if (auxiliaryListenerMap != null && auxiliaryListenerMap.size() > 0) {
    for (Listener newListener : auxiliaryListenerMap.values()) {
      newListener.start();
    }
  }
  handlers = new Handler[handlerCount];
  for (int i = 0; i < handlerCount; i++) {
    handlers[i] = new Handler(i);
    handlers[i].start();
  }
}
```

Server components

The Server contains several components that work together to provide the server side of RPC.

The key components are Listener, Reader, callQueue, Handler, ConnectionManager and Responder.

Listener

Listener is a thread class. The whole Server has only one Listener thread, which listens for socket connection requests from clients. For every new connection request, the Listener chooses one Reader thread from the readers pool to handle it.

The Listener holds a Selector, acceptSelector (the selector field in the code below), that listens for connection requests. When acceptSelector sees a request, the Listener initializes the connection and then picks a Reader thread from the readers pool in round-robin fashion to read RPC requests from it.

Construction

```java
// Start the listener here and let it bind to the port
// Create the Listener and bind the listening port;
// every client request enters through it
listener = new Listener(port);
```

Fields

The fields exist to set up a non-blocking socket.

```java
private class Listener extends Thread {
  // Channel that accepts connections; this is a non-blocking socket
  private ServerSocketChannel acceptChannel = null; //the accept channel
  // Selector used to monitor the channel
  private Selector selector = null; //the selector that we use for the server
  // Pool of Readers that read from clients
  private Reader[] readers = null;
  private int currentReader = 0;
  // Socket address object
  private InetSocketAddress address; //the address we bind at
  // Listening port
  private int listenPort; //the port we bind at
  // Length of the listen queue, default 128
  private int backlogLength = conf.getInt(
      CommonConfigurationKeysPublic.IPC_SERVER_LISTEN_QUEUE_SIZE_KEY,
      CommonConfigurationKeysPublic.IPC_SERVER_LISTEN_QUEUE_SIZE_DEFAULT);
  ...........
}
```

Constructor

The constructor initializes the Listener: from the IP and port it creates a non-blocking socket and registers SelectionKey.OP_ACCEPT on the Selector.

It also creates as many Readers as readThreads specifies.

```java
Listener(int port) throws IOException {
  // Create the InetSocketAddress instance
  address = new InetSocketAddress(bindAddress, port);
  // Create a new server socket and set to non blocking mode
  acceptChannel = ServerSocketChannel.open();
  acceptChannel.configureBlocking(false);

  // Bind the server socket to the local host and port
  bind(acceptChannel.socket(), address, backlogLength, conf, portRangeConfig);
  // Could be an ephemeral port
  this.listenPort = acceptChannel.socket().getLocalPort();
  // Name the current thread
  Thread.currentThread().setName("Listener at " + bindAddress + "/" + this.listenPort);
  // create a selector;
  selector = Selector.open();

  // Create the Readers
  readers = new Reader[readThreads];
  for (int i = 0; i < readThreads; i++) {
    Reader reader = new Reader(
        "Socket Reader #" + (i + 1) + " for port " + port);
    readers[i] = reader;
    reader.start();
  }

  // Register accepts on the server socket with the selector.
  acceptChannel.register(selector, SelectionKey.OP_ACCEPT);
  // Name this thread
  this.setName("IPC Server listener on " + port);
  // Run as a daemon thread
  this.setDaemon(true);
}
```

#### run

The Listener class defines a Selector that listens for `SelectionKey.OP_ACCEPT`. The run() method of the Listener thread loops, checking whether an OP_ACCEPT event has fired, that is, whether a new socket connection request has arrived. If so, it calls doAccept() to respond.

```java
@Override
public void run() {
  LOG.info(Thread.currentThread().getName() + ": starting");
  SERVER.set(Server.this);
  // Start the periodic scan that closes timed-out or invalid connections
  connectionManager.startIdleScan();
  while (running) {
    SelectionKey key = null;
    try {
      // Blocks while no request comes in
      getSelector().select();
      // Iterate over the selected keys looking for new connection requests
      Iterator<SelectionKey> iter = getSelector().selectedKeys().iterator();
      while (iter.hasNext()) {
        key = iter.next();
        iter.remove();
        try {
          if (key.isValid()) {
            if (key.isAcceptable()) {
              // A connection request arrived: respond in doAccept()
              doAccept(key);
            }
          }
        } catch (IOException e) {
        }
        key = null;
      }
    } catch (OutOfMemoryError e) {
      // Running out of memory is possible here and deserves attention:
      // close the current connection and sleep for 60 seconds
      LOG.warn("Out of Memory in server select", e);
      closeCurrentConnection(key, e);
      connectionManager.closeIdle(true);
      try { Thread.sleep(60000); } catch (Exception ie) {}
    } catch (Exception e) {
      // Any other exception also closes the current connection
      closeCurrentConnection(key, e);
    }
  }
  LOG.info("Stopping " + Thread.currentThread().getName());
  // Close the channel and selector and stop everything
  synchronized (this) {
    try {
      acceptChannel.close();
      selector.close();
    } catch (IOException e) { }
    selector = null;
    acceptChannel = null;
    // close all connections
    connectionManager.stopIdleScan();
    connectionManager.closeAll();
  }
}
```

doAccept(key)

doAccept() accepts the socket connection request from the client and initializes the connection. It then chooses one Reader thread from the readers pool to read the RPC requests sent by this client. Every Reader thread has its own readSelector, which listens for newly arriving RPC requests.

After establishing the connection and choosing a Reader, doAccept() hands the newly built Connection object to that Reader through addConnection(). The Reader then registers OP_READ on its readSelector with the Connection as the key's attachment. The Connection class wraps the socket connection between Server and Client, so when the Reader thread is woken it can read the RPC request through the Connection object.

```java
void doAccept(SelectionKey key) throws InterruptedException, IOException,
    OutOfMemoryError {
  // Accept the request and establish the connection
  ServerSocketChannel server = (ServerSocketChannel) key.channel();
  SocketChannel channel;
  while ((channel = server.accept()) != null) {
    channel.configureBlocking(false);
    channel.socket().setTcpNoDelay(tcpNoDelay);
    channel.socket().setKeepAlive(true);

    // Pick a reader using the % (modulo) operator
    Reader reader = getReader();
    // Build the Connection object that will be handed to the Reader
    Connection c = connectionManager.register(channel, this.listenPort);
    // If connectionManager cannot provide a Connection, close this channel
    if (c == null) {
      if (channel.isOpen()) {
        IOUtils.cleanup(null, channel);
      }
      connectionManager.droppedConnections.getAndIncrement();
      continue;
    }
    // so closeCurrentConnection can get the object
    key.attach(c);
    // Queue the connection on the reader, which will read its data
    reader.addConnection(c);
  }
}
```
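The body of getReader() is not shown in this article. The sketch below assumes the modulo-based rotation that the comment in doAccept() describes; RoundRobinSketch and its string "readers" are hypothetical stand-ins for the Listener's Reader array.

```java
public class RoundRobinSketch {
    private final String[] readers;
    private int current = 0;

    public RoundRobinSketch(int readerCount) {
        readers = new String[readerCount];
        for (int i = 0; i < readerCount; i++) {
            readers[i] = "Socket Reader #" + (i + 1);
        }
    }

    /** Advances the cursor first, then returns the reader it points at. */
    public String next() {
        current = (current + 1) % readers.length;
        return readers[current];
    }

    public static void main(String[] args) {
        RoundRobinSketch pool = new RoundRobinSketch(3);
        for (int i = 0; i < 4; i++) {
            System.out.println(pool.next()); // #2, #3, #1, #2
        }
    }
}
```

No locking is needed in this sketch because, as in the Server, only the single Listener thread would ever call next().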

Reader

Reader is also a thread class. Each Reader thread reads the RPC requests sent over several client connections. The Server has multiple Reader threads that form the readers pool, and the pool reads RPC requests concurrently, which raises the rate at which the Server can take in requests. The Reader class defines its own readSelector field to listen for SelectionKey.OP_READ events. It also defines an adding field that marks whether a connection is currently being added to the Reader thread.

Creation

Readers are created in the Listener constructor. Reader extends Thread, so it is a thread class.

```java
// Create the Readers
readers = new Reader[readThreads];
for (int i = 0; i < readThreads; i++) {
  Reader reader = new Reader(
      "Socket Reader #" + (i + 1) + " for port " + port);
  readers[i] = reader;
  reader.start();
}
```

Fields

```java
// Queue of connections waiting to be registered
final private BlockingQueue<Connection> pendingConnections;
// Selector on which the channels are registered
private final Selector readSelector;
```

Constructor

```java
Reader(String name) throws IOException {
  // Set the thread name
  super(name);
  // Length of the reader's queue, default 100
  this.pendingConnections =
      new LinkedBlockingQueue<Connection>(readerPendingConnectionQueue);
  this.readSelector = Selector.open();
}
```

run

run() calls doRunLoop() and closes readSelector when the loop ends.

```java
@Override
public void run() {
  LOG.info("Starting " + Thread.currentThread().getName());
  try {
    // The Reader polls in a loop
    doRunLoop();
  } finally {
    try {
      readSelector.close();
    } catch (IOException ioe) {
      LOG.error("Error closing read selector in " + Thread.currentThread().getName(), ioe);
    }
  }
}
```

doRunLoop

The main loop of the Reader thread lives in doRunLoop(). It watches all client connections this Reader is responsible for. When a new RPC request arrives, it reads the request, wraps each successfully read request in a Call object, and puts it into callQueue to wait for a Handler thread.

There are two main steps:

1. Take connections from the pendingConnections queue and register SelectionKey.OP_READ for them on the Selector.
2. When a channel becomes readable, call doRead() to handle it.

```java
private synchronized void doRunLoop() {
  while (running) {
    SelectionKey key = null;
    try {
      // consume as many connections as currently queued to avoid
      // unbridled acceptance of connections that starves the select
      int size = pendingConnections.size();
      for (int i = size; i > 0; i--) {
        Connection conn = pendingConnections.take();
        conn.channel.register(readSelector, SelectionKey.OP_READ, conn);
      }
      // Wait for requests
      readSelector.select();
      // Readable events on readSelector mean a client RPC request has arrived
      Iterator<SelectionKey> iter = readSelector.selectedKeys().iterator();
      while (iter.hasNext()) {
        key = iter.next();
        iter.remove();
        try {
          if (key.isReadable()) {
            // On a readable event, handle it in doRead()
            doRead(key);
          }
        } catch (CancelledKeyException cke) {
          // something else closed the connection, ex. responder or
          // the listener doing an idle scan. ignore it and let them
          // clean up.
          LOG.info(Thread.currentThread().getName() +
              ": connection aborted from " + key.attachment());
        }
        key = null;
      }
    } catch (InterruptedException e) {
      if (running) { // unexpected -- log it
        LOG.info(Thread.currentThread().getName() + " unexpectedly interrupted", e);
      }
    } catch (IOException ex) {
      LOG.error("Error in Reader", ex);
    } catch (Throwable re) {
      LOG.error("Bug in read selector!", re);
      ExitUtil.terminate(1, "Bug in read selector!");
    }
  }
}
```

doRead(key)

When arriving data triggers SelectionKey.OP_READ on the Selector, doRead() calls key.attachment() to obtain the Connection object bound to the SelectionKey, then calls c.readAndProcess() to read the data and updates the connection's lastContact timestamp. The connection is closed only when the count returned by c.readAndProcess() is less than 0 or the connection's shouldClose() returns true.

```java
// doRead() reads the RPC request.
// readSelector has seen a readable event, but doRead() does not yet know
// which client sent the request. So it first calls SelectionKey.attachment()
// to get the Connection object built by the Listener. The Connection wraps the
// network connection between Server and Client, after which doRead() only has
// to call Connection.readAndProcess() to read the RPC request.
void doRead(SelectionKey key) throws InterruptedException {
  int count;
  // Get the Connection from the SelectionKey
  // (it was attached when doRunLoop registered the channel:
  //  conn.channel.register(readSelector, SelectionKey.OP_READ, conn))
  Connection c = (Connection) key.attachment();
  if (c == null) {
    return;
  }
  c.setLastContact(Time.now());
  try {
    // Read and process the request through Connection.readAndProcess
    count = c.readAndProcess();
  } catch (InterruptedException ieo) {
    LOG.info(Thread.currentThread().getName()
        + ": readAndProcess caught InterruptedException", ieo);
    throw ieo;
  } catch (Exception e) {
    // Any exceptions that reach here are fatal unexpected internal errors
    // that could not be sent to the client.
    LOG.info(Thread.currentThread().getName() +
        ": readAndProcess from client " + c +
        " threw exception [" + e + "]", e);
    count = -1; //so that the (count < 0) block is executed
  }
  // setupResponse will signal the connection should be closed when a
  // fatal response is sent.
  if (count < 0 || c.shouldClose()) {
    closeConnection(c);
    c = null;
  } else {
    c.setLastContact(Time.now());
  }
}
```
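The attachment mechanism doRead() relies on can be tried without any network setup. The sketch below is a stand-alone illustration under stated assumptions: it uses a java.nio.channels.Pipe instead of a SocketChannel, and Conn is a hypothetical per-connection object standing in for Connection.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.charset.StandardCharsets;

public class AttachmentSketch {
    /** Hypothetical per-connection state, standing in for Connection. */
    public static class Conn {
        final String name;
        final Pipe.SourceChannel channel;

        Conn(String name, Pipe.SourceChannel channel) {
            this.name = name;
            this.channel = channel;
        }
    }

    /** Registers a channel with an attachment, sends one payload, and reads it back. */
    public static String readOnce(String connName, String payload) throws IOException {
        Pipe pipe = Pipe.open();
        try (Selector selector = Selector.open();
             Pipe.SinkChannel sink = pipe.sink();
             Pipe.SourceChannel source = pipe.source()) {
            source.configureBlocking(false);
            // The third argument is the attachment that travels with the key.
            source.register(selector, SelectionKey.OP_READ, new Conn(connName, source));

            sink.write(ByteBuffer.wrap(payload.getBytes(StandardCharsets.UTF_8)));

            selector.select(5000); // wait (at most 5 s) until the source is readable
            for (SelectionKey key : selector.selectedKeys()) {
                if (key.isReadable()) {
                    // The selector only says "readable"; the attachment says who it is.
                    Conn conn = (Conn) key.attachment();
                    ByteBuffer buf = ByteBuffer.allocate(256);
                    conn.channel.read(buf);
                    buf.flip();
                    return conn.name + " sent " + StandardCharsets.UTF_8.decode(buf);
                }
            }
            return null;
        }
    }

    public static void main(String[] args) throws IOException {
        System.out.println(readOnce("client-1", "ping")); // client-1 sent ping
    }
}
```

Carrying the state on the key avoids a separate channel-to-connection lookup table in the read path.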

CallQueue

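Unless a scheduler is configured, callQueue can be treated as an ordinary blocking queue. The default scheduling policy is DefaultRpcScheduler.

DefaultRpcScheduler is effectively a placeholder that does no prioritization, so calls are served in the FIFO order of the underlying queue.

If another policy is configured, read up on that policy separately, for example DecayRpcScheduler.

The default policy is FIFO. FIFO is fair enough on a first-come, first-served basis, but a user who issues many I/O operations receives far more service than a user who issues few. In that situation FIFO is unfair and increases latency.

FairCallQueue distributes incoming RPC calls across multiple queues according to each caller's call volume. The scheduler tracks recent calls and assigns higher priority to users with a lower call volume.

Creation

callQueue is created while the Server is being initialized.

callQueue is more than a plain queue. It is managed through a CallQueueManager object, which supports both a blocking queue and scheduling.

```java
// Queue type: LinkedBlockingQueue<Call>; default scheduler: DefaultRpcScheduler
this.callQueue = new CallQueueManager<Call>(getQueueClass(prefix, conf),
    getSchedulerClass(prefix, conf),
    getClientBackoffEnable(prefix, conf), maxQueueSize, prefix, conf);
```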

Fields

```java
// Number of checkpoints for empty queue.
private static final int CHECKPOINT_NUM = 20;
// Interval to check empty queue.
private static final long CHECKPOINT_INTERVAL_MS = 10;

/**
 * Whether client backoff is enabled.
 * Without it, when an application issues many calls and the operating
 * system's connection limit has not been reached, RPC requests simply block.
 * With it, when the RPC server or the NameNode is under heavy load, a
 * well-defined exception can be thrown back to the client based on some policy.
 * The client understands the exception and backs off exponentially,
 * as another implementation of RetryInvocationHandler.
 */
private volatile boolean clientBackOffEnabled;

// Atomic refs point to active callQueue
// We have two so we can better control swapping
// Reference used when putting into the queue
private final AtomicReference<BlockingQueue<E>> putRef;
// Reference used when taking from the queue
private final AtomicReference<BlockingQueue<E>> takeRef;
// Scheduler
private RpcScheduler scheduler;
```

Constructor

```java
public CallQueueManager(Class<? extends BlockingQueue<E>> backingClass,
    Class<? extends RpcScheduler> schedulerClass,
    boolean clientBackOffEnabled, int maxQueueSize, String namespace,
    Configuration conf) {
  int priorityLevels = parseNumLevels(namespace, conf);
  // Create the scheduler, DefaultRpcScheduler by default
  this.scheduler = createScheduler(schedulerClass, priorityLevels,
      namespace, conf);
  // Create the queue instance
  BlockingQueue<E> bq = createCallQueueInstance(backingClass,
      priorityLevels, maxQueueSize, namespace, conf);
  this.clientBackOffEnabled = clientBackOffEnabled;
  // Reference used when putting into the queue
  this.putRef = new AtomicReference<BlockingQueue<E>>(bq);
  // Reference used when taking from the queue
  this.takeRef = new AtomicReference<BlockingQueue<E>>(bq);
  LOG.info("Using callQueue: {}, queueCapacity: {}, " +
      "scheduler: {}, ipcBackoff: {}.",
      backingClass, maxQueueSize, schedulerClass, clientBackOffEnabled);
}
```

put(E e)

```java
/**
 * Insert e into the backing queue or block until we can. If client
 * backoff is enabled this method behaves like add which throws if
 * the queue overflows.
 * If we block and the queue changes on us, we will insert while the
 * queue is drained.
 */
@Override
public void put(E e) throws InterruptedException {
  if (!isClientBackoffEnabled()) {
    putRef.get().put(e);
  } else if (shouldBackOff(e)) {
    throwBackoff();
  } else {
    // No need to re-check backoff criteria since they were just checked
    addInternal(e, false);
  }
}
```

offer(E e)

```java
/**
 * Insert e into the backing queue.
 * Return true if e is queued.
 * Return false if the queue is full.
 */
@Override
public boolean offer(E e) {
  return putRef.get().offer(e);
}
```
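The difference between the blocking and the backoff branch of put() can be shown with a plain LinkedBlockingQueue. This is a deliberately simplified sketch: BackoffPutSketch is hypothetical, it treats "queue is full" as the only backoff criterion, and it throws IllegalStateException where the real CallQueueManager consults its scheduler in shouldBackOff() and raises its own exception through throwBackoff().

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

public class BackoffPutSketch<E> {
    private final BlockingQueue<E> queue;
    private final boolean backoffEnabled;

    public BackoffPutSketch(int capacity, boolean backoffEnabled) {
        this.queue = new LinkedBlockingQueue<>(capacity);
        this.backoffEnabled = backoffEnabled;
    }

    /** Blocks when backoff is off; fails fast when it is on and the queue is full. */
    public void put(E e) throws InterruptedException {
        if (!backoffEnabled) {
            queue.put(e); // waits for free space
        } else if (!queue.offer(e)) {
            throw new IllegalStateException("queue full, client should back off");
        }
    }

    public int size() {
        return queue.size();
    }

    public static void main(String[] args) throws InterruptedException {
        BackoffPutSketch<String> calls = new BackoffPutSketch<>(1, true);
        calls.put("call-1");
        try {
            calls.put("call-2");
        } catch (IllegalStateException ex) {
            System.out.println("rejected: " + ex.getMessage());
        }
    }
}
```

Failing fast pushes the waiting onto the client, which can retry later, instead of tying up a Reader thread inside a blocked put().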

ConnectionManager

ConnectionManager is the management class for Connections. It creates Connections, monitors them, and so on.

Creation

It is created in the Server constructor.

connectionManager = new ConnectionManager();

Fields

```java
// Number of existing Connections
final private AtomicInteger count = new AtomicInteger();
final private AtomicLong droppedConnections = new AtomicLong();
// The existing Connections
final private Set<Connection> connections;
/* Map to maintain the statistics per User */
final private Map<String, Integer> userToConnectionsMap;
final private Object userToConnectionsMapLock = new Object();
// Timer that periodically checks and closes Connections
final private Timer idleScanTimer;
// Number of connections above which idle connections are scanned
// (ipc.client.idlethreshold, default 4000); it is a count, not a duration
final private int idleScanThreshold;
// Scan interval, default 10 seconds
final private int idleScanInterval;
// Maximum idle time, default 20 seconds
final private int maxIdleTime;
// Maximum number of client connections closed in one pass, default 10
final private int maxIdleToClose;
// Maximum number of connections, default 0 (unlimited)
final private int maxConnections;
```

Constructor

```java
ConnectionManager() {
  this.idleScanTimer = new Timer(
      "IPC Server idle connection scanner for port " + getPort(), true);
  this.idleScanThreshold = conf.getInt(
      CommonConfigurationKeysPublic.IPC_CLIENT_IDLETHRESHOLD_KEY,
      CommonConfigurationKeysPublic.IPC_CLIENT_IDLETHRESHOLD_DEFAULT);
  this.idleScanInterval = conf.getInt(
      CommonConfigurationKeys.IPC_CLIENT_CONNECTION_IDLESCANINTERVAL_KEY,
      CommonConfigurationKeys.IPC_CLIENT_CONNECTION_IDLESCANINTERVAL_DEFAULT);
  this.maxIdleTime = 2 * conf.getInt(
      CommonConfigurationKeysPublic.IPC_CLIENT_CONNECTION_MAXIDLETIME_KEY,
      CommonConfigurationKeysPublic.IPC_CLIENT_CONNECTION_MAXIDLETIME_DEFAULT);
  this.maxIdleToClose = conf.getInt(
      CommonConfigurationKeysPublic.IPC_CLIENT_KILL_MAX_KEY,
      CommonConfigurationKeysPublic.IPC_CLIENT_KILL_MAX_DEFAULT);
  this.maxConnections = conf.getInt(
      CommonConfigurationKeysPublic.IPC_SERVER_MAX_CONNECTIONS_KEY,
      CommonConfigurationKeysPublic.IPC_SERVER_MAX_CONNECTIONS_DEFAULT);
  // create a set with concurrency -and- a thread-safe iterator, add 2
  // for listener and idle closer threads
  this.connections = Collections.newSetFromMap(
      new ConcurrentHashMap<Connection,Boolean>(
          maxQueueSize, 0.75f, readThreads+2));
  this.userToConnectionsMap = new ConcurrentHashMap<>();
}
```

The constructor initializes the idleScanTimer timer and the thread-safe set of connections:

```java
this.idleScanTimer = new Timer(
    "IPC Server idle connection scanner for port " + getPort(), true);

// The result is a thread-safe Set<Connection>
this.connections = Collections.newSetFromMap(
    new ConcurrentHashMap<Connection,Boolean>(
        maxQueueSize, 0.75f, readThreads+2));
```

scheduleIdleScanTask method

It is triggered from Listener's run method through connectionManager.startIdleScan(). It periodically scans the connections and closes those that have timed out or become invalid.

```java
private void scheduleIdleScanTask() {
  if (!running) {
    return;
  }
  // Create the task that scans connections and closes timed-out or invalid ones
  TimerTask idleScanTask = new TimerTask() {
    @Override
    public void run() {
      if (!running) {
        return;
      }
      if (LOG.isDebugEnabled()) {
        LOG.debug(Thread.currentThread().getName() + ": task running");
      }
      try {
        closeIdle(false);
      } finally {
        // explicitly reschedule so next execution occurs relative
        // to the end of this scan, not the beginning
        scheduleIdleScanTask();
      }
    }
  };
  idleScanTimer.schedule(idleScanTask, idleScanInterval);
}
```
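The "reschedule at the end of each run" idiom used by scheduleIdleScanTask() can be isolated as follows. This is a small sketch, not the Hadoop implementation: IdleScanSketch is hypothetical, and a CountDownLatch countdown stands in for closeIdle(false) so the example terminates on its own.

```java
import java.util.Timer;
import java.util.TimerTask;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;

public class IdleScanSketch {
    /** Runs `scans` one-shot tasks, each scheduled only when the previous one has finished. */
    public static boolean runScans(int scans, long intervalMs) throws InterruptedException {
        Timer timer = new Timer("idle-scan-sketch", true);
        CountDownLatch remaining = new CountDownLatch(scans);
        schedule(timer, remaining, intervalMs);
        boolean finished = remaining.await(5, TimeUnit.SECONDS);
        timer.cancel();
        return finished;
    }

    private static void schedule(Timer timer, CountDownLatch remaining, long intervalMs) {
        if (remaining.getCount() == 0) {
            return; // plays the role of the `running` check
        }
        timer.schedule(new TimerTask() {
            @Override
            public void run() {
                try {
                    remaining.countDown(); // stand-in for closeIdle(false)
                } finally {
                    // Reschedule relative to the end of this run, not its start.
                    schedule(timer, remaining, intervalMs);
                }
            }
        }, intervalMs);
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("all scans ran: " + runScans(3, 10));
    }
}
```

Compared with a fixed-rate schedule, this keeps a constant pause between scans even when one scan takes a long time, so scans never pile up behind each other.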

register: registering a Connection

Listener's doAccept method calls register, which creates the Connection and adds it to the connections set through add(connection).

```java
// Create and register the Connection for this channel
Connection c = connectionManager.register(channel, this.listenPort);
```

```java
Connection register(SocketChannel channel, int ingressPort) {
  if (isFull()) {
    return null;
  }
  Connection connection = new Connection(channel, Time.now(), ingressPort);
  add(connection);
  if (LOG.isDebugEnabled()) {
    LOG.debug("Server connection from " + connection +
        "; # active connections: " + size() +
        "; # queued calls: " + callQueue.size());
  }
  return connection;
}

private boolean add(Connection connection) {
  boolean added = connections.add(connection);
  if (added) {
    count.getAndIncrement();
  }
  return added;
}
```

Connection

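The Connection class wraps the socket connection between Server and Client. doAccept() hands the newly built Connection object to a Reader, so that when the Reader thread is woken it can read the RPC request through the Connection object.

Creation

When a client connects and triggers the SelectionKey.OP_ACCEPT event registered on the selector, a Connection is built from the SocketChannel returned by server.accept() and the listening port.

```java
// Create and register the Connection for this channel
Connection c = connectionManager.register(channel, this.listenPort);

// Inside register():
Connection connection = new Connection(channel, Time.now(), ingressPort);
```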

Fields

```java
private boolean connectionHeaderRead = false; // connection header is read?
private boolean connectionContextRead = false; //if connection context that
                                               //follows connection header is read
private SocketChannel channel;
private ByteBuffer data;
private ByteBuffer dataLengthBuffer;
private LinkedList<RpcCall> responseQueue;
// number of outstanding rpcs
private AtomicInteger rpcCount = new AtomicInteger();
private long lastContact;
private int dataLength;
private Socket socket;
// Cache the remote host & port info so that even if the socket is
// disconnected, we can say where it used to connect to.
private String hostAddress;
private int remotePort;
private InetAddress addr;

IpcConnectionContextProto connectionContext;
String protocolName;
SaslServer saslServer;
private String establishedQOP;
private AuthMethod authMethod;
private AuthProtocol authProtocol;
private boolean saslContextEstablished;
private ByteBuffer connectionHeaderBuf = null;
private ByteBuffer unwrappedData;
private ByteBuffer unwrappedDataLengthBuffer;
private int serviceClass;
private boolean shouldClose = false;
private int ingressPort;

UserGroupInformation user = null;
public UserGroupInformation attemptingUser = null; // user name before auth

// Fake 'call' for failed authorization response
private final RpcCall authFailedCall =
    new RpcCall(this, AUTHORIZATION_FAILED_CALL_ID);

private boolean sentNegotiate = false;
private boolean useWrap = false;
```

Constructor

```java
public Connection(SocketChannel channel, long lastContact,
    int ingressPort) {
  this.channel = channel;
  this.lastContact = lastContact;
  this.data = null;

  // the buffer is initialized to read the "hrpc" and after that to read
  // the length of the Rpc-packet (i.e 4 bytes)
  this.dataLengthBuffer = ByteBuffer.allocate(4);
  this.unwrappedData = null;
  this.unwrappedDataLengthBuffer = ByteBuffer.allocate(4);
  this.socket = channel.socket();
  this.addr = socket.getInetAddress();
  this.ingressPort = ingressPort;
  if (addr == null) {
    this.hostAddress = "*Unknown*";
  } else {
    this.hostAddress = addr.getHostAddress();
  }
  this.remotePort = socket.getPort();
  this.responseQueue = new LinkedList<RpcCall>();
  if (socketSendBufferSize != 0) {
    try {
      socket.setSendBufferSize(socketSendBufferSize);
    } catch (IOException e) {
      LOG.warn("Connection: unable to set socket send buffer size to " +
          socketSendBufferSize);
    }
  }
}
```

readAndProcess() method

The Reader thread calls readAndProcess() to read one RPC request from the IO stream.

```java
/**
 * This method reads in a non-blocking fashion from the channel:
 * this method is called repeatedly when data is present in the channel;
 * when it has enough data to process one rpc it processes that rpc.
 *
 * On the first pass, it processes the connectionHeader,
 * connectionContext (an outOfBand RPC) and at most one RPC request that
 * follows that. On future passes it will process at most one RPC request.
 *
 * Quirky things: dataLengthBuffer (4 bytes) is used to read "hrpc" OR
 * rpc request length.
 *
 * @return -1 in case of error, else num bytes read so far
 * @throws IOException - internal error that should not be returned to
 *         client, typically failure to respond to client
 * @throws InterruptedException
 *
 * readAndProcess() first reads the connection header (connectionHeader)
 * from the socket stream, then reads one complete RPC request, and
 * finally calls processOneRpc() to handle that request.
 * processOneRpc() parses the RPC request header and then calls
 * processRpcRequest() to handle the RPC request body.
 *
 * Note in particular: if an exception is thrown during processing, an RPC
 * response carrying the server exception information is returned
 * directly over the socket.
 */
public int readAndProcess() throws IOException, InterruptedException {
  while (!shouldClose()) { // stop if a fatal response has been sent.
    // dataLengthBuffer is used to read "hrpc" or the rpc-packet length
    int count = -1;
    if (dataLengthBuffer.remaining() > 0) {
      count = channelRead(channel, dataLengthBuffer);
      if (count < 0 || dataLengthBuffer.remaining() > 0)
        return count;
    }

    if (!connectionHeaderRead) {
      // Every connection is expected to send the header;
      // so far we read "hrpc" of the connection header.
      if (connectionHeaderBuf == null) {
        // for the bytes that follow "hrpc", in the connection header
        connectionHeaderBuf = ByteBuffer.allocate(HEADER_LEN_AFTER_HRPC_PART);
      }
      count = channelRead(channel, connectionHeaderBuf);
      if (count < 0 || connectionHeaderBuf.remaining() > 0) {
        return count;
      }
      int version = connectionHeaderBuf.get(0);
      // TODO we should add handler for service class later
      this.setServiceClass(connectionHeaderBuf.get(1));
      dataLengthBuffer.flip();

      // Check if it looks like the user is hitting an IPC port
      // with an HTTP GET - this is a common error, so we can
      // send back a simple string indicating as much.
      if (HTTP_GET_BYTES.equals(dataLengthBuffer)) {
        setupHttpRequestOnIpcPortResponse();
        return -1;
      }

      if(!RpcConstants.HEADER.equals(dataLengthBuffer)) {
        LOG.warn("Incorrect RPC Header length from {}:{} "
            + "expected length: {} got length: {}",
            hostAddress, remotePort, RpcConstants.HEADER, dataLengthBuffer);
        setupBadVersionResponse(version);
        return -1;
      }
      if (version != CURRENT_VERSION) {
        //Warning is ok since this is not supposed to happen.
        LOG.warn("Version mismatch from " +
            hostAddress + ":" + remotePort +
            " got version " + version +
            " expected version " + CURRENT_VERSION);
        setupBadVersionResponse(version);
        return -1;
      }

      // this may switch us into SIMPLE
      authProtocol = initializeAuthContext(connectionHeaderBuf.get(2));

      dataLengthBuffer.clear(); // clear to next read rpc packet len
      connectionHeaderBuf = null;
      connectionHeaderRead = true;
      continue; // connection header read, now read 4 bytes rpc packet len
    }

    if (data == null) { // just read 4 bytes - length of RPC packet
      dataLengthBuffer.flip();
      dataLength = dataLengthBuffer.getInt();
      checkDataLength(dataLength);
      // Set buffer for reading EXACTLY the RPC-packet length and no more.
      data = ByteBuffer.allocate(dataLength);
    }
    // Now read the RPC packet
    count = channelRead(channel, data);

    if (data.remaining() == 0) {
      dataLengthBuffer.clear(); // to read length of future rpc packets
      data.flip();
      ByteBuffer requestData = data;
      data = null; // null out in case processOneRpc throws.
      boolean isHeaderRead = connectionContextRead;
      // Process this RPC request.
      processOneRpc(requestData);
      // the last rpc-request we processed could have simply been the
      // connectionContext; if so continue to read the first RPC.
      if (!isHeaderRead) {
        continue;
      }
    }
    return count;
  }
  return -1;
}
```
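The framing idea behind readAndProcess() is easier to see in isolation. The following is a minimal sketch, not Hadoop code: the class `FrameDecoder` and its `feed` method are hypothetical names, and bytes arrive as arrays rather than from a SocketChannel. It fills a 4-byte length buffer first, then allocates a buffer of exactly that length, and only hands over a frame once it is complete, much as the method above does with dataLengthBuffer and data.

```java
import java.nio.ByteBuffer;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper (not part of Hadoop): decodes [4-byte length][payload]
// frames from bytes that may arrive in arbitrarily small chunks.
class FrameDecoder {
    private final ByteBuffer lengthBuf = ByteBuffer.allocate(4);
    private ByteBuffer data; // null until the length prefix is complete

    // Consumes one chunk and returns every frame that this chunk completed.
    List<byte[]> feed(byte[] chunk) {
        List<byte[]> frames = new ArrayList<>();
        ByteBuffer in = ByteBuffer.wrap(chunk);
        while (in.hasRemaining()) {
            if (data == null) {
                // Still reading the 4-byte length prefix.
                while (lengthBuf.hasRemaining() && in.hasRemaining()) {
                    lengthBuf.put(in.get());
                }
                if (lengthBuf.hasRemaining()) {
                    break; // prefix incomplete, wait for the next chunk
                }
                lengthBuf.flip();
                int length = lengthBuf.getInt();
                if (length < 0) {
                    throw new IllegalArgumentException("negative frame length " + length);
                }
                // Buffer for exactly one payload and no more.
                data = ByteBuffer.allocate(length);
                lengthBuf.clear(); // ready for the next frame's prefix
            }
            // Copy as much of the payload as this chunk provides.
            while (data.hasRemaining() && in.hasRemaining()) {
                data.put(in.get());
            }
            if (!data.hasRemaining()) {
                frames.add(data.array());
                data = null; // next bytes belong to a new length prefix
            }
        }
        return frames;
    }
}
```

Keeping the partially filled buffers as fields is what lets the decoder be called repeatedly with whatever bytes happen to be available, which is the same reason readAndProcess() keeps dataLengthBuffer and data on the connection.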

processOneRpc() method

processOneRpc() parses the RPC request header and then calls processRpcRequest() to handle the RPC request body.

```java
/**
 * Process one RPC Request from buffer read from socket stream
 * - decode rpc in a rpc-Call
 * - handle out-of-band RPC requests such as the initial connectionContext
 * - A successfully decoded RpcCall will be deposited in RPC-Q and
 *   its response will be sent later when the request is processed.
 *
 * Prior to this call the connectionHeader ("hrpc...") has been handled and
 * if SASL then SASL has been established and the buf we are passed
 * has been unwrapped from SASL.
 *
 * @param bb - contains the RPC request header and the rpc request
 * @throws IOException - internal error that should not be returned to
 *         client, typically failure to respond to client
 * @throws InterruptedException
 */
private void processOneRpc(ByteBuffer bb)
    throws IOException, InterruptedException {
  // exceptions that escape this method are fatal to the connection.
  // setupResponse will use the rpc status to determine if the connection
  // should be closed.
  int callId = -1;
  int retry = RpcConstants.INVALID_RETRY_COUNT;
  try {
    final RpcWritable.Buffer buffer = RpcWritable.Buffer.wrap(bb);
    // Parse the RPC request header
    final RpcRequestHeaderProto header =
        getMessage(RpcRequestHeaderProto.getDefaultInstance(), buffer);
    // Extract the callId from the RPC request header
    callId = header.getCallId();
    // Extract the retry count from the RPC request header
    retry = header.getRetryCount();
    if (LOG.isDebugEnabled()) {
      LOG.debug(" got #" + callId);
    }
    // Validate the header
    checkRpcHeaders(header);

    // Special cases first: out-of-band requests and a missing connection context
    if (callId < 0) { // callIds typically used during connection setup
      processRpcOutOfBandRequest(header, buffer);
    } else if (!connectionContextRead) {
      throw new FatalRpcServerException(
          RpcErrorCodeProto.FATAL_INVALID_RPC_HEADER,
          "Connection context not established");
    } else {
      // Normal case: hand the request body to processRpcRequest()
      processRpcRequest(header, buffer);
    }
  } catch (RpcServerException rse) {
    // inform client of error, but do not rethrow else non-fatal
    // exceptions will close connection!
    if (LOG.isDebugEnabled()) {
      LOG.debug(Thread.currentThread().getName() +
          ": processOneRpc from client " + this +
          " threw exception [" + rse + "]");
    }
    // Return an RPC response carrying the exception information over the socket
    // use the wrapped exception if there is one.
    Throwable t = (rse.getCause() != null) ? rse.getCause() : rse;
    final RpcCall call = new RpcCall(this, callId, retry);
    setupResponse(call,
        rse.getRpcStatusProto(), rse.getRpcErrorCodeProto(), null,
        t.getClass().getName(),
        t.getMessage() != null ? t.getMessage() : t.toString());
    sendResponse(call);
  }
}
```

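processRpcRequest() method

processRpcRequest() decodes the complete request object (request metadata plus request parameters) from the input buffer. It then builds a Call object from the information in the RPC request header (including the callId); the Call object holds everything about this invocation. Finally it places the Call object in the callQueue, where it waits for a Handler thread.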

```java
/**
 * Process an RPC Request
 * - the connection headers and context must have been already read.
 * - Based on the rpcKind, decode the rpcRequest.
 * - A successfully decoded RpcCall will be deposited in RPC-Q and
 *   its response will be sent later when the request is processed.
 * @param header - RPC request header
 * @param buffer - stream to request payload
 * @throws RpcServerException - generally due to fatal rpc layer issues
 *         such as invalid header or deserialization error. The call queue
 *         may also throw a fatal or non-fatal exception on overflow.
 * @throws IOException - fatal internal error that should/could not
 *         be sent to client.
 * @throws InterruptedException
 */
private void processRpcRequest(RpcRequestHeaderProto header,
    RpcWritable.Buffer buffer) throws RpcServerException,
    InterruptedException {
  Class<? extends Writable> rpcRequestClass =
      getRpcRequestWrapper(header.getRpcKind());
  if (rpcRequestClass == null) {
    LOG.warn("Unknown rpc kind " + header.getRpcKind() +
        " from client " + getHostAddress());
    final String err = "Unknown rpc kind in rpc header" +
        header.getRpcKind();
    throw new FatalRpcServerException(
        RpcErrorCodeProto.FATAL_INVALID_RPC_HEADER, err);
  }
  // Read the RPC request body
  Writable rpcRequest;
  try { //Read the rpc request
    rpcRequest = buffer.newInstance(rpcRequestClass, conf);
  } catch (RpcServerException rse) { // lets tests inject failures.
    throw rse;
  } catch (Throwable t) { // includes runtime exception from newInstance
    LOG.warn("Unable to read call parameters for client " +
        getHostAddress() + "on connection protocol " +
        this.protocolName + " for rpcKind " + header.getRpcKind(), t);
    String err = "IPC server unable to read call parameters: "
        + t.getMessage();
    throw new FatalRpcServerException(
        RpcErrorCodeProto.FATAL_DESERIALIZING_REQUEST, err);
  }

  TraceScope traceScope = null;
  if (header.hasTraceInfo()) {
    if (tracer != null) {
      // If the incoming RPC included tracing info, always continue the
      // trace
      SpanId parentSpanId = new SpanId(
          header.getTraceInfo().getTraceId(),
          header.getTraceInfo().getParentId());
      traceScope = tracer.newScope(
          RpcClientUtil.toTraceName(rpcRequest.toString()),
          parentSpanId);
      traceScope.detach();
    }
  }

  CallerContext callerContext = null;
  if (header.hasCallerContext()) {
    callerContext =
        new CallerContext.Builder(header.getCallerContext().getContext())
            .setSignature(header.getCallerContext().getSignature()
                .toByteArray())
            .build();
  }

  // Build a Call object that wraps the RPC request information
  RpcCall call = new RpcCall(this, header.getCallId(),
      header.getRetryCount(), rpcRequest,
      ProtoUtil.convert(header.getRpcKind()),
      header.getClientId().toByteArray(), traceScope, callerContext);

  // Save the priority level assignment by the scheduler
  call.setPriorityLevel(callQueue.getPriorityLevel(call));
  call.markCallCoordinated(false);
  if(alignmentContext != null && call.rpcRequest != null &&
      (call.rpcRequest instanceof ProtobufRpcEngine.RpcProtobufRequest)) {
    // if call.rpcRequest is not RpcProtobufRequest, will skip the following
    // step and treat the call as uncoordinated. As currently only certain
    // ClientProtocol methods request made through RPC protobuf needs to be
    // coordinated.
    String methodName;
    String protoName;
    ProtobufRpcEngine.RpcProtobufRequest req =
        (ProtobufRpcEngine.RpcProtobufRequest) call.rpcRequest;
    try {
      methodName = req.getRequestHeader().getMethodName();
      protoName = req.getRequestHeader().getDeclaringClassProtocolName();
      if (alignmentContext.isCoordinatedCall(protoName, methodName)) {
        call.markCallCoordinated(true);
        long stateId;
        stateId = alignmentContext.receiveRequestState(
            header, getMaxIdleTime());
        call.setClientStateId(stateId);
      }
    } catch (IOException ioe) {
      throw new RpcServerException("Processing RPC request caught ", ioe);
    }
  }

  try {
    // Put the Call object into callQueue, where it waits for a Handler
    internalQueueCall(call);
  } catch (RpcServerException rse) {
    throw rse;
  } catch (IOException ioe) {
    throw new FatalRpcServerException(
        RpcErrorCodeProto.ERROR_RPC_SERVER, ioe);
  }
  incRpcCount(); // Increment the rpc count
}
```

Handler

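A Handler processes RPC requests and sends back responses. Each Handler repeatedly takes an RPC request from the callQueue, executes the local function the request maps to, then wraps the result in a response and sends it back to the client. To process RPC requests concurrently, the Server runs multiple Handler objects.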
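The relationship between the callQueue and the Handler threads is a classic producer/consumer arrangement. The sketch below illustrates only that pattern and is not Hadoop code: `HandlerPoolSketch` and `runCalls` are hypothetical names, a plain LinkedBlockingQueue of Runnable stands in for Hadoop's call queue, and the "calls" merely increment a counter.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical illustration of several handler threads draining one shared queue.
class HandlerPoolSketch {
    // Starts handlerCount daemon threads, submits `calls` tasks to the shared
    // queue, waits until all of them ran, and returns how many were processed.
    static int runCalls(int handlerCount, int calls) throws InterruptedException {
        BlockingQueue<Runnable> callQueue = new LinkedBlockingQueue<>();
        AtomicInteger processed = new AtomicInteger();
        CountDownLatch done = new CountDownLatch(calls);

        for (int i = 0; i < handlerCount; i++) {
            Thread handler = new Thread(() -> {
                try {
                    while (true) {
                        // take() blocks while the queue is empty, so idle
                        // handlers cost no CPU until a call arrives.
                        callQueue.take().run();
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            }, "sketch handler " + i);
            handler.setDaemon(true); // do not keep the JVM alive, like Hadoop's handlers
            handler.start();
        }

        // The "reader" side: enqueue calls for whichever handler is free.
        for (int i = 0; i < calls; i++) {
            callQueue.put(() -> {
                processed.incrementAndGet();
                done.countDown();
            });
        }
        done.await();
        return processed.get();
    }
}
```

For example, `HandlerPoolSketch.runCalls(4, 100)` spreads 100 tasks across 4 handler threads; which thread runs which task is not deterministic, only the total is.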

Creation

```java
/** Starts the service. Must be called before any calls will be handled. */
public synchronized void start() {
  responder.start();
  listener.start();
  if (auxiliaryListenerMap != null && auxiliaryListenerMap.size() > 0) {
    for (Listener newListener : auxiliaryListenerMap.values()) {
      newListener.start();
    }
  }

  handlers = new Handler[handlerCount];

  for (int i = 0; i < handlerCount; i++) {
    handlers[i] = new Handler(i);
    handlers[i].start();
  }
}
```

Constructor

```java
public Handler(int instanceNumber) {
  this.setDaemon(true);
  this.setName("IPC Server handler " + instanceNumber +
      " on default port " + port);
}
```

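run() method

The main loop of the Handler thread repeatedly takes a pending Call object from the shared callQueue and runs it: through remoteUser.doAs(call) when a remote user is set, otherwise by calling call.run() directly. RpcCall.run() in turn invokes Server.call() to execute the local function behind the RPC call; if the invocation throws, the exception information is recorded in the response parameters. RpcCall.run() then builds the RPC response and sends it back with sendResponse().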

```java
@Override
public void run() {
  LOG.debug(Thread.currentThread().getName() + ": starting");
  SERVER.set(Server.this);
  while (running) {
    TraceScope traceScope = null;
    Call call = null;
    long startTimeNanos = 0;
    // True iff the connection for this call has been dropped.
    // Set to true by default and update to false later if the connection
    // can be successfully read.
    boolean connDropped = true;

    try {
      // Take a request from callQueue
      call = callQueue.take(); // pop the queue; maybe blocked here
      startTimeNanos = Time.monotonicNowNanos();
      if (alignmentContext != null && call.isCallCoordinated() &&
          call.getClientStateId() > alignmentContext.getLastSeenStateId()) {
        /*
         * The call processing should be postponed until the client call's
         * state id is aligned (<=) with the server state id.
         * NOTE:
         * Inserting the call back to the queue can change the order of call
         * execution comparing to their original placement into the queue.
         * This is not a problem, because Hadoop RPC does not have any
         * constraints on ordering the incoming rpc requests.
         * In case of Observer, it handles only reads, which are
         * commutative.
         */
        // Re-queue the call and continue
        requeueCall(call);
        continue;
      }
      if (LOG.isDebugEnabled()) {
        LOG.debug(Thread.currentThread().getName() + ": " + call +
            " for RpcKind " + call.rpcKind);
      }
      // Record the call that the current thread is about to process
      CurCall.set(call);
      if (call.traceScope != null) {
        call.traceScope.reattach();
        traceScope = call.traceScope;
        traceScope.getSpan().addTimelineAnnotation("called");
      }
      // always update the current call context
      CallerContext.setCurrent(call.callerContext);
      UserGroupInformation remoteUser = call.getRemoteUser();
      connDropped = !call.isOpen();
      // Run the Call object's run() method, which performs the local
      // invocation and sends the result
      if (remoteUser != null) {
        remoteUser.doAs(call);
      } else {
        // RpcCall#run()
        call.run();
      }
    } catch (InterruptedException e) {
      if (running) { // unexpected -- log it
        LOG.info(Thread.currentThread().getName() +
            " unexpectedly interrupted", e);
        if (traceScope != null) {
          traceScope.getSpan().addTimelineAnnotation(
              "unexpectedly interrupted: " +
              StringUtils.stringifyException(e));
        }
      }
    } catch (Exception e) {
      LOG.info(Thread.currentThread().getName() + " caught an exception", e);
      if (traceScope != null) {
        traceScope.getSpan().addTimelineAnnotation("Exception: " +
            StringUtils.stringifyException(e));
      }
    } finally {
      CurCall.set(null);
      IOUtils.cleanupWithLogger(LOG, traceScope);
      if (call != null) {
        updateMetrics(call, startTimeNanos, connDropped);
        ProcessingDetails.LOG.debug(
            "Served: [{}]{} name={} user={} details={}",
            call, (call.isResponseDeferred() ? ", deferred" : ""),
            call.getDetailedMetricsName(), call.getRemoteUser(),
            call.getProcessingDetails());
      }
    }
  }
  LOG.debug(Thread.currentThread().getName() + ": exiting");
}
```

The run() method in RpcCall

```java
@Override
public Void run() throws Exception {
  if (!connection.channel.isOpen()) {
    Server.LOG.info(Thread.currentThread().getName() + ": skipped " + this);
    return null;
  }

  long startNanos = Time.monotonicNowNanos();
  Writable value = null;
  ResponseParams responseParams = new ResponseParams();

  try {
    // Perform the local invocation through call() and keep the result
    value = call(
        rpcKind, connection.protocolName, rpcRequest, timestampNanos);
  } catch (Throwable e) {
    populateResponseParamsOnError(e, responseParams);
  }
  if (!isResponseDeferred()) {
    long deltaNanos = Time.monotonicNowNanos() - startNanos;
    ProcessingDetails details = getProcessingDetails();

    details.set(Timing.PROCESSING, deltaNanos, TimeUnit.NANOSECONDS);
    deltaNanos -= details.get(Timing.LOCKWAIT, TimeUnit.NANOSECONDS);
    deltaNanos -= details.get(Timing.LOCKSHARED, TimeUnit.NANOSECONDS);
    deltaNanos -= details.get(Timing.LOCKEXCLUSIVE, TimeUnit.NANOSECONDS);
    details.set(Timing.LOCKFREE, deltaNanos, TimeUnit.NANOSECONDS);
    startNanos = Time.monotonicNowNanos();

    setResponseFields(value, responseParams);
    sendResponse();

    deltaNanos = Time.monotonicNowNanos() - startNanos;
    details.set(Timing.RESPONSE, deltaNanos, TimeUnit.NANOSECONDS);
  } else {
    if (LOG.isDebugEnabled()) {
      LOG.debug("Deferring response for callId: " + this.callId);
    }
  }
  return null;
}
```
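The timing bookkeeping in RpcCall.run() boils down to one subtraction: the lock-free time is the total processing time minus the three lock-related timings. A tiny sketch of that arithmetic follows; `TimingSketch` and `lockFreeNanos` are hypothetical names, and the numbers in the usage note are made up for illustration.

```java
// Hypothetical helper that mirrors the arithmetic in RpcCall.run():
// LOCKFREE = PROCESSING - LOCKWAIT - LOCKSHARED - LOCKEXCLUSIVE.
class TimingSketch {
    static long lockFreeNanos(long processingNanos, long lockWaitNanos,
                              long lockSharedNanos, long lockExclusiveNanos) {
        return processingNanos - lockWaitNanos - lockSharedNanos - lockExclusiveNanos;
    }
}
```

With a 10 ms processing time, of which 2 ms were spent waiting for a lock, 1 ms holding a shared lock and 3 ms holding an exclusive lock, `lockFreeNanos(10_000_000L, 2_000_000L, 1_000_000L, 3_000_000L)` leaves 4 ms of lock-free work.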

The call is eventually dispatched to the call() method in ProtobufRpcEngine

```java
/**
 * This is a server side method, which is invoked over RPC. On success
 * the return response has protobuf response payload. On failure, the
 * exception name and the stack trace are returned in the response.
 * See {@link HadoopRpcResponseProto}
 *
 * In this method there three types of exceptions possible and they are
 * returned in response as follows.
 * <ol>
 * <li> Exceptions encountered in this method that are returned
 * as {@link RpcServerException} </li>
 * <li> Exceptions thrown by the service is wrapped in ServiceException.
 * In that this method returns in response the exception thrown by the
 * service.</li>
 * <li> Other exceptions thrown by the service. They are returned as
 * it is.</li>
 * </ol>
 *
 * call() first extracts the interface name, method name and related
 * information of the RPC call from the request header, then looks up the
 * BlockingService object for that interface, and then invokes
 * callBlockingMethod() on the BlockingService object for the requested
 * method. In the HDFS NameNode case the call is forwarded to the
 * ClientNamenodeProtocolServerSideTranslatorPB object and is ultimately
 * answered by NameNodeRpcServer.
 */
public Writable call(RPC.Server server, String connectionProtocolName,
    Writable writableRequest, long receiveTime) throws Exception {
  // Get the RPC request header
  RpcProtobufRequest request = (RpcProtobufRequest) writableRequest;
  RequestHeaderProto rpcRequest = request.getRequestHeader();
  // Get the method name, interface name and version of the call
  String methodName = rpcRequest.getMethodName();

  /**
   * RPCs for a particular interface (ie protocol) are done using a
   * IPC connection that is setup using rpcProxy.
   * The rpcProxy's has a declared protocol name that is
   * sent from client to server at connection time.
   *
   * Each Rpc call also sends a protocol name
   * (called declaringClassprotocolName). This name is usually the same
   * as the connection protocol name except in some cases.
   * For example metaProtocols such ProtocolInfoProto which get info
   * about the protocol reuse the connection but need to indicate that
   * the actual protocol is different (i.e. the protocol is
   * ProtocolInfoProto) since they reuse the connection; in this case
   * the declaringClassProtocolName field is set to the ProtocolInfoProto.
   */
  String declaringClassProtoName =
      rpcRequest.getDeclaringClassProtocolName();
  long clientVersion = rpcRequest.getClientProtocolVersion();
  if (server.verbose)
    LOG.info("Call: connectionProtocolName=" + connectionProtocolName +
        ", method=" + methodName);

  // Look up the server-side implementation of that interface
  ProtoClassProtoImpl protocolImpl = getProtocolImpl(server,
      declaringClassProtoName, clientVersion);
  BlockingService service = (BlockingService) protocolImpl.protocolImpl;
  // Get the descriptor of the method to invoke
  MethodDescriptor methodDescriptor = service.getDescriptorForType()
      .findMethodByName(methodName);
  if (methodDescriptor == null) {
    String msg = "Unknown method " + methodName + " called on "
        + connectionProtocolName + " protocol.";
    LOG.warn(msg);
    throw new RpcNoSuchMethodException(msg);
  }
  // Get the request prototype of the method and decode the call parameters
  Message prototype = service.getRequestPrototype(methodDescriptor);
  Message param = request.getValue(prototype);

  Message result;
  Call currentCall = Server.getCurCall().get();
  try {
    server.rpcDetailedMetrics.init(protocolImpl.protocolClass);
    currentCallInfo.set(new CallInfo(server, methodName));
    currentCall.setDetailedMetricsName(methodName);
    // Invoke callBlockingMethod on the implementation; in HDFS this
    // cascades through the translator to NameNodeRpcServer
    result = service.callBlockingMethod(methodDescriptor, null, param);
    // Check if this needs to be a deferred response,
    // by checking the ThreadLocal callback being set
    if (currentCallback.get() != null) {
      currentCall.deferResponse();
      currentCallback.set(null);
      return null;
    }
  } catch (ServiceException e) {
    Exception exception = (Exception) e.getCause();
    currentCall.setDetailedMetricsName(
        exception.getClass().getSimpleName());
    throw (Exception) e.getCause();
  } catch (Exception e) {
    currentCall.setDetailedMetricsName(e.getClass().getSimpleName());
    throw e;
  } finally {
    currentCallInfo.set(null);
  }
  return RpcWritable.wrap(result);
}
```
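Stripped of protobuf, the core of call() is a lookup by method name followed by an invocation, with an error when the name is unknown. The sketch below shows only that dispatch idea. It is an assumption-laden simplification: `DispatchSketch`, `register` and `call` are hypothetical names, a HashMap replaces the protobuf service descriptor, and parameters are plain strings instead of protobuf messages.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Hypothetical stand-in for a BlockingService: maps method names to implementations.
class DispatchSketch {
    private final Map<String, Function<String, String>> methods = new HashMap<>();

    // Registers an implementation under the name a client would send.
    void register(String methodName, Function<String, String> impl) {
        methods.put(methodName, impl);
    }

    // Looks up the method by name and invokes it, analogous to
    // findMethodByName() followed by callBlockingMethod().
    String call(String methodName, String param) {
        Function<String, String> impl = methods.get(methodName);
        if (impl == null) {
            // call() throws RpcNoSuchMethodException here; a standard
            // exception keeps this sketch self-contained.
            throw new IllegalArgumentException("Unknown method " + methodName);
        }
        return impl.apply(param);
    }
}
```

A server would register each method once at startup, for instance `register("echo", s -> s)`, and every incoming request then only needs its method name and serialized parameter to be routed.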

Responder

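The Responder sends RPC responses to clients. It is also a thread class, and a Server has exactly one Responder object. The Responder owns a Selector, named writeSelector in the code below, which listens for SelectionKey.OP_WRITE events. When the network is slow or a response is too large, a Handler thread may be unable to write the complete response to the client. In that case the Handler registers the channel for SelectionKey.OP_WRITE on the Responder's selector. Once the channel is writable again, the selector wakes the Responder thread, which sends the remainder of the response to the client.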

doRunLoop

Responder is a thread class, so its core logic is the doRunLoop() method called from run().

```java
private void doRunLoop() {
  long lastPurgeTimeNanos = 0; // last check for old calls.

  while (running) {
    try {
      waitPending(); // If a channel is being registered, wait.
      // Block for up to 15 minutes; on timeout, fall through to the purge
      // of responses that have been pending for too long
      writeSelector.select(
          TimeUnit.NANOSECONDS.toMillis(PURGE_INTERVAL_NANOS));
      Iterator<SelectionKey> iter = writeSelector.selectedKeys().iterator();
      while (iter.hasNext()) {
        SelectionKey key = iter.next();
        iter.remove();
        try {
          if (key.isWritable()) {
            // Perform the write
            doAsyncWrite(key);
          }
        } catch (CancelledKeyException cke) {
          // something else closed the connection, ex. reader or the
          // listener doing an idle scan. ignore it and let them clean
          // up
          RpcCall call = (RpcCall)key.attachment();
          if (call != null) {
            LOG.info(Thread.currentThread().getName() +
                ": connection aborted from " + call.connection);
          }
        } catch (IOException e) {
          LOG.info(Thread.currentThread().getName() +
              ": doAsyncWrite threw exception " + e);
        }
      }
      long nowNanos = Time.monotonicNowNanos();
      if (nowNanos < lastPurgeTimeNanos + PURGE_INTERVAL_NANOS) {
        continue;
      }
      lastPurgeTimeNanos = nowNanos;
      //
      // If there were some calls that have not been sent out for a
      // long time, discard them.
      //
      if(LOG.isDebugEnabled()) {
        LOG.debug("Checking for old call responses.");
      }
      ArrayList<RpcCall> calls;

      // get the list of channels from list of keys.
      synchronized (writeSelector.keys()) {
        calls = new ArrayList<RpcCall>(writeSelector.keys().size());
        iter = writeSelector.keys().iterator();
        while (iter.hasNext()) {
          SelectionKey key = iter.next();
          RpcCall call = (RpcCall)key.attachment();
          if (call != null && key.channel() == call.connection.channel) {
            calls.add(call);
          }
        }
      }

      // Discard responses that have not been sent for a long time
      for (RpcCall call : calls) {
        doPurge(call, nowNanos);
      }
    } catch (OutOfMemoryError e) {
      //
      // we can run out of memory if we have too many threads
      // log the event and sleep for a minute and give
      // some thread(s) a chance to finish
      //
      LOG.warn("Out of Memory in server select", e);
      try { Thread.sleep(60000); } catch (Exception ie) {}
    } catch (Exception e) {
      LOG.warn("Exception in Responder", e);
    }
  }
}
```
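The purge part of doRunLoop() combines two checks: the purge pass runs at most once per interval, and within a pass only entries older than the interval are affected. The following sketch isolates that logic. It is hypothetical throughout: `PurgeSketch`, `addPending` and `maybePurge` are invented names, timestamps are passed in explicitly instead of being read from a clock, and since doPurge() itself is not shown in this article, the sketch simply drops stale entries.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;

// Hypothetical illustration of the rate-limited purge in doRunLoop().
class PurgeSketch {
    // 15 minutes in nanoseconds, matching the interval described above.
    static final long PURGE_INTERVAL_NANOS = 15L * 60L * 1_000_000_000L;

    private long lastPurgeTimeNanos = 0;
    // Time at which each pending response started waiting.
    private final List<Long> pendingSince = new ArrayList<>();

    void addPending(long timestampNanos) {
        pendingSince.add(timestampNanos);
    }

    int pendingCount() {
        return pendingSince.size();
    }

    // Returns how many stale entries were discarded. Does nothing when the
    // previous purge pass ran less than one interval ago.
    int maybePurge(long nowNanos) {
        if (nowNanos < lastPurgeTimeNanos + PURGE_INTERVAL_NANOS) {
            return 0; // same early "continue" as in doRunLoop()
        }
        lastPurgeTimeNanos = nowNanos;
        int purged = 0;
        Iterator<Long> it = pendingSince.iterator();
        while (it.hasNext()) {
            if (nowNanos > it.next() + PURGE_INTERVAL_NANOS) {
                it.remove();
                purged++;
            }
        }
        return purged;
    }
}
```

Passing the current time as a parameter keeps the sketch deterministic; the real loop reads it from Time.monotonicNowNanos().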

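processResponse

processResponse() sends one pending response on a channel. It is called with inHandler set to true when a Handler writes directly, and if the response cannot be written completely in that case, the remainder is handed over to the Responder for asynchronous sending.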

```java
// Processes one response. Returns true if there are no more pending
// data for this channel.
//
private boolean processResponse(LinkedList<RpcCall> responseQueue,
    boolean inHandler) throws IOException {
  boolean error = true;
  boolean done = false; // there is more data for this channel.
  int numElements = 0;
  RpcCall call = null;
  try {
    synchronized (responseQueue) {
      //
      // If there are no items for this channel, then we are done
      //
      numElements = responseQueue.size();
      if (numElements == 0) {
        error = false;
        return true; // no more data for this channel.
      }
      //
      // Extract the first call
      //
      call = responseQueue.removeFirst();
      SocketChannel channel = call.connection.channel;
      if (LOG.isDebugEnabled()) {
        LOG.debug(Thread.currentThread().getName() + ": responding to " + call);
      }
      //
      // Send as much data as we can in the non-blocking fashion
      //
      int numBytes = channelWrite(channel, call.rpcResponse);
      if (numBytes < 0) {
        return true;
      }
      if (!call.rpcResponse.hasRemaining()) {
        //Clear out the response buffer so it can be collected
        call.rpcResponse = null;
        call.connection.decRpcCount();
        if (numElements == 1) { // last call fully processes.
          done = true; // no more data for this channel.
        } else {
          done = false; // more calls pending to be sent.
        }
        if (LOG.isDebugEnabled()) {
          LOG.debug(Thread.currentThread().getName() + ": responding to " + call
              + " Wrote " + numBytes + " bytes.");
        }
      } else {
        //
        // If we were unable to write the entire response out, then
        // insert in Selector queue.
        //
        call.connection.responseQueue.addFirst(call);

        if (inHandler) {
          // set the serve time when the response has to be sent later
          call.timestampNanos = Time.monotonicNowNanos();

          incPending();
          try {
            // Wakeup the thread blocked on select, only then can the call
            // to channel.register() complete.
            writeSelector.wakeup();
            channel.register(writeSelector, SelectionKey.OP_WRITE, call);
          } catch (ClosedChannelException e) {
            //Its ok. channel might be closed else where.
            done = true;
          } finally {
            decPending();
          }
        }
        if (LOG.isDebugEnabled()) {
          LOG.debug(Thread.currentThread().getName() + ": responding to " + call
              + " Wrote partial " + numBytes + " bytes.");
        }
      }
      error = false; // everything went off well
    }
  } finally {
    if (error && call != null) {
      LOG.warn(Thread.currentThread().getName() + ", call " + call + ": output error");
      done = true; // error. no more data for this channel.
      closeConnection(call.connection);
    }
  }
  return done;
}
```
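The partial-write handling in processResponse() can be tried out without sockets. The sketch below is hypothetical and much simplified: `PartialWriteSketch` and its nested `SlowChannel` are invented for illustration, the fake channel accepts only a few bytes per write to imitate a congested socket, and where Hadoop would register for OP_WRITE the sketch just returns false so the caller knows to try again later.

```java
import java.io.ByteArrayOutputStream;
import java.nio.ByteBuffer;
import java.nio.channels.WritableByteChannel;
import java.util.ArrayDeque;
import java.util.Deque;

// Hypothetical illustration of "write what you can, requeue the rest".
class PartialWriteSketch {
    // Fake channel that accepts at most `limit` bytes per write call.
    static class SlowChannel implements WritableByteChannel {
        private final int limit;
        final ByteArrayOutputStream sink = new ByteArrayOutputStream();

        SlowChannel(int limit) {
            this.limit = limit;
        }

        @Override
        public int write(ByteBuffer src) {
            int n = Math.min(limit, src.remaining());
            for (int i = 0; i < n; i++) {
                sink.write(src.get());
            }
            return n;
        }

        @Override
        public boolean isOpen() {
            return true;
        }

        @Override
        public void close() {
        }
    }

    private final Deque<ByteBuffer> responseQueue = new ArrayDeque<>();

    void enqueue(byte[] response) {
        responseQueue.addLast(ByteBuffer.wrap(response));
    }

    // Sends the first queued response as far as the channel allows.
    // Returns true when nothing is left to send on this channel.
    boolean processResponse(WritableByteChannel channel) throws java.io.IOException {
        ByteBuffer head = responseQueue.pollFirst();
        if (head == null) {
            return true; // no more data for this channel
        }
        channel.write(head);
        if (head.hasRemaining()) {
            // Could not write everything: put it back at the front so the
            // remaining bytes go out first on the next attempt.
            responseQueue.addFirst(head);
            return false;
        }
        return responseQueue.isEmpty();
    }
}
```

Because the ByteBuffer remembers its position, putting the same buffer back at the head of the queue is enough to resume exactly where the previous write stopped, which is also why processResponse() re-inserts the call with addFirst().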