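Setting up a three-node cluster

Ways to create a three-node Swarm cluster

- Play with Docker (https://labs.play-with-docker.com/): quick and convenient, but the environment is not persistent and is reset after 4 hours.

- Linux virtual machines created locally with virtualization software: stable and convenient, but they consume system resources, so the host should have at least 8 GB of RAM.

- Cloud instances from Amazon, Google, Microsoft Azure, Alibaba Cloud, Tencent Cloud, and so on: the drawback is that they cost money (although some cloud services offer a free trial).

A multi-node environment requires the machines to talk to each other, so firewalls and network security groups must be taken into account, especially when using cloud instances. Remember to open the following ports in both the firewall and the security group: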

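- TCP port 2376 (secure Docker client communication, needed by Docker Machine)

- TCP port 2377 (cluster management traffic)

- TCP and UDP port 7946 (communication among nodes)

- UDP port 4789 (overlay network traffic, VXLAN)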

To keep things simple, it is enough to allow the nodes to reach each other freely on all of the ports above.
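As a rough sketch of how the ports above could be opened, assume the nodes use firewalld (common on CentOS; Ubuntu hosts usually use ufw instead, with commands such as `ufw allow 2377/tcp`). The function name `swarm_firewall_cmds` is made up for this example, and it only prints the commands so they can be reviewed before being run as root.

```shell
# Print the firewalld commands that open the Swarm ports listed above.
# Nothing is executed here; the output is meant to be reviewed first.
swarm_firewall_cmds() {
    for port in 2376/tcp 2377/tcp 7946/tcp 7946/udp 4789/udp; do
        echo "firewall-cmd --permanent --add-port=$port"
    done
    # Reload so that the permanent rules become active.
    echo "firewall-cmd --reload"
}
```

Running `swarm_firewall_cmds | sudo sh` on each node would apply the rules. On cloud instances the security group still has to be configured separately in the provider's console.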

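Please build the three-node cluster in whatever way you are most comfortable with. If you are familiar with Vagrant and VirtualBox, you can use the method from this course; if you are not but would like to learn, see the videos on Bilibili and YouTube.

Vagrant + VirtualBox

Download and install VirtualBox: https://www.virtualbox.org/

Download and install Vagrant: https://www.vagrantup.com/

Vagrant introductory video series: https://space.bilibili.com/364122352/channel/seriesdetail?sid=1734443

Vagrant files for this section

CentOS version of the Vagrant files: https://dockertips.readthedocs.io/en/latest/_downloads/01803e0f19cfb47a524c08f4ed905771/vagrant-setup.zip

Ubuntu version of the Vagrant files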

Basic Vagrant operations:

- Start the virtual machines: vagrant up

- Stop the virtual machines: vagrant halt

- Delete the virtual machines: vagrant destroy

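Error when initializing the manager node

Initializing the manager node of the Docker cluster fails with:

    $ docker swarm init
    Error response from daemon: could not choose an IP address to advertise since this system has multiple addresses on different interfaces (192.168.0.27 on eth0 and 172.18.0.87 on eth1) - specify one with --advertise-addr

This error occurs when the machine has more than one network interface: Docker cannot tell which IP address it should advertise to the other nodes. The fix is simple: append the flag --advertise-addr <IP address> to the command, for example:

    docker-machine ssh myvm1 docker swarm init --advertise-addr 192.168.99.100

That resolves the problem.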
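Instead of typing the address by hand, it can be looked up from the interface. The following is a minimal sketch that assumes the `ip` tool from iproute2 is installed; the helper names `iface_ipv4` and `swarm_init_on` are invented for this example.

```shell
# Print the first IPv4 address configured on the given interface.
iface_ipv4() {
    ip -4 -o addr show dev "$1" | awk '{print $4}' | cut -d/ -f1 | head -n 1
}

# Initialise a swarm, advertising the address of the chosen interface.
swarm_init_on() {
    addr="$(iface_ipv4 "$1")"
    if [ -z "$addr" ]; then
        echo "no IPv4 address found on $1" >&2
        return 1
    fi
    docker swarm init --advertise-addr "$addr"
}
```

For the error shown above, `swarm_init_on eth0` would advertise 192.168.0.27. The `docker swarm init` reference also documents that `--advertise-addr` accepts an interface name directly, so `docker swarm init --advertise-addr eth0` should have the same effect.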

Adding nodes

192.168.0.28: manager node

    $ docker swarm init --advertise-addr 192.168.0.28
    Swarm initialized: current node (cthj34wxr7j2eg2gv8bwxv1ff) is now a manager.

    To add a worker to this swarm, run the following command:

        docker swarm join --token SWMTKN-1-3wrw7aibkg34nxw7plpf78sxty17j5uioasjo6neg41wf6gdjr-a7bqdfzh56f8suieomn53al5i 192.168.0.28:2377

    To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

192.168.0.27: worker node

    [node2] (local) root@192.168.0.27 ~
    $ docker swarm join --token SWMTKN-1-3wrw7aibkg34nxw7plpf78sxty17j5uioasjo6neg41wf6gdjr-a7bqdfzh56f8suieomn53al5i 192.168.0.28:2377
    This node joined a swarm as a worker.

192.168.0.26: worker node

    [node3] (local) root@192.168.0.26 ~
    $ docker swarm join --token SWMTKN-1-3wrw7aibkg34nxw7plpf78sxty17j5uioasjo6neg41wf6gdjr-a7bqdfzh56f8suieomn53al5i 192.168.0.28:2377
    This node joined a swarm as a worker.

Listing the nodes on the manager shows that all three have been added.

    [node1] (local) root@192.168.0.28 ~
    $ docker node ls
    ID                            HOSTNAME   STATUS    AVAILABILITY   MANAGER STATUS   ENGINE VERSION
    cthj34wxr7j2eg2gv8bwxv1ff *   node1      Ready     Active         Leader           20.10.0
    7gb1bwx68tjw6qtvb4nd3kv9m     node2      Ready     Active                          20.10.0
    903a2d81kqjrly3ldqex4qima     node3      Ready     Active                          20.10.0
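If the join command printed by `docker swarm init` has scrolled away, it can be rebuilt on the manager. This sketch uses `docker swarm join-token -q`, which prints only the token; the function name `swarm_join_cmd` and its argument order are assumptions made for the example.

```shell
# Print the full `docker swarm join` command for a role (worker or manager).
# Must be run on a manager node.
swarm_join_cmd() {
    role="${1:-worker}"
    manager_addr="$2"    # for example 192.168.0.28:2377
    token="$(docker swarm join-token -q "$role")" || return 1
    echo "docker swarm join --token $token $manager_addr"
}
```

For example, `swarm_join_cmd worker 192.168.0.28:2377` prints a command that can be pasted on each worker node.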

Trying out services on the cluster

    $ docker service create --name web nginx
    hwteuq05xdeggyve8bb1gltqu
    overall progress: 1 out of 1 tasks
    1/1: running   [==================================================>]
    verify: Service converged
    $ docker service ps web
    ID             NAME      IMAGE          NODE      DESIRED STATE   CURRENT STATE            ERROR     PORTS
    fovbjyzwhijm   web.1     nginx:latest   node2     Running         Running 30 seconds ago

The task was created on node2 (192.168.0.27):

    $ docker ps -a
    CONTAINER ID   IMAGE          COMMAND                  CREATED              STATUS              PORTS     NAMES
    fbea63b73275   nginx:latest   "/docker-entrypoint.…"   About a minute ago   Up About a minute   80/tcp    web.1.fovbjyzwhijmbj51qi36j4g21

Scaling to 3 replicas

    $ docker service update web --replicas 3
    web
    overall progress: 3 out of 3 tasks
    1/3: running   [==================================================>]
    2/3: running   [==================================================>]
    3/3: running   [==================================================>]
    verify: Service converged
    [node1] (local) root@192.168.0.28 ~
    $ docker service ps web
    ID             NAME      IMAGE          NODE      DESIRED STATE   CURRENT STATE            ERROR     PORTS
    fovbjyzwhijm   web.1     nginx:latest   node2     Running         Running 4 minutes ago
    yxyjt8i6ozix   web.2     nginx:latest   node1     Running         Running 27 seconds ago
    4gmtiqy9ho33   web.3     nginx:latest   node3     Running         Running 27 seconds ago

As you can see, each of the three nodes now runs one replica of the service.

Simulating a failure

Force-remove the service's container on node2 (192.168.0.27):

    $ docker ps
    CONTAINER ID   IMAGE          COMMAND                  CREATED         STATUS         PORTS     NAMES
    fbea63b73275   nginx:latest   "/docker-entrypoint.…"   6 minutes ago   Up 6 minutes   80/tcp    web.1.fovbjyzwhijmbj51qi36j4g21
    [node2] (local) root@192.168.0.27 ~
    $ docker container rm -f fbea63b73275
    fbea63b73275

Check from the manager node:

    $ docker service ps web
    ID             NAME        IMAGE          NODE      DESIRED STATE   CURRENT STATE            ERROR                         PORTS
    ii0o24kiysdq   web.1       nginx:latest   node2     Running         Running 37 seconds ago
    fovbjyzwhijm    \_ web.1   nginx:latest   node2     Shutdown        Failed 43 seconds ago    "task: non-zero exit (137)"
    yxyjt8i6ozix   web.2       nginx:latest   node1     Running         Running 2 minutes ago
    4gmtiqy9ho33   web.3       nginx:latest   node3     Running         Running 2 minutes ago

The task on node2 whose container was force-removed is now marked as failed, and Swarm has started a replacement task on node2.

Note: the replacement is not necessarily created on the node where the failure happened. It may be placed on another node, depending on Swarm's scheduling.
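To watch this recovery without reading the full table, the task list can be trimmed with the `--format` and `--filter` options of `docker service ps`. The helper names below are invented for this sketch.

```shell
# List the tasks of a service as "name node state", one per line.
service_tasks() {
    docker service ps --format '{{.Name}} {{.Node}} {{.CurrentState}}' "$1"
}

# Count the tasks that Swarm currently wants to be running.
running_task_count() {
    docker service ps --filter desired-state=running -q "$1" | wc -l | tr -d ' '
}
```

Run on a manager, `running_task_count web` should return to the configured replica count shortly after a container is removed, and `service_tasks web` shows on which node the replacement landed.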

docker service scale

scale: Scale one or multiple replicated services

Add a fourth container:

    $ docker service scale web=4
    web scaled to 4
    overall progress: 4 out of 4 tasks
    1/4: running   [==================================================>]
    2/4: running   [==================================================>]
    3/4: running   [==================================================>]
    4/4: running   [==================================================>]
    verify: Service converged

docker service logs

Shows the logs of a service; each line is prefixed with the task and node it came from.

docker service logs -f web keeps following the logs and prints them to the screen.
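A busy service can produce a lot of history, so it often helps to limit how far back the output starts. This sketch combines the `--follow`, `--timestamps` and `--tail` options of `docker service logs`; the wrapper name `service_logs_tail` and the default of 20 lines are arbitrary choices for the example.

```shell
# Follow a service's logs, starting from the last N lines (default 20),
# with a timestamp on every line.
service_logs_tail() {
    docker service logs --follow --timestamps --tail "${2:-20}" "$1"
}
```

For example, `service_logs_tail web 50` starts from the last 50 lines of the web service and then keeps streaming until interrupted with Ctrl-C.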

Swarm overlay networking in detail

The network list includes an overlay network named ingress:

    $ docker network ls
    NETWORK ID     NAME              DRIVER    SCOPE
    aba37840837a   bridge            bridge    local
    20a5dcd30faa   docker_gwbridge   bridge    local
    97d548bf9e22   host              host      local
    yta4shlonx9v   ingress           overlay   swarm
    4644b3cc4c43   none              null      local

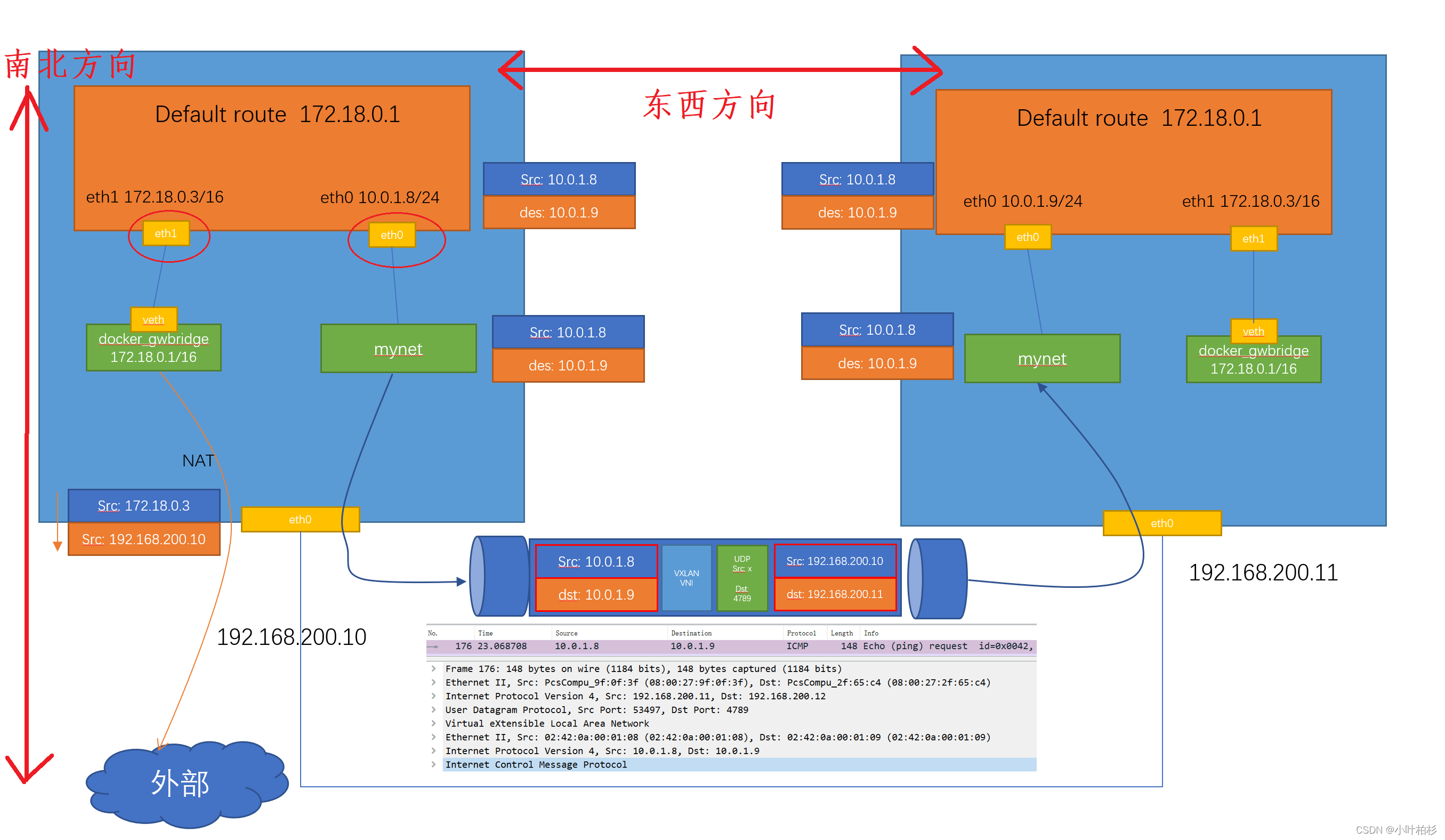

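To understand Swarm networking, I think two points matter most:

- First, how clients outside the cluster reach services running inside the Swarm cluster. This is inbound traffic, and Swarm handles it with ingress.

- Second, how services deployed in the Swarm cluster communicate with other endpoints. This splits into two parts:

  - East-west traffic: how containers on different Swarm nodes talk to each other. Swarm handles this with overlay networks.

  - North-south traffic: how containers in the Swarm cluster reach the outside, such as the Internet. This is handled by a Linux bridge plus iptables NAT.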
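To make the inbound case concrete, a service can publish a port through the ingress network when it is created. The sketch below uses the long form of `--publish`; the function name `publish_service`, the service name web2 and port 8080 used in the usage note are all made up for illustration.

```shell
# Create a service and publish a port through the ingress routing mesh.
# Arguments: service name, image, published port, target (container) port.
publish_service() {
    name="$1"; image="$2"; published="$3"; target="$4"
    docker service create --name "$name" \
        --publish "published=$published,target=$target" \
        "$image"
}
```

After `publish_service web2 nginx 8080 80`, a request such as `curl http://192.168.0.27:8080` should be answered even if no task of the service runs on that particular node, because the routing mesh forwards it to a node that does.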

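Creating an overlay network

    $ docker network create -d overlay mynet
    4f05pvu8zzj36c2fwu0208wa4
    $ docker network ls
    NETWORK ID     NAME              DRIVER    SCOPE
    aba37840837a   bridge            bridge    local
    20a5dcd30faa   docker_gwbridge   bridge    local
    97d548bf9e22   host              host      local
    yta4shlonx9v   ingress           overlay   swarm
    4f05pvu8zzj3   mynet             overlay   swarm
    4644b3cc4c43   none              null      local

The network has swarm scope, so it is available across the whole cluster. Manager nodes list it immediately, while a worker node typically lists it only once a task attached to it is scheduled there.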
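Running `docker network create` a second time with the same name fails, so setup scripts usually check first. A minimal sketch, with the invented helper name `ensure_overlay`:

```shell
# Create an overlay network only if no network with that name exists yet.
ensure_overlay() {
    if docker network inspect "$1" >/dev/null 2>&1; then
        echo "network $1 already exists"
    else
        docker network create -d overlay "$1"
    fi
}
```

`ensure_overlay mynet` can then be run repeatedly on a manager node without causing an error.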

Creating a service

Create a service named test with 2 replicas, attached to this overlay network:

    vagrant@swarm-manager:~$ docker service create --network mynet --name test --replicas 2 busybox ping 8.8.8.8
    vagrant@swarm-manager:~$ docker service ps test
    ID             NAME      IMAGE            NODE            DESIRED STATE   CURRENT STATE            ERROR     PORTS
    yf5uqm1kzx6d   test.1    busybox:latest   swarm-worker1   Running         Running 18 seconds ago
    3tmp4cdqfs8a   test.2    busybox:latest   swarm-worker2   Running         Running 18 seconds ago

The two containers were created on worker1 and worker2 respectively.

Inspecting the network

On worker1 and worker2, look at how each container is connected to the network:

    vagrant@swarm-worker1:~$ docker container ls
    CONTAINER ID   IMAGE            COMMAND          CREATED      STATUS      PORTS     NAMES
    cac4be28ced7   busybox:latest   "ping 8.8.8.8"   2 days ago   Up 2 days             test.1.yf5uqm1kzx6dbt7n26e4akhsu
    vagrant@swarm-worker1:~$ docker container exec -it cac sh
    / # ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    24: eth0@if25: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue
        link/ether 02:42:0a:00:01:08 brd ff:ff:ff:ff:ff:ff
        inet 10.0.1.8/24 brd 10.0.1.255 scope global eth0
           valid_lft forever preferred_lft forever
    26: eth1@if27: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
        link/ether 02:42:ac:12:00:03 brd ff:ff:ff:ff:ff:ff
        inet 172.18.0.3/16 brd 172.18.255.255 scope global eth1
           valid_lft forever preferred_lft forever

The container has two interfaces, eth0 and eth1: eth0 is attached to the mynet network, and eth1 is attached to the docker_gwbridge network.

    vagrant@swarm-worker1:~$ docker network ls
    NETWORK ID     NAME              DRIVER    SCOPE
    a631a4e0b63c   bridge            bridge    local
    56945463a582   docker_gwbridge   bridge    local
    9bdfcae84f94   host              host      local
    14fy2l7a4mci   ingress           overlay   swarm
    lpirdge00y3j   mynet             overlay   swarm
    c1837f1284f8   none              null      local

From inside this container, the IP of the container on worker2, 10.0.1.9, can be pinged directly.
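The connectivity check can also be run from the host in one step, without opening a shell in the container first. In this sketch the wrapper name `ping_from_container` is hypothetical; the container ID prefix cac and the address 10.0.1.9 in the usage note are the ones shown above.

```shell
# Ping a target address from inside a running container.
# Arguments: container ID or name, target IP, packet count (default 3).
ping_from_container() {
    docker container exec "$1" ping -c "${3:-3}" "$2"
}
```

On worker1, `ping_from_container cac 10.0.1.9` sends three echo requests across the mynet overlay to the container on worker2.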

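Walking through the architecture diagram

North-south: mainly used to reach external networks. Traffic leaves through the container's eth1 interface, crosses a veth pair to the docker_gwbridge network, and NAT translates the container address into the host address before the traffic goes out to the external network.

East-west: used for traffic between containers on different nodes of the cluster. The container on host 192.168.200.10 sends through eth0 into the overlay network mynet. The original frame is given a VXLAN header and encapsulated in a packet whose source address is 192.168.200.10 and whose destination address is 192.168.200.11. The packet travels through this tunnel to the target machine, where the mynet overlay network decapsulates it and delivers it to the container on 192.168.200.11.

Between the two nodes, the container-level path 10.0.1.8 -> 10.0.1.9 becomes, after encapsulation, the host-level path 192.168.200.10 -> 192.168.200.11.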
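The encapsulation also explains why eth0 in the container reports an MTU of 1450 while eth1 reports 1500: every overlay packet carries the extra VXLAN headers. A small sketch of the arithmetic, assuming an IPv4 underlay (the function name `overlay_mtu` is made up):

```shell
# Overlay MTU = underlay MTU minus the VXLAN encapsulation overhead on IPv4:
# outer IP header (20) + UDP header (8) + VXLAN header (8)
# + inner Ethernet header (14) = 50 bytes.
overlay_mtu() {
    echo $(( $1 - 20 - 8 - 8 - 14 ))
}
```

`overlay_mtu 1500` prints 1450, matching the `ip a` output. To see the encapsulated packets themselves, a capture such as `tcpdump -ni eth1 udp port 4789` on one of the hosts should show traffic between 192.168.200.10 and 192.168.200.11; the interface name eth1 is an assumption about the Vagrant setup.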