References

https://blog.csdn.net/alex_yangchuansheng/article/details/106435514

https://blog.csdn.net/alex_yangchuansheng/article/details/106580906

Server plan

base 10.83.15.10 base (infrastructure) node

bootstrap 10.83.15.31 bootstrap node; install this one first, then install the rest of the cluster

master1 10.83.15.32

master2 10.83.15.33

master3 10.83.15.34

worker1 10.83.15.35

worker2 10.83.15.36

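Install Harbor

This part is fiddly. Harbor must be served over HTTPS, otherwise mirroring the OpenShift images into the Harbor registry later will fail.

Install oc

Download the oc client from https://mirror.openshift.com/pub/openshift-v4/clients/ocp/4.4.5/openshift-client-linux-4.4.5.tar.gz and install it:

```
tar -xvf openshift-client-linux-4.4.5.tar.gz
cp oc /usr/local/bin/
```

Check the release information:

```
oc adm release info quay.io/openshift-release-dev/ocp-release:4.4.5-x86_64
```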

Install DNS

DNS is provided by etcd + CoreDNS.

```
[root@base ~]# yum install -y etcd
[root@base ~]# systemctl enable etcd --now
[root@base ~]# wget https://github.com/coredns/coredns/releases/download/v1.6.9/coredns_1.6.9_linux_amd64.tgz
[root@base ~]# tar zxvf coredns_1.6.9_linux_amd64.tgz
[root@base ~]# mv coredns /usr/local/bin
```

Create the systemd unit file. The heredoc delimiter is quoted ('EOF') so that the shell does not expand $MAINPID while writing the file:

```
[root@base ~]# cat > /etc/systemd/system/coredns.service <<'EOF'
[Unit]
Description=CoreDNS DNS server
Documentation=https://coredns.io
After=network.target

[Service]
PermissionsStartOnly=true
LimitNOFILE=1048576
LimitNPROC=512
CapabilityBoundingSet=CAP_NET_BIND_SERVICE
AmbientCapabilities=CAP_NET_BIND_SERVICE
NoNewPrivileges=true
User=coredns
WorkingDirectory=~
ExecStart=/usr/local/bin/coredns -conf=/etc/coredns/Corefile
ExecReload=/bin/kill -SIGUSR1 $MAINPID
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
```

Create the coredns user:

```
[root@base ~]# useradd coredns -s /sbin/nologin
```

Create the CoreDNS configuration file:

```
[root@base ~]# mkdir -p /etc/coredns
[root@base ~]# cat > /etc/coredns/Corefile <<'EOF'
.:53 {
    template IN A apps.ocp.example.com {
        match .*apps.ocp.example.com
        answer "{{ .Name }} 60 IN A 10.83.15.30"
        fallthrough
    }
    etcd {
        path /skydns
        endpoint http://localhost:2379
        fallthrough
    }
    cache 160
    loadbalance
    log
}
EOF
```

Start CoreDNS:

```
[root@base ~]# systemctl daemon-reload
[root@base ~]# systemctl enable coredns --now
```

Verify the wildcard record:

```
[root@base ~]# dig +short apps.ocp.example.com @127.0.0.1
10.83.15.30
[root@base ~]# dig +short xx.apps.ocp.example.com @127.0.0.1
10.83.15.30
```

Add the remaining DNS records:

```
alias etcdctlv3='ETCDCTL_API=3 etcdctl'
etcdctlv3 put /skydns/com/example/ocp/api '{"host":"10.83.15.30","ttl":60}'
etcdctlv3 put /skydns/com/example/ocp/api-int '{"host":"10.83.15.30","ttl":60}'
etcdctlv3 put /skydns/com/example/ocp/etcd-0 '{"host":"10.83.15.32","ttl":60}'
etcdctlv3 put /skydns/com/example/ocp/etcd-1 '{"host":"10.83.15.33","ttl":60}'
etcdctlv3 put /skydns/com/example/ocp/etcd-2 '{"host":"10.83.15.34","ttl":60}'
etcdctlv3 put /skydns/com/example/ocp/_tcp/_etcd-server-ssl/x1 '{"host":"etcd-0.ocp.example.com","ttl":60,"priority":0,"weight":10,"port":2380}'
etcdctlv3 put /skydns/com/example/ocp/_tcp/_etcd-server-ssl/x2 '{"host":"etcd-1.ocp.example.com","ttl":60,"priority":0,"weight":10,"port":2380}'
etcdctlv3 put /skydns/com/example/ocp/_tcp/_etcd-server-ssl/x3 '{"host":"etcd-2.ocp.example.com","ttl":60,"priority":0,"weight":10,"port":2380}'
# in addition, add a hostname record for every node
etcdctlv3 put /skydns/com/example/ocp/bootstrap '{"host":"10.83.15.31","ttl":60}'
etcdctlv3 put /skydns/com/example/ocp/master1 '{"host":"10.83.15.32","ttl":60}'
etcdctlv3 put /skydns/com/example/ocp/master2 '{"host":"10.83.15.33","ttl":60}'
etcdctlv3 put /skydns/com/example/ocp/master3 '{"host":"10.83.15.34","ttl":60}'
etcdctlv3 put /skydns/com/example/ocp/worker1 '{"host":"10.83.15.35","ttl":60}'
etcdctlv3 put /skydns/com/example/ocp/worker2 '{"host":"10.83.15.36","ttl":60}'
etcdctlv3 put /skydns/com/example/ocp/registry '{"host":"10.83.15.30","ttl":60}'
etcdctlv3 put /skydns/cn/com/ky-tech/ocp4/registry '{"host":"10.83.15.10","ttl":60}'
```

Verify resolution:

```
[root@base coredns]# dig +short api.ocp.example.com @127.0.0.1
10.83.15.30
[root@base coredns]# dig +short api-int.ocp.example.com @127.0.0.1
10.83.15.30
[root@base coredns]# dig +short bootstrap.ocp.example.com @127.0.0.1
10.83.15.31
[root@base coredns]# dig +short master1.ocp.example.com @127.0.0.1
10.83.15.32
[root@base coredns]# dig +short master2.ocp.example.com @127.0.0.1
10.83.15.33
[root@base coredns]# dig +short master3.ocp.example.com @127.0.0.1
10.83.15.34
[root@base coredns]# dig +short worker1.ocp.example.com @127.0.0.1
10.83.15.35
[root@base coredns]# dig +short worker2.ocp.example.com @127.0.0.1
10.83.15.36
[root@base coredns]# dig +short -t SRV _etcd-server-ssl._tcp.ocp.example.com @127.0.0.1
10 33 2380 etcd-0.ocp.example.com.
10 33 2380 etcd-1.ocp.example.com.
10 33 2380 etcd-2.ocp.example.com.
```
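CoreDNS's etcd plugin, configured above with `path /skydns`, stores each name under that prefix with the name's labels in reverse order. That is why `api.ocp.example.com` lives at `/skydns/com/example/ocp/api`. The following is a minimal sketch of that mapping. It assumes etcd v3 on localhost, as above, and `skydns_key` and `add_a_record` are hypothetical helper names, not part of CoreDNS or etcd:

```shell
#!/bin/sh
# Hypothetical helpers: map a DNS name to the etcd key read by the CoreDNS
# etcd plugin (path /skydns). Labels are reversed and joined with "/".

# skydns_key NAME  -> prints the etcd key for NAME (a trailing dot is ignored)
skydns_key() {
    printf '%s\n' "${1%.}" | awk -F'[.]' '{
        key = "/skydns"
        for (i = NF; i >= 1; i--) key = key "/" $i
        print key
    }'
}

# add_a_record NAME IP [TTL]  -> writes an A record (assumes etcd on localhost:2379)
add_a_record() {
    ETCDCTL_API=3 etcdctl put "$(skydns_key "$1")" \
        "{\"host\":\"$2\",\"ttl\":${3:-60}}"
}
```

For example, `add_a_record worker3.ocp.example.com 10.83.15.37` would add a record for a hypothetical third worker. When one SRV name has several targets, the records above give each target a unique leaf (`x1`, `x2`, `x3`) under the same key.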

Install the load balancer

```
[root@base ~]# yum -y install haproxy
[root@base ~]# vim /etc/haproxy/haproxy.cfg
```

```
#---------------------------------------------------------------------
# Example configuration for a possible web application.  See the
# full configuration options online.
#
#   http://haproxy.1wt.eu/download/1.4/doc/configuration.txt
#
#---------------------------------------------------------------------

#---------------------------------------------------------------------
# Global settings
#---------------------------------------------------------------------
global
    # to have these messages end up in /var/log/haproxy.log you will
    # need to:
    #
    # 1) configure syslog to accept network log events.  This is done
    #    by adding the '-r' option to the SYSLOGD_OPTIONS in
    #    /etc/sysconfig/syslog
    #
    # 2) configure local2 events to go to the /var/log/haproxy.log
    #   file. A line like the following can be added to
    #   /etc/sysconfig/syslog
    #
    #    local2.*                       /var/log/haproxy.log
    #
    log         127.0.0.1 local2

#    chroot      /var/lib/haproxy
    pidfile     /var/run/haproxy.pid
    maxconn     4000
    user        haproxy
    group       haproxy
    daemon

    # turn on stats unix socket
    stats socket /var/lib/haproxy/stats

#---------------------------------------------------------------------
# common defaults that all the 'listen' and 'backend' sections will
# use if not designated in their block
#---------------------------------------------------------------------
defaults
    mode                    http
    log                     global
    option                  httplog
    option                  dontlognull
    option http-server-close
    option forwardfor       except 127.0.0.0/8
    option                  redispatch
    retries                 3
    timeout http-request    10s
    timeout queue           1m
    timeout connect         10s
    timeout client          1m
    timeout server          1m
    timeout http-keep-alive 10s
    timeout check           10s
    maxconn                 3000

#---------------------------------------------------------------------
# main frontend which proxys to the backends
#---------------------------------------------------------------------
listen admin_stats
    stats   enable
    bind    *:8088
    mode    http
    option  httplog
    log     global
    maxconn 10
    stats   refresh 30s
    stats   uri /
    stats   realm haproxy
    stats   auth admin:admin
    stats   hide-version
    stats   admin if TRUE

frontend openshift-api-server6443
    bind 10.83.15.30:6443
    default_backend openshift-api-server6443
    mode tcp
    option tcplog

backend openshift-api-server6443
    balance source
    mode tcp
    server bootstrap 10.83.15.31:6443 check
    server master1 10.83.15.32:6443 check
    server master2 10.83.15.33:6443 check
    server master3 10.83.15.34:6443 check

frontend machine-config-server22623
    bind 10.83.15.30:22623
    default_backend machine-config-server22623
    mode tcp
    option tcplog

backend machine-config-server22623
    mode tcp
    server bootstrap 10.83.15.31:22623 check
    server master1 10.83.15.32:22623 check
    server master2 10.83.15.33:22623 check
    server master3 10.83.15.34:22623 check

frontend ingress-http80
    bind :80
    default_backend ingress-http80
    mode tcp
    option tcplog

backend ingress-http80
    mode tcp
    server work1 10.83.15.35:80 check
    server work2 10.83.15.36:80 check

frontend ingress-https443
    bind :443
    default_backend ingress-https443
    mode tcp
    option tcplog

backend ingress-https443
    mode tcp
    server work1 10.83.15.35:443 check
    server work2 10.83.15.36:443 check
```

```
[root@base ~]# systemctl start haproxy
[root@base ~]# systemctl enable haproxy
```
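The four frontend/backend pairs above share one TCP pass-through pattern. They differ only in name, bind address, port and server list. A hypothetical generator can emit such a pair (`haproxy_tcp_pair` is an illustrative name). Note that this sketch sets `balance source` on every backend, while the config above sets it only on the API backend:

```shell
#!/bin/sh
# haproxy_tcp_pair NAME BIND_ADDR PORT SERVER=IP [SERVER=IP ...]
# Prints a TCP frontend/backend pair named NAME<PORT>.
# An empty BIND_ADDR binds all addresses (":PORT").
haproxy_tcp_pair() {
    name=$1; bind=$2; port=$3
    shift 3
    printf 'frontend %s%s\n' "$name" "$port"
    printf '    bind %s:%s\n' "$bind" "$port"
    printf '    default_backend %s%s\n' "$name" "$port"
    printf '    mode tcp\n    option tcplog\n'
    printf 'backend %s%s\n' "$name" "$port"
    printf '    balance source\n    mode tcp\n'
    for s in "$@"; do
        # "master1=10.83.15.32" -> server name before "=", address after it
        printf '    server %s %s:%s check\n' "${s%%=*}" "${s#*=}" "$port"
    done
}
```

For example, `haproxy_tcp_pair machine-config-server 10.83.15.30 22623 bootstrap=10.83.15.31 master1=10.83.15.32 master2=10.83.15.33 master3=10.83.15.34 >> /etc/haproxy/haproxy.cfg` appends one pair. Afterwards, `haproxy -c -f /etc/haproxy/haproxy.cfg` checks the syntax before you restart HAProxy.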

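Download the pull secret

https://console.redhat.com/openshift/install/pull-secret

OpenShift images are pulled from Red Hat's registries. The pull secret is the credential file that authorizes those pulls. You need to register a Red Hat account first. Fill in the account details truthfully, especially the phone number: Red Hat validates them, and an account with bad information can be disabled, after which the pull secret cannot be downloaded.

Convert the downloaded txt file to JSON. If the jq command is missing, install it from the EPEL repository.

```
[root@base ~]# cat ./pull-secret.txt | jq . > pull-secret.json
[root@base ~]# cat pull-secret.json
{
  "auths": {
    "cloud.openshift.com": {
      "auth": "b3BlbnNo....",
      "email": "15...@163.com"
    },
    .....
  }
}
```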

Base64-encode the Harbor user and password:

```
[root@base ~]# echo -n 'root:password' | base64 -w0
xxxxxxxxXNzd29yZA==
```

Then add an entry for the local registry inside `auths` in pull-secret.json. The key is the registry domain and port, `auth` is the base64 string above, and `email` can be any address:

```
[root@base ~]# cat pull-secret.json
{
  "auths": {
    "cloud.openshift.com": {
      "auth": "b3Blb......",
      "email": "xxxxx@163.com"
    },
    ......,
    "registry.ocp4.ky-tech.com.cn:8443": {
      "auth": "YW...........",
      "email": ".......@163.com"
    }
  }
}
```
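You can also build and merge the entry with two small functions instead of editing the JSON by hand. This is a sketch and the function names are hypothetical. `registry_auth` reproduces the `echo -n 'user:pass' | base64 -w0` step portably, and the merge function assumes `jq` is installed:

```shell
#!/bin/sh
# registry_auth USER PASSWORD -> base64("USER:PASSWORD") on one line,
# equivalent to: echo -n 'USER:PASSWORD' | base64 -w0
registry_auth() {
    printf '%s:%s' "$1" "$2" | base64 | tr -d '\n'
}

# add_registry_to_pull_secret IN_JSON REGISTRY USER PASSWORD EMAIL
# Prints IN_JSON with .auths[REGISTRY] set (requires jq).
add_registry_to_pull_secret() {
    jq --arg r "$2" --arg a "$(registry_auth "$3" "$4")" --arg e "$5" \
        '.auths[$r] = {auth: $a, email: $e}' "$1"
}
```

For example, `add_registry_to_pull_secret pull-secret.json registry.ocp4.ky-tech.com.cn:8443 root password you@example.com > pull-secret.new.json` writes a merged copy and leaves the original file untouched.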

Set the environment variables

```
export OCP_RELEASE="4.4.5-x86_64"
export LOCAL_REGISTRY='registry.ocp4.ky-tech.com.cn:8443'
export LOCAL_REPOSITORY='ocp/openshift4'
export PRODUCT_REPO='openshift-release-dev'
export LOCAL_SECRET_JSON='/root/pull-secret.json'
export RELEASE_NAME="ocp-release"
```

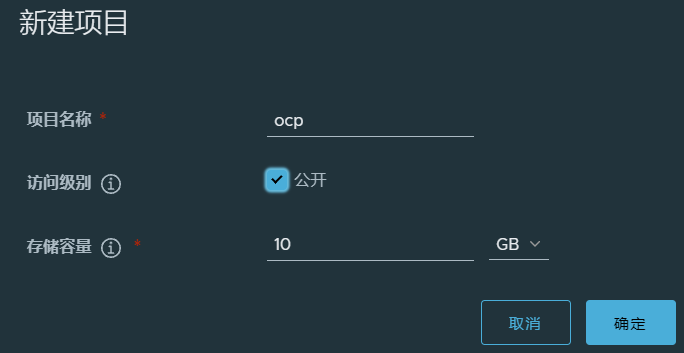

First create a project named "ocp" in Harbor.

Mirror the images, i.e. copy the release images from the official quay.io registry into the local registry (about 5 GB):

```
oc adm -a ${LOCAL_SECRET_JSON} release mirror \
  --from=quay.io/${PRODUCT_REPO}/${RELEASE_NAME}:${OCP_RELEASE} \
  --to=${LOCAL_REGISTRY}/${LOCAL_REPOSITORY} \
  --to-release-image=${LOCAL_REGISTRY}/${LOCAL_REPOSITORY}:${OCP_RELEASE}
```
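To see which image references the mirror command builds from the exported variables, and to stop early if one of them is unset, you can run a hypothetical helper like this one first:

```shell
#!/bin/sh
# print_mirror_refs: echoes the references used by "oc adm release mirror" above.
# ${VAR:?} aborts with an error if a variable is unset or empty.
print_mirror_refs() {
    src="quay.io/${PRODUCT_REPO:?}/${RELEASE_NAME:?}:${OCP_RELEASE:?}"
    dst="${LOCAL_REGISTRY:?}/${LOCAL_REPOSITORY:?}"
    printf 'from:    %s\n' "$src"
    printf 'to:      %s\n' "$dst"
    printf 'release: %s:%s\n' "$dst" "$OCP_RELEASE"
}
```

With the values above, the source is `quay.io/openshift-release-dev/ocp-release:4.4.5-x86_64` and the release image is `registry.ocp4.ky-tech.com.cn:8443/ocp/openshift4:4.4.5-x86_64`. The component images land in the same repository as `4.4.5-<component>` tags, as the tag listing below shows.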

Note: when mirroring finishes, the command prints the mirror source configuration. Save it, because the cluster's install YAML uses it later:

```
imageContentSources:
- mirrors:
  - registry.ocp4.ky-tech.com.cn:8443/ocp/openshift4
  source: quay.io/openshift-release-dev/ocp-release
- mirrors:
  - registry.ocp4.ky-tech.com.cn:8443/ocp/openshift4
  source: quay.io/openshift-release-dev/ocp-v4.0-art-dev
```

Once Harbor holds the images, you can list all tags through the tags/list API. A long list of tags means mirroring worked:

```
[root@base ~]# curl -s -u root:password -k https://registry.ocp4.ky-tech.com.cn:8443/v2/ocp/openshift4/tags/list | jq .
{
  "name": "ocp/openshift4",
  "tags": [
    "4.4.5-aws-machine-controllers",
    "4.4.5-azure-machine-controllers",
    "4.4.5-baremetal-installer",
    "4.4.5-baremetal-machine-controllers",
    "4.4.5-baremetal-operator",
    "4.4.5-baremetal-runtimecfg",
    "4.4.5-cli",
    "4.4.5-cli-artifacts",
    "4.4.5-cloud-credential-operator",
    "4.4.5-cluster-authentication-operator",
    "4.4.5-cluster-autoscaler",
    "4.4.5-cluster-autoscaler-operator",
    "4.4.5-cluster-bootstrap",
    "4.4.5-cluster-config-operator",
    "4.4.5-cluster-csi-snapshot-controller-operator",
    "4.4.5-cluster-dns-operator",
    "4.4.5-cluster-etcd-operator",
    "4.4.5-cluster-image-registry-operator",
    "4.4.5-cluster-ingress-operator",
    "4.4.5-cluster-kube-apiserver-operator",
    "4.4.5-cluster-kube-controller-manager-operator",
    "4.4.5-cluster-kube-scheduler-operator",
    "4.4.5-cluster-kube-storage-version-migrator-operator",
    "4.4.5-cluster-machine-approver",
    "4.4.5-cluster-monitoring-operator",
    "4.4.5-cluster-network-operator",
    "4.4.5-cluster-node-tuned",
    "4.4.5-cluster-node-tuning-operator",
    "4.4.5-cluster-openshift-apiserver-operator",
    "4.4.5-cluster-openshift-controller-manager-operator",
    "4.4.5-cluster-policy-controller",
    "4.4.5-cluster-samples-operator",
    "4.4.5-cluster-storage-operator",
    "4.4.5-cluster-svcat-apiserver-operator",
    "4.4.5-cluster-svcat-controller-manager-operator",
    "4.4.5-cluster-update-keys",
    "4.4.5-cluster-version-operator",
    "4.4.5-configmap-reloader",
    "4.4.5-console",
    "4.4.5-console-operator",
    "4.4.5-container-networking-plugins",
    "4.4.5-coredns",
    "4.4.5-csi-snapshot-controller",
    "4.4.5-deployer",
    "4.4.5-docker-builder",
    "4.4.5-docker-registry",
    "4.4.5-etcd",
    "4.4.5-gcp-machine-controllers",
    "4.4.5-grafana",
    "4.4.5-haproxy-router",
    "4.4.5-hyperkube",
    "4.4.5-insights-operator",
    "4.4.5-installer",
    "4.4.5-installer-artifacts",
    "4.4.5-ironic",
    "4.4.5-ironic-hardware-inventory-recorder",
    "4.4.5-ironic-inspector",
    "4.4.5-ironic-ipa-downloader",
    "4.4.5-ironic-machine-os-downloader",
    "4.4.5-ironic-static-ip-manager",
    "4.4.5-jenkins",
    "4.4.5-jenkins-agent-maven",
    "4.4.5-jenkins-agent-nodejs",
    "4.4.5-k8s-prometheus-adapter",
    "4.4.5-keepalived-ipfailover",
    "4.4.5-kube-client-agent",
    "4.4.5-kube-etcd-signer-server",
    "4.4.5-kube-proxy",
    "4.4.5-kube-rbac-proxy",
    "4.4.5-kube-state-metrics",
    "4.4.5-kube-storage-version-migrator",
    "4.4.5-kuryr-cni",
    "4.4.5-kuryr-controller",
    "4.4.5-libvirt-machine-controllers",
    "4.4.5-local-storage-static-provisioner",
    "4.4.5-machine-api-operator",
    "4.4.5-machine-config-operator",
    "4.4.5-machine-os-content",
    "4.4.5-mdns-publisher",
    "4.4.5-multus-admission-controller",
    "4.4.5-multus-cni",
    "4.4.5-multus-route-override-cni",
    "4.4.5-multus-whereabouts-ipam-cni",
    "4.4.5-must-gather",
    "4.4.5-oauth-proxy",
    "4.4.5-oauth-server",
    "4.4.5-openshift-apiserver",
    "4.4.5-openshift-controller-manager",
    "4.4.5-openshift-state-metrics",
    "4.4.5-openstack-machine-controllers",
    "4.4.5-operator-lifecycle-manager",
    "4.4.5-operator-marketplace",
    "4.4.5-operator-registry",
    "4.4.5-ovirt-machine-controllers",
    "4.4.5-ovn-kubernetes",
    "4.4.5-pod",
    "4.4.5-prom-label-proxy",
    "4.4.5-prometheus",
    "4.4.5-prometheus-alertmanager",
    "4.4.5-prometheus-config-reloader",
    "4.4.5-prometheus-node-exporter",
    "4.4.5-prometheus-operator",
    "4.4.5-sdn",
    "4.4.5-service-ca-operator",
    "4.4.5-service-catalog",
    "4.4.5-telemeter",
    "4.4.5-tests",
    "4.4.5-thanos",
    "4.4.5-x86_64"
  ]
}
```

Extract the openshift-install binary

To keep the versions consistent, extract the openshift-install binary from the mirrored release. Do not download it directly from https://mirror.openshift.com/pub/openshift-v4/clients/ocp/4.4.5, otherwise you will later run into sha256 mismatches.

```
# uses the variables exported above
oc adm release extract \
  -a ${LOCAL_SECRET_JSON} \
  --command=openshift-install \
  "${LOCAL_REGISTRY}/${LOCAL_REPOSITORY}:${OCP_RELEASE}"
[root@base ~]# cp openshift-install /usr/local/bin/
[root@base ~]# which openshift-install
/usr/local/bin/openshift-install
[root@base ~]# openshift-install version
openshift-install 4.4.5
built from commit 15eac3785998a5bc250c9f72101a4a9cb767e494
release image registry.ocp4.ky-tech.com.cn:8443/ocp/openshift4@sha256:4a461dc23a9d323c8bd7a8631bed078a9e5eec690ce073f78b645c83fb4cdf74
```
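The reason for extracting the installer from the mirror is that its pinned release image then points at the local registry. You can check the `release image` line of `openshift-install version` automatically. A small sketch; `check_release_image` is a hypothetical name:

```shell
#!/bin/sh
# check_release_image EXPECTED_REPO  (reads "openshift-install version" output on stdin)
# Succeeds only if the pinned release image is EXPECTED_REPO@sha256:...
check_release_image() {
    img=$(sed -n 's/^release image //p')
    case "$img" in
        "$1"@sha256:*) echo "ok: $img" ;;
        *) echo "unexpected release image: ${img:-<none>}" >&2; return 1 ;;
    esac
}
```

Usage: `openshift-install version | check_release_image "${LOCAL_REGISTRY}/${LOCAL_REPOSITORY}"`. A failure usually means the binary came from somewhere other than the mirrored release.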

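Configure the SSH key

The default RHCOS user is core. Here a core user is also created on the base node and used for the installation. Its SSH public key goes into the install YAML, and the matching private key is used to connect to the cluster nodes.

```
[root@base ~]# useradd core
[root@base ~]# su - core
[core@base ~]$ ssh-keygen -t rsa -b 4096 -N '' -f ~/.ssh/new_rsa   # create a key without a passphrase
[core@base ~]$ eval "$(ssh-agent -s)"                             # start ssh-agent in the background
[core@base ~]$ ssh-add ~/.ssh/new_rsa                             # add the private key to ssh-agent
```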

Prepare the cluster installation

Prepare the YAML

First create an installation directory to hold the files the installer needs:

```
[root@base ~]# mkdir /ocpinstall
```

Write a customized install-config.yaml and save it in /ocpinstall. The file must be named install-config.yaml. Its contents:

```
apiVersion: v1
baseDomain: example.com        # root domain
compute:
- hyperthreading: Enabled
  name: worker
  replicas: 0
controlPlane:
  hyperthreading: Enabled
  name: master
  replicas: 3
metadata:
  name: ocp                    # cluster name; records become xxx.ocp.example.com
networking:
  clusterNetwork:
  - cidr: 10.133.0.0/16
    hostPrefix: 24
  networkType: OpenShiftSDN
  serviceNetwork:
  - 172.33.0.0/16
platform:
  none: {}
fips: false
pullSecret: '{"auths":{"cloud.openshift.com":{"auth":"b3BlbnNo...","email":"...@163.com"},"quay.io":{"auth":"b3BlbnNo...","email":"...@163.com"},"registry.connect.redhat.com":{"auth":"fHVoYy1w...","email":"...@163.com"},"registry.redhat.io":{"auth":"fHVoYy1w...","email":"...@163.com"},"registry.ocp4.ky-tech.com.cn:8443":{"auth":"YWRtaW46...","email":"...@163.com"}}}'
sshKey: 'ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAACAQC/n/uvSGArFAf8KAst... core@base.ocp.example.com'
imageContentSources:
- mirrors:
  - registry.ocp4.ky-tech.com.cn:8443/ocp4/openshift4
  source: quay.io/openshift-release-dev/ocp-release
- mirrors:
  - registry.ocp4.ky-tech.com.cn:8443/ocp4/openshift4
  source: quay.io/openshift-release-dev/ocp-v4.0-art-dev
```

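baseDomain : every internal OpenShift DNS record must be a subdomain of this base domain and include the cluster name.

compute : compute node configuration. This is an array, and each element must start with a hyphen -.

hyperthreading : Enabled turns on simultaneous multithreading (hyperthreading). It is enabled by default and improves the performance of the machine's cores. To disable it, disable it on both the control plane and the compute nodes.

compute.replicas : number of compute nodes. Set it to 0 because the compute nodes are created manually.

controlPlane.replicas : number of control plane nodes. It must equal the number of etcd members; this article uses 3 for high availability.

metadata.name : the cluster name, i.e. the ocp in the DNS records above (xxx.ocp.example.com).

cidr : the IP range from which Pod IPs are allocated. It must not overlap with the physical network.

hostPrefix : the subnet prefix length assigned to each node. For example, with hostPrefix set to 23, each node gets a /23 subnet of the given cidr, which allows 2^(32 - 23) - 2 = 510 Pod IP addresses.

serviceNetwork : the address pool for Service IPs. Only one entry is allowed.

pullSecret : the pull secret downloaded from Red Hat, with the local registry entry added. Compact it onto one line with cat /root/pull-secret.json | jq -c.

sshKey : the public key created above. View it with cat ~/.ssh/new_rsa.pub.

additionalTrustBundle : the trust certificate of the private image registry. For a Quay registry you can view it on the mirror node with cat /data/quay/config/ssl.cert. It is not set in the install-config.yaml above and can be omitted if not needed.

imageContentSources : taken from the output of the earlier oc adm release mirror command. The mirror path must be the repository the images were actually mirrored to. The mirroring step above uses ocp/openshift4, while this install-config.yaml uses ocp4/openshift4, so make the two match your Harbor project.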
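You can check the hostPrefix arithmetic with shell integer arithmetic. Each node gets 2^(32 - hostPrefix) - 2 usable Pod IPs. A cidr with prefix length p holds 2^(hostPrefix - p) node subnets. For the install-config above (10.133.0.0/16, hostPrefix 24), that gives 256 nodes with 254 Pod IPs each. The function names are illustrative:

```shell
#!/bin/sh
# pods_per_node HOST_PREFIX -> usable Pod IPs per node: 2^(32 - hostPrefix) - 2
pods_per_node() {
    echo $(( (1 << (32 - $1)) - 2 ))
}

# max_nodes CIDR_PREFIX HOST_PREFIX -> number of per-node subnets: 2^(hostPrefix - cidrPrefix)
max_nodes() {
    echo $(( 1 << ($2 - $1) ))
}
```

`pods_per_node 23` reproduces the 510 in the hostPrefix example, and `max_nodes 16 24` gives 256 for this cluster's network.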

Create the cluster manifests

Back up the YAML first, because the next command deletes it automatically:

```
[root@base ocpinstall]# cp install-config.yaml install-config.yaml.bak
[root@base ocpinstall]# openshift-install create manifests --dir=/ocpinstall
```

Edit manifests/cluster-scheduler-02-config.yml and set mastersSchedulable to false, so that Pods are not scheduled onto the control plane nodes.
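This edit can be scripted. A minimal sketch, assuming the generated file contains the line `mastersSchedulable: true`. `set_masters_unschedulable` is a hypothetical name. It writes through a temporary file so that no backup copy is left inside `manifests/`:

```shell
#!/bin/sh
# set_masters_unschedulable FILE
# Rewrites "mastersSchedulable: true" to "mastersSchedulable: false" in FILE,
# keeping FILE's permissions, then confirms the new value is present.
set_masters_unschedulable() {
    tmp=$(mktemp) || return 1
    sed -E 's/^([[:space:]]*mastersSchedulable:)[[:space:]]*true[[:space:]]*$/\1 false/' "$1" > "$tmp" &&
        cat "$tmp" > "$1"
    rc=$?
    rm -f "$tmp"
    [ "$rc" -eq 0 ] && grep -q 'mastersSchedulable: false' "$1"
}
```

Usage: `set_masters_unschedulable /ocpinstall/manifests/cluster-scheduler-02-config.yml`.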

Create the Ignition config files. First restore install-config.yaml from the backup, because this command deletes it as well:

```
[root@base ocpinstall]# cp install-config.yaml.bak install-config.yaml
[root@base ocpinstall]# openshift-install create ignition-configs --dir=/ocpinstall
```

Generated files:

```
[root@base ocpinstall]# tree
.
├── auth
│   ├── kubeadmin-password
│   └── kubeconfig
├── bootstrap.ign
├── install-config.yaml.bak
├── master.ign
├── metadata.json
└── worker.ign
```

nginx

Set up an HTTP server with a download directory. The bootstrap, master and worker nodes download their installation files from this directory during installation.

```
[root@base ~]# yum -y install nginx
[root@base ~]# vim /etc/nginx/nginx.conf
```

```
# For more information on configuration, see:
#   * Official English Documentation: http://nginx.org/en/docs/
#   * Official Russian Documentation: http://nginx.org/ru/docs/

user nginx;
worker_processes auto;
error_log /var/log/nginx/error.log;
pid /run/nginx.pid;

# Load dynamic modules. See /usr/share/doc/nginx/README.dynamic.
include /usr/share/nginx/modules/*.conf;

events {
    worker_connections 1024;
}

http {
    log_format  main  '$remote_addr - $remote_user [$time_local] "$request" '
                      '$status $body_bytes_sent "$http_referer" '
                      '"$http_user_agent" "$http_x_forwarded_for"';

    access_log  /var/log/nginx/access.log  main;

    sendfile            on;
    tcp_nopush          on;
    tcp_nodelay         on;
    keepalive_timeout   65;
    types_hash_max_size 4096;

    include             /etc/nginx/mime.types;
    default_type        application/octet-stream;

    # Load modular configuration files from the /etc/nginx/conf.d directory.
    # See http://nginx.org/en/docs/ngx_core_module.html#include
    # for more information.
    include /etc/nginx/conf.d/*.conf;

    server {
        listen       81;            # changed port
        #listen       [::]:81;
        server_name  10.83.15.30;
        root         /usr/share/nginx/html;

        # Load configuration files for the default server block.
        include /etc/nginx/default.d/*.conf;

        error_page 404 /404.html;
        location = /404.html {
        }

        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
        }

        # enable directory listings
        autoindex on;
        autoindex_exact_size off;   # default on shows exact sizes in bytes; off shows kB, MB or GB
        autoindex_localtime on;     # default off shows times in GMT; on shows the server's local time
        charset utf-8;
        # default_type text/plain;  # add this to view file contents when clicking a file
    }

# Settings for a TLS enabled server.
#
#    server {
#        listen       443 ssl http2;
#        listen       [::]:443 ssl http2;
#        server_name  _;
#        root         /usr/share/nginx/html;
#
#        ssl_certificate "/etc/pki/nginx/server.crt";
#        ssl_certificate_key "/etc/pki/nginx/private/server.key";
#        ssl_session_cache shared:SSL:1m;
#        ssl_session_timeout  10m;
#        ssl_ciphers HIGH:!aNULL:!MD5;
#        ssl_prefer_server_ciphers on;
#
#        # Load configuration files for the default server block.
#        include /etc/nginx/default.d/*.conf;
#
#        error_page 404 /404.html;
#            location = /40x.html {
#        }
#
#        error_page 500 502 503 504 /50x.html;
#            location = /50x.html {
#        }
#    }

}
```

Start nginx:

```
[root@base ~]# systemctl start nginx
[root@base ~]# systemctl enable nginx --now
```

Copy the Ignition config files into the ignition directory of the HTTP server:

```
[root@base ocpinstall]# mkdir /usr/share/nginx/html/ignition
[root@base ocpinstall]# cp -r *.ign /usr/share/nginx/html/ignition/
```

Download the RHCOS BIOS image

Download the BIOS raw image used for bare-metal installation and put it in the nginx download directory:

```
[root@base ~]# mkdir /usr/share/nginx/html/install
[root@base ~]# wget https://mirror.openshift.com/pub/openshift-v4/dependencies/rhcos/4.4/4.4.17/rhcos-4.4.17-x86_64-metal.x86_64.raw.gz
[root@base ~]# cp rhcos-4.4.17-x86_64-metal.x86_64.raw.gz /usr/share/nginx/html/install
```

Download the RHCOS ISO

Note: the ISO version must match the BIOS image version.

Download the ISO locally: https://mirror.openshift.com/pub/openshift-v4/dependencies/rhcos/4.4/4.4.17/rhcos-4.4.17-x86_64-installer.x86_64.iso

Then upload it to vSphere.
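The boot step itself is not shown here. With the RHCOS 4.4 installer ISO, the kernel command line, edited at the ISO boot menu, has to point at the raw image and at the node's Ignition file on the nginx server set up above. The sketch below composes those arguments. `rhcos_boot_args` is a hypothetical helper. The NIC name `ens192`, the disk `sda`, the DNS server 10.83.15.10, and the gateway and netmask in the example are assumptions, so adapt them to your network:

```shell
#!/bin/sh
# Assumed defaults: nginx from this article on port 81, CoreDNS on the base node.
RHCOS_HTTP=${RHCOS_HTTP:-http://10.83.15.30:81}
RHCOS_IMAGE=${RHCOS_IMAGE:-rhcos-4.4.17-x86_64-metal.x86_64.raw.gz}
RHCOS_DNS=${RHCOS_DNS:-10.83.15.10}

# rhcos_boot_args ROLE IP GATEWAY NETMASK HOSTNAME [IFACE] [DISK]
# ROLE is bootstrap, master or worker (selects ROLE.ign). Prints one line of
# kernel arguments: dracut static IP, then the coreos.inst.* installer options.
rhcos_boot_args() {
    role=$1 ip=$2 gw=$3 mask=$4 host=$5
    iface=${6:-ens192}   # assumption: typical vmxnet3 NIC name on vSphere
    disk=${7:-sda}       # assumption: first virtual disk
    printf 'ip=%s::%s:%s:%s:%s:none nameserver=%s' "$ip" "$gw" "$mask" "$host" "$iface" "$RHCOS_DNS"
    printf ' coreos.inst.install_dev=%s' "$disk"
    printf ' coreos.inst.image_url=%s/install/%s' "$RHCOS_HTTP" "$RHCOS_IMAGE"
    printf ' coreos.inst.ignition_url=%s/ignition/%s.ign\n' "$RHCOS_HTTP" "$role"
}
```

For example, `rhcos_boot_args bootstrap 10.83.15.31 10.83.15.254 255.255.255.0 bootstrap.ocp.example.com` prints the line for the bootstrap VM. The masters and workers use `master` and `worker` as ROLE.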

Install the cluster

Bootstrap

Verification

If the installation misbehaves, follow the logs with journalctl -xf. Check the listening ports; both 6443 and 22623 must be up:

```
[root@bootstrap ~]# ss -luntp | grep 6443
tcp LISTEN 0 128 *:6443 *:* users:(("kube-apiserver",pid=8560,fd=7))
[root@bootstrap ~]# ss -luntp | grep 22623
tcp LISTEN 0 128 *:22623 *:* users:(("machine-config-",pid=7180,fd=6))
```

List the images:

```
[root@bootstrap ~]# podman images
REPOSITORY                                          TAG     IMAGE ID      CREATED        SIZE
registry.ocp4.ky-tech.com.cn:8443/ocp4/openshift4   <none>  cceaddf29b8d  16 months ago  306 MB
quay.io/openshift-release-dev/ocp-v4.0-art-dev      <none>  70bb895d8d3e  16 months ago  322 MB
quay.io/openshift-release-dev/ocp-v4.0-art-dev      <none>  b32c12a78242  16 months ago  302 MB
quay.io/openshift-release-dev/ocp-v4.0-art-dev      <none>  e08dc48322ad  16 months ago  651 MB
quay.io/openshift-release-dev/ocp-v4.0-art-dev      <none>  48651f43a423  16 months ago  284 MB
quay.io/openshift-release-dev/ocp-v4.0-art-dev      <none>  3a961e1bfbbd  16 months ago  303 MB
quay.io/openshift-release-dev/ocp-v4.0-art-dev      <none>  0b273d75c539  16 months ago  301 MB
quay.io/openshift-release-dev/ocp-v4.0-art-dev      <none>  97fb25aa8ffe  16 months ago  430 MB
quay.io/openshift-release-dev/ocp-v4.0-art-dev      <none>  935bff2bb618  16 months ago  307 MB
quay.io/openshift-release-dev/ocp-v4.0-art-dev      <none>  02a1870136be  16 months ago  302 MB
quay.io/openshift-release-dev/ocp-v4.0-art-dev      <none>  c66fdd83e907  16 months ago  291 MB
quay.io/openshift-release-dev/ocp-v4.0-art-dev      <none>  14e497a561bb  16 months ago  279 MB
quay.io/openshift-release-dev/ocp-v4.0-art-dev      <none>  8b5f02d19bcb  16 months ago  303 MB
quay.io/openshift-release-dev/ocp-v4.0-art-dev      <none>  ef020184a1ea  16 months ago  278 MB
quay.io/openshift-release-dev/ocp-v4.0-art-dev      <none>  4b047dfefef7  16 months ago  251 MB
```

List the containers and pods:

```
[root@bootstrap ~]# podman ps -a --no-trunc --sort created --format "{{.Command}}"
start --tear-down-early=false --asset-dir=/assets --required-pods=openshift-kube-apiserver/kube-apiserver,openshift-kube-scheduler/openshift-kube-scheduler,openshift-kube-controller-manager/kube-controller-manager,openshift-cluster-version/cluster-version-operator
render --dest-dir=/assets/cco-bootstrap --cloud-credential-operator-image=quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:244ab9d0fcf7315eb5c399bd3fa7c2e662cf23f87f625757b13f415d484621c3
bootstrap --etcd-ca=/assets/tls/etcd-ca-bundle.crt --etcd-metric-ca=/assets/tls/etcd-metric-ca-bundle.crt --root-ca=/assets/tls/root-ca.crt --kube-ca=/assets/tls/kube-apiserver-complete-client-ca-bundle.crt --config-file=/assets/manifests/cluster-config.yaml --dest-dir=/assets/mco-bootstrap --pull-secret=/assets/manifests/openshift-config-secret-pull-secret.yaml --etcd-image=quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:aba3c59eb6d088d61b268f83b034230b3396ce67da4f6f6d49201e55efebc6b2 --kube-client-agent-image=quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:8eb481214103d8e0b5fe982ffd682f838b969c8ff7d4f3ed4f83d4a444fb841b --machine-config-operator-image=quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:31dfdca3584982ed5a82d3017322b7d65a491ab25080c427f3f07d9ce93c52e2 --machine-config-oscontent-image=quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:b397960b7cc14c2e2603111b7385c6e8e4b0f683f9873cd9252a789175e5c4e1 --infra-image=quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:d7862a735f492a18cb127742b5c2252281aa8f3bd92189176dd46ae9620ee68a --keepalived-image=quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:a882a11b55b2fc41b538b59bf5db8e4cfc47c537890e4906fe6bf22f9da75575 --coredns-image=quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:b25b8b2219e8c247c088af93e833c9ac390bc63459955e131d89b77c485d144d --mdns-publisher-image=quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:dea1fcb456eae4aabdf5d2d5c537a968a2dafc3da52fe20e8d99a176fccaabce --haproxy-image=quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:7064737dd9d0a43de7a87a094487ab4d7b9e666675c53cf4806d1c9279bd6c2e --baremetal-runtimecfg-image=quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:715bc48eda04afc06827189883451958d8940ed8ab6dd491f602611fe98a6fba --cloud-config-file=/assets/manifests/cloud-provider-config.yaml --cluster-etcd-operator-image=quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:9f7a02df3a5d91326d95e444e2e249f8205632ae986d6dccc7f007ec65c8af77
render --prefix=cluster-ingress- --output-dir=/assets/ingress-operator-manifests
/usr/bin/cluster-kube-scheduler-operator render --manifest-image=quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:187b9d29fea1bde9f1785584b4a7bbf9a0b9f93e1323d92d138e61c861b6286c --asset-input-dir=/assets/tls --asset-output-dir=/assets/kube-scheduler-bootstrap --config-output-file=/assets/kube-scheduler-bootstrap/config
/usr/bin/cluster-kube-controller-manager-operator render --manifest-image=quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:187b9d29fea1bde9f1785584b4a7bbf9a0b9f93e1323d92d138e61c861b6286c --asset-input-dir=/assets/tls --asset-output-dir=/assets/kube-controller-manager-bootstrap --config-output-file=/assets/kube-controller-manager-bootstrap/config --cluster-config-file=/assets/manifests/cluster-network-02-config.yml
/usr/bin/cluster-kube-apiserver-operator render --manifest-etcd-serving-ca=etcd-ca-bundle.crt --manifest-etcd-server-urls=https://localhost:2379 --manifest-image=quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:187b9d29fea1bde9f1785584b4a7bbf9a0b9f93e1323d92d138e61c861b6286c --manifest-operator-image=quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:718ca346d5499cccb4de98c1f858c9a9a13bbf429624226f466c3ee2c14ebf40 --asset-input-dir=/assets/tls --asset-output-dir=/assets/kube-apiserver-bootstrap --config-output-file=/assets/kube-apiserver-bootstrap/config --cluster-config-file=/assets/manifests/cluster-network-02-config.yml
/usr/bin/cluster-config-operator render --config-output-file=/assets/config-bootstrap/config --asset-input-dir=/assets/tls --asset-output-dir=/assets/config-bootstrap
/usr/bin/cluster-etcd-operator render --etcd-ca=/assets/tls/etcd-ca-bundle.crt --etcd-metric-ca=/assets/tls/etcd-metric-ca-bundle.crt --manifest-etcd-image=quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:aba3c59eb6d088d61b268f83b034230b3396ce67da4f6f6d49201e55efebc6b2 --etcd-discovery-domain=ocp.example.com --manifest-cluster-etcd-operator-image=quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:9f7a02df3a5d91326d95e444e2e249f8205632ae986d6dccc7f007ec65c8af77 --manifest-setup-etcd-env-image=quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:31dfdca3584982ed5a82d3017322b7d65a491ab25080c427f3f07d9ce93c52e2 --manifest-kube-client-agent-image=quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:8eb481214103d8e0b5fe982ffd682f838b969c8ff7d4f3ed4f83d4a444fb841b --asset-input-dir=/assets/tls --asset-output-dir=/assets/etcd-bootstrap --config-output-file=/assets/etcd-bootstrap/config --cluster-config-file=/assets/manifests/cluster-network-02-config.yml
render --output-dir=/assets/cvo-bootstrap --release-image=registry.ocp4.ky-tech.com.cn:8443/ocp4/openshift4@sha256:4a461dc23a9d323c8bd7a8631bed078a9e5eec690ce073f78b645c83fb4cdf74
/usr/bin/grep -oP Managed /manifests/0000_12_etcd-operator_01_operator.cr.yaml
[root@bootstrap ~]# crictl pods
POD ID          CREATED              STATE   NAME                                                           NAMESPACE                             ATTEMPT
f18651010990b   About a minute ago   Ready   bootstrap-kube-controller-manager-bootstrap.ocp.example.com    kube-system                           0
6ee6ae5c4b5ba   About a minute ago   Ready   bootstrap-kube-scheduler-bootstrap.ocp.example.com             kube-system                           0
f22ffbf84bdda   About a minute ago   Ready   bootstrap-cluster-version-operator-bootstrap.ocp.example.com   openshift-cluster-version             0
6a250232068dd   About a minute ago   Ready   bootstrap-kube-apiserver-bootstrap.ocp.example.com             kube-system                           0
5211d0aeafe91   About a minute ago   Ready   cloud-credential-operator-bootstrap.ocp.example.com            openshift-cloud-credential-operator   0
accd8d31af7cc   About a minute ago   Ready   bootstrap-machine-config-operator-bootstrap.ocp.example.com    default                               0
4bc8a0334bdfa   About a minute ago   Ready   etcd-bootstrap-member-bootstrap.ocp.example.com                openshift-etcd                        0
```

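Once the bootstrap node is running normally, continue with the masters.

Master

Log in to the cluster

You can log in to the cluster as the default system user by exporting the cluster kubeconfig file. The kubeconfig file contains the cluster information the CLI uses to connect a client to the correct cluster and API server. It is created during the OCP installation.

```
$ mkdir ~/.kube
$ cp /ocpinstall/auth/kubeconfig ~/.kube/config
$ oc whoami
system:admin
```

After each master is installed, run the following on the base node several times, then check the node list. The newly installed master should have joined the cluster.

```
oc get csr -o name | xargs oc adm certificate approve
oc get nodes
```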
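The command above approves every CSR, including ones that are already approved. Each node typically submits a client CSR first and a serving CSR after the first one is approved, which is why the command has to be repeated. The following sketch selects only pending requests. It assumes CONDITION is the last column of `oc get csr --no-headers`; `pending_csrs` is a hypothetical name:

```shell
#!/bin/sh
# pending_csrs: reads "oc get csr --no-headers" output on stdin and prints
# the names (first column) of requests whose last column is "Pending".
pending_csrs() {
    awk '$NF == "Pending" { print $1 }'
}
```

Usage: `oc get csr --no-headers | pending_csrs | xargs -r oc adm certificate approve`. The OpenShift documentation gets the same result with a go-template that selects CSRs that have no status yet.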

After the master nodes are installed, run the following on the base node to finish creating the production control plane:

```
# openshift-install --dir=/ocpinstall wait-for bootstrap-complete --log-level=debug
DEBUG OpenShift Installer 4.4.5
DEBUG Built from commit 15eac3785998a5bc250c9f72101a4a9cb767e494
INFO Waiting up to 20m0s for the Kubernetes API at https://api.ocp.example.com:6443...
INFO API v1.17.1 up
INFO Waiting up to 40m0s for bootstrapping to complete...
DEBUG Bootstrap status: complete
INFO It is now safe to remove the bootstrap resources
```
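"It is now safe to remove the bootstrap resources" means the bootstrap machine can be taken out of the load balancer and then shut down. In this setup, that means the `server bootstrap ...` lines in the HAProxy backends. The following minimal sketch comments them out and keeps a `.bak` copy; the function name is hypothetical:

```shell
#!/bin/sh
# drop_bootstrap_backends FILE
# Comments out every "server bootstrap ..." line in FILE; the original is kept as FILE.bak.
drop_bootstrap_backends() {
    sed -i.bak -E 's/^([[:space:]]*server[[:space:]]+bootstrap[[:space:]])/# \1/' "$1"
}
```

Usage: `drop_bootstrap_backends /etc/haproxy/haproxy.cfg && systemctl reload haproxy`.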

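Worker

After each worker is installed, run the following on the base node several times, then check the node list. The newly installed worker should have joined the cluster.

```
oc get csr -o name | xargs oc adm certificate approve
oc get nodes
```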

Complete the installation

```
oc get node
oc get clusteroperators
openshift-install --dir=/ocpinstall wait-for install-complete --log-level=debug
```
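While you wait for `install-complete`, it helps to see which cluster operators are still not Available or are Degraded. The following sketch parses the text table printed by `oc get clusteroperators`. It assumes the 4.4 column order NAME VERSION AVAILABLE PROGRESSING DEGRADED SINCE. It counts the AVAILABLE and DEGRADED columns from the right, because VERSION is blank for operators that have not rolled out yet. `not_ready_operators` is a hypothetical name:

```shell
#!/bin/sh
# not_ready_operators: reads "oc get clusteroperators" output (with header) on stdin
# and prints operators whose AVAILABLE is not True or whose DEGRADED is True.
# AVAILABLE = 4th field from the right, DEGRADED = 2nd from the right.
not_ready_operators() {
    awk 'NR > 1 && NF >= 4 && ($(NF-3) != "True" || $(NF-1) == "True") { print $1 }'
}
```

Usage: `oc get clusteroperators | not_ready_operators` prints nothing once every operator is Available and none is Degraded.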

At the end, the installer prints the Web Console URL and the login user and password; note them down. If you forget the password, you can read it from /ocpinstall/auth/kubeadmin-password.