Environment:

| hostname | IP | Notes |
|---|---|---|
| ansible | 192.168.200.3 | Ansible control node (Ansible is installed here) |
| node1 | 192.168.200.8 | primary node of the HA cluster |
| node2 | 192.168.200.27 | secondary node |
| node3 | 192.168.200.6 | secondary node |

Task requirements:

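Using the OpenStack private cloud platform, create four cloud hosts running CentOS 7.5. One of them serves as the Ansible control node and is named ansible; the other three are named node1, node2 and node3. Through http://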

Let's begin:

1. Basic setup

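- Disable the firewall and SELinux, and flush iptables

- Change the hostnames (do this yourself; it is not shown here) and edit /etc/hosts

- Configure the yum repositories and install Ansible on the control node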
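The first two bullets could be scripted roughly as follows. This is a minimal sketch for CentOS 7, not the author's exact procedure. It assumes firewalld is the active firewall and uses the names and addresses from the environment table. The function names are hypothetical. Source the file as root on each machine, then call the two functions.

```shell
#!/usr/bin/env bash
# Minimal sketch of the "basic setup" steps on CentOS 7 (run as root on every host).
# Function names are hypothetical; addresses come from the environment table.

disable_firewall_and_selinux() {
  systemctl stop firewalld
  systemctl disable firewalld
  setenforce 0                                                  # permissive until the next reboot
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config  # disabled after reboot
  iptables -F                                                   # flush all iptables rules
}

# Append the cluster's IP/name pairs to a hosts file (default /etc/hosts).
# Names that are already present are skipped, so running it twice is harmless.
add_cluster_hosts() {
  local hosts_file=${1:-/etc/hosts}
  local entry name
  for entry in "192.168.200.3 ansible" "192.168.200.8 node1" \
               "192.168.200.27 node2" "192.168.200.6 node3"; do
    name=${entry#* }
    grep -qw "$name" "$hosts_file" 2>/dev/null || echo "$entry" >> "$hosts_file"
  done
}
```

For example, `add_cluster_hosts` with no argument edits /etc/hosts. Passing a path is handy for checking the result before touching the real file.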

2. Set up Ansible login without password prompts

Method 1:

Use Ansible's own authentication settings.

After installing Ansible, fill in the relevant parameters in /etc/ansible/hosts.

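The parameters you can add in /etc/ansible/hosts are:

- ansible_host: host address
- ansible_user: login user
- ansible_port: SSH port, default 22
- ansible_ssh_pass: the user's password (password-based login also requires the sshpass package on the control node)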

Use this format:

    [node]
    192.168.44.200 ansible_user=root ansible_ssh_pass=a
    192.168.44.22 ansible_user=root ansible_ssh_pass=a

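You also need to turn off host key checking for the target servers. Edit /etc/ansible/ansible.cfg and uncomment this line, which is in the [defaults] section:

    vi /etc/ansible/ansible.cfg
    host_key_checking = False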

Then verify the connection:

    [root@ansible ansible]# ansible all -m ping
    192.168.44.200 | SUCCESS => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": false,
        "ping": "pong"
    }
    192.168.44.22 | SUCCESS => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": false,
        "ping": "pong"
    }

Method 2:

Generate an SSH key pair on the control node and send the public key to the managed nodes.

1. Generate an SSH key pair on the control node

    [root@ansible ~]# ssh-keygen
    Generating public/private rsa key pair.
    Enter file in which to save the key (/root/.ssh/id_rsa):    # where to store the key pair; press Enter for the default
    Enter passphrase (empty for no passphrase):                 # key passphrase; leave it empty so logins need no password
    Enter same passphrase again:                                # confirm the passphrase
    Your identification has been saved in /root/.ssh/id_rsa.
    Your public key has been saved in /root/.ssh/id_rsa.pub.
    The key fingerprint is:
    SHA256:ETpak5kCdN3VIzEOCUYBksMMDZwRmetFJvP1OIKxdDw root@master
    The key's randomart image is:
    +---[RSA 2048]----+
    (randomart omitted)
    +----[SHA256]-----+

2. Copy the control node's public key to the managed nodes (repeat ssh-copy-id for every node)

    [root@ansible ~]# cd .ssh/
    [root@ansible .ssh]# ll
    total 12
    -rw------- 1 root root 1679 Nov  7 02:34 id_rsa
    -rw-r--r-- 1 root root  393 Nov  7 02:34 id_rsa.pub
    -rw-r--r-- 1 root root  351 Nov  7 02:09 known_hosts
    [root@master .ssh]# ssh-copy-id -i ./id_rsa.pub 192.168.44.200
    /usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "./id_rsa.pub"
    /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
    /usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
    root@192.168.44.200's password:

    Number of key(s) added: 1

    Now try logging into the machine, with:   "ssh '192.168.44.200'"
    and check to make sure that only the key(s) you wanted were added.
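You can loop the two steps instead of running `ssh-copy-id` by hand for each node. This is a sketch under stated assumptions. `ensure_ssh_key` and `push_key` are hypothetical helper names. The key is created without a passphrase (`-N ""`) so Ansible can use it unattended, and `ssh-copy-id` still prompts once for each node's root password. With `DRY_RUN=1` the function only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch: non-interactive version of method 2, run as root on the ansible host.

# Create an RSA key pair without a passphrase, unless the key file already exists.
ensure_ssh_key() {
  local key=${1:-$HOME/.ssh/id_rsa}
  [ -f "$key" ] || { mkdir -p "$(dirname "$key")"; ssh-keygen -t rsa -N "" -f "$key" -q; }
}

# Copy the public key to every node given as an argument.
# With DRY_RUN set, only print the commands that would run.
push_key() {
  local pub=$1 ip
  shift
  for ip in "$@"; do
    if [ -n "${DRY_RUN:-}" ]; then
      echo "ssh-copy-id -i $pub root@$ip"
    else
      ssh-copy-id -i "$pub" "root@$ip"   # asks once for this node's root password
    fi
  done
}
```

For example: `ensure_ssh_key && push_key ~/.ssh/id_rsa.pub 192.168.200.8 192.168.200.27 192.168.200.6`. Once the keys are in place, the `ansible_ssh_pass` entries in the inventory are no longer needed.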

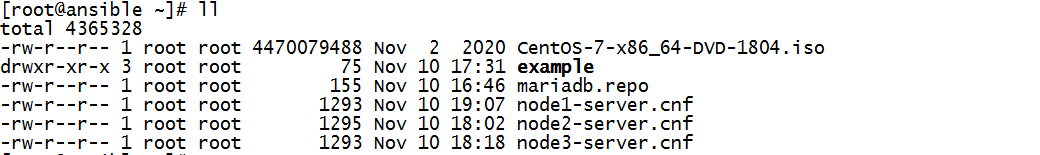

The task requires the Ansible working directory to be /root/example:

    mkdir -p /root/example
    cd /root/example
    vi ansible.cfg
    [defaults]
    # inventory: the file Ansible reads the host list from
    inventory = /root/example/hosts
    remote_user = root
    host_key_checking = False

    vi hosts
    [node1]
    192.168.200.8 ansible_user=root ansible_ssh_pass=000000
    [node2]
    192.168.200.27 ansible_user=root ansible_ssh_pass=000000
    [node3]
    192.168.200.6 ansible_user=root ansible_ssh_pass=000000

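Verify again from the working directory. The sample output below shows 192.168.44.x addresses rather than the nodes in the environment table. With the hosts file above you should see 192.168.200.8, 192.168.200.27 and 192.168.200.6 instead.

    [root@ansible example]# ansible all -m ping
    192.168.44.200 | SUCCESS => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": false,
        "ping": "pong"
    }
    192.168.44.22 | SUCCESS => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": false,
        "ping": "pong"
    }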
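If you rebuild the environment often, you can generate the working directory with a script. This sketch uses a hypothetical `init_workdir` function. It writes the same ansible.cfg and hosts shown above, with the walkthrough's root password 000000. Ansible reads `ansible.cfg` from the current directory before falling back to ~/.ansible.cfg and /etc/ansible/ansible.cfg, so run the ansible commands from inside this directory afterwards.

```shell
#!/usr/bin/env bash
# Sketch: create the Ansible working directory used in this walkthrough.
# Groups [node1]..[node3] match the `when: "'nodeX' in group_names"` tests in service.yml.
init_workdir() {
  local dir=${1:-/root/example}
  mkdir -p "$dir"
  # Unquoted heredoc, so $dir is expanded into the inventory path.
  cat > "$dir/ansible.cfg" <<EOF
[defaults]
inventory = $dir/hosts
remote_user = root
host_key_checking = False
EOF
  # Quoted heredoc: written literally. 000000 is the walkthrough's root password.
  cat > "$dir/hosts" <<'EOF'
[node1]
192.168.200.8 ansible_user=root ansible_ssh_pass=000000
[node2]
192.168.200.27 ansible_user=root ansible_ssh_pass=000000
[node3]
192.168.200.6 ansible_user=root ansible_ssh_pass=000000
EOF
}
```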

3. Create the cluster role

Ansible is very strict about directory structure, so pay attention to which directory you are in.

    [root@ansible example]# pwd
    /root/example
    [root@ansible example]# mkdir roles
    [root@ansible example]# cd roles/
    [root@ansible roles]# ansible-galaxy init mariadb-galaxy-cluster
    [root@ansible roles]# ll
    total 0
    drwxr-xr-x 10 root root 154 Nov  7 02:56 mariadb-galaxy-cluster
    [root@master roles]# cd mariadb-galaxy-cluster/
    [root@master mariadb-galaxy-cluster]# ll
    total 4
    drwxr-xr-x 2 root root   22 Nov  7 02:56 defaults
    drwxr-xr-x 2 root root    6 Nov  7 02:56 files
    drwxr-xr-x 2 root root   22 Nov  7 02:56 handlers
    drwxr-xr-x 2 root root   22 Nov  7 02:56 meta
    -rw-r--r-- 1 root root 1328 Nov  7 02:56 README.md
    drwxr-xr-x 2 root root   22 Nov  7 02:56 tasks        # tasks: the list of tasks to run
    drwxr-xr-x 2 root root    6 Nov  7 02:56 templates
    drwxr-xr-x 2 root root   39 Nov  7 02:56 tests
    drwxr-xr-x 2 root root   22 Nov  7 02:56 vars
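A wrong directory is the easiest mistake to make here, so a quick layout check can help. The helper below is hypothetical. It compares a role directory with the skeleton that `ansible-galaxy init` created above. Ansible itself only needs the subdirectories you actually use (here mainly tasks/main.yml), so treat a missing optional directory as a hint rather than an error.

```shell
#!/usr/bin/env bash
# Sketch: compare a role directory with the skeleton created by `ansible-galaxy init`.
# Prints every missing entry and returns 1 if anything is missing.
check_role_layout() {
  local role_dir=$1 sub missing=0
  for sub in defaults files handlers meta tasks templates tests vars; do
    if [ ! -d "$role_dir/$sub" ]; then
      echo "missing directory: $role_dir/$sub"
      missing=1
    fi
  done
  # tasks/main.yml is the file Ansible loads first when the role runs.
  if [ ! -f "$role_dir/tasks/main.yml" ]; then
    echo "missing file: $role_dir/tasks/main.yml"
    missing=1
  fi
  return "$missing"
}
```

For example: `check_role_layout /root/example/roles/mariadb-galaxy-cluster`.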

4. Write the YAML files

Because Ansible is used to deploy the database cluster, each step has to be written as a YAML file.

Approach:

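- To install the database cluster on the other nodes, make sure those nodes can download the MariaDB packages

- After installation the database must be initialized before it can be used

- Because this is a clustered database, the configuration files must be modified

- Specify which file runs first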

(1) Write repo.yml

repo.yml copies the local MariaDB package repository to the nodes so that the packages can be installed from it:

```yaml
# vi repo.yml
- name: add repo file
  copy: src=/opt/mariadb-repo dest=/opt/
```

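The mariadb-repo directory (/opt/mariadb-repo) must be prepared on the ansible host in advance. It is copied to /opt/ on every node and used there as a local yum repository.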

(2) Write yum.yml

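yum.yml replaces the repo files on the nodes. The content is self-explanatory:

```yaml
# vi yum.yml
- name: rm old repo file
  shell: "rm -rf /etc/yum.repos.d/*"
- name: add new repo file
  copy: src=/root/mariadb.repo dest=/etc/yum.repos.d/
```

Write the mariadb.repo file in advance and keep it on the ansible host. Keep comments on their own lines, because yum does not strip a trailing # comment from a value such as baseurl:

```ini
# vi mariadb.repo
[mariadb]
name=mariadb
baseurl=file:///opt/mariadb-repo
gpgcheck=0
enabled=1

[centos]
name=centos
# the CentOS repo is needed for MySQL-python and other dependencies not in the MariaDB repo
baseurl=ftp://192.168.200.3/centos
gpgcheck=0
enabled=1
```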
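You can prepare the two things that repo.yml and yum.yml copy from the ansible host with a short script. This is a sketch that assumes the paths and FTP URL used in this walkthrough. `write_mariadb_repo` and `is_yum_repo` are hypothetical names. The second function checks for repodata/repomd.xml, which a directory needs before yum can use it as a baseurl (createrepo normally generates it).

```shell
#!/usr/bin/env bash
# Sketch: prepare the files that repo.yml and yum.yml copy from the ansible host.
# Paths and the FTP URL are the ones used in this walkthrough.

# Write the mariadb.repo that yum.yml copies to /etc/yum.repos.d/ on each node.
write_mariadb_repo() {
  local out=${1:-/root/mariadb.repo}
  cat > "$out" <<'EOF'
[mariadb]
name=mariadb
baseurl=file:///opt/mariadb-repo
gpgcheck=0
enabled=1

[centos]
name=centos
baseurl=ftp://192.168.200.3/centos
gpgcheck=0
enabled=1
EOF
}

# A directory only works as a yum baseurl if it contains repodata/repomd.xml.
is_yum_repo() {
  [ -f "${1:-/opt/mariadb-repo}/repodata/repomd.xml" ]
}
```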

(3) Write install.yml

install.yml lists the packages to install:

    # vi install.yml
    - name: install mariadb-server, mariadb, galera, MySQL-python
      yum: name=mariadb-server,mariadb,galera,MySQL-python

(4) Write service.yml

This file is the crucial one!!!

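Before writing it, you need to understand the normal manual procedure for deploying a highly available database, and then translate those steps into Ansible tasks. That is really all an Ansible deployment is: the manual steps rewritten as Ansible tasks.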

So what are the steps for deploying a highly available database?

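- First, install mariadb, galera and MySQL-python on every node (install.yml handles this)

- Start the database on every node

- Initialize the database on every node

- Copy the prepared server.cnf file to each node

- Stop the database (the playbook below stops it on all three nodes, including the primary)

- Start the primary database in Galera bootstrap mode (galera_new_cluster)

- Then start the databases on the secondary nodes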

These are the normal steps for configuring a highly available database. Now convert them into Ansible tasks.

service.yml copies a server.cnf file to each node. Because the content differs from host to host, prepare one file per node. Only node1's file is shown here.

I put the files under /root/:

    # vi /root/node1-server.cnf
    [galera]
    # Mandatory settings
    wsrep_on=ON
    wsrep_provider=/usr/lib64/galera/libgalera_smm.so
    wsrep_cluster_address="gcomm://192.168.200.8,192.168.200.27,192.168.200.6"   # addresses of the cluster nodes to connect to at startup
    binlog_format=row
    default_storage_engine=InnoDB
    innodb_autoinc_lock_mode=2
    wsrep_node_name=node1              # the node this file belongs to
    wsrep_node_address=192.168.200.8   # that node's IP (same as above)
    #
    # Allow server to accept connections on all interfaces.
    #
    bind-address=192.168.200.8         # uncomment and set to this node's IP (same as above)
    #
    # Optional setting
    wsrep_slave_threads=1
    innodb_flush_log_at_trx_commit=0
    innodb_buffer_pool_size=120M       # add this line and the two below
    wsrep_sst_method=rsync             # State Snapshot Transfer method; rsync is the fastest
    wsrep_causal_reads=ON              # optional
    # this is only for embedded server
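The three server.cnf files differ only in wsrep_node_name, wsrep_node_address and bind-address, so you can generate them instead of editing each one by hand. This is a minimal sketch that assumes the addresses from the environment table. `gen_server_cnf` and `write_all_server_cnfs` are hypothetical names, and the comments from the file above are dropped.

```shell
#!/usr/bin/env bash
# Sketch: generate node-specific server.cnf files for the Galera cluster.
# Only wsrep_node_name, wsrep_node_address and bind-address differ between nodes.
CLUSTER_ADDRESS="gcomm://192.168.200.8,192.168.200.27,192.168.200.6"

# Print the [galera] section for one node: gen_server_cnf NAME IP
gen_server_cnf() {
  local name=$1 ip=$2
  cat <<EOF
[galera]
wsrep_on=ON
wsrep_provider=/usr/lib64/galera/libgalera_smm.so
wsrep_cluster_address="${CLUSTER_ADDRESS}"
binlog_format=row
default_storage_engine=InnoDB
innodb_autoinc_lock_mode=2
wsrep_node_name=${name}
wsrep_node_address=${ip}
bind-address=${ip}
wsrep_slave_threads=1
innodb_flush_log_at_trx_commit=0
innodb_buffer_pool_size=120M
wsrep_sst_method=rsync
wsrep_causal_reads=ON
EOF
}

# Write node1-server.cnf .. node3-server.cnf into a directory (default /root).
write_all_server_cnfs() {
  local dir=${1:-/root}
  gen_server_cnf node1 192.168.200.8  > "$dir/node1-server.cnf"
  gen_server_cnf node2 192.168.200.27 > "$dir/node2-server.cnf"
  gen_server_cnf node3 192.168.200.6  > "$dir/node3-server.cnf"
}
```

Within Ansible itself, the more idiomatic route would be a single Jinja2 template rendered per host by the `template` module. That would replace the three copy tasks in service.yml. The script keeps the author's one-file-per-node layout.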

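Now write service.yml itself. Each task carries `when: "'nodeX' in group_names"`, so it runs only on the host in inventory group [nodeX]. The init tasks use the mysql_user module, which needs MySQL-python on the nodes (that is why install.yml installs it). They set root's password to 000000, so if you re-run the playbook those tasks also need `login_password=000000`.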

    # start MariaDB on every node
    - name: start node1 mariadb
      service: name=mariadb state=started enabled=yes
      when: "'node1' in group_names"
    - name: start node2 mariadb
      service: name=mariadb state=started enabled=yes
      when: "'node2' in group_names"
    - name: start node3 mariadb
      service: name=mariadb state=started enabled=yes
      when: "'node3' in group_names"
    # initialize: set the root password
    - name: init node1 mariadb
      mysql_user: user=root password=000000 state=present
      when: "'node1' in group_names"
    - name: init node2 mariadb
      mysql_user: user=root password=000000 state=present
      when: "'node2' in group_names"
    - name: init node3 mariadb
      mysql_user: user=root password=000000 state=present
      when: "'node3' in group_names"
    # copy each node's own server.cnf
    - name: copy node1 server.cnf
      copy: src=/root/node1-server.cnf dest=/etc/my.cnf.d/server.cnf
      when: "'node1' in group_names"
    - name: copy node2 server.cnf
      copy: src=/root/node2-server.cnf dest=/etc/my.cnf.d/server.cnf
      when: "'node2' in group_names"
    - name: copy node3 server.cnf
      copy: src=/root/node3-server.cnf dest=/etc/my.cnf.d/server.cnf
      when: "'node3' in group_names"
    # stop MariaDB everywhere
    - name: stop node1 mariadb
      service: name=mariadb state=stopped
      when: "'node1' in group_names"
    - name: stop node2 mariadb
      service: name=mariadb state=stopped
      when: "'node2' in group_names"
    - name: stop node3 mariadb
      service: name=mariadb state=stopped
      when: "'node3' in group_names"
    # bootstrap the cluster on node1, then start node2 and node3 so they join it
    - name: start init node1 mariadb
      shell: 'galera_new_cluster'
      when: "'node1' in group_names"
    - name: start node2 mariadb
      service: name=mariadb state=restarted
      when: "'node2' in group_names"
    - name: start node3 mariadb
      service: name=mariadb state=restarted
      when: "'node3' in group_names"
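To see why the tasks are ordered this way, here is the same bootstrap sequence done by hand over SSH. It is only a sketch. It assumes key-based SSH (method 2) and that each node already has its server.cnf. `bootstrap_galera` and `run_cmd` are hypothetical names, and `DRY_RUN=1` prints the commands instead of running them. The essential point is that `galera_new_cluster` runs on exactly one node, and only when the cluster is first created. The other nodes join it through wsrep_cluster_address when their service starts.

```shell
#!/usr/bin/env bash
# Sketch: the bootstrap order of service.yml, done by hand over SSH.
# Assumes key-based root SSH (method 2) and server.cnf already on every node.
PRIMARY=192.168.200.8
SECONDARIES=(192.168.200.27 192.168.200.6)

# Run a command, or only print it when DRY_RUN is set.
run_cmd() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

bootstrap_galera() {
  local ip
  # 1. Stop MariaDB everywhere so no node keeps running with the old config.
  for ip in "$PRIMARY" "${SECONDARIES[@]}"; do
    run_cmd ssh "root@$ip" systemctl stop mariadb
  done
  # 2. Create the new cluster on the primary node only.
  run_cmd ssh "root@$PRIMARY" galera_new_cluster
  # 3. Start the others; they join via wsrep_cluster_address in server.cnf.
  for ip in "${SECONDARIES[@]}"; do
    run_cmd ssh "root@$ip" systemctl start mariadb
  done
}
```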

(5) Write main.yml

This file sets the order in which the files above run, so list them in deployment order:

    # vi main.yml
    ---
    # tasks file for mariadb-galaxy
    - include: repo.yml
    - include: yum.yml
    - include: install.yml
    - include: service.yml
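Bare `include` is deprecated in newer Ansible releases in favour of `import_tasks` (static) and `include_tasks` (dynamic). `import_tasks` exists since Ansible 2.4. The hypothetical helper below is a sketch that writes an equivalent tasks/main.yml using `import_tasks`, keeping the same order as above.

```shell
#!/usr/bin/env bash
# Sketch: write tasks/main.yml with import_tasks instead of the deprecated bare include.
# The order of the list is the order in which the task files run.
write_tasks_main() {
  local tasks_dir=$1 f
  {
    echo "---"
    echo "# tasks file for mariadb-galaxy"
    for f in repo.yml yum.yml install.yml service.yml; do
      echo "- import_tasks: $f"
    done
  } > "$tasks_dir/main.yml"
}
```

For example: `write_tasks_main /root/example/roles/mariadb-galaxy/tasks`.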

(6) Write the entry playbook

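The entry playbook states clearly and concisely which roles run on which hosts, which is handy when there are many roles. The role name under `roles:` must match the directory name under roles/. The tree below uses mariadb-galaxy; if you created mariadb-galaxy-cluster in step 3, use that name instead.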

    # vi cscc_install.yml
    - hosts: all
      remote_user: root
      roles:
        - mariadb-galaxy

Directory structure:

    [root@ansible example]# tree      # example is the working directory required by the task
    .
    ├── ansible.cfg
    ├── cscc_install.yml
    ├── hosts
    └── roles
        └── mariadb-galaxy
            ├── defaults
            │   └── main.yml
            ├── files
            ├── handlers
            │   └── main.yml
            ├── meta
            │   └── main.yml
            ├── README.md
            ├── tasks
            │   ├── install.yml
            │   ├── main.yml
            │   ├── repo.yml
            │   ├── service.yml
            │   └── yum.yml
            ├── templates
            ├── tests
            │   ├── inventory
            │   └── test.yml
            └── vars
                └── main.yml

5. Run

Run the commands from the Ansible working directory, so that its ansible.cfg is used.

First check for syntax errors:

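    [root@ansible example]# ansible-playbook --syntax-check cscc_install.yml
    [WARNING]: Unable to parse /root/jing/hosts as an inventory source
    [WARNING]: No inventory was parsed, only implicit localhost is available
    [WARNING]: provided hosts list is empty, only localhost is available. Note that the implicit localhost does not match 'all'

    playbook: cscc_install.yml

The warnings only say that the inventory could not be parsed (in this sample run the inventory source was /root/jing/hosts), so only the implicit localhost was available. The syntax check itself passed, as the final `playbook: cscc_install.yml` line shows. Before the real run, make sure `inventory` in ansible.cfg points to /root/example/hosts.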

If there are no errors, run the playbook:

    [root@ansible example]# ansible-playbook cscc_install.yml
    ...(output omitted)

6. Verify

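- On each node, check that ports 3306 and 4567 are listening

- Log in to one node, create a database, and check that it is replicated to the other nodes

- On a node, log in to MariaDB and check the cluster status with `show status like 'wsrep%';`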

    MariaDB [(none)]> show status like 'wsrep%';
    +-------------------------------+-----------------------------------------------------------+
    | Variable_name                 | Value |
    +-------------------------------+-----------------------------------------------------------+
    | wsrep_applier_thread_count    | 1 |
    | wsrep_apply_oooe              | 0.000000 |
    | wsrep_apply_oool              | 0.000000 |
    | wsrep_apply_window            | 1.000000 |
    | wsrep_causal_reads            | 3 |
    | wsrep_cert_deps_distance      | 1.000000 |
    | wsrep_cert_index_size         | 2 |
    | wsrep_cert_interval           | 0.000000 |
    | wsrep_cluster_conf_id         | 3 |
    | wsrep_cluster_size            | 3 |
    | wsrep_cluster_state_uuid      | 5e4cb525-4255-11ec-b769-e20a73e95153 |
    | wsrep_cluster_status          | Primary |
    | wsrep_cluster_weight          | 3 |
    | wsrep_commit_oooe             | 0.000000 |
    | wsrep_commit_oool             | 0.000000 |
    | wsrep_commit_window           | 1.000000 |
    | wsrep_connected               | ON |
    | wsrep_desync_count            | 0 |
    | wsrep_evs_delayed             | |
    | wsrep_evs_evict_list          | |
    | wsrep_evs_repl_latency        | 0/0/0/0/0 |
    | wsrep_evs_state               | OPERATIONAL |
    | wsrep_flow_control_paused     | 0.000000 |
    | wsrep_flow_control_paused_ns  | 0 |
    | wsrep_flow_control_recv       | 0 |
    | wsrep_flow_control_sent       | 0 |
    | wsrep_gcomm_uuid              | 5e4a283a-4255-11ec-a16c-460aa988fdf2 |
    | wsrep_incoming_addresses      | 192.168.200.8:3306,192.168.200.27:3306,192.168.200.6:3306 |
    ...(omitted)
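The first and third checks can be scripted. In this sketch, `check_ports` and `check_wsrep` are hypothetical names that read command output from stdin. `check_ports` reads `ss -lnt` output to check the ports. `check_wsrep` reads the table printed by `show status like 'wsrep%'` to check the cluster state. A healthy node in this setup should report wsrep_cluster_size 3, wsrep_cluster_status Primary and wsrep_connected ON, as in the output above.

```shell
#!/usr/bin/env bash
# Sketch: verify a Galera node from command output read on stdin.

# Usage: ss -lnt | check_ports
# Reports whether the MySQL (3306) and Galera replication (4567) ports are listening.
check_ports() {
  local out port rc=0
  out=$(cat)
  for port in 3306 4567; do
    if printf '%s\n' "$out" | grep -qE ":${port}[[:space:]]"; then
      echo "$port listening"
    else
      echo "$port NOT listening"
      rc=1
    fi
  done
  return "$rc"
}

# Usage: mysql -uroot -p000000 -t -e "show status like 'wsrep%'" | check_wsrep 3
# Parses the "| name | value |" table and succeeds only if the node is connected
# and part of a Primary component of the expected size.
check_wsrep() {
  local expected=${1:-3}
  awk -F'|' -v want="$expected" '
    { gsub(/ /, "", $2); gsub(/ /, "", $3) }
    $2 == "wsrep_cluster_size"   { size = $3 }
    $2 == "wsrep_cluster_status" { status = $3 }
    $2 == "wsrep_connected"      { conn = $3 }
    END {
      printf "size=%s status=%s connected=%s\n", size, status, conn
      ok = (size == want && status == "Primary" && conn == "ON")
      if (ok) exit 0
      exit 1
    }'
}
```

The `-t` option in the usage line makes the mysql client print the bordered table even when its output is piped, which is the format `check_wsrep` expects.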