## 1. Deployment preparation

Deploy a minimal K8S cluster: master + node1 + node2

Ubuntu is a Debian-based Linux operating system aimed mainly at desktop use. It covers word processing, email, software development tools, web services and more, and is free to download, use and share.

```bash
➜ vgs
Current machine states:

master                    running (virtualbox)
node1                     running (virtualbox)
node2                     running (virtualbox)
```

### 1.1 Basic environment information

Set each node's hostname and make sure the hosts file lets the nodes resolve one another

- The three nodes started by this Vagrantfile are already configured

- The checks below are shown on the master node; the other nodes work the same way

```bash
# hostnamectl
vagrant@k8s-master:~$ hostnamectl
   Static hostname: k8s-master

# hosts
vagrant@k8s-master:~$ cat /etc/hosts
127.0.0.1 localhost
127.0.1.1 vagrant.vm vagrant
192.168.30.30 k8s-master
192.168.30.31 k8s-node1
192.168.30.32 k8s-node2

# ping
vagrant@k8s-master:~$ ping k8s-node1
PING k8s-node1 (192.168.30.31) 56(84) bytes of data.
64 bytes from k8s-node1 (192.168.30.31): icmp_seq=1 ttl=64 time=0.689 ms
```
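The manual checks above can be repeated on every node with a small helper. This is a minimal sketch, not part of the original setup: the function name `check_hosts` is hypothetical, and it only verifies that each expected name appears in a hosts-format file, not that the address is reachable.

```bash
# Check that every expected node name has an entry in a hosts-format file.
# Usage: check_hosts <hosts-file> <name>...
check_hosts() {
  local hosts_file name missing
  hosts_file=$1
  shift
  missing=0
  for name in "$@"; do
    # Drop comments, then look for the name in the hostname columns (2..NF).
    if ! awk -v n="$name" '
        { sub(/#.*/, "") }
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }
      ' "$hosts_file"; then
      echo "missing hosts entry: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For the cluster in this article it would be called as `check_hosts /etc/hosts k8s-master k8s-node1 k8s-node2`; a non-zero exit status means at least one name is missing.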

<a name="rZRJ4"></a>
### 1.2 Aliyun mirror configuration

Configure the Aliyun mirrors for Ubuntu to speed up installation

- Aliyun mirror address

```bash
# Log in to the server
➜ vgssh master/node1/node2
Welcome to Ubuntu 18.04.2 LTS (GNU/Linux 4.15.0-50-generic x86_64)

# Set up the Aliyun Ubuntu mirror
$ sudo cp /etc/apt/sources.list{,.bak}
$ sudo vim /etc/apt/sources.list

# Configure the Aliyun mirror for kubeadm
$ sudo vim /etc/apt/sources.list
deb https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial main
$ sudo gpg --keyserver keyserver.ubuntu.com --recv-keys BA07F4FB
$ sudo gpg --export --armor BA07F4FB | sudo apt-key add -

# Configure the Docker repository
$ curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -
$ sudo apt-key fingerprint 0EBFCD88
$ sudo vim /etc/apt/sources.list
deb [arch=amd64] https://download.docker.com/linux/ubuntu bionic stable

# Update the repositories
$ sudo apt update
$ sudo apt dist-upgrade
```

### 1.3 Basic tool installation

Install the basic tools needed during deployment

- Base component: docker

- Deployment tool: kubeadm

- Routing rules: ipvsadm

- Time synchronization: ntp

```bash
# Install the basic tools
$ sudo apt install -y \
    docker-ce docker-ce-cli containerd.io \
    kubeadm ipvsadm \
    ntp ntpdate \
    nginx supervisor

# Add the current regular user to the docker group (requires logging in again)
$ sudo usermod -a -G docker $USER

# Enable the services
$ sudo systemctl enable docker.service
$ sudo systemctl start docker.service
$ sudo systemctl enable kubelet.service
$ sudo systemctl start kubelet.service
```

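<a name="Jwamm"></a>
### 1.4 Operating system configuration

Operating-system-level configuration

- Disable swap
- Configure kernel parameters
- Adjust the system time zone
- Upgrade the kernel version (4.15.0 by default)

```bash
# Disable swap
$ sudo swapoff -a

# Tune the kernel parameters for K8S
# (sudo tee is used because a plain "sudo cat > file" redirect runs as the regular user)
$ sudo touch /etc/sysctl.d/kubernetes.conf
$ sudo tee /etc/sysctl.d/kubernetes.conf > /dev/null <<EOF
# Enable bridge mode (required)
net.bridge.bridge-nf-call-iptables = 1
# Enable bridge mode (required)
net.bridge.bridge-nf-call-ip6tables = 1
# Disable IPv6 (required)
net.ipv6.conf.all.disable_ipv6 = 1
# Forwarding mode (enabled by default)
net.ipv4.ip_forward = 1
# Do not panic on OOM, let the OOM killer run (default)
vm.panic_on_oom=0
# Do not use swap space
vm.swappiness = 0
# Do not check whether physical memory is sufficient
vm.overcommit_memory=1
fs.inotify.max_user_instances=8192
fs.inotify.max_user_watches=1048576
# Number of file handles
fs.file-max = 52706963
# Maximum number of open files
fs.nr_open = 52706963
net.netfilter.nf_conntrack_max = 2310720
EOF

# How to inspect kernel parameters
$ sudo sysctl -a | grep xxx

# Apply the kernel parameter file
$ sudo sysctl -p /etc/sysctl.d/kubernetes.conf

# Set the system time zone to Asia/Shanghai
$ sudo timedatectl set-timezone Asia/Shanghai

# Keep the hardware clock in UTC
$ sudo timedatectl set-local-rtc 0
```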
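`swapoff -a` only lasts until the next reboot. The following is a minimal sketch, assuming swap is declared in `/etc/fstab`, of commenting out those entries so that swap stays off. The function name `comment_out_swap` is hypothetical, and it prints the result to stdout instead of editing the file in place.

```bash
# Print an fstab-format file with every active swap entry commented out.
# Usage: comment_out_swap <fstab-file>
comment_out_swap() {
  awk '
    # Field 3 of an fstab entry is the filesystem type.
    $1 !~ /^#/ && $3 == "swap" { print "#" $0; next }
    { print }
  ' "$1"
}
```

One cautious way to use it is `comment_out_swap /etc/fstab > /tmp/fstab.new`, then review the new file before copying it over `/etc/fstab` with sudo.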

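### 1.5 Enable the ipvs service

Load the kernel modules that ipvs needs

```bash
# Write the module list
# (nf_conntrack_ipv4 is the name on the 4.15 kernel used here; kernels 4.19+ call it nf_conntrack)
$ sudo mkdir -p /etc/sysconfig/modules
$ sudo tee /etc/sysconfig/modules/ipvs.modules > /dev/null <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF

# Load the modules
$ sudo chmod 755 /etc/sysconfig/modules/ipvs.modules \
    && sudo bash /etc/sysconfig/modules/ipvs.modules \
    && lsmod | grep -e ip_vs -e nf_conntrack_ipv
```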
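The conntrack module name depends on the kernel: `nf_conntrack_ipv4` was folded into `nf_conntrack` in kernel 4.19. A minimal sketch of choosing the name from a kernel release string follows; the function name `conntrack_module` is hypothetical and the parsing assumes the usual `major.minor...` format reported by `uname -r`.

```bash
# Print the conntrack module name that matches a kernel release string.
# Usage: conntrack_module "$(uname -r)"
conntrack_module() {
  local major minor
  # Split e.g. "4.15.0-50-generic" into major=4 and minor=15.
  major=${1%%.*}
  minor=${1#*.}
  minor=${minor%%[!0-9]*}
  if [ "$major" -gt 4 ] || { [ "$major" -eq 4 ] && [ "$minor" -ge 19 ]; }; then
    echo nf_conntrack
  else
    echo nf_conntrack_ipv4
  fi
}
```

With it, the last `modprobe` line could be written as `modprobe -- "$(conntrack_module "$(uname -r)")"` so the same script works after a kernel upgrade.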

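<a name="mpsSv"></a>
## 2. Deploy the master node

Minimum node configuration: 2 CPU cores + 2 GB of memory; give the worker nodes as many resources as possible.

The `init` command of the kubeadm tool initializes a master deployed as a single node. To avoid needing a proxy to reach Google's registry, the Aliyun mirror of the Google images can be used instead: pass its address when running the kubeadm deployment command. The images can also be added to a local registry, which is easier to maintain.

Notes

- Aliyun mirror address for the Google images
- Customizing the control plane configuration with kubeadm

```bash
# Log in to the server
➜ vgssh master
Welcome to Ubuntu 18.04.2 LTS (GNU/Linux 4.15.0-50-generic x86_64)

# Deploy the node (command line)
# Note: the pod and service address ranges must differ (otherwise an error is reported)
$ sudo kubeadm init \
    --kubernetes-version=1.20.2 \
    --image-repository registry.aliyuncs.com/google_containers \
    --apiserver-advertise-address=192.168.30.30 \
    --pod-network-cidr=10.244.0.0/16 \
    --service-cidr=10.245.0.0/16

# Deploy the node (configuration file)
$ sudo kubeadm init --config ./kubeadm-config.yaml
Your Kubernetes control-plane has initialized successfully!

# Check that the IP ranges took effect (iptables)
$ ip route show
10.244.0.0/24 dev cni0 proto kernel scope link src 10.244.0.1
10.244.1.0/24 via 10.244.1.0 dev flannel.1 onlink
10.244.2.0/24 via 10.244.2.0 dev flannel.1 onlink

# Check that the IP ranges took effect (ipvs)
$ ipvsadm -L -n
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
```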
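Since the pod and service ranges must not collide, it can help to check the two CIDRs before running `kubeadm init`. The following is a minimal sketch for IPv4 only; the function names `ip_to_int`, `cidr_bounds` and `cidrs_overlap` are hypothetical helpers, not part of kubeadm.

```bash
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  local a b c d rest
  a=${1%%.*}; rest=${1#*.}
  b=${rest%%.*}; rest=${rest#*.}
  c=${rest%%.*}; d=${rest#*.}
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print "first last" (as integers) for the address range covered by a CIDR.
cidr_bounds() {
  local addr prefix mask start end
  addr=$(ip_to_int "${1%/*}")
  prefix=${1#*/}
  mask=$(( (4294967295 << (32 - prefix)) & 4294967295 ))
  start=$(( addr & mask ))
  end=$(( start | (4294967295 ^ mask) ))
  echo "$start $end"
}

# Succeed (exit 0) if the two CIDR ranges share at least one address.
cidrs_overlap() {
  local bounds s1 e1 s2 e2
  bounds=$(cidr_bounds "$1"); s1=${bounds% *}; e1=${bounds#* }
  bounds=$(cidr_bounds "$2"); s2=${bounds% *}; e2=${bounds#* }
  [ "$s1" -le "$e2" ] && [ "$s2" -le "$e1" ]
}
```

For the values used in this article, `cidrs_overlap 10.244.0.0/16 10.245.0.0/16` fails, which is the desired outcome: the two ranges are disjoint.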

Configuration file definition

- The API uses version v1beta2

- The master node IP address is set to 192.168.30.30

- flannel is assigned the 10.244.0.0/16 range

- Kubernetes 1.20.2 is used, the latest version at the time of writing

- Horizontal autoscaling options are added for the controllerManager

```yaml
# kubeadm-config.yaml
# sudo kubeadm config print init-defaults > kubeadm-config.yaml
apiVersion: kubeadm.k8s.io/v1beta2
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.20.2
apiServer:
  extraArgs:
    advertise-address: 192.168.30.30
networking:
  podSubnet: 10.244.0.0/16
controllerManager:
  extraArgs:
    horizontal-pod-autoscaler-use-rest-clients: "true"
    horizontal-pod-autoscaler-sync-period: "10s"
    node-monitor-grace-period: "10s"
```

After the command succeeds it prints the information shown further down; follow the steps it lists.

Step one: by default, kubectl needs the CA credentials to control and operate the cluster nodes, and the traffic is secured with TLS. The three commands below copy the credentials into the current user's home directory, so that kubectl looks in the .kube directory first and uses this authorization information to access the cluster.

Step two: worker nodes added later must present a token to join securely, so save the join command printed here for later use.

```bash
# master setting step one
To start cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

# master setting step two
You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.30.30:6443 \
    --token lebbdi.p9lzoy2a16tmr6hq \
    --discovery-token-ca-cert-hash \
    sha256:6c79fd83825d7b2b0c3bed9e10c428acf8ffcd615a1d7b258e9b500848c20cae
```
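If the output of `kubeadm init` is saved to a file, the join command can be pulled back out of it later. This is a minimal sketch under the assumption that the command starts on a line beginning with `kubeadm join` and ends on the line holding the `sha256:` hash, as in the output above. The function name `extract_join_command` and the log file name in the usage note are hypothetical.

```bash
# Print the "kubeadm join ..." command from saved kubeadm init output
# as a single line, with the backslash continuations folded away.
# Usage: extract_join_command <saved-output-file>
extract_join_command() {
  awk '
    /^ *kubeadm join / { on = 1 }
    on {
      sub(/\\$/, "")            # drop the line-continuation backslash
      gsub(/^ +| +$/, "")       # trim leading and trailing spaces
      line = line (line ? " " : "") $0
    }
    on && /sha256:/ { print line; exit }
  ' "$1"
}
```

For example, after `sudo kubeadm init ... | tee kubeadm-init.log`, running `extract_join_command kubeadm-init.log` prints the one-line command to run on each worker.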

Join the worker nodes to the master node

```bash
$ kubectl get nodes
NAME         STATUS     ROLES                  AGE   VERSION
k8s-master   NotReady   control-plane,master   62m   v1.20.2
k8s-node1    NotReady   <none>                 82m   v1.20.2
k8s-node2    NotReady   <none>                 82m   v1.20.2

# List the tokens
$ sudo kubeadm token list

# Create a token
$ sudo kubeadm token create

# Recompute the CA certificate hash if it was lost
$ openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex | sed 's/^.* //'

# Generate a new token (more convenient than the above)
$ kubeadm token generate

# Generate the join command directly (more convenient than the above)
$ kubeadm token create <token_generate> --print-join-command --ttl=0
```

Once this is done, the commands below show the state of the master node

Four namespaces are created by default

- default, kube-system, kube-public, kube-node-lease

The core services deployed (in kube-system) are

- coredns, etcd

- kube-apiserver, kube-scheduler

- kube-controller-manager, kube-proxy

At this point the master is not yet in the Ready state (a network plugin is required); the flannel network plugin is installed next.

```bash
# Namespaces
$ kubectl get namespace
NAME              STATUS   AGE
default           Active   19m
kube-node-lease   Active   19m
kube-public       Active   19m
kube-system       Active   19m

# Core services
$ kubectl get pod -n kube-system
NAME                                 READY   STATUS    RESTARTS   AGE
coredns-7f89b7bc75-bh42f             1/1     Running   0          19m
coredns-7f89b7bc75-dvzpl             1/1     Running   0          19m
etcd-k8s-master                      1/1     Running   0          19m
kube-apiserver-k8s-master            1/1     Running   0          19m
kube-controller-manager-k8s-master   1/1     Running   0          19m
kube-proxy-5rlpv                     1/1     Running   0          19m
kube-scheduler-k8s-master            1/1     Running   0          19m
```

3、部署 flannel 网络

网络服务用于管理 K8S 集群中的服务网络

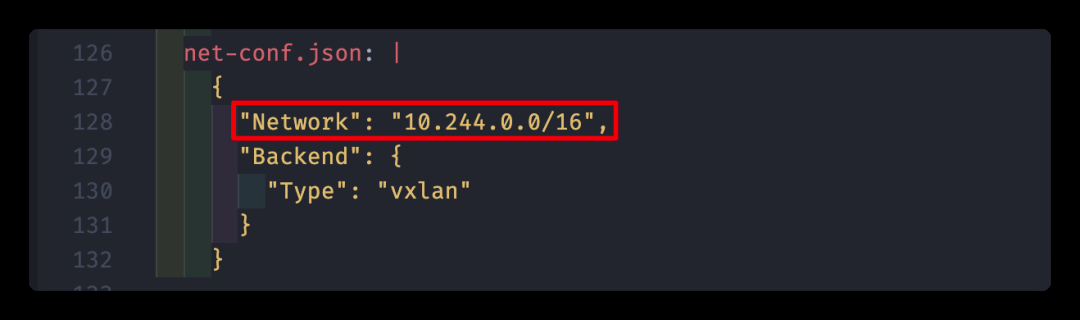

flannel 网络需要指定 IP 地址段,即上一步中通过编排文件设置的 10.244.0.0/16。其实可以通过 flannel 官方和 HELM 工具直接部署服务,但是原地址是需要搭梯子的。所以,可以将其内容保存在如下配置文件中,修改对应镜像地址。

部署 flannel 服务的官方下载地址

# 部署flannel服务# 1.修改镜像地址(如果下载不了的话)# 2.修改Network为--pod-network-cidr的参数IP段$ kubectl apply -f ./kube-flannel.yml# 如果部署出现问题可通过如下命令查看日志$ kubectl logs kube-flannel-ds-6xxs5 --namespace=kube-system$ kubectl describe pod kube-flannel-ds-6xxs5 --namespace=kube-system

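If you run into problems, see the official troubleshooting guide

The machines used here for the K8S deployment are virtualized by Vagrant, and applying the yaml configuration reports an error. The logs show that flannel binds by default to the eth0 interface of the virtual machine, but that interface is the one Vagrant itself uses; flannel should bind to eth1 instead.

Vagrant usually assigns two interfaces to every VM. The first one, on which every host gets the IP address 10.0.2.15, carries external traffic through NAT, and this breaks the flannel deployment. According to the official troubleshooting notes, the `--iface=eth1` parameter selects the second interface.

For how to pass the parameter, see the answer in "flannel use --iface=eth1". Here the startup manifest was modified directly, passing the parameter through `args` when the service starts.

```bash
$ kubectl get pods -n kube-system
NAME                                 READY   STATUS    RESTARTS   AGE
coredns-7f89b7bc75-bh42f             1/1     Running   0          61m
coredns-7f89b7bc75-dvzpl             1/1     Running   0          61m
etcd-k8s-master                      1/1     Running   0          62m
kube-apiserver-k8s-master            1/1     Running   0          62m
kube-controller-manager-k8s-master   1/1     Running   0          62m
kube-flannel-ds-zl148                1/1     Running   0          44s
kube-flannel-ds-ll523                1/1     Running   0          44s
kube-flannel-ds-wpmhw                1/1     Running   0          44s
kube-proxy-5rlpv                     1/1     Running   0          61m
kube-scheduler-k8s-master            1/1     Running   0          62m
```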
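The `--iface` change can also be applied to a freshly downloaded manifest without editing it by hand. This is a minimal sketch, assuming the manifest lists `- --kube-subnet-mgr` on its own line in the flanneld `args`; the function name `add_flannel_iface` is hypothetical and it writes the patched manifest to stdout.

```bash
# Print a flannel manifest with "- --iface=<name>" added after the
# "- --kube-subnet-mgr" argument, keeping the same indentation.
# Usage: add_flannel_iface <manifest-file> <interface>
add_flannel_iface() {
  awk -v iface="$2" '
    { print }
    /^[ \t]*- --kube-subnet-mgr[ \t]*$/ {
      indent = $0
      sub(/-.*/, "", indent)    # keep only the leading whitespace
      print indent "- --iface=" iface
    }
  ' "$1"
}
```

A possible use is `add_flannel_iface kube-flannel.yml eth1 > kube-flannel-eth1.yml`, followed by `kubectl apply -f ./kube-flannel-eth1.yml`; the output file name is only an example.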

The configuration file is shown below

```yaml
---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
    - configMap
    - secret
    - emptyDir
    - hostPath
  allowedHostPaths:
    - pathPrefix: "/etc/cni/net.d"
    - pathPrefix: "/etc/kube-flannel"
    - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ["NET_ADMIN", "NET_RAW"]
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
    - min: 0
      max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: "RunAsAny"
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
  - apiGroups: ["extensions"]
    resources: ["podsecuritypolicies"]
    verbs: ["use"]
    resourceNames: ["psp.flannel.unprivileged"]
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes/status
    verbs:
      - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
  - kind: ServiceAccount
    name: flannel
    namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: kubernetes.io/os
                    operator: In
                    values:
                      - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
        - operator: Exists
          effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
        - name: install-cni
          image: quay.io/coreos/flannel:v0.13.1-rc1
          command:
            - cp
          args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
          volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      containers:
        - name: kube-flannel
          image: quay.io/coreos/flannel:v0.13.1-rc1
          command:
            - /opt/bin/flanneld
          args:
            - --ip-masq
            - --kube-subnet-mgr
            - --iface=eth1
          resources:
            requests:
              cpu: "100m"
              memory: "50Mi"
            limits:
              cpu: "100m"
              memory: "50Mi"
          securityContext:
            privileged: false
            capabilities:
              add: ["NET_ADMIN", "NET_RAW"]
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
```

The cluster is now deployed. If a parameter was wrong and needs changing, the cluster can be re-initialized with `init` after a `reset`.

```bash
$ kubectl get nodes
NAME         STATUS   ROLES                  AGE   VERSION
k8s-master   Ready    control-plane,master   62m   v1.20.2
k8s-node1    Ready    <none>                 82m   v1.20.2
k8s-node2    Ready    <none>                 82m   v1.20.2

# Reset and re-initialize the cluster
$ sudo kubeadm reset
$ sudo kubeadm init
```
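The Ready check can be scripted so it is usable in a wait loop. This is a minimal sketch that parses the default table printed by `kubectl get nodes`; the function name `all_nodes_ready` is hypothetical, and a status such as `Ready,SchedulingDisabled` is deliberately treated as not ready.

```bash
# Succeed only if every node row in `kubectl get nodes` output is Ready.
# Reads the table (header included) from stdin.
all_nodes_ready() {
  awk '
    NR == 1 { next }            # skip the header row
    NF == 0 { next }            # skip blank lines
    { total++ }
    $2 != "Ready" { print "not ready: " $1; bad = 1 }
    END { exit (bad || total == 0) }
  '
}
```

Usage would be `kubectl get nodes | all_nodes_ready && echo "cluster is ready"`.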

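## 4. Deploy the dashboard service

Monitor the state of the cluster through a visual, web-based dashboard

Downloading the startup manifest again requires a proxy. The download address is given below; the file can be downloaded, uploaded to the server, and deployed from there.

Official download address for deploying the dashboard service

```bash
# Deploy the dashboard service
$ kubectl apply -f ./kube-dashboard.yaml

# If the deployment has problems, check the logs with the following commands
$ kubectl logs \
    kubernetes-dashboard-c9fb67ffc-nknpj \
    --namespace=kubernetes-dashboard
$ kubectl describe pod \
    kubernetes-dashboard-c9fb67ffc-nknpj \
    --namespace=kubernetes-dashboard

$ kubectl get svc -n kubernetes-dashboard
NAME                        TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
dashboard-metrics-scraper   ClusterIP   10.245.214.11    <none>        8000/TCP   26s
kubernetes-dashboard        ClusterIP   10.245.161.146   <none>        443/TCP    26s
```

Note that dashboard does not allow external access by default, even when kubectl proxy is told to accept external hosts. Dashboard also only allows HTTPS access, and the CA certificate self-signed during `kubeadm init` is not trusted by browsers.

The approach used here puts Nginx in front as a reverse proxy, serves external clients with a valid certificate from Let's Encrypt, and forwards requests with the `proxy_pass` directive to kubectl proxy, as shown below. The dashboard service is then reachable locally on port 8888 and externally through Nginx.

```bash
# Proxy (supervisor can be used to keep it running)
$ kubectl proxy --accept-hosts='^*$'
$ kubectl proxy --port=8888 --accept-hosts='^*$'

# Test that the proxy works (it listens on port 8001 by default)
$ curl -X GET -L http://localhost:8001

# Local (nginx can be used)
proxy_pass http://localhost:8001;
proxy_pass http://localhost:8888;

# Access the following URL from outside
https://mydomain/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/#/login
```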

Configuration files

```bash
# k8s.conf
client_max_body_size 80M;
client_body_buffer_size 128k;
proxy_connect_timeout 600;
proxy_read_timeout 600;
proxy_send_timeout 600;

server {
    listen 8080 ssl;
    server_name _;

    ssl_certificate /etc/kubernetes/pki/ca.crt;
    ssl_certificate_key /etc/kubernetes/pki/ca.key;

    access_log /var/log/nginx/k8s.access.log;
    error_log /var/log/nginx/k8s.error.log error;

    location / {
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_set_header Host $http_host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_pass http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/;
    }
}
```

```bash
# k8s.conf
[program:k8s-master]
command=kubectl proxy --accept-hosts='^*$'
user=vagrant
environment=KUBECONFIG="/home/vagrant/.kube/config"
stopasgroup=true
autostart=true
autorestart=unexpected
stdout_logfile_maxbytes=1MB
stdout_logfile_backups=10
stderr_logfile_maxbytes=1MB
stderr_logfile_backups=10
stderr_logfile=/var/log/supervisor/k8s-stderr.log
stdout_logfile=/var/log/supervisor/k8s-stdout.log
```

The configuration file is shown below

```yaml
# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque
---
kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames:
      [
        "kubernetes-dashboard-key-holder",
        "kubernetes-dashboard-certs",
        "kubernetes-dashboard-csrf",
      ]
    verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
  # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames:
      [
        "heapster",
        "http:heapster:",
        "https:heapster:",
        "dashboard-metrics-scraper",
        "http:dashboard-metrics-scraper",
      ]
    verbs: ["get"]
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: registry.cn-shanghai.aliyuncs.com/jieee/dashboard:v2.0.4
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
              # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: "runtime/default"
    spec:
      containers:
        - name: dashboard-metrics-scraper
          image: registry.cn-shanghai.aliyuncs.com/jieee/metrics-scraper:v1.0.4
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}
```

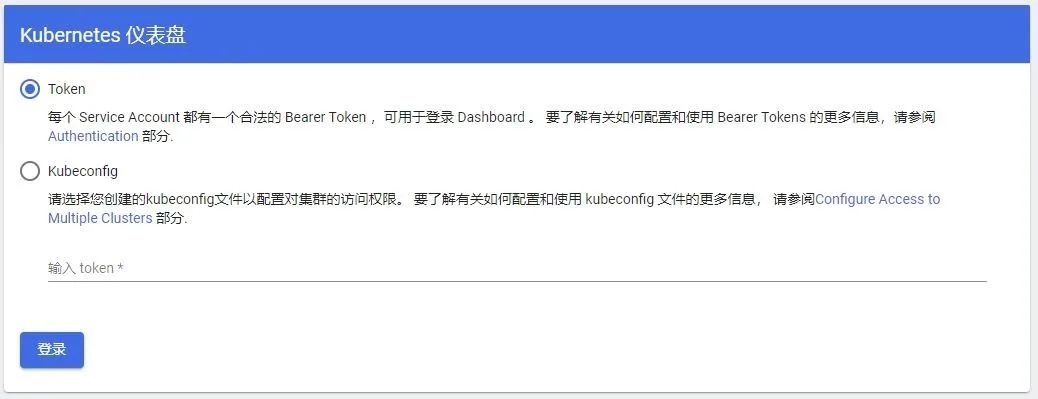

Option one: logging in to dashboard (with configuration files)

- Using a token

- Using a key file

```bash
# Create an administrator account (dashboard)
$ cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
EOF

# Bind the user to the existing cluster-admin role
$ cat <<EOF | kubectl apply -f -
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: admin-user
    namespace: kubernetes-dashboard
EOF

# Get the token used for access
$ kubectl -n kubernetes-dashboard describe secret \
    $(kubectl -n kubernetes-dashboard get secret \
    | grep admin-user | awk '{print $1}')
```
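`kubectl describe secret` prints several fields besides the token. A minimal sketch of keeping only the token value follows, assuming the output contains a line of the form `token: <value>`; the function name `extract_token` is hypothetical.

```bash
# Print the token value from `kubectl describe secret` output read on stdin.
extract_token() {
  awk '$1 == "token:" { print $2; exit }'
}
```

Piping the describe command above into `extract_token` leaves just the string to paste into the dashboard login page.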

In Chrome, click on a blank area of the warning page and type: thisisunsafe

In Firefox, when the certificate is reported as expired, add an exception to proceed

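Option two: granting dashboard permissions (without configuration files)

- If a permission problem is reported after logging in, do the following

- Bind the serviceaccount to cluster-admin

- Grant the serviceaccount access and management rights over the whole cluster

```bash
# Create the serviceaccount
$ kubectl create serviceaccount dashboard-admin -n kube-system

# Bind the serviceaccount to cluster-admin
# This grants the serviceaccount access and management rights over the whole cluster
$ kubectl create clusterrolebinding \
    dashboard-cluster-admin --clusterrole=cluster-admin \
    --serviceaccount=kube-system:dashboard-admin

# List the secrets to find the one that holds the serviceaccount token
$ kubectl get secret -n kube-system

# The command above shows the secret name, here dashboard-admin-token-slfcr
$ kubectl describe secret dashboard-admin-token-slfcr -n kube-system

# Open the dashboard in a browser and paste the token to log in

# Shortcut for viewing the token
$ kubectl describe secrets -n kube-system \
    $(kubectl -n kube-system get secret | awk '/admin/{print $1}')
```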