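1. Using pipelines

1.1 ITEM_PIPELINES in settings.py is a dictionary, so a project can register more than one pipeline.

1.2 Why use multiple pipelines

- A project may contain several spiders, and different pipelines can handle the items produced by different spiders.

- The items from a single spider may need several kinds of processing, such as being stored in different databases.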

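1.3 Parameters of process_item

item: the item object that the spider file hands over with yield.

spider: the spider object that is currently running and produced the item (a project may contain several spider files).

    class MyspiderPipeline:
        def process_item(self, item, spider):
            print(item)
            # Return the item so that the next pipeline receives it
            return item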

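1.4 How a pipeline class picks out the items it should handle when pipelines.py contains several pipeline classes

- Check the spider name: spider.name == "spider name"

- Add a key-value pair to the item in the spider: item["com_from"] = "spider name"

- Use isinstance(item, MyspiderItem) to check whether the item is an instance of a particular class in items.py.

Test any one of these conditions with an if statement, for example:

    class MyspiderPipeline:
        def process_item(self, item, spider):
            # Alternative check: if item.get("com_from") == "jd":
            if spider.name == "jd":
                print(item)
                print(spider.name)
            # Always return the item so that later pipelines still receive it
            return item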
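The third option, isinstance, is not shown in the code above. A minimal sketch of it follows, runnable without Scrapy installed. JdItem and TaobaoItem are hypothetical stand-ins for classes declared in items.py, and SimpleNamespace stands in for the spider object; in a real project the items would come from the spider through yield.

```python
from types import SimpleNamespace


class JdItem(dict):
    """Hypothetical stand-in for an item class declared in items.py."""


class TaobaoItem(dict):
    """Second hypothetical item class, used to show that it is skipped."""


class JdOnlyPipeline:
    def __init__(self):
        self.handled = []

    def process_item(self, item, spider):
        # Only act on items of the expected class
        if isinstance(item, JdItem):
            self.handled.append(dict(item))
        # Pass every item on, handled or not
        return item


pipeline = JdOnlyPipeline()
spider = SimpleNamespace(name="jd")  # stand-in for the running spider
for item in (JdItem(title="phone"), TaobaoItem(title="book")):
    returned = pipeline.process_item(item, spider)

print(pipeline.handled)
```

Routing by item class keeps working when one spider yields several kinds of items, which a check on spider.name cannot distinguish.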

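1.5 Two hook methods that Scrapy calls automatically

- open_spider(self, spider) runs when the spider is opened, before any call to process_item().

- close_spider(self, spider) runs when the spider is closed, after the last call to process_item().

- Both methods receive the spider object as their second parameter (it is not an item), so do not leave it out. Each method runs only once per crawl.

    class MyspiderPipeline:
        def open_spider(self, spider):
            print("Spider started -----------")

        def process_item(self, item, spider):
            # Alternative check: if item.get("com_from") == "jd":
            if spider.name == "jd":
                print(item)
                print(spider.name)
            return item

        def close_spider(self, spider):
            print("Spider finished ------------")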
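A common use of these two hooks is to open a resource once and release it once. The sketch below assumes a JSON Lines output file; the class name JsonLinesPipeline, the file name items.jl and the sample items are all made up for illustration, and the hooks are called by hand here where Scrapy would normally call them.

```python
import json
import os
import tempfile
from types import SimpleNamespace


class JsonLinesPipeline:
    def __init__(self, path):
        self.path = path
        self.file = None

    def open_spider(self, spider):
        # Runs once: open the output file before any item arrives
        self.file = open(self.path, "w", encoding="utf-8")

    def process_item(self, item, spider):
        # Runs for every item: write one JSON document per line
        self.file.write(json.dumps(dict(item), ensure_ascii=False) + "\n")
        return item

    def close_spider(self, spider):
        # Runs once: flush and close the file after the last item
        self.file.close()


output_path = os.path.join(tempfile.mkdtemp(), "items.jl")
pipeline = JsonLinesPipeline(output_path)
spider = SimpleNamespace(name="jd")  # stand-in for the running spider

pipeline.open_spider(spider)
for item in ({"title": "phone"}, {"title": "laptop"}):
    pipeline.process_item(item, spider)
pipeline.close_spider(spider)

with open(output_path, encoding="utf-8") as handle:
    saved = [json.loads(line) for line in handle]
print(saved)
```

Opening the file in open_spider avoids reopening it for every item, and close_spider guarantees the data is flushed when the crawl ends.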

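1.6 Notes

- In the ITEM_PIPELINES dictionary in the settings file, a smaller weight (number) means a higher priority: that pipeline runs earlier.

- Every pipeline class in pipelines.py (including custom classes that process or save data) must define process_item(self, item, spider); the method cannot be renamed.

- The item that a pipeline receives must be yielded by the spider, and only the item types that Scrapy supports (for example a dict or a scrapy.Item object) are passed on to the pipelines.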
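The ordering rule in the first note can be checked with plain Python. In this sketch the project name myspider and the three pipeline class names are hypothetical; only the ITEM_PIPELINES setting name comes from Scrapy. By convention the weights are chosen from the range 0 to 1000.

```python
# settings.py fragment: dotted path of each pipeline class mapped to its weight
ITEM_PIPELINES = {
    "myspider.pipelines.SaveToMongoPipeline": 400,
    "myspider.pipelines.CleanFieldsPipeline": 300,
    "myspider.pipelines.PrintPipeline": 500,
}

# Items pass through the pipelines in ascending order of weight
execution_order = sorted(ITEM_PIPELINES, key=ITEM_PIPELINES.get)
for position, path in enumerate(execution_order, start=1):
    print(position, path)
```

Leaving gaps between the weights makes it easy to slot a new pipeline between two existing ones later.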

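2. Using the logging module

The logging module records log messages.

2.1 Printing messages to the console

Pass the current module name (__name__) to logging.getLogger() so that each log line shows where it came from; when no name is given, the logger is the root logger.

    import logging

    import scrapy

    # Module-level logger named after the current module
    logger = logging.getLogger(__name__)


    class JdSpider(scrapy.Spider):
        name = 'jd'
        allowed_domains = ['jd.com']
        start_urls = ['http://www.jd.com/']

        def parse(self, response):
            logger.warning("this is warning")
            logger.error("this is error")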

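2.2 Saving messages to a log file

Add LOG_FILE to the settings file:

    LOG_FILE = "./log.txt"

The messages are then saved to log.txt instead of being printed to the console.

2.3 More on the logging module

A detailed guide to the logging module: http://www.ityouknow.com/python/2019/10/13/python-logging-032.html

Configuring the output style with basicConfig:

https://www.cnblogs.com/felixzh/p/6072417.html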
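As a minimal sketch of what a basicConfig style setting looks like, the following uses only the standard library and runs outside Scrapy. The format string, the logger name demo_spider and the in-memory stream are choices made for this illustration; force=True needs Python 3.8 or later. Scrapy configures logging itself when a crawl is started with scrapy crawl, so this is mainly useful for standalone scripts.

```python
import io
import logging

log_stream = io.StringIO()  # in-memory target so the output can be inspected

logging.basicConfig(
    level=logging.WARNING,                          # drop DEBUG and INFO records
    format="%(levelname)s [%(name)s] %(message)s",  # layout of each log line
    stream=log_stream,
    force=True,                                     # replace any existing root handlers
)

logger = logging.getLogger("demo_spider")
logger.info("this is info")        # below WARNING, so it is not written
logger.warning("this is warning")
logger.error("this is error")

print(log_stream.getvalue(), end="")
```

Passing filename="log.txt" in place of stream would send the same lines to a file.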

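Review

3. Tencent spider

Crawl the job postings on the Tencent recruitment site to learn how to request the following pages.

http://hr.tencent.com/position.php

Create the project:

    scrapy startproject tencent

Create the spider:

    scrapy genspider hr careers.tencent.com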

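3.1 Sending requests in Scrapy

- scrapy.Request: sends a GET request by default.

- scrapy.FormRequest: sends a POST request.

- Both are used together with yield.

3.2 Common parameters

- url: the URL to request.

- callback: the callback function. Scrapy calls it once the request has been downloaded and passes the response to it as an argument.

- meta: a dictionary for passing data between parse functions (usually the item being built). Read it with response.meta.get("xxx"). Scrapy also stores some information of its own in meta, such as the download latency and the request depth.

- dont_filter: Scrapy filters out duplicate URLs by default. Setting dont_filter=True stops the duplicate filter from dropping this request, which matters for URLs that have to be requested more than once.

    yield scrapy.Request(url, callback=None, method='GET', headers=None, body=None,
                         cookies=None, meta=None, encoding='utf-8', priority=0,
                         dont_filter=False, errback=None, flags=None)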
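The effect of dont_filter can be pictured with a toy model. The class below is a simplified illustration written for these notes, not Scrapy's implementation: Scrapy's real filter fingerprints more than the raw URL string, and the class and method names here are invented.

```python
import hashlib


class SimpleDupeFilter:
    """Toy model of URL de-duplication (not Scrapy's real implementation)."""

    def __init__(self):
        self.seen = set()

    def should_schedule(self, url, dont_filter=False):
        """Return True when the request would be sent."""
        if dont_filter:
            # Bypass the filter entirely; the seen-set is left untouched
            return True
        fingerprint = hashlib.sha1(url.encode("utf-8")).hexdigest()
        if fingerprint in self.seen:
            return False  # already requested once: dropped as a duplicate
        self.seen.add(fingerprint)
        return True


dupefilter = SimpleDupeFilter()
results = [
    dupefilter.should_schedule("https://example.com/page/1"),
    dupefilter.should_schedule("https://example.com/page/1"),
    dupefilter.should_schedule("https://example.com/page/1", dont_filter=True),
]
print(results)  # [True, False, True]
```

The second call is dropped as a duplicate, while the third goes through because dont_filter=True skips the check.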

3.3 Spider code

    import json

    import scrapy


    class HrSpider(scrapy.Spider):
        name = 'hr'
        allowed_domains = ['careers.tencent.com']
        list_url = 'https://careers.tencent.com/tencentcareer/api/post/Query?timestamp=1589877488190&countryId=&cityId=&bgIds=&productId=&categoryId=&parentCategoryId=&attrId=&keyword=&pageIndex={}&pageSize=10&language=zh-cn&area=cn'
        detail_url = 'https://careers.tencent.com/tencentcareer/api/post/ByPostId?timestamp=1589878052132&postId={}&language=zh-cn'
        start_urls = [list_url.format(1)]
        num = 0  # number of list pages parsed so far

        def parse(self, response):
            """Parse one list page and schedule the following pages."""
            res = json.loads(response.text)['Data']['Posts']
            self.num += 1
            for index, data in enumerate(res, start=1):
                job_msg = {}
                job_msg['num'] = '%s-%s' % (self.num, index)
                job_msg['PostId'] = data.get("PostId")
                job_msg['RecruitPostName'] = data.get("RecruitPostName")
                job_msg['CountryName'] = data.get("CountryName")
                job_msg['LocationName'] = data.get("LocationName")
                PostId = data.get("PostId")
                # Hand the partly filled record to the detail callback through meta
                yield scrapy.Request(url=self.detail_url.format(PostId),
                                     meta={"job_msg": job_msg},
                                     callback=self.parse_detail)

            # Pagination: request pages 2 to 4; the duplicate filter drops repeated URLs
            for page in range(2, 5):
                yield scrapy.Request(url=self.list_url.format(page),
                                     callback=self.parse)

        def parse_detail(self, response):
            """Add the detail-page fields to the job record."""
            job_msg = response.meta.get("job_msg")
            data = json.loads(response.text)
            job_msg["Responsibility"] = data.get("Responsibility")
            job_msg["Requirement"] = data.get("Requirement")
            yield job_msg
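The long list_url template can also be assembled from a dictionary, which makes the paging parameter easier to see. This is a sketch under one assumption: that the API still answers when the empty filter parameters and the timestamp are left out, which has not been verified here. The helper name build_list_url is made up; the endpoint and parameter names are taken from the URL above.

```python
from urllib.parse import urlencode

# Endpoint copied from list_url in the spider above
LIST_API = "https://careers.tencent.com/tencentcareer/api/post/Query"


def build_list_url(page_index, page_size=10):
    """Build the list-page URL for one page (hypothetical helper)."""
    params = {
        "pageIndex": page_index,  # the only value that changes between pages
        "pageSize": page_size,
        "language": "zh-cn",
        "area": "cn",
    }
    return LIST_API + "?" + urlencode(params)


# The same pages that the spider requests in its pagination loop
page_urls = [build_list_url(page) for page in range(2, 5)]
for url in page_urls:
    print(url)
```

Inside the spider, each of these URLs would be passed to scrapy.Request with callback=self.parse, exactly as in the pagination loop.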

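4. Introducing and using items

items.py declares in advance the names of the fields that the spider will scrape. Once an item class is defined, the spider must use the matching key names: assigning to a key that was not declared raises an error. An item may fill fewer fields than it declares, but not more.

To declare a field: field_name = scrapy.Field()

After defining the class, import it in the file that needs it and instantiate it before use.

    # items.py
    import scrapy


    class TencentItem(scrapy.Item):
        # define the fields for your item here like:
        title = scrapy.Field()
        position = scrapy.Field()
        date = scrapy.Field()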
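The "fewer but not more" rule can be illustrated without installing Scrapy. StrictItem below is a hypothetical stand-in that imitates how an item rejects undeclared keys; it is not Scrapy's code, and the sample values are invented. With a real scrapy.Item the same assignment to an undeclared key also raises KeyError.

```python
class StrictItem(dict):
    """Stand-in for scrapy.Item: only declared field names may be set."""

    fields = ()

    def __setitem__(self, key, value):
        if key not in self.fields:
            raise KeyError(f"{type(self).__name__} does not support field: {key}")
        super().__setitem__(key, value)


class TencentItem(StrictItem):
    # Same field names as the items.py example above
    fields = ("title", "position", "date")


item = TencentItem()
item["title"] = "Backend Engineer"  # declared field: accepted
item["position"] = "Shenzhen"       # 'date' stays unset, which is allowed

try:
    item["salary"] = "unknown"      # undeclared field: rejected
except KeyError as exc:
    error_message = exc.args[0]

print(dict(item))
print(error_message)
```

Failing early on an unexpected key catches typos in field names before they reach the pipelines.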

5. Sunshine government affairs platform

http://wz.sun0769.com/index.php/question/questionType?type=4&page=0

    # yg.py
    import scrapy


    class YgSpider(scrapy.Spider):
        name = 'yg'
        allowed_domains = ['wz.sun0769.com']
        start_urls = ['http://wz.sun0769.com/political/index/politicsNewest?id=1&page=1']

        def parse(self, response):
            # Each <li> is one row of the list page
            ul = response.xpath("//ul[@class='title-state-ul']/li")
            for li in ul:
                content_dict = {}
                content_dict['num'] = li.xpath("./span[@class='state1']/text()").extract_first().strip()
                content_dict['status'] = li.xpath("./span[@class='state2']/text()").extract_first().strip()
                content_dict['title'] = li.xpath("./span[@class='state3']/a/text()").extract_first().strip()
                content_dict['feeback_time'] = li.xpath("./span[@class='state4']/text()").extract_first().strip()
                content_dict['build_time'] = li.xpath("./span[last()]/text()").extract_first()
                detail_url = 'http://wz.sun0769.com' + li.xpath("./span[@class='state3']/a/@href").extract_first()
                # Pass the partly filled record to the detail callback through meta
                yield scrapy.Request(url=detail_url,
                                     callback=self.parse_detail,
                                     meta={'conten_dict': content_dict})

            # Follow the next-page link; skip it when the link is missing
            url = response.xpath("//a[@class='arrow-page prov_rota']/@href").extract_first()
            if url:
                next_url = 'http://wz.sun0769.com' + url
                yield scrapy.Request(url=next_url, callback=self.parse)

        def parse_detail(self, response):
            content_dict = response.meta.get("conten_dict")
            content_dict['username'] = response.xpath("//span[@class='fl details-head']/text()").extract()[-1].strip()
            content_dict['content'] = response.xpath("//div[@class='details-box']/pre/text()").extract_first().strip()
            img = response.xpath("//div[@class='clear details-img-list Picture-img']/img/@src").extract()
            # Store the image URLs, or a placeholder when the post has none
            content_dict['img'] = img if img else "No image"
            yield content_dict

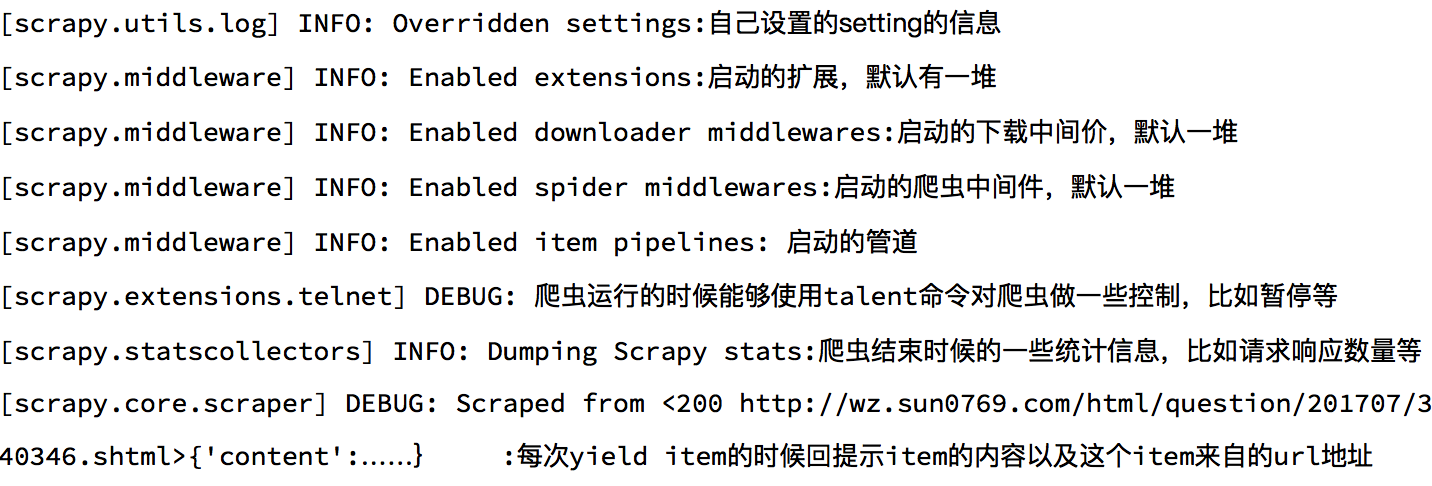

6. Understanding the debug output

    2019-01-19 09:50:48 [scrapy.utils.log] INFO: Scrapy 1.5.1 started (bot: tencent)
    2019-01-19 09:50:48 [scrapy.utils.log] INFO: Versions: lxml 4.2.5.0, libxml2 2.9.5, cssselect 1.0.3, parsel 1.5.0, w3lib 1.19.0, Twisted 18.9.0, Python 3.6.5 (v3.6.5:f59c0932b4, Mar 28 2018, 17:00:18) [MSC v.1900 64 bit (AMD64)], pyOpenSSL 18.0.0 (OpenSSL 1.1.0i 14 Aug 2018), cryptography 2.3.1, Platform Windows-10-10.0.17134-SP0  ### versions of the modules and platform that the Scrapy framework depends on
    2019-01-19 09:50:48 [scrapy.crawler] INFO: Overridden settings: {'BOT_NAME': 'tencent', 'NEWSPIDER_MODULE': 'tencent.spiders', 'ROBOTSTXT_OBEY': True, 'SPIDER_MODULES': ['tencent.spiders'], 'USER_AGENT': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_5) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/59.0.3071.115 Safari/537.36'}  ### which custom settings were applied
    2019-01-19 09:50:48 [scrapy.middleware] INFO: Enabled extensions:  ### enabled extensions
    ['scrapy.extensions.corestats.CoreStats',
     'scrapy.extensions.telnet.TelnetConsole',
     'scrapy.extensions.logstats.LogStats']
    2019-01-19 09:50:48 [scrapy.middleware] INFO: Enabled downloader middlewares:  ### enabled downloader middlewares
    ['scrapy.downloadermiddlewares.robotstxt.RobotsTxtMiddleware',
     'scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware',
     'scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware',
     'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware',
     'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware',
     'scrapy.downloadermiddlewares.retry.RetryMiddleware',
     'scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware',
     'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware',
     'scrapy.downloadermiddlewares.redirect.RedirectMiddleware',
     'scrapy.downloadermiddlewares.cookies.CookiesMiddleware',
     'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware',
     'scrapy.downloadermiddlewares.stats.DownloaderStats']
    2019-01-19 09:50:48 [scrapy.middleware] INFO: Enabled spider middlewares:  ### enabled spider middlewares
    ['scrapy.spidermiddlewares.httperror.HttpErrorMiddleware',
     'scrapy.spidermiddlewares.offsite.OffsiteMiddleware',
     'scrapy.spidermiddlewares.referer.RefererMiddleware',
     'scrapy.spidermiddlewares.urllength.UrlLengthMiddleware',
     'scrapy.spidermiddlewares.depth.DepthMiddleware']
    2019-01-19 09:50:48 [scrapy.middleware] INFO: Enabled item pipelines:  ### enabled pipelines
    ['tencent.pipelines.TencentPipeline']
    2019-01-19 09:50:48 [scrapy.core.engine] INFO: Spider opened  ### crawling starts
    2019-01-19 09:50:48 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)
    2019-01-19 09:50:48 [scrapy.extensions.telnet] DEBUG: Telnet console listening on 127.0.0.1:6023
    2019-01-19 09:50:51 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://hr.tencent.com/robots.txt> (referer: None)  ### robots.txt is fetched
    2019-01-19 09:50:51 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://hr.tencent.com/position.php?&start=#a0> (referer: None)  ### the request for start_urls
    2019-01-19 09:50:51 [scrapy.spidermiddlewares.offsite] DEBUG: Filtered offsite request to 'hr.tencent.com': <GET https://hr.tencent.com/position.php?&start=>  ### a filtered request: requests that the spider yields to the engine pass through the spider middlewares, and this URL is outside allowed_domains, so OffsiteMiddleware dropped it
    2019-01-19 09:50:51 [scrapy.core.engine] INFO: Closing spider (finished)  ### the spider closes
    2019-01-19 09:50:51 [scrapy.statscollectors] INFO: Dumping Scrapy stats:  ### statistics for this crawl
    {'downloader/request_bytes': 630,
     'downloader/request_count': 2,
     'downloader/request_method_count/GET': 2,
     'downloader/response_bytes': 4469,
     'downloader/response_count': 2,
     'downloader/response_status_count/200': 2,
     'finish_reason': 'finished',
     'finish_time': datetime.datetime(2019, 1, 19, 1, 50, 51, 558634),
     'log_count/DEBUG': 4,
     'log_count/INFO': 7,
     'offsite/domains': 1,
     'offsite/filtered': 12,
     'request_depth_max': 1,
     'response_received_count': 2,
     'scheduler/dequeued': 1,
     'scheduler/dequeued/memory': 1,
     'scheduler/enqueued': 1,
     'scheduler/enqueued/memory': 1,
     'start_time': datetime.datetime(2019, 1, 19, 1, 50, 48, 628465)}
    2019-01-19 09:50:51 [scrapy.core.engine] INFO: Spider closed (finished)

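7. Going deeper: the Scrapy shell

The Scrapy shell is an interactive terminal for trying out and debugging code without starting a spider. It is also handy for testing XPath expressions.

Usage:

    scrapy shell https://www.baidu.com/

- response.url: the URL of the current response

- response.request.url: the URL of the request that produced the current response

- response.headers: the response headers

- response.body: the response body, that is, the HTML source, as bytes

- response.request.headers: the headers of the request that produced the current response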

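8. Scrapy settings

Why a settings file is needed:

The settings file stores shared variables (such as the database address, account and password).

This makes them easy for you and others to change.

Variable names are normally written in all capitals, for example SQL_HOST = '192.168.0.1'

8.1 Using constants from settings in other files

- Import the constant from settings directly.

- Read it through the spider object.

Note: Scrapy only loads setting names written in capital letters, so name new constants in capitals as well; otherwise their values cannot be read through the settings object.

project_name/spiders/spider_name.py (a spider reading constants defined in settings.py):

    import scrapy

    from project_name.settings import MONGO_HOST  # Option 1: import the constant directly


    class DemoSpider(scrapy.Spider):
        name = 'spider_name'
        allowed_domains = ['baidu.com']
        start_urls = ['http://www.baidu.com']

        def parse(self, response):
            # Option 2: read it through the spider object
            self.settings["MONGO_HOST"]  # returns None if the setting is missing
            self.settings.get("MONGO_HOST", "default value")  # returns the default if the setting is missing

project_name/pipelines.py (a pipeline reading constants defined in settings.py through the spider object):

    from project_name.settings import MONGO_HOST  # Option 1: import the constant directly


    class DemoPipeline(object):
        def process_item(self, item, spider):
            # Option 2: read the constant through the spider object
            spider.settings.get("MONGO_HOST")
            return item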
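A pipeline can also receive its settings once, at construction time, through the from_crawler class method that Scrapy calls when it builds the pipeline. The sketch below runs without Scrapy: SimpleNamespace with a plain dict stands in for the crawler and its settings, and the class name MongoSettingsPipeline, the stored_on key and the localhost default are invented for illustration.

```python
from types import SimpleNamespace


class MongoSettingsPipeline:
    def __init__(self, mongo_host):
        self.mongo_host = mongo_host

    @classmethod
    def from_crawler(cls, crawler):
        # crawler.settings exposes the values from settings.py
        return cls(mongo_host=crawler.settings.get("MONGO_HOST", "localhost"))

    def process_item(self, item, spider):
        # Hypothetical use of the setting: record where the item would be stored
        item["stored_on"] = self.mongo_host
        return item


# Stand-in for the crawler object; a dict offers the same .get() call used above
fake_crawler = SimpleNamespace(settings={"MONGO_HOST": "192.168.0.1"})
pipeline = MongoSettingsPipeline.from_crawler(fake_crawler)
result = pipeline.process_item({"title": "demo"}, spider=None)
print(result)
```

Reading the value once in from_crawler keeps process_item free of repeated settings lookups.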

- More details on the settings file: https://www.cnblogs.com/cnkai/p/7399573.html
