Cloud Native Introduction

- Cloud native definition (GitHub): https://github.com/cncf/toc/blob/main/DEFINITION.md#%E4%B8%AD%E6%96%87%E7%89%88%E6%9C%AC

- Cloud native landscape: https://landscape.cncf.io/

- Kubernetes documentation: https://kubernetes.io/docs/home/

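- Cloud native technology stack
    - Containers: container technology, typified by Docker
    - Service mesh
    - Microservices: loose coupling
    - Immutable infrastructure
    - Declarative APIs
- Cloud native characteristics
    - Follows the Twelve-Factor App methodology
        - Codebase: one codebase
        - Dependencies: explicitly declare and isolate dependencies
        - Config: store config in the environment
        - Backing services: treat backing services as attached resources
        - Build, release, run: keep the three stages strictly separate
        - Processes: run as stateless processes
        - Port binding: expose services via port binding
        - Concurrency: scale out via the process model
        - Disposability: fast startup and shutdown
        - Dev/prod parity: keep development and production environments equivalent
        - Logs: treat logs as event streams
        - Admin processes: run management tasks as one-off processes
    - Microservice-oriented architecture
    - Self-service agile architecture
    - API-based collaboration
    - Anti-fragility
- Cloud native project categories: https://www.cncf.io/projects/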

Kubernetes Overview

- Official site: https://kubernetes.io/docs/home/

- GitHub: https://github.com/kubernetes/kubernetes

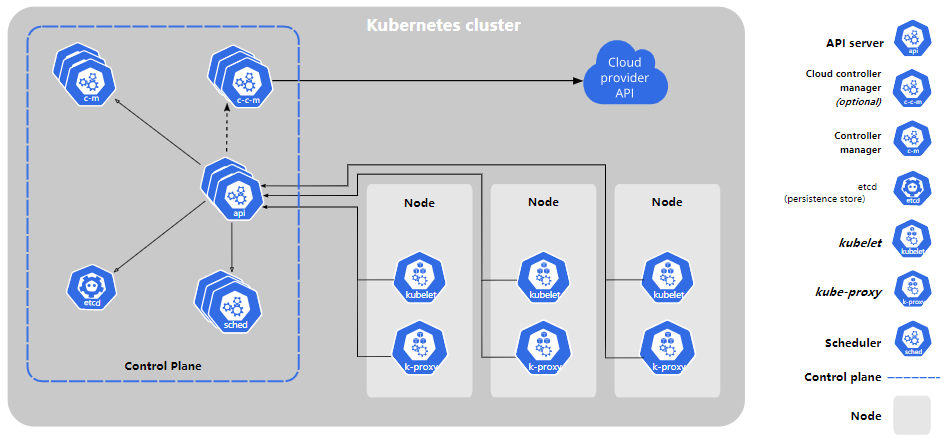

Kubernetes Components

Component documentation: https://kubernetes.io/docs/concepts/overview/components/

Master

kube-apiserver

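Exposes RESTful interfaces for creating, reading, updating, deleting, and watching every kind of Kubernetes resource object, such as pods, services, and replication controllers. The apiserver serves these REST operations and is the frontend to the cluster's shared state; every other component interacts with the cluster through it. Default port: 6443.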

kube-scheduler

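Control-plane process that assigns Pods to nodes. Listening port: 10251.

Scheduling works as follows:

- For each Pod in the list of Pods waiting to be scheduled, the scheduling algorithm picks the most suitable Node from the available Nodes.

- The kubelet on that Node watches the apiserver, sees the Pod binding produced by the scheduler, fetches the Pod spec, pulls the images, and starts the containers.

Node selection (priority) policies:

- LeastRequestedPriority: prefer the node with the lowest resource consumption

- CalculateNodeLabelPriority: prefer nodes that carry a specified label

- BalancedResourceAllocation: among the candidate nodes, prefer the one whose resource utilization is most balanced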
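To make the two resource-based policies concrete, here is a minimal Bash sketch of their scoring. It assumes the classic 0-10 formulas of the legacy priority functions: per resource `(capacity - requested) * 10 / capacity`, averaged over CPU and memory, for LeastRequestedPriority, and `10 - |cpu_fraction - mem_fraction| * 10` for BalancedResourceAllocation. Integer arithmetic, the argument order, and the function names are illustrative assumptions; `requested` is taken to already include the Pod being placed, and the real scheduler sums many weighted scores.

```bash
#!/usr/bin/env bash
# Illustrative sketch only, not the kube-scheduler implementation.
# Arguments (hypothetical): cpu_capacity cpu_requested mem_capacity mem_requested
# CPU in millicores, memory in MiB; "requested" includes the pod being scheduled.

# LeastRequestedPriority-style score: more free capacity -> higher score (0-10).
least_requested_score() {
  local cpu_cap=$1 cpu_req=$2 mem_cap=$3 mem_req=$4
  local cpu=$(( (cpu_cap - cpu_req) * 10 / cpu_cap ))
  local mem=$(( (mem_cap - mem_req) * 10 / mem_cap ))
  echo $(( (cpu + mem) / 2 ))
}

# BalancedResourceAllocation-style score: CPU and memory usage fractions
# closer together -> higher score (0-10). Fractions are kept as percentages.
balanced_score() {
  local cpu_cap=$1 cpu_req=$2 mem_cap=$3 mem_req=$4
  local cpu_pct=$(( cpu_req * 100 / cpu_cap ))
  local mem_pct=$(( mem_req * 100 / mem_cap ))
  local diff=$(( cpu_pct > mem_pct ? cpu_pct - mem_pct : mem_pct - cpu_pct ))
  echo $(( 10 - diff * 10 / 100 ))
}
```

For example, a 4-core/8 GiB node with 1000m CPU and 2048 MiB requested scores 7 and 10; raising the CPU request to 3000m drops the scores to 4 and 5, so a less loaded, better balanced node would be preferred.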

kube-controller-manager

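Contains a set of sub-controllers (replication controller, node controller, namespace controller, service account controller, and so on) and acts as the cluster's internal management and control center. It manages Nodes, Pod replicas, service endpoints, namespaces, service accounts, and resource quotas. When a Node goes down unexpectedly, the controller manager detects it promptly and runs the automatic repair process so that the number of Pod replicas in the cluster always matches the desired state. Node monitoring behaves as follows:

- Node status is checked every 5s (`--node-monitor-period`)

- If no heartbeat is received from a node, the node is marked unreachable

- It waits 40s before marking the node unreachable (`--node-monitor-grace-period`)

- If a node marked unreachable has not recovered after 5 minutes (`--pod-eviction-timeout`, default `5m0s`), the controller manager deletes all Pods on that node so that they are recreated on other available nodes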
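The timeline above can be modelled as a small Bash function. This is a simplified sketch assuming the default timings quoted above (40s grace period, then 5 minutes before Pods are evicted) and ignoring the 5s polling granularity and taint-based eviction details; the variable and function names are hypothetical.

```bash
#!/usr/bin/env bash
# Simplified model of the node-lifecycle timeline (default timings assumed).
NODE_MONITOR_GRACE=40      # seconds without a heartbeat before the node is marked unreachable
POD_EVICTION_TIMEOUT=300   # further seconds before the node's pods are evicted

# node_phase SECONDS_SINCE_LAST_HEARTBEAT
node_phase() {
  local since=$1
  if (( since < NODE_MONITOR_GRACE )); then
    echo Ready
  elif (( since < NODE_MONITOR_GRACE + POD_EVICTION_TIMEOUT )); then
    echo Unreachable
  else
    echo Evicting
  fi
}
```

For instance, `node_phase 100` prints `Unreachable`: the node has missed heartbeats for longer than the grace period, but its Pods have not been evicted yet.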

ETCD

Stores all Kubernetes cluster data and carries state changes between components (always by way of the apiserver).

Node

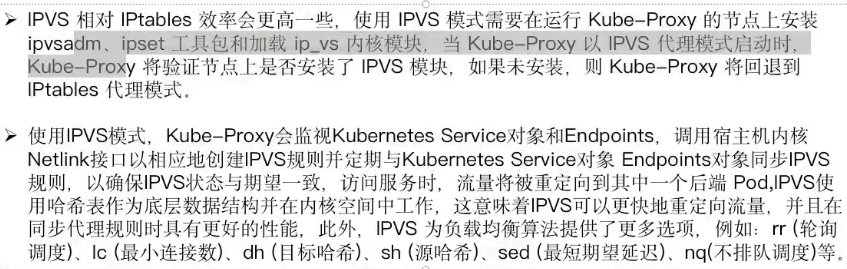

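- kube-proxy

Runs on every Kubernetes node and reflects on that node the Services defined in Kubernetes. It can do simple TCP, UDP, and SCTP stream forwarding, or round-robin TCP, UDP, and SCTP forwarding across a set of backends. A Service must be created through the apiserver to configure the proxy. In short, kube-proxy maintains network rules on the node and forwards connections for traffic to Kubernetes Services. Depending on the version, it supports three modes:

- userspace: used before Kubernetes 1.1, phased out after 1.2

- iptables: supported since 1.1, the default since 1.2

- ipvs: introduced in 1.9, GA in 1.11; requires the ipvsadm and ipset packages and the ip_vs kernel modules to be loaded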
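Before switching kube-proxy to ipvs mode, it helps to confirm that the kernel modules are loaded. The sketch below reads `lsmod` output on stdin and prints any module that is missing. The module list is an assumption based on common IPVS setups (round-robin, weighted round-robin, and source-hashing schedulers plus connection tracking); on kernels older than 4.19 the conntrack module is named `nf_conntrack_ipv4` instead. The function and variable names are hypothetical.

```bash
#!/usr/bin/env bash
# Assumed module set for IPVS mode; adjust to your kernel and scheduler choice.
IPVS_MODULES="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack"

# Reads `lsmod` output (with header) on stdin; prints each missing module on its own line.
missing_ipvs_modules() {
  local loaded m
  loaded=$(awk 'NR > 1 { print $1 }')
  for m in $IPVS_MODULES; do
    printf '%s\n' "$loaded" | grep -qx "$m" || echo "$m"
  done
}
```

On a node, `lsmod | missing_ipvs_modules` prints nothing when everything is loaded; any module it reports can be loaded with `modprobe -a <module>...`.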

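- kubelet

The agent that runs on each node and watches the Pods assigned to that node. Its functions:

- Reports the node's status to the master (apiserver)

- Receives instructions and creates the Docker containers for Pods

- Prepares the volumes that Pods need

- Reports Pod status

- Runs container health checks on the node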

Other components

- CoreDNS: provides DNS and name resolution inside the cluster


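Kubernetes Cluster Deployment

Deploy the cluster automatically with kubeasz: https://github.com/easzlab/kubeasz

Node (worker) sizing, as high as practical: 48 cores, 128 GB RAM, 2 TB disk, 10 GbE NIC

etcd: 8 cores, 16 GB RAM, 300 GB on SSD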

Master: 16 cores, 16 GB RAM

Cluster plan

Operating system: Ubuntu 20.04 LTS

- The time zone on all cluster nodes must be kept in sync: `ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime`

| Hostname | IP | Roles | Notes |
| ---- | ---- | ---- | ---- |
| k8s-ha01 | 10.168.56.113 | haproxy, keepalived | kubeasz deployment node |
| k8s-ha02 | 10.168.56.114 | haproxy, keepalived | |
| k8s-harbor01 | 10.168.56.111 | harbor | |
| k8s-harbor02 | 10.168.56.112 | harbor | |
| k8s-master01 | 10.168.56.211 | kubectl, apiserver, controller-manager, etcd, docker | |
| k8s-master02 | 10.168.56.212 | | |
| k8s-master03 | 10.168.56.213 | | |
| k8s-node01 | 10.168.56.214 | kubelet, kube-proxy, docker | |
| k8s-node02 | 10.168.56.215 | | |
| k8s-node03 | 10.168.56.216 | | |

Configure a self-signed HTTPS certificate for Harbor

1. Configure HTTPS for Harbor:

```bash
root@k8s-harbor01:~# cd /apps/harbor/
root@k8s-harbor01:/apps/harbor# mkdir certs
root@k8s-harbor01:/apps/harbor# cd certs/
root@k8s-harbor01:/apps/harbor/certs# openssl genrsa -out harbor-ca.key
root@k8s-harbor01:/apps/harbor/certs# openssl req -x509 -new -nodes -key harbor-ca.key -subj "/CN=harbor.cropy.cn" -days 7120 -out harbor-ca.crt
root@k8s-harbor01:/apps/harbor/certs# vim ../harbor.yml
hostname: harbor.cropy.cn

# http related config
http:
  # port for http, default is 80. If https enabled, this port will redirect to https port
  port: 80

# https related config
https:
  # https port for harbor, default is 443
  port: 443
  certificate: /apps/harbor/certs/harbor-ca.crt
  private_key: /apps/harbor/certs/harbor-ca.key

harbor_admin_password: Harbor12345
data_volume: /data/harbor

root@k8s-harbor01:/apps/harbor/certs# cd ..
root@k8s-harbor01:/apps/harbor# ./install.sh --with-trivy   # run the installer
```

2. Test HTTPS from another machine (here k8s-harbor02):

```bash
root@k8s-harbor02:~# echo "10.168.56.111 harbor.cropy.cn" >> /etc/hosts
root@k8s-harbor02:~# ping harbor.cropy.cn
root@k8s-harbor02:~# mkdir -p /etc/docker/certs.d/harbor.cropy.cn
scp /apps/harbor/certs/harbor-ca.crt 10.168.56.112:/etc/docker/certs.d/harbor.cropy.cn   # run on harbor01: copy the certificate to the test node
root@k8s-harbor02:~# systemctl restart docker
root@k8s-harbor02:~# docker login harbor.cropy.cn
```
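One caveat with the certificate above: Docker releases built with Go 1.15 or later may reject server certificates that carry only a Common Name, failing with an error that the certificate "relies on legacy Common Name field". A sketch of a variant that also adds a subjectAltName, assuming OpenSSL 1.1.1 or newer (needed for `-addext`); the function names and the 2048-bit key size are illustrative choices, not part of Harbor's tooling.

```bash
#!/usr/bin/env bash
# Sketch: self-signed certificate for Harbor that also carries a subjectAltName.
# Assumes OpenSSL >= 1.1.1 (for -addext). Function names are hypothetical.

# gen_harbor_cert DOMAIN OUTDIR [DAYS]
gen_harbor_cert() {
  local domain="$1" outdir="$2" days="${3:-7120}"
  mkdir -p "$outdir" || return 1
  openssl genrsa -out "$outdir/harbor-ca.key" 2048 2>/dev/null || return 1
  openssl req -x509 -new -nodes -key "$outdir/harbor-ca.key" \
    -subj "/CN=${domain}" \
    -addext "subjectAltName=DNS:${domain}" \
    -days "$days" -out "$outdir/harbor-ca.crt"
}

# show_san CERT: print the DNS names listed in the certificate's subjectAltName.
show_san() {
  openssl x509 -in "$1" -noout -text | grep -o 'DNS:[^,]*'
}
```

`gen_harbor_cert harbor.cropy.cn /apps/harbor/certs` writes the same file names that harbor.yml references, and `show_san /apps/harbor/certs/harbor-ca.crt` should print `DNS:harbor.cropy.cn`.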

Configure HAProxy & keepalived

- See the Docker review chapter for details; the keepalived configuration is:

```bash
root@k8s-ha01:~# cat /etc/keepalived/keepalived.conf
! Configuration File for keepalived
global_defs {
   notification_email {
     acassen
   }
   notification_email_from Alexandre.Cassen@firewall.loc
   smtp_server 192.168.200.1
   smtp_connect_timeout 30
   router_id LVS_DEVEL
}

vrrp_instance VI_1 {
    state MASTER
    interface eth1
    garp_master_delay 10
    smtp_alert
    virtual_router_id 51
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.56.115 label eth1:1
        192.168.56.116 label eth1:2
        192.168.56.117 label eth1:3
        192.168.56.118 label eth1:4
    }
}
```

- HAProxy configuration for reference:

```bash
global
    log /dev/log local0
    log /dev/log local1 notice
    chroot /var/lib/haproxy
    stats socket /run/haproxy/admin.sock mode 660 level admin expose-fd listeners
    stats timeout 30s
    user haproxy
    group haproxy
    daemon

    # Default SSL material locations
    ca-base /etc/ssl/certs
    crt-base /etc/ssl/private

    # See: https://ssl-config.mozilla.org/#server=haproxy&server-version=2.0.3&config=intermediate
    ssl-default-bind-ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384
    ssl-default-bind-ciphersuites TLS_AES_128_GCM_SHA256:TLS_AES_256_GCM_SHA384:TLS_CHACHA20_POLY1305_SHA256
    ssl-default-bind-options ssl-min-ver TLSv1.2 no-tls-tickets

defaults
    log     global
    mode    http
    option  httplog
    option  dontlognull
    timeout connect 5000
    timeout client  50000
    timeout server  50000
    errorfile 400 /etc/haproxy/errors/400.http
    errorfile 403 /etc/haproxy/errors/403.http
    errorfile 408 /etc/haproxy/errors/408.http
    errorfile 500 /etc/haproxy/errors/500.http
    errorfile 502 /etc/haproxy/errors/502.http
    errorfile 503 /etc/haproxy/errors/503.http
    errorfile 504 /etc/haproxy/errors/504.http

listen k8s-6443
    bind 192.168.56.116:6443
    mode tcp
    server k8s1 10.168.56.211:6443 check inter 3s fall 3 rise 5
    server k8s2 10.168.56.212:6443 check inter 3s fall 3 rise 5
    server k8s3 10.168.56.213:6443 check inter 3s fall 3 rise 5
```
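The `listen k8s-6443` section is one `server` line per master, so it can be generated from the master list. In `check inter 3s fall 3 rise 5`, HAProxy health-checks each apiserver every 3 seconds, marks it down after 3 consecutive failed checks, and marks it up again after 5 consecutive successful ones. The helper below is a hypothetical convenience, not part of HAProxy or kubeasz.

```bash
#!/usr/bin/env bash
# Hypothetical helper: render the apiserver "listen" section.
# Usage: render_apiserver_listen VIP MASTER_IP...
render_apiserver_listen() {
  local vip="$1" i=1 ip
  shift
  printf 'listen k8s-6443\n    bind %s:6443\n    mode tcp\n' "$vip"
  for ip in "$@"; do
    # health-check every 3s; down after 3 failures, up again after 5 successes
    printf '    server k8s%d %s:6443 check inter 3s fall 3 rise 5\n' "$i" "$ip"
    i=$((i + 1))
  done
}
```

Running `render_apiserver_listen 192.168.56.116 10.168.56.211 10.168.56.212 10.168.56.213` reproduces the section above; after editing haproxy.cfg, `haproxy -c -f /etc/haproxy/haproxy.cfg` checks the file's syntax.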

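On ha01 and ha02, allow binding to non-local addresses so that HAProxy can start even when the VIP is currently held by the other node (apply the change with `sysctl -p`):

```bash
vim /etc/sysctl.conf
net.ipv4.ip_nonlocal_bind = 1
```

kubeasz Deployment and Initialization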

Cluster planning guide: https://github.com/easzlab/kubeasz/blob/master/docs/setup/00-planning_and_overall_intro.md

- Deployment node: k8s-ha01

```bash
root@k8s-ha01:~# mkdir -p /app/kubeasz
root@k8s-ha01:~# cd /app/kubeasz/
root@k8s-ha01:/app/kubeasz# apt install python3-pip git sshpass -y
root@k8s-ha01:/app/kubeasz# pip install ansible
root@k8s-ha01:/app/kubeasz# ssh-keygen
root@k8s-ha01:/app/kubeasz# vim k8s_auth.sh
IPS="
10.168.56.111
10.168.56.112
10.168.56.113
10.168.56.114
10.168.56.211
10.168.56.212
10.168.56.213
10.168.56.214
10.168.56.215
10.168.56.216
"
for ip in ${IPS}; do
  sshpass -p 123456 ssh-copy-id -o StrictHostKeyChecking=no ${ip}
  if [ $? -eq 0 ]; then
    echo "ssh key add to ${ip} finished!!"
  else
    echo "ssh key add to ${ip} failed!!"
  fi
done
root@k8s-ha01:/app/kubeasz# sh k8s_auth.sh
root@k8s-ha01:/app/kubeasz# export release=3.1.0
root@k8s-ha01:/app/kubeasz# curl -C- -fLO --retry 3 https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown
root@k8s-ha01:/app/kubeasz# chmod +x ezdown
root@k8s-ha01:/app/kubeasz# ./ezdown -D
```

- Environment preparation

```bash
root@k8s-ha01:~# cd /etc/kubeasz/
root@k8s-ha01:/etc/kubeasz# ls -l
total 108
-rw-rw-r--  1 root root 20304 Apr 26 10:02 ansible.cfg
drwxr-xr-x  3 root root  4096 Sep 21 13:49 bin
drwxrwxr-x  8 root root  4096 Apr 26 11:02 docs
drwxr-xr-x  2 root root  4096 Sep 21 13:53 down
drwxrwxr-x  2 root root  4096 Apr 26 11:02 example
-rwxrwxr-x  1 root root 24629 Apr 26 10:02 ezctl
-rwxrwxr-x  1 root root 15075 Apr 26 10:02 ezdown
drwxrwxr-x 10 root root  4096 Apr 26 11:02 manifests
drwxrwxr-x  2 root root  4096 Apr 26 11:02 pics
drwxrwxr-x  2 root root  4096 Apr 26 11:02 playbooks
-rw-rw-r--  1 root root  5953 Apr 26 10:02 README.md
drwxrwxr-x 22 root root  4096 Apr 26 11:02 roles
drwxrwxr-x  2 root root  4096 Apr 26 11:02 tools
root@k8s-ha01:/etc/kubeasz# ./ezctl --help
Usage: ezctl COMMAND [args]
-------------------------------------------------------------------------------------
Cluster setups:
    list                             to list all of the managed clusters
    checkout    <cluster>            to switch default kubeconfig of the cluster
    new         <cluster>            to start a new k8s deploy with name 'cluster'
    setup       <cluster>  <step>    to setup a cluster, also supporting a step-by-step way
    start       <cluster>            to start all of the k8s services stopped by 'ezctl stop'
    stop        <cluster>            to stop all of the k8s services temporarily
    upgrade     <cluster>            to upgrade the k8s cluster
    destroy     <cluster>            to destroy the k8s cluster
    backup      <cluster>            to backup the cluster state (etcd snapshot)
    restore     <cluster>            to restore the cluster state from backups
    start-aio                        to quickly setup an all-in-one cluster with 'default' settings

Cluster ops:
    add-etcd    <cluster>  <ip>      to add a etcd-node to the etcd cluster
    add-master  <cluster>  <ip>      to add a master node to the k8s cluster
    add-node    <cluster>  <ip>      to add a work node to the k8s cluster
    del-etcd    <cluster>  <ip>      to delete a etcd-node from the etcd cluster
    del-master  <cluster>  <ip>      to delete a master node from the k8s cluster
    del-node    <cluster>  <ip>      to delete a work node from the k8s cluster

Extra operation:
    kcfg-adm    <cluster>  <args>    to manage client kubeconfig of the k8s cluster

Use "ezctl help <command>" for more information about a given command.
root@k8s-ha01:/etc/kubeasz# ./ezctl new k8s-01   # create the cluster
```

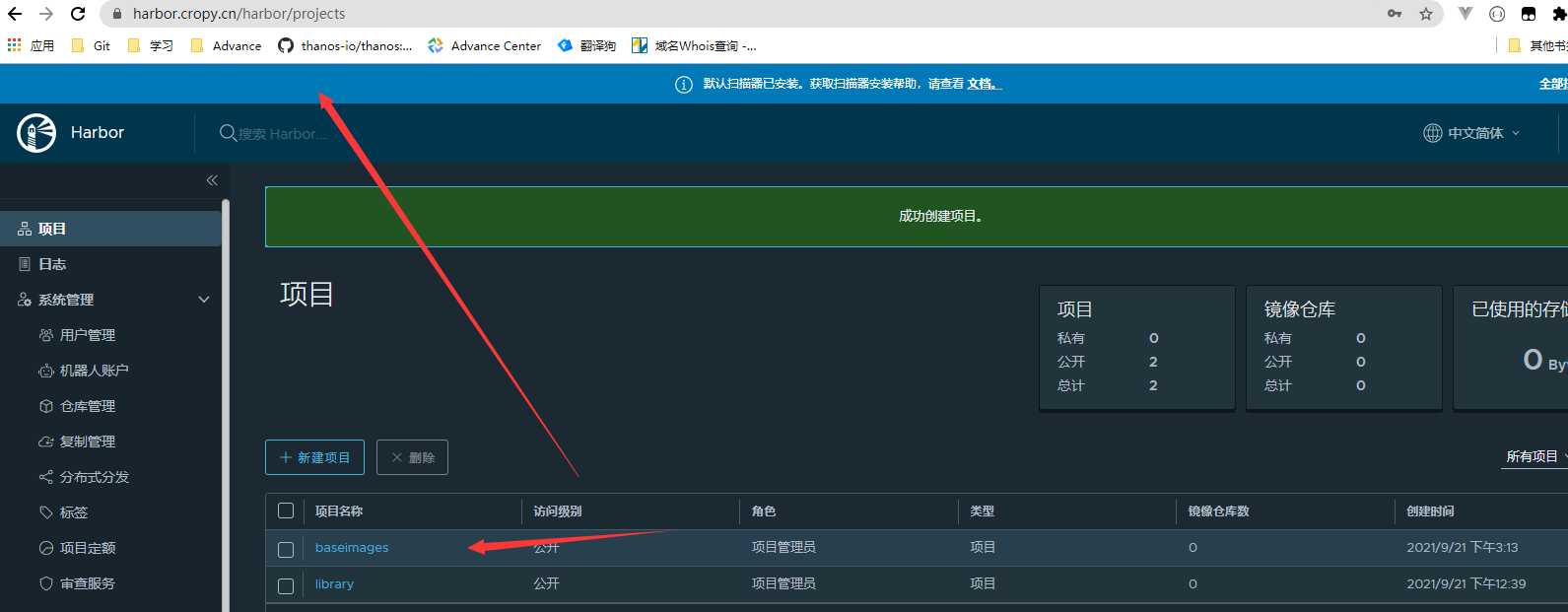

- Base image setup
    - Create a `baseimages` project in Harbor
    - Push the base service images:

```bash
root@k8s-ha01:/app/kubeasz# docker tag easzlab/pause-amd64:3.4.1 harbor.cropy.cn/baseimages/pause-amd64:3.4.1
root@k8s-ha01:/app/kubeasz# docker push harbor.cropy.cn/baseimages/pause-amd64:3.4.1
root@k8s-ha01:/etc/kubeasz# docker tag calico/cni:v3.15.3 harbor.cropy.cn/baseimages/calico-cni:v3.15.3
root@k8s-ha01:/etc/kubeasz# docker push harbor.cropy.cn/baseimages/calico-cni:v3.15.3
root@k8s-ha01:/etc/kubeasz# docker tag calico/pod2daemon-flexvol:v3.15.3 harbor.cropy.cn/baseimages/calico-pod2daemon-flexvol:v3.15.3
root@k8s-ha01:/etc/kubeasz# docker push harbor.cropy.cn/baseimages/calico-pod2daemon-flexvol:v3.15.3
root@k8s-ha01:/etc/kubeasz# docker tag calico/node:v3.15.3 harbor.cropy.cn/baseimages/calico-node:v3.15.3
root@k8s-ha01:/etc/kubeasz# docker push harbor.cropy.cn/baseimages/calico-node:v3.15.3
root@k8s-ha01:/etc/kubeasz# docker tag calico/kube-controllers:v3.15.3 harbor.cropy.cn/baseimages/calico-kube-controllers:v3.15.3
root@k8s-ha01:/etc/kubeasz# docker push harbor.cropy.cn/baseimages/calico-kube-controllers:v3.15.3

# CoreDNS: pulling from k8s.gcr.io may require a proxy
root@k8s-ha01:/etc/kubeasz# docker pull k8s.gcr.io/coredns/coredns:v1.8.3
root@k8s-ha01:~# docker tag k8s.gcr.io/coredns/coredns:v1.8.3 harbor.cropy.cn/baseimages/coredns:v1.8.3
root@k8s-ha01:~# docker push harbor.cropy.cn/baseimages/coredns:v1.8.3

# Dashboard-related images
root@k8s-ha01:~# docker pull kubernetesui/metrics-scraper:v1.0.6
root@k8s-ha01:~# docker tag kubernetesui/metrics-scraper:v1.0.6 harbor.cropy.cn/baseimages/metrics-scraper:v1.0.6
root@k8s-ha01:~# docker push harbor.cropy.cn/baseimages/metrics-scraper:v1.0.6
root@k8s-ha01:~# docker pull kubernetesui/dashboard:v2.3.1
root@k8s-ha01:~# docker tag kubernetesui/dashboard:v2.3.1 harbor.cropy.cn/baseimages/dashboard:v2.3.1
root@k8s-ha01:~# docker push harbor.cropy.cn/baseimages/dashboard:v2.3.1
```
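The tag-and-push pairs above all follow one pattern, so they can be driven from a single table. A sketch, assuming the source images are already present locally (downloaded by `./ezdown -D` or pulled as above) and that `docker login harbor.cropy.cn` has succeeded; `HARBOR_PREFIX`, `DRY_RUN`, and the function names are hypothetical. With `DRY_RUN=1` it only prints the commands it would run.

```bash
#!/usr/bin/env bash
# Sketch: mirror base images into the private Harbor project.
HARBOR_PREFIX="${HARBOR_PREFIX:-harbor.cropy.cn/baseimages}"

# Run a command, or just print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

# mirror_image SOURCE_IMAGE TARGET_NAME:TAG
mirror_image() {
  local src="$1" dst="${HARBOR_PREFIX}/$2"
  run docker tag "$src" "$dst" && run docker push "$dst"
}

# Source image -> name:tag inside the Harbor project (same mapping as the commands above).
mirror_all() {
  local src dst
  while read -r src dst; do
    [ -n "$src" ] || continue
    mirror_image "$src" "$dst" || return 1
  done <<'EOF'
easzlab/pause-amd64:3.4.1 pause-amd64:3.4.1
calico/cni:v3.15.3 calico-cni:v3.15.3
calico/pod2daemon-flexvol:v3.15.3 calico-pod2daemon-flexvol:v3.15.3
calico/node:v3.15.3 calico-node:v3.15.3
calico/kube-controllers:v3.15.3 calico-kube-controllers:v3.15.3
k8s.gcr.io/coredns/coredns:v1.8.3 coredns:v1.8.3
kubernetesui/metrics-scraper:v1.0.6 metrics-scraper:v1.0.6
kubernetesui/dashboard:v2.3.1 dashboard:v2.3.1
EOF
}
```

Run `DRY_RUN=1 mirror_all` first to review the 16 commands, then `mirror_all` to execute them; it stops at the first failing tag or push.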

- Service configuration

```bash
root@k8s-ha01:/etc/kubeasz# vim /etc/kubeasz/clusters/k8s-01/hosts
[etcd]
10.168.56.211
10.168.56.212
10.168.56.213

[kube_master]
10.168.56.211
10.168.56.212

[kube_node]
10.168.56.214
10.168.56.215

[harbor]

[ex_lb]
10.168.56.113 LB_ROLE=backup EX_APISERVER_VIP=192.168.56.116 EX_APISERVER_PORT=8443
10.168.56.114 LB_ROLE=master EX_APISERVER_VIP=192.168.56.116 EX_APISERVER_PORT=8443

[chrony]

[all:vars]
SECURE_PORT="6443"
CONTAINER_RUNTIME="docker"
CLUSTER_NETWORK="calico"
PROXY_MODE="ipvs"
SERVICE_CIDR="10.100.0.0/16"
CLUSTER_CIDR="10.200.0.0/16"
NODE_PORT_RANGE="30000-65000"
CLUSTER_DNS_DOMAIN="cropy.local"
bin_dir="/usr/local/bin"
base_dir="/etc/kubeasz"
cluster_dir="{{ base_dir }}/clusters/k8s-01"
ca_dir="/etc/kubernetes/ssl"

root@k8s-ha01:~# egrep -v "^$|^#" /etc/kubeasz/clusters/k8s-01/config.yml
INSTALL_SOURCE: "online"
OS_HARDEN: false
ntp_servers:
  - "ntp1.aliyun.com"
  - "time1.cloud.tencent.com"
  - "0.cn.pool.ntp.org"
local_network: "0.0.0.0/0"
CA_EXPIRY: "876000h"
CERT_EXPIRY: "438000h"
CLUSTER_NAME: "cluster1"
CONTEXT_NAME: "context-{{ CLUSTER_NAME }}"
ETCD_DATA_DIR: "/var/lib/etcd"
ETCD_WAL_DIR: ""
ENABLE_MIRROR_REGISTRY: false
SANDBOX_IMAGE: "harbor.cropy.cn/baseimages/pause-amd64:3.4.1"
CONTAINERD_STORAGE_DIR: "/var/lib/containerd"
DOCKER_STORAGE_DIR: "/var/lib/docker"
ENABLE_REMOTE_API: false
INSECURE_REG: '["127.0.0.1/8","192.168.56.112"]'
MASTER_CERT_HOSTS:
  - "10.1.1.1"
  - "k8s.test.io"
  #- "www.test.com"
NODE_CIDR_LEN: 24
KUBELET_ROOT_DIR: "/var/lib/kubelet"
MAX_PODS: 300
KUBE_RESERVED_ENABLED: "yes"
SYS_RESERVED_ENABLED: "no"
BALANCE_ALG: "roundrobin"
FLANNEL_BACKEND: "vxlan"
DIRECT_ROUTING: false
flannelVer: "v0.13.0-amd64"
flanneld_image: "easzlab/flannel:{{ flannelVer }}"
flannel_offline: "flannel_{{ flannelVer }}.tar"
CALICO_IPV4POOL_IPIP: "Always"
IP_AUTODETECTION_METHOD: "can-reach={{ groups['kube_master'][0] }}"
CALICO_NETWORKING_BACKEND: "brid"
calico_ver: "v3.15.3"
calico_ver_main: "{{ calico_ver.split('.')[0] }}.{{ calico_ver.split('.')[1] }}"
calico_offline: "calico_{{ calico_ver }}.tar"
ETCD_CLUSTER_SIZE: 1
cilium_ver: "v1.4.1"
cilium_offline: "cilium_{{ cilium_ver }}.tar"
OVN_DB_NODE: "{{ groups['kube_master'][0] }}"
kube_ovn_ver: "v1.5.3"
kube_ovn_offline: "kube_ovn_{{ kube_ovn_ver }}.tar"
OVERLAY_TYPE: "full"
FIREWALL_ENABLE: "true"
kube_router_ver: "v0.3.1"
busybox_ver: "1.28.4"
kuberouter_offline: "kube-router_{{ kube_router_ver }}.tar"
busybox_offline: "busybox_{{ busybox_ver }}.tar"
dns_install: "no"
corednsVer: "1.8.0"
ENABLE_LOCAL_DNS_CACHE: false
dnsNodeCacheVer: "1.17.0"
LOCAL_DNS_CACHE: "169.254.20.10"
metricsserver_install: "no"
metricsVer: "v0.3.6"
dashboard_install: "no"
dashboardVer: "v2.2.0"
dashboardMetricsScraperVer: "v1.0.6"
ingress_install: "no"
ingress_backend: "traefik"
traefik_chart_ver: "9.12.3"
prom_install: "no"
prom_namespace: "monitor"
prom_chart_ver: "12.10.6"
nfs_provisioner_install: "no"
nfs_provisioner_namespace: "kube-system"
nfs_provisioner_ver: "v4.0.1"
nfs_storage_class: "managed-nfs-storage"
nfs_server: "192.168.1.10"
nfs_path: "/data/nfs"
HARBOR_VER: "v2.1.3"
HARBOR_DOMAIN: "harbor.yourdomain.com"
HARBOR_TLS_PORT: 8443
HARBOR_SELF_SIGNED_CERT: true
HARBOR_WITH_NOTARY: false
HARBOR_WITH_TRIVY: false
HARBOR_WITH_CLAIR: false
HARBOR_WITH_CHARTMUSEUM: true
```
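Several of these values must agree with later steps: the CoreDNS Service's `clusterIP` (10.100.0.2 in the CoreDNS manifest below) has to fall inside `SERVICE_CIDR`, and `SERVICE_CIDR` and `CLUSTER_CIDR` must not overlap. A pure-Bash sketch for checking this (IPv4 only; the function names are hypothetical):

```bash
#!/usr/bin/env bash
# Pure-Bash IPv4 helpers (hypothetical names) for sanity-checking the CIDRs above.

# ip2int A.B.C.D -> 32-bit integer
ip2int() {
  local a b c d
  IFS=. read -r a b c d <<<"$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# ip_in_cidr IP NET/LEN: succeed if IP lies inside the CIDR
ip_in_cidr() {
  local net="${2%/*}" len="${2#*/}"
  local mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ($(ip2int "$1") & mask) == ($(ip2int "$net") & mask) ))
}

# cidrs_overlap NET1/LEN1 NET2/LEN2: two ranges overlap exactly when their
# network addresses agree under the shorter of the two prefixes
cidrs_overlap() {
  local l1="${1#*/}" l2="${2#*/}"
  local shorter=$(( l1 < l2 ? l1 : l2 ))
  ip_in_cidr "${1%/*}" "${2%/*}/${shorter}"
}
```

For this cluster, `ip_in_cidr 10.100.0.2 10.100.0.0/16` should succeed and `cidrs_overlap 10.100.0.0/16 10.200.0.0/16` should fail.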

- Installation

```bash
root@k8s-ha01:~# cd /etc/kubeasz/
root@k8s-ha01:/etc/kubeasz# ./ezctl help setup
Usage: ezctl setup <cluster> <step>
available steps:
    01  prepare            to prepare CA/certs & kubeconfig & other system settings
    02  etcd               to setup the etcd cluster
    03  container-runtime  to setup the container runtime(docker or containerd)
    04  kube-master        to setup the master nodes
    05  kube-node          to setup the worker nodes
    06  network            to setup the network plugin
    07  cluster-addon      to setup other useful plugins
    90  all                to run 01~07 all at once
    10  ex-lb              to install external loadbalance for accessing k8s from outside
    11  harbor             to install a new harbor server or to integrate with an existed one

examples: ./ezctl setup test-k8s 01  (or ./ezctl setup test-k8s prepare)
          ./ezctl setup test-k8s 02  (or ./ezctl setup test-k8s etcd)
          ./ezctl setup test-k8s all
          ./ezctl setup test-k8s 04 -t restart_master

root@k8s-ha01:/etc/kubeasz# vim playbooks/01.prepare.yml   # remove the ex_lb and chrony entries from hosts
- hosts:
  - kube_master
  - kube_node
  - etcd
  roles:
  - { role: os-harden, when: "OS_HARDEN|bool" }
  - { role: chrony, when: "groups['chrony']|length > 0" }

root@k8s-ha01:/etc/kubeasz# ./ezctl setup k8s-01 01   # initialize the environment
root@k8s-ha01:/etc/kubeasz# ./ezctl setup k8s-01 02   # install etcd
root@k8s-ha01:/etc/kubeasz# ./ezctl setup k8s-01 03   # install the container runtime (Docker)
root@k8s-ha01:/etc/kubeasz# for i in {1..6}; do ssh 10.168.56.21$i mkdir -p /root/.docker; done   # distribute the Harbor login credentials
root@k8s-ha01:/etc/kubeasz# for i in {1..6}; do scp /root/.docker/config.json 10.168.56.21$i:/root/.docker/config.json; done
root@k8s-ha01:/etc/kubeasz# ./ezctl setup k8s-01 04   # install the master nodes
root@k8s-ha01:/etc/kubeasz# vim roles/kube-node/templates/kube-proxy-config.yaml.j2
...
mode: "{{ PROXY_MODE }}"
ipvs:
  scheduler: wrr
root@k8s-ha01:/etc/kubeasz# ./ezctl setup k8s-01 05   # install the worker nodes
root@k8s-ha01:/etc/kubeasz# vim roles/calico/templates/calico-v3.15.yaml   # point the image addresses at the private registry harbor.cropy.cn
root@k8s-ha01:/etc/kubeasz# ./ezctl setup k8s-01 06   # install the network plugin
```

Installing Add-ons

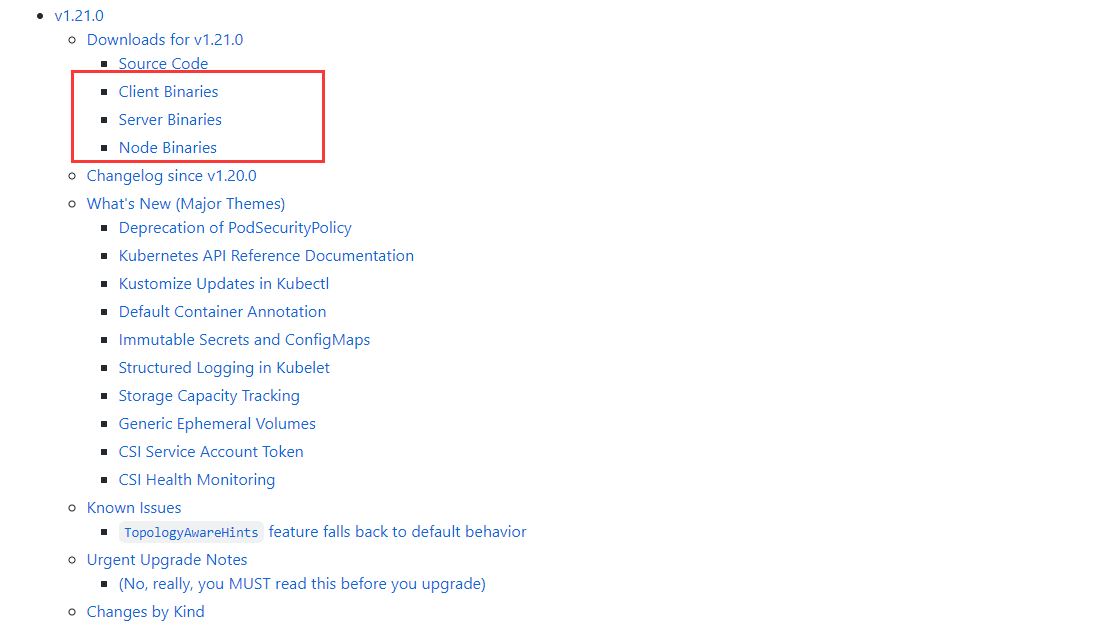

On k8s-master01, download the binaries listed in https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.21.md

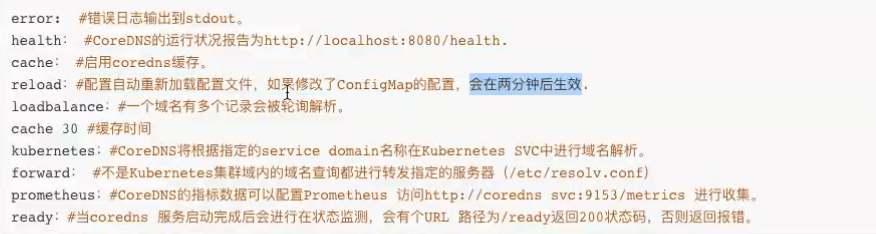

CoreDNS configuration

Prepare the coredns:v1.8.3 image.

Main configuration parameters:

```bash
root@k8s-master01:~# cd /usr/local/src/
root@k8s-master01:/usr/local/src# wget https://storage.googleapis.com/kubernetes-release/release/v1.21.0/kubernetes.tar.gz
root@k8s-master01:/usr/local/src# tar xf kubernetes.tar.gz
root@k8s-master01:~# cd /usr/local/src/kubernetes/cluster/addons/dns/coredns/
root@k8s-master01:/usr/local/src/kubernetes/cluster/addons/dns/coredns# vim coredns.yaml.base
...
data:
  Corefile: |
    .:53 {
        errors
        health {
            lameduck 5s
        }
        bind 0.0.0.0
        ready
        kubernetes cropy.local in-addr.arpa ip6.arpa {
            fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        forward . /etc/resolv.conf {
            max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }
...
      containers:
      - name: coredns
        image: harbor.cropy.cn/baseimages/coredns:v1.8.3
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 256Mi
...
spec:
  type: NodePort
  selector:
    k8s-app: kube-dns
  clusterIP: 10.100.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
  - name: metrics
    port: 9153
    protocol: TCP
    targetPort: 9153
    nodePort: 30009
root@k8s-master01:/usr/local/src/kubernetes/cluster/addons/dns/coredns# cp coredns.yaml.base /usr/local/src/coredns.yml
root@k8s-master01:/usr/local/src/kubernetes/cluster/addons/dns/coredns# cd /usr/local/src/
root@k8s-master01:/usr/local/src# kubectl apply -f coredns.yml
root@k8s-master01:/usr/local/src# kubectl exec -it net-test1 -- sh   # check DNS resolution from a test pod
/ # ping qq.com
PING qq.com (58.250.137.36): 56 data bytes
64 bytes from 58.250.137.36: seq=0 ttl=127 time=42.997 ms
64 bytes from 58.250.137.36: seq=1 ttl=127 time=43.912 ms
64 bytes from 58.250.137.36: seq=2 ttl=127 time=42.988 ms
```

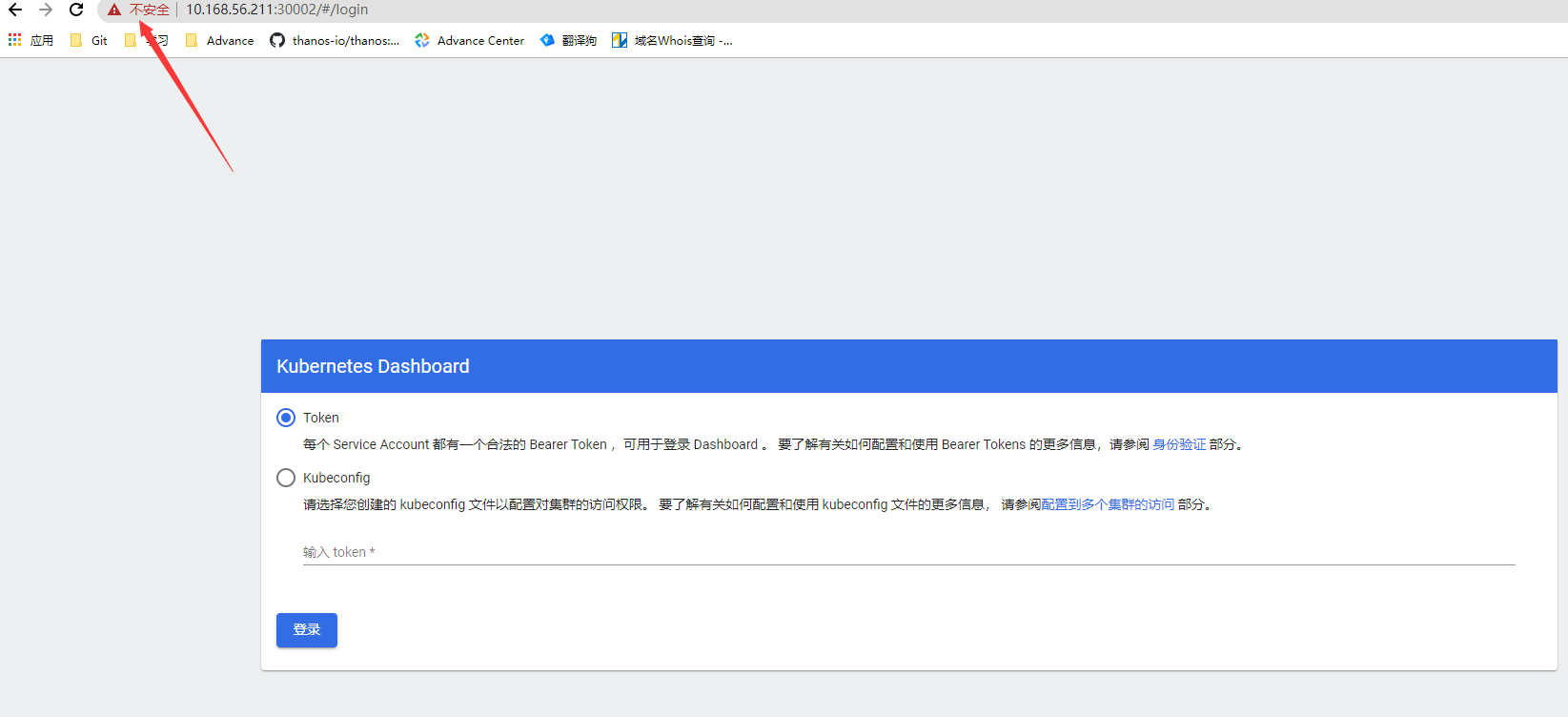
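With `CLUSTER_DNS_DOMAIN="cropy.local"`, the `kubernetes cropy.local ...` block in the Corefile answers for Service names of the form `<service>.<namespace>.svc.cropy.local`, and Pods using the default `ClusterFirst` DNS policy get a search list that lets short names resolve. The helpers below only build those strings (hypothetical names); the commented commands suggest how to check them from a test pod.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for cluster DNS names; the domain default follows CLUSTER_DNS_DOMAIN.

# svc_fqdn SERVICE [NAMESPACE] [DOMAIN]
svc_fqdn() {
  local svc="$1" ns="${2:-default}" domain="${3:-cropy.local}"
  printf '%s.%s.svc.%s\n' "$svc" "$ns" "$domain"
}

# pod_search_domains NAMESPACE [DOMAIN]: the search domains a ClusterFirst pod's
# /etc/resolv.conf starts with (host search domains may follow)
pod_search_domains() {
  local ns="$1" domain="${2:-cropy.local}"
  printf '%s.svc.%s svc.%s %s\n' "$ns" "$domain" "$domain" "$domain"
}

# From a test pod (kubectl exec -it net-test1 -- sh):
#   nslookup kubernetes.default.svc.cropy.local   # should return 10.100.0.1, the first SERVICE_CIDR address
#   cat /etc/resolv.conf                           # nameserver should be the kube-dns Service IP 10.100.0.2
```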

Dashboard installation

- GitHub releases: https://github.com/kubernetes/dashboard/releases

- Prepare the YAML

```bash
root@k8s-master01:/usr/local/src# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.3.1/aio/deploy/recommended.yaml
root@k8s-master01:/usr/local/src# mv recommended.yaml dashboard-v2.3.1.yaml
root@k8s-master01:/usr/local/src# vim dashboard-v2.3.1.yaml
...
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30002
  selector:
    k8s-app: kubernetes-dashboard
...
    spec:
      containers:
        - name: kubernetes-dashboard
          image: harbor.cropy.cn/baseimages/dashboard:v2.3.1
...
    spec:
      containers:
        - name: dashboard-metrics-scraper
          image: harbor.cropy.cn/baseimages/metrics-scraper:v1.0.6
root@k8s-master01:/usr/local/src# kubectl apply -f dashboard-v2.3.1.yaml
root@k8s-master01:/usr/local/src# kubectl get pod -A   # check the pods
NAMESPACE              NAME                                         READY   STATUS    RESTARTS   AGE
default                net-test1                                    1/1     Running   0          115m
default                net-test2                                    1/1     Running   0          114m
default                net-test3                                    1/1     Running   0          114m
kube-system            calico-kube-controllers-5748f97cd5-fl9f8     1/1     Running   0          151m
kube-system            calico-node-9t7gg                            1/1     Running   0          82m
kube-system            calico-node-cv5vw                            1/1     Running   0          79m
kube-system            calico-node-hlkcv                            1/1     Running   0          81m
kube-system            calico-node-k2blj                            1/1     Running   0          80m
kube-system            calico-node-mc8zv                            1/1     Running   0          79m
kube-system            calico-node-s58d4                            1/1     Running   0          81m
kube-system            coredns-64b457b8dd-2c8lt                     1/1     Running   0          19m
kubernetes-dashboard   dashboard-metrics-scraper-5d96d7f48b-7wsnc   1/1     Running   0          19s
kubernetes-dashboard   kubernetes-dashboard-68795d9c8-fx6dt         1/1     Running   0          19s
```
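Scanning the `kubectl get pod -A` listing by eye gets tedious as the cluster grows. A small awk-based sketch (hypothetical function name) that prints every Pod whose READY count is incomplete or whose STATUS is not `Running`; note that it would also flag Pods in `Completed` state, such as finished Jobs.

```bash
#!/usr/bin/env bash
# Reads `kubectl get pod -A` output (with header) on stdin and prints
# namespace/name for each pod that is not fully ready or not Running.
not_ready_pods() {
  awk 'NR > 1 {
         split($3, r, "/")
         if (r[1] != r[2] || $4 != "Running") print $1 "/" $2
       }'
}
```

Usage: `kubectl get pod -A | not_ready_pods`; empty output means every Pod in the listing above is up.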

- Permission (RBAC) configuration

```bash
root@k8s-master01:/usr/local/src# vim admin-user.yml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard

root@k8s-master01:/usr/local/src# kubectl apply -f admin-user.yml
root@k8s-master01:/usr/local/src# kubectl get secret -n kubernetes-dashboard | grep admin
root@k8s-master01:/usr/local/src# kubectl describe secret admin-user-token-x7md5 -n kubernetes-dashboard   # get the token for logging in to the dashboard
Name:         admin-user-token-x7md5
Namespace:    kubernetes-dashboard
Labels:       <none>
Annotations:  kubernetes.io/service-account.name: admin-user
              kubernetes.io/service-account.uid: 34bdf917-96cd-4534-8a7e-0ecdc20d79e4

Type:  kubernetes.io/service-account-token

Data
====
ca.crt:     1350 bytes
namespace:  20 bytes
token:      eyJhbGciOiJSUzI1NiIsImtpZCI6ImM4SGktc21uWmZBcEVrSmJaVk5Gdl9GTVEwM1ZCSFJ5MHhkR295NHozdGMifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXg3bWQ1Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiIzNGJkZjkxNy05NmNkLTQ1MzQtOGE3ZS0wZWNkYzIwZDc5ZTQiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.LU7tJySkiGIt6yvC_XhjrENpMtUEXj4ujP-_RZzfjBTd8AiqPn0RnQvD8cCwOVbSEqRjioMA68BfCXHj7YY44v-dr2VdoIrE-sPcVPs_4qnFoJ1bbwbldA7cuZG3zjEt5PAqJmWN-BP-SI0goV-RGuXp95VF1UhXifVXFRV9_XuHOzNcPififk3rVSWpaL2s66ZBabmXA0VqN3AoOgyykPs_oNPN0ZZ92U6CyfIt7bAllEDqg5I4XLmpjA_N3NDB5RpkO3JiM-dreDPSRZjXWDPmggdZ8X6ZPpqARYY0I5CgWg2P96HnmLQjsjmrJ-f0W1i6xNnFmrH7Fq3OhJymyg
```
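The token shown above is a JWT. Its payload is only base64url-encoded, not encrypted, so decoding it is a quick way to confirm which ServiceAccount a token belongs to before pasting it into the dashboard login page. The sketch below does not verify the signature, and the function name is hypothetical.

```bash
#!/usr/bin/env bash
# jwt_payload TOKEN: print the decoded JSON payload (second dot-separated segment).
jwt_payload() {
  local p
  # base64url -> base64: '_' becomes '/', '-' becomes '+'
  p=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # restore the '=' padding stripped by base64url
  while [ $(( ${#p} % 4 )) -ne 0 ]; do p="${p}="; done
  printf '%s' "$p" | base64 -d
}
```

Applied to the token above, the printed JSON should contain a `sub` claim of `system:serviceaccount:kubernetes-dashboard:admin-user`.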

- Test access: the dashboard is reachable over HTTPS on port 30002 of any cluster node

kubeasz Cluster Scale-out

Add a master

```bash
root@k8s-ha01:~# cd /etc/kubeasz/
root@k8s-ha01:/etc/kubeasz# ./ezctl add-master k8s-01 10.168.56.213
```

Add a node

```bash
root@k8s-ha01:~# cd /etc/kubeasz/
root@k8s-ha01:/etc/kubeasz# ./ezctl add-node k8s-01 10.168.56.216
```

Environment Validation

etcd validation

Run on k8s-master01 once step 02 has finished (etcd shares this node; for the other members, see the cluster plan):

```bash
root@k8s-master01:~# export NODE_IPS="10.168.56.211 10.168.56.212 10.168.56.213"
root@k8s-master01:~# for ip in ${NODE_IPS}; do ETCDCTL_API=3 /usr/local/bin/etcdctl --endpoints=https://${ip}:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem endpoint health; done
https://10.168.56.211:2379 is healthy: successfully committed proposal: took = 12.402051ms
https://10.168.56.212:2379 is healthy: successfully committed proposal: took = 12.167734ms
https://10.168.56.213:2379 is healthy: successfully committed proposal: took = 12.928678ms
```

Kubernetes Cluster Node Validation

1. Node validation

```bash
root@k8s-master01:~# kubectl get node
NAME            STATUS                     ROLES    AGE   VERSION
10.168.56.211   Ready,SchedulingDisabled   master   38m   v1.21.0
10.168.56.212   Ready,SchedulingDisabled   master   38m   v1.21.0
10.168.56.214   Ready                      node     30m   v1.21.0
10.168.56.215   Ready                      node     30m   v1.21.0
```

2. Calico validation

```bash
root@k8s-master01:~# calicoctl node status
Calico process is running.

IPv4 BGP status
+---------------+-------------------+-------+----------+-------------+
| PEER ADDRESS  |     PEER TYPE     | STATE |  SINCE   |    INFO     |
+---------------+-------------------+-------+----------+-------------+
| 10.168.56.212 | node-to-node mesh | up    | 08:37:37 | Established |
| 10.168.56.214 | node-to-node mesh | up    | 08:37:37 | Established |
| 10.168.56.215 | node-to-node mesh | up    | 09:29:26 | Established |
+---------------+-------------------+-------+----------+-------------+
```

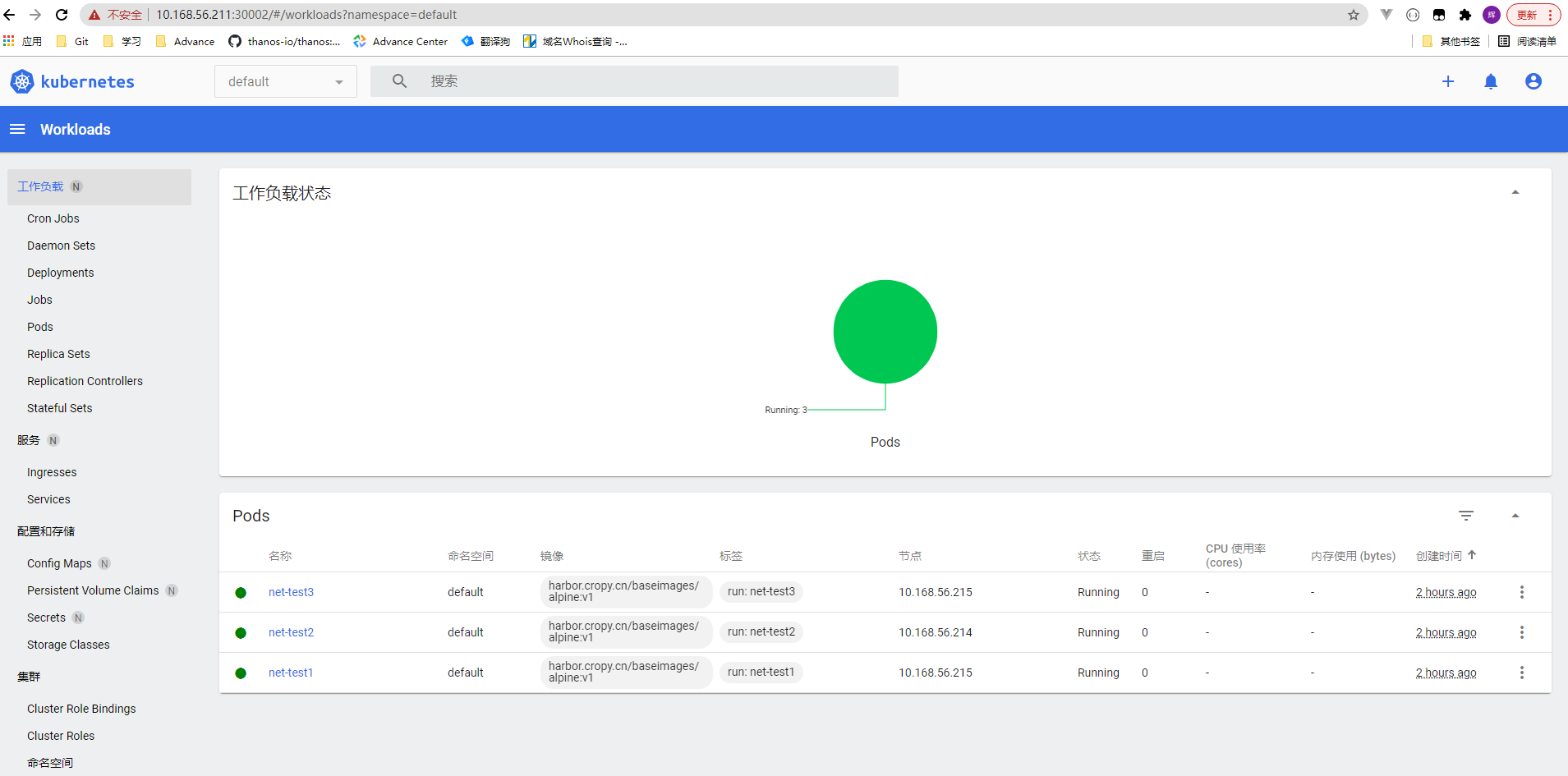

3. Network validation

```bash
root@k8s-master01:~# kubectl run net-test1 --image harbor.cropy.cn/baseimages/alpine:v1 sleep 300000   # start test pods
root@k8s-master01:~# kubectl run net-test2 --image harbor.cropy.cn/baseimages/alpine:v1 sleep 300000
root@k8s-master01:~# kubectl run net-test3 --image harbor.cropy.cn/baseimages/alpine:v1 sleep 300000
root@k8s-master01:~# kubectl get pod -A -o wide
NAMESPACE   NAME        READY   STATUS    RESTARTS   AGE   IP              NODE            NOMINATED NODE   READINESS GATES
default     net-test1   1/1     Running   0          23m   10.200.58.193   10.168.56.215   <none>           <none>
default     net-test2   1/1     Running   0          22m   10.200.85.193   10.168.56.214   <none>           <none>
default     net-test3   1/1     Running   0          22m   10.200.0.2      10.168.56.215   <none>           <none>
root@k8s-master01:~# kubectl exec -it net-test1 -- sh
/ # ping 10.200.85.193
PING 10.200.85.193 (10.200.85.193): 56 data bytes
64 bytes from 10.200.85.193: seq=0 ttl=62 time=1.053 ms
64 bytes from 10.200.85.193: seq=1 ttl=62 time=1.164 ms
/ # ping 114.114.114.114
PING 114.114.114.114 (114.114.114.114): 56 data bytes
64 bytes from 114.114.114.114: seq=0 ttl=127 time=16.780 ms
64 bytes from 114.114.114.114: seq=1 ttl=127 time=15.098 ms
/ # ping 192.168.56.111
PING 192.168.56.111 (192.168.56.111): 56 data bytes
64 bytes from 192.168.56.111: seq=0 ttl=63 time=0.765 ms
64 bytes from 192.168.56.111: seq=1 ttl=63 time=0.911 ms
/ # ping qq.com
PING qq.com (58.250.137.36): 56 data bytes
64 bytes from 58.250.137.36: seq=0 ttl=127 time=42.997 ms
64 bytes from 58.250.137.36: seq=1 ttl=127 time=43.912 ms
```

- Dashboard

- Status of all nodes after scaling out:

```bash
root@k8s-master01:~# kubectl get node
NAME            STATUS                     ROLES    AGE     VERSION
10.168.56.211   Ready,SchedulingDisabled   master   3h14m   v1.21.0
10.168.56.212   Ready,SchedulingDisabled   master   3h14m   v1.21.0
10.168.56.213   Ready,SchedulingDisabled   master   95m     v1.21.0
10.168.56.214   Ready                      node     3h7m    v1.21.0
10.168.56.215   Ready                      node     3h7m    v1.21.0
10.168.56.216   Ready                      node     90m     v1.21.0
```
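For a scripted version of this check, for example after each `ezctl add-node`, the `kubectl get node` output can be summarized with awk. A sketch (hypothetical function name) that counts nodes whose STATUS starts with `Ready`, so `Ready,SchedulingDisabled` masters count as ready and `NotReady` nodes do not:

```bash
#!/usr/bin/env bash
# Reads `kubectl get node` output (with header) on stdin; prints "ready=N total=M".
node_summary() {
  awk 'NR > 1 { total++; split($2, s, ","); if (s[1] == "Ready") ready++ }
       END { printf "ready=%d total=%d\n", ready, total }'
}
```

Usage: `kubectl get node | node_summary` should print `ready=6 total=6` for the listing above.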