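## Routine k8s cluster maintenance

### Adding nodes to the cluster

- Adding nodes with kubeasz:

- Passwordless SSH authentication must be set up from the kubeasz node to the node being added.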

- The procedure is as follows:

```bash
root@k8s-ha01:~# cd /etc/kubeasz/
root@k8s-ha01:/etc/kubeasz# ./ezctl --help
Usage: ezctl COMMAND [args]

Cluster setups:
    list    to list all of the managed clusters
    checkout

Cluster ops:
    add-etcd

Extra operation:
    kcfg-adm

Use "ezctl help
```

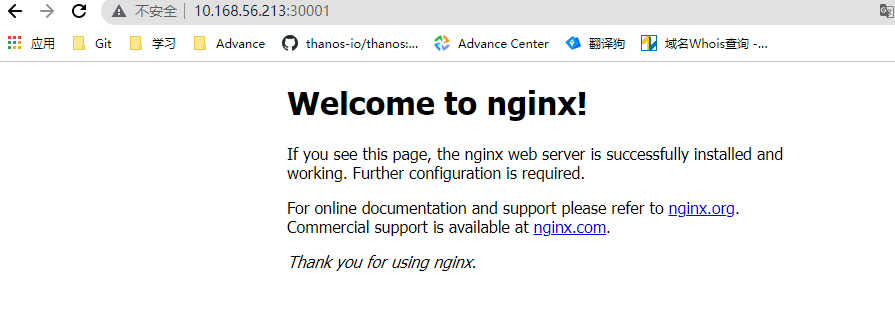

- Add a master node:

```bash
root@k8s-ha01:/etc/kubeasz# ./ezctl add-master k8s-01 10.168.56.213
```

- Each node runs a local nginx instance (kube-lb) that listens on 127.0.0.1:6443 and forwards requests to port 6443 of the kube-apiserver on the master nodes.

- Reference configuration (nginx layer-4 proxy):

```bash
root@k8s-master01:~# cat /etc/kube-lb/conf/kube-lb.conf
user root;
worker_processes 1;

error_log /etc/kube-lb/logs/error.log warn;

events {
    worker_connections 3000;
}

stream {
    upstream backend {
        server 10.168.56.213:6443 max_fails=2 fail_timeout=3s;
        server 10.168.56.211:6443 max_fails=2 fail_timeout=3s;
        server 10.168.56.212:6443 max_fails=2 fail_timeout=3s;
    }

    server {
        listen 127.0.0.1:6443;
        proxy_connect_timeout 1s;
        proxy_pass backend;
    }
}
```
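The passwordless SSH prerequisite mentioned above can be prepared with a small loop. This is a minimal sketch, assuming password login as root is still allowed on the target nodes and that `ssh-copy-id` is installed; the function name `ssh_copy_cmds` is hypothetical. It only prints the commands so they can be reviewed first.

```bash
#!/usr/bin/env bash
# Print (do not run) the commands that set up passwordless SSH from the
# kubeasz node to every node given as an argument.
ssh_copy_cmds() {
  # Generate a key pair only if none exists yet
  echo "[ -f ~/.ssh/id_rsa ] || ssh-keygen -t rsa -N '' -f ~/.ssh/id_rsa"
  local ip
  for ip in "$@"; do
    echo "ssh-copy-id -i ~/.ssh/id_rsa.pub root@${ip}"
  done
}

ssh_copy_cmds 10.168.56.213
```

After reviewing the output, it can be piped to `bash` to execute it.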

<a name="rkRex"></a>

### Upgrading software versions on k8s cluster nodes

Official download site for the binaries: [https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.21.md](https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.21.md)

> kubernetes source: [https://dl.k8s.io/v1.21.5/kubernetes.tar.gz](https://dl.k8s.io/v1.21.5/kubernetes.tar.gz)

> kubernetes-client: [https://dl.k8s.io/v1.21.5/kubernetes-client-linux-amd64.tar.gz](https://dl.k8s.io/v1.21.5/kubernetes-client-linux-amd64.tar.gz)

> kubernetes-server: [https://dl.k8s.io/v1.21.5/kubernetes-server-linux-amd64.tar.gz](https://dl.k8s.io/v1.21.5/kubernetes-server-linux-amd64.tar.gz)

> kubernetes-node: [https://dl.k8s.io/v1.21.5/kubernetes-node-linux-amd64.tar.gz](https://dl.k8s.io/v1.21.5/kubernetes-node-linux-amd64.tar.gz)

Common upgrade approaches:

1. Mirror upgrade: build a new cluster with the same configuration as the old one and, once it passes testing, migrate the old cluster's services to the new cluster.
1. Rolling upgrade: upgrade the nodes of the existing cluster in place.
    1. Upgrade the masters first. Master upgrade steps:
        - Remove the master that is about to be upgraded from the nginx (kube-lb) configuration on the nodes
        - Upgrade the master node
        - Restore the configuration
    1. Node upgrade steps:
        - Upgrade kubelet and kube-proxy on the nodes one node at a time
        - Restart the kubelet and kube-proxy services

<a name="ddHtQ"></a>

#### Upgrading master nodes

> Note: this is a patch-version upgrade, from 1.21.0 to 1.21.5.

1. Comment out the master being upgraded in the kube-lb configuration on every cluster node. Run this on the kubeasz node, which has passwordless login to the k8s nodes.

```bash
root@k8s-ha01:~# cat ip.txt
10.168.56.211
10.168.56.212
10.168.56.213
10.168.56.214
10.168.56.215
10.168.56.216
root@k8s-ha01:~# vim kube-lb.conf    # comment out master 211 first
user root;
worker_processes 1;
error_log /etc/kube-lb/logs/error.log warn;
events {
    worker_connections 3000;
}
stream {
    upstream backend {
        server 10.168.56.213:6443 max_fails=2 fail_timeout=3s;
        #server 10.168.56.211:6443 max_fails=2 fail_timeout=3s;
        server 10.168.56.212:6443 max_fails=2 fail_timeout=3s;
    }
    server {
        listen 127.0.0.1:6443;
        proxy_connect_timeout 1s;
        proxy_pass backend;
    }
}
root@k8s-ha01:~# for i in $(cat ip.txt); do scp kube-lb.conf $i:/etc/kube-lb/conf/; done    # replace the config file
root@k8s-ha01:~# for i in $(cat ip.txt); do ssh $i "systemctl restart kube-lb.service"; done    # restart kube-lb
```

1. Replace kube-apiserver, kube-controller-manager, kube-proxy, kube-scheduler, kubelet, and kubectl (the client tool) on the master node (10.168.56.211).

```bash
root@k8s-ha01:~# mkdir update_k8s && cd update_k8s
root@k8s-ha01:~/update_k8s# wget https://dl.k8s.io/v1.21.5/kubernetes-server-linux-amd64.tar.gz
root@k8s-ha01:~/update_k8s# tar xf kubernetes-server-linux-amd64.tar.gz
root@k8s-ha01:~/update_k8s# cd kubernetes/server/bin/
# stop the services on the master node
root@k8s-ha01:~/update_k8s/kubernetes/server/bin# ssh 10.168.56.211 "systemctl stop kube-apiserver.service kube-scheduler.service kube-controller-manager.service kube-proxy.service kubelet.service"
root@k8s-ha01:~/update_k8s/kubernetes/server/bin# scp kube-apiserver kubelet kube-proxy kube-controller-manager kube-scheduler kubectl 10.168.56.211:/usr/local/bin/
```

1. Start the services again. Run this on the master node (10.168.56.211).

```bash
root@k8s-master01:~# cd /usr/local/bin
root@k8s-master01:/usr/local/bin# kube-apiserver --version
root@k8s-master01:/usr/local/bin# systemctl start kube-apiserver.service kube-scheduler.service kube-controller-manager.service kube-proxy.service kubelet.service
```

1. Check the status.

```bash
root@k8s-master01:~# kubectl get node
NAME            STATUS   ROLES    AGE   VERSION
10.168.56.211   Ready    master   10d   v1.21.5    # master 1 upgraded successfully
10.168.56.212   Ready    master   10d   v1.21.0
10.168.56.213   Ready    master   10d   v1.21.0
10.168.56.214   Ready    node     10d   v1.21.0
10.168.56.215   Ready    node     10d   v1.21.0
10.168.56.216   Ready    node     10d   v1.21.0
```

1. Upgrade the other master nodes in turn.

- Repeat the four steps above for each remaining master, commenting out that master's 6443 upstream line in step 1 each time.

- When all masters are updated, uncomment every commented-out server line in kube-lb.conf and reload the kube-lb service.

```bash
root@k8s-master01:~# kubectl get node    # all masters updated
NAME            STATUS   ROLES    AGE   VERSION
10.168.56.211   Ready    master   10d   v1.21.5
10.168.56.212   Ready    master   10d   v1.21.5
10.168.56.213   Ready    master   10d   v1.21.5
10.168.56.214   Ready    node     10d   v1.21.0
10.168.56.215   Ready    node     10d   v1.21.0
10.168.56.216   Ready    node     10d   v1.21.0
```
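The "comment out, upgrade, restore" cycle for the kube-lb upstream list can be scripted instead of edited by hand in vim. The following is a minimal sketch, assuming the upstream lines have the form `server <ip>:6443 ...;` as in the configuration above; the function names `comment_out_master` and `uncomment_masters` are hypothetical.

```bash
#!/usr/bin/env bash
# Print the given kube-lb.conf with the upstream line of one master commented out.
comment_out_master() {   # usage: comment_out_master <conf-file> <master-ip>
  local conf="$1" ip="$2"
  local pat="${ip//./\\.}"   # escape the dots so they match literally
  sed -E 's/^([[:space:]]*)(server '"${pat}"':6443 .*)$/\1#\2/' "$conf"
}

# Print the given kube-lb.conf with every commented-out upstream line restored.
uncomment_masters() {    # usage: uncomment_masters <conf-file>
  sed -E 's/^([[:space:]]*)#(server [0-9.]+:6443 .*)$/\1\2/' "$1"
}

# Example: comment_out_master kube-lb.conf 10.168.56.212 > kube-lb.conf.new
```

Both functions write to standard output and leave the input file untouched, so the result can be inspected before it is distributed with the `scp` loop shown earlier.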

#### Upgrading worker nodes

- Software to upgrade
    - kubelet
    - kube-proxy
    - kubectl (client)
- Upgrade steps
    - Stop the kubelet and kube-proxy services on the node
    - Replace the kubelet and kube-proxy binaries on the node
    - Note: upgrade the nodes one at a time (rolling upgrade)

1. Stop the kubelet and kube-proxy services on the node.

```bash
root@k8s-node01:~# systemctl stop kubelet.service kube-proxy.service
```

1. Replace kubelet, kube-proxy, and kubectl (optional). Run this on the kubeasz node.

```bash
root@k8s-ha01:~/update_k8s/kubernetes/server/bin# scp kubectl kubelet kube-proxy 10.168.56.214:/usr/local/bin/
```

1. Start the kubelet and kube-proxy services on the node.

```bash
root@k8s-node01:~# systemctl start kubelet.service kube-proxy.service
```

Apply the same three steps to the remaining worker nodes.

- Check the result from a master node (all nodes are now at 1.21.5):

```bash
root@k8s-master01:~# kubectl get node
NAME            STATUS   ROLES    AGE   VERSION
10.168.56.211   Ready    master   10d   v1.21.5
10.168.56.212   Ready    master   10d   v1.21.5
10.168.56.213   Ready    master   10d   v1.21.5
10.168.56.214   Ready    node     10d   v1.21.5
10.168.56.215   Ready    node     10d   v1.21.5
10.168.56.216   Ready    node     10d   v1.21.5
```

### etcd

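In this cluster etcd is co-located with the master nodes; the member IPs are 10.168.56.211, 10.168.56.212, and 10.168.56.213.

In production, when the cluster runs a large number of pods, etcd should be placed on SSD disks and given generous memory; the disk capacity itself does not need to be large.

- Official site: etcd.io

- GitHub: https://github.com/etcd-io/website

Features

Command-line client: etcdctl

- API version: 3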
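The etcdctl commands in this section repeat the same API version and TLS flags. A small wrapper keeps them in one place. This is a sketch, not part of the original procedure: the function name `etcdctl_tls` and the `ETCDCTL_BIN` and `SSL_DIR` variables are hypothetical, while the certificate paths are the ones used in the commands below.

```bash
#!/usr/bin/env bash
# Wrapper that adds the API version and the TLS flags for one endpoint.
# ETCDCTL_BIN can be overridden (for example with `echo`) to preview the call.
ETCDCTL_BIN="${ETCDCTL_BIN:-/usr/local/bin/etcdctl}"
SSL_DIR=/etc/kubernetes/ssl

etcdctl_tls() {   # usage: etcdctl_tls <member-ip> <etcdctl arguments...>
  local endpoint="$1"; shift
  ETCDCTL_API=3 "$ETCDCTL_BIN" \
    --endpoints="https://${endpoint}:2379" \
    --cacert="${SSL_DIR}/ca.pem" \
    --cert="${SSL_DIR}/etcd.pem" \
    --key="${SSL_DIR}/etcd-key.pem" \
    "$@"
}

# Example: etcdctl_tls 10.168.56.211 endpoint health
```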

- Cluster health check:

```bash
root@k8s-master01:~# export NODE_IPS="10.168.56.211 10.168.56.212 10.168.56.213"
root@k8s-master01:~# for ip in ${NODE_IPS}; do ETCDCTL_API=3 /usr/local/bin/etcdctl --endpoints=https://${ip}:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem endpoint health; done
https://10.168.56.211:2379 is healthy: successfully committed proposal: took = 22.692654ms
https://10.168.56.212:2379 is healthy: successfully committed proposal: took = 18.28155ms
https://10.168.56.213:2379 is healthy: successfully committed proposal: took = 10.484608ms
```

- Show cluster member information:

```bash
root@k8s-master01:~# ETCDCTL_API=3 /usr/local/bin/etcdctl --write-out=table member list --endpoints=https://10.168.56.211:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem
+------------------+---------+--------------------+----------------------------+----------------------------+------------+
|        ID        | STATUS  |        NAME        |         PEER ADDRS         |        CLIENT ADDRS        | IS LEARNER |
+------------------+---------+--------------------+----------------------------+----------------------------+------------+
| 4bd00448b804d970 | started | etcd-10.168.56.212 | https://10.168.56.212:2380 | https://10.168.56.212:2379 |      false |
| d63247ce9e9a27d1 | started | etcd-10.168.56.211 | https://10.168.56.211:2380 | https://10.168.56.211:2379 |      false |
| d708eab99ef0ddbf | started | etcd-10.168.56.213 | https://10.168.56.213:2380 | https://10.168.56.213:2379 |      false |
+------------------+---------+--------------------+----------------------------+----------------------------+------------+
```

- Show the status of each endpoint:

```bash
root@k8s-master01:~# export NODE_IPS="10.168.56.211 10.168.56.212 10.168.56.213"
root@k8s-master01:~# for ip in ${NODE_IPS}; do ETCDCTL_API=3 /usr/local/bin/etcdctl --write-out=table endpoint status --endpoints=https://${ip}:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem; done
+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
|          ENDPOINT          |        ID        | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |
+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
| https://10.168.56.211:2379 | d63247ce9e9a27d1 |  3.4.13 |  3.7 MB |     false |      false |        16 |     211690 |             211690 |        |
+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
|          ENDPOINT          |        ID        | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |
+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
| https://10.168.56.212:2379 | 4bd00448b804d970 |  3.4.13 |  3.7 MB |     false |      false |        16 |     211690 |             211690 |        |
+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
|          ENDPOINT          |        ID        | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |
+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
| https://10.168.56.213:2379 | d708eab99ef0ddbf |  3.4.13 |  3.8 MB |      true |      false |        16 |     211691 |             211691 |        |
+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
```

- List all keys:

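```bash
root@k8s-master01:~# /usr/local/bin/etcdctl get / --prefix --keys-only
```

- Query a single key:

```bash
root@k8s-master01:~# /usr/local/bin/etcdctl get /registry/services/specs/kube-system/kube-dns
```

- Query pod information:

```bash
root@k8s-master01:~# /usr/local/bin/etcdctl get / --prefix --keys-only | grep pod
```

- Create, read, update, and delete data:

```bash
root@k8s-master01:~# etcdctl put /cropy/bbs cn     # write; key and value are separated by a space
root@k8s-master01:~# etcdctl get /cropy/bbs        # read
root@k8s-master01:~# etcdctl put /cropy/bbs com    # update
root@k8s-master01:~# etcdctl del /cropy/bbs        # delete
```

#### Watch mechanism

A watch continuously monitors data and notifies the client whenever the data changes. The etcd v3 watch mechanism supports watching a single fixed key as well as a range of keys.

- v3 exposes its RPC interface over gRPC

- Pure key-value store

```bash
root@k8s-master01:~# etcdctl watch /cropy/bbs      # terminal 1: wait for changes
root@k8s-master01:~# etcdctl put /cropy/bbs com    # terminal 2: trigger a change
```

#### etcd data backup and restore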

WAL (write-ahead log): before data is written, a log entry is written first. The log records the complete history of data changes and is used for data recovery.

- Back up data:

```bash
root@k8s-master01:~# ETCDCTL_API=3 /usr/local/bin/etcdctl snapshot save etcd-20211003.db
```

- Restore data. The snapshot is restored into a new data directory, and etcd must then be pointed at that directory:

```bash
root@k8s-master01:~# ETCDCTL_API=3 /usr/local/bin/etcdctl snapshot restore etcd-20211003.db --data-dir=/tmp/etcd
```

- For regular backups, run a backup script from a scheduled task (cron). The script is as follows:

```bash
root@k8s-master01:~# vim save_etcddata.sh
#!/bin/bash
source /etc/profile
DATE=$(date +%Y-%m-%d_%H-%M-%S)
ETCDCTL_API=3 /usr/local/bin/etcdctl snapshot save /data/etcd_backup_dir/etcd-snapshot-${DATE}.db
```

- Back up and restore with the kubeasz tool:

```bash
root@k8s-ha01:~# cd /etc/kubeasz/
root@k8s-ha01:/etc/kubeasz# ./ezctl backup k8s-01               # back up the cluster data
root@k8s-ha01:/etc/kubeasz# ll clusters/k8s-01/backup/          # list the backups
total 7272
drwxr-xr-x 2 root root    4096 Oct  3 10:51 ./
drwxr-xr-x 5 root root    4096 Sep 21 17:47 ../
-rw------- 1 root root 3715104 Oct  3 10:51 snapshot_202110031051.db
-rw------- 1 root root 3715104 Oct  3 10:51 snapshot.db
root@k8s-ha01:/etc/kubeasz# ./ezctl restore k8s-01              # restore the cluster data
```

#### k8s cluster data restore procedure

1. Stop the k8s master services: apiserver, controller-manager, scheduler
1. Stop the k8s node services: kube-proxy, kubelet
1. Stop the etcd cluster
1. Restore the etcd data
1. Start the etcd cluster
1. Check the etcd cluster status
1. Start the k8s master services
1. Start the k8s node services
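The order of the restore procedure matters more than the individual commands, so it can help to write it down as a dry run first. The sketch below only prints the steps in order and executes nothing. It assumes the host lists used earlier in this document and an etcd systemd unit named `etcd` (an assumption, not confirmed by the source); `restore_plan` and `step` are hypothetical names.

```bash
#!/usr/bin/env bash
# Dry-run sketch: print the restore steps in order. Nothing is executed.
MASTERS="10.168.56.211 10.168.56.212 10.168.56.213"   # also the etcd members
NODES="10.168.56.214 10.168.56.215 10.168.56.216"

step() { echo "+ $*"; }

restore_plan() {
  local h
  for h in $MASTERS; do step ssh "$h" systemctl stop kube-apiserver kube-controller-manager kube-scheduler; done
  for h in $NODES;   do step ssh "$h" systemctl stop kube-proxy kubelet; done
  for h in $MASTERS; do step ssh "$h" systemctl stop etcd; done        # unit name assumed
  for h in $MASTERS; do step "restore the etcd snapshot on $h"; done
  for h in $MASTERS; do step ssh "$h" systemctl start etcd; done       # unit name assumed
  step "check etcd health on all members"
  for h in $MASTERS; do step ssh "$h" systemctl start kube-apiserver kube-controller-manager kube-scheduler; done
  for h in $NODES;   do step ssh "$h" systemctl start kube-proxy kubelet; done
}

restore_plan
```

In practice `./ezctl restore` automates this flow; the sketch is only meant to make the sequence explicit.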

### k8s YAML files and syntax basics

The YAML files must be written in advance, including the YAML for the resources a pod depends on, such as its namespace.

- Namespace

```bash
root@k8s-master01:~# mkdir yaml/namespace -p
root@k8s-master01:~# kubectl api-resources
root@k8s-master01:~# kubectl get ns default -o yaml    # view the namespace syntax
root@k8s-master01:~# kubectl explain ns                # view how to write the YAML
root@k8s-master01:~# cd yaml/namespace/
root@k8s-master01:~/yaml/namespace# vim devops-ns.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: devops
root@k8s-master01:~/yaml/namespace# kubectl apply -f devops-ns.yaml
root@k8s-master01:~/yaml/namespace# kubectl get ns | grep devops
devops            Active   30s
```

- Deployment

```bash
root@k8s-master01:~/yaml/namespace# kubectl get deployment coredns -n kube-system -o yaml
root@k8s-master01:~/yaml/namespace# docker tag nginx:latest harbor.cropy.cn/baseimages/nginx:v1
root@k8s-master01:~/yaml/namespace# docker push harbor.cropy.cn/baseimages/nginx:v1
root@k8s-master01:~/yaml/namespace# vim nginx.yaml
kind: Deployment                  # resource type: the Deployment controller; see kubectl explain Deployment
apiVersion: apps/v1               # API version; see kubectl explain Deployment.apiVersion
metadata:                         # Deployment metadata; see kubectl explain Deployment.metadata
  labels:                         # custom labels; see kubectl explain Deployment.metadata.labels
    app: devops-nginx-deployment-label   # label key "app" with value devops-nginx-deployment-label
  name: devops-nginx-deployment   # name of the Deployment
  namespace: devops               # namespace of the Deployment; defaults to "default"
spec:                             # Deployment specification; see kubectl explain Deployment.spec
  replicas: 1                     # number of pod replicas to create; defaults to 1
  selector:                       # label selector
    matchLabels:                  # labels to match; required
      app: devops-nginx-selector  # target label
  template:                       # pod template; required; describes the pods to create
    metadata:                     # template metadata
      labels:                     # template labels, Deployment.spec.template.metadata.labels
        app: devops-nginx-selector   # must equal Deployment.spec.selector.matchLabels
    spec:
      containers:
      - name: devops-nginx-container   # container name
        image: nginx:1.16.1
        #command: ["/apps/tomcat/bin/run_tomcat.sh"]   # command or script run when the container starts
        #imagePullPolicy: IfNotPresent
        imagePullPolicy: Always   # image pull policy
        ports:                    # list of container ports
        - containerPort: 80       # a port
          protocol: TCP           # port protocol
          name: http              # port name
        - containerPort: 443
          protocol: TCP
          name: https
        env:                      # environment variables
        - name: "password"        # variable name
          value: "123456"         # variable value; must be a string, so numeric values need quotes
        - name: "age"
          value: "18"
        resources:                # resource requests and limits
          limits:                 # upper bounds
            cpu: 500m             # CPU limit in cores; 0.5 and 500m are equivalent
            memory: 512Mi         # memory limit; units such as Mi or Gi; passed to the runtime as the memory limit (docker run --memory)
          requests:               # requested resources
            cpu: 200m             # CPU request reserved for the container; 0.5 or 500m style values are accepted
            memory: 256Mi         # memory request; used by the scheduler when placing the pod
```

- Service

```bash
root@k8s-master01:~/yaml/namespace# vim nginx-svc.yaml
kind: Service                   # resource type: Service
apiVersion: v1                  # Service API version, service.apiVersion
metadata:                       # Service metadata, service.metadata
  labels:                       # custom labels, service.metadata.labels
    app: devops-nginx           # label of the Service
  name: devops-nginx-service    # Service name; this name is resolvable through DNS
  namespace: devops             # namespace the Service is created in
spec:                           # Service specification, service.spec
  type: NodePort                # Service type (how the service is exposed); defaults to ClusterIP, service.spec.type
  ports:                        # exposed ports, service.spec.ports
  - name: http                  # port name
    port: 81                    # Service port
    protocol: TCP               # protocol
    targetPort: 80              # port on the target pod
    nodePort: 30001             # port exposed on every node
  - name: https                 # SSL port
    port: 1443                  # Service port for HTTPS
    protocol: TCP
    targetPort: 443             # port on the target pod
    nodePort: 30043             # SSL port exposed on every node
  selector:
    app: devops-nginx-selector
```

- Create the resources and test access:

```bash
root@k8s-master01:~/yaml/namespace# kubectl apply -f devops-ns.yaml
root@k8s-master01:~/yaml/namespace# kubectl apply -f nginx.yaml
root@k8s-master01:~/yaml/namespace# kubectl apply -f nginx-svc.yaml
root@k8s-master01:~/yaml/namespace# kubectl get svc -n devops
NAME                   TYPE       CLUSTER-IP    EXTERNAL-IP   PORT(S)                       AGE
devops-nginx-service   NodePort   10.100.72.4   <none>        81:30001/TCP,1443:30043/TCP   5m52s
root@k8s-master01:~/yaml/namespace# kubectl get ep -n devops
NAME                   ENDPOINTS                              AGE
devops-nginx-service   10.200.135.135:443,10.200.135.135:80   6m1s
```

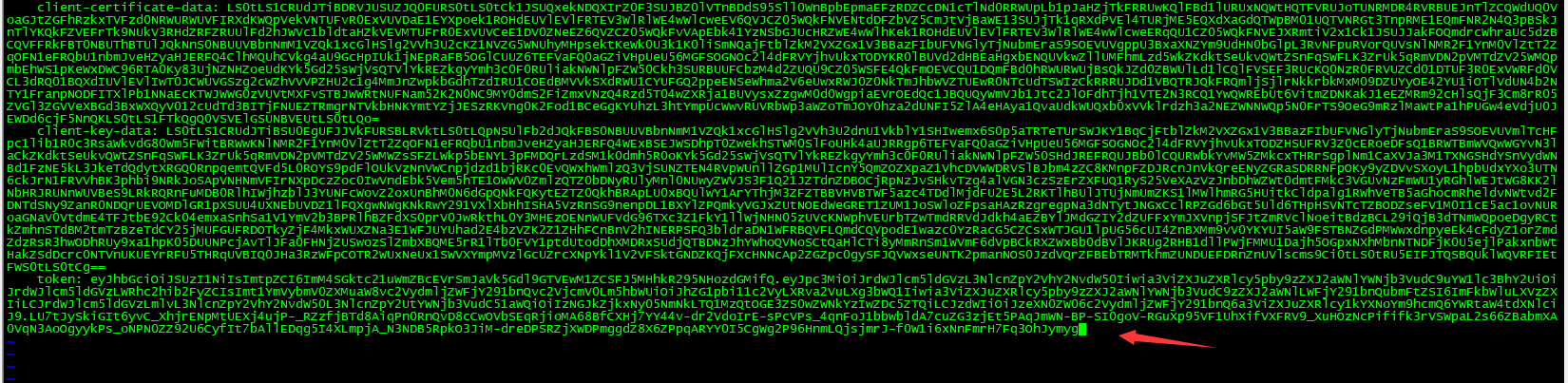
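For a NodePort Service, each entry in the `PORT(S)` column of `kubectl get svc` reads `servicePort:nodePort/protocol`. The following sketch extracts the node ports from such a value, which is handy when scripting an access test against `http://<node-ip>:<nodePort>`. The function name `node_ports` is hypothetical.

```bash
#!/usr/bin/env bash
# Extract the NodePort numbers from a PORT(S) value such as
# "81:30001/TCP,1443:30043/TCP" (format: servicePort:nodePort/protocol).
node_ports() {
  tr ',' '\n' | sed -E 's#^[0-9]+:([0-9]+)/.*$#\1#'
}

echo "81:30001/TCP,1443:30043/TCP" | node_ports
```

With the Service above this prints 30001 and 30043, one per line.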

- Create a kubeconfig file for logging in to the dashboard:

```bash
root@k8s-master01:~/yaml/namespace# cp /root/.kube/config kubeconfig
root@k8s-master01:~/yaml/namespace# kubectl describe secret admin-user-token-x7md5 -n kubernetes-dashboard
Name:         admin-user-token-x7md5
Namespace:    kubernetes-dashboard
Labels:
Annotations:  kubernetes.io/service-account.name: admin-user
              kubernetes.io/service-account.uid: 34bdf917-96cd-4534-8a7e-0ecdc20d79e4

Type:  kubernetes.io/service-account-token

Data
ca.crt:     1350 bytes
namespace:  20 bytes
token:      eyJhbGciOiJSUzI1NiIsImtpZCI6ImM4SGktc21uWmZBcEVrSmJaVk5Gdl9GTVEwM1ZCSFJ5MHhkR295NHozdGMifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXg3bWQ1Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiIzNGJkZjkxNy05NmNkLTQ1MzQtOGE3ZS0wZWNkYzIwZDc5ZTQiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.LU7tJySkiGIt6yvC_XhjrENpMtUEXj4ujP-_RZzfjBTd8AiqPn0RnQvD8cCwOVbSEqRjioMA68BfCXHj7YY44v-dr2VdoIrE-sPcVPs_4qnFoJ1bbwbldA7cuZG3zjEt5PAqJmWN-BP-SI0goV-RGuXp95VF1UhXifVXFRV9_XuHOzNcPififk3rVSWpaL2s66ZBabmXA0VqN3AoOgyykPs_oNPN0ZZ92U6CyfIt7bAllEDqg5I4XLmpjA_N3NDB5RpkO3JiM-dreDPSRZjXWDPmggdZ8X6ZPpqARYY0I5CgWg2P96HnmLQjsjmrJ-f0W1i6xNnFmrH7Fq3OhJymyg
```

Append the token to the end of the kubeconfig file, as shown in the screenshot below.

<a name="lSeEV"></a>

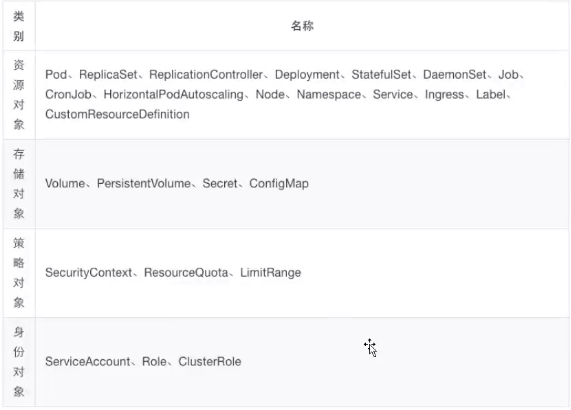
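Appending the token can also be scripted. This is a minimal sketch under two assumptions: the `users:` section is the last block in the kubeconfig copy, and its fields are indented by four spaces (as in a kubectl-generated admin kubeconfig). The function name `append_token` is hypothetical.

```bash
#!/usr/bin/env bash
# Append a `token:` field to the last user entry of a kubeconfig copy.
# Assumes `users:` is the final section and its fields use 4-space indentation.
append_token() {   # usage: append_token <kubeconfig-file> <token>
  local kubeconfig="$1" token="$2"
  printf '    token: %s\n' "$token" >> "$kubeconfig"
}

# Usage on a cluster (secret name taken from the output above):
#   token=$(kubectl -n kubernetes-dashboard get secret admin-user-token-x7md5 \
#             -o jsonpath='{.data.token}' | base64 -d)
#   append_token kubeconfig "$token"
```

The secret stores the token base64-encoded, which is why the usage example decodes it; `kubectl describe secret` already shows the decoded value.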

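## Kubernetes resource objects

<a name="VOmBA"></a>

### Core concepts of k8s resource management

- CRI: Container Runtime Interface

- CSI: Container Storage Interface

- CNI: Container Network Interface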

<a name="UrPNA"></a>

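### Common resource types

<a name="Ayryq"></a>

#### Pod

1. The smallest deployable unit in k8s

1. A pod can run one container or several containers

1. When a pod runs several containers, they are scheduled together

1. A pod's lifecycle is short-lived, and a pod does not heal itself

1. Pods are normally created and managed through a controller

> Pod lifecycle:

> 1. Init containers

> 1. Pre-start operations

> 1. Readiness probe: the pod receives traffic only after the probe confirms that it has started successfully

> 1. Liveness probe: when the service in the pod becomes unhealthy, the container is restarted

> 1. Pod deletion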
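The two probes from the lifecycle above are declared per container. The following sketch writes a small Pod manifest with both probes to a file; the pod name `probe-demo`, the file name, and the timing values are illustrative assumptions, not taken from the original cluster.

```bash
#!/usr/bin/env bash
# Write a Pod manifest with readiness and liveness probes to probe-demo.yaml.
cat > probe-demo.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: probe-demo            # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx:1.16.1
    ports:
    - containerPort: 80
    readinessProbe:           # the pod receives Service traffic only while this succeeds
      httpGet:
        path: /
        port: 80
      initialDelaySeconds: 5
      periodSeconds: 5
    livenessProbe:            # the container is restarted after repeated failures
      httpGet:
        path: /
        port: 80
      initialDelaySeconds: 10
      periodSeconds: 10
EOF

# To try it on a cluster: kubectl apply -f probe-demo.yaml
```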

<a name="kUFTb"></a>

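#### rc/rs/deployment

These controllers ensure that a group of pods, or pods of the same type, keeps running in the state the user expects.

1. ReplicationController: the first-generation replica controller

> Its selector supports only `=` and `!=` (equality-based matching); set-based expressions are not supported.

> Official documentation: [https://kubernetes.io/zh/docs/concepts/workloads/controllers/replicationcontroller/](https://kubernetes.io/zh/docs/concepts/workloads/controllers/replicationcontroller/)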

```yaml
apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx
spec:
  replicas: 3
  selector:
    app: nginx
  template:
    metadata:
      name: nginx
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
```

1. ReplicaSet: the second-generation pod replica controller

> Its selector additionally supports set-based expressions (`matchExpressions` with operators such as `In` and `NotIn`).

> Official documentation: https://kubernetes.io/zh/docs/concepts/workloads/controllers/replicaset/

```yaml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: frontend
spec:
  replicas: 2
  selector:
    matchExpressions:
    - {key: app, operator: In, values: [ng-rs-80,ng-rs-81]}
  template:
    metadata:
      labels:
        app: ng-rs-80
    spec:
      containers:
      - name: ng-rs-80
        image: nginx
        ports:
        - containerPort: 80
```

1. Deployment: the third-generation pod replica controller

> It provides everything a ReplicaSet does and adds rolling updates and rollbacks.

> Official documentation: https://kubernetes.io/docs/concepts/workloads/controllers/deployment/

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 2
  selector:
    #app: ng-deploy-80    # rc style
    matchLabels:          # rs or deployment style
      app: ng-deploy-80
    # matchExpressions:
    # - {key: app, operator: In, values: [ng-deploy-80,ng-rs-81]}
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx:1.16.1
        ports:
        - containerPort: 80
```
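The rolling update and rollback that distinguish a Deployment from a ReplicaSet map onto the `kubectl set image` and `kubectl rollout` commands. The sketch below uses the Deployment and container names from the manifest above. By default it only prints the commands, because `KUBECTL` is set to `echo kubectl`; the image tag `nginx:1.17.10` and the function names are illustrative assumptions.

```bash
#!/usr/bin/env bash
# KUBECTL defaults to "echo kubectl", so the functions only print the commands.
# Set KUBECTL=kubectl to run them against a cluster.
KUBECTL="${KUBECTL:-echo kubectl}"

# Rolling update: change the container image of the Deployment and wait for it
rolling_update() {   # usage: rolling_update <image>
  $KUBECTL set image deployment/nginx-deployment ng-deploy-80="$1"
  $KUBECTL rollout status deployment/nginx-deployment
}

# Roll back to the previous revision
rollback() {
  $KUBECTL rollout undo deployment/nginx-deployment
}

rolling_update nginx:1.17.10   # example tag, not from the original document
rollback
```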

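#### service

A Service solves the problem that pod IPs change, by giving clients a stable address.

- Common kinds of Service

    - Service for workloads inside the cluster: a selector picks the pods, and the endpoints are created automatically

    - Service for workloads outside the cluster: the endpoints are created manually, specifying the IP, port, and protocol of the external service

- Relationship between kube-proxy and Services

    - kube-proxy watches the apiserver

    - When a Service resource changes, kube-proxy adjusts the corresponding load-balancing rules so that the Service always reflects the latest state

- The three kube-proxy proxy modes

    - userspace

    - iptables

    - ipvs: falls back to iptables automatically if IPVS is not enabled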
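The "outside the cluster" case above has no example in this document, so here is a hedged sketch: a Service without a selector plus a manually created Endpoints object with the same name. The name `external-db`, the IP 10.168.56.200, and port 3306 are placeholders chosen for illustration.

```bash
#!/usr/bin/env bash
# Write a selector-less Service and a matching Endpoints object to external-svc.yaml.
cat > external-svc.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: external-db          # hypothetical name
spec:
  ports:
  - name: mysql
    port: 3306
    targetPort: 3306
    protocol: TCP
---
apiVersion: v1
kind: Endpoints
metadata:
  name: external-db          # must match the Service name
subsets:
- addresses:
  - ip: 10.168.56.200        # placeholder IP of the external server
  ports:
  - name: mysql              # must match the Service port name
    port: 3306
    protocol: TCP
EOF

# To try it on a cluster: kubectl apply -f external-svc.yaml
```

Because the Service has no selector, Kubernetes does not manage the endpoints, and traffic to the Service is forwarded to the addresses listed by hand.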

deploy

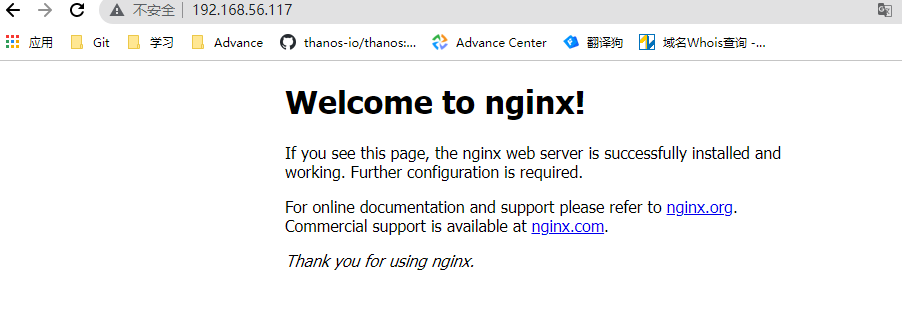

apiVersion: apps/v1 kind: Deployment metadata: name: nginx-deployment spec: replicas: 1 selector: #matchLabels: #rs or deployment # app: ng-deploy3-80 matchExpressions: - {key: app, operator: In, values: [ng-deploy-80,ng-rs-81]} template: metadata: labels: app: ng-deploy-80 spec: containers: - name: ng-deploy-80 image: nginx:1.16.1 ports: - containerPort: 80clusterIP

apiVersion: v1 kind: Service metadata: name: ng-deploy-80 spec: ports: - name: http port: 88 targetPort: 80 protocol: TCP type: ClusterIP selector: app: ng-deploy-80Nodeport

apiVersion: v1 kind: Service metadata: name: ng-deploy-80 spec: ports: - name: http port: 90 targetPort: 80 nodePort: 30012 protocol: TCP type: NodePort selector: app: ng-deploy-80配置haproxy_proxy代理nodeport流量到80端口 ```bash root@k8s-ha01:~# vim /etc/haproxy/haproxy.cfg listen k8s-6443

bind 192.168.56.116:6443 mode tcp server k8s1 10.168.56.211:6443 check inter 3s fall 3 rise 5 server k8s2 10.168.56.212:6443 check inter 3s fall 3 rise 5 server k8s3 10.168.56.213:6443 check inter 3s fall 3 rise 5

listen ngx-30012 bind 192.168.56.117:80 mode tcp server k8s1 10.168.56.211:30012 check inter 3s fall 3 rise 5 server k8s2 10.168.56.212:30012 check inter 3s fall 3 rise 5 server k8s3 10.168.56.213:30012 check inter 3s fall 3 rise 5

root@k8s-ha01:~# systemctl restart haproxy

root@k8s-ha01:~# netstat -tanlp

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 192.168.56.117:80 0.0.0.0:* LISTEN 151509/haproxy

5. 浏览器测试

<a name="KXD0R"></a>

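#### volume

Volumes decouple data from containers and allow data to be shared between pods.

- Commonly used volume types

    - emptyDir: a node-local temporary volume; it is deleted together with the pod, and its data cannot be shared with other pods

    - hostPath: mounts a directory from the node's file system into the pod; the data remains after the pod is deleted, but it cannot be shared across nodes

    - NFS

    - configmap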

1. emptyDir

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels:    # rs or deployment style
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /cache
          name: cache-volume
      volumes:
      - name: cache-volume
        emptyDir: {}
```

1. hostPath

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /data/cropy
          name: cache-volume
      volumes:
      - name: cache-volume
        hostPath:
          path: /opt/cropy
```

1. NFS shared storage

- Configure the NFS server node:

```bash
root@k8s-ha01:~# apt update && apt install nfs-server -y
root@k8s-ha01:~# mkdir /data/nfs/cropy -p
root@k8s-ha01:~# vim /etc/exports
/data/nfs/cropy *(rw,no_root_squash)
root@k8s-ha01:~# systemctl restart nfs-server
root@k8s-ha01:~# showmount -e 10.168.56.113
```

- Configure the NFS share in k8s:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /usr/share/nginx/html/mysite
          name: my-nfs-volume
        - mountPath: /usr/share/nginx/html/js
          name: my-nfs-js
      volumes:
      - name: my-nfs-volume
        nfs:
          server: 10.168.56.113
          path: /data/nfs/cropy
      - name: my-nfs-js
        nfs:
          server: 10.168.56.113
          path: /data/nfs/cropy/js
---
apiVersion: v1
kind: Service
metadata:
  name: ng-deploy-80
spec:
  ports:
  - name: http
    port: 81
    targetPort: 80
    nodePort: 30016
    protocol: TCP
  type: NodePort
  selector:
    app: ng-deploy-80
```

- Version 2:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment-site2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ng-deploy-81
  template:
    metadata:
      labels:
        app: ng-deploy-81
    spec:
      containers:
      - name: ng-deploy-81
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /usr/share/nginx/html/mysite
          name: my-nfs-volume
      volumes:
      - name: my-nfs-volume
        nfs:
          server: 10.168.56.113
          path: /data/k8sdata
---
apiVersion: v1
kind: Service
metadata:
  name: ng-deploy-81
spec:
  ports:
  - name: http
    port: 80
    targetPort: 80
    nodePort: 30017
    protocol: TCP
  type: NodePort
  selector:
    app: ng-deploy-81
```
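configmap is listed among the common volume types but has no example in this section. The sketch below shows one possible shape: a ConfigMap holding an nginx server block, mounted into the container as a volume. All names (`nginx-config`, `nginx-deployment-cm`, `ng-deploy-cm`) and the nginx configuration itself are illustrative assumptions.

```bash
#!/usr/bin/env bash
# Write a ConfigMap and a Deployment that mounts it as a volume.
cat > nginx-configmap.yaml <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config            # hypothetical name
data:
  default.conf: |
    server {
      listen 80;
      location / {
        root /usr/share/nginx/html;
      }
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment-cm     # hypothetical name
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ng-deploy-cm
  template:
    metadata:
      labels:
        app: ng-deploy-cm
    spec:
      containers:
      - name: ng-deploy-cm
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - name: nginx-config
          mountPath: /etc/nginx/conf.d   # each ConfigMap key becomes a file here
      volumes:
      - name: nginx-config
        configMap:
          name: nginx-config
EOF

# To try it on a cluster: kubectl apply -f nginx-configmap.yaml
```

Mounting the ConfigMap over `/etc/nginx/conf.d` replaces the directory's contents, so the image's default `default.conf` is superseded by the one defined in the ConfigMap.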

<a name="NNEf7"></a>

### Using k8s commands

- Viewing resources

```bash
kubectl get svc -n {namespace}
kubectl get pod -n {namespace}
kubectl get ep -n {namespace}
kubectl get nodes
kubectl get deploy -n {namespace}
kubectl api-resources
kubectl api-versions
kubectl describe pod -n {namespace}
```

- Creating resources

```bash
kubectl create -f file.yaml --save-config=true
kubectl apply -f file.yaml --record    # recommended
```

- Troubleshooting

```bash
kubectl describe pod/svc -n {namespace}
kubectl logs -f pod -n {namespace}
kubectl exec -it pod -n {namespace} -- /bin/sh
```

- Labeling nodes

```bash
root@k8s-master01:~# kubectl label node 10.168.56.214 project=cropy    # used together with nodeSelector
root@k8s-master01:~# kubectl label node 10.168.56.214 project-         # remove the node label
```

- Marking a node unschedulable

```bash
root@k8s-master01:~# kubectl get node
NAME            STATUS   ROLES    AGE   VERSION
10.168.56.211   Ready    master   11d   v1.21.5
10.168.56.212   Ready    master   11d   v1.21.5
10.168.56.213   Ready    master   11d   v1.21.5
10.168.56.214   Ready    node     11d   v1.21.5
10.168.56.215   Ready    node     11d   v1.21.5
10.168.56.216   Ready    node     11d   v1.21.5
root@k8s-master01:~# kubectl cordon 10.168.56.211
node/10.168.56.211 cordoned
root@k8s-master01:~# kubectl get node
NAME            STATUS                     ROLES    AGE   VERSION
10.168.56.211   Ready,SchedulingDisabled   master   11d   v1.21.5
10.168.56.212   Ready                      master   11d   v1.21.5
10.168.56.213   Ready                      master   11d   v1.21.5
10.168.56.214   Ready                      node     11d   v1.21.5
10.168.56.215   Ready                      node     11d   v1.21.5
10.168.56.216   Ready                      node     11d   v1.21.5
root@k8s-master01:~# kubectl uncordon 10.168.56.211    # make the node schedulable again
```

- Evicting pods from a node: used when a node has to be taken offline. The node is marked unschedulable and the pods running on it are evicted.

```bash
root@k8s-master01:~# kubectl drain 10.168.56.215 --force --ignore-daemonsets
```

- taint: anti-affinity; a tainted node repels pods that do not tolerate the taint

```bash
root@k8s-master01:~# kubectl taint --help
```
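As a concrete illustration of `kubectl taint`, the sketch below adds and removes a `NoSchedule` taint. It prints the commands by default because `KUBECTL` is set to `echo kubectl`. The taint `project=cropy:NoSchedule` reuses the label key and value from the labeling example and is only an assumption; the function names are hypothetical.

```bash
#!/usr/bin/env bash
# KUBECTL defaults to "echo kubectl", so the functions only print the commands.
# Set KUBECTL=kubectl to run them against a cluster.
KUBECTL="${KUBECTL:-echo kubectl}"

# Add a taint of the form key=value:effect to a node
add_taint() {      # usage: add_taint <node> <key=value:effect>
  $KUBECTL taint nodes "$1" "$2"
}

# Remove the same taint; the trailing "-" means "remove"
remove_taint() {   # usage: remove_taint <node> <key=value:effect>
  $KUBECTL taint nodes "$1" "$2-"
}

add_taint 10.168.56.214 project=cropy:NoSchedule
remove_taint 10.168.56.214 project=cropy:NoSchedule
```

With the `NoSchedule` effect, new pods without a matching toleration are not scheduled onto the node, while pods already running there are left alone.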