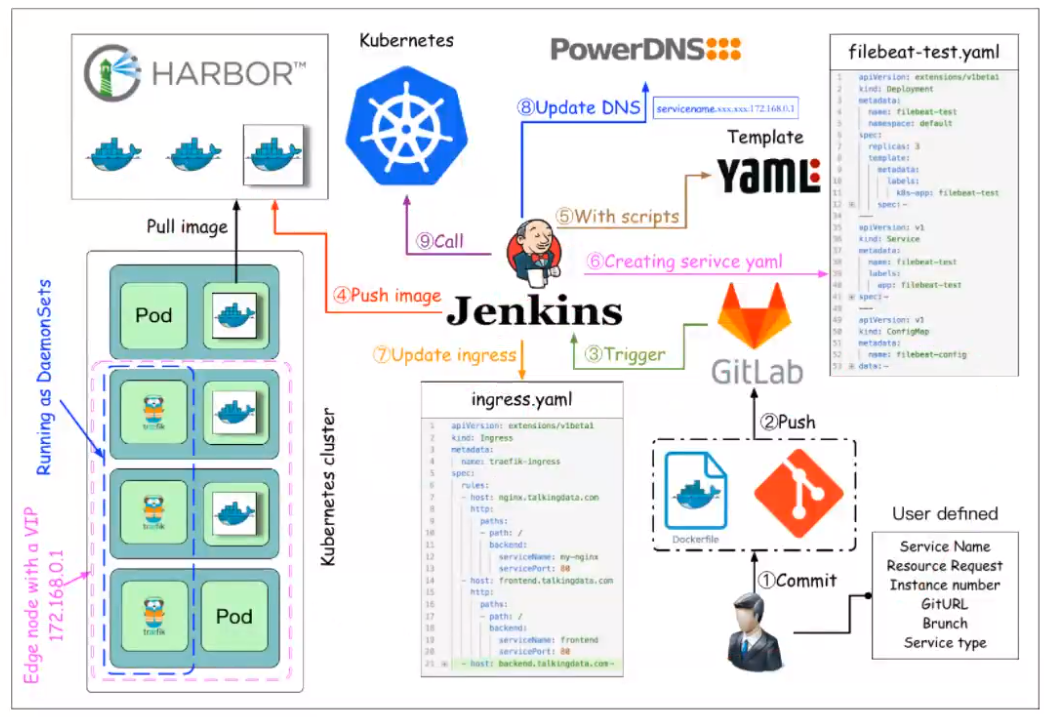

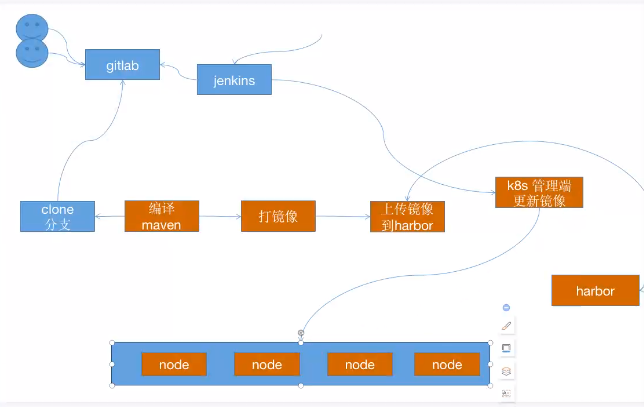


# k8s pod version update workflow

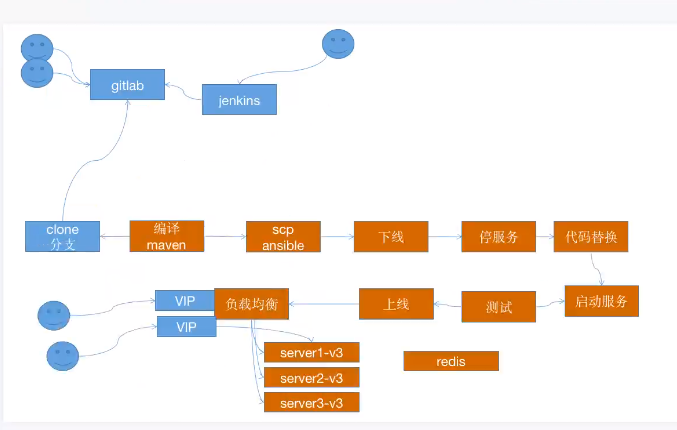

## Code upgrade workflow

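- Branch management
  - main: production branch
  - develop: development branch

- Version management

- Gray (canary) release

- Automated build workflow for traditional projects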

### Manually updating the Deployment controller image

#### Rolling update

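Update strategies supported by the Deployment controller:

- Rolling update (`RollingUpdate`, the default): old and new versions run side by side during the upgrade, so the application itself must tolerate both versions serving at once.
  - `deployment.spec.strategy.rollingUpdate.maxSurge`: how many pods may be created on top of the desired replica count during the update (default: 25% of the desired replicas).
  - `deployment.spec.strategy.rollingUpdate.maxUnavailable`: how many of the desired pods may be unavailable during the update (default: 25%).

- Recreate (`Recreate`): all existing pods are deleted first and new pods are then created from the new image; the drawback is a service outage in between.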

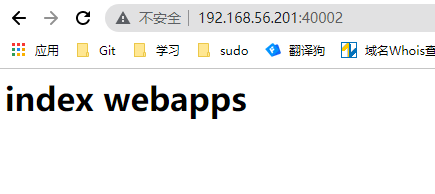

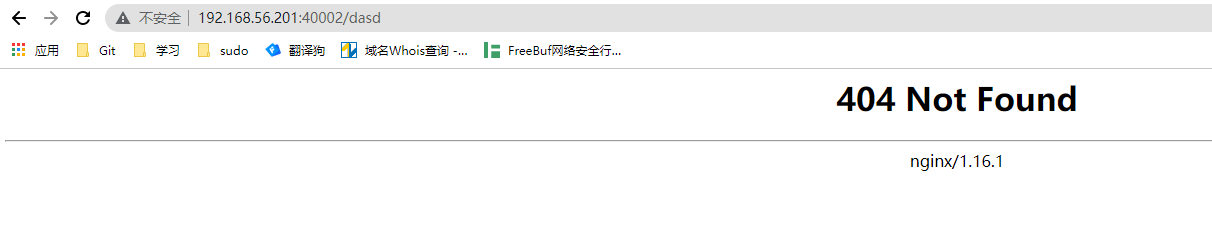
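The percentages above are turned into pod counts when a rollout starts. A minimal sketch of that arithmetic, assuming Kubernetes' documented rounding (maxSurge rounds up, maxUnavailable rounds down); `rollout_pod_budget` is a hypothetical helper, not part of kubectl:

```bash
#!/usr/bin/env bash
# Hypothetical helper: convert a percentage maxSurge/maxUnavailable into pod counts.
# Kubernetes rounds maxSurge UP and maxUnavailable DOWN when resolving percentages.
# Usage: rollout_pod_budget <replicas> <percent>
rollout_pod_budget() {
  local replicas=$1 pct=$2
  # ceiling division for maxSurge
  local surge=$(( (replicas * pct + 99) / 100 ))
  # floor division for maxUnavailable
  local unavailable=$(( replicas * pct / 100 ))
  echo "maxSurge=${surge} maxUnavailable=${unavailable}"
}
```

For example, `rollout_pod_budget 6 25` prints `maxSurge=2 maxUnavailable=1`: with 6 replicas and the defaults, the Deployment may run up to 8 pods during the update while keeping at least 5 available.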

1. Build the nginx business Deployment on k8s

```
root@k8s-master1:~# cd k8s/08-cicd/nginx_deploy/
root@k8s-master1:~/k8s/08-cicd/nginx_deploy# vim nginx-deploy.yaml
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    app: cropy-nginx-deployment-label
  name: cropy-nginx-deployment
  namespace: cropy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: cropy-nginx-selector
  template:
    metadata:
      labels:
        app: cropy-nginx-selector
    spec:
      containers:
      - name: cropy-nginx-container
        image: harbor.cropy.cn/cropy/nginx-web1:v1
        imagePullPolicy: Always
        ports:
        - containerPort: 80
          protocol: TCP
          name: http
        - containerPort: 443
          protocol: TCP
          name: https
        env:
        - name: "password"
          value: "123456"
        - name: "age"
          value: "20"
        resources:
          limits:
            cpu: 2
            memory: 2Gi
          requests:
            cpu: 200m
            memory: 1Gi
        volumeMounts:
        - name: cropy-images
          mountPath: /usr/local/nginx/html/webapp/images
          readOnly: false
        - name: cropy-static
          mountPath: /usr/local/nginx/html/webapp/static
          readOnly: false
      volumes:
      - name: cropy-images
        nfs:
          server: 10.168.56.110
          path: /data/nfs/cropy/nginx-web/images
      - name: cropy-static
        nfs:
          server: 10.168.56.110
          path: /data/nfs/cropy/nginx-web/data
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: cropy-nginx-service-label
  name: cropy-nginx-service
  namespace: cropy
spec:
  type: NodePort
  ports:
  # NodePorts 40002/40443 lie outside the default 30000-32767 range,
  # so kube-apiserver must run with a widened --service-node-port-range
  - name: http
    port: 80
    protocol: TCP
    targetPort: 80
    nodePort: 40002
  - name: https
    port: 443
    protocol: TCP
    targetPort: 443
    nodePort: 40443
  selector:
    app: cropy-nginx-selector

root@k8s-master1:~/k8s/08-cicd/nginx_deploy# kubectl apply -f nginx-deploy.yaml --record
```

2. Test the site

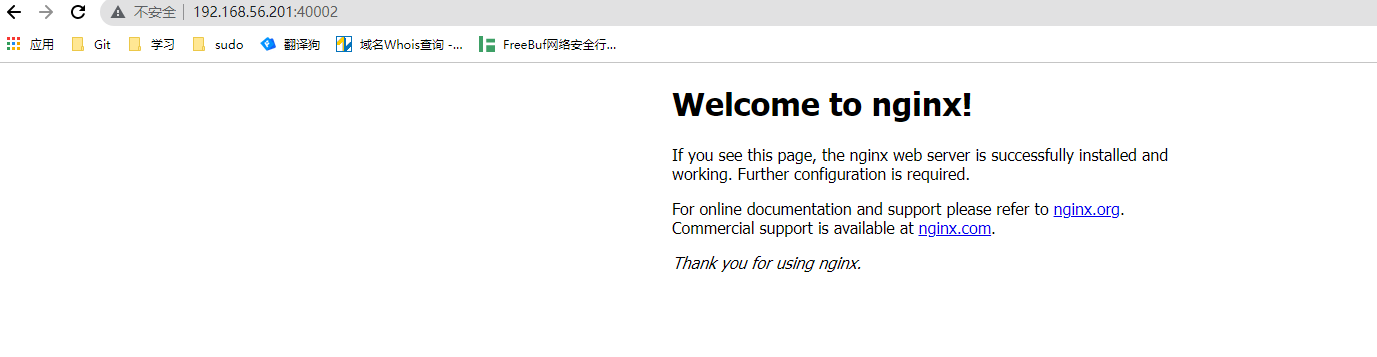

3. Perform the manual update: push a new image to Harbor, then point the container at it

```
root@k8s-master1:~/k8s/08-cicd/nginx_deploy# docker pull nginx:1.16.1
root@k8s-master1:~/k8s/08-cicd/nginx_deploy# docker tag nginx:1.16.1 harbor.cropy.cn/cropy/nginx:v2
root@k8s-master1:~/k8s/08-cicd/nginx_deploy# docker push harbor.cropy.cn/cropy/nginx:v2
root@k8s-master1:~/k8s/08-cicd/nginx_deploy# kubectl set image deployment cropy-nginx-deployment cropy-nginx-container=harbor.cropy.cn/cropy/nginx:v2 -n cropy --record=true
```

4. Check the update result

5. Check the upgrade history

```
root@k8s-master1:~/k8s/08-cicd/nginx_deploy# kubectl rollout history deployment cropy-nginx-deployment -n cropy
deployment.apps/cropy-nginx-deployment
REVISION  CHANGE-CAUSE
1         kubectl apply --filename=nginx-deploy.yaml --record=true
2         kubectl apply --filename=nginx-deploy.yaml --record=true

root@k8s-master1:~/k8s/08-cicd/nginx_deploy# kubectl set image deployment cropy-nginx-deployment cropy-nginx-container=nginx:1.18.0 -n cropy --record=true
root@k8s-master1:~/k8s/08-cicd/nginx_deploy# kubectl rollout history deployment cropy-nginx-deployment -n cropy
```

6. Roll back to the previous version

```
root@k8s-master1:~/k8s/08-cicd/nginx_deploy# kubectl rollout undo deployment cropy-nginx-deployment -n cropy
root@k8s-master1:~/k8s/08-cicd/nginx_deploy# kubectl rollout history deployment cropy-nginx-deployment -n cropy
deployment.apps/cropy-nginx-deployment
REVISION  CHANGE-CAUSE
1         kubectl apply --filename=nginx-deploy.yaml --record=true
3         kubectl set image deployment cropy-nginx-deployment cropy-nginx-container=nginx:1.18.0 --namespace=cropy --record=true
4         kubectl apply --filename=nginx-deploy.yaml --record=true
```

7. Roll back to a specific revision (`--to-revision`)

```
root@k8s-master1:~/k8s/08-cicd/nginx_deploy# kubectl rollout undo deployment cropy-nginx-deployment -n cropy --to-revision=1
```
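To pick a value for `--to-revision`, the revision numbers can be read back out of `kubectl rollout history`. A sketch, assuming the output keeps the layout shown above (a `deployment.apps/...` line, a `REVISION  CHANGE-CAUSE` header, then one row per revision starting with its number); `list_revisions` and `previous_revision` are hypothetical helper names:

```bash
#!/usr/bin/env bash
# Hypothetical helpers that parse `kubectl rollout history` text from stdin.

# Print every revision number, sorted numerically (rows whose first field is a number).
list_revisions() {
  awk '$1 ~ /^[0-9]+$/ { print $1 }' | sort -n
}

# Print the revision just below the current (highest) one, which is where a
# plain `kubectl rollout undo` normally goes back to.
previous_revision() {
  list_revisions | tail -n 2 | head -n 1
}
```

For example, `kubectl rollout history deployment cropy-nginx-deployment -n cropy | previous_revision` prints `3` for the history from step 6 (revisions 1, 3 and 4).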

<a name="caLFA"></a>

#### Canary update

1. Build the business images (v1 and v2)

```
root@k8s-master01:~# mkdir /root/k8s/web/dockerfile/tomcat_web
root@k8s-master01:~# cd /root/k8s/web/dockerfile/tomcat_web
root@k8s-master01:~/k8s/web/dockerfile/tomcat_web# echo "tomcat webapps v1" > index.html
root@k8s-master01:~/k8s/web/dockerfile/tomcat_web# tar czf app1.tar.gz index.html

root@k8s-master01:~/k8s/web/dockerfile/tomcat_web# docker run -itd --name t1 harbor.cropy.cn/baseimages/tomcat-base:v8.5.72 sleep 36000
root@k8s-master01:~/k8s/web/dockerfile/tomcat_web# docker cp t1:/apps/tomcat/conf/server.xml ./
root@k8s-master01:~/k8s/web/dockerfile/tomcat_web# docker cp t1:/apps/tomcat/bin/catalina.sh ./
root@k8s-master01:~/k8s/web/dockerfile/tomcat_web# vim catalina.sh
JAVA_OPTS="-server -Xms1g -Xmx1g -Xss512k -Xmn1g -XX:CMSInitiatingOccupancyFraction=65 -XX:+UseFastAccessorMethods -XX:+AggressiveOpts -XX:+UseBiasedLocking -XX:+DisableExplicitGC -XX:MaxTenuringThreshold=10 -XX:NewSize=2048M -XX:MaxNewSize=2048M -XX:NewRatio=2 -XX:PermSize=128m -XX:MaxPermSize=512m -XX:CMSFullGCsBeforeCompaction=5 -XX:+ExplicitGCInvokesConcurrent -XX:+UseConcMarkSweepGC -XX:+UseParNewGC -XX:+CMSParallelRemarkEnabled"

# OS specific support.  $var _must_ be set to either true or false.
(JAVA_OPTS is added just above this existing comment in catalina.sh)

root@k8s-master01:~/k8s/web/dockerfile/tomcat_web# vim server.xml

root@k8s-master01:~/k8s/web/dockerfile/tomcat_web# vim run_tomcat.sh
#!/bin/bash
su - nginx -c "/apps/tomcat/bin/catalina.sh start"
# keep a foreground process so the container does not exit
tail -f /etc/hosts

root@k8s-master01:~/k8s/web/dockerfile/tomcat_web# vim Dockerfile
FROM harbor.cropy.cn/baseimages/tomcat-base:v8.5.72

ADD catalina.sh /apps/tomcat/bin/catalina.sh
ADD server.xml /apps/tomcat/conf/server.xml
ADD app1.tar.gz /data/tomcat/webapps/myapp/
ADD run_tomcat.sh /apps/tomcat/bin/run_tomcat.sh
RUN chown -R nginx.nginx /data/ /apps/

EXPOSE 8080 8443
CMD ["/apps/tomcat/bin/run_tomcat.sh"]

root@k8s-master01:~/k8s/web/dockerfile/tomcat_web# chmod +x *.sh
root@k8s-master01:~/k8s/web/dockerfile/tomcat_web# vim build.sh
#!/bin/bash
TAG=$1
docker build -t harbor.cropy.cn/cropy/tomcat-app1:${TAG} .
sleep 3
docker push harbor.cropy.cn/cropy/tomcat-app1:${TAG}

root@k8s-master01:~/k8s/web/dockerfile/tomcat_web# sh build.sh v1

root@k8s-master01:~/k8s/web/dockerfile/tomcat_web# echo "tomcat webapps v2" > index.html
root@k8s-master01:~/k8s/web/dockerfile/tomcat_web# tar czf app1.tar.gz index.html
root@k8s-master01:~/k8s/web/dockerfile/tomcat_web# bash build.sh v2
```

2. Business k8s YAML

```
root@k8s-master1:~/k8s/08-cicd/jinsique# vim tomcat.yaml
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    app: cropy-tomcat-app1-deployment-label
  name: cropy-tomcat-app1-deployment
  namespace: cropy
spec:
  replicas: 6
  selector:
    matchLabels:
      app: cropy-tomcat-app1-selector
  template:
    metadata:
      labels:
        app: cropy-tomcat-app1-selector
    spec:
      containers:
      - name: cropy-tomcat-app1-container
        image: harbor.cropy.cn/cropy/tomcat-app1:v1
        imagePullPolicy: Always
        ports:
        - containerPort: 8080
          protocol: TCP
          name: http
        resources:
          limits:
            cpu: 1
            memory: "512Mi"
          requests:
            cpu: 500m
            memory: "512Mi"
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: cropy-tomcat-app1-service-label
  name: cropy-tomcat-app1-service
  namespace: cropy
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 8080
    nodePort: 40003
  selector:
    app: cropy-tomcat-app1-selector

root@k8s-master1:~/k8s/08-cicd/jinsique# kubectl apply -f tomcat.yaml
```

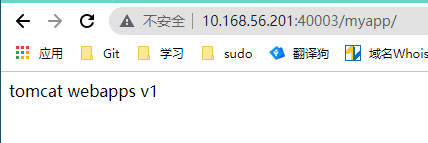

3. 测试访问(v1版本)

4. 升级版本并快速pause

root@k8s-master1:~/k8s/08-cicd/jinsique# kubectl get deploy -n cropy NAME READY UP-TO-DATE AVAILABLE AGE cropy-tomcat-app1-deployment 6/6 6 6 7m2s

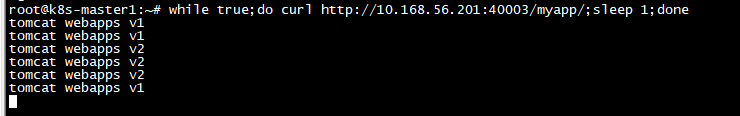

root@k8s-master1:~/k8s/08-cicd/jinsique# kubectl set image deployment/cropy-tomcat-app1-deployment cropy-tomcat-app1-container=harbor.cropy.cn/cropy/tomcat-app1:v2 -n cropy && kubectl rollout pause deployment cropy-tomcat-app1-deployment -n cropy

5. 另起终端查看

root@k8s-master1:~# while true;do curl http://10.168.56.201:40003/myapp/;sleep 1;done tomcat webapps v1

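6. If everything looks fine, resume the rollout

```
root@k8s-master1:~/k8s/08-cicd/jinsique# kubectl rollout resume deployment cropy-tomcat-app1-deployment -n cropy
```

7. If there is a problem, roll back to the previous version instead. kubectl refuses to roll back a paused Deployment, so resume it first:

```
root@k8s-master1:~/k8s/08-cicd/jinsique# kubectl rollout resume deployment cropy-tomcat-app1-deployment -n cropy
root@k8s-master1:~/k8s/08-cicd/jinsique# kubectl rollout undo deployment cropy-tomcat-app1-deployment -n cropy
```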
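While the rollout is paused, the curl loop from step 5 returns a mix of v1 and v2 answers. A small sketch for summarising a batch of them, assuming each response body contains `v1` or `v2` the way the `index.html` files built in step 1 do; `tally_versions` is a hypothetical name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: count how many responses (one per line on stdin)
# came from v1 pods and how many from v2 pods.
tally_versions() {
  awk '
    /v1/ { v1++ }
    /v2/ { v2++ }
    END { printf "v1=%d v2=%d\n", v1, v2 }
  '
}
```

Usage: `for i in $(seq 20); do curl -s http://10.168.56.201:40003/myapp/; done | tally_versions`. A v2 share that roughly matches the fraction of updated pods suggests the canary is receiving traffic as expected.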

<a name="qxMl7"></a>

## Code upgrade and rollback with k8s, Jenkins and GitLab

<a name="okr9F"></a>

### Manually deploying different versions of the project

1. Create one business Deployment per version

```
root@k8s-master1:~/k8s/08-cicd/jinsique/cicd# cat tomcat-k8s-v1.yaml
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    app: cropy-tomcat-app1-deployment-label-v1
  name: cropy-tomcat-app1-deployment-v1
  namespace: cropy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: cropy-tomcat-app1-selector
      version: v1
  template:
    metadata:
      labels:
        app: cropy-tomcat-app1-selector
        version: v1
    spec:
      containers:
      - name: cropy-tomcat-app1-container
        image: harbor.cropy.cn/cropy/tomcat-app1:v1
        imagePullPolicy: Always
        ports:
        - containerPort: 8080
          protocol: TCP
          name: http
        resources:
          limits:
            cpu: 1
            memory: "512Mi"
          requests:
            cpu: 500m
            memory: "512Mi"

root@k8s-master1:~/k8s/08-cicd/jinsique/cicd# cat tomcat-k8s-v2.yaml
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    app: cropy-tomcat-app1-deployment-label-v2
    version: v2
  name: cropy-tomcat-app1-deployment-v2
  namespace: cropy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: cropy-tomcat-app1-selector
      version: v2
  template:
    metadata:
      labels:
        app: cropy-tomcat-app1-selector
        version: v2
    spec:
      containers:
      - name: cropy-tomcat-app1-container
        image: harbor.cropy.cn/cropy/tomcat-app1:v2
        imagePullPolicy: Always
        ports:
        - containerPort: 8080
          protocol: TCP
          name: http
        resources:
          limits:
            cpu: 1
            memory: "512Mi"
          requests:
            cpu: 500m
            memory: "512Mi"
```

2. The Service chooses which version receives requests through the `version` label in its selector (for example `version: v1`); the service.yaml below currently selects `v2`:

```
root@k8s-master1:~/k8s/08-cicd/jinsique/cicd# cat service.yaml
kind: Service
apiVersion: v1
metadata:
  labels:
    app: cropy-tomcat-app1-service-label
  name: cropy-tomcat-app1-service
  namespace: cropy
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 8080
    nodePort: 40003
  selector:
    app: cropy-tomcat-app1-selector
    version: v2
```

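- Ways to implement a canary release:
  - Run the v1 and v2 Deployments at the same time and control each version's share of pods through its replica count.
  - Leave `version` out of the Service selector; the Service then matches the pods of both versions, so v1 and v2 coexist behind it.

Once one version is the one to keep, adjust the Service by adding `version` to its selector, so that all requests are forwarded to that version.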
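Instead of editing service.yaml and re-applying it, the selector can also be switched in place with `kubectl patch`. The sketch below only builds the patch body; the label values mirror service.yaml above and `selector_patch` is a hypothetical helper. In a merge patch, setting a key to `null` removes it, which puts both versions back behind the Service:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a merge patch for the Service selector.
#   selector_patch v2  -> pin all traffic to version v2
#   selector_patch     -> drop the version key so v1 and v2 share traffic
selector_patch() {
  local version=$1
  if [ -n "$version" ]; then
    printf '{"spec":{"selector":{"app":"cropy-tomcat-app1-selector","version":"%s"}}}\n' "$version"
  else
    # null deletes the key when the patch is applied
    printf '{"spec":{"selector":{"version":null}}}\n'
  fi
}
```

Usage: `kubectl patch service cropy-tomcat-app1-service -n cropy -p "$(selector_patch v2)"`.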

3. Deploy and verify

```
root@k8s-master1:~/k8s/08-cicd/jinsique/cicd# kubectl get svc -n cropy
NAME                        TYPE       CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
cropy-tomcat-app1-service   NodePort   10.100.206.160   <none>        80:40003/TCP   4m49s
root@k8s-master1:~/k8s/08-cicd/jinsique/cicd# kubectl get ep -n cropy
NAME                        ENDPOINTS             AGE
cropy-tomcat-app1-service   10.200.135.209:8080   4m53s
root@k8s-master1:~/k8s/08-cicd/jinsique/cicd# while true;do curl http://10.168.56.201:40003/myapp/index.html && sleep 0.5;done   # test
```

### Deploying Jenkins

- Download: https://mirrors.tuna.tsinghua.edu.cn/jenkins/debian-stable/jenkins_2.319.2_all.deb

- Official documentation: https://www.jenkins.io/doc/

- IP: 10.168.56.104

- OS: Ubuntu 20.04 LTS

```
root@k8s-jenkins:~# mkdir /data/apps/jenkins -p && cd /data/apps/jenkins
root@k8s-jenkins:/data/apps/jenkins# wget https://mirrors.tuna.tsinghua.edu.cn/jenkins/debian-stable/jenkins_2.319.2_all.deb
root@k8s-jenkins:/data/apps/jenkins# apt update -y
root@k8s-jenkins:/data/apps/jenkins# apt install openjdk-11-jdk daemon nginx -y
root@k8s-jenkins:/data/apps/jenkins# dpkg -i jenkins_2.319.2_all.deb
```

#### Configuring Jenkins

1. Stop the Jenkins service

```
root@k8s-jenkins:~# systemctl stop jenkins.service
```

2. Configure Jenkins: run it as root, keep its home and log under /data/apps, and disable CSRF protection

```
root@k8s-jenkins:~# vim /etc/default/jenkins
JAVA_ARGS="-Djava.awt.headless=true -Dhudson.security.csrf.GlobalCrumbIssuerConfiguration.DISABLE_CSRF_PROTECTION=true"
JENKINS_USER=root
JENKINS_GROUP=root
JENKINS_HOME=/data/apps/$NAME
JENKINS_LOG=/data/apps/jenkins/log/$NAME.log
root@k8s-jenkins:~# systemctl start jenkins.service
```

3. Speed up plugin downloads by proxying them to the TUNA mirror

```
root@k8s-jenkins:~# vim /etc/nginx/conf.d/jenkins-proxy.conf
server {
    listen 80;
    server_name updates.jenkins-ci.org;
    location /download/plugins {
        proxy_set_header Host mirrors.tuna.tsinghua.edu.cn;
        rewrite ^/download/plugins/(.*)$ /jenkins/plugins/$1 break;
        proxy_pass https://mirrors.tuna.tsinghua.edu.cn;
    }
}

root@k8s-jenkins:~# echo "127.0.0.1 updates.jenkins-ci.org" >> /etc/hosts
root@k8s-jenkins:~# systemctl restart nginx
root@k8s-jenkins:~# systemctl start jenkins.service
```

4. 配置jenkins

root@k8s-jenkins:~# cat /data/apps/jenkins/secrets/initialAdminPassword e39a991e0a8c4c0eab0b348a8bf66881

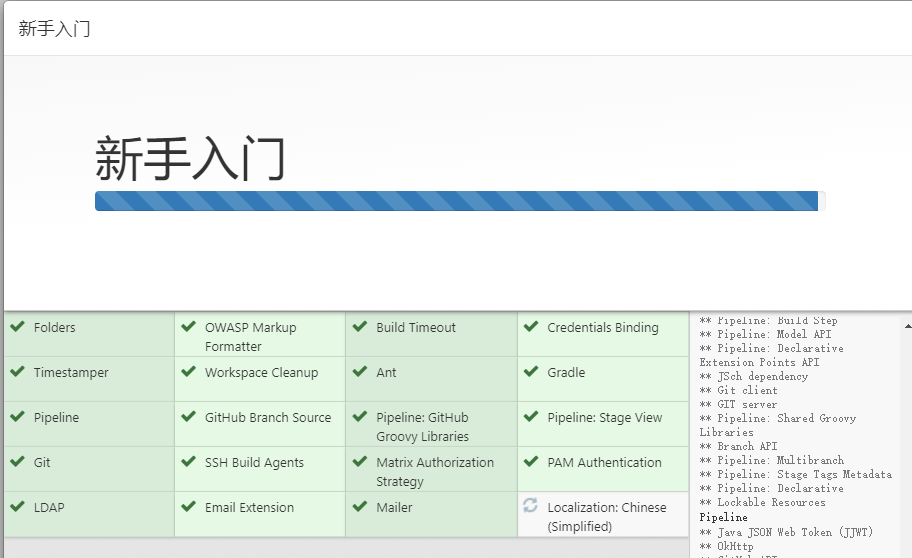

选择推荐插件安装即可<br />
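The rewrite rule from step 3 can be checked offline. This sketch re-implements the same path mapping in shell (nginx itself is not involved, and `mirror_url` is a hypothetical name) so you can see which mirror URL a plugin download request ends up on:

```bash
#!/usr/bin/env bash
# Hypothetical helper mirroring jenkins-proxy.conf:
#   rewrite ^/download/plugins/(.*)$ /jenkins/plugins/$1 break;
#   proxy_pass https://mirrors.tuna.tsinghua.edu.cn;
# Prints the upstream URL for a request path, or fails if the rule does not apply.
mirror_url() {
  local path=$1
  case "$path" in
    /download/plugins/*)
      echo "https://mirrors.tuna.tsinghua.edu.cn/jenkins/plugins/${path#/download/plugins/}"
      ;;
    *)
      # outside the /download/plugins/ rewrite: no mirror mapping
      return 1
      ;;
  esac
}
```

For example, `mirror_url /download/plugins/git/4.10.2/git.hpi` (the plugin path is only an example) prints `https://mirrors.tuna.tsinghua.edu.cn/jenkins/plugins/git/4.10.2/git.hpi`. Requests outside that prefix, such as the update-center metadata, are not rewritten by this rule.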

<a name="T3SJV"></a>

### Deploying GitLab

- Download: https://mirrors.tuna.tsinghua.edu.cn/gitlab-ce/ubuntu/pool/focal/main/g/gitlab-ce/gitlab-ce_14.7.0-ce.0_amd64.deb

- IP: 10.168.56.103

- OS: Ubuntu 20.04 LTS

```
root@k8s-gitlab:~# mkdir -p /data/apps/gitlab-ce
root@k8s-gitlab:~# cd /data/apps/gitlab-ce
root@k8s-gitlab:/data/apps/gitlab-ce# wget https://mirrors.tuna.tsinghua.edu.cn/gitlab-ce/ubuntu/pool/focal/main/g/gitlab-ce/gitlab-ce_14.7.0-ce.0_amd64.deb
root@k8s-gitlab:/data/apps/gitlab-ce# apt update -y && dpkg -i gitlab-ce_14.7.0-ce.0_amd64.deb
```

<a name="Hmcf9"></a>

#### Configuring GitLab

1. Edit the configuration and run reconfigure

```
root@k8s-gitlab:/data/apps/gitlab-ce# vim /etc/gitlab/gitlab.rb
external_url 'http://10.168.56.103'
root@k8s-gitlab:/data/apps/gitlab-ce# gitlab-ctl reconfigure
```

2. When reconfigure finishes, read the initial root password

```
root@k8s-gitlab:/data/apps/gitlab-ce# cat /etc/gitlab/initial_root_password
```

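3. After the first login, change the password at [http://10.168.56.103/-/profile/password/edit](http://10.168.56.103/-/profile/password/edit).

4. Switch the interface language to Chinese at [http://10.168.56.103/-/profile/preferences](http://10.168.56.103/-/profile/preferences).

5. In the admin area [http://10.168.56.103/admin](http://10.168.56.103/admin), create the user and the project and grant permissions:

   1. Create the user devops and set its password to qwer1234@.

   2. Create the project group devops and set the group URL.

   3. Create a project and commit an index.html snippet.

   4. In the admin area, grant the devops user access to the project [http://10.168.56.103/cropy-devops/devops.git](http://10.168.56.103/cropy-devops/devops.git).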

6. Test cloning the code as devops

a. The devops user must first log in through the web UI and change its password; until then cloning fails even though the password and permissions are set (first attempt below), and it succeeds afterwards (second attempt).

```
root@k8s-jenkins:/data# git clone http://10.168.56.103/cropy-devops/devops.git
Cloning into 'devops'...
Username for 'http://10.168.56.103': devops
Password for 'http://devops@10.168.56.103':
remote: HTTP Basic: Access denied
fatal: Authentication failed for 'http://10.168.56.103/cropy-devops/devops.git/'
root@k8s-jenkins:/data# git clone http://10.168.56.103/cropy-devops/devops.git
Cloning into 'devops'...
Username for 'http://10.168.56.103': devops
Password for 'http://devops@10.168.56.103':
remote: Enumerating objects: 12, done.
remote: Counting objects: 100% (12/12), done.
remote: Compressing objects: 100% (8/8), done.
remote: Total 12 (delta 0), reused 0 (delta 0), pack-reused 0
Unpacking objects: 100% (12/12), 1.03 KiB | 75.00 KiB/s, done.
```

b. Add and push code

```
root@k8s-jenkins:/data# cd devops/
root@k8s-jenkins:/data/devops# ls
index.html  README.md
root@k8s-jenkins:/data/devops# echo "app version 1.0.2" >> index.html
root@k8s-jenkins:/data/devops# git add .
root@k8s-jenkins:/data/devops# git commit -m "add 1.0.2"
[main dd912b5] add 1.0.2
 2 files changed, 1 insertion(+), 3 deletions(-)
 delete mode 100644 "\\"
root@k8s-jenkins:/data/devops# git push
Username for 'http://10.168.56.103': devops
Password for 'http://devops@10.168.56.103':
Enumerating objects: 5, done.
Counting objects: 100% (5/5), done.
Delta compression using up to 4 threads
Compressing objects: 100% (3/3), done.
Writing objects: 100% (3/3), 319 bytes | 319.00 KiB/s, done.
Total 3 (delta 1), reused 0 (delta 0)
To http://10.168.56.103/cropy-devops/devops.git
   79672e5..dd912b5  main -> main
```

<a name="yB5SR"></a>

### Integrating Jenkins with GitLab

<a name="GsSys"></a>

#### Demo: configuration walkthrough

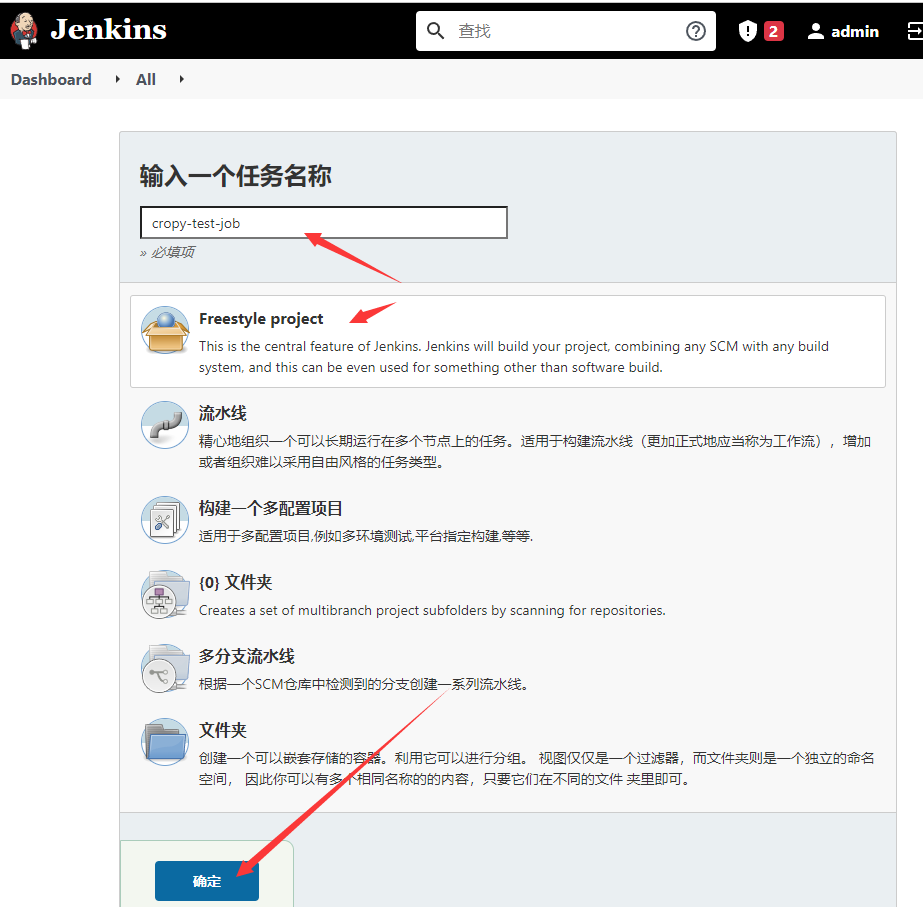

1. 配置freestyle job

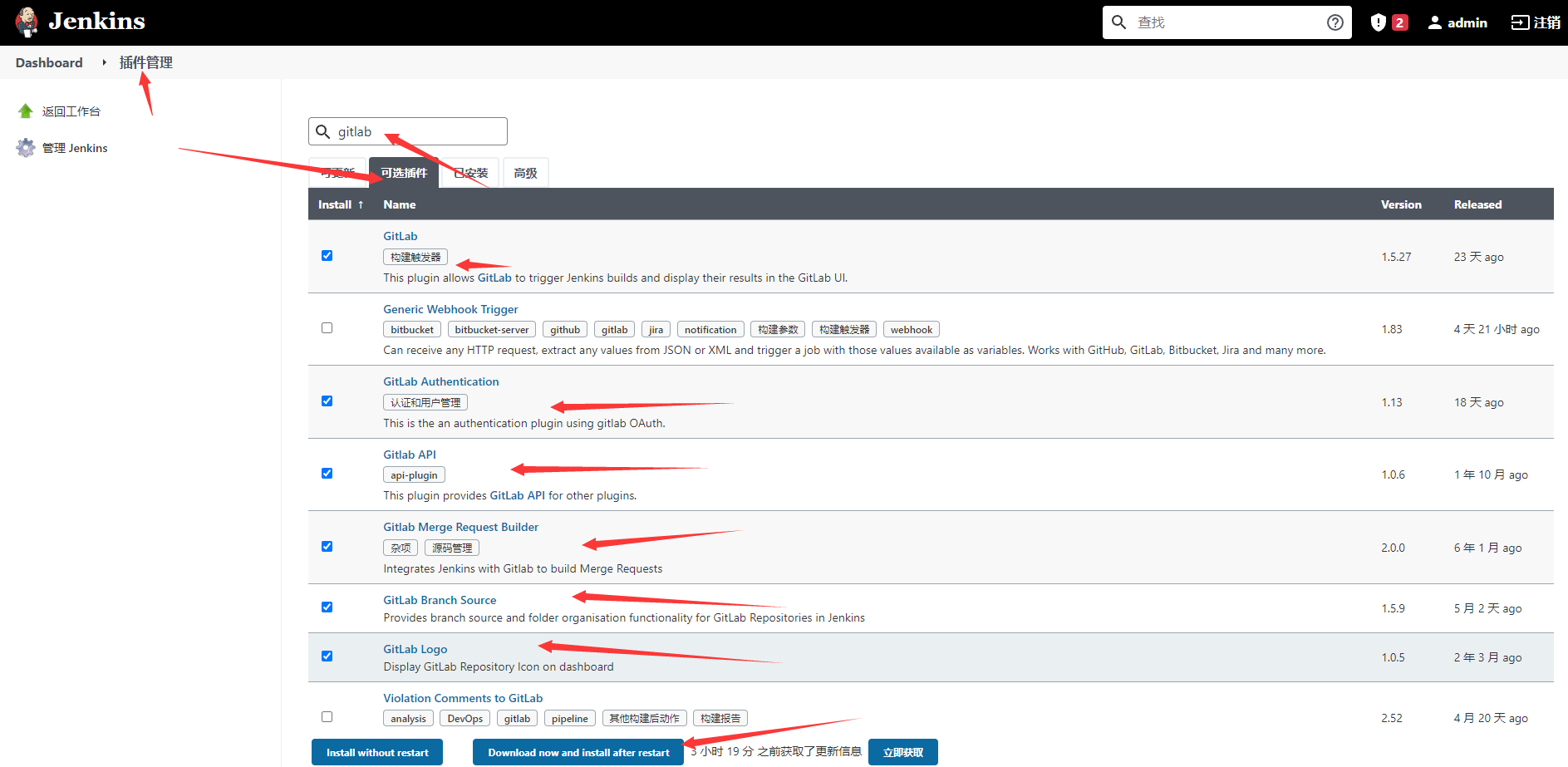

2. 安装jenkins的gitlab相关插件

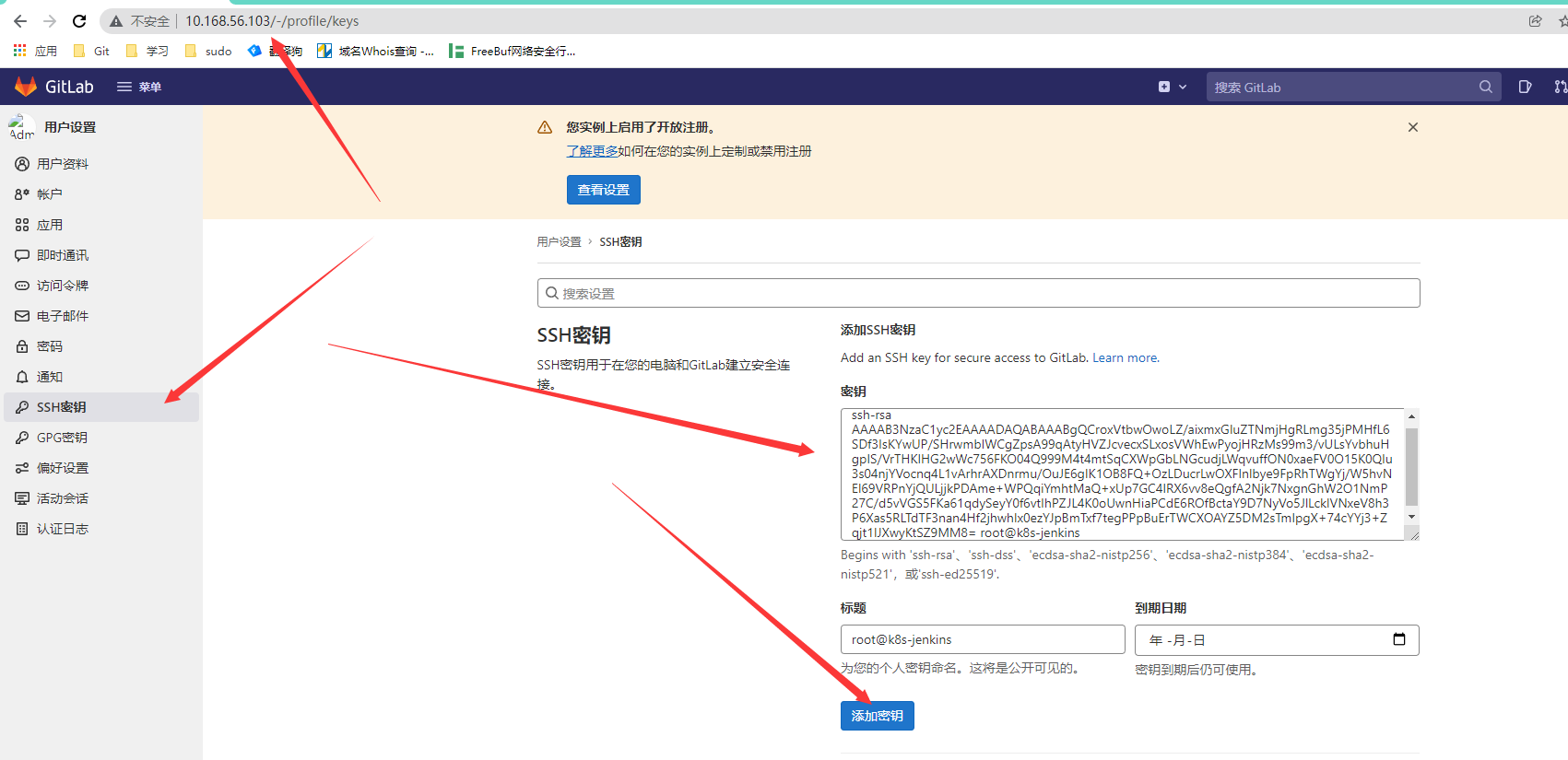

3. jenkins服务器生成公钥

root@k8s-jenkins:~# ssh-keygen root@k8s-jenkins:~# cat .ssh/id_rsa.pub ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQCroxVtbwOwoLZ/aixmxGIuZTNmjHgRLmg35jPMHfL6SDf3IsKYwUP/SHrwmbIWCgZpsA99qAtyHVZJcvecxSLxosVWhEwPyojHRzMs99m3/vULsYvbhuHgpIS/VrTHKIHG2wWc756FKO04Q999M4t4mtSqCXWpGbLNGcudjLWqvuffON0xaeFV0O15K0QIu3s04njYVocnq4L1vArhrAXDnrmu/OuJE6gIK1OB8FQ+OzLDucrLwOXFInlbye9FpRhTWgYj/W5hvNEl69VRPnYjQULjjkPDAme+WPQqiYmhtMaQ+xUp7GC4IRX6vv8eQgfA2Njk7NxgnGhW2O1NmP27C/d5vVGS5FKa61qdySeyY0f6vtIhPZJL4K0oUwnHiaPCdE6ROfBctaY9D7NyVo5JlLcklVNxeV8h3P6Xas5RLTdTF3nan4Hf2jhwhIx0ezYJpBmTxf7tegPPpBuErTWCXOAYZ5DM2sTmIpgX+74cYYj3+Zqjt1IJXwyKtSZ9MM8= root@k8s-jenkins

4. 将jenkins公钥放到gitlab ssh密钥

1. [http://10.168.56.103/-/profile/keys](http://10.168.56.103/-/profile/keys)

5. 测试jenkins克隆项目(免密)

root@k8s-jenkins:~# git clone git@10.168.56.103:cropy-devops/devops.git Cloning into ‘devops’… The authenticity of host ‘10.168.56.103 (10.168.56.103)’ can’t be established. ECDSA key fingerprint is SHA256:YqNnHoTCd4PwAUCvVgB4bfmYsMBwcEBBzShSWFYuyTc. Are you sure you want to continue connecting (yes/no/[fingerprint])? yes Warning: Permanently added ‘10.168.56.103’ (ECDSA) to the list of known hosts.

6. jenkins服务器创建测试脚本

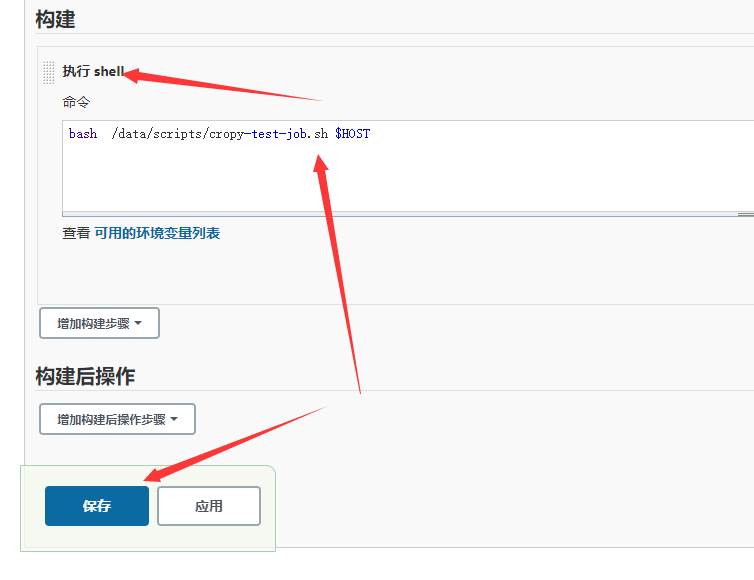

root@k8s-jenkins:~# mkdir /data/scripts root@k8s-jenkins:~# cd /data/scripts root@k8s-jenkins:/data/scripts# vim cropy-test-job.sh

!/bin/bash

echo $1

root@k8s-jenkins:/data/scripts# chmod +x cropy-test-job.sh

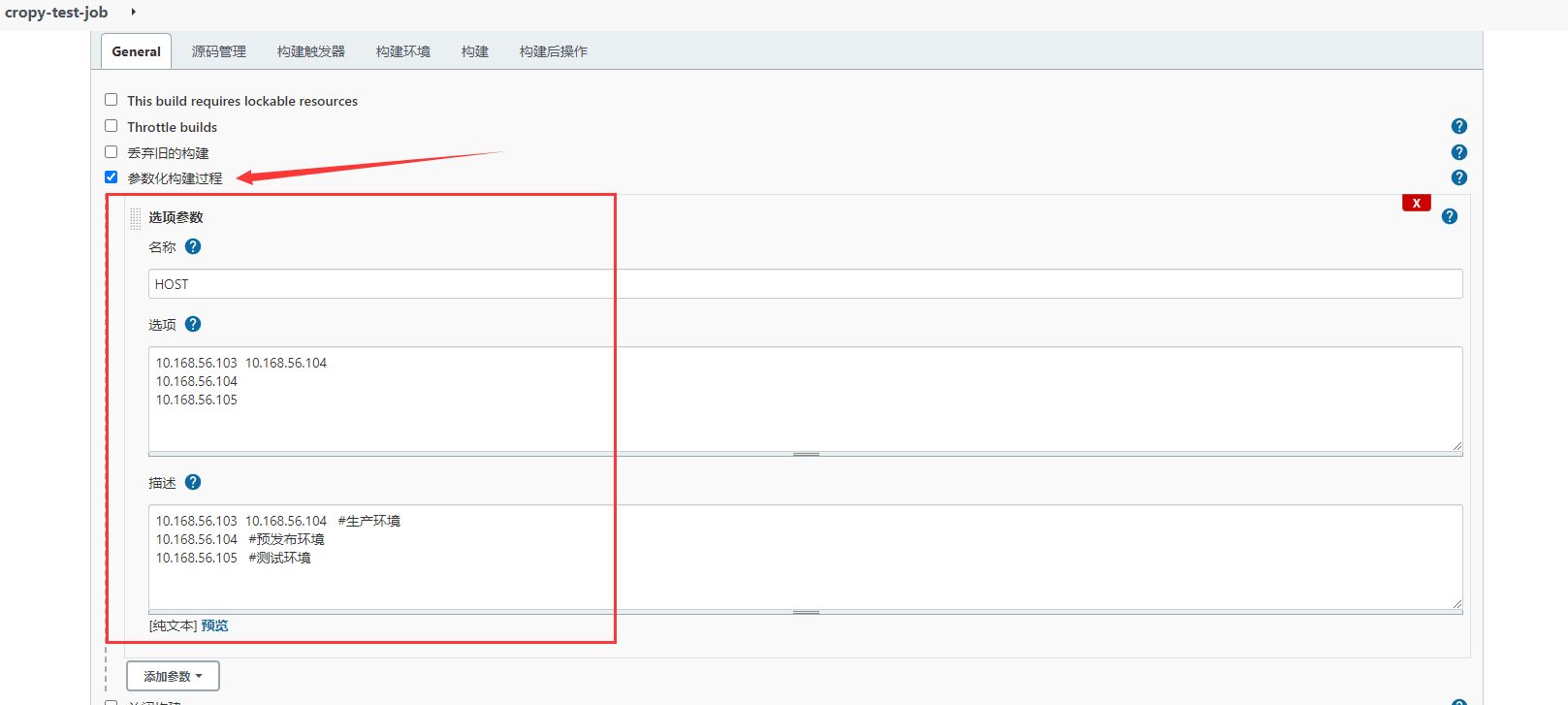

7. jenkins 创建参数化构建流程

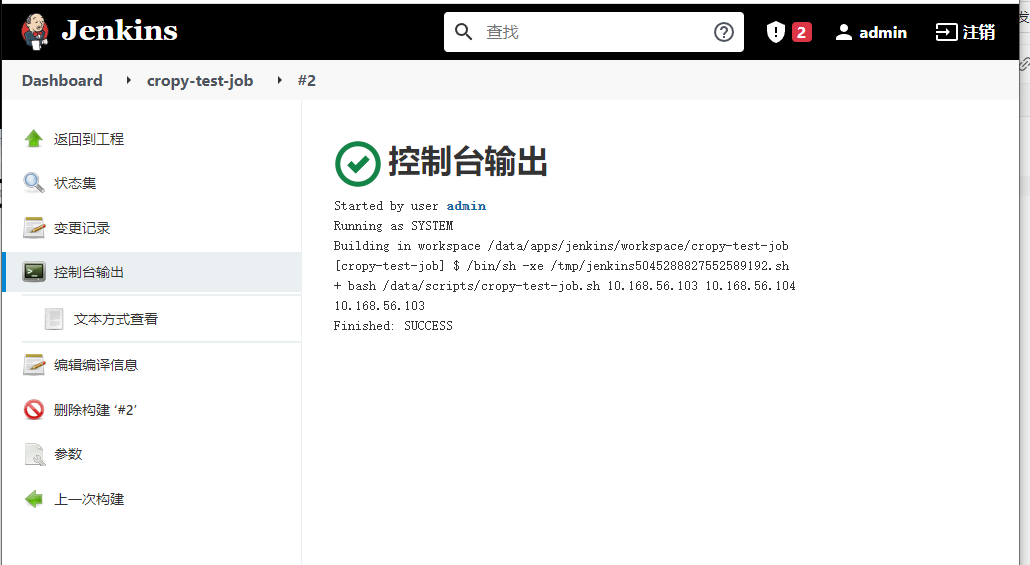

8. 构建
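The test script in step 6 just prints whatever Jenkins passes as `$1`. A slightly stricter sketch of the same idea fails the build when the parameter is missing; it is wrapped in a function (hypothetical name `cropy_test_job`) so it can be tried without Jenkins:

```bash
#!/usr/bin/env bash
# Hypothetical stricter variant of cropy-test-job.sh: echo the build
# parameter, or fail (non-zero status fails the Jenkins build) if it is empty.
cropy_test_job() {
  if [ -z "$1" ]; then
    echo "usage: cropy-test-job.sh <parameter>" >&2
    return 1
  fi
  echo "received parameter: $1"
}
```

Jenkins exposes build parameters to shell build steps as environment variables, so the build step would call the script with something like `bash /data/scripts/cropy-test-job.sh "$PARAM"`, where `PARAM` stands for whatever name the parameter was given in step 7.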

<a name="VMgD7"></a>

#### Configuring the k8s project release pipeline

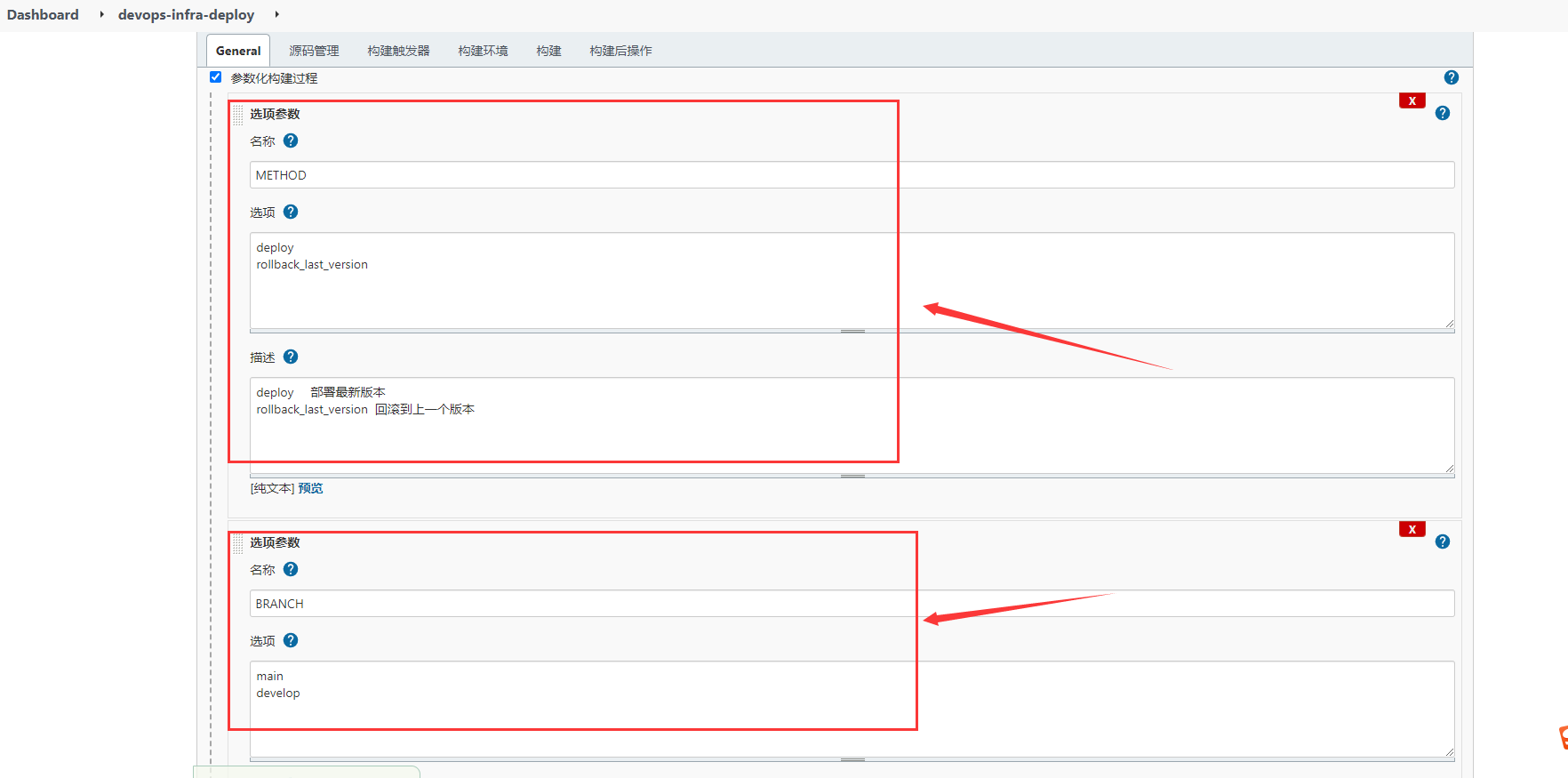

1. Create a new freestyle job for deploying to the k8s master node: devops-infra-deploy

2. Configure two choice parameters (the script below receives them as `$1`, the action, and `$2`, the branch)

3. Add the deployment script on the Jenkins server

```
root@k8s-jenkins:~# cd /data/scripts/
root@k8s-jenkins:/data/scripts# vim deploy-k8s.sh
#!/bin/bash
# Version: v1

# Record the time the script starts
starttime=$(date +'%Y-%m-%d %H:%M:%S')

# Variables
SHELL_DIR="/data/scripts"
SHELL_NAME=$(basename "$0")
K8S_CONTROLLER1="10.168.56.201"
K8S_CONTROLLER2="10.168.56.202"
DATE=$(date +%Y-%m-%d_%H_%M_%S)
METHOD=$1
Branch=$2

if test -z "$Branch"; then
  Branch=develop
fi

function Code_Clone(){
  Git_URL="git@10.168.56.103:cropy-devops/devops.git"
  DIR_NAME=$(echo ${Git_URL} | awk -F "/" '{print $2}' | awk -F "." '{print $1}')
  DATA_DIR="/data/gitdata/cropy"
  Git_Dir="${DATA_DIR}/${DIR_NAME}"
  cd ${DATA_DIR} && echo "Removing the previous version's code and fetching the latest code of the branch" && sleep 1 && rm -rf ${DIR_NAME}
  echo "Fetching code from branch ${Branch}" && sleep 1
  git clone -b ${Branch} ${Git_URL}
  echo "Branch ${Branch} cloned, starting the build" && sleep 1
  #cd ${Git_Dir} && mvn clean package
  #echo "Build finished, replacing IP addresses and similar settings with the test environment values"
  #
  sleep 1
  cd ${Git_Dir}
  tar czf ${DIR_NAME}.tar.gz ./*
}

# Copy the packed archive to the k8s controller
function Copy_File(){
  echo "Archive packed, copying it to the k8s controller ${K8S_CONTROLLER1}" && sleep 1
  scp ${Git_Dir}/${DIR_NAME}.tar.gz root@${K8S_CONTROLLER1}:/root/k8s/08-cicd/jenkins_deploy
  echo "Archive copied, ${K8S_CONTROLLER1} will now build the Docker image" && sleep 1
}

# Run the build script on the controller to build and push the image
function Make_Image(){
  echo "Building the Docker image and pushing it to Harbor" && sleep 1
  ssh root@${K8S_CONTROLLER1} "cd /root/k8s/08-cicd/jenkins_deploy && bash build-command.sh ${DATE}"
  echo "Docker image built and pushed to Harbor" && sleep 1
}

# Update the image tag in the k8s YAML on the controller, so the YAML stays in sync with the version running in k8s
function Update_k8s_yaml(){
  echo "Updating the image version in the k8s YAML file" && sleep 1
  ssh root@${K8S_CONTROLLER1} "cd /root/k8s/08-cicd/jenkins_deploy && sed -i 's/image: harbor.cropy.*/image: harbor.cropy.cn\/cropy\/tomcat-app1:${DATE}/g' tomcat-k8s.yaml"
  echo "Image version in the k8s YAML updated, now updating the container image" && sleep 1
}

# Update the container image in k8s from the controller. Two options: set the image version directly, or apply the modified YAML file
function Update_k8s_container(){
  # Method 1
  ssh root@${K8S_CONTROLLER1} "kubectl set image deployment/cropy-tomcat-app1-deployment cropy-tomcat-app1-container=harbor.cropy.cn/cropy/tomcat-app1:${DATE} -n cropy"
  # Method 2 (method 1 is recommended)
  #ssh root@${K8S_CONTROLLER1} "cd /root/k8s/08-cicd/jenkins_deploy && kubectl apply -f tomcat-k8s.yaml --record"
  echo "k8s image update finished" && sleep 1
  echo "Current business image: harbor.cropy.cn/cropy/tomcat-app1:${DATE}"
  # Compute the total run time of the script; drop the next four lines if not needed
  endtime=$(date +'%Y-%m-%d %H:%M:%S')
  start_seconds=$(date --date="$starttime" +%s)
  end_seconds=$(date --date="$endtime" +%s)
  echo "Total time for this image update: $((end_seconds-start_seconds))s"
}

# Roll back to the previous version using k8s's built-in revision history
function rollback_last_version(){
  echo "Rolling back to the previous version"
  ssh root@${K8S_CONTROLLER1} "kubectl rollout undo deployment/cropy-tomcat-app1-deployment -n cropy"
  sleep 1
  echo "Rollback to the previous version executed"
}

# Usage help
usage(){
  echo "To deploy: ${SHELL_DIR}/${SHELL_NAME} deploy [branch]"
  echo "To roll back to the previous version: ${SHELL_DIR}/${SHELL_NAME} rollback_last_version"
}

# Main function
main(){
  case ${METHOD} in
    deploy)
      Code_Clone;
      Copy_File;
      Make_Image;
      Update_k8s_yaml;
      Update_k8s_container;
      ;;
    rollback_last_version)
      rollback_last_version;
      ;;
    *)
      usage;
  esac;
}

main $1 $2

root@k8s-jenkins:/data/scripts# chmod +x deploy-k8s.sh
root@k8s-jenkins:/data/scripts# mkdir /data/gitdata/cropy -p
```

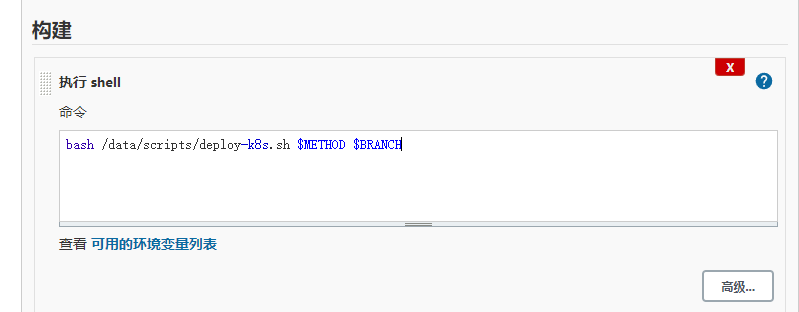

4. Configure the shell build step in Jenkins

5. Prepare the files on the k8s-master node

```
root@k8s-master1:~# mkdir /root/k8s/08-cicd/jenkins_deploy && cd /root/k8s/08-cicd/jenkins_deploy
root@k8s-master1:~/k8s/08-cicd/jenkins_deploy# ll
total 68
drwxr-xr-x 2 root root  4096 Jan 30 18:48 ./
drwxr-xr-x 5 root root  4096 Jan 30 17:58 ../
-rw-r--r-- 1 root root   142 Jan 30 17:59 build-command.sh
-rwxr-x--x 1 root root 25801 Jan 30 17:59 catalina.sh
-rw-r--r-- 1 root root   231 Jan 30 18:48 devops.tar.gz
-rw-r--r-- 1 root root   334 Jan 30 18:22 Dockerfile
-rw-r--r-- 1 root root    18 Jan 30 17:59 index.html
-rwxr-xr-x 1 root root    82 Jan 30 17:59 run_tomcat.sh
-rw------- 1 root root  7602 Jan 30 17:59 server.xml
-rw-r--r-- 1 root root  1053 Jan 30 18:48 tomcat-k8s.yaml

root@k8s-master1:~/k8s/08-cicd/jenkins_deploy# cat Dockerfile
FROM harbor.cropy.cn/baseimages/tomcat-base:v8.5.72

ADD catalina.sh /apps/tomcat/bin/catalina.sh
ADD server.xml /apps/tomcat/conf/server.xml
ADD devops.tar.gz /data/tomcat/webapps/myapp/
ADD run_tomcat.sh /apps/tomcat/bin/run_tomcat.sh
RUN chown -R nginx.nginx /data/ /apps/

EXPOSE 8080 8443
CMD ["/apps/tomcat/bin/run_tomcat.sh"]

root@k8s-master1:~/k8s/08-cicd/jenkins_deploy# cat build-command.sh
#!/bin/bash
TAG=$1
docker build -t harbor.cropy.cn/cropy/tomcat-app1:${TAG} .
sleep 3
docker push harbor.cropy.cn/cropy/tomcat-app1:${TAG}

root@k8s-master1:~/k8s/08-cicd/jenkins_deploy# cat run_tomcat.sh
#!/bin/bash
su - nginx -c "/apps/tomcat/bin/catalina.sh start"
tail -f /etc/hosts

root@k8s-master1:~/k8s/08-cicd/jenkins_deploy# cat tomcat-k8s.yaml
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    app: cropy-tomcat-app1-deployment-label
  name: cropy-tomcat-app1-deployment
  namespace: cropy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: cropy-tomcat-app1-selector
  template:
    metadata:
      labels:
        app: cropy-tomcat-app1-selector
    spec:
      containers:
      - name: cropy-tomcat-app1-container
        image: harbor.cropy.cn/cropy/tomcat-app1:2022-01-30_18_48_10
        imagePullPolicy: Always
        ports:
        - containerPort: 8080
          protocol: TCP
          name: http
        resources:
          limits:
            cpu: 1
            memory: "512Mi"
          requests:
            cpu: 500m
            memory: "512Mi"
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: cropy-tomcat-app1-service-label
  name: cropy-tomcat-app1-service
  namespace: cropy
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 8080
    nodePort: 40003
  selector:
    app: cropy-tomcat-app1-selector
```

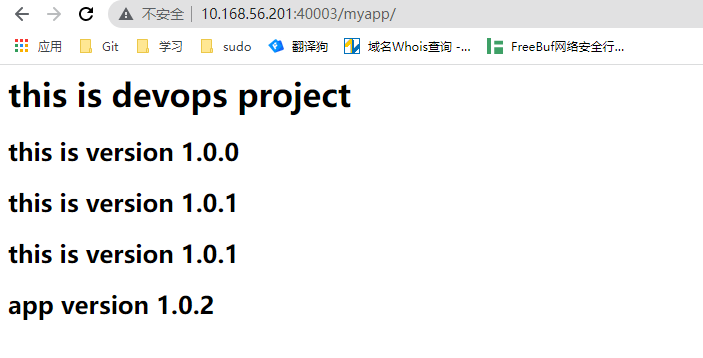

- Run the build in Jenkins (deploy)

- Test in a browser

- Run the rollback in Jenkins (rollback)

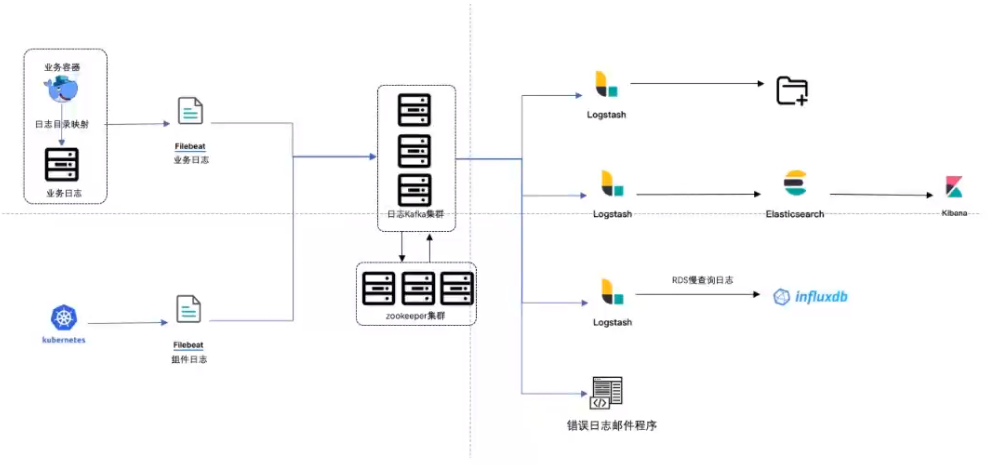
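Two text-processing steps inside deploy-k8s.sh can be exercised locally, without Jenkins or the cluster. The sketch below re-implements them as standalone functions with hypothetical names: `repo_dir_name` derives DIR_NAME with parameter expansion, which, unlike the awk pipeline, also copes with `http://` clone URLs and repository names that contain dots; `set_image_tag` performs the same `sed` edit as Update_k8s_yaml; `make_tag` produces tags shaped like `2022-01-30_18_48_10`. It assumes GNU sed (`sed -i` without a backup suffix), as on Ubuntu.

```bash
#!/usr/bin/env bash
# Hypothetical helpers mirroring parts of deploy-k8s.sh.

# Repository directory name from an SSH or HTTP clone URL.
repo_dir_name() {
  local url=$1
  local name=${url##*/}   # drop everything up to the last "/"
  name=${name##*:}        # scp-style URLs without "/" (git@host:repo.git)
  echo "${name%.git}"     # drop a trailing .git
}

# Rewrite every "image: harbor.cropy..." line in a YAML file to the given tag.
set_image_tag() {
  local file=$1 tag=$2
  sed -i "s#image: harbor\.cropy.*#image: harbor.cropy.cn/cropy/tomcat-app1:${tag}#" "$file"
}

# Timestamp tag such as 2022-01-30_18_48_10.
make_tag() {
  date +%Y-%m-%d_%H_%M_%S
}
```

For example, `repo_dir_name git@10.168.56.103:cropy-devops/devops.git` prints `devops`, the same DIR_NAME the script computes.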

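## k8s log collection with ELK

- Official documentation: https://kubernetes.io/zh/docs/concepts/cluster-administration/logging/

- Log types to collect
  - System logs
  - Business logs
  - Application logs

- System logs: /var/log/syslog

- Application logs:
  - error.log
  - access.log: access statistics and analysis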
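As an illustration of the access-statistics use of application logs, here is a sketch that counts requests per HTTP status code. It assumes the common nginx/Apache combined log format, in which the status code is the ninth whitespace-separated field; `status_counts` is a hypothetical name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: count requests per HTTP status code in combined-format
# access logs. Reads the files given as arguments, or stdin when none are given.
status_counts() {
  awk '{ count[$9]++ } END { for (s in count) print s, count[s] }' "$@" | sort
}
```

For example, `status_counts /var/log/nginx/access.log` (path assumed) prints one `status count` pair per line.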

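1. Run a DaemonSet in k8s that collects the logs under /var/lib/docker/ on every node.

   - Pros: simple to configure, and simple to maintain later.
   - Cons: the logs are hard to classify by type.

2. Run a log collector, such as filebeat, in every pod. There are two ways to do this:

   1. In a single container of the pod, start the filebeat process first and then the web service.
   2. Run two containers in the pod: one for the web service and one for filebeat.

### Kafka cluster setup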

#### Cluster plan

| Hostname | IP | Services |
| ---- | ---- | ---- |
| kafka01 | 10.168.56.41 | kafka, zookeeper |
| kafka02 | 10.168.56.42 | kafka, zookeeper |
| kafka03 | 10.168.56.43 | kafka, zookeeper |
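Only a few values differ between the three nodes. A sketch that derives them from the plan table, following the convention the configuration below uses (ZooKeeper `myid` = the node's position 1 to 3, Kafka `broker.id` = last octet of the IP); `node_settings` and `zk_server_lines` are hypothetical helpers:

```bash
#!/usr/bin/env bash
# Hypothetical helpers: per-node values for the kafka01..kafka03 plan.

# Usage: node_settings <ip> <position in plan>
node_settings() {
  local ip=$1 index=$2
  echo "myid=${index}"                      # content of /data/apps_data/zookeeper/myid
  echo "broker.id=${ip##*.}"                # last octet, e.g. 41
  echo "listeners=PLAINTEXT://${ip}:9092"
}

# server.N lines for zoo.cfg, one per node IP in plan order.
zk_server_lines() {
  local n=1 ip
  for ip in "$@"; do
    echo "server.${n}=${ip}:2888:3888"
    n=$((n + 1))
  done
}
```

For example, `zk_server_lines 10.168.56.41 10.168.56.42 10.168.56.43` prints the three `server.N` lines that appear in each node's zoo.cfg.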

#### Software downloads

- zk: https://dlcdn.apache.org/zookeeper/zookeeper-3.6.3/apache-zookeeper-3.6.3-bin.tar.gz

- kafka: https://www.apache.org/dyn/closer.cgi?path=/kafka/3.1.0/kafka_2.13-3.1.0.tgz

#### Cluster deployment

1. Java environment (all nodes)

```
apt update -y
apt install openjdk-8-jdk -y
```

2. ZooKeeper deployment

1. Deploy zk01

```
root@kafka01:~# mkdir /data/{softs,apps,apps_data/zookeeper,apps_logs} -p
root@kafka01:~# cd /data/softs/
root@kafka01:/data/softs# wget https://dlcdn.apache.org/zookeeper/zookeeper-3.6.3/apache-zookeeper-3.6.3-bin.tar.gz
root@kafka01:/data/softs# tar xf apache-zookeeper-3.6.3-bin.tar.gz -C /data/apps/
root@kafka01:/data/softs# cd /data/apps/ && ln -sv /data/apps/apache-zookeeper-3.6.3-bin /data/apps/zookeeper && cd
root@kafka01:~# echo '1' > /data/apps_data/zookeeper/myid
root@kafka01:~# egrep -v "^$|^#" /data/apps/zookeeper/conf/zoo.cfg
tickTime=2000
initLimit=10
syncLimit=5
dataDir=/data/apps_data/zookeeper
clientPort=2181
maxClientCnxns=1024
autopurge.snapRetainCount=3
autopurge.purgeInterval=1
server.1=10.168.56.41:2888:3888
server.2=10.168.56.42:2888:3888
server.3=10.168.56.43:2888:3888

root@kafka01:~# vim /etc/systemd/system/zookeeper.service
[Unit]
Description=zookeeper.service
After=network.target
ConditionPathExists=/data/apps/zookeeper/conf/zoo.cfg

[Service]
Type=forking
#Environment="PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/local/jdk1.8.0_231/bin"
User=root
Group=root
ExecStart=/data/apps/zookeeper/bin/zkServer.sh start
ExecStop=/data/apps/zookeeper/bin/zkServer.sh stop
# Note: systemd has no ExecStatus= setting; it ignores the next line with a warning
ExecStatus=/data/apps/zookeeper/bin/zkServer.sh status

[Install]
WantedBy=multi-user.target
```

2. Deploy zk02

```
root@kafka02:~# mkdir /data/{softs,apps,apps_data/zookeeper,apps_logs} -p
root@kafka02:~# cd /data/softs/
root@kafka02:/data/softs# wget https://dlcdn.apache.org/zookeeper/zookeeper-3.6.3/apache-zookeeper-3.6.3-bin.tar.gz
root@kafka02:/data/softs# tar xf apache-zookeeper-3.6.3-bin.tar.gz -C /data/apps/
root@kafka02:/data/softs# cd /data/apps/ && ln -sv /data/apps/apache-zookeeper-3.6.3-bin /data/apps/zookeeper && cd
root@kafka02:~# echo '2' > /data/apps_data/zookeeper/myid
root@kafka02:~# egrep -v "^$|^#" /data/apps/zookeeper/conf/zoo.cfg
tickTime=2000
initLimit=10
syncLimit=5
dataDir=/data/apps_data/zookeeper
clientPort=2181
maxClientCnxns=1024
autopurge.snapRetainCount=3
autopurge.purgeInterval=1
server.1=10.168.56.41:2888:3888
server.2=10.168.56.42:2888:3888
server.3=10.168.56.43:2888:3888

root@kafka02:~# vim /etc/systemd/system/zookeeper.service
[Unit]
Description=zookeeper.service
After=network.target
ConditionPathExists=/data/apps/zookeeper/conf/zoo.cfg

[Service]
Type=forking
#Environment="PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/local/jdk1.8.0_231/bin"
User=root
Group=root
ExecStart=/data/apps/zookeeper/bin/zkServer.sh start
ExecStop=/data/apps/zookeeper/bin/zkServer.sh stop
ExecStatus=/data/apps/zookeeper/bin/zkServer.sh status

[Install]
WantedBy=multi-user.target
```

3. Deploy zk03

```
root@kafka03:~# mkdir /data/{softs,apps,apps_data/zookeeper,apps_logs} -p
root@kafka03:~# cd /data/softs/
root@kafka03:/data/softs# wget https://dlcdn.apache.org/zookeeper/zookeeper-3.6.3/apache-zookeeper-3.6.3-bin.tar.gz
root@kafka03:/data/softs# tar xf apache-zookeeper-3.6.3-bin.tar.gz -C /data/apps/
root@kafka03:/data/softs# cd /data/apps/ && ln -sv /data/apps/apache-zookeeper-3.6.3-bin /data/apps/zookeeper && cd
root@kafka03:~# echo '3' > /data/apps_data/zookeeper/myid
root@kafka03:~# egrep -v "^$|^#" /data/apps/zookeeper/conf/zoo.cfg
tickTime=2000
initLimit=10
syncLimit=5
dataDir=/data/apps_data/zookeeper
clientPort=2181
maxClientCnxns=1024
autopurge.snapRetainCount=3
autopurge.purgeInterval=1
server.1=10.168.56.41:2888:3888
server.2=10.168.56.42:2888:3888
server.3=10.168.56.43:2888:3888

root@kafka03:~# vim /etc/systemd/system/zookeeper.service
[Unit]
Description=zookeeper.service
After=network.target
ConditionPathExists=/data/apps/zookeeper/conf/zoo.cfg

[Service]
Type=forking
#Environment="PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/local/jdk1.8.0_231/bin"
User=root
Group=root
ExecStart=/data/apps/zookeeper/bin/zkServer.sh start
ExecStop=/data/apps/zookeeper/bin/zkServer.sh stop
ExecStatus=/data/apps/zookeeper/bin/zkServer.sh status

[Install]
WantedBy=multi-user.target
```

4. Start the service and check its status

```
root@kafka01:~# systemctl start zookeeper.service && systemctl enable zookeeper.service && systemctl status zookeeper.service
root@kafka02:~# systemctl start zookeeper.service && systemctl enable zookeeper.service && systemctl status zookeeper.service
root@kafka03:~# systemctl start zookeeper.service && systemctl enable zookeeper.service && systemctl status zookeeper.service
```
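Once all three nodes are up, a healthy ensemble has exactly one leader and two followers. A sketch that checks this from the combined `zkServer.sh status` output of the nodes, each of which reports a `Mode: leader` or `Mode: follower` line; `zk_ensemble_ok` is a hypothetical name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: read the concatenated `zkServer.sh status` output of all
# nodes on stdin, print the leader/follower counts, and succeed only if there
# is exactly one leader.
zk_ensemble_ok() {
  awk '
    /^Mode: leader/   { leaders++ }
    /^Mode: follower/ { followers++ }
    END {
      printf "leaders=%d followers=%d\n", leaders, followers
      exit (leaders == 1 ? 0 : 1)
    }
  '
}
```

Usage, assuming root SSH access to the three nodes: `for h in 10.168.56.41 10.168.56.42 10.168.56.43; do ssh root@$h /data/apps/zookeeper/bin/zkServer.sh status 2>&1; done | zk_ensemble_ok`.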

3. Kafka environment deployment

1. Install kafka01

```
root@kafka01:~# cd /data/softs/
root@kafka01:/data/softs# wget https://dlcdn.apache.org/kafka/3.1.0/kafka_2.13-3.1.0.tgz && tar xf kafka_2.13-3.1.0.tgz -C /data/apps
root@kafka01:/data/softs# ln -sv /data/apps/kafka_2.13-3.1.0/ /data/apps/kafka
root@kafka01:/data/softs# mkdir /data/apps_data/kafka
root@kafka01:/data/softs# egrep -v "^$|^#" /data/apps/kafka/config/server.properties
broker.id=41
listeners=PLAINTEXT://10.168.56.41:9092
num.network.threads=3
num.io.threads=8
socket.send.buffer.bytes=102400
socket.receive.buffer.bytes=102400
socket.request.max.bytes=104857600
log.dirs=/data/apps_data/kafka
num.partitions=3
num.recovery.threads.per.data.dir=1
offsets.topic.replication.factor=1
transaction.state.log.replication.factor=1
transaction.state.log.min.isr=1
log.retention.hours=168
log.segment.bytes=1073741824
log.retention.check.interval.ms=300000
zookeeper.connect=10.168.56.41:2181,10.168.56.42:2181,10.168.56.43:2181
zookeeper.connection.timeout.ms=18000
group.initial.rebalance.delay.ms=0
auto.create.topics.enable=true

root@kafka01:/data/softs# vim /etc/systemd/system/kafka.service
[Unit]
Description=Apache Kafka server (broker)
#After=network.target zookeeper.service
After=network.target

[Service]
Type=simple
#Environment="PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/local/jdk1.8.0_231/bin"
User=root
Group=root
ExecStart=/data/apps/kafka/bin/kafka-server-start.sh /data/apps/kafka/config/server.properties
ExecStop=/data/apps/kafka/bin/kafka-server-stop.sh

[Install]
WantedBy=multi-user.target
```

2. Install kafka02

```
root@kafka02:~# cd /data/softs/
root@kafka02:/data/softs# wget https://dlcdn.apache.org/kafka/3.1.0/kafka_2.13-3.1.0.tgz && tar xf kafka_2.13-3.1.0.tgz -C /data/apps
root@kafka02:/data/softs# ln -sv /data/apps/kafka_2.13-3.1.0/ /data/apps/kafka
root@kafka02:/data/softs# mkdir /data/apps_data/kafka
root@kafka02:/data/softs# egrep -v "^$|^#" /data/apps/kafka/config/server.properties
broker.id=42
listeners=PLAINTEXT://10.168.56.42:9092
num.network.threads=3
num.io.threads=8
socket.send.buffer.bytes=102400
socket.receive.buffer.bytes=102400
socket.request.max.bytes=104857600
log.dirs=/data/apps_data/kafka
num.partitions=3
num.recovery.threads.per.data.dir=1
offsets.topic.replication.factor=1
transaction.state.log.replication.factor=1
transaction.state.log.min.isr=1
log.retention.hours=168
log.segment.bytes=1073741824
log.retention.check.interval.ms=300000
zookeeper.connect=10.168.56.41:2181,10.168.56.42:2181,10.168.56.43:2181
zookeeper.connection.timeout.ms=18000
group.initial.rebalance.delay.ms=0
auto.create.topics.enable=true

root@kafka02:/data/softs# vim /etc/systemd/system/kafka.service
[Unit]
Description=Apache Kafka server (broker)
#After=network.target zookeeper.service
After=network.target

[Service]
Type=simple
#Environment="PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/local/jdk1.8.0_231/bin"
User=root
Group=root
ExecStart=/data/apps/kafka/bin/kafka-server-start.sh /data/apps/kafka/config/server.properties
ExecStop=/data/apps/kafka/bin/kafka-server-stop.sh

[Install]
WantedBy=multi-user.target
```

3. Install and deploy kafka03

```bash
root@kafka03:~# cd /data/softs/
root@kafka03:/data/softs# wget https://dlcdn.apache.org/kafka/3.1.0/kafka_2.13-3.1.0.tgz && tar xf kafka_2.13-3.1.0.tgz -C /data/apps
root@kafka03:/data/softs# ln -sv /data/apps/kafka_2.13-3.1.0/ /data/apps/kafka
root@kafka03:/data/softs# mkdir /data/apps_data/kafka
root@kafka03:/data/softs# egrep -v '^$|^#' /data/apps/kafka/config/server.properties
broker.id=43
listeners=PLAINTEXT://10.168.56.43:9092
num.network.threads=3
num.io.threads=8
socket.send.buffer.bytes=102400
socket.receive.buffer.bytes=102400
socket.request.max.bytes=104857600
log.dirs=/data/apps_data/kafka
num.partitions=3
num.recovery.threads.per.data.dir=1
offsets.topic.replication.factor=1
transaction.state.log.replication.factor=1
transaction.state.log.min.isr=1
log.retention.hours=168
log.segment.bytes=1073741824
log.retention.check.interval.ms=300000
zookeeper.connect=10.168.56.41:2181,10.168.56.42:2181,10.168.56.43:2181
zookeeper.connection.timeout.ms=18000
group.initial.rebalance.delay.ms=0
auto.create.topics.enable=true
```

```bash
root@kafka03:/data/softs# vim /etc/systemd/system/kafka.service
[Unit]
Description=Apache Kafka server (broker)
#After=network.target zookeeper.service
After=network.target

[Service]
Type=simple
#Environment="PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/local/jdk1.8.0_231/bin"
User=root
Group=root
ExecStart=/data/apps/kafka/bin/kafka-server-start.sh /data/apps/kafka/config/server.properties
ExecStop=/data/apps/kafka/bin/kafka-server-stop.sh

[Install]
WantedBy=multi-user.target
```

4. Start the service and check its status (run on kafka01 through kafka03, one node at a time)

```bash
root@kafka01:/data/softs# systemctl start kafka.service && systemctl enable kafka.service && systemctl status kafka.service
root@kafka02:/data/softs# systemctl start kafka.service && systemctl enable kafka.service && systemctl status kafka.service
root@kafka03:/data/softs# systemctl start kafka.service && systemctl enable kafka.service && systemctl status kafka.service
```
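`systemctl status` only shows that each broker process is running. Each broker also registers itself in ZooKeeper under `/brokers/ids`, which can be listed with the `zookeeper-shell.sh` script shipped in Kafka's `bin` directory. The helper below (`check_broker_ids`, a hypothetical name) is a sketch that assumes the ID list is printed as a bracketed line such as `[41, 42, 43]`:

```bash
# check_broker_ids ID... (hypothetical helper)
# stdin: output of `zookeeper-shell.sh <zk-host:2181> ls /brokers/ids`.
# Assumes the registered IDs appear on a line like "[41, 42, 43]".
check_broker_ids() {
  local ids_line rc=0 id
  # Keep only the last line that looks like a bracketed list
  ids_line=$(grep -E '^\[.*\]$' | tail -n 1)
  for id in "$@"; do
    # Match the whole ID, so that 4 does not match 41
    if ! printf '%s\n' "$ids_line" | grep -qE "(\[|, )${id}(,|\])"; then
      echo "broker ${id} is not registered" >&2
      rc=1
    fi
  done
  [ "$rc" -eq 0 ] && echo "all brokers registered: ${ids_line}"
  return "$rc"
}
```

Usage: `/data/apps/kafka/bin/zookeeper-shell.sh 10.168.56.41:2181 ls /brokers/ids | check_broker_ids 41 42 43`. A missing ID usually points at that node's `broker.id`, `listeners`, or ZooKeeper connectivity.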

<a name="WOEF6"></a>

### Building the ES cluster

<a name="RMi96"></a>

#### Cluster planning

- Software and log paths
  - data path: /data/apps/elasticsearch
  - log path: /data/apps/log/elasticsearch

- Host layout

| Hostname | IP | Services |
| --- | --- | --- |
| es01 | 10.168.56.44 | ES 7.16, Kibana |
| es02 | 10.168.56.45 | ES 7.16 |
| es03 | 10.168.56.46 | ES 7.16 |
| logstash | 10.168.56.47 | Logstash 7.16 |

<a name="pa9iZ"></a>

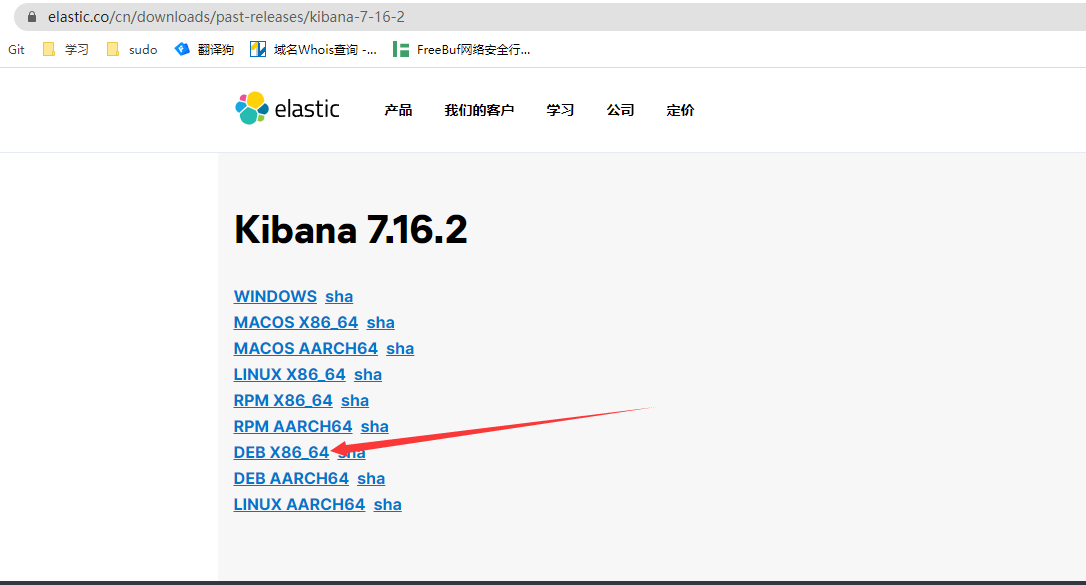

#### Downloads

- The environment runs Ubuntu 20.04 LTS, so download the .deb packages from the links below:

- es: [https://www.elastic.co/cn/downloads/past-releases/elasticsearch-7-16-2](https://www.elastic.co/cn/downloads/past-releases/elasticsearch-7-16-2)

- kibana: [https://www.elastic.co/cn/downloads/past-releases/kibana-7-16-2](https://www.elastic.co/cn/downloads/past-releases/kibana-7-16-2)

- logstash: [https://www.elastic.co/cn/downloads/past-releases/logstash-7-16-2](https://www.elastic.co/cn/downloads/past-releases/logstash-7-16-2)

<a name="WKCTy"></a>

#### Cluster setup

1. Install es01

```bash
root@es01:/data# mkdir /data/softs/ && cd /data/softs/
root@es01:/data/softs# ls
elasticsearch-7.16.2-amd64.deb  kibana-7.16.2-amd64.deb
root@es01:/data/softs# dpkg -i elasticsearch-7.16.2-amd64.deb   # install
root@es01:/data/softs# cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
root@es01:/data/softs# apt update -y && apt install -y ntpdate && ntpdate ntp.aliyun.com
```

2. Configure es01

```bash
root@es01:/data/softs# egrep -v '^$|^#' /etc/elasticsearch/elasticsearch.yml
cluster.name: cropy-elk
node.name: es01
path.data: /data/apps/elasticsearch
path.logs: /data/apps/log/elasticsearch
network.host: 10.168.56.44
http.port: 9200
discovery.seed_hosts: ["10.168.56.44", "10.168.56.45", "10.168.56.46"]
cluster.initial_master_nodes: ["10.168.56.44", "10.168.56.45", "10.168.56.46"]
```

```bash
root@es01:/data/softs# egrep -v '^$|^#' /etc/elasticsearch/jvm.options
-Xms1g
-Xmx1g
8-13:-XX:+UseConcMarkSweepGC
8-13:-XX:CMSInitiatingOccupancyFraction=75
8-13:-XX:+UseCMSInitiatingOccupancyOnly
14-:-XX:+UseG1GC
-Djava.io.tmpdir=${ES_TMPDIR}
-XX:+HeapDumpOnOutOfMemoryError
9-:-XX:+ExitOnOutOfMemoryError
-XX:HeapDumpPath=/var/lib/elasticsearch
-XX:ErrorFile=/var/log/elasticsearch/hs_err_pid%p.log
8:-XX:+PrintGCDetails
8:-XX:+PrintGCDateStamps
8:-XX:+PrintTenuringDistribution
8:-XX:+PrintGCApplicationStoppedTime
8:-Xloggc:/var/log/elasticsearch/gc.log
8:-XX:+UseGCLogFileRotation
8:-XX:NumberOfGCLogFiles=32
8:-XX:GCLogFileSize=64m
9-:-Xlog:gc*,gc+age=trace,safepoint:file=/var/log/elasticsearch/gc.log:utctime,pid,tags:filecount=32,filesize=64m
```

```bash
root@es01:/data/softs# mkdir /data/apps/elasticsearch -p
root@es01:/data/softs# mkdir /data/apps/log/elasticsearch -p
root@es01:/data/softs# chown -R elasticsearch:elasticsearch /data/apps/
```
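es02 and es03 below use the same elasticsearch.yml as es01 except for `node.name` and `network.host`, and each node should get its own `node.name` so the nodes stay distinguishable in the cluster. A sketch of rendering the file per node; `render_es_yml` is a hypothetical helper and every other value is copied from es01's file:

```bash
# render_es_yml NODE_NAME NODE_IP (hypothetical helper)
# Prints an elasticsearch.yml matching es01's above, with only node.name and
# network.host substituted. Assumes the same three-node seed/master list.
render_es_yml() {
  local node_name=$1
  local node_ip=$2
  cat <<EOF
cluster.name: cropy-elk
node.name: ${node_name}
path.data: /data/apps/elasticsearch
path.logs: /data/apps/log/elasticsearch
network.host: ${node_ip}
http.port: 9200
discovery.seed_hosts: ["10.168.56.44", "10.168.56.45", "10.168.56.46"]
cluster.initial_master_nodes: ["10.168.56.44", "10.168.56.45", "10.168.56.46"]
EOF
}
```

For example, on es02: `render_es_yml es02 10.168.56.45 > /etc/elasticsearch/elasticsearch.yml`.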

3. Install es02

```bash
root@es02:/data# mkdir /data/softs/ && cd /data/softs/
root@es02:/data/softs# ls
elasticsearch-7.16.2-amd64.deb  kibana-7.16.2-amd64.deb
root@es02:/data/softs# dpkg -i elasticsearch-7.16.2-amd64.deb   # install
root@es02:/data/softs# cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
root@es02:/data/softs# apt update -y && apt install -y ntpdate && ntpdate ntp.aliyun.com
```

4. Configure es02

```bash
root@es02:/data/softs# egrep -v '^$|^#' /etc/elasticsearch/elasticsearch.yml
cluster.name: cropy-elk
node.name: es02
path.data: /data/apps/elasticsearch
path.logs: /data/apps/log/elasticsearch
network.host: 10.168.56.45
http.port: 9200
discovery.seed_hosts: ["10.168.56.44", "10.168.56.45", "10.168.56.46"]
cluster.initial_master_nodes: ["10.168.56.44", "10.168.56.45", "10.168.56.46"]
```

```bash
root@es02:/data/softs# egrep -v '^$|^#' /etc/elasticsearch/jvm.options
-Xms1g
-Xmx1g
8-13:-XX:+UseConcMarkSweepGC
8-13:-XX:CMSInitiatingOccupancyFraction=75
8-13:-XX:+UseCMSInitiatingOccupancyOnly
14-:-XX:+UseG1GC
-Djava.io.tmpdir=${ES_TMPDIR}
-XX:+HeapDumpOnOutOfMemoryError
9-:-XX:+ExitOnOutOfMemoryError
-XX:HeapDumpPath=/data/apps/elasticsearch
-XX:ErrorFile=/data/apps/log/elasticsearch/hs_err_pid%p.log
8:-XX:+PrintGCDetails
8:-XX:+PrintGCDateStamps
8:-XX:+PrintTenuringDistribution
8:-XX:+PrintGCApplicationStoppedTime
8:-Xloggc:/data/apps/log/elasticsearch/gc.log
8:-XX:+UseGCLogFileRotation
8:-XX:NumberOfGCLogFiles=32
8:-XX:GCLogFileSize=64m
9-:-Xlog:gc*,gc+age=trace,safepoint:file=/data/apps/log/elasticsearch/gc.log:utctime,pid,tags:filecount=32,filesize=64m
```

```bash
root@es02:/data/softs# mkdir /data/apps/elasticsearch -p
root@es02:/data/softs# mkdir /data/apps/log/elasticsearch -p
root@es02:/data/softs# chown -R elasticsearch:elasticsearch /data/apps/
```

5. Install es03

```bash
root@es03:/data# mkdir /data/softs/ && cd /data/softs/
root@es03:/data/softs# ls
elasticsearch-7.16.2-amd64.deb  kibana-7.16.2-amd64.deb
root@es03:/data/softs# dpkg -i elasticsearch-7.16.2-amd64.deb   # install
root@es03:/data/softs# cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
root@es03:/data/softs# apt update -y && apt install -y ntpdate && ntpdate ntp.aliyun.com
```

6. Configure es03

```bash
root@es03:/data/softs# egrep -v '^$|^#' /etc/elasticsearch/elasticsearch.yml
cluster.name: cropy-elk
node.name: es03
path.data: /data/apps/elasticsearch
path.logs: /data/apps/log/elasticsearch
network.host: 10.168.56.46
http.port: 9200
discovery.seed_hosts: ["10.168.56.44", "10.168.56.45", "10.168.56.46"]
cluster.initial_master_nodes: ["10.168.56.44", "10.168.56.45", "10.168.56.46"]
```

```bash
root@es03:/data/softs# egrep -v '^$|^#' /etc/elasticsearch/jvm.options
-Xms1g
-Xmx1g
8-13:-XX:+UseConcMarkSweepGC
8-13:-XX:CMSInitiatingOccupancyFraction=75
8-13:-XX:+UseCMSInitiatingOccupancyOnly
14-:-XX:+UseG1GC
-Djava.io.tmpdir=${ES_TMPDIR}
-XX:+HeapDumpOnOutOfMemoryError
9-:-XX:+ExitOnOutOfMemoryError
-XX:HeapDumpPath=/data/apps/elasticsearch
-XX:ErrorFile=/data/apps/log/elasticsearch/hs_err_pid%p.log
8:-XX:+PrintGCDetails
8:-XX:+PrintGCDateStamps
8:-XX:+PrintTenuringDistribution
8:-XX:+PrintGCApplicationStoppedTime
8:-Xloggc:/data/apps/log/elasticsearch/gc.log
8:-XX:+UseGCLogFileRotation
8:-XX:NumberOfGCLogFiles=32
8:-XX:GCLogFileSize=64m
9-:-Xlog:gc*,gc+age=trace,safepoint:file=/data/apps/log/elasticsearch/gc.log:utctime,pid,tags:filecount=32,filesize=64m
```

```bash
root@es03:/data/softs# mkdir /data/apps/elasticsearch -p
root@es03:/data/softs# mkdir /data/apps/log/elasticsearch -p
root@es03:/data/softs# chown -R elasticsearch:elasticsearch /data/apps/
```

7. Start the services (on es01 through es03, one node at a time)

```bash
root@es01:/data/softs# systemctl start elasticsearch.service
root@es01:/data/softs# systemctl enable elasticsearch.service
root@es01:/data/softs# systemctl status elasticsearch.service

root@es02:/data/softs# systemctl start elasticsearch.service
root@es02:/data/softs# systemctl enable elasticsearch.service
root@es02:/data/softs# systemctl status elasticsearch.service

root@es03:/data/softs# systemctl start elasticsearch.service
root@es03:/data/softs# systemctl enable elasticsearch.service
root@es03:/data/softs# systemctl status elasticsearch.service
```

8. Check the service status

```bash
root@es01:/data/softs# curl http://10.168.56.44:9200
root@es01:/data/softs# curl http://10.168.56.45:9200
root@es01:/data/softs# curl http://10.168.56.46:9200
```

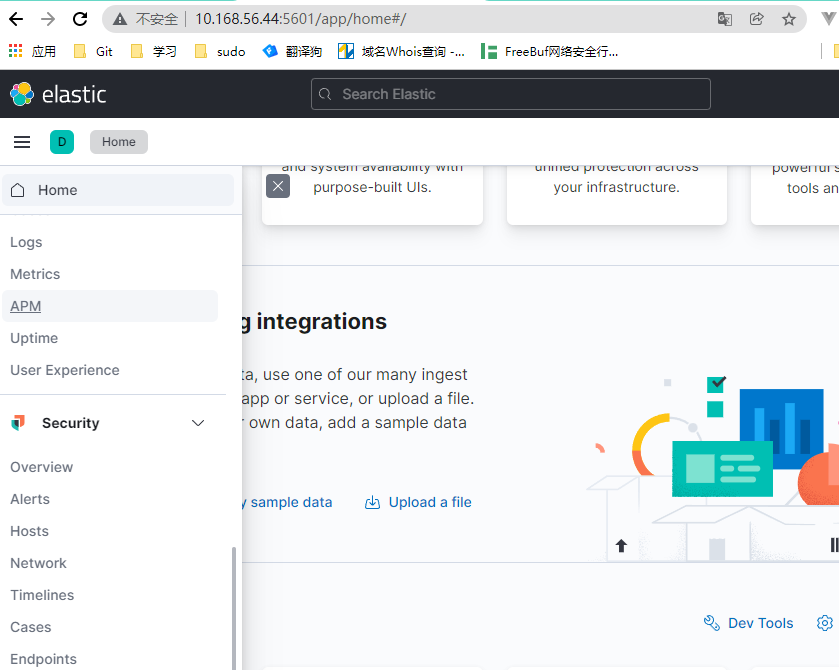
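Curling each node only proves that node answers HTTP. The `_cluster/health` API reports the cluster-wide status and how many nodes have joined. A sketch of turning that into a pass/fail check without `jq`; `es_health_ok` is a hypothetical name, and treating anything other than `green` as a failure is an assumption (`yellow` means some replicas are still unassigned):

```bash
# es_health_ok EXPECTED_NODES (hypothetical helper)
# stdin: JSON body of GET /_cluster/health (compact or ?pretty).
# Succeeds only if status is "green" and at least EXPECTED_NODES have joined.
es_health_ok() {
  local expected_nodes=$1 body status nodes
  body=$(cat)
  status=$(printf '%s\n' "$body" \
    | sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1)
  nodes=$(printf '%s\n' "$body" \
    | sed -n 's/.*"number_of_nodes"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p' | head -n 1)
  echo "status=${status:-unknown} nodes=${nodes:-0}"
  [ "$status" = "green" ] && [ "${nodes:-0}" -ge "$expected_nodes" ]
}
```

Usage: `curl -s http://10.168.56.44:9200/_cluster/health | es_health_ok 3`.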

9. Install and configure Kibana

```bash
root@es01:/data/softs# dpkg -i kibana-7.16.2-amd64.deb
root@es01:/data/softs# egrep -v '^$|^#' /etc/kibana/kibana.yml
server.port: 5601
server.host: "10.168.56.44"
server.name: "cropy-kibana"
elasticsearch.hosts: ["http://10.168.56.45:9200"]
```

```bash
root@es01:/data/softs# systemctl start kibana.service && systemctl enable kibana.service
```

<a name="V579q"></a>

### Collecting k8s application logs

<a name="fPR8y"></a>

#### Log collection steps

1. Build the base image

```bash
root@k8s-master1:~/k8s/09-ELK/tomcat_elk# ll
total 35104
drwxr-xr-x 2 root root  4096 Jan 30 23:27 ./
drwxr-xr-x 3 root root  4096 Jan 30 23:27 ../
-rw-r--r-- 1 root root   139 Jan 30 23:27 app1.tar.gz
-rw-r--r-- 1 root root   142 Jan 30 23:27 build.sh
-rwxr-x--x 1 root root 25801 Jan 30 23:27 catalina.sh
-rw-r--r-- 1 root root   332 Jan 30 23:27 Dockerfile
-rw-r--r-- 1 root root  3587 Dec 19 18:52 filebeat.yml
-rw-r--r-- 1 root root    18 Jan 30 23:27 index.html
-rwxr-xr-x 1 root root    82 Jan 30 23:27 run_tomcat.sh
-rw------- 1 root root  7602 Jan 30 23:27 server.xml
-rw-r--r-- 1 root root  1036 Jan 30 23:27 tomcat-k8s.yaml
```

2. Dockerfile

```bash
root@k8s-master1:~/k8s/09-ELK/tomcat_elk# cat Dockerfile
FROM harbor.cropy.cn/baseimages/tomcat-base:v8.5.72

ADD catalina.sh /apps/tomcat/bin/catalina.sh
ADD server.xml /apps/tomcat/conf/server.xml
ADD app1.tar.gz /data/tomcat/webapps/myapp/
ADD run_tomcat.sh /apps/tomcat/bin/run_tomcat.sh
ADD filebeat.yml /etc/filebeat/filebeat.yml
RUN chown -R nginx.nginx /data/ /apps/

EXPOSE 8080 8443

CMD ["/apps/tomcat/bin/run_tomcat.sh"]
```

3. run_tomcat.sh

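```bash
root@k8s-master1:~/k8s/09-ELK/tomcat_elk# cat run_tomcat.sh
#!/bin/bash
# Start filebeat in the background so it ships the tomcat logs
/usr/share/filebeat/bin/filebeat -e -c /etc/filebeat/filebeat.yml -path.home /usr/share/filebeat -path.config /etc/filebeat -path.data /var/lib/filebeat -path.logs /var/log/filebeat &
# Start tomcat as the nginx user
su - nginx -c "/apps/tomcat/bin/catalina.sh start"
# Keep a foreground process running so the container does not exit
tail -f /etc/hosts
```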

4. filebeat.yml

filebeat.inputs:

type: log enabled: true paths:

- /apps/tomcat/logs/catalina.out fields: type: tomcat-catalina

type: log enabled: true paths:

- /apps/tomcat/logs/localhost_access_log.*.txt fields: type: tomcat-acccesslog

filebeat.config.modules: path: ${path.config}/modules.d/*.yml reload.enabled: false

setup.template.settings: index.number_of_shards: 1

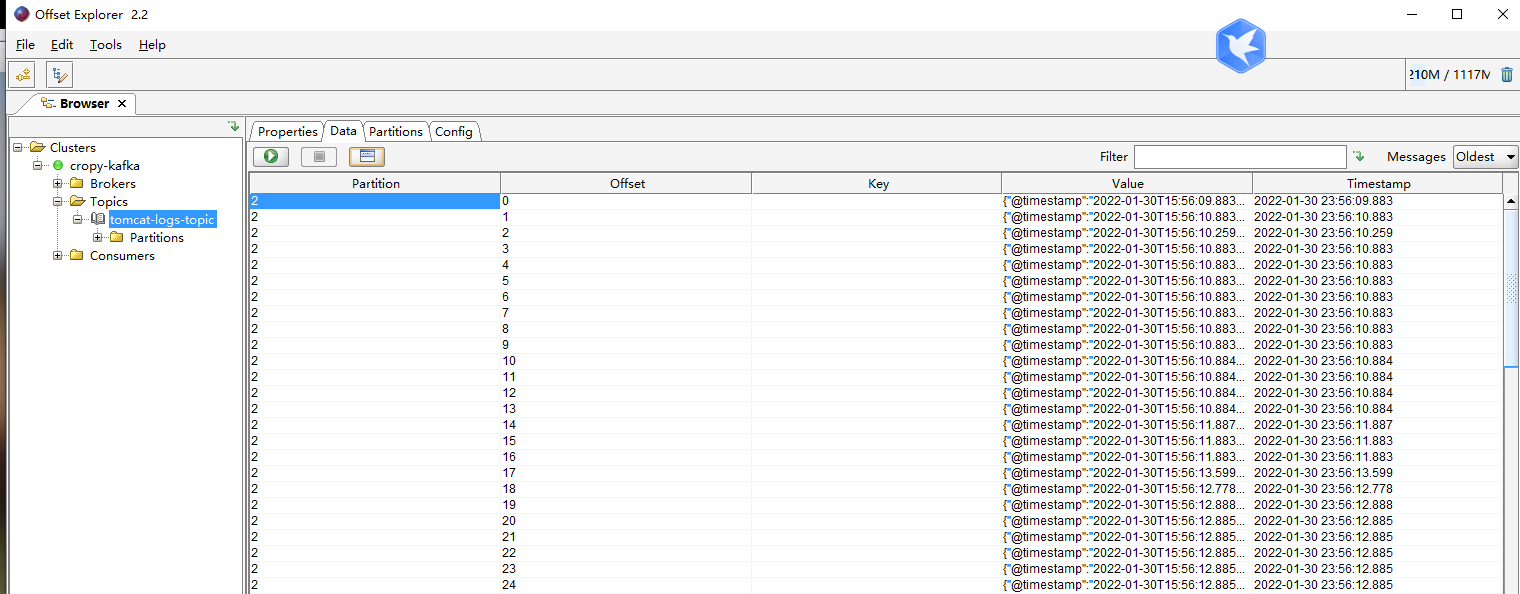

setup.kibana: output.kafka: hosts: [“10.168.56.41:9092”,”10.168.56.42:9092”,”10.168.56.43:9092”] topic: ‘tomcat-logs-topic’ required_acks: 1 max_message_bytes: 1000000

5. Build the image

```bash
root@k8s-master1:~/k8s/09-ELK/tomcat_elk# cat build.sh
#!/bin/bash
TAG=$1
docker build -t harbor.cropy.cn/cropy/tomcat-app1:${TAG} .
sleep 3
docker push harbor.cropy.cn/cropy/tomcat-app1:${TAG}

root@k8s-master1:~/k8s/09-ELK/tomcat_elk# bash build.sh elk
```

6. Run it on k8s

```bash
root@k8s-master1:~/k8s/09-ELK/tomcat_elk# vim tomcat-k8s.yaml   # change the image
...
        image: harbor.cropy.cn/cropy/tomcat-app1:elk
...
root@k8s-master1:~/k8s/09-ELK/tomcat_elk# kubectl apply -f tomcat-k8s.yaml
```

7. Send web requests

```bash
root@k8s-master1:~/k8s/09-ELK/tomcat_elk# while true; do curl http://10.168.56.201:40003/myapp/index.html && sleep 0.5; done
tomcat webapps v2
```

8. Check the logs in Kafka

   1. Download kafkatools and use it to browse the topic

<a name="WcmNm"></a>

#### Writing the logs into ES

1. Install Logstash

```bash
root@logstash:~# apt update -y && apt install openjdk-8-jdk -y
root@logstash:~# mkdir /data/softs/ && cd /data/softs/
root@logstash:/data/softs# wget https://artifacts.elastic.co/downloads/logstash/logstash-7.16.2-amd64.deb
root@logstash:/data/softs# dpkg -i logstash-7.16.2-amd64.deb
```

2. Logstash input/output configuration

   1. Official documentation: [https://www.elastic.co/guide/en/logstash/current/index.html](https://www.elastic.co/guide/en/logstash/current/index.html)

   2. Consume the logs from Kafka and write them into ES:

```bash
root@logstash:~# vim /etc/logstash/conf.d/kafka_es.conf
input {
  kafka {
    bootstrap_servers => "10.168.56.41:9092,10.168.56.42:9092,10.168.56.43:9092"
    # Must match output.kafka.topic in filebeat.yml
    topics => ["tomcat-logs-topic"]
    codec => "json"
  }
}

output {
  # Uncomment to print every event to the console while testing
  #stdout {
  #  codec => rubydebug
  #}

  # Filebeat puts custom fields under "fields", so reference them as [fields][type]
  if [fields][type] == "tomcat-acccesslog" {
    elasticsearch {
      hosts => ["10.168.56.44:9200","10.168.56.45:9200","10.168.56.46:9200"]
      index => "tomcat-acccesslog-%{+YYYY-MM-dd}"
    }
  }
  if [fields][type] == "tomcat-catalina" {
    elasticsearch {
      hosts => ["10.168.56.44:9200","10.168.56.45:9200","10.168.56.46:9200"]
      index => "tomcat-catalina-%{+YYYY-MM-dd}"
    }
  }
}
```
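Filebeat publishes to the topic set in `output.kafka.topic`, and the Logstash Kafka input only consumes the topics listed in `topics`; if the two names differ, Logstash receives nothing and no index shows up in ES. A rough consistency check, assuming copies of both files are on one host and keep the one-line layout used in this walkthrough. The function names are hypothetical, and this is plain line matching, not a real YAML or Logstash config parser:

```bash
# filebeat_topic FILE (hypothetical): print the first `topic:` value in a filebeat.yml.
filebeat_topic() {
  sed -n "s/^[[:space:]]*topic:[[:space:]]*['\"]\{0,1\}\([^'\"]*\)['\"]\{0,1\}[[:space:]]*\$/\1/p" "$1" \
    | head -n 1
}

# logstash_topics FILE (hypothetical): print each topic in `topics => [...]`, one per line.
logstash_topics() {
  sed -n 's/.*topics[[:space:]]*=>[[:space:]]*\[\(.*\)].*/\1/p' "$1" \
    | tr ',' '\n' | tr -d " \t\"'"
}

# check_topic_match FILEBEAT_YML LOGSTASH_CONF (hypothetical):
# succeed only if Logstash consumes the topic Filebeat writes to.
check_topic_match() {
  local fb_topic
  fb_topic=$(filebeat_topic "$1")
  if [ -n "$fb_topic" ] && logstash_topics "$2" | grep -qxF -- "$fb_topic"; then
    echo "ok: logstash consumes '${fb_topic}'"
  else
    echo "mismatch: filebeat writes to '${fb_topic}', logstash reads: $(logstash_topics "$2" | tr '\n' ' ')" >&2
    return 1
  fi
}
```

Usage: `check_topic_match filebeat.yml kafka_es.conf`.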

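3. Test

```bash
# Check the config syntax only, then exit
root@logstash:~# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/kafka_es.conf -t
# Run in the foreground
root@logstash:~# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/kafka_es.conf
```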

4. Start the service

```bash
root@logstash:~# systemctl start logstash
root@logstash:~# systemctl status logstash
```

5. Check the index status in ES

```bash
root@kafka01:~# curl http://10.168.56.44:9200/_cat/indices
green open .geoip_databases                UCq8I6x0T0qJMNi2QZ3hhw 1 1  42     0 80.6mb 40.3mb
green open .apm-custom-link                73QPk4e_QE6OXVA-5-9lkQ 1 1   0     0   452b   226b
green open .kibana_task_manager_7.16.2_001 r7r12JJHQQ-yj9xSI-TYPg 1 1  17 36804  7.9mb  3.9mb
green open .kibana_7.16.2_001              5xDGQztLRfyiHrizQVfMIA 1 1 583  1514  5.5mb  2.9mb
green open .apm-agent-configuration        4Ficiul-SRqD__xP5v1POg 1 1   0     0   452b   226b
```
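The listing above contains only system indices (names starting with a dot), so none of the `tomcat-*` indices that Logstash should create exist yet. Elasticsearch can filter server-side with `curl 'http://10.168.56.44:9200/_cat/indices/tomcat-*?v'`; the sketch below does the same filtering client-side on plain `_cat/indices` output, which is handy inside scripts. `list_indices_with_prefix` is a hypothetical helper, and it assumes the index name is the third column, as in the default output above:

```bash
# list_indices_with_prefix PREFIX (hypothetical helper)
# stdin: plain `_cat/indices` output (no ?v header line).
# Prints the index names (column 3) that start with PREFIX.
list_indices_with_prefix() {
  awk -v prefix="$1" 'index($3, prefix) == 1 { print $3 }'
}
```

Usage: `curl -s http://10.168.56.44:9200/_cat/indices | list_indices_with_prefix tomcat-`. Empty output means Logstash has not indexed anything yet.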

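- Kibana data check: with the configuration above, no data was written to ES; this still needs further verification on ES 7.16. Two things worth checking first: the `topics` in the Logstash Kafka input must match the `topic` Filebeat writes to, and Logstash conditionals must reference the nested Filebeat field as `[fields][type]`.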