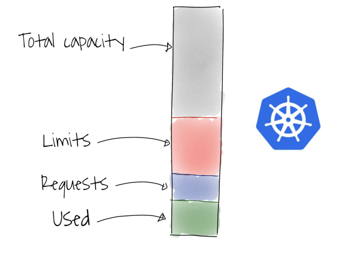

## Kubernetes resource limits

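- CPU is measured in cores (`1` is one core, `500m` is half a core).

- Memory is measured in bytes (suffixes such as `Mi` and `Gi` are powers of two).

- `requests`: the resources a node must have available for the kube-scheduler to place the pod on it.

- `limits`: the maximum resources the pod's containers may use once the pod is running.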
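To make the units concrete, here is a minimal Python sketch that converts common quantity strings into cores and bytes. It handles only the suffixes used in these notes plus a few common ones (`m`, `k`/`M`/`G`/`T`, `Ki`/`Mi`/`Gi`/`Ti`). It is not the full Kubernetes quantity grammar: exponents and the `n`/`u`/`P`/`E` suffixes are not supported. The function names are hypothetical.

```python
from decimal import Decimal

# Binary (power-of-two) and decimal (power-of-ten) suffixes: an illustrative
# subset of what Kubernetes accepts.
_BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}
_DECIMAL = {"k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12}


def parse_cpu(value) -> Decimal:
    """Return CPU in cores: "500m" -> 0.5, "2" -> 2."""
    value = str(value).strip()
    if value.endswith("m"):
        return Decimal(value[:-1]) / 1000  # millicores
    return Decimal(value)


def parse_memory(value) -> int:
    """Return memory in bytes: "512Mi" -> 536870912."""
    value = str(value).strip()
    # Check binary suffixes first so "Mi" is not mistaken for "M".
    for table in (_BINARY, _DECIMAL):
        for suffix, factor in table.items():
            if value.endswith(suffix):
                return int(Decimal(value[: -len(suffix)]) * factor)
    return int(Decimal(value))  # plain number of bytes
```

Note that `Mi`/`Gi` and `M`/`G` are different units: `512Mi` is 536,870,912 bytes, while `512M` is 512,000,000 bytes.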

- Case 1: limiting container resources with stress

```
#apiVersion: extensions/v1beta1   # older API version; use apps/v1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: limit-test-deployment
  namespace: cropy
spec:
  replicas: 1
  selector:
    matchLabels: #rs or deployment
      app: limit-test-pod
    #matchExpressions:   # ANDed with matchLabels, so it must also match the template labels
    #  - {key: app, operator: In, values: [ng-deploy-80,ng-rs-81]}
  template:
    metadata:
      labels:
        app: limit-test-pod
    spec:
      containers:
      # ... stress container definition (image, resources) goes here
```

Label the node, apply the manifest, and check where the pod was scheduled:

```
root@k8s-master1:~/k8s/10-resource-limit# kubectl label node 10.168.56.206 env=group1
root@k8s-master1:~/k8s/10-resource-limit# kubectl apply -f case1-stress.yml
root@k8s-master1:~/k8s/10-resource-limit# kubectl get pod -n cropy -o wide
NAME                                    READY   STATUS    RESTARTS   AGE   IP               NODE            NOMINATED NODE   READINESS GATES
limit-test-deployment-f89dff47f-5mk7z   1/1     Running   0          72s   10.200.107.210   10.168.56.206
```
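While the stress container runs, the kubelet enforces these values through Linux cgroups. The sketch below shows the commonly documented cgroup v1 mapping. It assumes the default 100 ms CFS period:

- `requests.cpu` becomes `cpu.shares` (1024 per core, minimum 2).
- `limits.cpu` becomes `cpu.cfs_quota_us`.

Going over the CPU quota only throttles the container. Going over `limits.memory` gets it OOM-killed. The helper names are hypothetical, and cgroup v2 uses `cpu.weight`/`cpu.max` instead.

```python
CFS_PERIOD_US = 100_000  # assumed default CFS period: 100 ms
MIN_SHARES = 2           # kernel minimum for cpu.shares
MIN_QUOTA_US = 1_000     # smallest quota the kubelet sets (1 ms)


def cpu_shares(request_millicores: int) -> int:
    """cgroup v1 cpu.shares derived from requests.cpu (1 core == 1024 shares)."""
    if request_millicores == 0:
        return MIN_SHARES
    return max(MIN_SHARES, request_millicores * 1024 // 1000)


def cfs_quota_us(limit_millicores: int, period_us: int = CFS_PERIOD_US) -> int:
    """cgroup v1 cpu.cfs_quota_us derived from limits.cpu; -1 means unlimited."""
    if limit_millicores == 0:
        return -1  # no CPU limit: the cgroup default (no quota)
    return max(MIN_QUOTA_US, limit_millicores * period_us // 1000)
```

For example, `limits.cpu: 2` gives a quota of 200,000 µs per 100,000 µs period, which is two full cores of CPU time.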

2. Limiting pod resources with a LimitRange

1. Official documentation: [https://kubernetes.io/zh/docs/concepts/policy/limit-range/](https://kubernetes.io/zh/docs/concepts/policy/limit-range/)

```
apiVersion: v1
kind: LimitRange
metadata:
  name: limitrange-cropy
  namespace: cropy
spec:
  limits:
  - type: Container       # resource type being limited
    max:
      cpu: "2"            # max CPU per container
      memory: "2Gi"       # max memory per container
    min:
      cpu: "500m"         # min CPU per container
      memory: "512Mi"     # min memory per container
    default:
      cpu: "500m"         # default CPU limit per container
      memory: "512Mi"     # default memory limit per container
    defaultRequest:
      cpu: "500m"         # default CPU request per container
      memory: "512Mi"     # default memory request per container
    maxLimitRequestRatio:
      cpu: 2              # CPU limit/request ratio may be at most 2
      memory: 2           # memory limit/request ratio may be at most 2
  - type: Pod
    max:
      cpu: "4"            # max CPU per pod
      memory: "4Gi"       # max memory per pod
  - type: PersistentVolumeClaim
    max:
      storage: 50Gi       # max requests.storage per PVC
    min:
      storage: 30Gi       # min requests.storage per PVC
```

```
root@k8s-master1:~/k8s/10-resource-limit# kubectl apply -f case2-ns-pod-request.yml
root@k8s-master1:~/k8s/10-resource-limit# kubectl get limitranges -n cropy
```
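The LimitRange is enforced when a pod is admitted. The following Python sketch approximates the per-container checks for a single resource:

1. Fill in `default`/`defaultRequest` when a value is missing.
2. Check `min`, `max`, and `maxLimitRequestRatio`.

It rests on simplifying assumptions:

- Values are already converted to plain numbers (for example bytes).
- The `type: Pod` and PVC rules are not modeled.
- Requests larger than limits are not validated.

The names are hypothetical; this is not the API server's code.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class ContainerRange:
    """One resource (e.g. memory in bytes) of a `type: Container` LimitRange entry."""
    min: int
    max: int
    default: int          # default limit
    default_request: int  # default request
    max_ratio: float      # maxLimitRequestRatio


def admit(request: Optional[int], limit: Optional[int],
          rng: ContainerRange) -> Tuple[int, int, List[str]]:
    """Return the effective (request, limit) and any LimitRange violations."""
    # If only a limit is set, Kubernetes defaults the request to the limit.
    if request is None and limit is not None:
        request = limit
    # The LimitRange defaults fill in whatever is still missing.
    if limit is None:
        limit = rng.default
    if request is None:
        request = rng.default_request
    errors: List[str] = []
    if request < rng.min:
        errors.append(f"request {request} is below min {rng.min}")
    if limit > rng.max:
        errors.append(f"limit {limit} exceeds max {rng.max}")
    if request and limit / request > rng.max_ratio:
        errors.append(f"limit/request ratio {limit / request:g} exceeds {rng.max_ratio:g}")
    return request, limit, errors
```

Consider a container with `requests.memory: 1.5Gi` and `limits.memory: 3Gi`, checked against the memory settings above (min 512Mi, max 2Gi, ratio 2). The sketch reports exactly one violation: the limit exceeds the 2Gi maximum.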

3. Example: pod requests and limits under the LimitRange

1. Official documentation: [https://kubernetes.io/zh/docs/concepts/policy/resource-quotas/](https://kubernetes.io/zh/docs/concepts/policy/resource-quotas/)

```
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    app: cropy-wordpress-deployment-label
  name: cropy-wordpress-deployment
  namespace: cropy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: cropy-wordpress-selector
  template:
    metadata:
      labels:
        app: cropy-wordpress-selector
    spec:
      containers:
      - name: cropy-wordpress-nginx-container
        image: nginx:1.16.1
        imagePullPolicy: Always
        ports:
        - containerPort: 80
          protocol: TCP
          name: http
        env:
        - name: "password"
          value: "123456"
        - name: "age"
          value: "18"
        resources:
          limits:
            cpu: 2
            memory: 3Gi      # above the LimitRange's per-container max of 2Gi
          requests:
            cpu: 2
            memory: 1.5Gi
      - name: cropy-wordpress-php-container
        image: php:5.6-fpm-alpine
        imagePullPolicy: Always
        ports:
        - containerPort: 80
          protocol: TCP
          name: http
        env:
        - name: "password"
          value: "123456"
        - name: "age"
          value: "18"
        resources:
          limits:
            cpu: 1
            #cpu: 2
            memory: 1Gi
          requests:
            cpu: 500m
            memory: 512Mi
      nodeSelector:
        env: group1
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: cropy-wordpress-service-label
  name: cropy-wordpress-service
  namespace: cropy
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 8080
    nodePort: 30063
  selector:
    app: cropy-wordpress-selector
```

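**Troubleshooting pods that cannot be created because of resource limits**

- `kubectl get deploy -n cropy`

- `kubectl get deploy -n cropy -o json` (the Deployment object itself is accepted even when its pods are rejected, so look for the failure message in its `status.conditions`)

- `kubectl get limitranges -n cropy`

Lower the values under `resources` until they satisfy the LimitRange. In this example, the nginx container's `limits.memory: 3Gi` exceeds the per-container `max.memory: 2Gi`.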
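The failure reason is usually a `ReplicaFailure` condition (reason `FailedCreate`), which the Deployment controller copies from the ReplicaSet. The hypothetical Python helper below extracts problem conditions from `kubectl get deploy -n cropy -o json` output. The condition fields it reads (`type`, `status`, `reason`, `message`) are standard; the sample input in the test is made up.

```python
import json
from typing import List


def failed_conditions(kubectl_json: str) -> List[str]:
    """List problem conditions from `kubectl get deploy ... -o json` output."""
    doc = json.loads(kubectl_json)
    # Without a name, kubectl returns a List whose "items" hold the Deployments.
    items = doc.get("items", [doc])
    problems: List[str] = []
    for item in items:
        name = item.get("metadata", {}).get("name", "?")
        for cond in item.get("status", {}).get("conditions", []):
            kind, status = cond.get("type"), cond.get("status")
            failing = (
                (kind == "ReplicaFailure" and status == "True")
                or (kind in ("Available", "Progressing") and status == "False")
            )
            if failing:
                problems.append(f"{name}: {cond.get('reason')}: {cond.get('message')}")
    return problems
```

Typical use: `kubectl get deploy -n cropy -o json > deploy.json`, then pass the file's contents to `failed_conditions`.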

### Namespace-level resource limits (ResourceQuota)

```
apiVersion: v1
kind: ResourceQuota
metadata:
  name: quota-cropy
  namespace: cropy
spec:
  hard:
    requests.cpu: "46"
    limits.cpu: "46"
    requests.memory: 120Gi
    limits.memory: 120Gi
    requests.nvidia.com/gpu: 4
    pods: "20"
    services: "20"
```

a. NS-POD resource limit example

```
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    app: cropy-nginx-deployment-label
  name: cropy-nginx-deployment
  namespace: cropy
spec:
  replicas: 5
  selector:
    matchLabels:
      app: cropy-nginx-selector
  template:
    metadata:
      labels:
        app: cropy-nginx-selector
    spec:
      containers:
      - name: cropy-nginx-container
        image: nginx:1.16.1
        imagePullPolicy: Always
        ports:
        - containerPort: 80
          protocol: TCP
          name: http
        env:
        - name: "password"
          value: "123456"
        - name: "age"
          value: "18"
        resources:
          limits:
            cpu: 1
            memory: 1Gi
          requests:
            cpu: 500m
            memory: 512Mi
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: cropy-nginx-service-label
  name: cropy-nginx-service
  namespace: cropy
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 80
    nodePort: 30033
  selector:
    app: cropy-nginx-selector
```

b. NS-CPU resource limit example

```
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    app: cropy-nginx-deployment-label
  name: cropy-nginx-deployment
  namespace: cropy
spec:
  replicas: 2
  selector:
    matchLabels:
      app: cropy-nginx-selector
  template:
    metadata:
      labels:
        app: cropy-nginx-selector
    spec:
      containers:
      - name: cropy-nginx-container
        image: nginx:1.16.1
        imagePullPolicy: Always
        ports:
        - containerPort: 80
          protocol: TCP
          name: http
        env:
        - name: "password"
          value: "123456"
        - name: "age"
          value: "18"
        resources:
          limits:
            cpu: 1
            memory: 1Gi
          requests:
            cpu: 1
            memory: 512Mi
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: cropy-nginx-service-label
  name: cropy-nginx-service
  namespace: cropy
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 80
    nodePort: 50033   # must lie in the API server's --service-node-port-range (default 30000-32767)
  selector:
    app: cropy-nginx-selector
```
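A ResourceQuota is checked each time a pod is created: the namespace's current usage plus the new pod's requests and limits must stay within `spec.hard`. Once a quota covers `requests.cpu`, `limits.memory`, and so on, every new pod must also set those values, or receive them from a LimitRange; otherwise it is rejected.

The sketch below estimates how many identical pods fit. It assumes an empty namespace and ignores other quota-counted objects (Services, GPUs). The function is hypothetical.

```python
from typing import Dict, Optional


def max_pods(hard: Dict[str, float], per_pod: Dict[str, float]) -> Optional[int]:
    """How many identical pods fit under a ResourceQuota's `spec.hard` (empty namespace).

    Keys are quota resource names such as "requests.cpu" (cores) or
    "limits.memory" (GiB); "pods" counts objects, so every pod uses 1.
    Returns None if the quota does not constrain these pods at all.
    """
    usage = dict(per_pod, pods=1)
    fits = [
        int(limit // usage[resource])
        for resource, limit in hard.items()
        if usage.get(resource, 0) > 0
    ]
    return min(fits) if fits else None
```

Take `quota-cropy` and a pod that requests 500m CPU / 512Mi and is limited to 1 CPU / 1Gi. The CPU and memory entries would allow 46 to 240 such pods, so `pods: "20"` is the binding constraint.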

## Kubernetes accounts and access control

- Official documentation: https://kubernetes.io/zh/docs/reference/access-authn-authz/rbac/

### Regular accounts and permission management

1. Create the accounts

```
root@k8s-master1:~# kubectl create serviceaccount cropy-user -n cropy
root@k8s-master1:~# kubectl create serviceaccount cropy-user1 -n cropy
root@k8s-master1:~# kubectl create serviceaccount cropy-user2 -n cropy
root@k8s-master1:~# kubectl get serviceaccounts -n cropy
NAME          SECRETS   AGE
cropy-user    1         33s
cropy-user1   1         9s
cropy-user2   1         4s
```

2. Create the Role rules

In `~/k8s/11-rbac`, create `cropy-role.yml` (`vim cropy-role.yml`):

```
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: cropy
  name: cropy-role
rules:
- apiGroups: ["*"]
  resources: ["pods/exec"]
  #verbs: ["*"]
  # RO-Role
  verbs: ["get", "list", "watch", "create"]

- apiGroups: ["*"]
  resources: ["pods"]
  #verbs: ["*"]
  # RO-Role
  verbs: ["get", "list", "watch"]

- apiGroups: ["apps"]          # group name only; "apps/v1" would never match
  resources: ["deployments"]
  #verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
  # RO-Role
  verbs: ["get", "watch", "list"]
```

```
root@k8s-master1:~/k8s/11-rbac# kubectl apply -f cropy-role.yml
root@k8s-master1:~/k8s/11-rbac# kubectl get role -n cropy
```
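RBAC grants a request when any rule matches its API group, its resource (including subresources such as `pods/exec`), and its verb, with `*` as a wildcard. The minimal sketch below mirrors that matching logic. It is not the API server's implementation, and it ignores `resourceNames` and non-resource URLs. It shows why `apiGroups` must hold the group name (`apps`) rather than a group/version string such as `apps/v1`. The names are hypothetical.

```python
from typing import Dict, List


def allows(rules: List[Dict[str, List[str]]], group: str, resource: str, verb: str) -> bool:
    """Return True if any Role rule permits `verb` on `resource` in API `group`.

    The core API group (pods, services, ...) is the empty string "".
    """
    def match(values: List[str], wanted: str) -> bool:
        return "*" in values or wanted in values

    return any(
        match(r["apiGroups"], group)
        and match(r["resources"], resource)
        and match(r["verbs"], verb)
        for r in rules
    )


# The read-only rules of cropy-role, with apiGroups for deployments set to "apps".
ROLE_RULES = [
    {"apiGroups": ["*"], "resources": ["pods/exec"], "verbs": ["get", "list", "watch", "create"]},
    {"apiGroups": ["*"], "resources": ["pods"], "verbs": ["get", "list", "watch"]},
    {"apiGroups": ["apps"], "resources": ["deployments"], "verbs": ["get", "watch", "list"]},
]
```

For example, `allows(ROLE_RULES, "", "pods", "delete")` is false: the role can list pods but not delete them.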

3. RoleBinding: bind the Role to the account

In `~/k8s/11-rbac`, create `rolebinding-cropy-cropy-user.yml`:

```
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: role-bind-cropy
  namespace: cropy
subjects:
- kind: ServiceAccount
  name: cropy-user
  namespace: cropy
roleRef:
  kind: Role
  name: cropy-role
  apiGroup: rbac.authorization.k8s.io
```

```
root@k8s-master1:~/k8s/11-rbac# kubectl apply -f rolebinding-cropy-cropy-user.yml
root@k8s-master1:~/k8s/11-rbac# kubectl get rolebindings -n cropy
```

4. View the account's secret token and test it

```
root@k8s-master1:~/k8s/11-rbac# kubectl get secrets -n cropy
NAME                      TYPE                                  DATA   AGE
cropy-tls-secret          Opaque                                2      82d
cropy-user-token-vzzlk    kubernetes.io/service-account-token   3      9m1s
cropy-user1-token-65kdz   kubernetes.io/service-account-token   3      8m37s
cropy-user2-token-5txt7   kubernetes.io/service-account-token   3      8m32s
default-token-d6n5d       kubernetes.io/service-account-token   3      84d
mobile-tls-secret         Opaque                                2      82d

root@k8s-master1:~/k8s/11-rbac# kubectl describe secrets cropy-user-token-vzzlk -n cropy
Name:         cropy-user-token-vzzlk
Namespace:    cropy
Labels:
Type:         kubernetes.io/service-account-token

Data
ca.crt:     1350 bytes
namespace:  5 bytes
token:      eyJhbGciOiJSUzI1NiIsImtpZCI6Im5FelhQMGhJT0hSTU9PbFk1YzBsZVB5NTRJaGxzT2l3cTZOczA3ekpheDQifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJjcm9weSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJjcm9weS11c2VyLXRva2VuLXZ6emxrIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImNyb3B5LXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJlZGM2OTk1YS1lMTFiLTRhMDctYmI3Yi02M2NlZmMxOTExOWEiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6Y3JvcHk6Y3JvcHktdXNlciJ9.cdB0J1ZtrtlYstCecxqFkLELmznUfBugxKzh2PkgvJmHxAaUr-UVgB40aXpYbW5P1NiKSFvs7R3S3WUQnADlYf3rfwewNrJpwuJmbi5t_SvdY3w7yIAg4BYKsJGs5-uBLmgT9-kVZhxMUnFWY16H4uPdQVnW8odXSI7j7Jn6ICExEcBlmbnY_zxjKeznWX4OvQW8JnGoxVL3ADBn7onZkC7w-I4joN58E07AhVBxWJLatpiNv4wh_oLBiX0gw9IcEGSYes4ZwGOutCCmIxB2oWjY96Lfl_dvA4PIIV1WR8Fq-MSAvd8HFqp2JmuHeqoG8llM4K-ep2MlLkG5nRiAoQ
```
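The token is a JWT: three base64url segments (header, payload, signature) joined by dots. Decoding the middle segment shows which identity the API server will see. The payload of the token above contains `"sub":"system:serviceaccount:cropy:cropy-user"`. The sketch below only decodes the payload and does not verify the signature, so use it for inspection, not authentication. The helper name is hypothetical.

```python
import base64
import json


def jwt_payload(token: str) -> dict:
    """Decode (without verifying!) the payload segment of a JWT."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("not a JWT: expected three dot-separated segments")
    payload = parts[1]
    payload += "=" * (-len(payload) % 4)  # restore the padding base64url omits
    return json.loads(base64.urlsafe_b64decode(payload))
```

Usage: `jwt_payload(token)["sub"]` returns the service-account subject that RBAC bindings are matched against.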

<a name="DwY6W"></a>

### Building a kubeconfig for a regular account

1. Copy the `cfssl*` binaries from kubeasz to the k8s-master01 node

2. Copy ca-config.json to the k8s-master01 node

```
root@k8s-deploy:/etc/kubeasz/clusters/k8s-01/ssl# cat ca-config.json
{
  "signing": {
    "default": {
      "expiry": "438000h"
    },
    "profiles": {
      "kubernetes": {
        "usages": ["signing", "key encipherment", "server auth", "client auth"],
        "expiry": "438000h"
      }
    },
    "profiles": {
      "kcfg": {
        "usages": ["signing", "key encipherment", "client auth"],
        "expiry": "438000h"
      }
    }
  }
}
```
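This `ca-config.json` defines the `profiles` key twice inside `signing`. JSON parsers handle duplicate keys differently. Python's `json` module, for one, silently keeps only the last value, which drops the `kubernetes` profile. Putting both profiles in a single `profiles` object avoids the ambiguity. A small checker follows as a Python sketch; the function name is hypothetical.

```python
import json
from typing import List, Tuple


def find_duplicate_keys(text: str) -> List[str]:
    """Parse JSON and report every key that appears twice in the same object."""
    duplicates: List[str] = []

    def hook(pairs: List[Tuple[str, object]]) -> dict:
        seen = set()
        for key, _ in pairs:
            if key in seen:
                duplicates.append(key)
            seen.add(key)
        return dict(pairs)  # same as json's default: the last value wins

    json.loads(text, object_pairs_hook=hook)
    return duplicates
```

Running it on the file above reports `["profiles"]`.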

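3. Create the cropy-user certificate with cfssl

With client-certificate authentication, the API server takes the username from the certificate's CN and the groups from O. This CSR (`"CN": "China"`, `"O": "k8s"`) therefore authenticates as user `China` in group `k8s`, not as `cropy-user`.

```
root@k8s-master1:~/k8s/11-rbac/cropy-user-ssl# vim cropy-user-csr.json
{
  "CN": "China",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
```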

```
root@k8s-master1:~/k8s/11-rbac/cropy-user-ssl# cat ca-config.json
```

The output is identical to the file shown above on k8s-deploy.

```
root@k8s-master1:~/k8s/11-rbac/cropy-user-ssl# cfssl gencert \
    -ca=/etc/kubernetes/ssl/ca.pem \
    -ca-key=/etc/kubernetes/ssl/ca-key.pem \
    -config=./ca-config.json \
    -profile=kubernetes cropy-user-csr.json | cfssljson -bare cropy-user
```

4. Generate the kubeconfig file (cluster entry)

```
root@k8s-master1:~/k8s/11-rbac/cropy-user-ssl# kubectl config set-cluster cluster1 \
    --certificate-authority=/etc/kubernetes/ssl/ca.pem \
    --embed-certs=true \
    --server=https://10.168.56.201:6443 \
    --kubeconfig=cropy-user.kubeconfig
root@k8s-master1:~/k8s/11-rbac/cropy-user-ssl# cat cropy-user.kubeconfig
```

5. Set the client credentials

```
root@k8s-master1:~/k8s/11-rbac/cropy-user-ssl# ls *.pem
cropy-user-key.pem  cropy-user.pem
root@k8s-master1:~/k8s/11-rbac/cropy-user-ssl# cp *.pem /etc/kubernetes/ssl/
root@k8s-master1:~/k8s/11-rbac/cropy-user-ssl# kubectl config set-credentials cropy-user \
    --client-certificate=/etc/kubernetes/ssl/cropy-user.pem \
    --client-key=/etc/kubernetes/ssl/cropy-user-key.pem \
    --embed-certs=true \
    --kubeconfig=cropy-user.kubeconfig
```

6. Set the context parameters

```
root@k8s-master1:~/k8s/11-rbac/cropy-user-ssl# kubectl config set-context cluster1 \
    --cluster=cluster1 \
    --user=cropy-user \
    --namespace=cropy \
    --kubeconfig=cropy-user.kubeconfig
```

7. Switch to the context

```
root@k8s-master1:~/k8s/11-rbac/cropy-user-ssl# kubectl config use-context cluster1 --kubeconfig=cropy-user.kubeconfig
```

8. Get cropy-user's token

```
root@k8s-master1:~/k8s/11-rbac/cropy-user-ssl# kubectl describe secret $(kubectl get secrets -n cropy | grep cropy-user- | awk '{print $1}') -n cropy
```

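9. Write the token into cropy-user.kubeconfig

Add the token from step 8 to the `cropy-user` entry under `users` in cropy-user.kubeconfig, as a `token:` field next to `client-certificate-data` and `client-key-data`. Running `kubectl config set-credentials cropy-user --token=<token> --kubeconfig=cropy-user.kubeconfig` does the same without editing the file by hand.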
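Steps 4 to 9 together produce a kubeconfig with one cluster, one user, and one context. To illustrate the resulting structure, the hypothetical Python helper below assembles the same fields as a dictionary and serializes them as JSON, which kubectl also reads because kubeconfig files are parsed as YAML. The field names are the standard kubeconfig ones:

- `certificate-authority-data`
- `client-certificate-data`
- `client-key-data`
- `token`
- `current-context`

The `*-data` fields carry base64-encoded PEM, which is what `--embed-certs=true` stores.

```python
import base64
import json
from typing import Any, Dict


def _b64(data: bytes) -> str:
    """Encode PEM bytes the way --embed-certs=true stores them."""
    return base64.b64encode(data).decode("ascii")


def build_kubeconfig(server: str, ca_pem: bytes, user: str, cert_pem: bytes,
                     key_pem: bytes, token: str, namespace: str,
                     cluster: str = "cluster1") -> Dict[str, Any]:
    """Assemble the structure produced by the kubectl config set-* steps above."""
    return {
        "apiVersion": "v1",
        "kind": "Config",
        "clusters": [{"name": cluster, "cluster": {
            "server": server,
            "certificate-authority-data": _b64(ca_pem),
        }}],
        "users": [{"name": user, "user": {
            "client-certificate-data": _b64(cert_pem),
            "client-key-data": _b64(key_pem),
            "token": token,  # step 9: the service-account token
        }}],
        "contexts": [{"name": cluster, "context": {
            "cluster": cluster,
            "user": user,
            "namespace": namespace,
        }}],
        "current-context": cluster,
    }


def dump(config: Dict[str, Any]) -> str:
    """Serialize as JSON; kubectl accepts JSON kubeconfig files too."""
    return json.dumps(config, indent=2)
```

In practice, read `ca.pem`, `cropy-user.pem`, and `cropy-user-key.pem` from /etc/kubernetes/ssl, pass the token from step 8, and write `dump(...)` to cropy-user.kubeconfig.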

<a name="NMKLw"></a>

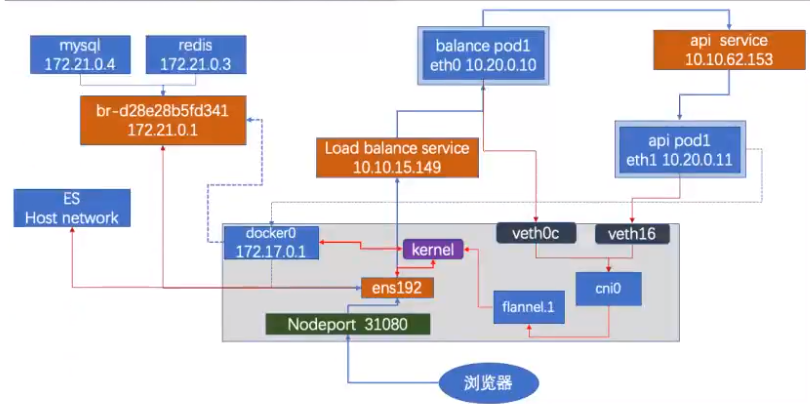

## Kubernetes networking

<a name="wy7GA"></a>

### Container networking

- Official documentation: [https://kubernetes.io/zh/docs/tasks/network/](https://kubernetes.io/zh/docs/tasks/network/)

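> Goals of container networking:
>
> 1. Communication between containers in the same pod (e.g. an LNMP stack)
> 2. Pod-to-pod communication, both on the same host and across hosts
> 3. Pod-to-Service communication (e.g. nginx proxying to tomcat through a Service)
> 4. Communication between pods and the world outside the cluster:
>    1. from outside to a pod
>    2. from a pod to outside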

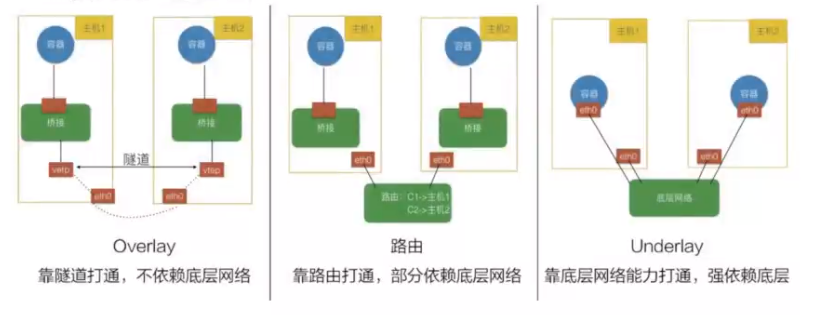

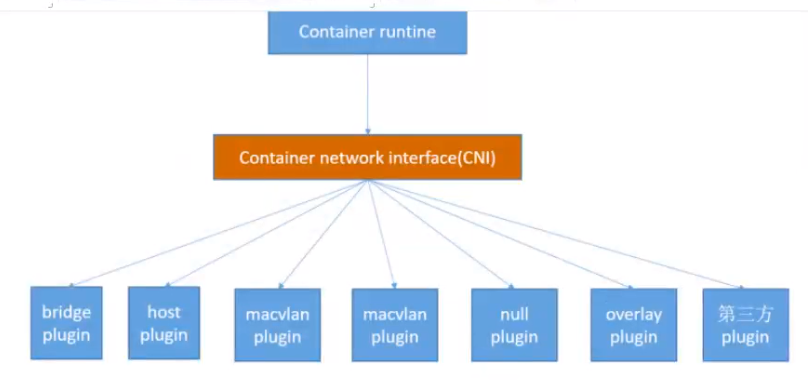

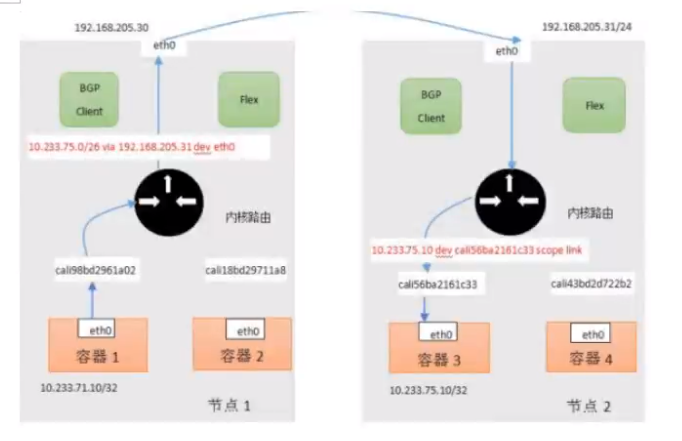

Three implementation modes of CNI network plugins

<a name="ouaNl"></a>

### Network communication modes

<a name="gRCKD"></a>

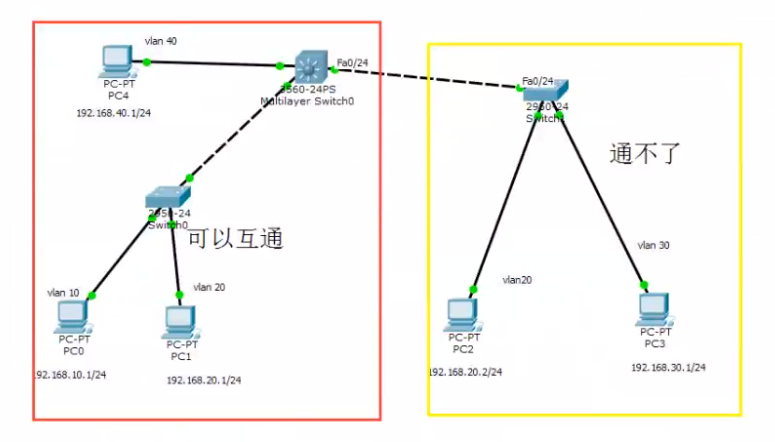

#### Layer 2 communication

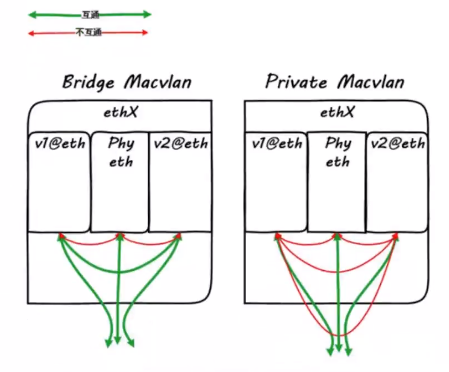

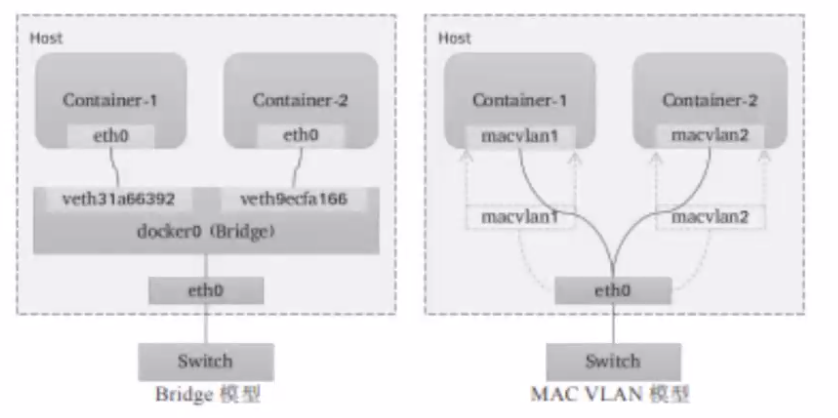

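Communication is based on the destination MAC address and cannot cross a LAN (broadcast domain). Frames are usually forwarded by a switch.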
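A switch's forwarding decision fits in a few lines. It learns which port each source MAC address arrived on, forwards by destination MAC, and floods when the destination is unknown or broadcast. This is a simplified model with no VLANs and no entry ageing, and the class name is hypothetical.

```python
from typing import Dict, List

BROADCAST = "ff:ff:ff:ff:ff:ff"


class LearningSwitch:
    def __init__(self, ports: List[int]):
        self.ports = ports
        self.mac_table: Dict[str, int] = {}  # MAC address -> port it was seen on

    def receive(self, in_port: int, src_mac: str, dst_mac: str) -> List[int]:
        """Return the ports the frame is sent out of."""
        self.mac_table[src_mac] = in_port  # learn (or refresh) the sender's port
        out = self.mac_table.get(dst_mac)
        if dst_mac == BROADCAST or out is None:
            # Unknown or broadcast destination: flood to every other port.
            return [p for p in self.ports if p != in_port]
        if out == in_port:
            return []  # destination is on the arrival segment: filter the frame
        return [out]
```

After host A on port 1 and host B on port 2 have each sent one frame, traffic between them goes only to the learned port instead of being flooded.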

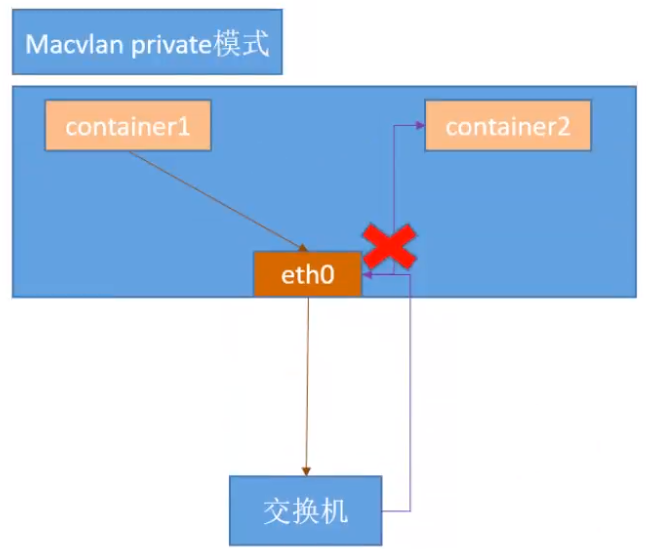

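- private mode

> Sub-interfaces of the same parent interface are isolated from each other and cannot communicate, not even when an external switch sends the traffic back (hairpin).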

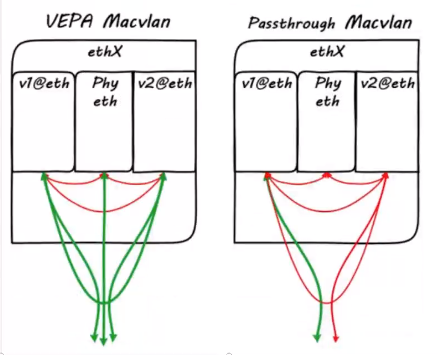

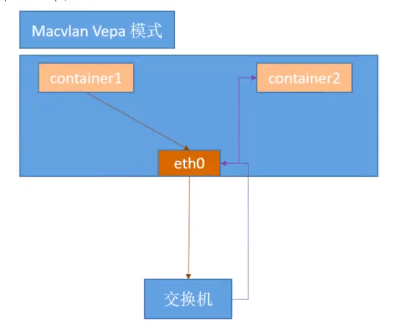

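- vepa mode

> Traffic between sub-interfaces must go out to an external switch that supports 802.1Qbg VEPA (hairpin mode), which forwards it back.

- bridge mode

> Emulates a Linux bridge: the MAC address of every sub-interface is known, so sub-interfaces can communicate with each other directly.

- passthru mode

> Only a single sub-interface may be attached to the parent interface.

- source mode

> Only accepts frames whose source MAC address is in a configured list of allowed addresses.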


<a name="ob55K"></a>

#### Layer 3 communication


<a name="EjkoA"></a>

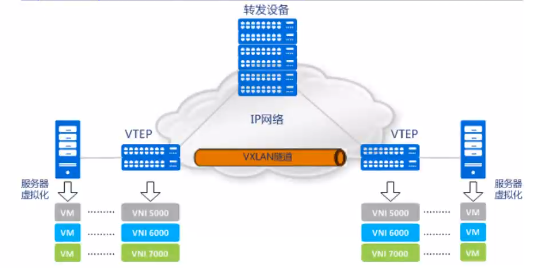

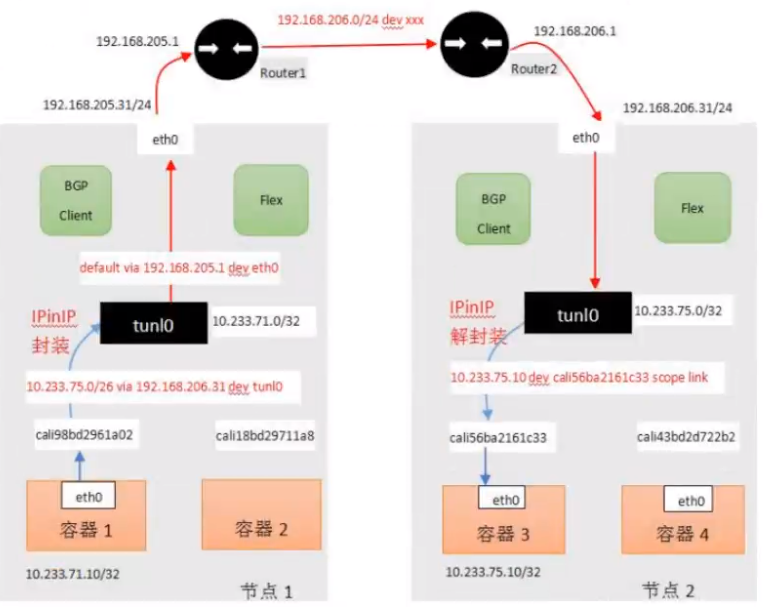

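### Overlay networks

An overlay network builds a new virtual network on top of the physical network so that the containers attached to it can communicate with each other.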

<a name="esuZa"></a>

#### How overlay networks are implemented

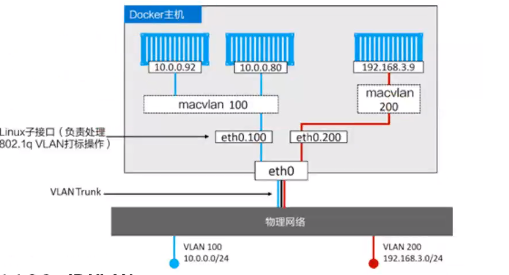

- VXLAN: extends VLAN; its 24-bit network identifier allows 2^24 (about 16.7 million) segments, compared with 4,096 VLAN IDs

- nvgre

- vni

<a name="EQw6w"></a>

#### Verifying the overlay network

- Capture packets on UDP port 8472, the VXLAN port flannel uses by default:

```
tcpdump udp port 8472
```

<a name="hLD8j"></a>

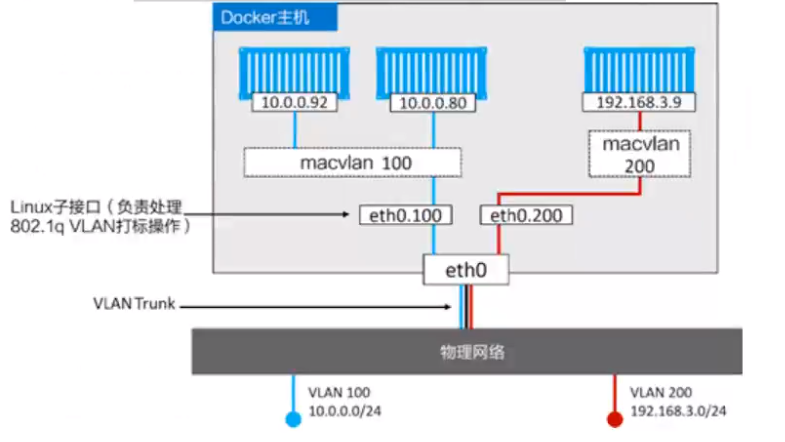

### Underlay networks

- Solutions

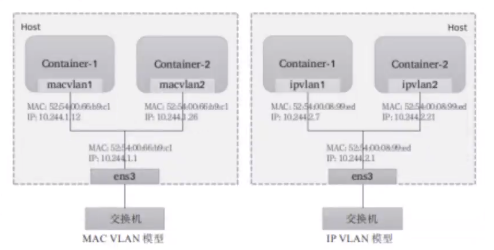

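- macvlan: creates multiple virtual network interfaces on one Ethernet interface. Each virtual interface has its own unique MAC address and is configured with its own sub-interface IP.

- ipvlan: similar to macvlan, except that all virtual interfaces share the physical interface's MAC address. As a result it does not violate switch security policies that block MAC spoofing, and it does not require promiscuous mode on the physical interface.

IPvlan has two modes, L2 and L3. In L2 mode it works much like macvlan, acting as a bridge or switch. In L3 mode the sub-interfaces have different IP addresses but share the host's MAC address. macvlan and ipvlan cannot be used on the same physical interface at the same time, and macvlan is the more common choice. The ipvlan driver was added in Linux kernel 3.19, and Docker's ipvlan network driver requires kernel 4.2 or later.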

<a name="gZhPB"></a>

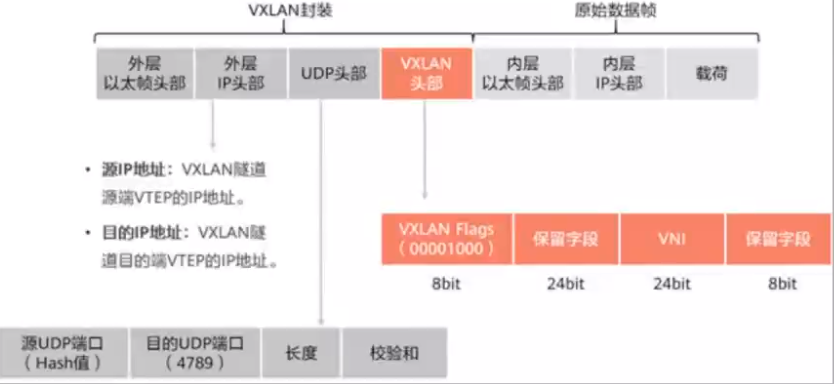

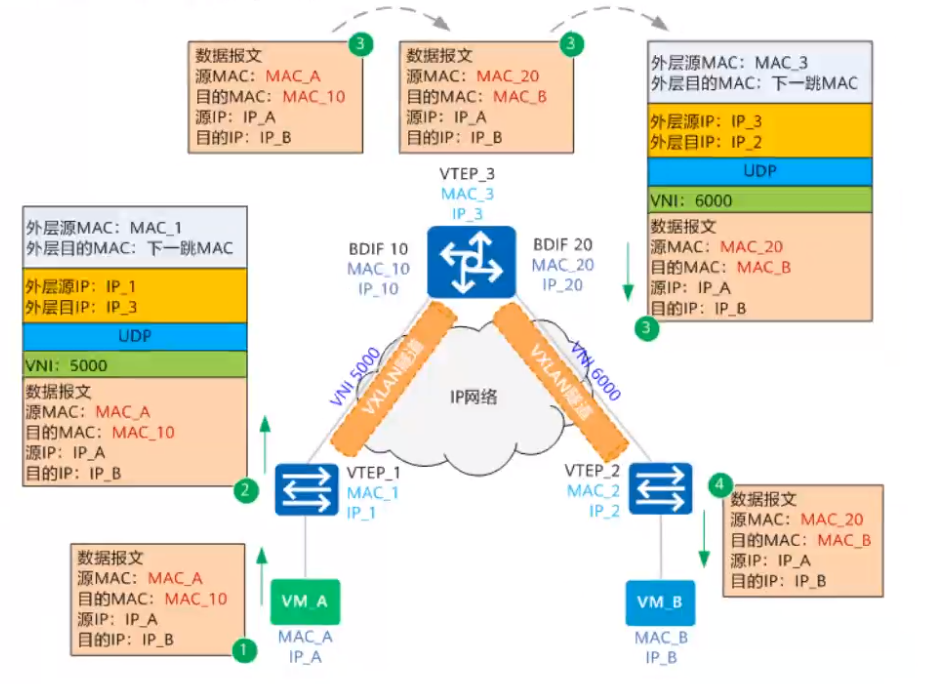

### VXLAN communication process

<a name="NYJZA"></a>

#### VXLAN communication between virtual machines

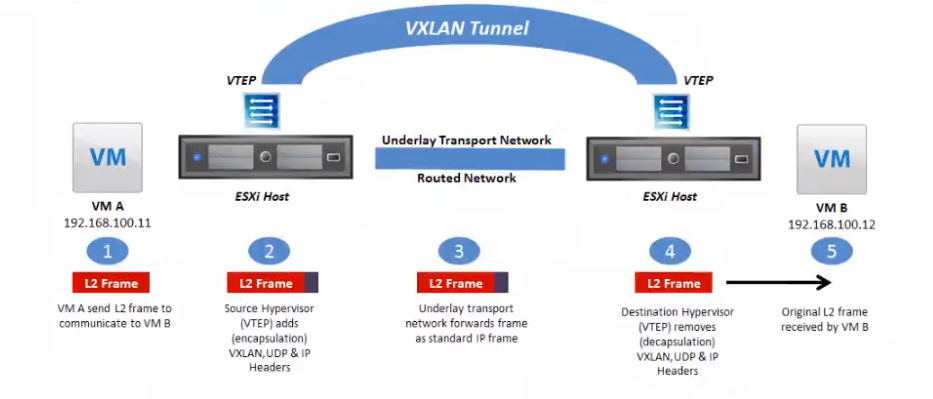

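1. VM A sends an L2 frame to communicate with VM B.

2. The source host's VTEP encapsulates the frame, adding VXLAN, UDP, and IP headers.

3. Network-layer devices forward the encapsulated packet across the L3 network like any other IP packet.

4. The destination host's VTEP decapsulates the packet, removing the VXLAN, UDP, and IP headers.

5. The original L2 frame is delivered to the destination VM.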
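Steps 2 and 4 can be illustrated with the 8-byte VXLAN header from RFC 7348. The header consists of:

- a flags byte with the I bit (0x08) set;
- 24 reserved bits;
- the 24-bit VNI, which is where the 2^24 limit comes from;
- 8 more reserved bits.

The Python sketch below only builds and strips that header around an inner Ethernet frame. When such a payload is sent through an ordinary UDP socket (to port 4789, or 8472 as flannel uses by default), the OS adds the outer UDP/IP headers. A real VTEP such as the kernel `vxlan` device does all of this itself. The function names are hypothetical.

```python
import struct
from typing import Tuple

VXLAN_FLAG_I = 0x08   # "VNI is valid" flag (RFC 7348)
MAX_VNI = 2**24 - 1   # 24-bit VNI


def vxlan_encap(vni: int, inner_frame: bytes) -> bytes:
    """Source VTEP: prepend the 8-byte VXLAN header to an inner L2 frame."""
    if not 0 <= vni <= MAX_VNI:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags(8) + reserved(24); word 2: VNI(24) + reserved(8).
    header = struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)
    return header + inner_frame


def vxlan_decap(payload: bytes) -> Tuple[int, bytes]:
    """Destination VTEP: strip the VXLAN header and return (vni, inner_frame)."""
    if len(payload) < 8:
        raise ValueError("payload shorter than a VXLAN header")
    flags_word, vni_word = struct.unpack("!II", payload[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_I:
        raise ValueError("I flag not set: no valid VNI")
    return vni_word >> 8, payload[8:]
```

The round trip `vxlan_decap(vxlan_encap(vni, frame))` gives back the original VNI and frame, mirroring steps 2 and 4 above.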

<a name="by8mG"></a>

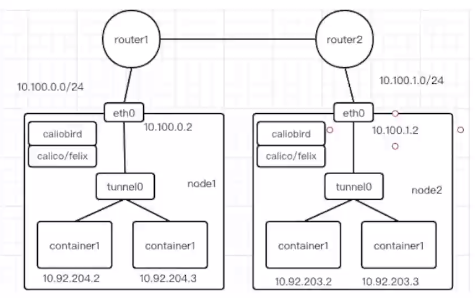

#### Communication flow with the flannel network plugin

<a name="uktfp"></a>

#### VXLAN communication flow

<a name="Cp6to"></a>

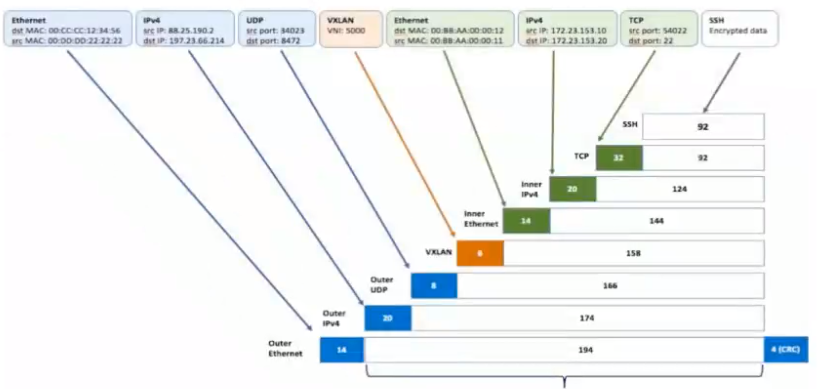

#### VXLAN packet format

<a name="gZoVJ"></a>

#### Summary of Docker cross-host networking options

1. Check which network plugins Docker supports

```
root@k8s-deploy:~# docker info | grep Network
Network: bridge host ipvlan macvlan null overlay
```

- bridge network: docker0 is the default bridge network

- Docker network drivers

- overlay

- underlay

<a name="X051N"></a>

### IPIP and BGP in Calico

<a name="vIzUJ"></a>

#### BGP

<a name="WCiTV"></a>

#### ipip

<a name="WfL9v"></a>

### Docker multi-host networking with macvlan

- The four Docker macvlan modes

- Private mode

```
root@k8s-deploy:~# docker network create -d macvlan --subnet=172.31.0.0/21 --gateway=172.31.7.254 \
    -o parent=eth0 -o macvlan_mode=private cropy_macvlan_private
root@k8s-deploy:~# docker run -it --rm --net=cropy_macvlan_private --name=c1 --ip=172.31.5.222 centos:7.7.1908 bash
[root@a5919f129df5 /]# ping 172.31.5.223
PING 172.31.5.223 (172.31.5.223) 56(84) bytes of data.
From 172.31.5.222 icmp_seq=1 Destination Host Unreachable
From 172.31.5.222 icmp_seq=2 Destination Host Unreachable
From 172.31.5.222 icmp_seq=3 Destination Host Unreachable
```

In another terminal, run:

```
root@k8s-deploy:~# docker run -it --rm --net=cropy_macvlan_private --name=c2 --ip=172.31.5.223 centos:7.7.1908 bash
[root@e7ba702bdf0e /]# ping 172.31.5.222
PING 172.31.5.222 (172.31.5.222) 56(84) bytes of data.
From 172.31.5.223 icmp_seq=1 Destination Host Unreachable
From 172.31.5.223 icmp_seq=2 Destination Host Unreachable
From 172.31.5.223 icmp_seq=3 Destination Host Unreachable
```

```
root@k8s-deploy:~# docker network inspect cropy_macvlan_private
[
    {
        "Name": "cropy_macvlan_private",
        "Id": "b5a8dc707aeb712f8c52d65cfff109d3d28fd6c957eac8b1d611d7071641f801",
        "Created": "2022-02-03T21:00:31.36225123+08:00",
        "Scope": "local",
        "Driver": "macvlan",
        "EnableIPv6": false,
        "IPAM": {
            "Driver": "default",
            "Options": {},
            "Config": [
                {
                    "Subnet": "172.31.0.0/21",
                    "Gateway": "172.31.7.254"
                }
            ]
        },
        "Internal": false,
        "Attachable": false,
        "Ingress": false,
        "ConfigFrom": {
            "Network": ""
        },
        "ConfigOnly": false,
        "Containers": {},
        "Options": {
            "macvlan_mode": "private",
            "parent": "eth0"
        },
        "Labels": {}
    }
]
```

- VEPA: containers in the macvlan network cannot directly receive packets from containers on the same physical NIC. They can communicate only if the switch port reflects the traffic back (hairpin).

```
root@k8s-deploy:~# docker network create -d macvlan --subnet=172.31.6.0/21 --gateway=172.31.7.254 \
    -o parent=eth0 -o macvlan_mode=vepa cropy_macvlan_vepa
root@k8s-deploy:~# docker run -it --rm --net=cropy_macvlan_vepa --name=c1 --ip=172.31.6.22 centos:7.7.1908 bash
[root@ab97a6272145 /]# ping 172.31.6.23
PING 172.31.6.23 (172.31.6.23) 56(84) bytes of data.
From 172.31.6.22 icmp_seq=1 Destination Host Unreachable
From 172.31.6.22 icmp_seq=2 Destination Host Unreachab
```

In another terminal:

```
root@k8s-deploy:~# docker run -it --rm --net=cropy_macvlan_vepa --name=c2 --ip=172.31.6.23 centos:7.7.1908 bash
[root@1ecd2283206d /]# ping 172.31.6.22
PING 172.31.6.22 (172.31.6.22) 56(84) bytes of data.
From 172.31.6.23 icmp_seq=1 Destination Host Unreachable
From 172.31.6.23 icmp_seq=2 Destination Host Unreachable
From 172.31.6.23 icmp_seq=3 Destination Host Unreachable
From 172.31.6.23 icmp_seq=4 Destination Host Unreachable
```

- Passthru: only one container can be attached. Once one container is running, starting another one fails with an error.

```
docker network create -d macvlan --subnet=172.31.6.0/21 --gateway=172.31.7.254 \
    -o parent=eth0 -o macvlan_mode=passthru cropy_macvlan_passthru
docker run -it --rm --net=cropy_macvlan_passthru --name=c1 --ip=172.31.6.122 centos:7.7.1908 bash
docker run -it --rm --net=cropy_macvlan_passthru --name=c2 --ip=172.31.6.123 centos:7.7.1908 bash
```

- Bridge (the default mode): test with two Docker hosts. The containers can communicate.

```
docker network create -d macvlan --subnet=172.31.6.0/21 --gateway=172.31.7.254 \
    -o parent=eth0 -o macvlan_mode=bridge cropy_macvlan_bridge
docker run -it --rm --net=cropy_macvlan_bridge --name=c1 --ip=172.31.6.22 centos:7.7.1908 bash   # on docker1
docker run -it --rm --net=cropy_macvlan_bridge --name=c2 --ip=172.31.6.23 centos:7.7.1908 bash   # on docker2
```
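Before running these examples, check two things: every `--ip` and the gateway must lie inside `--subnet`, and the subnet should be written on a network boundary. The hypothetical Python helper below does this with the standard `ipaddress` module.

`172.31.0.0/21` spans 172.31.0.0 to 172.31.7.255, so the gateway `172.31.7.254` and all the container addresses above fit. `172.31.6.0/21`, by contrast, has host bits set, and only normalizes to `172.31.0.0/21` when parsed non-strictly.

```python
import ipaddress
from typing import List


def check_macvlan_plan(subnet: str, gateway: str, ips: List[str]) -> List[str]:
    """Return problems with a `docker network create` + `docker run --ip` address plan."""
    problems: List[str] = []
    net = ipaddress.ip_network(subnet, strict=False)  # tolerate host bits, then report them
    if str(net) != subnet:
        problems.append(f"subnet {subnet} has host bits set; network is {net}")
    for addr in [gateway, *ips]:
        if ipaddress.ip_address(addr) not in net:
            problems.append(f"{addr} is outside {net}")
    if len(set(ips)) != len(ips):
        problems.append("duplicate container IPs")
    if gateway in ips:
        problems.append("a container IP equals the gateway")
    return problems
```

An empty result means the plan is consistent. The helper does not predict how a particular Docker version treats a subnet with host bits set.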

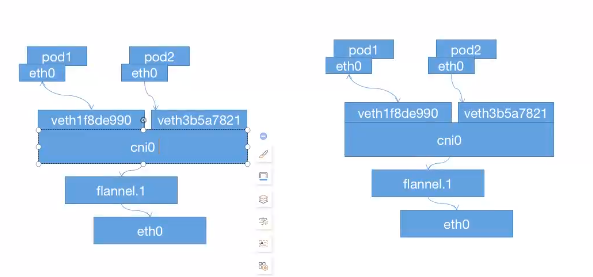

Flannel