# Docker Basics

## Docker origins

- GitHub: https://github.com/moby/moby

- In 2017 Docker was split into an Enterprise Edition (EE) and a Community Edition (CE)

Philosophy (Build, Ship, Run):

- Build: images, Harbor
- Ship: Harbor
- Run: ports, the runc runtime

## Docker components

Official documentation: https://docs.docker.com/get-started/overview/

- client
- docker daemon
- containers
- images

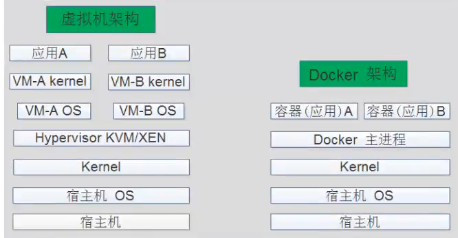

## Docker vs. virtual machines

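## Docker storage drivers

- In common use today: overlay and overlay2 (overlay2 is the recommended driver)
- Caution: after the storage driver is changed and the Docker service is restarted, the existing images and containers are no longer available. Change it with great care; it is best not to change it at all.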

## Installing and using Docker

1. Install Docker: https://docs.docker.com/engine/install/ubuntu/

```bash
root@docker01:~# apt-get remove docker docker-engine docker.io containerd runc   # remove old versions
root@docker01:~# apt-get update
root@docker01:~# apt-get install apt-transport-https ca-certificates curl gnupg lsb-release -y   # prerequisites (curl and gnupg are needed below)
root@docker01:~# curl -fsSL https://download.docker.com/linux/ubuntu/gpg | gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg
root@docker01:~# echo "deb [arch=amd64 signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" | tee /etc/apt/sources.list.d/docker.list > /dev/null
root@docker01:~# apt update
root@docker01:~# apt install docker-ce=5:19.03.15~3-0~ubuntu-focal docker-ce-cli=5:19.03.15~3-0~ubuntu-focal containerd.io=1.3.9-1 -y   # pin Docker 19.03.15
```

2. Change data-root (the directory where Docker stores images, containers, and volumes; the default is /var/lib/docker)

```bash
root@docker01:~# mkdir /etc/docker/
root@docker01:~# cd /etc/docker/
root@docker01:/etc/docker# cat daemon.json
{
  "registry-mirrors": ["https://0b8hhs68.mirror.aliyuncs.com"],
  "storage-driver": "overlay2",
  "data-root": "/data/docker"
}
root@docker01:/etc/docker# systemctl restart docker
```

## Image management

Image naming convention: registry domain/repository/image name:tag (for example, harbor.cropy.cn/devops/nginx:v1.0)
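To make the naming rule concrete, here is a minimal shell sketch that splits a reference such as harbor.cropy.cn/devops/nginx:v1.0 into its parts. The function name split_image_ref is hypothetical, and it assumes the three-part registry/project/name form used in this note; it does not handle digests (@sha256:...) or Docker Hub short names such as nginx, and it defaults a missing tag to latest, as docker pull does.

```bash
# Split registry/project/name[:tag] into its parts (hypothetical helper).
# Assumes the three-part form; digests and Docker Hub short names are not handled.
split_image_ref() {
  local ref rest tag registry project name
  ref=$1
  case ${ref##*:} in
    # No colon at all, or the last colon belongs to a registry port (host:5000/...).
    "$ref" | */*) tag=latest; rest=$ref ;;
    *) tag=${ref##*:}; rest=${ref%:*} ;;
  esac
  registry=${rest%%/*}   # first path component
  name=${rest##*/}       # last path component
  project=${rest#*/}     # drop the registry ...
  project=${project%/*}  # ... and the image name
  echo "registry=$registry project=$project name=$name tag=$tag"
}

split_image_ref harbor.cropy.cn/devops/nginx:v1.0
# prints: registry=harbor.cropy.cn project=devops name=nginx tag=v1.0
```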

Search: hub.docker.com, or from the command line:

```bash
docker search nginx
```

Pull images:

```bash
root@docker01:~# docker pull nginx:1.18.0-alpine
root@docker01:~# docker pull alpine
root@docker01:~# docker pull mysql:5.6.51
```

Export an image:

```bash
root@docker01:/etc/docker# docker save alpine -o alpine.tar
```

Import an image:

```bash
root@docker01:/etc/docker# docker load -i alpine.tar
```

List local images:

```bash
root@docker01:~# docker images
```

Delete an image (deleting by image ID is recommended):

```bash
root@docker01:~# docker images
REPOSITORY   TAG             IMAGE ID       CREATED        SIZE
mysql        5.6.51          e05271ec102f   2 days ago     303MB
alpine       latest          14119a10abf4   9 days ago     5.6MB
nginx        1.18.0-alpine   684dbf9f01f3   4 months ago   21.9MB
root@docker01:~# docker rmi e05271ec102f
```

Rename an image (docker tag adds a new name; the old tag remains and both point to the same image ID):

```bash
root@docker01:~# docker images
REPOSITORY   TAG             IMAGE ID       CREATED        SIZE
mysql        5.6.51          e05271ec102f   2 days ago     303MB
alpine       latest          14119a10abf4   9 days ago     5.6MB
nginx        1.18.0-alpine   684dbf9f01f3   4 months ago   21.9MB
root@docker01:~# docker tag nginx:1.18.0-alpine nginx:dev
root@docker01:~# docker images
REPOSITORY   TAG             IMAGE ID       CREATED        SIZE
mysql        5.6.51          e05271ec102f   2 days ago     303MB
alpine       latest          14119a10abf4   9 days ago     5.6MB
nginx        1.18.0-alpine   684dbf9f01f3   4 months ago   21.9MB
nginx        dev             684dbf9f01f3   4 months ago   21.9MB
```

## Containers

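Run a container (docker run):

```bash
# The three examples reuse the name test-nginx; remove the previous container before running the next one.
root@docker01:~# docker run -it --name test-nginx -p 9800:80 684dbf9f01f3        # run in the foreground
root@docker01:~# docker run -it --rm --name test-nginx -p 9800:80 684dbf9f01f3   # remove the container once it stops
root@docker01:~# docker run -itd --name test-nginx -p 9800:80 684dbf9f01f3       # run in the background
```

List containers:

```bash
docker ps                          # running containers
docker ps -a                       # all containers
docker ps -a -q                    # IDs of all containers
docker ps -a -q -f status=exited   # IDs of exited containers
```

Port mapping (-p host-port:container-port):

```bash
docker run -itd -p 8080:9999/udp nginx      # map a UDP port
docker run -itd -p 80:80 -p 443:443 nginx   # map several ports
```

Enter a container:

```bash
root@docker01:~# docker run --name nginx -itd nginx:dev
root@docker01:~# docker exec -it nginx sh   # open an sh shell in the nginx container
```

Start, stop, and remove containers:

```bash
docker stop nginx
docker start nginx
docker rm $(docker ps -a -q -f status=exited)   # remove all exited containers
docker rm -f nginx                              # force-remove a container, even if it is running
```

## Namespaces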

内核级别的名称空间隔离。具体如下:

MNT namespace: 提供磁盘挂载点和文件系统的隔离能力

每个容器都要有独立的根文件系统,有独立的用户空间,一是现在容器启动服务并且使用容器的运行环境,即一个宿主机是ubuntu的服务器,可以启动一个centos的运行环境启动一个nginx服务,这个nginx服务运行时使用的环境是centos系统目录的运行环境。但是在容器里面是不能访问宿主机的资源,宿主机是使用了chroot技术把容器锁定到一个指定的目录里面

IPC namespace:提供进程间通信的隔离能力

一个容器内的进程间通信,允许一个容器内运行的不同进程的(内存,缓存等)数据空间,但是不能夸容器直接访问其他容器的数据

UTS namespace: 主机名隔离

用于标识系统内核版本,底层体系结构等信息,其中包含了主机名和domainname,它使得一个容器拥有属于自己的名称标识,这个名称标识独立于宿主机系统和宿主机上的其他容器

PID namespace: 进程隔离

linux系统的PID为1的进程是其他所有进程的父进程,多个容器的启动是通过PID进行隔离

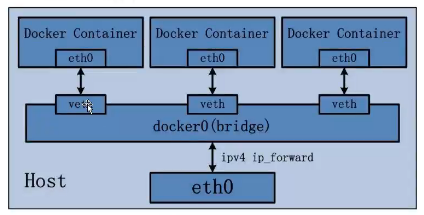

NET namespace: 网络隔离

docker 使用network namespace启动一个vehtX的接口,这样的话容器将拥有属于自己的桥接IP地址,通常是docker0做为网桥进行数据的对内对外转发

- USER namespace: 用户隔离

将容器间相同的用户ID,组ID进行隔离,使得容器能够正常在同一台宿主机上运行,且互不影响
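Every process's namespaces are visible as links under /proc/PID/ns/, and two processes share a namespace exactly when their links point at the same inode (for example net:[4026531993]). The hypothetical helper below compares them; reading another process's links generally requires root, and the container PID in the usage comment comes from docker inspect.

```bash
# Succeed if two processes share the namespace of the given type
# (mnt, ipc, uts, pid, net, user); return 2 if a link cannot be read.
same_ns() {
  local a b
  a=$(readlink "/proc/$1/ns/$3") || return 2
  b=$(readlink "/proc/$2/ns/$3") || return 2
  [ "$a" = "$b" ]
}

# Usage (as root): compare the host's PID 1 with a container's main process.
# pid=$(docker inspect -f '{{.State.Pid}}' nginx)
# same_ns 1 "$pid" net && echo "shared" || echo "isolated"
```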

## Cgroups

### Checking cgroup support in the kernel

The newer the kernel, the more complete the set of resource controls it provides.

```bash
root@docker01:~# grep CGROUP /boot/config-5.4.0-81-generic
CONFIG_CGROUPS=y
CONFIG_BLK_CGROUP=y
CONFIG_CGROUP_WRITEBACK=y
CONFIG_CGROUP_SCHED=y
CONFIG_CGROUP_PIDS=y
CONFIG_CGROUP_RDMA=y
CONFIG_CGROUP_FREEZER=y
CONFIG_CGROUP_HUGETLB=y
CONFIG_CGROUP_DEVICE=y
CONFIG_CGROUP_CPUACCT=y
CONFIG_CGROUP_PERF=y
CONFIG_CGROUP_BPF=y
# CONFIG_CGROUP_DEBUG is not set
CONFIG_SOCK_CGROUP_DATA=y
# CONFIG_BLK_CGROUP_IOLATENCY is not set
CONFIG_BLK_CGROUP_IOCOST=y
# CONFIG_BFQ_CGROUP_DEBUG is not set
CONFIG_NETFILTER_XT_MATCH_CGROUP=m
CONFIG_NET_CLS_CGROUP=m
CONFIG_CGROUP_NET_PRIO=y
CONFIG_CGROUP_NET_CLASSID=y
```
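The check above can be wrapped in a small helper that prints only the cgroup options that are actually enabled (=y built in, =m built as a module) and skips the "is not set" lines. This is a sketch: the function name is hypothetical, and the default path assumes a distribution such as Ubuntu that installs the kernel config under /boot.

```bash
# Print cgroup-related kernel options that are enabled (=y) or built as modules (=m).
# Defaults to the config of the running kernel; pass another file as $1.
cgroup_options() {
  grep -E '^CONFIG_[A-Z0-9_]*CGROUP[A-Z0-9_]*=[ym]$' "${1:-/boot/config-$(uname -r)}"
}

# Usage: cgroup_options | wc -l   # number of cgroup options this kernel enables
```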

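### Cgroup subsystems

- blkio: limits block device I/O
- cpu: uses the scheduler to give cgroup tasks access to the CPU
- cpuacct: generates reports on the CPU resources used by cgroup tasks
- cpuset: on multi-core systems, assigns dedicated CPUs and memory nodes to cgroup tasks
- devices: allows or denies cgroup tasks access to devices
- freezer: suspends and resumes cgroup tasks
- memory: sets a memory limit for each cgroup and generates memory usage reports
- net_cls: tags network packets with a class identifier so they can be attributed to a cgroup
- ns: the namespace subsystem (a legacy controller that has been removed from current kernels)
- perf_event: adds per-cgroup monitoring, so perf can track all threads belonging to a specific cgroup as well as threads running on a specific CPU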

### Viewing the system's cgroups

```bash
root@docker01:~# ll /sys/fs/cgroup/
total 0
drwxr-xr-x 15 root root 380 Sep  5 17:09 ./
drwxr-xr-x 10 root root   0 Sep  5 17:09 ../
dr-xr-xr-x  6 root root   0 Sep  5 17:09 blkio/
lrwxrwxrwx  1 root root  11 Sep  5 17:09 cpu -> cpu,cpuacct/
lrwxrwxrwx  1 root root  11 Sep  5 17:09 cpuacct -> cpu,cpuacct/
dr-xr-xr-x  6 root root   0 Sep  5 17:09 cpu,cpuacct/
dr-xr-xr-x  3 root root   0 Sep  5 17:09 cpuset/
dr-xr-xr-x  6 root root   0 Sep  5 17:09 devices/
dr-xr-xr-x  4 root root   0 Sep  5 17:09 freezer/
dr-xr-xr-x  3 root root   0 Sep  5 17:09 hugetlb/
dr-xr-xr-x  6 root root   0 Sep  5 17:09 memory/
lrwxrwxrwx  1 root root  16 Sep  5 17:09 net_cls -> net_cls,net_prio/
dr-xr-xr-x  3 root root   0 Sep  5 17:09 net_cls,net_prio/
lrwxrwxrwx  1 root root  16 Sep  5 17:09 net_prio -> net_cls,net_prio/
dr-xr-xr-x  3 root root   0 Sep  5 17:09 perf_event/
dr-xr-xr-x  6 root root   0 Sep  5 17:09 pids/
dr-xr-xr-x  2 root root   0 Sep  5 17:09 rdma/
dr-xr-xr-x  6 root root   0 Sep  5 17:09 systemd/
dr-xr-xr-x  5 root root   0 Sep  5 17:09 unified/
```
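The listing above is the cgroup v1 layout (one directory per controller, plus the unified directory of a hybrid setup), which is why the paths later in this note look like /sys/fs/cgroup/memory/docker/CONTAINER-ID/. Hosts that boot with the unified cgroup v2 hierarchy (for example Ubuntu 22.04 by default) have a single tree instead, and Docker's files typically live under /sys/fs/cgroup/system.slice/docker-CONTAINER-ID.scope/ with names such as memory.max. One quick way to tell the versions apart is the filesystem type mounted at /sys/fs/cgroup; the helper below is a hypothetical sketch of that check (stat -f -c is the GNU coreutils form).

```bash
# Report the cgroup version from the filesystem type at /sys/fs/cgroup:
# tmpfs -> v1 (or hybrid), cgroup2fs -> unified v2.
# A filesystem type can be passed as $1 instead of reading it from the host.
cgroup_version() {
  case "${1:-$(stat -fc %T /sys/fs/cgroup/)}" in
    cgroup2fs) echo v2 ;;
    tmpfs) echo v1 ;;
    *) echo unknown ;;
  esac
}

cgroup_version   # prints v1, v2 or unknown for this host
```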

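With chroot, namespaces, and cgroups in place, the basic runtime environment for containers exists, but a tool is still needed to create and manage the containers.

Early on, that management tool was LXC; today it is Docker.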

资源限制

不添加容器运行时资源限制,默认会使用宿主机的全部资源

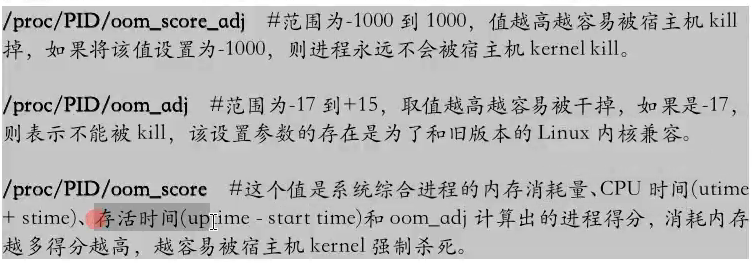

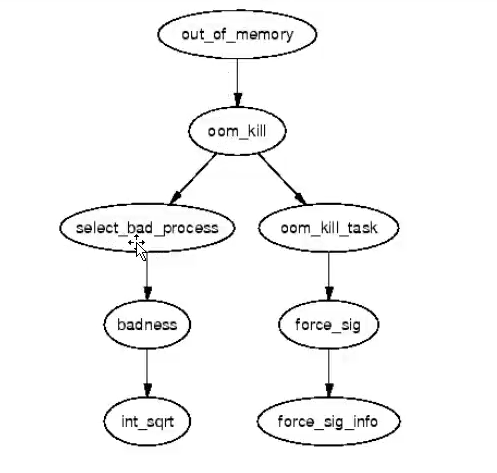

- OOM 优先级机制: linux会为每个进程计算一个分数,最终这个机制会将分数最高的进程kill
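The score is exposed per process as /proc/PID/oom_score (and can be biased through oom_score_adj; docker run offers --oom-score-adj for this). The sketch below lists the processes the OOM killer would currently consider first; the function name oom_candidates is hypothetical.

```bash
# List the N processes (default 5) with the highest oom_score as "score pid command".
# Processes that exit while being read are skipped.
oom_candidates() {
  for dir in /proc/[0-9]*; do
    score=$(cat "$dir/oom_score" 2>/dev/null) || continue
    comm=$(cat "$dir/comm" 2>/dev/null) || continue
    echo "$score ${dir#/proc/} $comm"
  done | sort -rn | head -n "${1:-5}"
}

oom_candidates 3
```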

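### Container memory limits

Official documentation: https://docs.docker.com/config/containers/resource_constraints/

Memory limit options:

- -m, --memory: the maximum amount of memory the container can use
- --memory-swap: the total amount of memory plus swap the container can use (only takes effect together with -m)
- --memory-swappiness: how readily the container's memory is swapped out, from 0 to 100; 0 means avoid swapping whenever possible
- --kernel-memory: the maximum amount of kernel memory (deprecated since Docker 20.10)
- --memory-reservation: a soft limit lower than -m, enforced when the host is short on memory
- --oom-kill-disable: by default, the kernel kills processes in a container when an OOM occurs; this option turns that off. Only use it together with -m, because without a memory limit the host can run out of memory and the kernel may have to kill host processes instead.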
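Docker reads the size given to -m with binary units, so -m 128m becomes 128 × 1024 × 1024 = 134217728 bytes in memory.limit_in_bytes, and the stress test below (two workers of 256M each) asks for four times that. The hypothetical helper below performs the same conversion for integer sizes, so a limit can be compared with the value read from the cgroup file; fractional sizes such as 1.5g are not handled.

```bash
# Convert a Docker-style size (e.g. 512, 100k, 128m, 2g) into bytes using 1k = 1024.
to_bytes() {
  local num
  num=${1%[bBkKmMgG]}   # strip a single unit suffix, if any
  case "$1" in
    *[kK]) echo $((num * 1024)) ;;
    *[mM]) echo $((num * 1024 * 1024)) ;;
    *[gG]) echo $((num * 1024 * 1024 * 1024)) ;;
    *) echo "$num" ;;
  esac
}

to_bytes 128m   # 134217728
```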

#### Verifying memory limits

Pull and run a container stress-testing image:

```bash
root@docker01:~# docker pull lorel/docker-stress-ng
root@docker01:~# docker run -it lorel/docker-stress-ng --help   # show the help
```

Stress test:

```bash
# 2 VM workers allocating 256M each (512M in total), while the container is limited to 128M
root@docker01:~# docker run -it --rm -m 128m --name container1 lorel/docker-stress-ng --vm 2 --vm-bytes 256M
root@docker01:~# docker stats   # show resource usage
root@docker01:~# cat /sys/fs/cgroup/memory/docker/<container-ID>/memory.limit_in_bytes
# adjust the memory limit by hand
root@docker01:~# echo "24567890" > /sys/fs/cgroup/memory/docker/d370ddbfb7ac295cf974dc87cc7bd4d495e34f0adffe79dd3a54924ab1db84c5/memory.limit_in_bytes
# add a 100m soft limit
root@docker01:~# docker run -it --rm -m 128m --memory-reservation 100m --name container1 lorel/docker-stress-ng --vm 2 --vm-bytes 256M
```

Disable the OOM killer:

```bash
root@docker01:~# docker run -it --rm -m 128m --oom-kill-disable --name container1 lorel/docker-stress-ng --vm 2 --vm-bytes 256M
root@docker01:~# cat /sys/fs/cgroup/memory/docker/<container-ID>/memory.oom_control
```

Swap settings (turning swap off is not recommended):

```bash
# memory plus swap capped at 512m, i.e. up to 384m of swap on top of the 128m memory limit
root@docker01:~# docker run -it --rm -m 128m --memory-swap 512m --name container1 lorel/docker-stress-ng --vm 2 --vm-bytes 256M
root@docker01:~# cat /sys/fs/cgroup/memory/docker/<container-ID>/
```

### Container CPU limits

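Process execution and scheduling priorities on the CPU:

- Real-time priorities: 0 to 99
- Non-real-time priorities: nice values -20 to 19, which correspond to process priorities 100 to 139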
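The mapping in the second bullet is a fixed offset: the kernel's static priority for a normal task is 120 + nice, so nice -20 becomes 100 and nice 19 becomes 139, and lower numbers are scheduled first. The helper below only illustrates that arithmetic (the name nice_to_prio is hypothetical); tools such as ps report priorities on different scales.

```bash
# Map a nice value (-20..19) to the kernel's static priority (100..139).
# Real-time priorities (0..99) are set separately (e.g. with chrt) and are not covered.
nice_to_prio() {
  if [ "$1" -lt -20 ] || [ "$1" -gt 19 ]; then
    echo "nice value must be between -20 and 19" >&2
    return 1
  fi
  echo $((120 + $1))
}

nice_to_prio 0   # 120
```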

CPU limit examples:
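The --cpus flag is implemented with the CFS bandwidth controller: Docker keeps the default period of 100000 microseconds in cpu.cfs_period_us and writes cpus × period to cpu.cfs_quota_us, so --cpus=1 should show 100000 in the example that follows, and --cpus=1.5 would show 150000. The conversion can be sketched as follows (the helper cpus_to_quota is hypothetical and assumes the default period unless one is passed).

```bash
# Convert a --cpus value into the CFS quota written to cpu.cfs_quota_us.
# $2 is the period in microseconds; 100000 is Docker's default cpu.cfs_period_us.
cpus_to_quota() {
  awk -v cpus="$1" -v period="${2:-100000}" 'BEGIN { printf "%d\n", cpus * period + 0.5 }'
}

cpus_to_quota 1.5   # 150000
```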

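```bash
root@docker01:~# docker run -it --rm --cpus=1 --name container1 lorel/docker-stress-ng --vm 2   # limit to one CPU's worth of time (not a specific core)
root@docker01:~# cat /sys/fs/cgroup/cpu/docker/<container-ID>/cpu.cfs_quota_us               # show the CPU quota
```

Pin a container to specific CPU cores: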

```bash
root@docker01:~# docker run -it --rm --cpus=2 --cpuset-cpus 0,1 --name container1 lorel/docker-stress-ng --vm 2   # easier to observe on a multi-core host
root@docker01:~# cat /sys/fs/cgroup/cpuset/docker/<container-ID>/cpuset.cpus   # show the pinning result
```

Divide CPU time with --cpu-shares:
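--cpu-shares is a relative weight (the default is 1024) rather than a hard cap: it only matters when containers compete for the same CPUs, and then each one gets its shares divided by the total shares of the contended CPU time. In the test that follows, 1000 and 2000 shares should split the CPU roughly one third to two thirds. A sketch of that calculation, using a hypothetical helper:

```bash
# Print each container's expected percentage of contended CPU time,
# given the --cpu-shares values of all CPU-bound containers.
share_percent() {
  printf '%s\n' "$@" | awk '
    { share[NR] = $1; total += $1 }
    END { for (i = 1; i <= NR; i++) printf "%.1f\n", share[i] * 100 / total }'
}

share_percent 1000 2000   # 33.3 and 66.7
```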

```bash
root@docker01:~# docker run -it --rm --cpu-shares 1000 --name container1 lorel/docker-stress-ng --vm 4 --cpu 4
root@docker01:~# docker run -it --rm --cpu-shares 2000 --name container2 lorel/docker-stress-ng --vm 4 --cpu 4
root@docker01:~# docker stats   # container2 gets about twice as much CPU as container1
```

### Summary

Typical resource limits used in practice:

- nginx pod: 1c/2g or 2c/2g in production; 0.5c/512m in test and staging
- java: 1c/2g
- es: 4c/12g
- kafka: 2c/6g
- jenkins: 2c/4g
- tomcat: 1c/2g or 2c/4g
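The "Nc/Mg" notation above is shorthand used in this note, not a Docker format; in docker run terms it corresponds to --cpus and -m. A hypothetical helper that turns the shorthand (with or without the slash) into docker run flags:

```bash
# Turn a sizing shorthand such as 2c/4g, 4c12g or 0.5c/512m into docker run flags.
spec_to_flags() {
  local cpus mem
  case "$1" in
    *c*) ;;
    *) echo "expected <cpus>c<memory>, e.g. 2c/4g" >&2; return 1 ;;
  esac
  cpus=${1%%c*}   # text before the first "c"
  mem=${1#*c}     # text after it
  mem=${mem#/}    # optional "/" separator
  printf '%s\n' "--cpus=$cpus -m $mem"
}

spec_to_flags 4c12g   # --cpus=4 -m 12g
```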

## Building images with a Dockerfile

- Committing an image manually

```bash
root@docker01:~# docker commit --help
Usage:  docker commit [OPTIONS] CONTAINER [REPOSITORY[:TAG]]

Create a new image from a container's changes

Options:
  -a, --author string    Author (e.g., "John Hannibal Smith <hannibal@a-team.com>")
  -c, --change list      Apply Dockerfile instruction to the created image
  -m, --message string   Commit message
  -p, --pause            Pause container during commit (default true)

root@docker01:~# docker run -itd --name nginx nginx:1.20                         # start a container
root@docker01:~# docker commit -a "wanghui@qq.com" -m "nginx v1" nginx nginx:v1   # commit it as a new image
root@docker01:~# docker images                                                   # list images
REPOSITORY   TAG   IMAGE ID       CREATED         SIZE
nginx        v1    61f063a35990   5 seconds ago   133MB
```


### Building an nginx image with a Dockerfile

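Common Dockerfile instructions

Official documentation: https://docs.docker.com/engine/reference/builder/

- FROM: sets the base image
- LABEL: adds metadata such as the image maintainer
- ADD: copies files into the image; local tar archives (tar, tar.gz, and so on) are extracted automatically
- COPY: copies files into the image without extracting archives
- RUN: runs a command in a new layer while the image is built
- ENV: sets environment variables
- EXPOSE: declares the ports the container listens on (it does not publish them)
- WORKDIR: sets the working directory for subsequent instructions
- VOLUME: declares a mount point for volumes attached when the container starts
- USER: sets the user that subsequent instructions and the container run as
- STOPSIGNAL: sets the signal sent to the container's main process to stop it
- CMD: sets the default command, or the default arguments for ENTRYPOINT
- ENTRYPOINT: sets the executable the container runs; CMD values are appended to it as arguments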

- 创建dockerfile

```bash

root@docker01:~# mkdir /data/dockerfiles/nginx -p

root@docker01:~# cd /data/dockerfiles/nginx/

root@docker01:/data/dockerfiles/nginx# docker pull centos:7.9.2009 #下载基础镜像

root@docker01:/data/dockerfiles/nginx# wget https://nginx.org/download/nginx-1.18.0.tar.gz #下载nginx源码包

root@docker01:/data/dockerfiles/nginx# docker cp nginx:/etc/nginx/nginx.conf ./

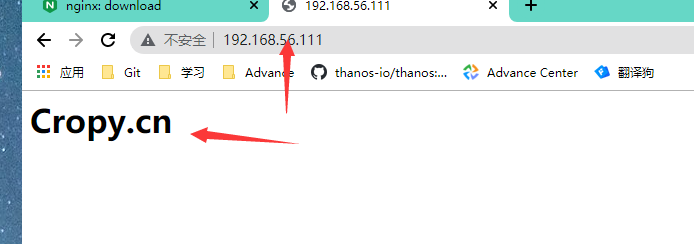

root@docker01:/data/dockerfiles/nginx# echo "<h1>Cropy.cn</h1>" > index.html

root@docker01:/data/dockerfiles/nginx# tar czf static.tar index.html

root@docker01:/data/dockerfiles/nginx# cat nginx.conf

#user nginx;

worker_processes auto;

#error_log /var/log/nginx/error.log notice;

pid /var/run/nginx.pid;

events {

worker_connections 1024;

}

http {

include /apps/nginx/conf/mime.types;

default_type application/octet-stream;

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

sendfile on;

#tcp_nopush on;

keepalive_timeout 65;

gzip on;

server {

listen 80;

server_name localhost;

location / {

root /data/nginx/html;

index index.html;

}

}

include /etc/nginx/conf.d/*.conf;

}

root@docker01:/data/dockerfiles/nginx# vim Dockerfile

FROM centos:7.9.2009

maintainer "cropy wanghui@cropy.cn"

RUN yum update -y && yum -y install vim wget tree lrzsz gcc gcc-c++ automake pcre pcre-devel zlib zlib-devel openssl openssl-devel iproute net-tools iotop

ADD nginx-1.18.0.tar.gz /usr/local/src

RUN cd /usr/local/src/nginx-1.18.0 && ./configure --prefix=/apps/nginx && make && make install

ADD nginx.conf /apps/nginx/conf/nginx.conf

RUN mkdir /data/nginx/html

ADD static.tar /data/nginx/html

CMD ["/apps/nginx/sbin/nginx","-g","daemon off;"]

构建镜像

root@docker01:/data/dockerfiles/nginx# docker build -t harbor.cropy.cn/devops/nginx:v1.0 .启动镜像

root@docker01:/data/dockerfiles/nginx# docker run -it --rm -p 80:80 harbor.cropy.cn/devops/nginx:v1.0测试结果

- Using ENTRYPOINT together with CMD

```bash
root@docker01:~# cd /data/dockerfiles/nginx
root@docker01:/data/dockerfiles/nginx# cat docker-entrypoint.sh
#!/bin/bash
# CMD is passed to this script as arguments; exec makes nginx PID 1 so it receives stop signals
exec /apps/nginx/sbin/nginx "$@"
root@docker01:/data/dockerfiles/nginx# chmod +x docker-entrypoint.sh   # ADD keeps the file mode, so the script must be executable
root@docker01:/data/dockerfiles/nginx# cat Dockerfile
# centos nginx image
FROM centos:7.9.2009
LABEL maintainer="cropy wanghui@cropy.cn"
RUN yum update -y && yum -y install vim wget tree lrzsz gcc gcc-c++ automake pcre pcre-devel zlib zlib-devel openssl openssl-devel iproute net-tools iotop
ADD nginx-1.18.0.tar.gz /usr/local/src
RUN cd /usr/local/src/nginx-1.18.0 && ./configure --prefix=/apps/nginx && make && make install
ADD docker-entrypoint.sh /
ADD nginx.conf /apps/nginx/conf/nginx.conf
RUN mkdir /data/nginx/html -p
ADD static.tar /data/nginx/html
ENTRYPOINT ["/docker-entrypoint.sh"]
CMD ["-g","daemon off;"]
root@docker01:/data/dockerfiles/nginx# docker build -t harbor.cropy.cn/devops/nginx:v1.0 .
```
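The rule behind this Dockerfile: in exec form, Docker starts the ENTRYPOINT and appends CMD as its arguments, and any arguments given after the image name in docker run replace CMD but keep ENTRYPOINT (only --entrypoint replaces that). The simplified model below treats each part as a flat string and only illustrates that rule; the function name final_command is hypothetical.

```bash
# Model how Docker assembles the container command in exec form:
# ENTRYPOINT + CMD, where docker run arguments (if any) replace CMD.
# usage: final_command ENTRYPOINT CMD [docker-run-args...]
final_command() {
  local entrypoint cmd
  entrypoint=$1
  cmd=$2
  shift 2
  if [ "$#" -gt 0 ]; then
    cmd=$*   # arguments after the image name override CMD
  fi
  printf '%s %s\n' "$entrypoint" "$cmd"
}

final_command /docker-entrypoint.sh "-g daemon off;"      # /docker-entrypoint.sh -g daemon off;
final_command /docker-entrypoint.sh "-g daemon off;" -t   # /docker-entrypoint.sh -t
```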


### Building a custom Tomcat application image

1. Build a custom CentOS base image

```bash
root@docker01:~# cd /data/dockerfiles/
root@docker01:/data/dockerfiles# mkdir base_centos
root@docker01:/data/dockerfiles# cd base_centos/
root@docker01:/data/dockerfiles/base_centos# vim Dockerfile
FROM centos:7.9.2009
LABEL maintainer="wanghui@cropy.cn"
RUN rpm -ivh http://mirrors.aliyun.com/epel/epel-release-latest-7.noarch.rpm && \
    yum -y install vim wget tree lrzsz gcc gcc-c++ automake pcre pcre-devel zlib zlib-devel openssl openssl-devel iproute net-tools iotop && \
    groupadd www -g 2021 && useradd www -u 2021 -g www && \
    yum clean all
root@docker01:/data/dockerfiles/base_centos# vim build-command.sh
#!/bin/bash
docker build -t "harbor.cropy.cn/devops/centos:$1" .
root@docker01:/data/dockerfiles/base_centos# sh build-command.sh v1   # build the base image
```
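build-command.sh puts $1 straight into the tag, so running it without an argument produces the invalid reference harbor.cropy.cn/devops/centos: and the build fails. A slightly safer sketch checks the argument first; the function name build_image and the DOCKER variable are hypothetical, and DOCKER exists only so the command can be dry-run with DOCKER=echo.

```bash
# Build harbor.cropy.cn/devops/centos:<tag> from the current directory,
# refusing to run without a tag. Set DOCKER=echo to print the command instead.
build_image() {
  if [ -z "$1" ]; then
    echo "usage: build_image TAG" >&2
    return 1
  fi
  ${DOCKER:-docker} build -t "harbor.cropy.cn/devops/centos:$1" .
}

# In build-command.sh the last line would then be: build_image "$1"
```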

2. Build the JDK image

```bash
root@docker01:~# cd /data/dockerfiles/
root@docker01:/data/dockerfiles# mkdir jdk
root@docker01:/data/dockerfiles# cd jdk/
root@docker01:/data/dockerfiles/jdk# docker run -itd --name c2 centos:7.9.2009 bash
root@docker01:/data/dockerfiles/jdk# docker cp c2:/etc/profile ./
root@docker01:/data/dockerfiles/jdk# vim profile   # append the following lines at the end
export JAVA_HOME=/usr/local/jdk
export JRE_HOME=$JAVA_HOME/jre
export CLASSPATH=$JAVA_HOME/lib/:$JRE_HOME/lib/
export PATH=$PATH:$JAVA_HOME/bin
# jdk-8u301-linux-x64.tar.gz must also be placed in this directory (the build context)
root@docker01:/data/dockerfiles/jdk# vim Dockerfile
FROM harbor.cropy.cn/devops/centos:v1
ADD jdk-8u301-linux-x64.tar.gz /usr/local/src/
RUN ln -sv /usr/local/src/jdk1.8.0_301 /usr/local/jdk
ADD profile /etc/profile
ENV JAVA_HOME /usr/local/jdk
ENV JRE_HOME $JAVA_HOME/jre
ENV CLASSPATH $JAVA_HOME/lib/:$JRE_HOME/lib/
ENV PATH $PATH:$JAVA_HOME/bin
RUN rm -fr /etc/localtime && ln -snf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
root@docker01:/data/dockerfiles/jdk# docker build -t harbor.cropy.cn/devops/jdk:v1 .
root@docker01:/data/dockerfiles/jdk# docker images
REPOSITORY                      TAG   IMAGE ID       CREATED          SIZE
harbor.cropy.cn/devops/jdk      v1    164838db175a   3 minutes ago    760MB
harbor.cropy.cn/devops/centos   v1    bfbf180e7506   28 minutes ago   399MB
root@docker01:/data/dockerfiles/jdk# docker run -it --rm harbor.cropy.cn/devops/jdk:v1 bash
[root@b3b09b155cd1 /]# java -version   # verify the image
```

3. Build the Tomcat image

```bash
root@docker01:~# cd /data/dockerfiles/
root@docker01:/data/dockerfiles# mkdir tomcat
root@docker01:/data/dockerfiles# cd tomcat/
# dlcdn.apache.org only hosts current releases; older versions are kept under https://archive.apache.org/dist/tomcat/
root@docker01:/data/dockerfiles/tomcat# wget https://dlcdn.apache.org/tomcat/tomcat-8/v8.5.70/bin/apache-tomcat-8.5.70.tar.gz
root@docker01:/data/dockerfiles/tomcat# vim Dockerfile
FROM harbor.cropy.cn/devops/jdk:v1
LABEL maintainer="wanghui@cropy.cn"
ENV TZ "Asia/Shanghai"
ENV LANG en_US.UTF-8
ENV TERM xterm
ENV TOMCAT_MAJOR_VERSION 8
ENV TOMCAT_MINOR_VERSION 8.5.70
ENV CATALINA_HOME /apps/tomcat
ENV APP_DIR ${CATALINA_HOME}/webapps
RUN mkdir /apps
ADD apache-tomcat-8.5.70.tar.gz /apps
RUN ln -sv /apps/apache-tomcat-8.5.70 /apps/tomcat
root@docker01:/data/dockerfiles/tomcat# docker build -t harbor.cropy.cn/devops/tomcat:v1 .
root@docker01:/data/dockerfiles/tomcat# docker images
REPOSITORY                      TAG   IMAGE ID       CREATED          SIZE
harbor.cropy.cn/devops/tomcat   v1    bd0574c9ee74   20 seconds ago   775MB
harbor.cropy.cn/devops/jdk      v1    164838db175a   12 minutes ago   760MB
harbor.cropy.cn/devops/centos   v1    bfbf180e7506   37 minutes ago   399MB
```

- 构建业务镜像 ```bash root@docker01:~# cd /data/dockerfiles/ root@docker01:/data/dockerfiles# mkdir tomcat_app1 tomcat_app2 root@docker01:/data/dockerfiles# cd tomcat_app1/

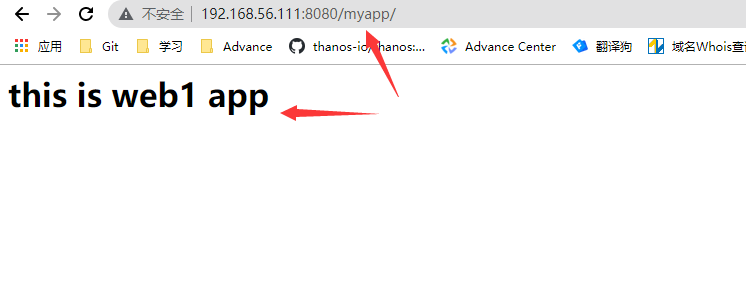

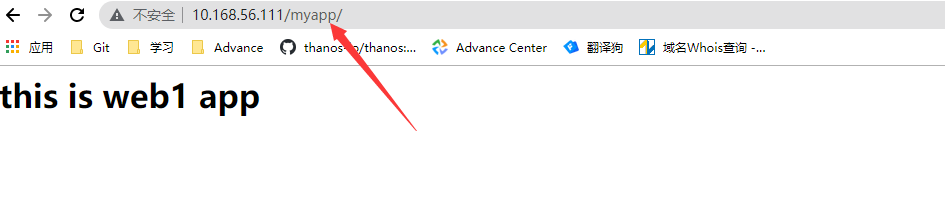

root@docker01:/data/dockerfiles/tomcat_app1# mkdir myapp root@docker01:/data/dockerfiles/tomcat_app1# echo “

this is web1 app

“> myapp/index.htmlroot@docker01:/data/dockerfiles/tomcat_app1# vim run_tomcat.sh

!/bin/bash

echo “nameserver 114.114.114.114” > /etc/resolv.conf su - www -c “/apps/tomcat/bin/catalina.sh start” su - www -c “tail -f /etc/hosts”

root@docker01:/data/dockerfiles/tomcat_app1# chmod +x run_tomcat.sh

root@docker01:/data/dockerfiles/tomcat_app1# vim Dockerfile FROM harbor.cropy.cn/devops/tomcat:v1 ADD run_tomcat.sh /apps/tomcat/bin/run_tomcat.sh ADD myapp/* /apps/tomcat/webapps/myapp/ RUN chown www.www /apps/ -R EXPOSE 8080 8009 CMD [“/apps/tomcat/bin/run_tomcat.sh”]

root@docker01:/data/dockerfiles/tomcat_app1# docker build -t harbor.cropy.cn/devops/tomcat_web1:v1 .

5. 运行业务镜像并测试

```bash

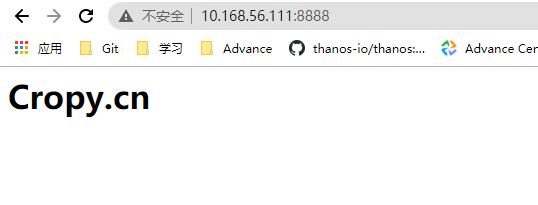

root@docker01:/data/dockerfiles/tomcat_app1# docker run -it --rm -p 8080:8080 harbor.cropy.cn/devops/tomcat_web1:v1

### Building an HAProxy image

1. Prepare the base environment

```bash
root@docker01:~# docker run -itd -p 8080:8080 harbor.cropy.cn/devops/tomcat_web1:v1   # start the application image as a backend for testing the proxy
root@docker01:~# mkdir /data/dockerfiles/haproxy
root@docker01:~# cd /data/dockerfiles/haproxy
root@docker01:/data/dockerfiles/haproxy# wget https://src.fedoraproject.org/repo/pkgs/haproxy/haproxy-2.2.17.tar.gz
root@docker01:/data/dockerfiles/haproxy# vim run_haproxy.sh
#!/bin/bash
haproxy -f /etc/haproxy/haproxy.cfg   # "daemon" in haproxy.cfg sends HAProxy to the background
tail -f /etc/hosts                    # keep a foreground process so the container keeps running
root@docker01:/data/dockerfiles/haproxy# chmod +x run_haproxy.sh
root@docker01:/data/dockerfiles/haproxy# vim haproxy.cfg
global
    log 127.0.0.1 local0
    uid 200
    gid 200
    chroot /usr/local/haproxy
    nbproc 4
    daemon
    stats bind-process 1

defaults
    timeout connect 5s
    timeout client 1m
    timeout server 1m

listen stats
    bind-process 1
    mode http
    bind 0.0.0.0:9999
    stats enable
    log global
    stats uri /haproxy-status
    stats auth haadmin:123456

listen web_port
    bind 0.0.0.0:80
    mode http
    server web01 10.168.56.111:8080 check inter 3000 fall 3 rise 5
root@docker01:/data/dockerfiles/haproxy# vim Dockerfile
FROM harbor.cropy.cn/devops/centos:v1
RUN yum -y install gcc gcc-c++ glibc glibc-devel pcre pcre-devel openssl openssl-devel systemd-devel net-tools vim iotop bc zip unzip zlib-devel lrzsz tree screen lsof cmake make
ADD haproxy-2.2.17.tar.gz /usr/local/src/
RUN cd /usr/local/src/haproxy-2.2.17 && \
    make ARCH=x86_64 TARGET=linux-glibc USE_PCRE=1 USE_OPENSSL=1 USE_ZLIB=1 USE_SYSTEMD=1 USE_CPU_AFFINITY=1 PREFIX=/usr/local/haproxy && \
    make install PREFIX=/usr/local/haproxy && \
    cp haproxy /usr/sbin/ && \
    mkdir /usr/local/haproxy/run && \
    rm -fr /usr/local/src/*
# trailing slash: copy into the /etc/haproxy/ directory instead of creating a file named /etc/haproxy
ADD haproxy.cfg /etc/haproxy/
ADD run_haproxy.sh /usr/bin/
EXPOSE 80 9999
CMD ["/usr/bin/run_haproxy.sh"]
```
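The check inter 3000 fall 3 rise 5 parameters on the server line control how fast HAProxy reacts: a server is marked down after fall consecutive failed checks and back up after rise consecutive successful ones, with a check every inter milliseconds. The hypothetical helper below gives the rough timing; it ignores check timeouts and the fastinter/downinter options.

```bash
# Rough time for HAProxy to change a server's state with "check inter <ms> fall <n> rise <m>".
check_timing() {
  local inter fall rise
  inter=$1
  fall=$2
  rise=$3
  echo "down after $((inter * fall)) ms, up after $((inter * rise)) ms"
}

check_timing 3000 3 5   # down after 9000 ms, up after 15000 ms
```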

2. Build the image

```bash
root@docker01:/data/dockerfiles/haproxy# docker build -t harbor.cropy.cn/devops/haproxy:v1 .
```

3. Test the image

```bash
root@docker01:/data/dockerfiles/haproxy# docker run -it --rm -p 80:80 harbor.cropy.cn/devops/haproxy:v1
```

### Building an image based on Alpine

1. Prepare the build environment

```bash
root@docker01:~# mkdir /data/dockerfiles/alpine/
root@docker01:~# cd /data/dockerfiles/alpine/
root@docker01:/data/dockerfiles/alpine# cp ../nginx/nginx-1.18.0.tar.gz ./
root@docker01:/data/dockerfiles/alpine# cp ../nginx/nginx.conf ./
root@docker01:/data/dockerfiles/alpine# cp ../nginx/static.tar ./
root@docker01:/data/dockerfiles/alpine# vim Dockerfile
FROM alpine:3.13.5
ADD nginx-1.18.0.tar.gz /opt/
RUN sed -i 's/dl-cdn.alpinelinux.org/mirrors.tuna.tsinghua.edu.cn/g' /etc/apk/repositories && \
    apk update && \
    apk add iotop gcc libgcc libc-dev libcurl libc-utils pcre-dev zlib-dev libnfs make pcre pcre2 zip openssl openssl-dev unzip net-tools wget libevent libevent-dev iproute2 && \
    addgroup -g 2021 -S nginx && adduser -s /sbin/nologin -S -D -u 2021 -G nginx nginx && \
    cd /opt/nginx-1.18.0 && ./configure --prefix=/apps/nginx && make && make install && \
    ln -sv /apps/nginx/sbin/nginx /usr/bin/ && \
    rm -fr /opt/* && \
    mkdir /data/nginx/html -p && \
    chown -R nginx:nginx /apps/nginx /data/nginx
ADD nginx.conf /apps/nginx/conf/
ADD static.tar /data/nginx/html/
EXPOSE 80 443
CMD ["nginx","-g","daemon off;"]
```

2. Build the image

```bash
root@docker01:/data/dockerfiles/alpine# docker build -t harbor.cropy.cn/devops/nginx-alpine:v1 .
```

3. Test the image

```bash
root@docker01:/data/dockerfiles/alpine# docker run -it --rm -p 8888:80 harbor.cropy.cn/devops/nginx-alpine:v1
```

Docker数据卷

为什么需要数据卷(存储卷)

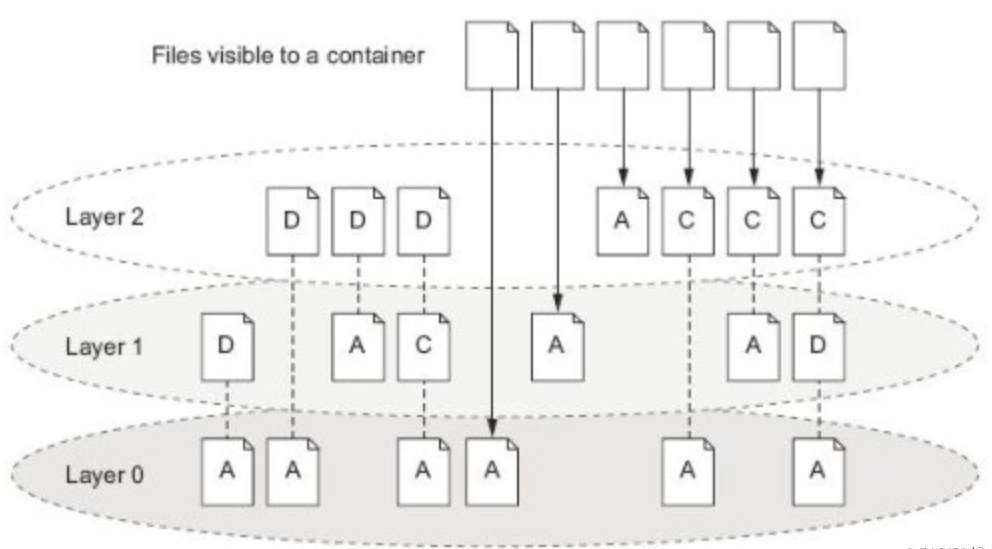

- docker镜像由多个只读层叠加而成,启动容器时,docker会加载只读镜像层,并在镜像层添加一个读写层

- 如果运行中的容器修改了现有的一个已经存在的文件,那么该文件将会从读写层下面的只读层复制到读写层,该文件的只读版本仍然存在,只是已经被读写层中该文件的副本所隐藏,这就是COW(写时复制)机制

- 关闭并重启容器,其数据不受影响,但是删除容器,则其更改将会全部丢失

- 存在的问题:

- 存储与联合文件系统中,不易于宿主机访问

- 容器键数据共享不便

- 删除容器其数据会丢失

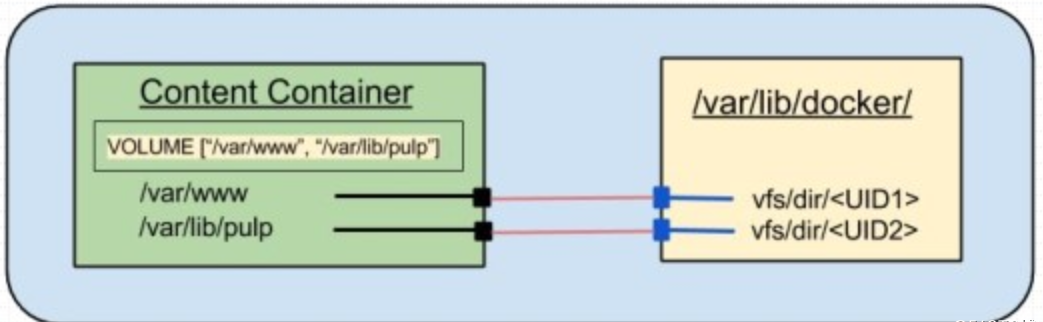

- 什么是卷

- 卷是容器上的一个或者多个目录,此类目录可以绕过联合文件系统,与宿主机上的某个目录可以绑定(关联)

- 绑定本地指定目录到容器的卷

- 特点:

- 删除容器之后本地目录不会被删除,数据还在

- 可以脱离容器的生命周期而存在

- 若有NFS存储的话,数据也可以脱离本机而存在

- 可以实现容器间数据的共享

- 卷的继承

父卷存在即可继承,只要父卷的容器不被rm即可

root@docker01:~# mkdir /data/nginx/html -p

root@docker01:~# echo "index" > /data/nginx/html/index.html

root@docker01:~# docker run -itd --name volume-server -v /data/nginx/html/:/data/nginx/html/ -v/etc/localtime:/etc/localtime harbor.cropy.cn/devops/nginx-alpine:v1

root@docker01:~# docker run -itd --name container1 --volumes-from volume-server harbor.cropy.cn/devops/nginx-alpine:v1

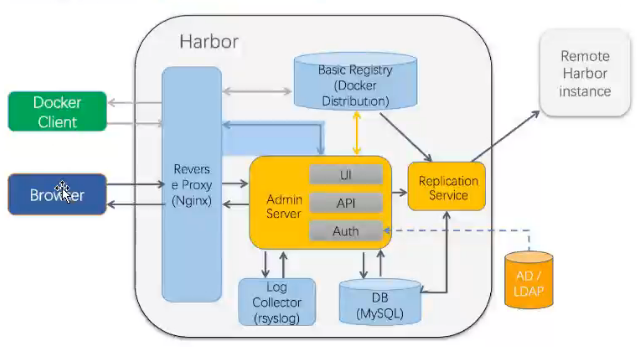

## Harbor

Harbor stores built images so they can be distributed.

### Installing and configuring Harbor

- Harbor servers: 192.168.56.111, 192.168.56.112
- Base software: Docker 19.03, docker-compose
- Harbor v2.3.2

```bash
root@k8s-harbor01:~# mkdir /apps
root@k8s-harbor01:~# mkdir /data/harbor -p
root@k8s-harbor01:~# apt install docker-compose -y
root@k8s-harbor01:~# wget https://github.com/goharbor/harbor/releases/download/v2.3.2/harbor-offline-installer-v2.3.2.tgz
root@k8s-harbor01:~# tar xf harbor-offline-installer-v2.3.2.tgz -C /apps/
root@k8s-harbor01:~# cd /apps/harbor
root@k8s-harbor01:/apps/harbor# mv harbor.yml.tmpl harbor.yml
root@k8s-harbor01:/apps/harbor# vim harbor.yml
hostname: 192.168.56.111
https:
  # https port for harbor, default is 443
  port: 443
  # The path of cert and key files for nginx
  # (placeholder paths from the template: point them at real files, or comment out the https block as on k8s-harbor02)
  certificate: /your/certificate/path
  private_key: /your/private/key/path
harbor_admin_password: Harbor12345
root@k8s-harbor01:/apps/harbor# ./install.sh --with-trivy --with-chartmuseum   # install Harbor
```

- Access Harbor


#### Harbor high availability

- Deploy Harbor on the other node

```bash
root@k8s-harbor02:~# mkdir /apps
root@k8s-harbor02:~# mkdir /data/harbor -p
root@k8s-harbor02:~# apt install docker-compose -y
root@k8s-harbor02:~# wget https://github.com/goharbor/harbor/releases/download/v2.3.2/harbor-offline-installer-v2.3.2.tgz
root@k8s-harbor02:~# tar xf harbor-offline-installer-v2.3.2.tgz -C /apps/
root@k8s-harbor02:~# cd /apps/harbor
root@k8s-harbor02:/apps/harbor# mv harbor.yml.tmpl harbor.yml
root@k8s-harbor02:/apps/harbor# vim harbor.yml
hostname: 192.168.56.112
#https:
  # https port for harbor, default is 443
  # port: 443
  # The path of cert and key files for nginx
  #certificate: /your/certificate/path
  #private_key: /your/private/key/path
harbor_admin_password: Harbor12345
root@k8s-harbor02:/apps/harbor# ./install.sh --with-trivy --with-chartmuseum   # install Harbor
```

Configure insecure-registries on both nodes:

```bash
root@k8s-harbor01:/apps/harbor# vim /etc/docker/daemon.json
{
  "registry-mirrors": ["https://0b8hhs68.mirror.aliyuncs.com"],
  "storage-driver": "overlay2",
  "data-root": "/data/docker",
  "insecure-registries": ["192.168.56.111", "192.168.56.112"]
}
root@k8s-harbor01:/apps/harbor# systemctl restart docker
root@k8s-harbor01:/apps/harbor# docker info | grep -A2 "Insecure Registries"
WARNING: No swap limit support
 Insecure Registries:
  192.168.56.111
  192.168.56.112
```

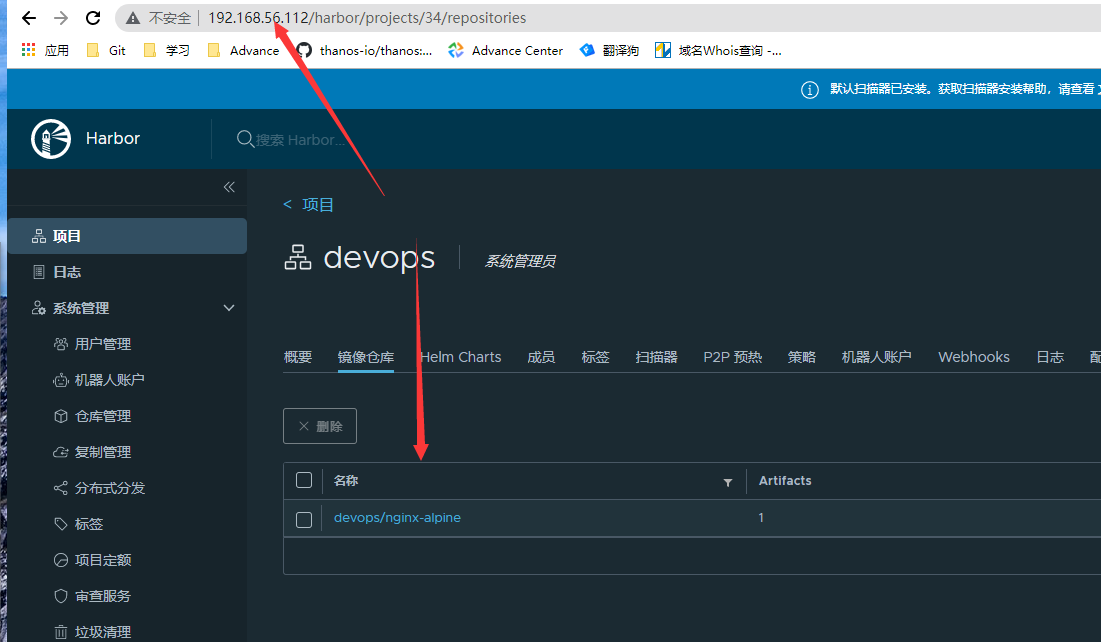

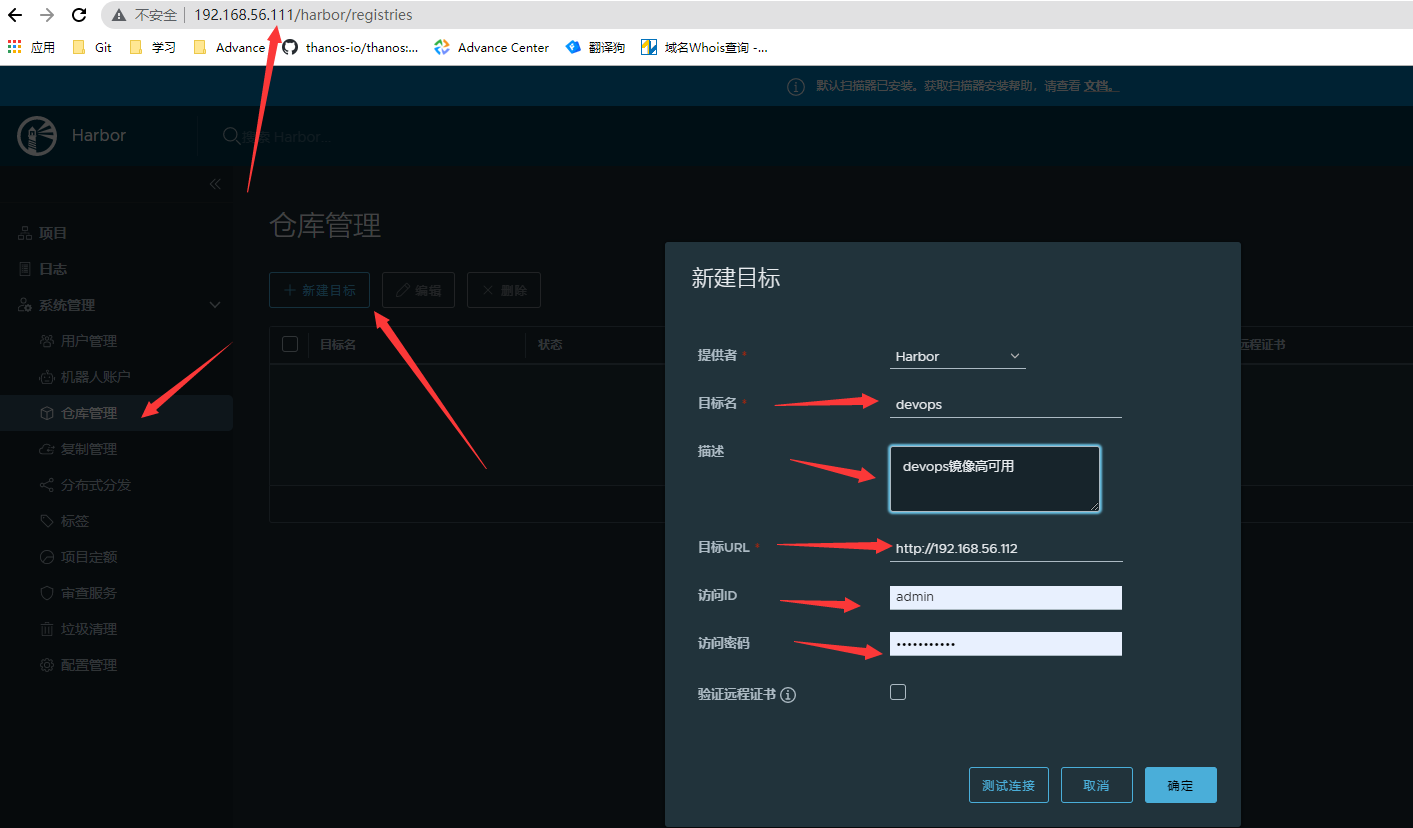

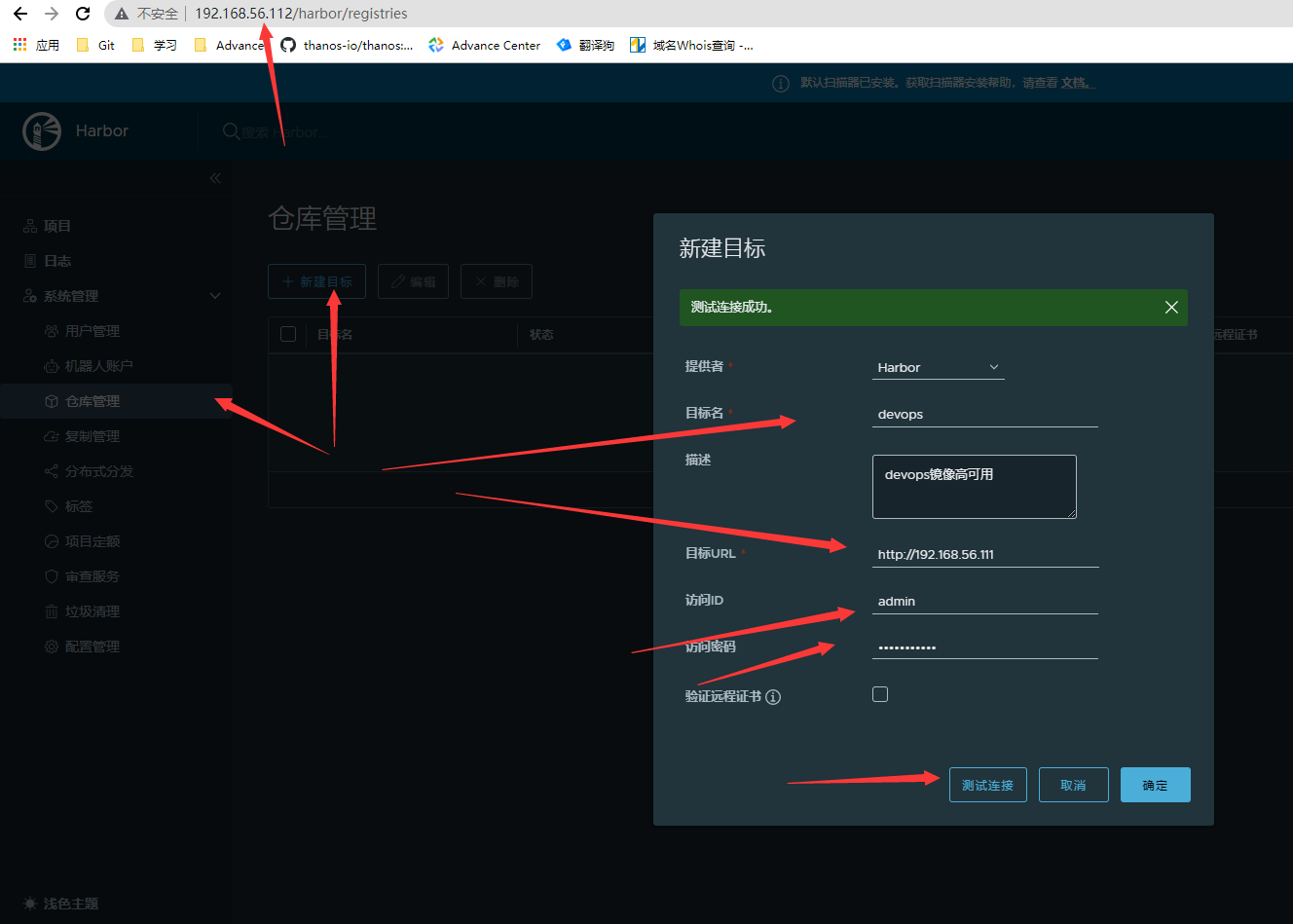

高可用数据同步

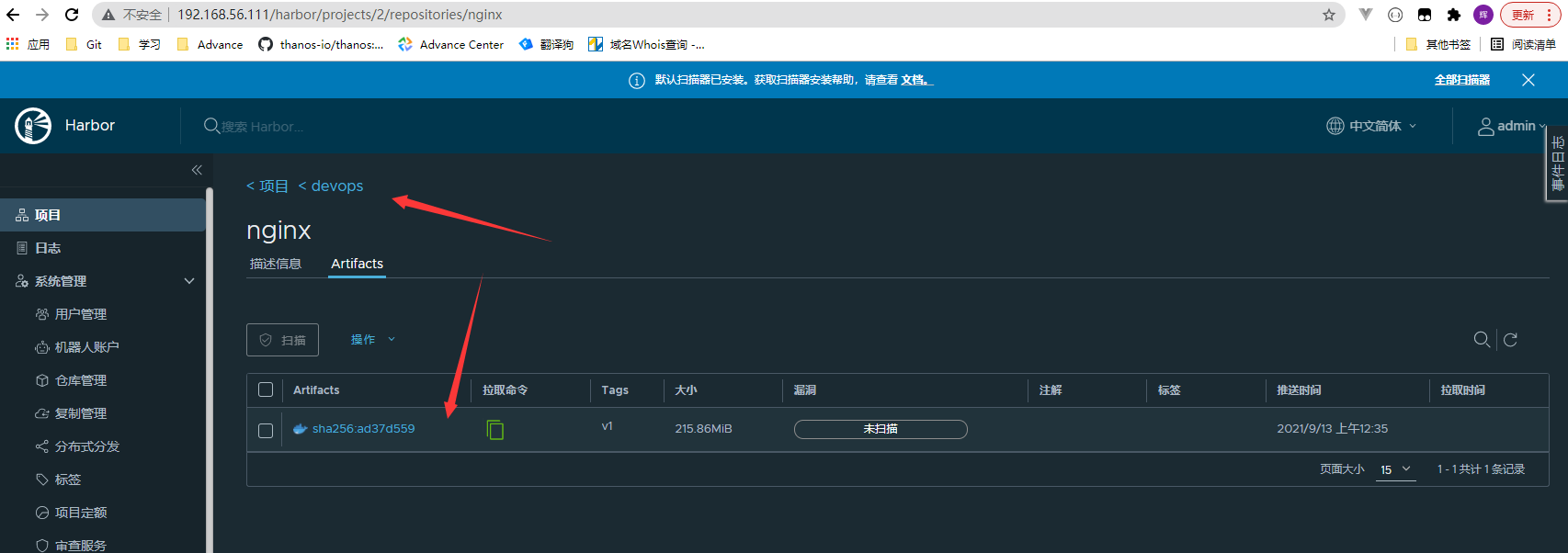

- 在两个仓库创建devops,baseimages 两个公开仓库

192.168.56.111作为master,上传镜像

root@k8s-harbor01:/data/dockerfile/nginx# docker images REPOSITORY TAG IMAGE ID CREATED SIZE harbor.cropy.cn/devops/nginx v1.0 0e0b0139c773 11 seconds ago 613MB centos 7.9.2009 8652b9f0cb4c 10 months ago 204MB ... root@k8s-harbor01:/data/dockerfile/nginx# docker tag harbor.cropy.cn/devops/nginx:v1.0 192.168.56.111/devops/nginx:v1 root@k8s-harbor01:/data/dockerfile/nginx# docker login 192.168.56.111 #使用admin登录 root@k8s-harbor01:/data/dockerfile/nginx# docker push 192.168.56.111/devops/nginx:v1 #上传镜像查看上传结果

- 主节点192.168.56.111创建仓库管理

- 从节点192.168.56.113配置仓库管理

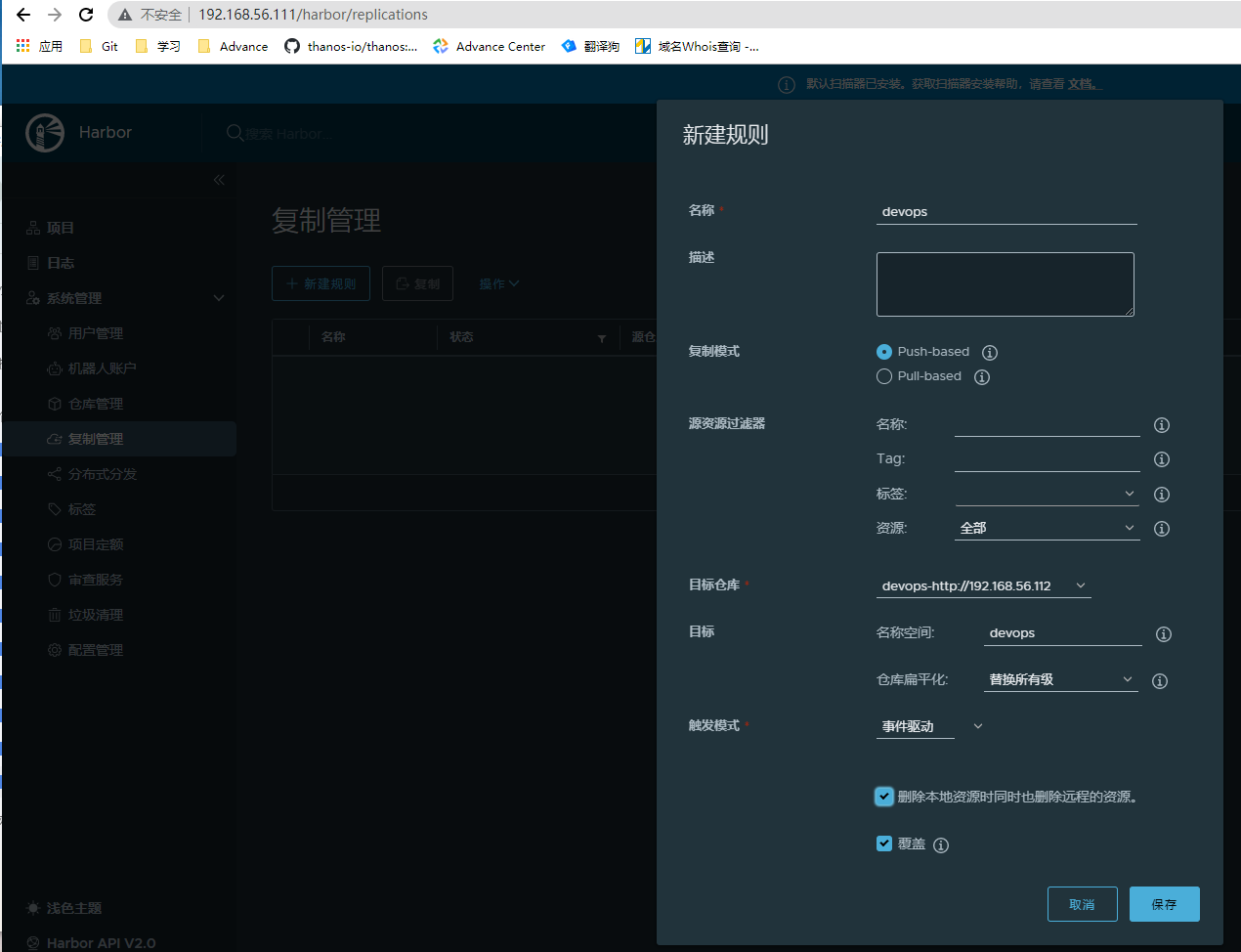

- master节点配置复制

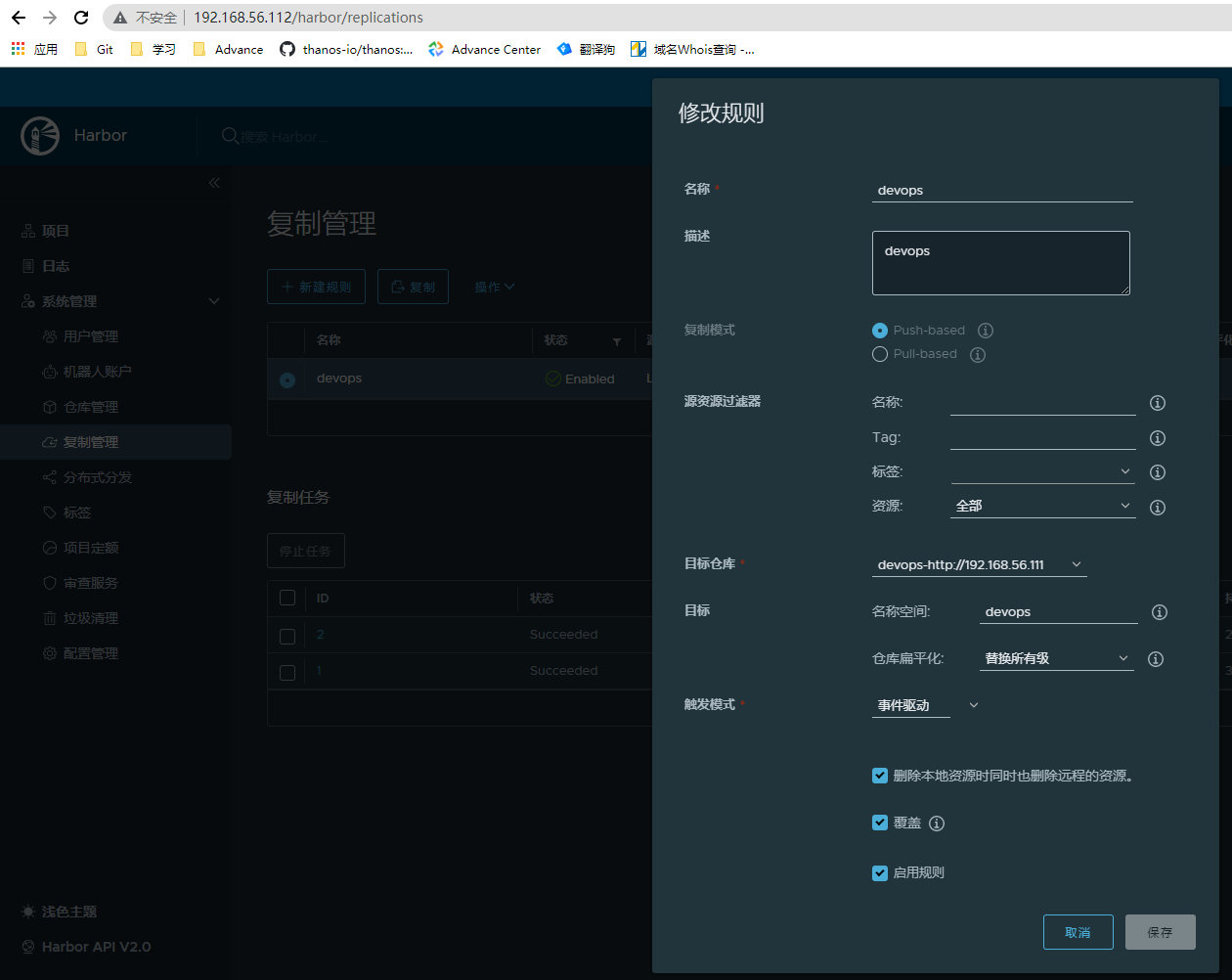

- 从节点复制配置

主节点推送镜像测试

root@k8s-harbor01:/data/dockerfile/alpine-nginx# docker tag harbor.cropy.cn/devops/nginx-alpine:v1 192.168.56.111/devops/nginx-alpine:v1 root@k8s-harbor01:/data/dockerfile/alpine-nginx# docker push 192.168.56.111/devops/nginx-alpine:v1从节点结果查看

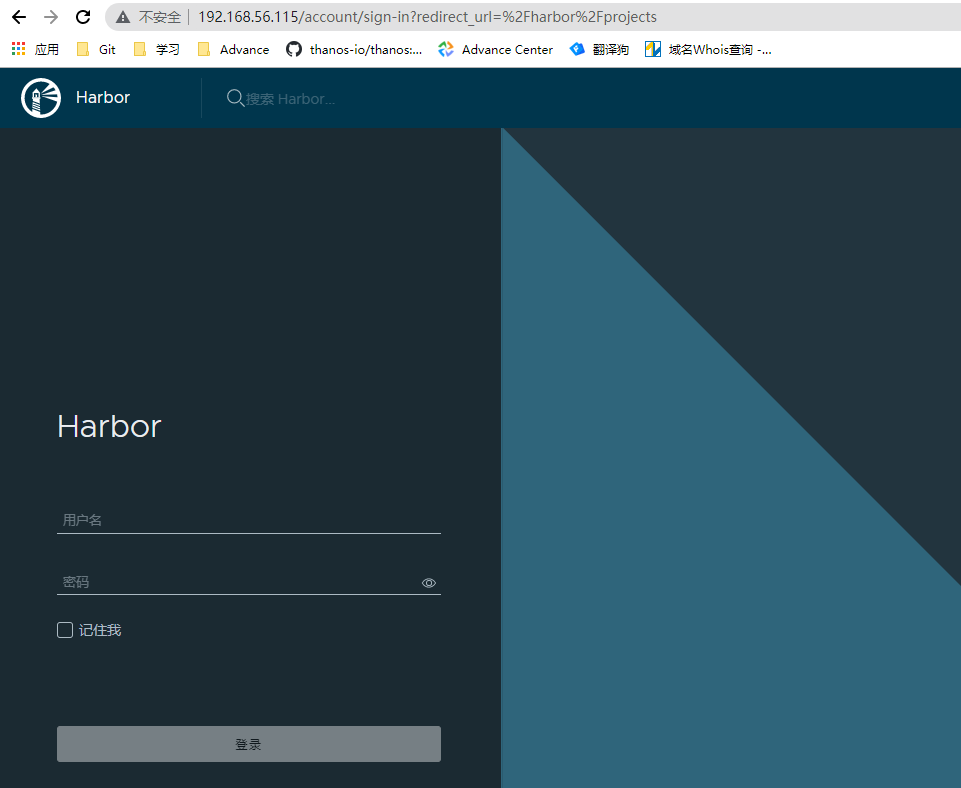

##### Harbor high availability with a load balancer

- Nodes:
  - k8s-ha01 (192.168.56.113): haproxy, keepalived
  - k8s-ha02 (192.168.56.114): haproxy, keepalived

1. Install and configure keepalived on k8s-ha01

```bash
root@k8s-ha01:~# apt install keepalived -y
root@k8s-ha01:~# vim /etc/keepalived/keepalived.conf
! Configuration File for keepalived

global_defs {
   notification_email {
     acassen
   }
   notification_email_from Alexandre.Cassen@firewall.loc
   smtp_server 192.168.200.1
   smtp_connect_timeout 30
   router_id LVS_DEVEL
}

vrrp_instance VI_1 {
    state MASTER
    interface eth1
    garp_master_delay 10
    smtp_alert
    virtual_router_id 51
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.56.115 label eth1:1
        192.168.56.116 label eth1:2
        192.168.56.117 label eth1:3
        192.168.56.118 label eth1:4
    }
}
root@k8s-ha01:~# systemctl restart keepalived
root@k8s-ha01:~# ifconfig | grep eth1   # check the virtual IPs
eth1: flags=4163
```

2. Install and configure keepalived on k8s-ha02

```bash
root@k8s-ha02:~# apt install keepalived -y
root@k8s-ha02:~# vim /etc/keepalived/keepalived.conf
! Configuration File for keepalived

global_defs {
   notification_email {
     acassen
   }
   notification_email_from Alexandre.Cassen@firewall.loc
   smtp_server 192.168.200.1
   smtp_connect_timeout 30
   router_id LVS_DEVEL
}

vrrp_instance VI_1 {
    state MASTER
    interface eth1
    garp_master_delay 10
    smtp_alert
    virtual_router_id 51
    priority 50
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.56.115 label eth1:1
        192.168.56.116 label eth1:2
        192.168.56.117 label eth1:3
        192.168.56.118 label eth1:4
    }
}
root@k8s-ha02:~# systemctl restart keepalived
root@k8s-ha02:~# ifconfig | grep eth1   # check the VIPs: none appear unless keepalived on k8s-ha01 is stopped
root@k8s-ha02:~# vim /etc/sysctl.conf   # allow binding to IP addresses that are not present on this node (the VIP)
net.ipv4.ip_nonlocal_bind = 1
root@k8s-ha02:~# sysctl -p
```

3. Install and configure HAProxy on both k8s-ha01 and k8s-ha02

```bash
root@k8s-ha01:~# apt install haproxy -y
root@k8s-ha01:~# vim /etc/haproxy/haproxy.cfg
...

listen harbor-80
    # listen on a VIP; the same VIP must also be configured on k8s-ha02
    bind 192.168.56.115:80
    mode tcp
    server harbor1 192.168.56.111:80 check inter 3s fall 3 rise 5
    server harbor2 192.168.56.112:80 check inter 3s fall 3 rise 5
```

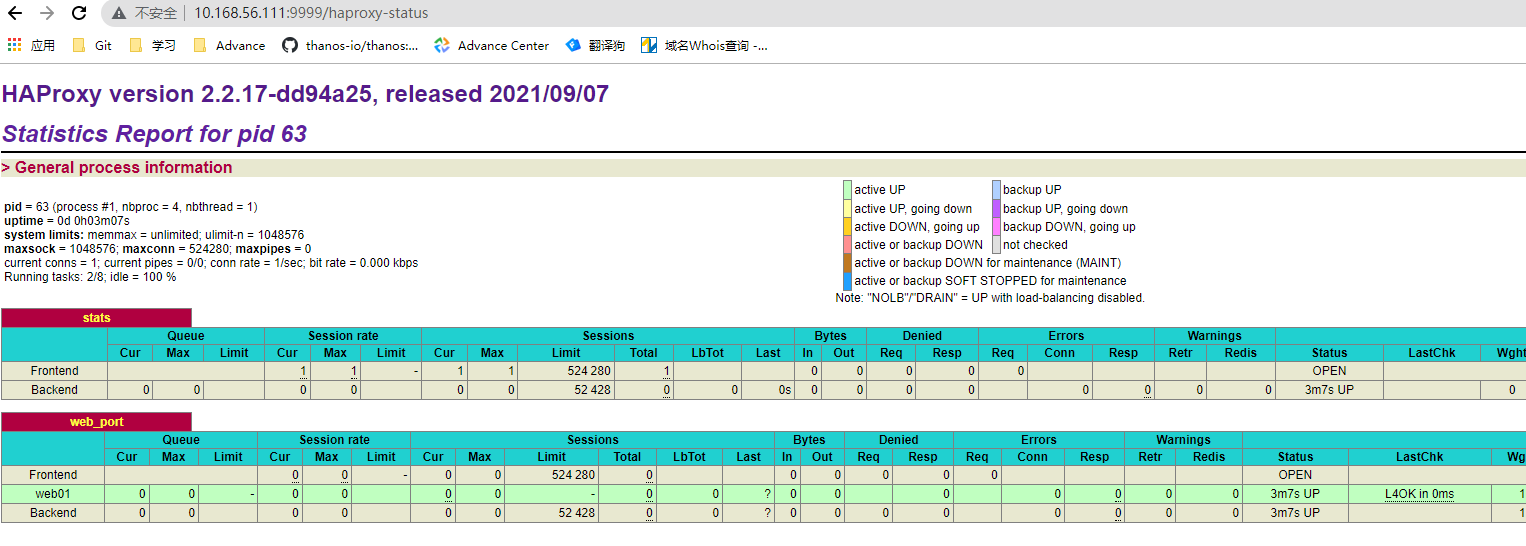

4. Test HAProxy

5. Configure insecure-registries

```bash
root@k8s-harbor01:/data/dockerfile/alpine-nginx# vim /etc/docker/daemon.json
{
  "registry-mirrors": ["https://0b8hhs68.mirror.aliyuncs.com"],
  "storage-driver": "overlay2",
  "data-root": "/data/docker",
  "insecure-registries": ["192.168.56.111", "192.168.56.112", "192.168.56.115"]
}
root@k8s-harbor01:/data/dockerfile/alpine-nginx# systemctl restart docker
```

- Push an image through the VIP

```bash
root@k8s-harbor01:~# docker tag alpine 192.168.56.115/devops/alpine:v1
root@k8s-harbor01:~# docker login 192.168.56.115
root@k8s-harbor01:~# docker push 192.168.56.115/devops/alpine:v1
```
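Both keepalived nodes above share virtual_router_id 51, and VRRP gives the VIPs to the node with the highest priority that is still advertising: k8s-ha01 (priority 100) holds 192.168.56.115 to 192.168.56.118, and k8s-ha02 (priority 50) takes them over only when k8s-ha01 stops, which matches the ifconfig check on k8s-ha02. The sketch below models just that election; the function vrrp_master is hypothetical, and real VRRP also breaks priority ties by the higher IP address and lets preemption settings decide when a recovered node takes the VIPs back.

```bash
# Pick the VRRP master among the nodes that are currently up:
# the highest priority wins. Arguments are name:priority pairs.
vrrp_master() {
  printf '%s\n' "$@" | sort -t: -k2,2nr | head -n 1 | cut -d: -f1
}

vrrp_master k8s-ha01:100 k8s-ha02:50   # k8s-ha01
vrrp_master k8s-ha02:50                # k8s-ha02 (k8s-ha01 is down)
```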