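ConfigMap

- Supplies configuration files or environment variables to Pods, so a configuration change does not require a new image

- Decouples configuration from the image

Configuration is stored in a ConfigMap object, and the Pod spec references that object to import it, either as a mounted volume or as environment variables.

nginx configuration file example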

```bash
root@k8s-master01:~/controller/configmap# vim deploy_configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config
data:
  default: |
    server {
      listen 80;
      server_name www.mysite.com;
      index index.html;

      location / {
        root /data/nginx/html;
        if (!-e $request_filename) {
          rewrite ^/(.*) /index.html last;
        }
      }
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-8080
        image: tomcat
        ports:
        - containerPort: 8080
        volumeMounts:
        - name: nginx-config
          mountPath: /data
      - name: ng-deploy-80
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /data/nginx/html
          name: nginx-static-dir
        - name: nginx-config
          mountPath: /etc/nginx/conf.d
      volumes:
      - name: nginx-static-dir
        hostPath:
          path: /data/nginx/linux39
      - name: nginx-config
        configMap:
          name: nginx-config
          items:
          - key: default
            path: mysite.conf
---
apiVersion: v1
kind: Service
metadata:
  name: ng-deploy-80
spec:
  ports:
  - name: http
    port: 81
    targetPort: 80
    nodePort: 30019
    protocol: TCP
  type: NodePort
  selector:
    app: ng-deploy-80
```

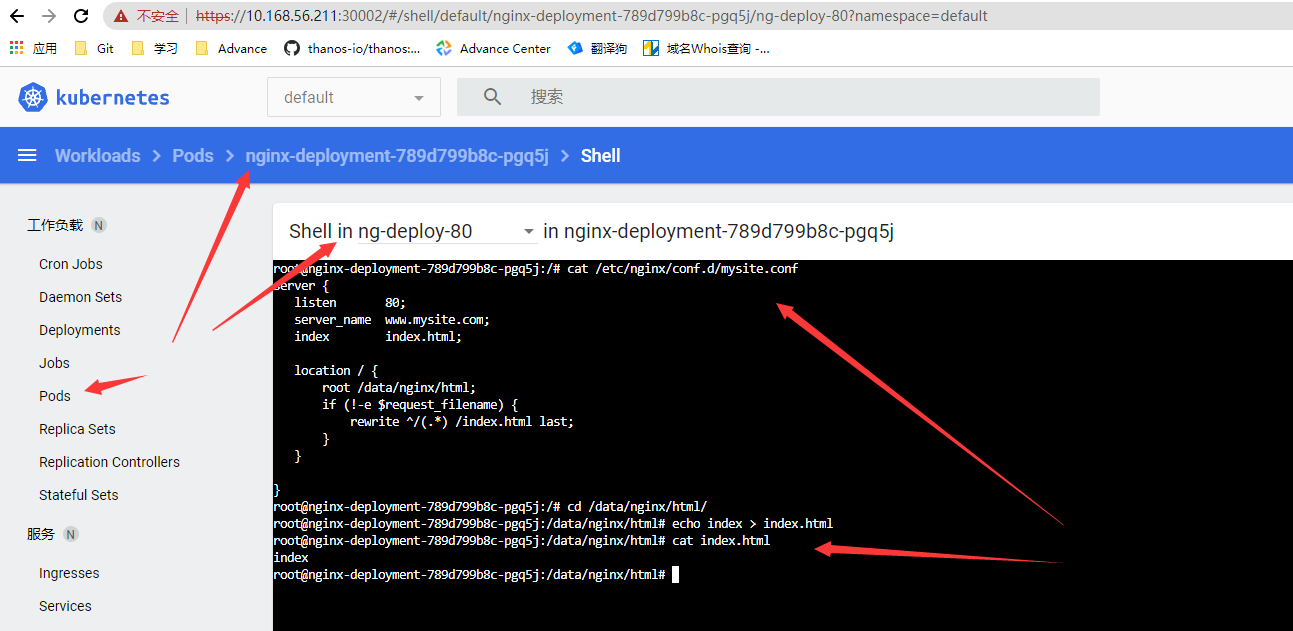

Test the ConfigMap mount result

```bash
root@k8s-master01:~/controller/configmap# kubectl get configmap nginx-config -o yaml
```

In the dashboard, check the configuration mounted into the nginx container and create an index file.

Configure HAProxy load balancing: proxy NodePort 30019 from the example above to port 80 on the HAProxy load balancer.

```bash
root@k8s-ha01:~# cat /etc/keepalived/keepalived.conf
! Configuration File for keepalived
global_defs {
    notification_email {
        acassen
    }
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 192.168.200.1
    smtp_connect_timeout 30
    router_id LVS_DEVEL
}
vrrp_instance VI_1 {
    state MASTER
    interface eth1
    garp_master_delay 10
    smtp_alert
    virtual_router_id 51
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.56.115 label eth1:1
        192.168.56.116 label eth1:2
        192.168.56.117 label eth1:3
        192.168.56.118 label eth1:4
    }
}
root@k8s-ha01:~# vim /etc/haproxy/haproxy.cfg
...
listen ngx-30012
  bind 192.168.56.117:80
  mode tcp
  server k8s1 10.168.56.211:30012 check inter 3s fall 3 rise 5
  server k8s2 10.168.56.212:30012 check inter 3s fall 3 rise 5
  server k8s3 10.168.56.213:30012 check inter 3s fall 3 rise 5
listen ngx-30019
  bind 192.168.56.118:80
  server k8s1 10.168.56.211:30019 check inter 3s fall 3 rise 5
  server k8s2 10.168.56.212:30019 check inter 3s fall 3 rise 5
  server k8s3 10.168.56.213:30019 check inter 3s fall 3 rise 5
```

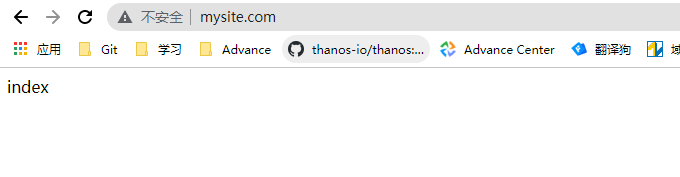

Add the entry `192.168.56.118 www.mysite.com` to the hosts file and test the result

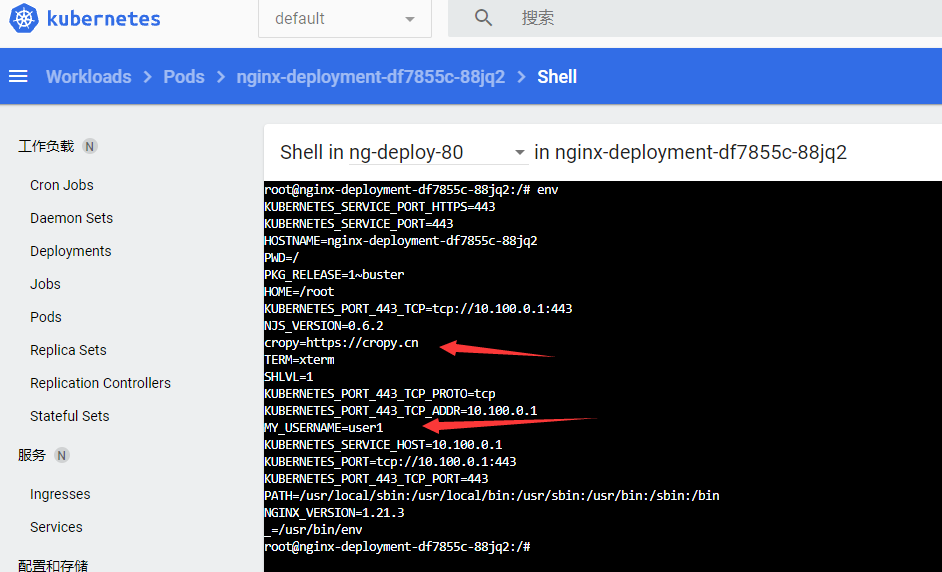

Providing environment variables

```bash
root@k8s-master01:~/controller/configmap# vim configmap_env.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config
data:
  username: user1
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx
        env:
        - name: "cropy"
          value: "https://cropy.cn"
        - name: MY_USERNAME
          valueFrom:
            configMapKeyRef:
              name: nginx-config
              key: username
        ports:
        - containerPort: 80
```

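DaemonSet

- Official documentation: https://kubernetes.io/zh/docs/concepts/workloads/controllers/daemonset/

- Runs a copy of the same Pod on every node in the cluster. When a new node joins the cluster, the same Pod is created on it; when a node is removed from the cluster, its Pod is reclaimed by Kubernetes; deleting the DaemonSet also deletes the Pods it created.

- Typical use: running a cluster daemon on every node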

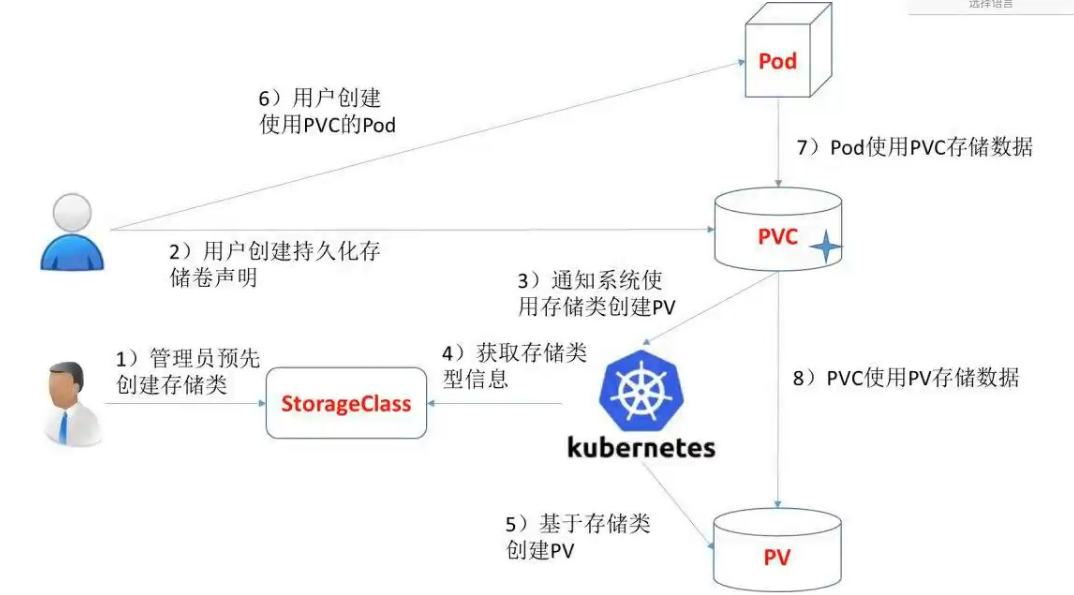
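
The notes describe DaemonSet behaviour without showing a manifest. As a minimal sketch, the snippet below writes a hypothetical DaemonSet manifest to a temporary file. The name `node-agent`, its label, and the `busybox` image with a `sleep` command are placeholders chosen for illustration and are not part of the original notes.

```bash
#!/usr/bin/env bash
# Write a minimal DaemonSet manifest to a temp file.
# All names below (node-agent, agent, busybox) are illustrative placeholders.
manifest=$(mktemp)
cat > "$manifest" <<'EOF'
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-agent
spec:
  selector:
    matchLabels:
      app: node-agent
  template:
    metadata:
      labels:
        app: node-agent
    spec:
      containers:
      - name: agent
        image: busybox
        command: ["sleep", "3600"]
EOF
cat "$manifest"
# To create it on a cluster: kubectl apply -f "$manifest"
```

Unlike a Deployment, the manifest has no `replicas` field: the number of Pods follows the number of eligible nodes.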

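PV & PVC

PV and PVC decouple Pods from storage: the storage backend can change without touching the Pod spec, and storage and its access permissions can be isolated per project.

- PV (PersistentVolume): storage provisioned by the Kubernetes administrator and part of the cluster. Just as a node is a cluster resource, a PV is a cluster resource. A PV is a volume plugin like Volume, but its lifecycle is independent of any Pod that uses it. The API object captures the details of the storage implementation, such as NFS, iSCSI, or a cloud-provider-specific storage system. PVs also help isolate project data.

- PVC (PersistentVolumeClaim): a user's request for storage. It is similar to a Pod: Pods consume node resources, while PVCs consume PV resources. A Pod can request specific levels of resources, and a claim can request a specific size and access mode (for example, mounted read-write by a single node, or read-only by many nodes).

- Official documentation: https://kubernetes.io/zh/docs/concepts/storage/persistent-volumes/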

Redis example using an NFS-backed PV

Create the PV directory on the NFS node (10.168.56.113)

```bash
root@k8s-ha01:~# mkdir -pv /data/nfs/cropy/redis-datadir-pv1
```

Create the PV and PVC

```bash
root@k8s-master01:~/controller/redis-pv# vim redis-pv.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: redis-datadir-pv1   # PVs are cluster-scoped, so no namespace is set
spec:
  capacity:
    storage: 5G   # must be at least the PVC request below, otherwise the claim stays Pending
  accessModes:
  - ReadWriteOnce
  nfs:
    path: /data/nfs/cropy/redis-datadir-pv1
    server: 10.168.56.113
root@k8s-master01:~/controller/redis-pv# vim redis-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: redis-datadir-pvc1
  namespace: cropy
spec:
  volumeName: redis-datadir-pv1
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi
root@k8s-master01:~/controller/redis-pv# kubectl create ns cropy
root@k8s-master01:~/controller/redis-pv# kubectl apply -f redis-pv.yaml
root@k8s-master01:~/controller/redis-pv# kubectl apply -f redis-pvc.yaml
```
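
A claim binds only to a volume whose capacity is at least the requested size, and the decimal suffix `G` (10^9 bytes) is smaller than the binary suffix `Gi` (2^30 bytes). The sketch below is a local sanity check for that rule; the helper names `to_bytes` and `can_bind` are hypothetical, and it handles only whole-number quantities with the suffixes listed in the code.

```bash
#!/usr/bin/env bash
# Convert a whole-number Kubernetes quantity (e.g. 5G, 2Gi) to bytes.
# Hypothetical helper: supports k, M, G, T and Ki, Mi, Gi, Ti only.
to_bytes() {
  local q=$1 num unit
  num=${q%%[!0-9]*}    # leading digits
  unit=${q#"$num"}     # remaining suffix
  case $unit in
    "") echo "$num" ;;
    k)  echo $(( num * 1000 )) ;;
    M)  echo $(( num * 1000 ** 2 )) ;;
    G)  echo $(( num * 1000 ** 3 )) ;;
    T)  echo $(( num * 1000 ** 4 )) ;;
    Ki) echo $(( num * 1024 )) ;;
    Mi) echo $(( num * 1024 ** 2 )) ;;
    Gi) echo $(( num * 1024 ** 3 )) ;;
    Ti) echo $(( num * 1024 ** 4 )) ;;
    *)  echo "unsupported suffix: $unit" >&2; return 1 ;;
  esac
}

# Succeeds when a claim requesting $1 fits into a volume of capacity $2.
can_bind() {
  [ "$(to_bytes "$1")" -le "$(to_bytes "$2")" ]
}

for capacity in 1G 5G; do
  if can_bind 2Gi "$capacity"; then
    echo "a 2Gi request fits a $capacity volume"
  else
    echo "a 2Gi request does not fit a $capacity volume"
  fi
done
```

Size is only one of the binding conditions; access modes and storage class must match as well.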

Hands-on: building a ZooKeeper cluster

a. Build the ZooKeeper image

root@k8s-master01:~# mkdir ~/controller/zookeeper/{docker/bin,docker/conf,k8s/pv_pvc} -p

root@k8s-master01:~# cd ~/controller/zookeeper/docker/

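root@k8s-master01:~/controller/zookeeper/docker# wget https://archive.apache.org/dist/zookeeper/zookeeper-3.4.14/zookeeper-3.4.14.tar.gz.asc

root@k8s-master01:~/controller/zookeeper/docker# wget https://archive.apache.org/dist/zookeeper/zookeeper-3.4.14/zookeeper-3.4.14.tar.gz

root@k8s-master01:~/controller/zookeeper/docker# wget https://downloads.apache.org/zookeeper/KEYS  # the Dockerfile copies KEYS to verify the tarball signature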

root@k8s-master01:~/controller/zookeeper/docker# docker pull elevy/slim_java:8

root@k8s-master01:~/controller/zookeeper/docker# docker tag elevy/slim_java:8 harbor.cropy.cn/baseimages/slim_java:8

root@k8s-master01:~/controller/zookeeper/docker# docker push harbor.cropy.cn/baseimages/slim_java:8

root@k8s-master01:~/controller/zookeeper/docker# cat repositories

http://mirrors.aliyun.com/alpine/v3.6/main

http://mirrors.aliyun.com/alpine/v3.6/community

root@k8s-master01:~/controller/zookeeper/docker# cat entrypoint.sh

#!/bin/bash

echo ${MYID:-1} > /zookeeper/data/myid

if [ -n "$SERVERS" ]; then

IFS=\, read -a servers <<<"$SERVERS"

for i in "${!servers[@]}"; do

printf "\nserver.%i=%s:2888:3888" "$((1 + $i))" "${servers[$i]}" >> /zookeeper/conf/zoo.cfg

done

fi

cd /zookeeper

exec "$@"
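
To make the effect of `entrypoint.sh` concrete, the sketch below reproduces only its `SERVERS` expansion and prints the result to stdout instead of appending to `/zookeeper/conf/zoo.cfg`. The function name `render_servers` is hypothetical; the parsing and the `server.N=host:2888:3888` format follow the script above.

```bash
#!/usr/bin/env bash
# Expand a comma-separated host list into zoo.cfg "server.N" lines.
# render_servers is an illustrative name, not part of the image.
render_servers() {
  local list=$1 servers i
  IFS=, read -r -a servers <<<"$list"
  for i in "${!servers[@]}"; do
    # Array indexes start at 0, ZooKeeper server ids at 1.
    printf 'server.%d=%s:2888:3888\n' "$((i + 1))" "${servers[$i]}"
  done
}

render_servers "zookeeper1,zookeeper2,zookeeper3"
```

The position of a host in `SERVERS` determines its server id, so every Pod must receive the same list in the same order, and each Pod's `MYID` must match its own position.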

root@k8s-master01:~/controller/zookeeper/docker# vim bin/zkReady.sh

#!/bin/bash

/zookeeper/bin/zkServer.sh status | egrep 'Mode: (standalone|leading|following|observing)'
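
The readiness pattern is worth checking against real output. Assuming `zkServer.sh status` reports the role as `Mode: leader`, `Mode: follower`, `Mode: observer` or `Mode: standalone` (an assumption to verify against your ZooKeeper build), a pattern listing `leading|following|observing` would match only the standalone case. The sketch below tests a pattern against sample text read from stdin; `is_ready` is a hypothetical helper and the sample lines are made up for the check.

```bash
#!/usr/bin/env bash
# Succeeds when the status text on stdin reports a serving role.
# Role names are assumed; confirm them with `zkServer.sh status` in your image.
is_ready() {
  grep -Eq 'Mode: (standalone|leader|follower|observer)'
}

for sample in "Mode: follower" "Error contacting service. It is probably not running."; do
  if printf '%s\n' "$sample" | is_ready; then
    echo "ready:     $sample"
  else
    echo "not ready: $sample"
  fi
done
```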

root@k8s-master01:~/controller/zookeeper/docker# vim conf/zoo.cfg

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/zookeeper/data

dataLogDir=/zookeeper/wal

#snapCount=100000

autopurge.purgeInterval=1

clientPort=2181

quorumListenOnAllIPs=true

root@k8s-master01:~/controller/zookeeper/docker# vim conf/log4j.properties

# Define some default values that can be overridden by system properties

zookeeper.root.logger=INFO, CONSOLE, ROLLINGFILE

zookeeper.console.threshold=INFO

zookeeper.log.dir=/zookeeper/log

zookeeper.log.file=zookeeper.log

zookeeper.log.threshold=INFO

zookeeper.tracelog.dir=/zookeeper/log

zookeeper.tracelog.file=zookeeper_trace.log

#

# ZooKeeper Logging Configuration

#

# Format is "<default threshold> (, <appender>)+

# DEFAULT: console appender only

log4j.rootLogger=${zookeeper.root.logger}

# Example with rolling log file

#log4j.rootLogger=DEBUG, CONSOLE, ROLLINGFILE

# Example with rolling log file and tracing

#log4j.rootLogger=TRACE, CONSOLE, ROLLINGFILE, TRACEFILE

#

# Log INFO level and above messages to the console

#

log4j.appender.CONSOLE=org.apache.log4j.ConsoleAppender

log4j.appender.CONSOLE.Threshold=${zookeeper.console.threshold}

log4j.appender.CONSOLE.layout=org.apache.log4j.PatternLayout

log4j.appender.CONSOLE.layout.ConversionPattern=%d{ISO8601} [myid:%X{myid}] - %-5p [%t:%C{1}@%L] - %m%n

#

# Add ROLLINGFILE to rootLogger to get log file output

# Log DEBUG level and above messages to a log file

log4j.appender.ROLLINGFILE=org.apache.log4j.RollingFileAppender

log4j.appender.ROLLINGFILE.Threshold=${zookeeper.log.threshold}

log4j.appender.ROLLINGFILE.File=${zookeeper.log.dir}/${zookeeper.log.file}

# Max log file size of 10MB

log4j.appender.ROLLINGFILE.MaxFileSize=10MB

# uncomment the next line to limit number of backup files

log4j.appender.ROLLINGFILE.MaxBackupIndex=5

log4j.appender.ROLLINGFILE.layout=org.apache.log4j.PatternLayout

log4j.appender.ROLLINGFILE.layout.ConversionPattern=%d{ISO8601} [myid:%X{myid}] - %-5p [%t:%C{1}@%L] - %m%n

#

# Add TRACEFILE to rootLogger to get log file output

# Log DEBUG level and above messages to a log file

log4j.appender.TRACEFILE=org.apache.log4j.FileAppender

log4j.appender.TRACEFILE.Threshold=TRACE

log4j.appender.TRACEFILE.File=${zookeeper.tracelog.dir}/${zookeeper.tracelog.file}

log4j.appender.TRACEFILE.layout=org.apache.log4j.PatternLayout

### Notice we are including log4j's NDC here (%x)

log4j.appender.TRACEFILE.layout.ConversionPattern=%d{ISO8601} [myid:%X{myid}] - %-5p [%t:%C{1}@%L][%x] - %m%n

root@k8s-master01:~/controller/zookeeper/docker# vim Dockerfile

FROM harbor.cropy.cn/baseimages/slim_java:8

ENV ZK_VERSION 3.4.14

ADD repositories /etc/apk/repositories

COPY zookeeper-3.4.14.tar.gz /tmp/zk.tgz

COPY zookeeper-3.4.14.tar.gz.asc /tmp/zk.tgz.asc

COPY KEYS /tmp/KEYS

RUN apk add --no-cache --virtual .build-deps \

ca-certificates \

gnupg \

tar \

wget && \

apk add --no-cache \

bash && \

export GNUPGHOME="$(mktemp -d)" && \

gpg -q --batch --import /tmp/KEYS && \

gpg -q --batch --no-auto-key-retrieve --verify /tmp/zk.tgz.asc /tmp/zk.tgz && \

mkdir -p /zookeeper/data /zookeeper/wal /zookeeper/log && \

tar -x -C /zookeeper --strip-components=1 --no-same-owner -f /tmp/zk.tgz && \

cd /zookeeper && \

cp dist-maven/zookeeper-${ZK_VERSION}.jar . && \

rm -rf \

*.txt \

*.xml \

bin/README.txt \

bin/*.cmd \

conf/* \

contrib \

dist-maven \

docs \

lib/*.txt \

lib/cobertura \

lib/jdiff \

recipes \

src \

zookeeper-*.asc \

zookeeper-*.md5 \

zookeeper-*.sha1 && \

apk del .build-deps && \

rm -rf /tmp/* "$GNUPGHOME"

COPY conf /zookeeper/conf/

COPY bin/zkReady.sh /zookeeper/bin/

COPY entrypoint.sh /

ENV PATH=/zookeeper/bin:${PATH} \

ZOO_LOG_DIR=/zookeeper/log \

ZOO_LOG4J_PROP="INFO, CONSOLE, ROLLINGFILE" \

JMXPORT=9010

ENTRYPOINT [ "/entrypoint.sh" ]

CMD [ "zkServer.sh", "start-foreground" ]

EXPOSE 2181 2888 3888 9010

root@k8s-master01:~/controller/zookeeper/docker# chmod a+x *.sh

root@k8s-master01:~/controller/zookeeper/docker# docker build . -t harbor.cropy.cn/baseimages/zookeeper:v1

root@k8s-master01:~/controller/zookeeper/docker# docker push harbor.cropy.cn/baseimages/zookeeper:v1

root@k8s-master01:~/controller/zookeeper/docker# docker run -it --rm harbor.cropy.cn/baseimages/zookeeper:v1 # smoke-test that the container starts

b. Create the PVs and PVCs

```bash
## First create the ZooKeeper data directories on the NFS node (10.168.56.113)
root@k8s-ha01:~# mkdir -p /data/nfs/cropy/zookeeper/zk-data-{1..3}

## Back on k8s-master01, create the PVs and PVCs
root@k8s-master01:~# cd ~/controller/zookeeper/k8s/pv_pvc/
root@k8s-master01:~/controller/zookeeper/k8s/pv_pvc# vim zk-pv.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: zookeeper-datadir-pv-1
spec:
  capacity:
    storage: 2Gi
  accessModes:
  - ReadWriteOnce
  nfs:
    server: 10.168.56.113
    path: /data/nfs/cropy/zookeeper/zk-data-1
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: zookeeper-datadir-pv-2
spec:
  capacity:
    storage: 2Gi
  accessModes:
  - ReadWriteOnce
  nfs:
    server: 10.168.56.113
    path: /data/nfs/cropy/zookeeper/zk-data-2
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: zookeeper-datadir-pv-3
spec:
  capacity:
    storage: 2Gi
  accessModes:
  - ReadWriteOnce
  nfs:
    server: 10.168.56.113
    path: /data/nfs/cropy/zookeeper/zk-data-3
root@k8s-master01:~/controller/zookeeper/k8s/pv_pvc# vim zk-pvc.yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: zookeeper-datadir-pvc-1
  namespace: cropy
spec:
  accessModes:
  - ReadWriteOnce
  volumeName: zookeeper-datadir-pv-1
  resources:
    requests:
      storage: 2Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: zookeeper-datadir-pvc-2
  namespace: cropy
spec:
  accessModes:
  - ReadWriteOnce
  volumeName: zookeeper-datadir-pv-2
  resources:
    requests:
      storage: 2Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: zookeeper-datadir-pvc-3
  namespace: cropy
spec:
  accessModes:
  - ReadWriteOnce
  volumeName: zookeeper-datadir-pv-3
  resources:
    requests:
      storage: 2Gi
root@k8s-master01:~/controller/zookeeper/k8s/pv_pvc# kubectl apply -f zk-pv.yaml
root@k8s-master01:~/controller/zookeeper/k8s/pv_pvc# kubectl apply -f zk-pvc.yaml
root@k8s-master01:~/controller/zookeeper/k8s/pv_pvc# kubectl get pvc -n cropy
NAME                      STATUS   VOLUME                   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
redis-datadir-pvc1        Bound    redis-datadir-pv1        5G         RWO                           8h
zookeeper-datadir-pvc-1   Bound    zookeeper-datadir-pv-1   2Gi        RWO                           31s
zookeeper-datadir-pvc-2   Bound    zookeeper-datadir-pv-2   2Gi        RWO                           31s
zookeeper-datadir-pvc-3   Bound    zookeeper-datadir-pv-3   2Gi        RWO                           30s
```
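
The three PV/PVC pairs differ only in their index, so they can also be generated rather than written by hand. The sketch below prints the same manifests to stdout from a loop; the function name `gen_zk_pv_pvc` and its parameter order are hypothetical, while the object names, NFS server and paths follow the files above.

```bash
#!/usr/bin/env bash
# Print N PersistentVolume/PersistentVolumeClaim pairs as a multi-document YAML stream.
# gen_zk_pv_pvc <count> <nfs-server> <nfs-base-dir> <size> <namespace>  (illustrative helper)
gen_zk_pv_pvc() {
  local count=$1 server=$2 base=$3 size=$4 ns=$5 i
  for i in $(seq 1 "$count"); do
    cat <<EOF
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: zookeeper-datadir-pv-$i
spec:
  capacity:
    storage: $size
  accessModes:
  - ReadWriteOnce
  nfs:
    server: $server
    path: $base/zk-data-$i
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: zookeeper-datadir-pvc-$i
  namespace: $ns
spec:
  accessModes:
  - ReadWriteOnce
  volumeName: zookeeper-datadir-pv-$i
  resources:
    requests:
      storage: $size
EOF
  done
}

gen_zk_pv_pvc 3 10.168.56.113 /data/nfs/cropy/zookeeper 2Gi cropy
```

The output can be redirected to a file and applied with `kubectl apply -f`, which keeps the PV and PVC definitions in step when the node count changes.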

c. Create the ZooKeeper cluster

```bash
root@k8s-master01:~# cd ~/controller/zookeeper/k8s/
root@k8s-master01:~/controller/zookeeper/k8s# vim zk-cluster.yaml
apiVersion: v1
kind: Service
metadata:
  name: zookeeper
  namespace: cropy
spec:
  ports:
  - name: client
    port: 2181
  selector:
    app: zookeeper
---
apiVersion: v1
kind: Service
metadata:
  name: zookeeper1
  namespace: cropy
spec:
  type: NodePort
  ports:
  - name: client
    port: 2181
    nodePort: 42181   # outside the default NodePort range; the apiserver range must allow it
  - name: followers
    port: 2888
  - name: election
    port: 3888
  selector:
    app: zookeeper
    server-id: "1"
---
apiVersion: v1
kind: Service
metadata:
  name: zookeeper2
  namespace: cropy
spec:
  type: NodePort
  ports:
  - name: client
    port: 2181
    nodePort: 42182
  - name: followers
    port: 2888
  - name: election
    port: 3888
  selector:
    app: zookeeper
    server-id: "2"
---
apiVersion: v1
kind: Service
metadata:
  name: zookeeper3
  namespace: cropy
spec:
  type: NodePort
  ports:
  - name: client
    port: 2181
    nodePort: 42183
  - name: followers
    port: 2888
  - name: election
    port: 3888
  selector:
    app: zookeeper
    server-id: "3"
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: zookeeper1
  namespace: cropy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zookeeper
  template:
    metadata:
      labels:
        app: zookeeper
        server-id: "1"
    spec:
      # The original file declared "volumes" twice (emptyDir entries first, the PVC second).
      # A duplicate key is invalid YAML and only the last list took effect, so only the PVC is kept.
      volumes:
      - name: zookeeper-datadir-pvc-1
        persistentVolumeClaim:
          claimName: zookeeper-datadir-pvc-1
      containers:
      - name: server
        image: harbor.cropy.cn/baseimages/zookeeper:v1
        imagePullPolicy: Always
        env:
        - name: MYID
          value: "1"
        - name: SERVERS
          value: "zookeeper1,zookeeper2,zookeeper3"
        - name: JVMFLAGS
          value: "-Xmx2G"
        ports:
        - containerPort: 2181
        - containerPort: 2888
        - containerPort: 3888
        volumeMounts:
        - mountPath: "/zookeeper/data"
          name: zookeeper-datadir-pvc-1
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: zookeeper2
  namespace: cropy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zookeeper
  template:
    metadata:
      labels:
        app: zookeeper
        server-id: "2"
    spec:
      volumes:
      - name: zookeeper-datadir-pvc-2
        persistentVolumeClaim:
          claimName: zookeeper-datadir-pvc-2
      containers:
      - name: server
        image: harbor.cropy.cn/baseimages/zookeeper:v1
        imagePullPolicy: Always
        env:
        - name: MYID
          value: "2"
        - name: SERVERS
          value: "zookeeper1,zookeeper2,zookeeper3"
        - name: JVMFLAGS
          value: "-Xmx2G"
        ports:
        - containerPort: 2181
        - containerPort: 2888
        - containerPort: 3888
        volumeMounts:
        - mountPath: "/zookeeper/data"
          name: zookeeper-datadir-pvc-2
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: zookeeper3
  namespace: cropy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zookeeper
  template:
    metadata:
      labels:
        app: zookeeper
        server-id: "3"
    spec:
      volumes:
      - name: zookeeper-datadir-pvc-3
        persistentVolumeClaim:
          claimName: zookeeper-datadir-pvc-3
      containers:
      - name: server
        image: harbor.cropy.cn/baseimages/zookeeper:v1
        imagePullPolicy: Always
        env:
        - name: MYID
          value: "3"
        - name: SERVERS
          value: "zookeeper1,zookeeper2,zookeeper3"
        - name: JVMFLAGS
          value: "-Xmx2G"
        ports:
        - containerPort: 2181
        - containerPort: 2888
        - containerPort: 3888
        volumeMounts:
        - mountPath: "/zookeeper/data"
          name: zookeeper-datadir-pvc-3
root@k8s-master01:~/controller/zookeeper/k8s# kubectl apply -f zk-cluster.yaml
root@k8s-master01:~/controller/zookeeper/k8s# kubectl get pod -n cropy
NAME                          READY   STATUS    RESTARTS   AGE
zookeeper1-67846568bb-2ntfh   1/1     Running   0          4m24s
zookeeper2-56696f779f-r62h8   1/1     Running   0          4m24s
zookeeper3-7ccdcffbb4-82sv5   1/1     Running   0          4m24s
```
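
The ZooKeeper Services use NodePorts 42181 to 42183, which lie outside the Kubernetes default NodePort range of 30000-32767. Such values are accepted only when the kube-apiserver `--service-node-port-range` flag has been widened, which this cluster presumably has (an assumption based on the ports used in these notes). The sketch below is a local pre-check before applying a manifest; the function name `in_default_nodeport_range` is hypothetical.

```bash
#!/usr/bin/env bash
# Succeeds when the port lies in the Kubernetes default NodePort range (30000-32767).
in_default_nodeport_range() {
  local port=$1
  [ "$port" -ge 30000 ] && [ "$port" -le 32767 ]
}

for port in 30019 42181; do
  if in_default_nodeport_range "$port"; then
    echo "$port is inside the default NodePort range"
  else
    echo "$port needs a widened --service-node-port-range"
  fi
done
```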

d. Verify the ZooKeeper status

d-1: Check that the myid file on each shared volume is unique (on the NFS node, 10.168.56.113)

```bash
root@k8s-ha01:~# cat /data/nfs/cropy/zookeeper/zk-data-1/myid
1
root@k8s-ha01:~# cat /data/nfs/cropy/zookeeper/zk-data-2/myid
2
root@k8s-ha01:~# cat /data/nfs/cropy/zookeeper/zk-data-3/myid
3
```

d-2: Check the ZooKeeper status from inside Kubernetes

```bash
root@k8s-master01:~/controller/zookeeper/k8s# kubectl exec -it zookeeper1-6b65f4f88b-2slpv -n zk-cluster -- bash
bash-4.3# /zookeeper/bin/zkServer.sh status
ZooKeeper JMX enabled by default
ZooKeeper remote JMX Port set to 9010
ZooKeeper remote JMX authenticate set to false
ZooKeeper remote JMX ssl set to false
ZooKeeper remote JMX log4j set to true
Using config: /zookeeper/bin/../conf/zoo.cfg
Error contacting service. It is probably not running.
server.3=zookeeper3:2888:3888bash-4.3# ping zookeeper1
ping: bad address 'zookeeper1'
bash-4.3# nslookup zookeeper1
nslookup: can't resolve '(null)': Name does not resolve
nslookup: can't resolve 'zookeeper1': Name does not resolve
bash-4.3#
```

Hands-on: moving a static website onto Kubernetes

Locations of the Dockerfiles and Kubernetes YAML used:

- Dockerfiles: /root/k8s/web/dockerfile
- YAML: /root/k8s/web/yaml/
- In Harbor, create the `cropy` and `pub-images` image projects

1. Build the base image

```bash
root@k8s-master01:~# mkdir /root/k8s/web/{dockerfile/base,dockerfile/nginx,dockerfile/nginx_web,yaml} -p
root@k8s-master01:~# cd /root/k8s/web/dockerfile/base
root@k8s-master01:~/k8s/web/dockerfile/base# curl -L -O https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.15.1-x86_64.rpm
root@k8s-master01:~/k8s/web/dockerfile/base# vim Dockerfile
FROM centos:7.8.2003
MAINTAINER hui.wang@qq.com
ADD filebeat-7.15.1-x86_64.rpm /tmp/
RUN yum install -y /tmp/filebeat-7.15.1-x86_64.rpm vim wget tree lrzsz gcc gcc-c++ automake pcre pcre-devel zlib zlib-devel openssl openssl-devel iproute net-tools iotop && \
    rm -rf /etc/localtime && \
    ln -snf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && \
    useradd www -u 2020 && \
    useradd nginx -u 2021 && \
    rm -rf /var/cache/yum/* /tmp/filebeat-7.15.1-x86_64.rpm
root@k8s-master01:~/k8s/web/dockerfile/base# vim build.sh
#!/bin/bash
docker build -t harbor.cropy.cn/baseimages/centos-base:7.8.2003 .
docker push harbor.cropy.cn/baseimages/centos-base:7.8.2003
root@k8s-master01:~/k8s/web/dockerfile/base# sh build.sh
```

2. Build the nginx image

```bash
root@k8s-master01:~# cd /root/k8s/web/dockerfile/nginx
root@k8s-master01:~/k8s/web/dockerfile/nginx# wget https://nginx.org/download/nginx-1.20.1.tar.gz
root@k8s-master01:~/k8s/web/dockerfile/nginx# vim Dockerfile
FROM harbor.cropy.cn/baseimages/centos-base:7.8.2003
MAINTAINER hui.wang@qq.com
ADD nginx-1.20.1.tar.gz /usr/local/src/
RUN yum install -y vim wget tree lrzsz gcc gcc-c++ automake pcre pcre-devel zlib zlib-devel openssl openssl-devel iproute net-tools iotop && \
    cd /usr/local/src/nginx-1.20.1 && \
    ./configure --prefix=/usr/local/nginx && \
    make && make install && \
    ln -sv /usr/local/nginx/sbin/nginx /usr/sbin/nginx && \
    rm -rf /usr/local/src/nginx-1.20.1
root@k8s-master01:~/k8s/web/dockerfile/nginx# vim build.sh
#!/bin/bash
docker build -t harbor.cropy.cn/pub-images/nginx-base:v1.20.1 .
sleep 1
docker push harbor.cropy.cn/pub-images/nginx-base:v1.20.1
root@k8s-master01:~/k8s/web/dockerfile/nginx# sh build.sh
```

3. Build the application image

```bash
root@k8s-master01:~# cd /root/k8s/web/dockerfile/nginx_web/
root@k8s-master01:~/k8s/web/dockerfile/nginx_web# echo "index" > index.html
root@k8s-master01:~/k8s/web/dockerfile/nginx_web# tar czf app1.tar.gz index.html
root@k8s-master01:~/k8s/web/dockerfile/nginx_web# echo "index webapps" > index.html
root@k8s-master01:~/k8s/web/dockerfile/nginx_web# vim nginx.conf
user nginx nginx;
worker_processes auto;
daemon off;

events {
    worker_connections 1024;
}

http {
    include mime.types;
    default_type application/octet-stream;
    sendfile on;
    tcp_nopush on;
    keepalive_timeout 65;
    gzip on;

    server {
        listen 80;
        server_name localhost;
        #charset koi8-r;
        #access_log logs/host.access.log main;

        location / {
            root html;
            index index.html index.htm;
        }

        location /webapp {
            root html;
            index index.html index.htm;
        }
    }
}
root@k8s-master01:~/k8s/web/dockerfile/nginx_web# vim Dockerfile
FROM harbor.cropy.cn/pub-images/nginx-base:v1.20.1
ADD nginx.conf /usr/local/nginx/conf/nginx.conf
ADD app1.tar.gz /usr/local/nginx/html/webapp/
ADD index.html /usr/local/nginx/html/index.html
# Mount points for static assets
RUN mkdir -p /usr/local/nginx/html/webapp/static /usr/local/nginx/html/webapp/images
EXPOSE 80 443
CMD ["nginx"]
root@k8s-master01:~/k8s/web/dockerfile/nginx_web# vim build.sh
#!/bin/bash
TAG=$1
docker build -t harbor.cropy.cn/cropy/nginx-web1:${TAG} .
echo "Image build finished, pushing to Harbor"
sleep 1
docker push harbor.cropy.cn/cropy/nginx-web1:${TAG}
echo "Image pushed to Harbor"
root@k8s-master01:~/k8s/web/dockerfile/nginx_web# sh build.sh v1
root@k8s-master01:~/k8s/web/dockerfile/nginx_web# docker run -it --rm -p 8888:80 harbor.cropy.cn/cropy/nginx-web1:v1 bash # test the image
[root@f548eb4a6cdd /]# cat /usr/local/nginx/conf/nginx.conf
user nginx nginx;
worker_processes auto;
daemon off;

events {
    worker_connections 1024;
}

http {
    include mime.types;
    default_type application/octet-stream;
    sendfile on;
    tcp_nopush on;
    keepalive_timeout 65;
    gzip on;

    server {
        listen 80;
        server_name localhost;

        location / {
            root html;
            index index.html index.htm;
        }

        location /webapp {
            root html;
            index index.html index.htm;
        }
    }
}
[root@f548eb4a6cdd /]# nginx
```
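
The application `build.sh` takes the image tag from `$1`, so running it without an argument produces an image reference ending in a bare colon, which `docker build -t` rejects. A minimal sketch of a guard that could sit at the top of such a script follows; the function name `image_ref` is hypothetical, and the repository name is the one used above.

```bash
#!/usr/bin/env bash
# Print "<repo>:<tag>", or fail with a usage hint when the tag is missing.
# image_ref is an illustrative helper, not part of the original build.sh.
image_ref() {
  local repo=$1 tag=$2
  if [ -z "$tag" ]; then
    echo "usage: build.sh <tag>" >&2
    return 1
  fi
  printf '%s:%s\n' "$repo" "$tag"
}

# With a tag the reference is printed; without one the helper returns non-zero.
image_ref harbor.cropy.cn/cropy/nginx-web1 v1
image_ref harbor.cropy.cn/cropy/nginx-web1 "" || echo "refusing to build without a tag"
```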

4. 构建k8s yaml

1. 创建nginx数据在nfs上的数据挂载点

```bash

root@k8s-ha01:~# mkdir /data/nfs/cropy/nginx-web/{images,data} -p

b. 创建nginx.yaml

root@k8s-master01:~# cd /root/k8s/web/yaml/

root@k8s-master01:~/k8s/web/yaml# mkdir nginx_web

root@k8s-master01:~/k8s/web/yaml# cd nginx_web/

root@k8s-master01:~/k8s/web/yaml/nginx_web# vim nginx.yaml

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

app: cropy-nginx-deployment-label

name: cropy-nginx-deployment

namespace: cropy

spec:

replicas: 1

selector:

matchLabels:

app: cropy-nginx-selector

template:

metadata:

labels:

app: cropy-nginx-selector

spec:

containers:

- name: cropy-nginx-container

image: harbor.cropy.cn/cropy/nginx-web1:v1

imagePullPolicy: Always

ports:

- containerPort: 80

protocol: TCP

name: http

- containerPort: 443

protocol: TCP

name: https

env:

- name: "password"

value: "123456"

- name: "age"

value: "20"

resources:

limits:

cpu: 2

memory: 2Gi

requests:

cpu: 200m

memory: 1Gi

volumeMounts:

- name: cropy-images

mountPath: /usr/local/nginx/html/webapp/images

readOnly: false

- name: cropy-static

mountPath: /usr/local/nginx/html/webapp/static

readOnly: false

volumes:

- name: cropy-images

nfs:

server: 10.168.56.113

path: /data/nfs/cropy/nginx-web/images

- name: cropy-static

nfs:

server: 10.168.56.113

path: /data/nfs/cropy/nginx-web/data

---

kind: Service

apiVersion: v1

metadata:

labels:

app: cropy-nginx-service-label

name: cropy-nginx-service

namespace: cropy

spec:

type: NodePort

ports:

- name: http

port: 80

protocol: TCP

targetPort: 80

nodePort: 40002

- name: https

port: 443

protocol: TCP

targetPort: 443

nodePort: 40443

selector:

app: cropy-nginx-selector

root@k8s-master01:~/k8s/web/yaml/nginx_web# kubectl apply -f nginx.yaml

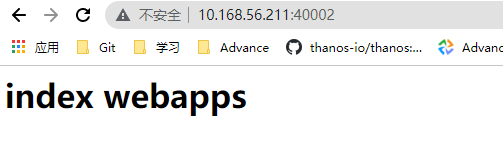

测试

root@k8s-master01:~# kubectl get pod -n cropy NAME READY STATUS RESTARTS AGE cropy-nginx-deployment-7ccf74f48c-2rgnf 1/1 Running 0 62s root@k8s-master01:~# kubectl get svc -n cropy NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE cropy-nginx-service NodePort 10.100.213.140 <none> 80:40002/TCP,443:40443/TCP 6m47stomcat项目k8s容器化改造

1. Build the JDK image

```bash
root@k8s-master01:~# cd /root/k8s/web/dockerfile/
root@k8s-master01:~/k8s/web/dockerfile# mkdir jdk tomcat
root@k8s-master01:~/k8s/web/dockerfile# cd jdk
# Download jdk-8u212-linux-x64.tar.gz yourself and place it in this directory
root@k8s-master01:~/k8s/web/dockerfile/jdk# vim Dockerfile
FROM harbor.cropy.cn/baseimages/centos-base:7.8.2003
MAINTAINER hui.wang@qq.com
ADD jdk-8u212-linux-x64.tar.gz /usr/local/src/
# Single quotes keep $JAVA_HOME, $PATH, etc. literal so they are expanded at login, not at build time
RUN ln -sv /usr/local/src/jdk1.8.0_212 /usr/local/jdk && rm -fr /var/cache/yum/* && \
    echo 'export JAVA_HOME=/usr/local/jdk' >> /etc/profile && \
    echo 'export TOMCAT_HOME=/apps/tomcat' >> /etc/profile && \
    echo 'export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$TOMCAT_HOME/bin:$PATH' >> /etc/profile && \
    echo 'export CLASSPATH=.$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/jre/lib:$JAVA_HOME/lib/tools.jar' >> /etc/profile
ENV JAVA_HOME /usr/local/jdk
ENV JRE_HOME $JAVA_HOME/jre
ENV CLASSPATH $JAVA_HOME/lib/:$JRE_HOME/lib/
ENV PATH $PATH:$JAVA_HOME/bin
root@k8s-master01:~/k8s/web/dockerfile/jdk# vim build.sh
#!/bin/bash
docker build -t harbor.cropy.cn/baseimages/jdk-base:v8.212 .
sleep 1
docker push harbor.cropy.cn/baseimages/jdk-base:v8.212
root@k8s-master01:~/k8s/web/dockerfile/jdk# bash build.sh
```

2. Build the Tomcat image

```bash
root@k8s-master01:~# cd /root/k8s/web/dockerfile/tomcat/
# The downloaded version must match the tarball name used in the Dockerfile (8.5.72)
root@k8s-master01:~/k8s/web/dockerfile/tomcat# wget https://dlcdn.apache.org/tomcat/tomcat-8/v8.5.72/bin/apache-tomcat-8.5.72.tar.gz
root@k8s-master01:~/k8s/web/dockerfile/tomcat# vim Dockerfile
FROM harbor.cropy.cn/baseimages/jdk-base:v8.212
MAINTAINER hui.wang@qq.com
RUN mkdir /apps /data/tomcat/webapps /data/tomcat/logs -pv
ADD apache-tomcat-8.5.72.tar.gz /apps
RUN useradd tomcat -u 2022 && ln -sv /apps/apache-tomcat-8.5.72 /apps/tomcat && chown -R tomcat:tomcat /apps /data
root@k8s-master01:~/k8s/web/dockerfile/tomcat# vim build.sh
#!/bin/bash
docker build -t harbor.cropy.cn/baseimages/tomcat-base:v8.5.72 .
sleep 3
docker push harbor.cropy.cn/baseimages/tomcat-base:v8.5.72
root@k8s-master01:~/k8s/web/dockerfile/tomcat# bash build.sh
```

3. Build the application image

```bash
root@k8s-master01:~# mkdir /root/k8s/web/dockerfile/tomcat_web
root@k8s-master01:~# cd /root/k8s/web/dockerfile/tomcat_web
root@k8s-master01:~/k8s/web/dockerfile/tomcat_web# echo "tomcat webapps v1" > index.html
root@k8s-master01:~/k8s/web/dockerfile/tomcat_web# tar czf app1.tar.gz index.html
root@k8s-master01:~/k8s/web/dockerfile/tomcat_web# docker run -itd --name t1 harbor.cropy.cn/baseimages/tomcat-base:v8.5.72 sleep 36000
root@k8s-master01:~/k8s/web/dockerfile/tomcat_web# docker cp t1:/apps/tomcat/conf/server.xml ./
root@k8s-master01:~/k8s/web/dockerfile/tomcat_web# docker cp t1:/apps/tomcat/bin/catalina.sh ./
root@k8s-master01:~/k8s/web/dockerfile/tomcat_web# vim catalina.sh
JAVA_OPTS="-server -Xms1g -Xmx1g -Xss512k -Xmn1g -XX:CMSInitiatingOccupancyFraction=65 -XX:+UseFastAccessorMethods -XX:+AggressiveOpts -XX:+UseBiasedLocking -XX:+DisableExplicitGC -XX:MaxTenuringThreshold=10 -XX:NewSize=2048M -XX:MaxNewSize=2048M -XX:NewRatio=2 -XX:PermSize=128m -XX:MaxPermSize=512m -XX:CMSFullGCsBeforeCompaction=5 -XX:+ExplicitGCInvokesConcurrent -XX:+UseConcMarkSweepGC -XX:+UseParNewGC -XX:+CMSParallelRemarkEnabled"
# OS specific support.  $var _must_ be set to either true or false.
root@k8s-master01:~/k8s/web/dockerfile/tomcat_web# vim server.xml
root@k8s-master01:~/k8s/web/dockerfile/tomcat_web# vim run_tomcat.sh
#!/bin/bash
su - nginx -c "/apps/tomcat/bin/catalina.sh start"
tail -f /etc/hosts
root@k8s-master01:~/k8s/web/dockerfile/tomcat_web# vim Dockerfile
FROM harbor.cropy.cn/baseimages/tomcat-base:v8.5.72
ADD catalina.sh /apps/tomcat/bin/catalina.sh
ADD server.xml /apps/tomcat/conf/server.xml
ADD app1.tar.gz /data/tomcat/webapps/myapp/
ADD run_tomcat.sh /apps/tomcat/bin/run_tomcat.sh
RUN chown -R nginx:nginx /data/ /apps/
EXPOSE 8080 8443
CMD ["/apps/tomcat/bin/run_tomcat.sh"]
root@k8s-master01:~/k8s/web/dockerfile/tomcat_web# chmod +x *.sh
root@k8s-master01:~/k8s/web/dockerfile/tomcat_web# vim build.sh
#!/bin/bash
TAG=$1
docker build -t harbor.cropy.cn/cropy/tomcat-app1:${TAG} .
sleep 3
docker push harbor.cropy.cn/cropy/tomcat-app1:${TAG}
# The tag argument is required; the Deployment below references tomcat-app1:v1
root@k8s-master01:~/k8s/web/dockerfile/tomcat_web# sh build.sh v1
```

4. k8s 配置构建

1. 准备nfs存储(10.168.56.113)

```bash

root@k8s-ha01:~# mkdir /data/nfs/cropy/tomcat/{images,data} -p

- 准备业务yaml

```bash

root@k8s-master01:~# mkdir /root/k8s/web/yaml/tomcat_app1

root@k8s-master01:~# cd /root/k8s/web/yaml/tomcat_app1

root@k8s-master01:~/k8s/web/yaml/tomcat_app1# vim tomcat.yaml

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

app: cropy-tomcat-app1-deployment-label

name: cropy-tomcat-app1-deployment

namespace: cropy

spec:

replicas: 1

selector:

matchLabels:

app: cropy-tomcat-app1-selector

template:

metadata:

labels:

app: cropy-tomcat-app1-selector

spec:

containers:

- name: cropy-tomcat-app1-container

image: harbor.cropy.cn/cropy/tomcat-app1:v1

imagePullPolicy: Always

ports:

- containerPort: 8080 protocol: TCP name: http env:

- name: “password” value: “123456”

- name: “age” value: “18” resources: limits: cpu: 1 memory: “512Mi” requests: cpu: 500m memory: “512Mi” volumeMounts:

- name: cropy-images mountPath: /usr/local/nginx/html/webapp/images readOnly: false

- name: cropy-static mountPath: /usr/local/nginx/html/webapp/static readOnly: false volumes:

- name: cropy-images nfs: server: 10.168.56.113 path: /data/nfs/cropy/tomcat/images

- name: cropy-static

nfs:

server: 10.168.56.113

path: //data/nfs/cropy/tomcat/data

nodeSelector:

project: cropy

app: tomcat

- name: cropy-tomcat-app1-container

image: harbor.cropy.cn/cropy/tomcat-app1:v1

imagePullPolicy: Always

ports:

kind: Service apiVersion: v1 metadata: labels: app: cropy-tomcat-app1-service-label name: cropy-tomcat-app1-service namespace: cropy spec: type: NodePort ports:

- name: http port: 80 protocol: TCP targetPort: 8080 nodePort: 40003 selector: app: cropy-tomcat-app1-selector

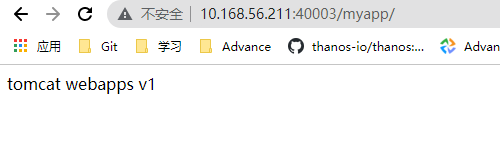

root@k8s-master01:~/k8s/web/yaml/tomcat_app1# kubectl apply -f tomcat.yaml

5. 测试

```bash

root@k8s-master01:~/k8s/web/yaml/tomcat_app1# kubectl get pod -n cropy

NAME READY STATUS RESTARTS AGE

cropy-tomcat-app1-deployment-7dbb854665-gv8mr 1/1 Running 0 35s

root@k8s-master01:~/k8s/web/yaml/tomcat_app1# kubectl get svc -n cropy

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

cropy-tomcat-app1-service NodePort 10.100.177.245 <none> 80:40003/TCP 44s

基于ceph存储的k8s实战

搭建ceph集群

- 参考文档: https://www.yuque.com/wanghui-pooq1/ryovus/yf38he

结合rbd存储以及动态存储卷使用案例

ceph侧环境准备

创建并初始化rbd

root@ceph-deploy:~# ceph -s #查看ceph状态 cluster: id: 07d5a4e9-68f6-4172-b716-c6f648d34003 health: HEALTH_OK services: mon: 1 daemons, quorum ceph-mon-mgr1 (age 54m) mgr: ceph-mon-mgr1(active, since 46m) osd: 8 osds: 8 up (since 9m), 8 in (since 9m) data: pools: 1 pools, 128 pgs objects: 0 objects, 0 B usage: 78 MiB used, 160 GiB / 160 GiB avail pgs: 128 active+clean root@ceph-deploy:~# ceph osd pool create k8s01-rbd 64 64 #创建rbd root@ceph-deploy:~# ceph osd pool application enable k8s01-rbd rbd #启用rbd root@ceph-deploy:~# rbd pool init -p k8s01-rbd #初始化rbd创建并验证image

root@ceph-deploy:~# rbd create k8s01-img --size 5G --pool=k8s01-rbd --image-format 2 --image-feature layering root@ceph-deploy:~# rbd create k8s01-img1 --size 5G --pool=k8s01-rbd --image-format 2 --image-feature layering root@ceph-deploy:~# rbd ls --pool k8s01-rbd k8s01-img k8s01-img1 root@ceph-deploy:~# rbd --image k8s01-img --pool k8s01-rbd info #查看img信息创建认证文件

root@ceph-deploy:~# ceph auth add client.k8s-rbd mon 'allow r' osd 'allow rwx pool=k8s01-rbd' root@ceph-deploy:~# ceph auth get client.k8s-rbd #查看权限 root@ceph-deploy:~# ceph-authtool --create-keyring ceph.client.k8s-rbd.keyring #创建keyring root@ceph-deploy:~# ceph auth get client.k8s-rbd -o ceph.client.k8s-rbd.keyring # 认证文件导出到keyringk8s集群操作

k8s集群所有节点安装ceph-common

apt install python2.7 -y ln -sv /usr/bin/python2.7 /usr/bin/python2 apt install ca-certificates wget -q -O- 'https://mirrors.tuna.tsinghua.edu.cn/ceph/keys/release.asc' | sudo apt-key add - apt-add-repository 'deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-octopus/ focal main' apt update apt install ceph-common -y从ceph-deploy 将ceph.client.k8s-rbd.keyring 和ceph.conf拷贝到所有k8s节点

```bash
root@ceph-deploy:~# for i in 192.168.56.21{1..6}; do scp /root/ceph.client.k8s-rbd.keyring $i:/etc/ceph/; done
root@ceph-deploy:~# for i in 192.168.56.21{1..6}; do scp /etc/ceph/ceph.conf $i:/etc/ceph/; done
```

Test Ceph access from a Kubernetes node as the k8s-rbd user

```bash
root@k8s-master01:~# ceph -s --user k8s-rbd
  cluster:
    id:     07d5a4e9-68f6-4172-b716-c6f648d34003
    health: HEALTH_OK

  services:
    mon: 1 daemons, quorum ceph-mon-mgr1 (age 88m)
    mgr: ceph-mon-mgr1(active, since 80m)
    osd: 8 osds: 8 up (since 43m), 8 in (since 43m)

  data:
    pools:   2 pools, 192 pgs
    objects: 9 objects, 421 B
    usage:   82 MiB used, 160 GiB / 160 GiB avail
    pgs:     192 active+clean
```

Add Ceph host name resolution on all Kubernetes nodes

```bash
cat << EOF >> /etc/hosts
10.168.56.100 ceph-deploy
10.168.56.101 ceph-mon-mgr1 ceph-mon1 ceph-mgr1
10.168.56.102 ceph-mon-mgr2 ceph-mon2 ceph-mgr2
10.168.56.103 ceph-mon3
10.168.56.104 ceph-data1
10.168.56.105 ceph-data2
10.168.56.106 ceph-data3
EOF
```

Mounting RBD in a busybox Pod with a keyring

YAML file

```bash
root@k8s-master01:~# mkdir /root/k8s/ceph-rbd/
root@k8s-master01:~# vim /root/k8s/ceph-rbd/case1.yaml
apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: default
spec:
  containers:
  - image: busybox
    command:
      - sleep
      - "3600"
    imagePullPolicy: Always
    name: busybox
    #restartPolicy: Always
    volumeMounts:
    - name: rbd-data1
      mountPath: /data
  volumes:
    - name: rbd-data1
      rbd:
        monitors:
        - '10.168.56.101:6789'
        - '10.168.56.102:6789'
        - '10.168.56.103:6789'
        pool: k8s01-rbd
        image: k8s01-img
        fsType: ext4
        readOnly: false
        user: k8s-rbd
        keyring: /etc/ceph/ceph.client.k8s-rbd.keyring
```

Apply the configuration

```bash
root@k8s-master01:~# kubectl apply -f /root/k8s/ceph-rbd/case1.yaml
```

Check the RBD mapping

```bash
root@k8s-master01:~# kubectl get pod -o wide    # the pod was scheduled to node 10.168.56.215, so run the next command on that node
NAME      READY   STATUS    RESTARTS   AGE   IP              NODE            NOMINATED NODE   READINESS GATES
busybox   1/1     Running   0          49s   10.200.58.222   10.168.56.215
root@k8s-node02:~# rbd showmapped
id  pool       namespace  image      snap  device
0   k8s01-rbd             k8s01-img  -     /dev/rbd0
```

<a name="Td71i"></a>

##### Mounting RBD in Kubernetes with a Secret

1. Base64-encode the key from the keyring (base64 is an encoding, not encryption)

```bash
root@k8s-master01:~# cat /etc/ceph/ceph.client.k8s-rbd.keyring | grep key | awk '{print $3}'| base64
QVFBVnlYTmh0L1ZjRkJBQTJBV1ZVTGYvRE1GYXNpeCtWYzhqM3c9PQo=
```
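The encoded value above ends in `PQo=`, which is what base64 produces when the input ends with `=` followed by a newline: `awk '{print $3}'` appends a newline, and it gets encoded together with the key. Whether a given Ceph client tolerates that extra byte is not verified in these notes, so the following is only a minimal sketch of encoding the key bytes alone. It assumes GNU coreutils `base64` and a keyring in the usual `key = <value>` layout; the function name `encode_ceph_key` and the sample key are hypothetical.

```shell
#!/bin/sh
# encode_ceph_key: read a Ceph keyring on stdin and print the base64 of the
# key only. printf "%s" avoids the trailing newline that print would add.
# (hypothetical helper, not part of Ceph or Kubernetes)
encode_ceph_key() {
    awk '$1 == "key" && $2 == "=" { printf "%s", $3 }' | base64 | tr -d '\n'
}

# Illustrative keyring; the key below is a made-up placeholder
sample_keyring='[client.example]
	key = EXAMPLEKEY==
	caps mon = "allow r"'

encoded=$(printf '%s\n' "$sample_keyring" | encode_ceph_key)
echo "$encoded"

# Decoding returns exactly the 12 key bytes, with no newline appended
printf '%s' "$encoded" | base64 -d | wc -c
```

On a host with access to the cluster, `ceph auth get-key client.k8s-rbd | base64` should give an equivalent result, on the assumption that `get-key` prints the key without a trailing newline.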

Create the Secret

```bash
root@k8s-master01:~# mkdir /root/k8s/ceph/secret -p
root@k8s-master01:~# cd /root/k8s/ceph/secret/
root@k8s-master01:~/k8s/ceph/secret# vim secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: ceph-secret-rbd
type: "kubernetes.io/rbd"
data:
  key: QVFBVnlYTmh0L1ZjRkJBQTJBV1ZVTGYvRE1GYXNpeCtWYzhqM3c9PQo=
```

Configure nginx to mount RBD using the Secret

```bash
root@k8s-master01:~/k8s/ceph/secret# vim ng-ceph-rbd-secret.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels: #rs or deployment
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - name: rbd-data1
          mountPath: /data
      volumes:
        - name: rbd-data1
          rbd:
            monitors:
            - '10.168.56.101:6789'
            - '10.168.56.102:6789'
            - '10.168.56.103:6789'
            pool: k8s01-rbd
            image: k8s01-img
            fsType: ext4
            readOnly: false
            user: k8s-rbd
            secretRef:
              name: ceph-secret-rbd
root@k8s-master01:~/k8s/ceph/secret# kubectl apply -f ng-ceph-rbd-secret.yaml
```

Check the pod status

```bash
root@k8s-master01:~/k8s/ceph/secret# kubectl get pod
NAME                                READY   STATUS    RESTARTS   AGE
net-test3                           1/1     Running   0          3d9h
nginx-deployment-557cfd7756-tc4st   1/1     Running   0          103s
```

Dynamically provisioning Ceph PVs

Generate the base64-encoded admin key on the Ceph cluster (run on the ceph-deploy node)

```bash
root@ceph-deploy:~# cat /etc/ceph/ceph.client.admin.keyring
[client.admin]
	key = AQCwuXNhx5T8AxAAngmIaN2RO8D2FjhbnErE8w==
	caps mds = "allow *"
	caps mgr = "allow *"
	caps mon = "allow *"
	caps osd = "allow *"
root@ceph-deploy:~# cat /etc/ceph/ceph.client.admin.keyring | grep key| awk '{print $3}'| base64
QVFDd3VYTmh4NVQ4QXhBQW5nbUlhTjJSTzhEMkZqaGJuRXJFOHc9PQo=
```

Create the admin Secret on k8s-master01

```bash
root@k8s-master01:~# mkdir /root/k8s/ceph/dynamic-pv
root@k8s-master01:~# cd /root/k8s/ceph/dynamic-pv
root@k8s-master01:~/k8s/ceph/dynamic-pv# vim ceph-admin-secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: ceph-secret-admin
type: "kubernetes.io/rbd"
data:
  key: QVFDd3VYTmh4NVQ4QXhBQW5nbUlhTjJSTzhEMkZqaGJuRXJFOHc9PQo=
```

Create the Secret for the PV user

```bash
root@k8s-master01:~/k8s/ceph/dynamic-pv# vim ceph-k8s-user-secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: ceph-secret-rbd
type: "kubernetes.io/rbd"
data:
  key: QVFBVnlYTmh0L1ZjRkJBQTJBV1ZVTGYvRE1GYXNpeCtWYzhqM3c9PQo=
```

Create the StorageClass

```bash
root@k8s-master01:~/k8s/ceph/dynamic-pv# vim storage-class.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ceph-storage-class-rbd
  annotations:
    storageclass.kubernetes.io/is-default-class: "true" # mark this as the default StorageClass
provisioner: kubernetes.io/rbd
parameters:
  monitors: 10.168.56.101:6789,10.168.56.102:6789,10.168.56.103:6789
  adminId: admin
  adminSecretName: ceph-secret-admin
  adminSecretNamespace: default
  pool: k8s01-rbd
  userId: k8s-rbd
  userSecretName: ceph-secret-rbd
```

Create the PVC

```bash
root@k8s-master01:~/k8s/ceph/dynamic-pv# vim mysql-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-data-pvc
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: ceph-storage-class-rbd
  resources:
    requests:
      storage: '5Gi'
```

Create a MySQL Deployment that uses the PVC

```bash
root@k8s-master01:~/k8s/ceph/dynamic-pv# docker pull mysql:5.6.46
root@k8s-master01:~/k8s/ceph/dynamic-pv# docker tag mysql:5.6.46 harbor.cropy.cn/cropy/mysql:5.6.46
root@k8s-master01:~/k8s/ceph/dynamic-pv# docker push harbor.cropy.cn/cropy/mysql:5.6.46
root@k8s-master01:~/k8s/ceph/dynamic-pv# vim mysql-deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql
spec:
  selector:
    matchLabels:
      app: mysql
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - image: harbor.cropy.cn/cropy/mysql:5.6.46
        name: mysql
        env:
          # Use secret in real usage
        - name: MYSQL_ROOT_PASSWORD
          value: cropy123456
        ports:
        - containerPort: 3306
          name: mysql
        volumeMounts:
        - name: mysql-persistent-storage
          mountPath: /var/lib/mysql
      volumes:
      - name: mysql-persistent-storage
        persistentVolumeClaim:
          claimName: mysql-data-pvc
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: mysql-service-label
  name: mysql-service
spec:
  type: NodePort
  ports:
  - name: http
    port: 3306
    protocol: TCP
    targetPort: 3306
    nodePort: 43306
  selector:
    app: mysql
```
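The Deployment above passes MYSQL_ROOT_PASSWORD as a literal value, and its own comment recommends a Secret for real use. A minimal sketch of that change follows. It assumes a Secret named `mysql-root-secret` with a key named `password`; both names, and the helper function itself, are hypothetical and do not come from the original manifests.

```shell
#!/bin/sh
# make_mysql_secret: print a Secret manifest holding the given password.
# The Secret name "mysql-root-secret" and the key "password" are assumptions
# made for this sketch.
make_mysql_secret() {
    encoded=$(printf '%s' "$1" | base64 | tr -d '\n')
    cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: mysql-root-secret
type: Opaque
data:
  password: ${encoded}
EOF
}

make_mysql_secret 'cropy123456'

# The env entry in mysql-deploy.yaml would then reference the Secret
# instead of embedding the value:
#   - name: MYSQL_ROOT_PASSWORD
#     valueFrom:
#       secretKeyRef:
#         name: mysql-root-secret
#         key: password
```

The generated manifest can be saved to a file or piped to `kubectl apply -f -`, which reads the manifest from standard input.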

Apply and test

```bash
root@k8s-master01:~/k8s/ceph/dynamic-pv# kubectl apply -f mysql-deploy.yaml
root@k8s-master01:~/k8s/ceph/dynamic-pv# kubectl get pod -o wide
```

Check the mapped RBD device

```bash
root@k8s-node02:~# rbd showmapped
id  pool       namespace  image                                                        snap  device
0   k8s01-rbd             kubernetes-dynamic-pvc-2b9f4648-e0c3-4899-8912-6da74686512d  -     /dev/rbd0
root@k8s-node02:~# ceph df --user k8s-rbd
--- RAW STORAGE ---
CLASS  SIZE     AVAIL    USED     RAW USED  %RAW USED
hdd    160 GiB  159 GiB  510 MiB  510 MiB   0.31
TOTAL  160 GiB  159 GiB  510 MiB  510 MiB   0.31

--- POOLS ---
POOL                   ID  PGS  STORED   OBJECTS  USED     %USED  MAX AVAIL
device_health_metrics  1   128  0 B      0        0 B      0      50 GiB
k8s01-rbd              2   64   125 MiB  66       374 MiB  0.24   50 GiB
```

<a name="PpXYo"></a>

#### Configuring storage with CephFS

1. Install ceph-mds on the ceph-mon3 node (a production setup should be highly available; for an HA deployment see [https://www.yuque.com/wanghui-pooq1/ryovus/byblrg](https://www.yuque.com/wanghui-pooq1/ryovus/byblrg))

```bash
root@ceph-mon3:~# apt install ceph-mds -y
```

Initialize ceph-mds from the ceph-deploy node

```bash
root@ceph-deploy:~# su - cephstore
cephstore@ceph-deploy:~$ cd ceph-clusters/
cephstore@ceph-deploy:~/ceph-clusters$ ceph-deploy mds create ceph-mon3
cephstore@ceph-deploy:~/ceph-clusters$ ceph mds stat
```

Create dedicated metadata and data pools for CephFS

```bash
cephstore@ceph-deploy:~/ceph-clusters$ ceph osd pool create k8s01-cephfs-data 64 64
cephstore@ceph-deploy:~/ceph-clusters$ ceph osd pool create k8s01-cephfs-metadata 32 32
```

Create the CephFS file system and verify it

```bash
cephstore@ceph-deploy:~/ceph-clusters$ ceph fs new myk8s-cephfs k8s01-cephfs-metadata k8s01-cephfs-data
cephstore@ceph-deploy:~/ceph-clusters$ ceph fs ls
name: myk8s-cephfs, metadata pool: k8s01-cephfs-metadata, data pools: [k8s01-cephfs-data ]
cephstore@ceph-deploy:~/ceph-clusters$ ceph fs status myk8s-cephfs
cephstore@ceph-deploy:~/ceph-clusters$ ceph mds stat
```

Create the Secret

```bash
root@k8s-master01:~# mkdir /root/k8s/ceph/cephfs
root@k8s-master01:~# cd /root/k8s/ceph/cephfs
root@k8s-master01:~/k8s/ceph/cephfs# vim cephfs-k8s-admin-secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: cephfs-secret-admin
type: "kubernetes.io/rbd"
data:
  key: QVFDd3VYTmh4NVQ4QXhBQW5nbUlhTjJSTzhEMkZqaGJuRXJFOHc9PQo=
```

Create an nginx Deployment that uses CephFS

```bash
root@k8s-master01:~/k8s/ceph/cephfs# vim cephfs-k8s.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  selector:
    matchLabels: #rs or deployment
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - name: myk8s-cephfs
          mountPath: /usr/share/nginx/html/
      volumes:
        - name: myk8s-cephfs
          cephfs:
            monitors:
            - '192.168.56.101:6789'
            - '192.168.56.102:6789'
            - '192.168.56.103:6789'
            path: /
            user: admin
            secretRef:
              name: cephfs-secret-admin
```

Apply and verify

```bash
root@k8s-master01:~/k8s/ceph/cephfs# kubectl apply -f cephfs-k8s-admin-secret.yaml
root@k8s-master01:~/k8s/ceph/cephfs# kubectl apply -f cephfs-k8s.yaml
```

Pod status and probes

Official documentation: https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle/

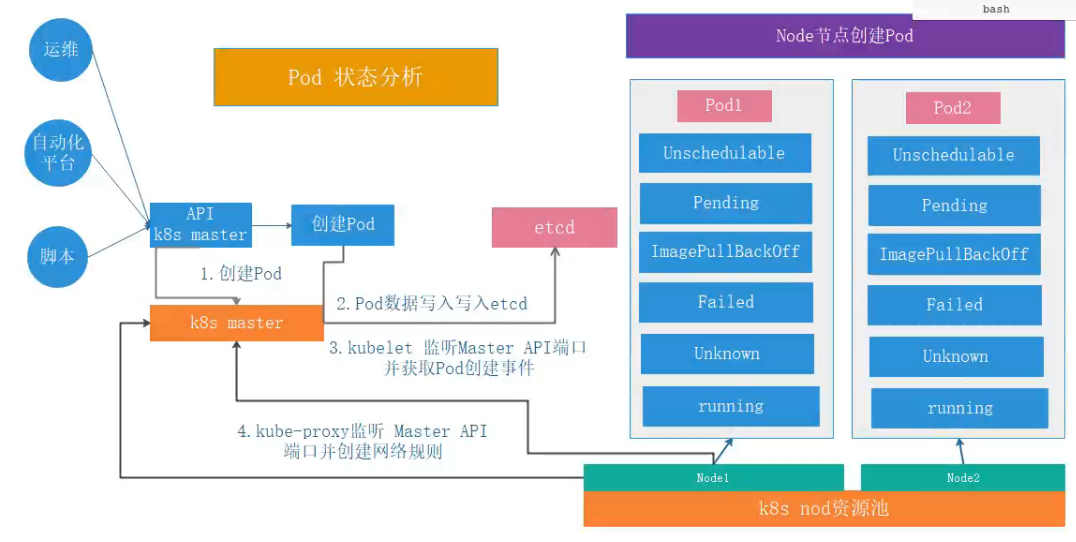

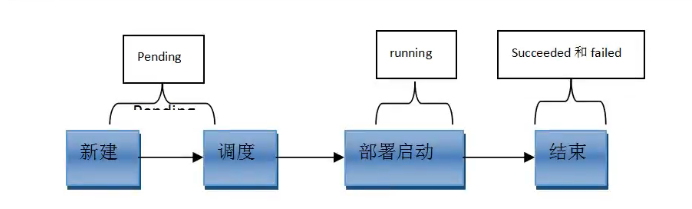

Pod status

- Stage one

  - Pending

  - Failed

  - Unknown

  - Succeeded

- Stage two

  - Unschedulable

  - PodScheduled

  - Initialized

  - ImagePullBackOff

  - Running

  - Ready

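Pod scheduling process

- The apiserver authenticates and authorizes the request

- The Pod object is written to etcd

- The scheduler receives the unscheduled Pod from the apiserver

- The scheduler picks a suitable node based on node resource usage, taints, and labels

- The kubelet on that node creates the Pod

- The kubelet reports the Pod's creation status back to the apiserver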

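Pod probes

A probe is a diagnostic performed periodically by the kubelet on a container. To perform a diagnostic, the kubelet calls a handler implemented by the container. There are three types of handlers:

- ExecAction: executes a specified command inside the container. The diagnostic is considered successful if the command exits with a status code of 0.

- TCPSocketAction: performs a TCP check against the container's IP address on a specified port. The diagnostic is considered successful if the port is open.

- HTTPGetAction: performs an HTTP GET request against the container's IP address on a specified port and path. The diagnostic is considered successful if the response status code is greater than or equal to 200 and less than 400.

Each probe has one of three results:

- Success: the container passed the diagnostic.

- Failure: the container failed the diagnostic.

- Unknown: the diagnostic itself failed, so no action is taken.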
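The success rules for the exec and HTTP handlers reduce to two small checks. The sketch below mirrors them in plain shell using hypothetical helper names; it illustrates the rules as stated here and is not how the kubelet is implemented.

```shell
#!/bin/sh
# exec_probe: run a command and report Success only when it exits with 0
exec_probe() {
    if "$@" >/dev/null 2>&1; then
        echo Success
    else
        echo Failure
    fi
}

# http_probe_result: classify an HTTP status code; 200 <= code < 400 passes
http_probe_result() {
    if [ "$1" -ge 200 ] && [ "$1" -lt 400 ]; then
        echo Success
    else
        echo Failure
    fi
}

exec_probe true            # prints Success
exec_probe false           # prints Failure
http_probe_result 302      # prints Success
http_probe_result 404      # prints Failure
```

A redirect such as 302 counts as a pass under this rule, which is worth keeping in mind when choosing the path for an HTTP probe.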

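For running containers, the kubelet can optionally perform the following three kinds of probes and react to their results:

- livenessProbe: indicates whether the container is running. If the liveness probe fails, the kubelet kills the container, and what happens next is decided by the container's restart policy. If a container does not provide a liveness probe, the default state is Success.

- readinessProbe: indicates whether the container is ready to serve requests. If the readiness probe fails, the endpoints controller removes the Pod's IP address from the endpoints of all Services that match the Pod. Before the initial delay has elapsed, the readiness state defaults to Failure. If a container does not provide a readiness probe, the default state is Success.

- startupProbe: indicates whether the application inside the container has started. If a startup probe is provided, all other probes are disabled until it succeeds. If the startup probe fails, the kubelet kills the container, and the container is restarted according to its restart policy. If a container does not provide a startup probe, the default state is Success.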

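Configuring probes

- Official documentation: https://kubernetes.io/zh/docs/tasks/configure-pod-container/configure-liveness-readiness-startup-probes/

- Common probe configuration fields

  - initialDelaySeconds: number of seconds to wait after the container has started before liveness and readiness probes are initiated. Defaults to 0. Minimum value is 0.

  - periodSeconds: how often, in seconds, to perform the probe. Defaults to 10. Minimum value is 1.

  - timeoutSeconds: number of seconds after which a probe attempt times out. Defaults to 1. Minimum value is 1.

  - successThreshold: minimum number of consecutive successes for the probe to be considered successful after having failed. Defaults to 1. Must be 1 for liveness and startup probes. Minimum value is 1.

  - failureThreshold: number of times Kubernetes retries a failing probe before giving up. For a liveness probe, giving up means restarting the container. For a readiness probe, giving up means the Pod is marked as not ready. Defaults to 3. Minimum value is 1.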

- nginx httpGet probe example

```bash
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels: #rs or deployment
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      # container definition (including the httpGet probes) is not shown
---
apiVersion: v1
kind: Service
metadata:
  name: ng-deploy-80
spec:
  ports:
  - name: http
    port: 81
    targetPort: 80
    nodePort: 40012
    protocol: TCP
  type: NodePort
  selector:
    app: ng-deploy-80
```
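The manifest above lists nothing under `containers`. As a hedged sketch only, a container with HTTP GET probes might look like the fragment below. It assumes the same container name, image, and timing values as the TCP example in this section, and it assumes `/index.html` as the probe path; the path is a guess, not a value taken from these notes. The heredoc simply writes the fragment to a scratch file so it can be inspected or merged by hand.

```shell
#!/bin/sh
# Write a container fragment with httpGet probes to a scratch file.
# Assumptions: container name, image and timings are copied from the TCP
# example; the probe path /index.html is hypothetical.
fragment=$(mktemp)
cat > "$fragment" <<'EOF'
      containers:
      - name: ng-deploy-80
        image: nginx:1.17.5
        ports:
        - containerPort: 80
        livenessProbe:
          httpGet:
            path: /index.html
            port: 80
          initialDelaySeconds: 5
          periodSeconds: 3
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 3
        readinessProbe:
          httpGet:
            path: /index.html
            port: 80
          initialDelaySeconds: 5
          periodSeconds: 3
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 3
EOF
cat "$fragment"
```

The fragment is indented to sit under `spec.template.spec` of the Deployment, so it would take the place of the empty `containers:` key.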

TCP check

```bash
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels: #rs or deployment
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx:1.17.5
        ports:
        - containerPort: 80
        livenessProbe:
          tcpSocket:
            port: 80
          initialDelaySeconds: 5
          periodSeconds: 3
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 3
        readinessProbe:
          tcpSocket:
            port: 80
          initialDelaySeconds: 5
          periodSeconds: 3
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 3
---
apiVersion: v1
kind: Service
metadata:
  name: ng-deploy-80
spec:
  ports:
  - name: http
    port: 81
    targetPort: 80
    nodePort: 40012
    protocol: TCP
  type: NodePort
  selector:
    app: ng-deploy-80
```
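With the values used in the TCP example (initialDelaySeconds: 5, periodSeconds: 3, failureThreshold: 3), a rough estimate of when a container that never passes its liveness probe reaches the failure threshold is the initial delay plus one period for each additional attempt. This is a back-of-the-envelope sketch: the function name is hypothetical, and the estimate deliberately ignores timeoutSeconds and any kubelet scheduling jitter, so real timings will be somewhat later.

```shell
#!/bin/sh
# seconds_until_threshold INITIAL_DELAY PERIOD FAILURE_THRESHOLD
# Approximate time of the probe attempt that reaches failureThreshold when
# every attempt fails immediately: the first attempt happens after the
# initial delay, and each later attempt follows one period after the last.
seconds_until_threshold() {
    echo $(( $1 + ($3 - 1) * $2 ))
}

# Values from the TCP example: initialDelaySeconds=5, periodSeconds=3,
# failureThreshold=3
seconds_until_threshold 5 3 3    # prints 11
```

Raising periodSeconds or failureThreshold makes the probe more tolerant of short hiccups at the cost of slower detection.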

Shell command check

```bash
apiVersion: apps/v1
kind: Deployment
metadata:
  name: redis-deployment
spec:
  replicas: 1
  selector:
    matchLabels: #rs or deployment
      app: redis-deploy-6379
  template:
    metadata:
      labels:
        app: redis-deploy-6379
    spec:
      containers:
      - name: redis-deploy-6379
        image: redis
        ports:
        - containerPort: 6379
        readinessProbe:
          exec:
            command:
            - /usr/local/bin/redis-cli
            - quit
          initialDelaySeconds: 5
          periodSeconds: 3
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 3
        livenessProbe:
          exec:
            command:
            - /usr/local/bin/redis-cli
            - quit
          initialDelaySeconds: 5
          periodSeconds: 3
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 3
---
apiVersion: v1
kind: Service
metadata:
  name: redis-deploy-6379
spec:
  ports:
  - name: http
    port: 6379
    targetPort: 6379
    nodePort: 40016
    protocol: TCP
  type: NodePort
  selector:
    app: redis-deploy-6379
```
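An exec probe only sees the exit status of its command, so it helps when that status is tied to the server actually answering. One possible variant, sketched here on the assumption that `redis-cli` is on the PATH inside the image, compares the reply to PING with the expected PONG. The function name `redis_ready` and the `REDIS_CLI` override variable are hypothetical and exist only so the check can be tried without a running Redis server.

```shell
#!/bin/sh
# redis_ready: succeed (exit 0) only if the server answers PING with PONG.
# REDIS_CLI can name another command to run; it defaults to redis-cli.
redis_ready() {
    reply=$("${REDIS_CLI:-redis-cli}" ping 2>/dev/null) || return 1
    [ "$reply" = "PONG" ]
}

if redis_ready; then
    echo "ready"
else
    echo "not ready"
fi
```

Inside a manifest, the same comparison could be run by passing `sh`, `-c`, and the test expression as the items of `exec.command`.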