特别关注:由于hadoop3xy不兼容hive2xy,如使用hive安装会出现各种问题,故使用hive3作为本次环境搭建

安装mysql

使用mysql5.7版本

# yum仓库安装,安装完成后就会覆盖掉之前的mariadbwget -i -c http://dev.mysql.com/get/mysql57-community-release-el7-10.noarch.rpmrpm -ivh mysql57-community-release-el7-10.noarch.rpmyum -y install mysql-community-serverif [ $0 != 0 ];thenecho "ERROR: 安装失败,尝试导入证书"rpm --import /etc/pki/rpm-gpg/RPM-GPG-KEY*yum -y install mysql-community-server || \yum -y install mysql-community-server --nogpgcheckfi# 因为安装了Yum Repository,以后每次yum操作都会自动更新,需要把这个卸载掉:yum -y remove mysql57-community-release-el7-10.noarch

/etc/my.cnf 参考配置如下(可以直接使用):

[mysqld]datadir=/var/lib/mysqlsocket=/var/lib/mysql/mysql.socklog-error=/var/log/mysqld.logpid-file=/var/run/mysqld/mysqld.pidport=3306max_connections=1000max_connect_errors=10character-set-server=UTF8MB4default-storage-engine=INNODBdefault_authentication_plugin=mysql_native_passwordexplicit_defaults_for_timestamp=true[mysql]default-character-set=UTF8MB4[client]port=3306default-character-set=UTF8MB4

启动:service mysqld restart如果启动失败需要检查上面的安装脚本输出内容。

启动后获取临时密码:cat /var/log/mysqld.log|grep password

连接数据库并重置密码:

:::info

mysql -hlocalhost -uroot -p

mysql> ALTER USER ‘root’@’localhost’ IDENTIFIED BY ‘改为你的密码’; # 重置密码

mysql> use mysql;select user,host from user; # 查看用户

:::

用户授权,需要本机执行,使其他机器可以直连

:::info

mysql> grant all privileges on . to ‘root’@’%’ identified by ‘xxx’;

:::

如果不行就:

:::info

mysql> grant all privileges on . to ‘root’@’%’ identified by ‘xxx’ with grant option;

:::

注意需要将上面的’xxx’替换为你的密码。

忘记root密码重置

/etc/my.conf 的[mysqld]中加skip-grant-tables,再执行service mysqld restart

再执行mysql

:::info

mysql> USE mysql;

mysql> UPDATE user SET authentication_string = password(‘xxx’) WHERE User = ‘root’;

mysql> flush privileges;

mysql> quit

:::

再去掉skip-grant-tables,重启数据库:service mysqld restart

登录数据库后再重置密码。

创建源数据表

创建metastore数据库实例: :::info mysql> create database metastore; :::

配置hive 环境

本次环境搭建使用hive3.1.2版本,下载地址 http://archive.apache.org/dist/

安装目录: /opt/hdata/hive

配置文件: /opt/hdata/hive/conf

包解压到/opt/hdata,解压后创建软连接:

:::info

ln -s /opt/hdata/apache-hive-3.1.2-bin /opt/hdata/hive

:::

配置hive-env.sh ,进入conf目

进入/opt/hdata/hive/conf目录

:::info

cp hive-env.sh.template hive-env.sh

:::

打开 hive-env.sh文件:

:::info

HADOOP_HOME=/opt/hdata/hadoop

export HIVE_CONF_DIR=/opt/hdata/hive/conf

:::

配置hive-site.xml

进入conf目录 :::info cp hive-default.xml.template hive-site.xml ::: 打开hive-site.xml文件,对于如下内容有则修改,无则新增:

<property><name>system:java.io.tmpdir</name><value>/user/hive/warehouse</value></property><property><name>system:user.name</name><value>${user.name}</value></property><property><name>hive.metastore.db.type</name><value>mysql</value></property><property><name>javax.jdo.option.ConnectionURL</name><value>jdbc:mysql://localhost:3306/metastore?createDatabaseIfNotExist=true&useSSL=false</value></property><property><name>javax.jdo.option.ConnectionDriverName</name><value>com.mysql.jdbc.Driver</value></property><property><name>javax.jdo.option.ConnectionUserName</name><value>root</value><description>user name for connecting to mysql server</description></property><property><name>javax.jdo.option.ConnectionPassword</name><value>root</value><description>password for connecting to mysql server</description></property>

通常 system:java.io.tmpdir system:user.name 节点需要新增,其他节点需要修改,注意替换其中的用户名密码。

创建目录

:::info

hadoop fs -mkdir -p /tmp

hadoop fs -mkdir -p /user/hive/warehouse

hadoop fs -chmod g+w /tmp

hadoop fs -chmod g+w /user/hive/warehouse

:::

替换低版本的guava.jar文件

错误一:Exception in thread “main” java.lang.NoSuchMethodError: com.google.common.base.Preconditions.checkArgument(ZLjava/lang/String;Ljava/lang/Object;)V 原因:hadoop和hive的两个guava.jar版本不一致 两个位置分别位于下面两个目录: - /usr/local/hive/lib/ - /usr/local/hadoop/share/hadoop/common/lib/

解决办法: 除低版本的那个,将高版本的复制到低版本目录下

:::info

rm -f /opt/hdata/hive/lib/guava-19.0.jar

cp /opt/hdata/hadoop/share/hadoop/common/lib/guava-27.0-jre.jar /opt/hdata/hive/lib/

:::

删除hive-site.xml中的特殊字符

错误二:Exception in thread “main” java.lang.RuntimeException: com.ctc.wstx.exc.WstxParsingException: Illegal character entity: expansion character (code 0x8 at [row,col,system-id]: [3224,96,”file:/app/hive-3.1.2/conf/hive-site.xml”] 原因: hive-site.xml配置文件中,3224行有特殊字符 解决办法: 进入hive-site.xml文件,跳转到对应行,删除里面的特殊字符即可

上传jdbc驱动至hive/lib目录

错误三: org.apache.hadoop.hive.metastore.HiveMetaException: Failed to load driver Underlying cause: java.lang.ClassNotFoundException : com.mysql.jdbc.Driver 原因:缺少jdbc驱动 解决办法:上传jdbc( mysql-connector-java-5.1.44-bin.jar )驱动到 hive的lib下

驱动可以从这里找: https://developer.aliyun.com/mvn/search

初始化hive

:::info bin/schematool -dbType mysql -initSchema ::: 初始化成功出现:

Initialization script completed schemaTool completed

启动 metastore服务

不启动会报错:HiveException java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient :::info bin/hive —service metastore & :::

进入hive

:::info

bin/hive

:::

注意如果insert时卡住不能向下执行,查看日志(hive的日志文件在:/tmp/{user.name}下)一直在连接8032端口,则是MR没有启动

在命令行输入:

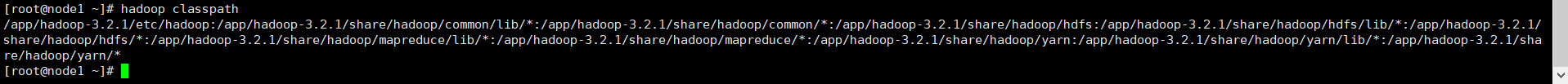

:::info

hadoop classpath

:::

将内容,添加至yarn-site.xml中

<property><name>yarn.application.classpath</name><value>/app/hadoop-3.2.1/etc/hadoop:/app/hadoop-3.2.1/share/hadoop/common/lib/*:/app/hadoop-3.2.1/share/hadoop/common/*:/app/hadoop-3.2.1/share/hadoop/hdfs:/app/hadoop-3.2.1/share/hadoop/hdfs/lib/*:/app/hadoop-3.2.1/share/hadoop/hdfs/*:/app/hadoop-3.2.1/share/hadoop/mapreduce/lib/*:/app/hadoop-3.2.1/share/hadoop/mapreduce/*:/app/hadoop-3.2.1/share/hadoop/yarn:/app/hadoop-3.2.1/share/hadoop/yarn/lib/*:/app/hadoop-3.2.1/share/hadoop/yarn/*</value></property>

yarn-site.xml文件位置: ${HADOOP_HOME}/etc/hadoop/yarn-site.xml

如果yarn没有气筒,则启动 yarn:

:::info

$HADOOP_HOME/sbin/start-yarn.sh

:::

可以通过jps命令查看yarn是否启动,其中NodeManager、ResourceManager为yarn进程。

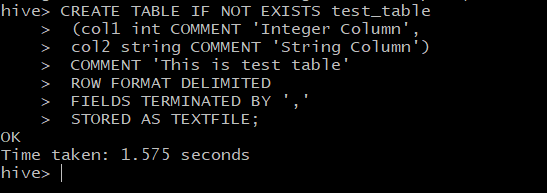

hive创建表

创建表

:::info

hive> CREATE TABLE IF NOT EXISTS test_table

(col1 int COMMENT ‘Integer Column’,

col2 string COMMENT ‘String Column’)

COMMENT ‘This is test table’

ROW FORMAT DELIMITED

FIELDS TERMINATED BY ‘,’

STORED AS TEXTFILE;

:::

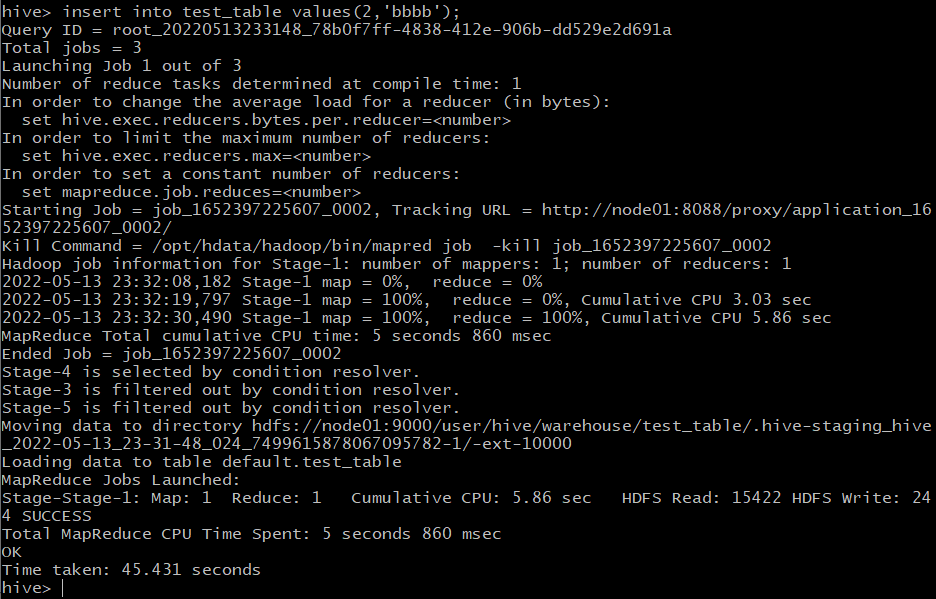

写入

:::info

hive> insert into test_table values(2,’bbbb’);

:::

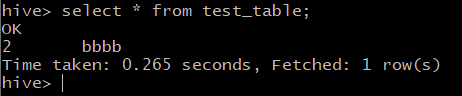

查询

:::info

hive> select * from test_table;

:::

hive调优

Tez 参数优化

优化参参数(在同样条件下,使用了tez从300s+降到200s+)

set hive.execution.engine=tez;set mapred.job.name=recommend_user_profile_$idate;set mapred.reduce.tasks=-1;set hive.exec.reducers.max=160;set hive.auto.convert.join=true;set hive.exec.parallel=true;set hive.exec.parallel.thread.number=16;set hive.optimize.skewjoin=true;set hive.exec.reducers.bytes.per.reducer=100000000;set mapred.max.split.size=200000000;set mapred.min.split.size.per.node=100000000;set mapred.min.split.size.per.rack=100000000;set hive.hadoop.supports.splittable.combineinputformat=true;