8 Configuration Management

8.1 Secret

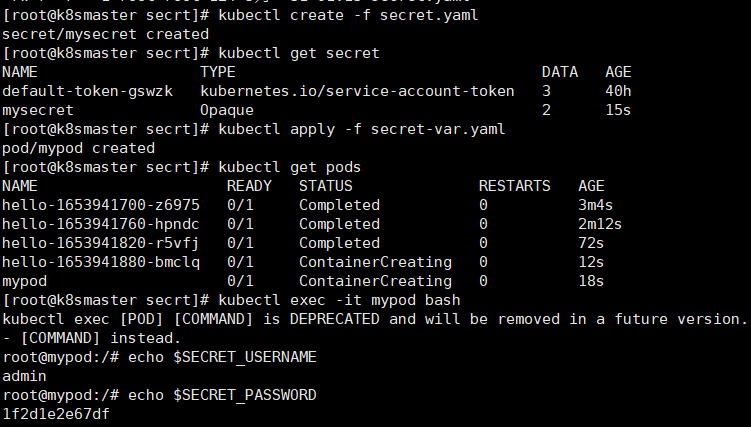

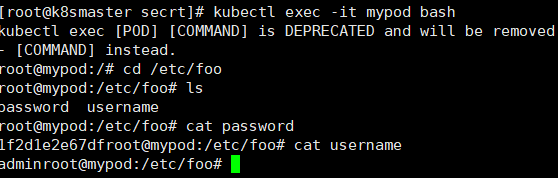

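A Secret solves the problem of configuring sensitive data such as passwords, tokens, and keys without exposing that data in the container image or in the Pod spec. A Secret can be consumed as a volume or as environment variables.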

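Three commonly used Secret types are:

- Opaque: a Secret whose values are base64-encoded, used to store passwords, keys, and similar data. Base64 is an encoding, not encryption: anyone can recover the original data with `base64 --decode`, so the protection is weak.

- Service Account: used to access the Kubernetes API. It is created automatically by Kubernetes and mounted automatically into the Pod at /var/run/secrets/kubernetes.io/serviceaccount.

- kubernetes.io/dockerconfigjson: stores the authentication information for a private Docker registry.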
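To make the third type concrete, here is a minimal Python sketch of the payload a `kubernetes.io/dockerconfigjson` Secret carries: a JSON document with an `auths` map keyed by registry, where `auth` is base64 of `username:password`, and the whole JSON is base64-encoded again under the `.dockerconfigjson` key. The registry host and credentials are hypothetical placeholders. In practice `kubectl create secret docker-registry` builds this for you.

```python
import base64
import json


def b64(text: str) -> str:
    """Base64-encode a UTF-8 string, as Secret `data` values require."""
    return base64.b64encode(text.encode("utf-8")).decode("ascii")


def dockerconfigjson_data(server: str, username: str, password: str) -> dict:
    """Build the `data` section of a kubernetes.io/dockerconfigjson Secret."""
    config = {
        "auths": {
            server: {
                "username": username,
                "password": password,
                # "auth" is base64("username:password")
                "auth": b64(f"{username}:{password}"),
            }
        }
    }
    # The whole JSON document is base64-encoded under the .dockerconfigjson key
    return {".dockerconfigjson": b64(json.dumps(config))}


if __name__ == "__main__":
    # Hypothetical registry and credentials, for illustration only
    data = dockerconfigjson_data("registry.example.com", "admin", "secret")
    print(data)
    decoded = json.loads(base64.b64decode(data[".dockerconfigjson"]))
    print(decoded["auths"]["registry.example.com"]["auth"])
```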

Create an Opaque Secret:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: mysecret
type: Opaque
data:
  username: YWRtaW4=
  password: MWYyZDFlMmU2N2Rm
```
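The `data` values in this Secret are only base64-encoded, not encrypted. A quick check with Python's standard `base64` module shows how the values were produced and how anyone who can read the Secret can recover them:

```python
import base64

# data section of the Opaque Secret "mysecret" above
secret_data = {
    "username": "YWRtaW4=",
    "password": "MWYyZDFlMmU2N2Rm",
}

# Decoding needs no key: base64 is an encoding, not encryption
decoded = {k: base64.b64decode(v).decode("utf-8") for k, v in secret_data.items()}
print(decoded)  # {'username': 'admin', 'password': '1f2d1e2e67df'}

# Encoding a new plain-text value for the data section works the same way
print(base64.b64encode(b"admin").decode("ascii"))  # YWRtaW4=
```

On the command line, `echo -n admin | base64` gives the same result. The `-n` matters, because otherwise a trailing newline is encoded into the value.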

Consume the Secret as environment variables:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: mypod
spec:
  containers:
  - name: nginx
    image: nginx
    env:
    - name: SECRET_USERNAME
      valueFrom:
        secretKeyRef:
          name: mysecret
          key: username
    - name: SECRET_PASSWORD
      valueFrom:
        secretKeyRef:
          name: mysecret
          key: password
```

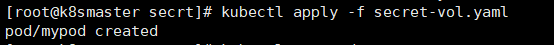

Mount the Secret as a volume:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: mypod
spec:
  containers:
  - name: nginx
    image: nginx
    volumeMounts:
    - name: foo
      mountPath: "/etc/foo"
      readOnly: true
  volumes:
  - name: foo
    secret:
      secretName: mysecret
```
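With the volume approach, every key in the Secret becomes a file under `mountPath` whose content is the decoded value, so this Pod sees `/etc/foo/username` and `/etc/foo/password`. The Python sketch below imitates that layout in a temporary directory standing in for `/etc/foo`. `project_secret` is a hypothetical helper, not a Kubernetes API, and the real kubelet also manages symlinks and file permissions that are skipped here.

```python
import base64
import tempfile
from pathlib import Path


def project_secret(data: dict, mount_path: Path) -> None:
    """Hypothetical helper: write each Secret key as a file holding its decoded value."""
    mount_path.mkdir(parents=True, exist_ok=True)
    for key, encoded in data.items():
        (mount_path / key).write_bytes(base64.b64decode(encoded))


def read_secret_file(mount_path: Path, key: str) -> str:
    """What the application inside the container does: read a plain file."""
    return (mount_path / key).read_text(encoding="utf-8")


if __name__ == "__main__":
    secret_data = {"username": "YWRtaW4=", "password": "MWYyZDFlMmU2N2Rm"}
    with tempfile.TemporaryDirectory() as tmp:
        foo = Path(tmp) / "etc" / "foo"  # stands in for mountPath "/etc/foo"
        project_secret(secret_data, foo)
        print(sorted(p.name for p in foo.iterdir()))  # ['password', 'username']
        print(read_secret_file(foo, "username"))      # admin
```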

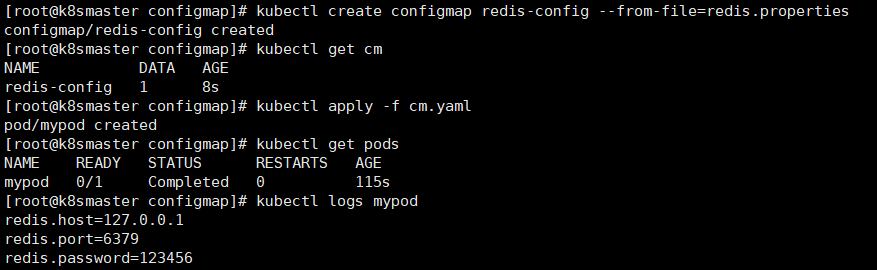

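8.2 ConfigMap

A ConfigMap stores non-encrypted data in etcd and lets Pods consume it as environment variables or mount it into containers as a volume. Ordinary configuration files are usually handled this way.

8.2.1 Mounting a ConfigMap into a Pod as a volume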

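Create a file named redis.properties:

```properties
redis.host=127.0.0.1
redis.port=6379
redis.password=123456
```

Then create a ConfigMap named redis-config from it, for example with `kubectl create configmap redis-config --from-file=redis.properties`. The file name becomes the key, which is why the Pod below finds the file at /etc/config/redis.properties.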

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: mypod
spec:
  containers:
  - name: busybox
    image: busybox
    command: [ "/bin/sh","-c","cat /etc/config/redis.properties" ]
    volumeMounts:
    - name: config-volume
      mountPath: /etc/config
  volumes:
  - name: config-volume
    configMap:
      name: redis-config
  restartPolicy: Never
```
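Inside the container the ConfigMap key `redis.properties` is just the file `/etc/config/redis.properties`. A minimal Python sketch of how an application might parse it; it handles only simple `key=value` lines and `#` comments, an assumption that fits this example but not the full Java `.properties` syntax. `load_redis_config` and its default path are illustrative.

```python
from pathlib import Path


def parse_properties(text: str) -> dict:
    """Parse simple key=value lines; blank lines and '#' comments are skipped."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:  # ignore lines without '='
            props[key.strip()] = value.strip()
    return props


def load_redis_config(path: str = "/etc/config/redis.properties") -> dict:
    """Read the file mounted from the redis-config ConfigMap."""
    return parse_properties(Path(path).read_text(encoding="utf-8"))


if __name__ == "__main__":
    sample = "redis.host=127.0.0.1\nredis.port=6379\nredis.password=123456\n"
    cfg = parse_properties(sample)
    print(cfg["redis.host"], int(cfg["redis.port"]))  # 127.0.0.1 6379
```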

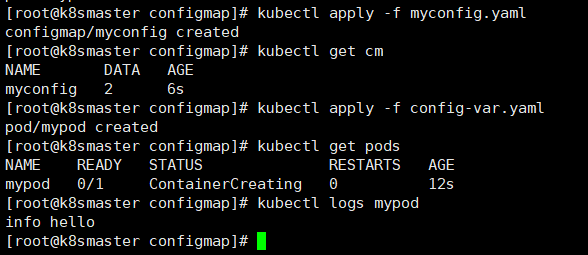

8.2.2 Exposing a ConfigMap to a Pod as environment variables

Create the ConfigMap:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: myconfig
  namespace: default
data:
  special.level: info
  special.type: hello
```

Reference its keys from a Pod:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: mypod
spec:
  containers:
  - name: busybox
    image: busybox
    command: [ "/bin/sh", "-c", "echo $(LEVEL) $(TYPE)" ]
    env:
    - name: LEVEL
      valueFrom:
        configMapKeyRef:
          name: myconfig
          key: special.level
    - name: TYPE
      valueFrom:
        configMapKeyRef:
          name: myconfig
          key: special.type
  restartPolicy: Never
```
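The `$(LEVEL)` and `$(TYPE)` references in `command` are expanded by Kubernetes from the container's environment before the shell runs, so this Pod prints `info hello`. Below is a minimal Python sketch of that substitution rule as the container `command`/`args` reference describes it: `$(NAME)` is replaced when NAME is defined, left unchanged when it is not, and `$$` collapses to a literal `$`. The function name `expand_k8s_refs` is hypothetical.

```python
import re

# Either "$$" (escape) or "$(NAME)"
_VAR_REF = re.compile(r"\$\$|\$\(([^)]*)\)")


def expand_k8s_refs(text: str, env: dict) -> str:
    """Expand $(NAME) references the way Kubernetes does for command/args (simplified)."""
    def replace(match: re.Match) -> str:
        if match.group(0) == "$$":
            return "$"  # $$ collapses to a literal $
        name = match.group(1)
        # Undefined variables are left exactly as written
        return env.get(name, match.group(0))

    return _VAR_REF.sub(replace, text)


if __name__ == "__main__":
    env = {"LEVEL": "info", "TYPE": "hello"}  # values injected from ConfigMap myconfig
    print(expand_k8s_refs("echo $(LEVEL) $(TYPE)", env))      # echo info hello
    print(expand_k8s_refs("echo $$(LEVEL) $(MISSING)", env))  # echo $(LEVEL) $(MISSING)
```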

9 Cluster Security: RBAC

For reference, see: https://www.cnblogs.com/jhno1/p/15607638.html

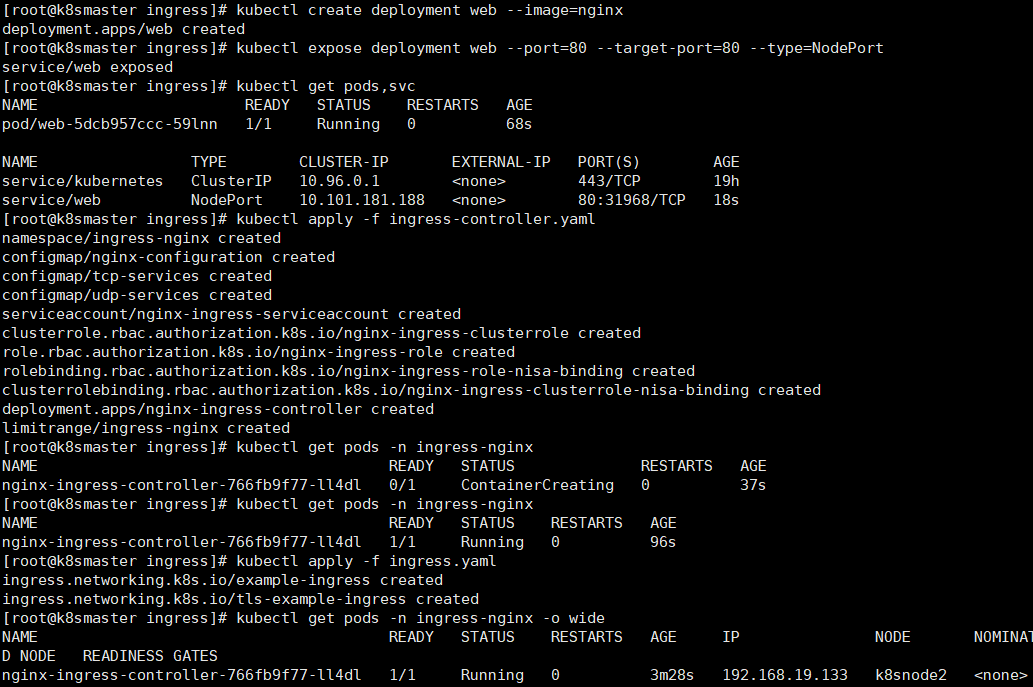

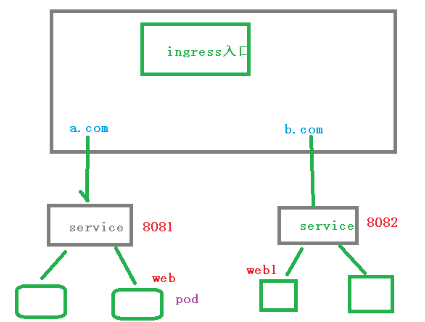

10 Ingress

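K8s exposes Services externally through NodePort or LoadBalancer, but both have significant drawbacks. With NodePort, every application occupies its own port on the nodes. With LoadBalancer, every Service needs its own load balancer, which is wasteful and cumbersome, and it requires support from outside Kubernetes (for example, a cloud provider).

With Ingress, a single NodePort or a single LoadBalancer is enough: the Ingress controller behind it routes HTTP/HTTPS requests to the different Services by host name and path.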
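Conceptually, the Ingress controller looks at each request's host name and path and forwards it to the matching Service. The Python sketch below models that with longest-prefix matching on whole path segments. It is a simplified illustration, not the ingress-nginx implementation, and the `/api` rule pointing to an `api` Service is a hypothetical addition next to the `example.ctnrs.com` to `web:80` rule used in this section.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Rule:
    host: str
    path: str      # path prefix, e.g. "/" or "/api"
    service: str
    port: int


def prefix_matches(prefix: str, path: str) -> bool:
    """Match whole segments: '/api' matches '/api' and '/api/x', but not '/apix'."""
    if prefix == "/":
        return True
    prefix = prefix.rstrip("/")
    return path == prefix or path.startswith(prefix + "/")


def route(rules: List[Rule], host: str, path: str) -> Optional[Tuple[str, int]]:
    """Return (service, port) of the longest matching prefix for this host, or None."""
    candidates = [r for r in rules if r.host == host and prefix_matches(r.path, path)]
    if not candidates:
        return None  # a real controller would fall back to its default backend
    best = max(candidates, key=lambda r: len(r.path))
    return best.service, best.port


RULES = [
    Rule("example.ctnrs.com", "/", "web", 80),
    Rule("example.ctnrs.com", "/api", "api", 8080),  # hypothetical second Service
]

if __name__ == "__main__":
    print(route(RULES, "example.ctnrs.com", "/index.html"))  # ('web', 80)
    print(route(RULES, "example.ctnrs.com", "/api/users"))   # ('api', 8080)
    print(route(RULES, "other.example.com", "/"))            # None
```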

Deploy the ingress-nginx controller. These manifests use `rbac.authorization.k8s.io/v1beta1` here and `networking.k8s.io/v1beta1` for the Ingress resources; both beta APIs were removed in Kubernetes 1.22, so this setup only works on older clusters.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-configuration
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: tcp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: udp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses/status
    verbs:
      - update
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: Role
metadata:
  name: nginx-ingress-role
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - pods
      - secrets
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - configmaps
    resourceNames:
      # Defaults to "<election-id>-<ingress-class>"
      # Here: "<ingress-controller-leader>-<nginx>"
      # This has to be adapted if you change either parameter
      # when launching the nginx-ingress-controller.
      - "ingress-controller-leader-nginx"
    verbs:
      - get
      - update
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ""
    resources:
      - endpoints
    verbs:
      - get
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: RoleBinding
metadata:
  name: nginx-ingress-role-nisa-binding
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: nginx-ingress-role
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
    spec:
      hostNetwork: true
      # wait up to five minutes for the drain of connections
      terminationGracePeriodSeconds: 300
      serviceAccountName: nginx-ingress-serviceaccount
      nodeSelector:
        kubernetes.io/os: linux
      containers:
        - name: nginx-ingress-controller
          image: lizhenliang/nginx-ingress-controller:0.30.0
          args:
            - /nginx-ingress-controller
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
            - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
            - --publish-service=$(POD_NAMESPACE)/ingress-nginx
            - --annotations-prefix=nginx.ingress.kubernetes.io
          securityContext:
            allowPrivilegeEscalation: true
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            # www-data -> 101
            runAsUser: 101
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown
---
apiVersion: v1
kind: LimitRange
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  limits:
  - min:
      memory: 90Mi
      cpu: 100m
    type: Container
```

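Then define the Ingress rules. The first routes HTTP requests for example.ctnrs.com to port 80 of the `web` Service. The second does the same for sslexample.ctnrs.com over HTTPS, using the certificate and key stored in the TLS Secret `secret-tls`, which must already exist (for example, created with `kubectl create secret tls secret-tls --cert=<certificate file> --key=<key file>`).

```yaml
---
# http
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: example-ingress
spec:
  rules:
  - host: example.ctnrs.com
    http:
      paths:
      - path: /
        backend:
          serviceName: web
          servicePort: 80
---
# https
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: tls-example-ingress
spec:
  tls:
  - hosts:
    - sslexample.ctnrs.com
    secretName: secret-tls
  rules:
  - host: sslexample.ctnrs.com
    http:
      paths:
      - path: /
        backend:
          serviceName: web
          servicePort: 80
```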

11 Helm

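11.1 What is Helm

An application on K8S is made up of specific resource definitions such as Deployments and Services, kept either in separate files or together in one configuration file, and deployed with `kubectl apply -f`. In a microservice architecture with dozens of services, however, this makes both version control and resource management difficult.

Helm is a package manager for Kubernetes, comparable to Linux package managers such as yum or apt: it makes it easy to deploy previously packaged YAML files to Kubernetes.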

11.2 What Helm is for

- Manage these YAML files as a single unit

- Reuse YAML efficiently

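11.3 Key Helm concepts

- Helm: a command-line client tool, mainly used to create, package, publish, and manage Kubernetes application charts.

- Chart: an application description, i.e. a collection of files describing the related k8s resources.

- Release: a deployed instance of a Chart. Each time Helm runs a chart it produces a corresponding release, which creates real running resource objects in k8s.

- Repository: a repository for publishing and storing Charts.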

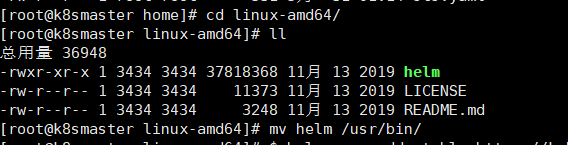

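11.4 Installing Helm (v3)

11.4.1 Download and extract Helm

```shell
# Releases: https://github.com/helm/helm/releases
wget https://get.helm.sh/helm-v3.0.0-linux-amd64.tar.gz
tar -zxvf helm-v3.0.0-linux-amd64.tar.gz
# Put the helm binary on the PATH
mv linux-amd64/helm /usr/local/bin/helm
```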

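11.4.2 Configure Helm chart repositories

```shell
# Add a chart repository: the Alibaba Cloud mirror OR the Microsoft (Azure China) mirror.
# Both use the name "stable", so add only one of them.
helm repo add stable https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts
# helm repo add stable http://mirror.azure.cn/kubernetes/charts/
# Update the repository index
helm repo update
# List configured repositories
helm repo list
# Remove a repository
helm repo remove <name>
```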

11.5 Deploying an application quickly with Helm

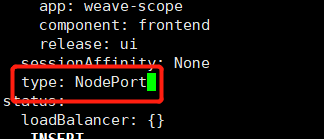

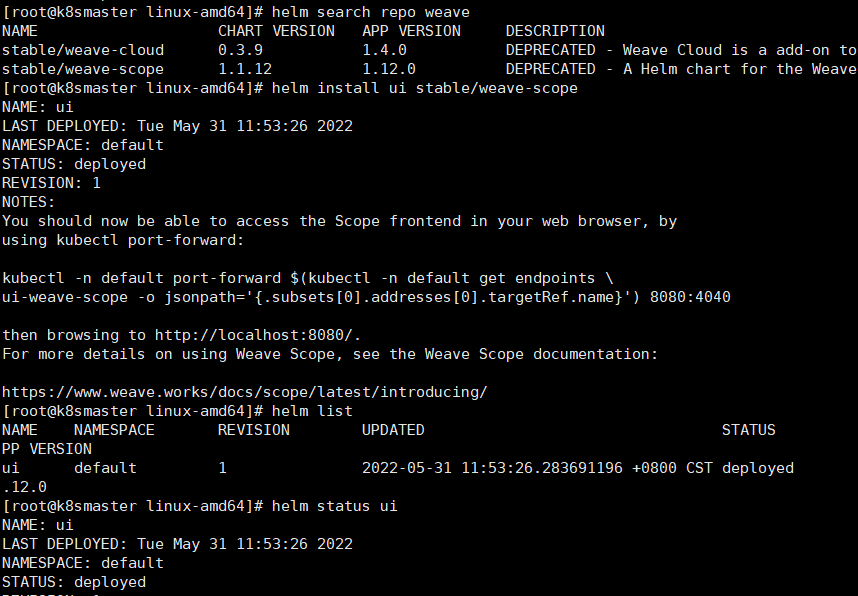

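```shell
# Quickly deploy weave-scope
# Search the repositories
helm search repo weave
# Install stable/weave-scope as a release named "ui"
helm install ui stable/weave-scope
# List installed releases
helm list
# Change the Service type to NodePort to expose a port externally
kubectl edit svc ui-weave-scope
```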

11.6 Custom Charts

11.6.1 Running a custom chart demo

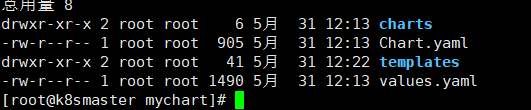

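- Chart.yaml: the properties (metadata) of the current chart

- templates: the directory where the YAML template files go

- values.yaml: global variables that the YAML files in templates can use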

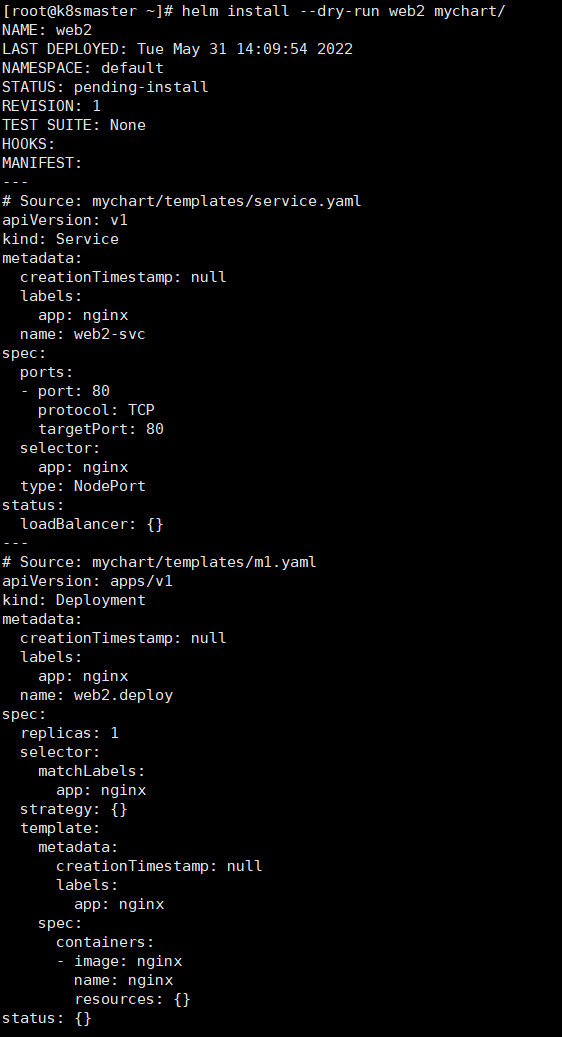

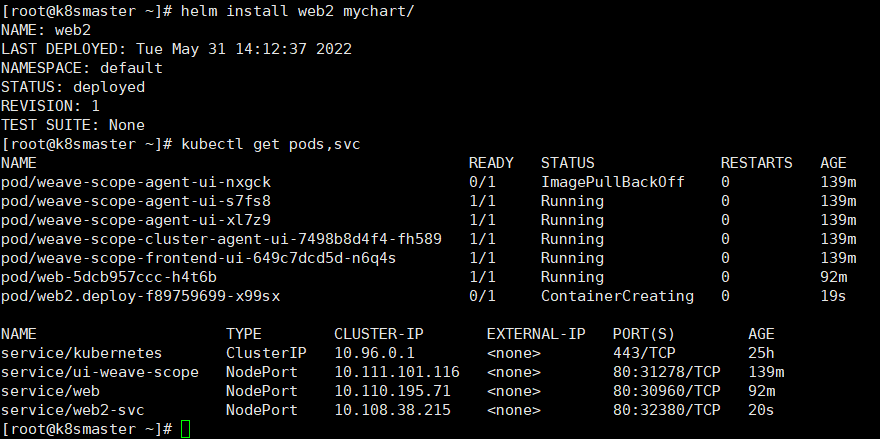

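```shell
# Create a custom chart
helm create mychart
cd mychart/templates
# Generate a Deployment YAML in templates
# (kubectl 1.18+: use --dry-run=client instead of --dry-run)
kubectl create deployment web --image=nginx -o yaml --dry-run > m1.yaml
# Apply it so the Deployment exists; kubectl expose needs it to generate service.yaml
kubectl apply -f m1.yaml
# Generate service.yaml in templates
kubectl expose deployment web --port=80 --target-port=80 --type=NodePort --dry-run -o yaml > service.yaml
# Delete the Deployment (and its Pod) created above
kubectl delete deployment web
cd ../..
# Install mychart with Helm
helm install web mychart/
# Upgrade the release after changing the chart
helm upgrade web mychart/
```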

11.6.2 Using chart templates

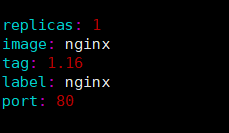

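Edit values.yaml to define custom variables; the templates below expect the keys `image`, `label`, and `port`.

Use the variables from values.yaml in the YAML files under templates.

Reference a variable with the expression `{{ .Values.<variable name> }}`.

`{{ .Release.Name }}` is the name of the current release.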

mychart/templates/m1.yaml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: {{ .Values.label }}
  name: {{ .Release.Name }}-deploy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: {{ .Values.label }}
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: {{ .Values.label }}
    spec:
      containers:
      - image: {{ .Values.image }}
        name: nginx
        resources: {}
status: {}
```

mychart/templates/service.yaml:

```yaml
apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    app: {{ .Values.label }}
  # Service names must be valid DNS labels (no "."), hence "-svc" rather than ".svc"
  name: {{ .Release.Name }}-svc
spec:
  ports:
  - port: {{ .Values.port }}
    protocol: TCP
    targetPort: 80
  selector:
    app: {{ .Values.label }}
  type: NodePort
status:
  loadBalancer: {}
```
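At install time Helm renders these templates with Go's text/template engine, filling `.Values` from values.yaml and `.Release` from the release being installed. The Python sketch below imitates only the plain `{{ .Values.x }}` and `{{ .Release.Name }}` substitutions used here. It is not Helm's engine (no pipelines, functions, or nested keys), it deliberately raises on a missing key to make typos obvious (Helm's own handling differs), and the values shown are hypothetical contents of values.yaml.

```python
import re

# Matches {{ .Values.key }} or {{ .Release.Key }}, with optional spaces
_PLACEHOLDER = re.compile(r"\{\{\s*\.(Values|Release)\.(\w+)\s*\}\}")


def render(template: str, values: dict, release: dict) -> str:
    """Toy renderer: replace simple .Values/.Release references; fail on unknown keys."""
    scopes = {"Values": values, "Release": release}

    def replace(match: re.Match) -> str:
        scope, key = match.group(1), match.group(2)
        if key not in scopes[scope]:
            raise KeyError(f"missing .{scope}.{key}")
        return str(scopes[scope][key])

    return _PLACEHOLDER.sub(replace, template)


if __name__ == "__main__":
    values = {"image": "nginx", "label": "nginx", "port": 80}  # hypothetical values.yaml
    release = {"Name": "web"}  # from: helm install web mychart/
    snippet = "name: {{ .Release.Name }}-svc\nports:\n- port: {{ .Values.port }}\n"
    print(render(snippet, values, release))
```

The real `helm template mychart/` command prints Helm's actual rendering without installing anything, which is the reliable way to check a chart.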

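12 Persistent Storage

Files a Pod writes to an `emptyDir` volume are lost once the Pod is deleted or recreated (for example, rescheduled onto another node), so data that must survive needs persistent storage.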

12.1 nfs 网络存储

部署nfs网络步骤

- 在一台新的服务器上,node节点上 安装nfs服务端

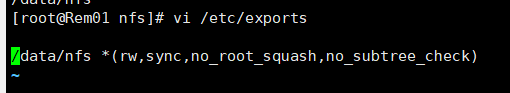

- 设置挂载目录 挂载目录需要在服务器上存在

- 在nfs服务器上启动nfs服务

- 在k8s集群部署应用使用nfs持久网络存储

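On the servers:

```shell
# On the new server (NFS server) and on the k8s nodes: install NFS
yum install -y nfs-utils
# On the NFS server: create the export directory (it must exist) and export it
mkdir -p /data/nfs
echo "/data/nfs *(rw,sync,no_root_squash,no_subtree_check)" >> /etc/exports
# Start NFS
systemctl start nfs
```

Deploy nginx with the NFS export mounted as its web root (192.168.19.134 is the NFS server in this example):

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-dep1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        volumeMounts:
        - name: wwwroot
          mountPath: /usr/share/nginx/html
        ports:
        - containerPort: 80
      volumes:
      - name: wwwroot
        nfs:
          server: 192.168.19.134
          path: /data/nfs
```

Expose it through a NodePort Service:

```shell
kubectl expose deployment nginx-dep1 --port=80 --target-port=80 --type=NodePort
```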
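The export line written to /etc/exports has three parts: the exported directory, the clients allowed to mount it (`*` means any host), and options in parentheses. A small Python sketch that splits such a line and explains the options used here. The descriptions follow the exports(5) man page, and `parse_export` is a hypothetical helper that handles only this single-client form.

```python
from typing import Dict, List, Tuple

OPTION_HELP: Dict[str, str] = {
    "rw": "clients may read and write",
    "sync": "reply only after changes are committed to stable storage",
    "no_root_squash": "root on the client keeps root privileges on the export",
    "no_subtree_check": "disable subtree checking",
}


def parse_export(line: str) -> Tuple[str, str, List[str]]:
    """Split '/path client(opt1,opt2)' into (path, client, options)."""
    path, spec = line.split(maxsplit=1)
    client, _, rest = spec.partition("(")
    options = [o.strip() for o in rest.rstrip(")").split(",") if o.strip()]
    return path, client, options


if __name__ == "__main__":
    path, client, options = parse_export("/data/nfs *(rw,sync,no_root_squash,no_subtree_check)")
    print(path, client)  # /data/nfs *
    for opt in options:
        print(f"{opt}: {OPTION_HELP.get(opt, '(see exports(5))')}")
```

`no_root_squash` is convenient for a demo but lets root on any client act as root on the export, so it is usually avoided outside test setups.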

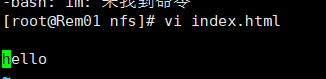

Create a file on the NFS server in /data/nfs:

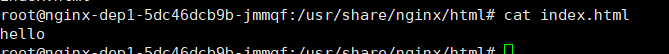

Enter the Pod and check: the file shows up there too, because the Pod mounts the same NFS directory.

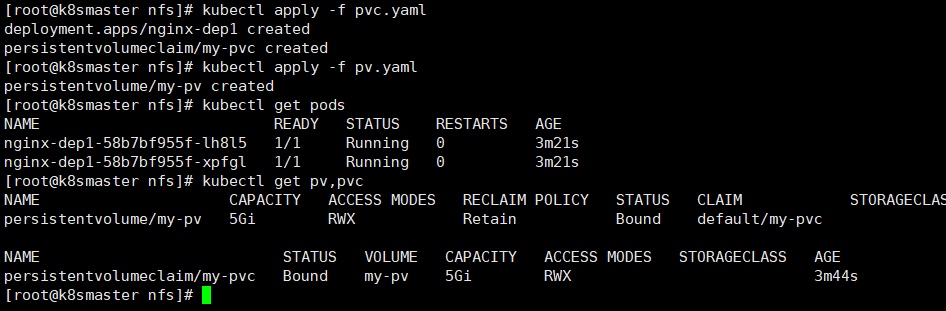

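12.2 PV and PVC

PersistentVolume (PV): persistent storage that abstracts a storage resource and exposes it for use (the producer side).

PersistentVolumeClaim (PVC): used to claim storage without caring about the implementation details (the consumer side).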

Define a PV backed by the NFS export:

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: my-pv
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteMany
  nfs:
    path: /data/nfs
    server: 192.168.19.134
```

Use it from a Deployment through a PVC:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-dep1
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        volumeMounts:
        - name: wwwroot
          mountPath: /usr/share/nginx/html
        ports:
        - containerPort: 80
      volumes:
      - name: wwwroot
        persistentVolumeClaim:
          claimName: my-pvc
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-pvc
spec:
  # "" requests a PV without a storage class, so the claim binds to my-pv
  # instead of being provisioned by a default StorageClass
  storageClassName: ""
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 5Gi
```
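The PVC does not name `my-pv`; Kubernetes binds it to an available PV whose capacity, access modes, and storage class satisfy the claim. The Python sketch below illustrates that matching in simplified form: it picks the smallest sufficient unbound PV, handles only `Mi`/`Gi` quantities, and ignores selectors, volume modes, and dynamic provisioning. The `PV`/`PVC` classes and `find_pv` are hypothetical helpers, not the controller's code.

```python
from dataclasses import dataclass
from typing import List, Optional, Set

_UNITS = {"Mi": 2**20, "Gi": 2**30}


def to_bytes(quantity: str) -> int:
    """Convert a binary-suffix quantity such as '5Gi' to bytes (Mi/Gi only)."""
    for suffix, factor in _UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    raise ValueError(f"unsupported quantity: {quantity}")


@dataclass
class PV:
    name: str
    capacity: str
    access_modes: Set[str]
    storage_class: str = ""
    bound: bool = False


@dataclass
class PVC:
    name: str
    request: str
    access_modes: Set[str]
    storage_class: str = ""


def find_pv(claim: PVC, volumes: List[PV]) -> Optional[PV]:
    """Pick the smallest unbound PV that satisfies the claim, or None (claim stays Pending)."""
    need = to_bytes(claim.request)
    fits = [
        pv for pv in volumes
        if not pv.bound
        and pv.storage_class == claim.storage_class
        and claim.access_modes <= pv.access_modes  # PV must offer every requested mode
        and to_bytes(pv.capacity) >= need
    ]
    return min(fits, key=lambda pv: to_bytes(pv.capacity), default=None)


if __name__ == "__main__":
    volumes = [PV("my-pv", "5Gi", {"ReadWriteMany"})]
    claim = PVC("my-pvc", "5Gi", {"ReadWriteMany"})
    match = find_pv(claim, volumes)
    print(match.name if match else "Pending")  # my-pv
```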