1.基本介绍

1.1 Sharding Sphere

是一款分布式数据库解决方案 ,详情 官网传送门。

1.2 什么是分库分表

数据库数据量不可控的,随着时间和业务发展,造成表里面数据越来越多,如果再去对数据库表curd操作时候,造成性能问题。所以考虑到了对数据库进行拆分。有两个方案

- 硬件层面上 (存在瓶颈,且消耗资源)

-

1.2.1 分库分表的方式

垂直切分:垂直分库和垂直分表

- 水平切分:水平分库和水平分表

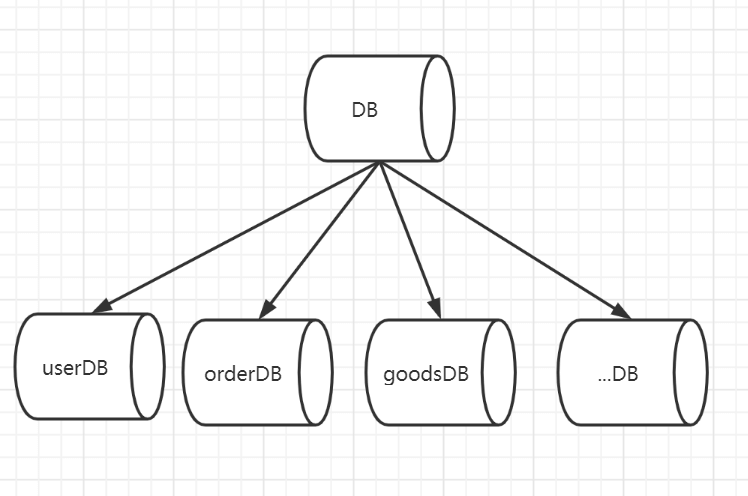

垂直分库: 根据业务场景进行分库

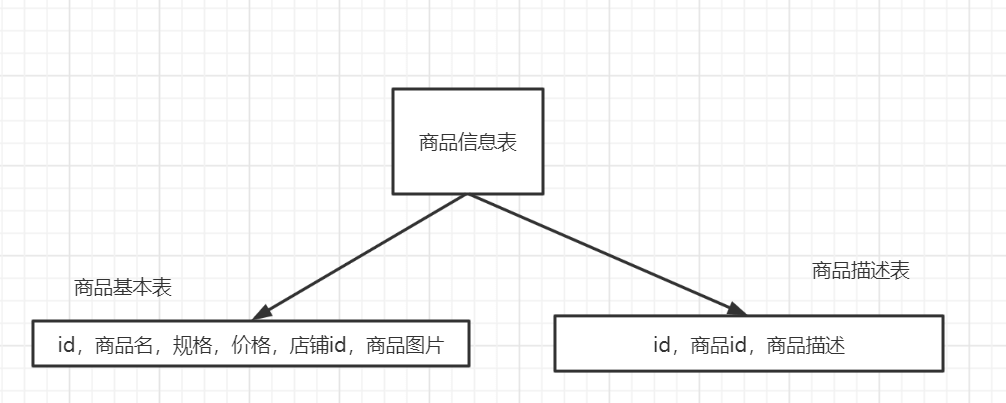

垂直分表:一个表的字段过多或者把该表的常用字段放在一个表中,不常用的字段,大字段放在另一个表中,减少磁盘IO,提高哦访问效率

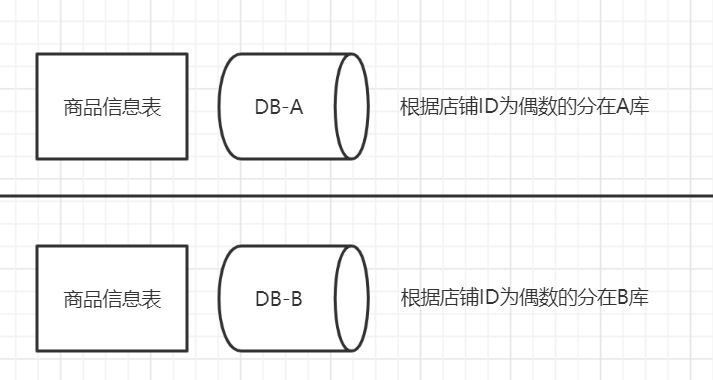

水平分库:同一个表的数据按一定规则拆分到不同的数据库中,每个库可以放在不同的服务器上。解决了单库大数据,高并发的性能瓶颈,提高稳定性和高可用性。

水平分表:同一个数据库内,把同一张表的数据按照一定规则拆到多个表,解决性能问题和避免IO锁表的几率。

1.2.2 分库分表带来的问题

事务一致性问题

跨节点关联查询

如果有跨数据库或者跨节点分库,不在一个库或不在一个服务器内 不能进行关联查询,只能两次查询然后拼接。

跨节点分页、排序函数

跨节点order By limit会比较复杂 , Max、Min、Sum、Count 等函数也会出现相应的问题,都要多次查询然后汇总。

主键避重

在分库分表环境下数据库自增主键将不能使用,为了避免主键重复需要使用分布式ID

公共表

对于一些变动小,频率低的字典,公共表,将这些表在每个数据库都存一份,避免jion查询复杂。所以维护需要同时维护。

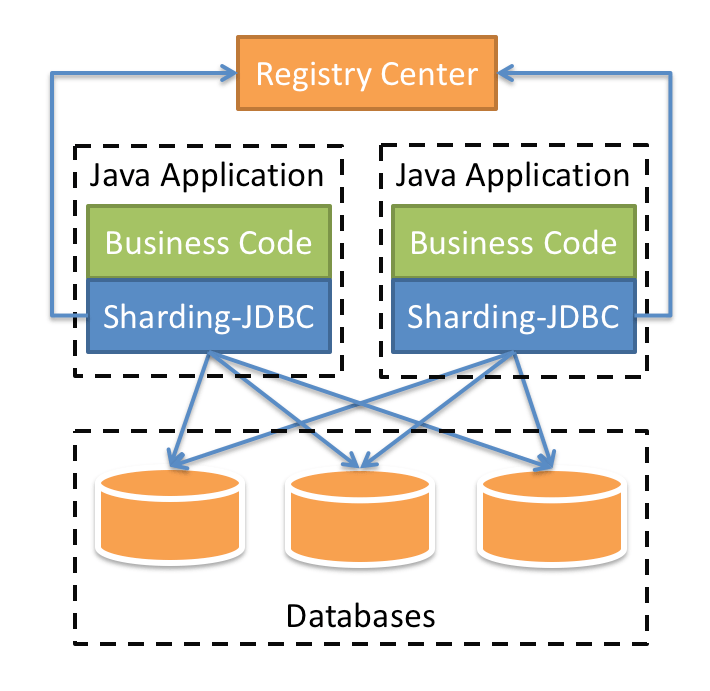

2 Sharding JDBC

定位为轻量级Java框架,在Java的JDBC层提供的额外服务。

2.1 环境搭建

springboot 2.4.2 + mybatisPlus + Lombok + sharding jdbc4.1.1

<?xml version="1.0" encoding="UTF-8"?><project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd"><modelVersion>4.0.0</modelVersion><parent><groupId>org.springframework.boot</groupId><artifactId>spring-boot-starter-parent</artifactId><version>2.4.2</version><relativePath/></parent><groupId>com.rem</groupId><artifactId>shardingjdbc</artifactId><version>0.0.1-SNAPSHOT</version><name>shardingjdbc</name><description>Demo project for Spring Boot</description><properties><java.version>1.8</java.version></properties><dependencies><dependency><groupId>org.springframework.boot</groupId><artifactId>spring-boot-starter-web</artifactId></dependency><dependency><groupId>org.springframework.boot</groupId><artifactId>spring-boot-starter-test</artifactId></dependency><!--注解处理器 自动解析yml文件到config配置类中--><dependency><groupId>org.springframework.boot</groupId><artifactId>spring-boot-configuration-processor</artifactId><optional>true</optional></dependency><!--连接数据库--><dependency><groupId>com.baomidou</groupId><artifactId>mybatis-plus-boot-starter</artifactId><version>3.4.2</version></dependency><dependency><groupId>mysql</groupId><artifactId>mysql-connector-java</artifactId></dependency><dependency><groupId>com.alibaba</groupId><artifactId>druid</artifactId><version>1.2.6</version></dependency><!--脚手架--><dependency><groupId>org.projectlombok</groupId><artifactId>lombok</artifactId><optional>true</optional></dependency><dependency><groupId>org.apache.commons</groupId><artifactId>commons-lang3</artifactId><version>3.8.1</version></dependency><dependency><groupId>cn.hutool</groupId><artifactId>hutool-all</artifactId><version>5.4.0</version></dependency><!--sharding-jdbc--><dependency><groupId>org.apache.shardingsphere</groupId><artifactId>sharding-jdbc-spring-boot-starter</artifactId><version>4.1.1</version></dependency></dependencies><build><plugins><plugin><groupId>org.springframework.boot</groupId><artifactId>spring-boot-maven-plugin</artifactId><configuration><excludes><exclude><groupId>org.projectlombok</groupId><artifactId>lombok</artifactId></exclude></excludes></configuration></plugin></plugins></build></project>

2.2 单库双表水平分片

数据库准备

-- auto-generated definitioncreate table t_order_1(id bigint auto_incrementprimary key,orderCode varchar(100) null,buyId bigint null,amount decimal(11, 2) null,productId bigint null,productName varchar(100) null)comment '订单表';-- auto-generated definitioncreate table t_order_2(id bigint auto_incrementprimary key,orderCode varchar(100) null,buyId bigint null,amount decimal(11, 2) null,productId bigint null,productName varchar(100) null)comment '订单表';

#shardingjdbc分片策略 单库双表水平分片spring:shardingsphere:datasource:#配置数据源,给数据源起名称names: m1m1:#配置数据源具体内容,包含连接池,驱动,地址,用户名和密码type: com.alibaba.druid.pool.DruidDataSourceurl: jdbc:mysql://192.168.19.128:3306/so?serverTimezone=UTC&useSSL=false&useUnicode=true&characterEncoding=utf8driverClassName: com.mysql.cj.jdbc.Driverusername: rootpassword: 123456sharding:tables:#指定order表分布情况,配置表在哪个数据库里面,表名称都是什么 m1.t_order_1 , m1.t_order_2t_order:actualDataNodes: m1.t_order_$->{1..2}# 指定t_order表里面主键id 生成策略 SNOWFLAKEkeyGenerator:column: idtype: SNOWFLAKE# 限定表=====指定分片策略 约定id值偶数添加到t_order_1表,如果id是奇数添加到t_order_2表tableStrategy:inline:shardingColumn: idalgorithmExpression: t_order_${id % 2 +1}# 全局=====指定分片策略 约定id值偶数添加到t_order_1表,如果id是奇数添加到t_order_2表# defaultTableStrategy:# inline:# shardingColumn: id# algorithmExpression: t_order_$->{id % 2 +1}# 打开sql输出日志props:sql:show: true# 一个实体类对应两张表,覆盖main:allowBeanDefinitionOverriding: truemybatisPlus:configuration:# log-impl: org.apache.ibatis.logging.stdout.StdOutImplmapUnderscoreToCamelCase: falsecallSettersOnNulls: true

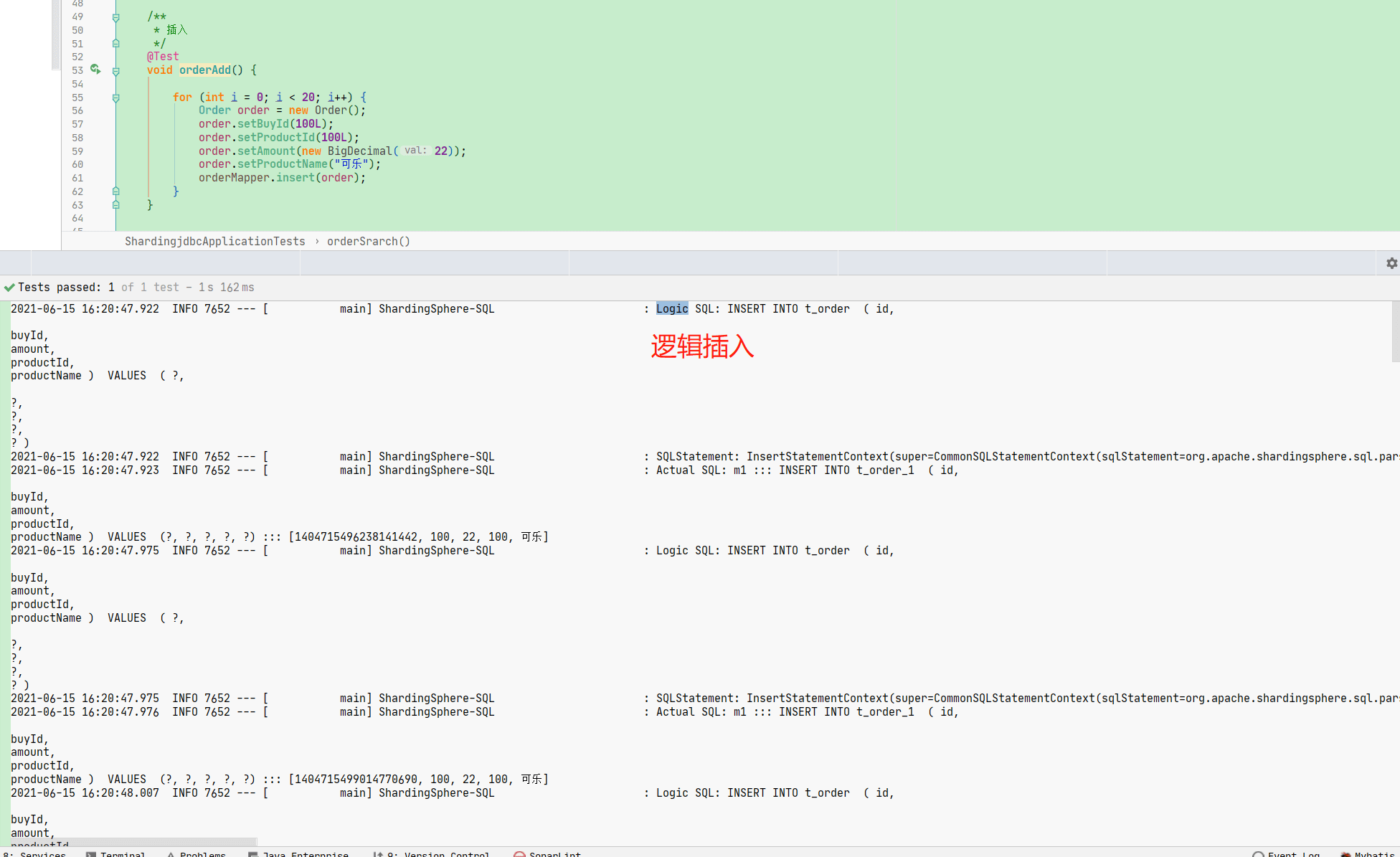

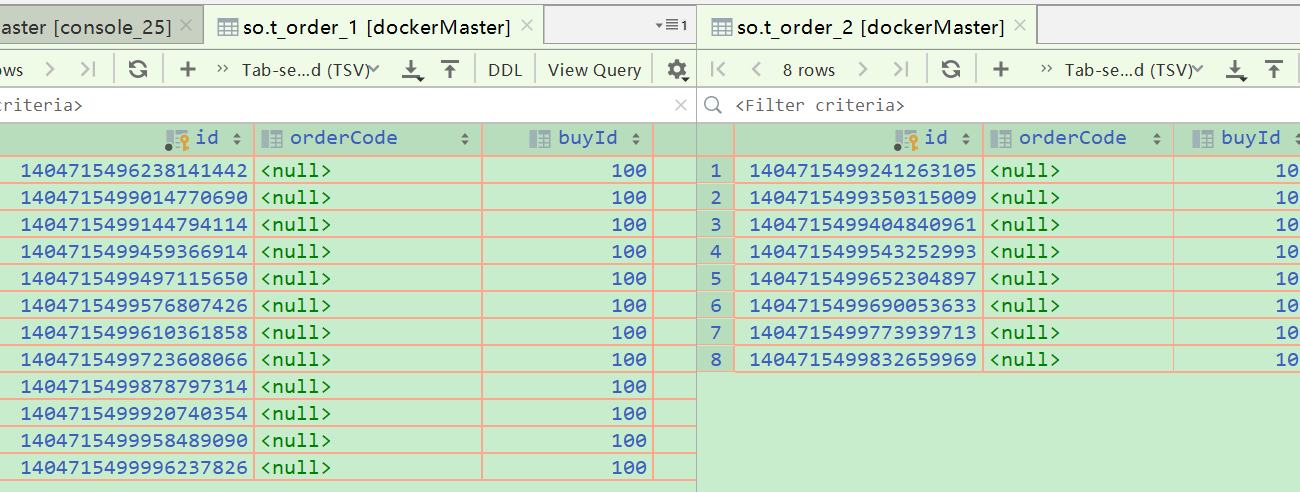

演示结果 偶数插入1表,奇数插入到2表

数据库结果

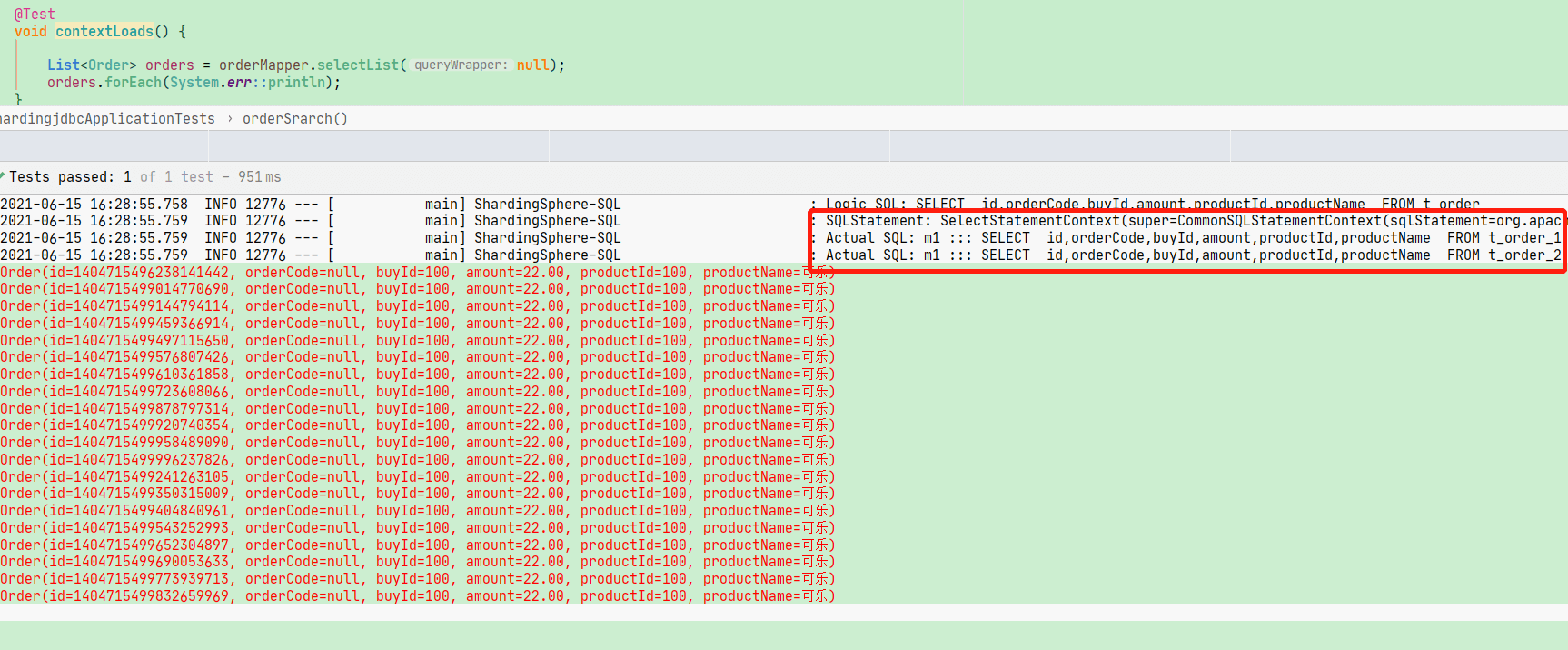

查询

2.3 双库双表水平分片

数据库准备

-- auto-generated definitioncreate table t_course_1(cid bigint auto_incrementprimary key,cname varchar(100) null,userId bigint null,cstatus varchar(100) null);-- auto-generated definitioncreate table t_course_2(cid bigint auto_incrementprimary key,cname varchar(100) null,userId bigint null,cstatus varchar(100) null);

#shardingjdbc分片策略 双库双表水平分片 实际上一张表根据两个字段被分成4个表,在实际中很少用spring:shardingsphere:datasource:#配置数据源,给数据源起名称names: m1,m2m1:#配置数据源具体内容,包含连接池,驱动,地址,用户名和密码type: com.alibaba.druid.pool.DruidDataSourceurl: jdbc:mysql://192.168.19.128:3306/edu_db_1?serverTimezone=UTC&useSSL=false&useUnicode=true&characterEncoding=utf8driverClassName: com.mysql.cj.jdbc.Driverusername: rootpassword: 123456m2:#配置数据源具体内容,包含连接池,驱动,地址,用户名和密码type: com.alibaba.druid.pool.DruidDataSourceurl: jdbc:mysql://192.168.19.128:3306/edu_db_2?serverTimezone=UTC&useSSL=false&useUnicode=true&characterEncoding=utf8driverClassName: com.mysql.cj.jdbc.Driverusername: rootpassword: 123456sharding:tables:#指定course表分布情况,配置表在哪个数据库里面,表名称都是什么 m1.t_course_1 , m1.t_course_2t_course:actualDataNodes: m$->{1..2}.t_course_$->{1..2}# 指定t_course表里面主键id 生成策略 SNOWFLAKEkeyGenerator:column: cidtype: SNOWFLAKE# 限定表=====指定分片策略 约定id值偶数添加到t_course_1表,如果id是奇数添加到t_course_2表tableStrategy:inline:shardingColumn: cidalgorithmExpression: t_course_${cid % 2 +1}# 限定库=====指定分片策略 约定userId是偶数添加m1,是奇数添加m2databaseStrategy:inline:shardingColumn: userIdalgorithmExpression: m$->{userId % 2 + 1}# 全局库# default:# databaseStrategy:# inline: user_id# shardingColumn: m$->{userId % 2 + 1}# 打开sql输出日志props:sql:show: true# 一个实体类对应两张表,覆盖main:allowBeanDefinitionOverriding: truemybatis-plus:configuration:map-underscore-to-camel-case: falsecall-setters-on-nulls: true

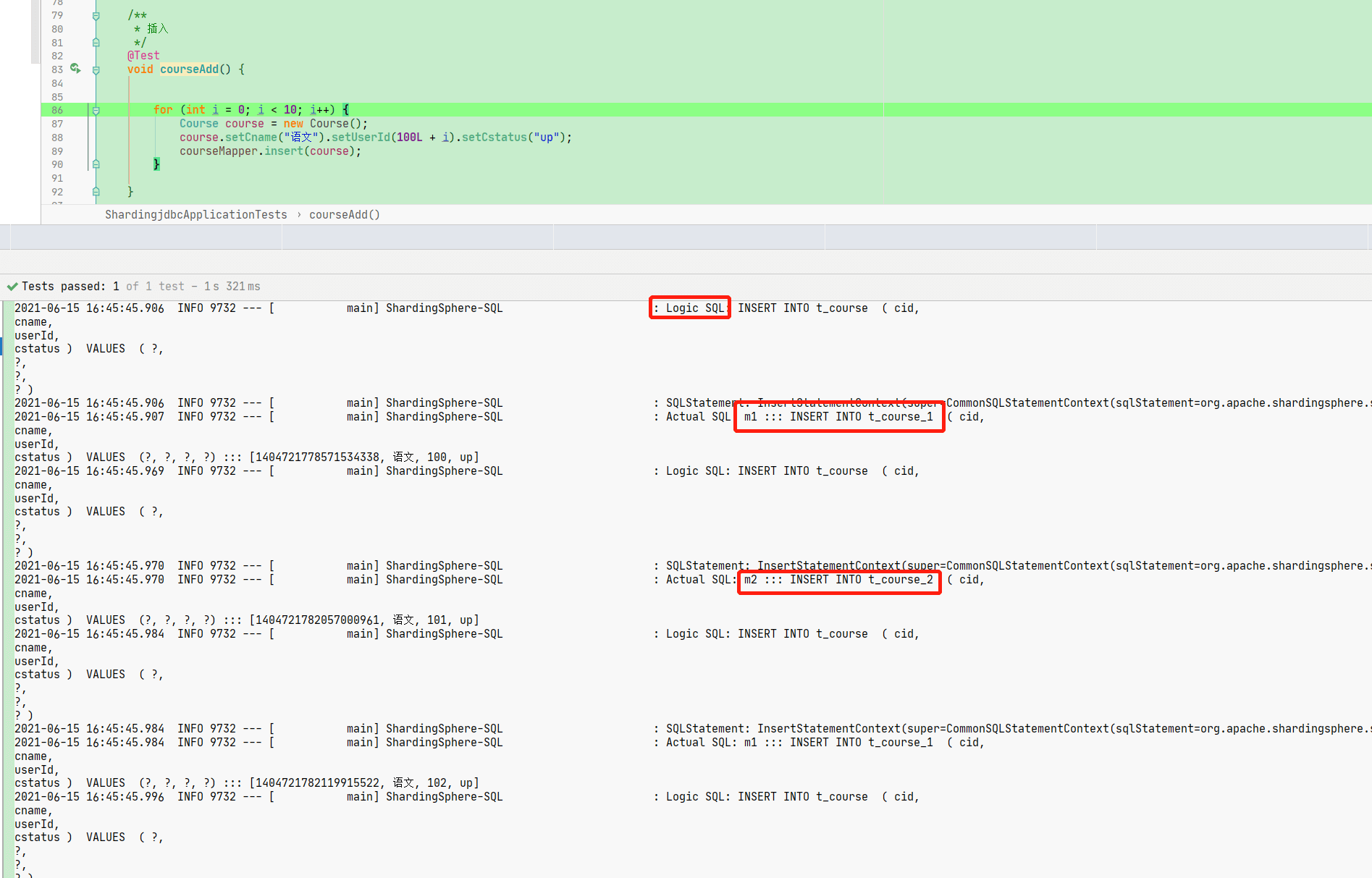

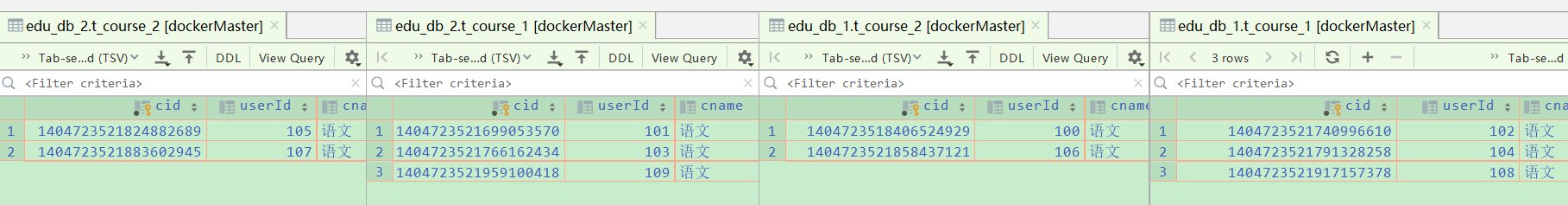

插入数据 根据数据插入不同的库和表

数据库结果

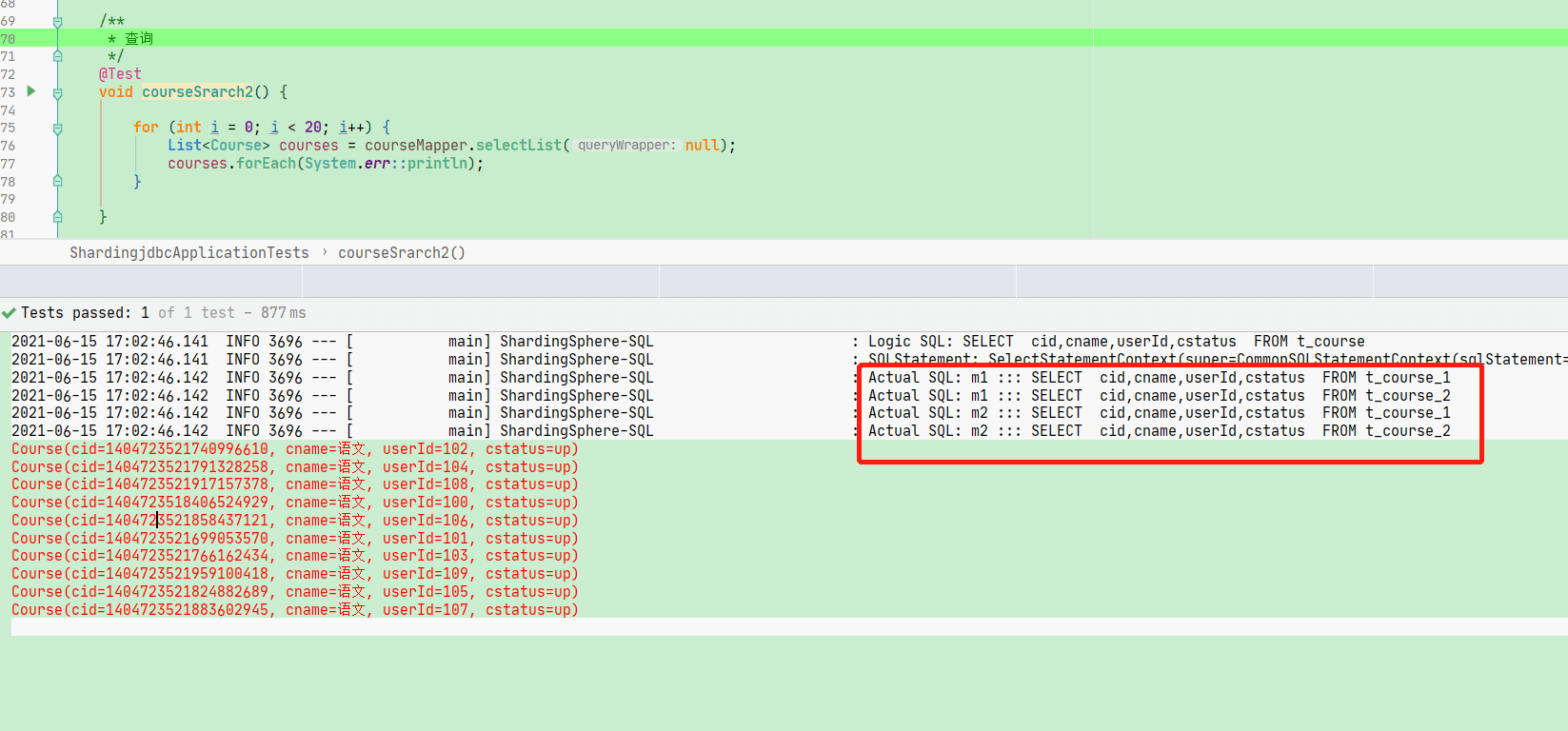

查询结果:

2.4 垂直分库

数据库准备

-- auto-generated definitioncreate table t_user(userId bigint auto_incrementprimary key,username varchar(100) null,ustatus varchar(100) null);

yml配置

#shardingjdbc分片策略 垂直分库 专库专表使用spring:shardingsphere:datasource:#配置数据源,给数据源起名称names: m1,m2,v0m1:#配置数据源具体内容,包含连接池,驱动,地址,用户名和密码type: com.alibaba.druid.pool.DruidDataSourceurl: jdbc:mysql://192.168.19.128:3306/edu_db_1?serverTimezone=UTC&useSSL=false&useUnicode=true&characterEncoding=utf8driverClassName: com.mysql.cj.jdbc.Driverusername: rootpassword: 123456m2:#配置数据源具体内容,包含连接池,驱动,地址,用户名和密码type: com.alibaba.druid.pool.DruidDataSourceurl: jdbc:mysql://192.168.19.128:3306/edu_db_2?serverTimezone=UTC&useSSL=false&useUnicode=true&characterEncoding=utf8driverClassName: com.mysql.cj.jdbc.Driverusername: rootpassword: 123456v0:#配置数据源具体内容,包含连接池,驱动,地址,用户名和密码type: com.alibaba.druid.pool.DruidDataSourceurl: jdbc:mysql://192.168.19.128:3306/user_db?serverTimezone=UTC&useSSL=false&useUnicode=true&characterEncoding=utf8driverClassName: com.mysql.cj.jdbc.Driverusername: rootpassword: 123456sharding:tables:#指定course表分布情况,配置表在哪个数据库里面,表名称都是什么 m1.t_course_1 , m1.t_course_2t_course:actualDataNodes: m$->{1..2}.t_course_$->{1..2}# 指定t_course表里面主键id 生成策略 SNOWFLAKEkeyGenerator:column: cidtype: SNOWFLAKE# 限定表=====指定分片策略 约定id值奇数添加到t_course_1表,如果id是偶数添加到t_course_2表tableStrategy:inline:shardingColumn: cidalgorithmExpression: t_course_${cid % 2 +1}# 限定库=====指定分片策略 约定userId是偶数添加m1,是奇数添加m2databaseStrategy:inline:shardingColumn: userIdalgorithmExpression: m$->{userId % 2 + 1}# 垂直分库 专表专用t_user:actualDataNodes: v$->{0}.t_userkeyGenerator:type: SNOWFLAKEcolumn: userId# 打开sql输出日志props:sql:show: true# 一个实体类对应两张表,覆盖main:allowBeanDefinitionOverriding: truemybatis-plus:configuration:map-underscore-to-camel-case: falsecall-setters-on-nulls: true

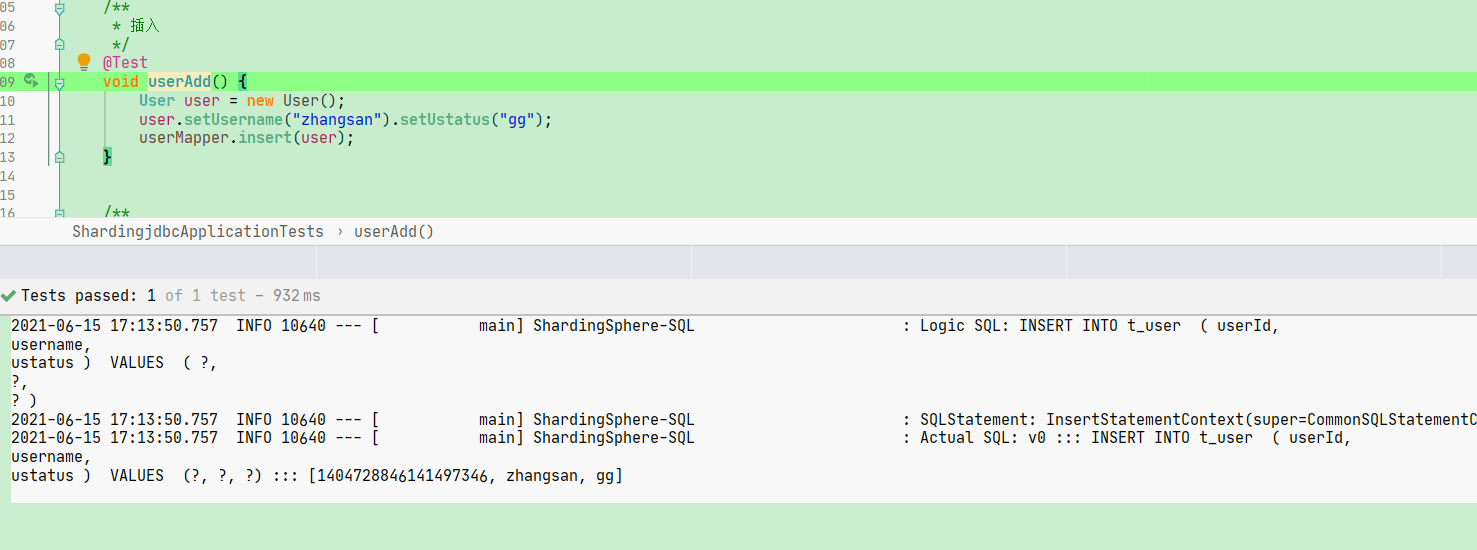

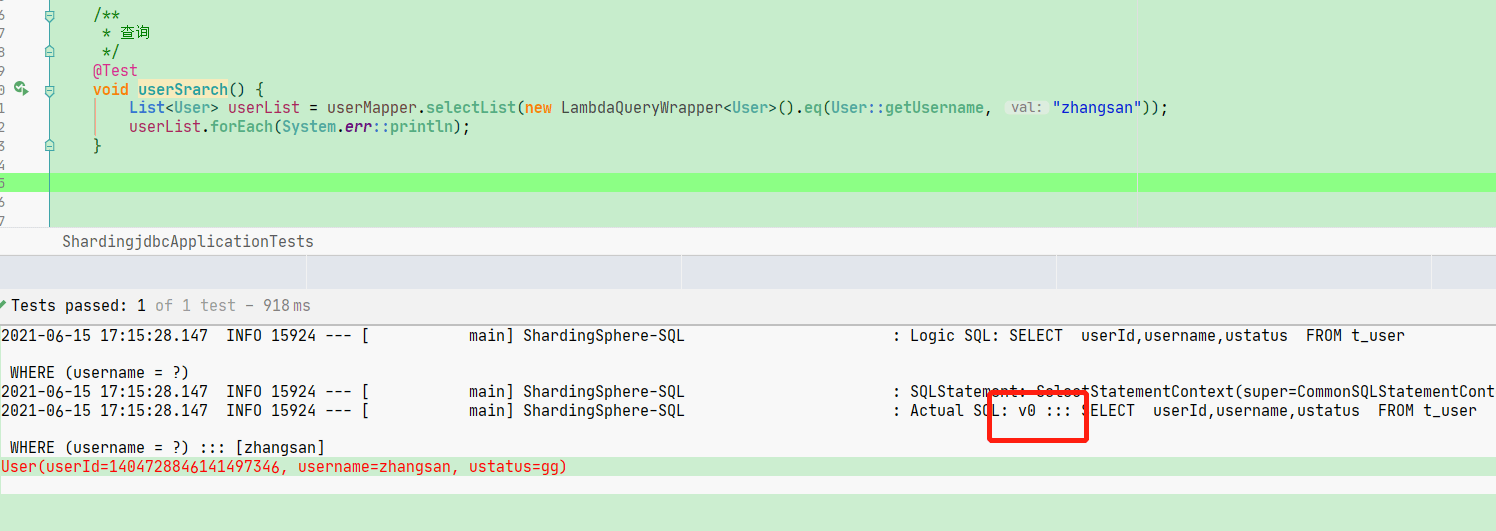

演示结果:

查询:

2.5 广播表配置

在每个数据库都创建这张表

-- auto-generated definitioncreate table t_dict(dictId bigint auto_incrementprimary key,status varchar(100) null,value varchar(100) null);

#shardingjdbc分片策略 广播配置,配置公共表spring:shardingsphere:datasource:#配置数据源,给数据源起名称names: m1,m2,v0m1:#配置数据源具体内容,包含连接池,驱动,地址,用户名和密码type: com.alibaba.druid.pool.DruidDataSourceurl: jdbc:mysql://192.168.19.128:3306/edu_db_1?serverTimezone=UTC&useSSL=false&useUnicode=true&characterEncoding=utf8driverClassName: com.mysql.cj.jdbc.Driverusername: rootpassword: 123456m2:#配置数据源具体内容,包含连接池,驱动,地址,用户名和密码type: com.alibaba.druid.pool.DruidDataSourceurl: jdbc:mysql://192.168.19.128:3306/edu_db_2?serverTimezone=UTC&useSSL=false&useUnicode=true&characterEncoding=utf8driverClassName: com.mysql.cj.jdbc.Driverusername: rootpassword: 123456v0:#配置数据源具体内容,包含连接池,驱动,地址,用户名和密码type: com.alibaba.druid.pool.DruidDataSourceurl: jdbc:mysql://192.168.19.128:3306/user_db?serverTimezone=UTC&useSSL=false&useUnicode=true&characterEncoding=utf8driverClassName: com.mysql.cj.jdbc.Driverusername: rootpassword: 123456sharding:# 广播配置 全局公共配置表broadcastTables: t_dicttables:# 广播表t_dict:keyGenerator:column: dictIdtype: SNOWFLAKE#指定course表分布情况,配置表在哪个数据库里面,表名称都是什么 m1.t_course_1 , m1.t_course_2t_course:actualDataNodes: m$->{1..2}.t_course_$->{1..2}# 指定t_course表里面主键id 生成策略 SNOWFLAKEkeyGenerator:column: cidtype: SNOWFLAKE# 限定表=====指定分片策略 约定id值奇数添加到t_course_1表,如果id是偶数添加到t_course_2表tableStrategy:inline:shardingColumn: cidalgorithmExpression: t_course_${cid % 2 +1}# 限定库=====指定分片策略 约定userId是偶数添加m1,是奇数添加m2databaseStrategy:inline:shardingColumn: userIdalgorithmExpression: m$->{userId % 2 + 1}# 垂直分库t_user:actualDataNodes: v0.t_userkeyGenerator:column: userIdtype: SNOWFLAKE# 无需分库分表的数据源配置default-data-source-name: v0# 打开sql输出日志props:sql:show: true# 一个实体类对应两张表,覆盖main:allowBeanDefinitionOverriding: truemybatis-plus:configuration:map-underscore-to-camel-case: falsecall-setters-on-nulls: true

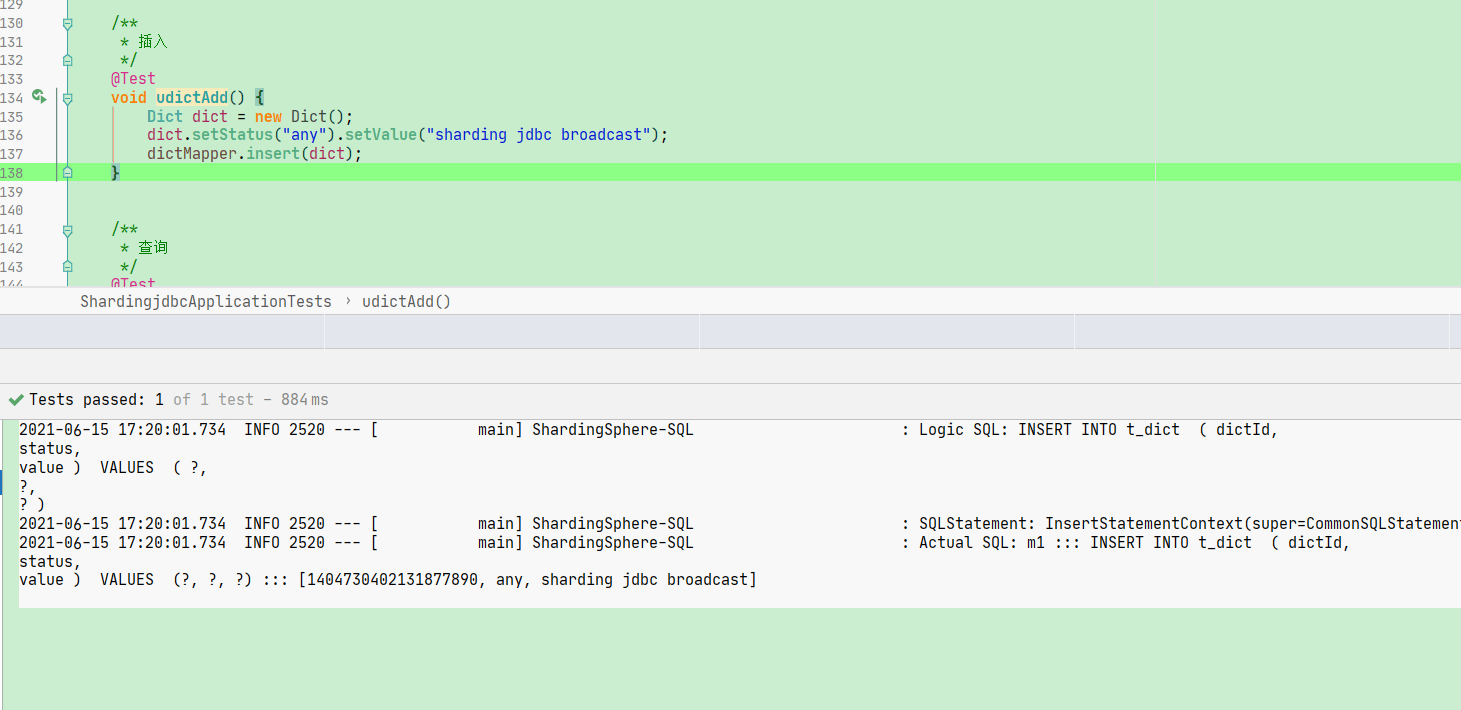

插入一条数据,每个库里的表都会创建一条数据

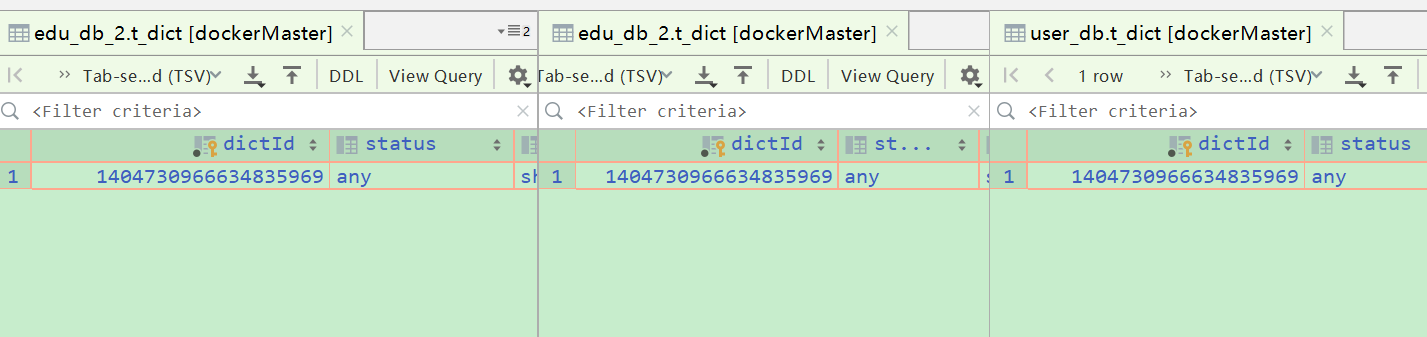

数据库显示

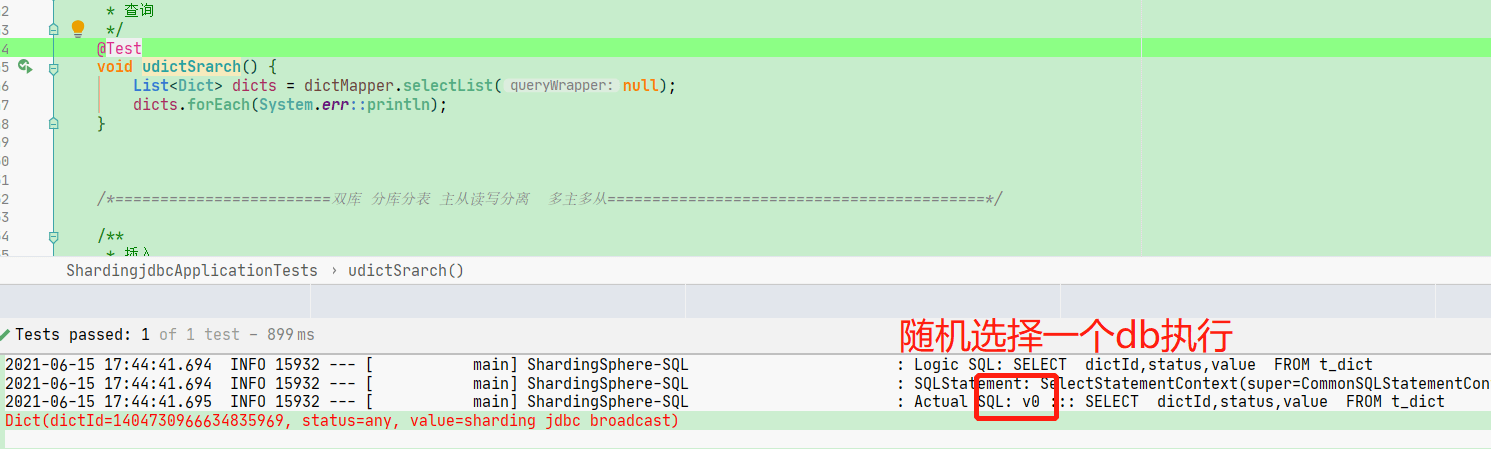

查询数据

2.6 水平分库 + 读写分离

准备主从数据库配置 docker配置简单 传送门

配置一主两从服务器

######################################水平分库分表+读写分离配置##################################################################################################一主多从配置############################################################spring:shardingsphere:datasource:#配置数据源 两主两从names: m1,s1,s2m1:type: com.alibaba.druid.pool.DruidDataSourceurl: jdbc:mysql://192.168.19.128:3306/so?serverTimezone=UTC&useSSL=false&useUnicode=true&characterEncoding=utf8driverClassName: com.mysql.cj.jdbc.Driverusername: rootpassword: 123456s1:type: com.alibaba.druid.pool.DruidDataSourceurl: jdbc:mysql://192.168.19.128:3307/so?serverTimezone=UTC&useSSL=false&useUnicode=true&characterEncoding=utf8driverClassName: com.mysql.cj.jdbc.Driverusername: rootpassword: 123456s2:type: com.alibaba.druid.pool.DruidDataSourceurl: jdbc:mysql://192.168.19.128:3308/so?serverTimezone=UTC&useSSL=false&useUnicode=true&characterEncoding=utf8driverClassName: com.mysql.cj.jdbc.Driverusername: rootpassword: 123456sharding:tables:#表配置 结合数据源t_order:actualDataNodes: ds0.t_order_$->{1..2}# 分表策略tableStrategy:inline:shardingColumn: idalgorithmExpression: t_order_${id % 2 +1}keyGenerator:column: idtype: SNOWFLAKE# 主从配置masterSlaveRules:ds0:masterDataSourceName: m1slaveDataSourceNames: s1,s2bindingTables: t_courseprops:sql:show: true# 一个实体类对应两张表,覆盖main:allowBeanDefinitionOverriding: truemybatisPlus:configuration:# log-impl: org.apache.ibatis.logging.stdout.StdOutImplmapUnderscoreToCamelCase: falsecallSettersOnNulls: true######################################多主多从配置#############################################################spring:# shardingsphere:# datasource:# #配置数据源 两主两从# names: m1,m2,s1,s2# m1:# type: com.alibaba.druid.pool.DruidDataSource# url: jdbc:mysql://192.168.19.128:3306/edu_db_1?serverTimezone=UTC&useSSL=false&useUnicode=true&characterEncoding=utf8# driverClassName: com.mysql.cj.jdbc.Driver# username: root# password: 123456# m2:# type: com.alibaba.druid.pool.DruidDataSource# url: jdbc:mysql://192.168.19.128:3306/edu_db_2?serverTimezone=UTC&useSSL=false&useUnicode=true&characterEncoding=utf8# driverClassName: com.mysql.cj.jdbc.Driver# username: root# password: 123456# s1:# type: com.alibaba.druid.pool.DruidDataSource# url: jdbc:mysql://192.168.19.128:3307/edu_db_1?serverTimezone=UTC&useSSL=false&useUnicode=true&characterEncoding=utf8# driverClassName: com.mysql.cj.jdbc.Driver# username: root# password: 123456# s2:# type: com.alibaba.druid.pool.DruidDataSource# url: jdbc:mysql://192.168.19.128:3307/edu_db_2?serverTimezone=UTC&useSSL=false&useUnicode=true&characterEncoding=utf8# driverClassName: com.mysql.cj.jdbc.Driver# username: root# password: 123456# sharding:# tables:# #表配置 结合数据源# t_course:# actualDataNodes: ds$->{0..1}.t_course_$->{1..2}# # 分表策略# tableStrategy:# inline:# shardingColumn: cid# algorithmExpression: t_course_${cid % 2 +1}# keyGenerator:# column: cid# type: SNOWFLAKE# # 分库策略# databaseStrategy:# inline:# shardingColumn: userId# algorithmExpression: ds$->{userId % 2}# # 主从配置# masterSlaveRules:# ds0:# masterDataSourceName: m1# slaveDataSourceNames: s1,s2# ds1:# masterDataSourceName: m2# slaveDataSourceNames: s1,s2# # bindingTables: t_course# props:# sql:# show: true# # 一个实体类对应两张表,覆盖# main:# allowBeanDefinitionOverriding: true###mybatisPlus:# configuration:# # log-impl: org.apache.ibatis.logging.stdout.StdOutImpl# mapUnderscoreToCamelCase: false# callSettersOnNulls: true

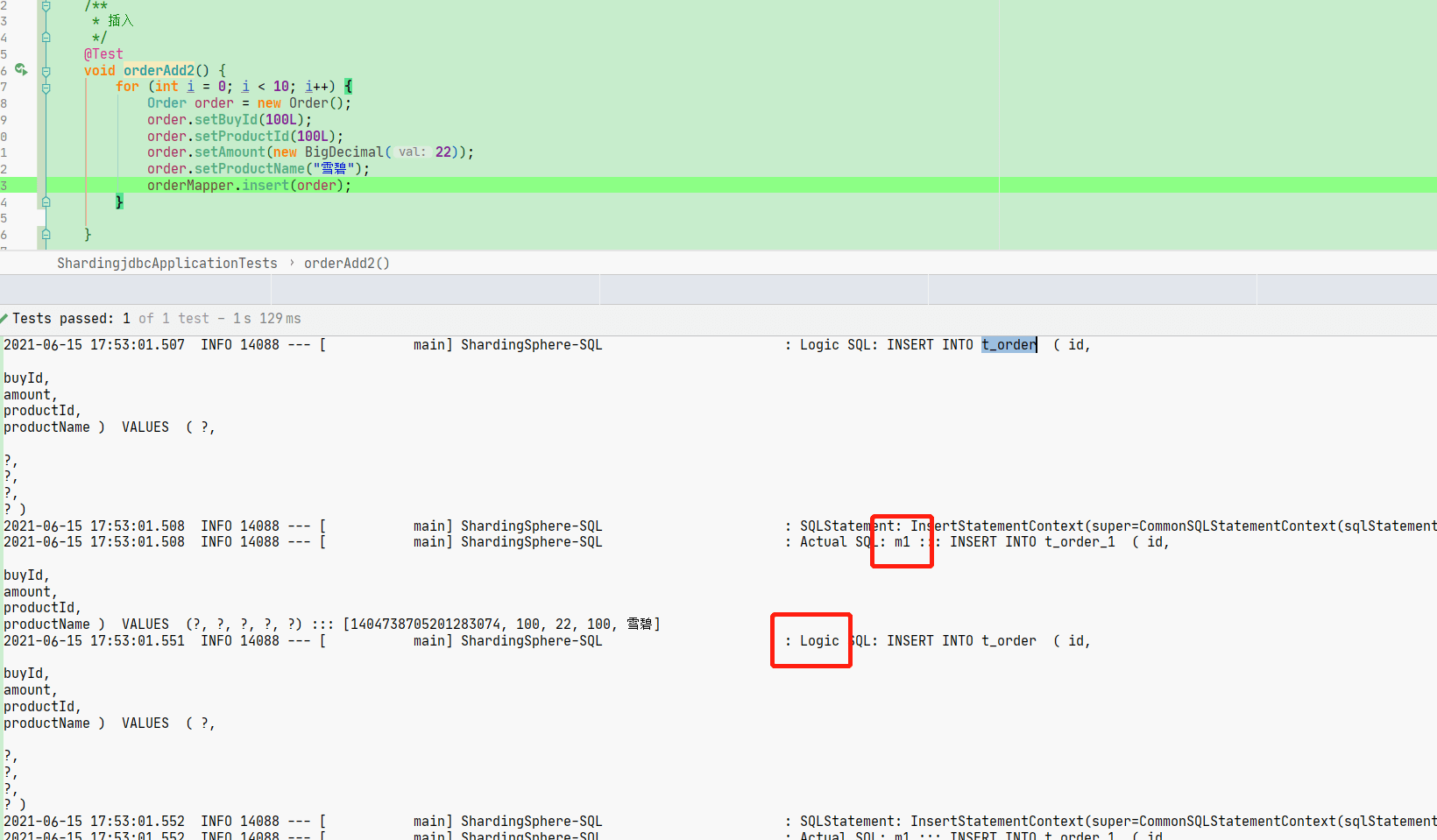

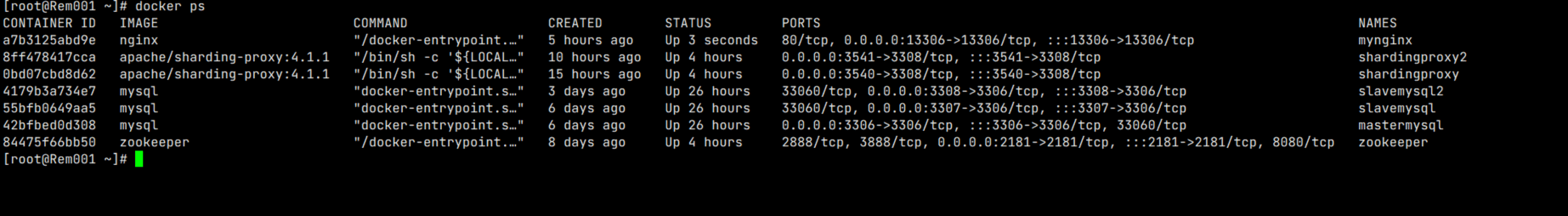

插入数据 master 逻辑插入

查询数据 默认slave会轮询去查询数据

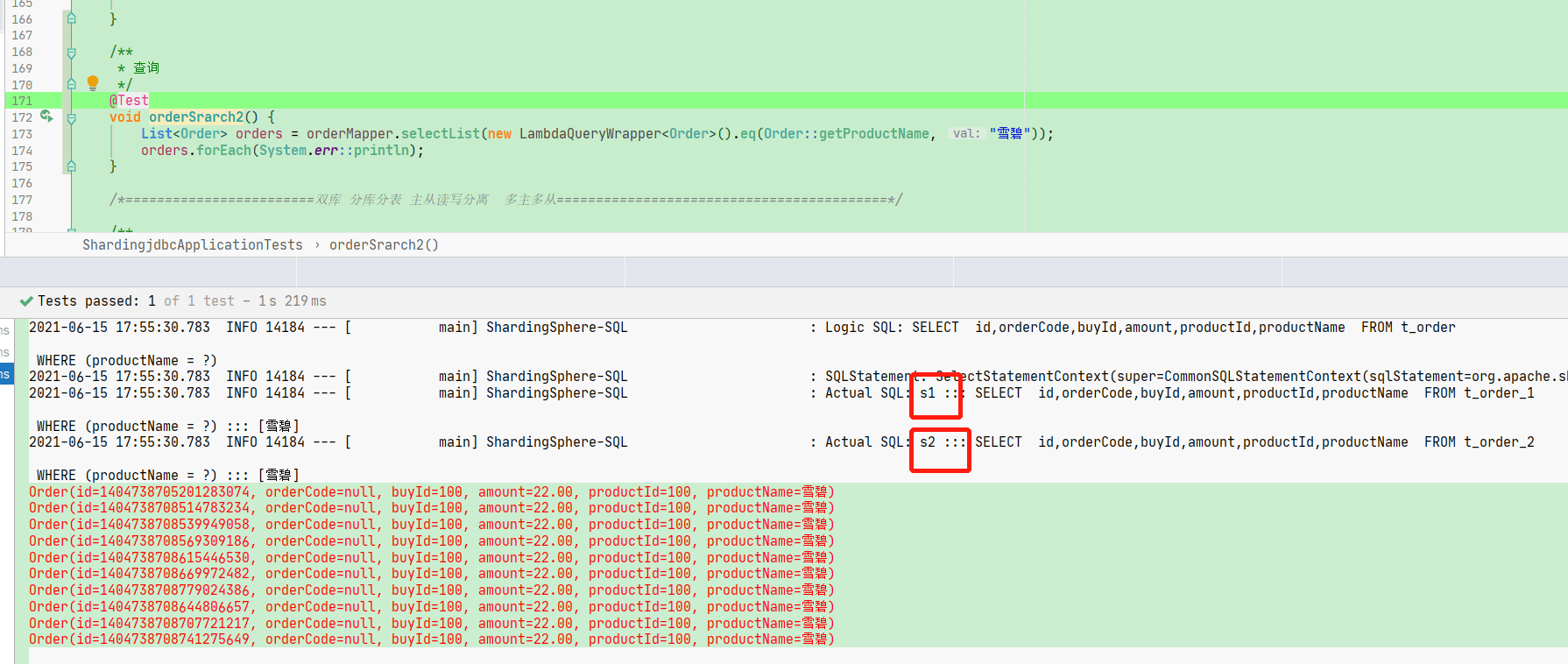

3 sharding proxy

3.1 什么是 sharding proxy

透明化的数据库代理端,用于对异构语言的支持。

下载地址 https://archive.apache.org/dist/shardingsphere/

下载完成后解压 windows环境会存在jar包过长导致解压时文件名会截断 ,解决办法:

tar zxvf apache-shardingsphere-${RELEASE.VERSION}-sharding-proxy-bin.tar.gz

3.2 sharding proxy配置

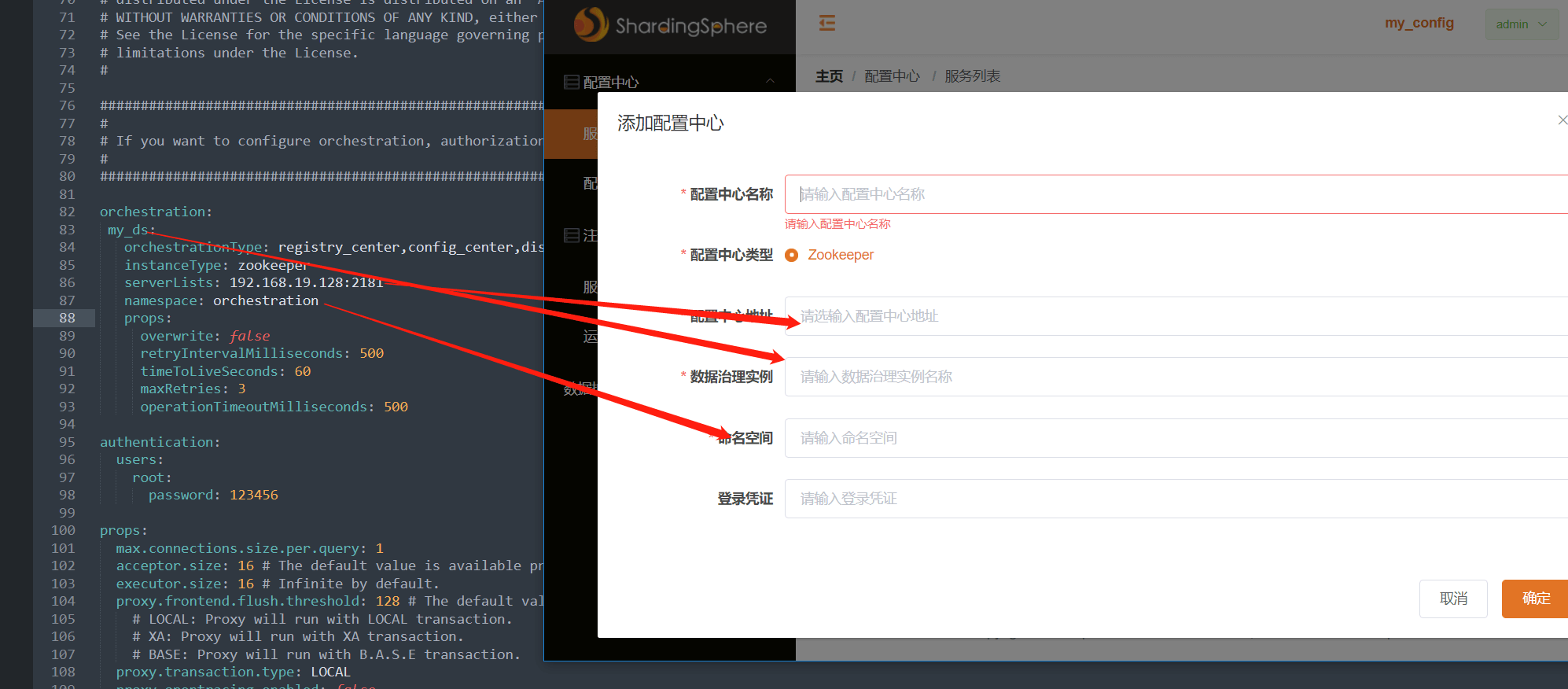

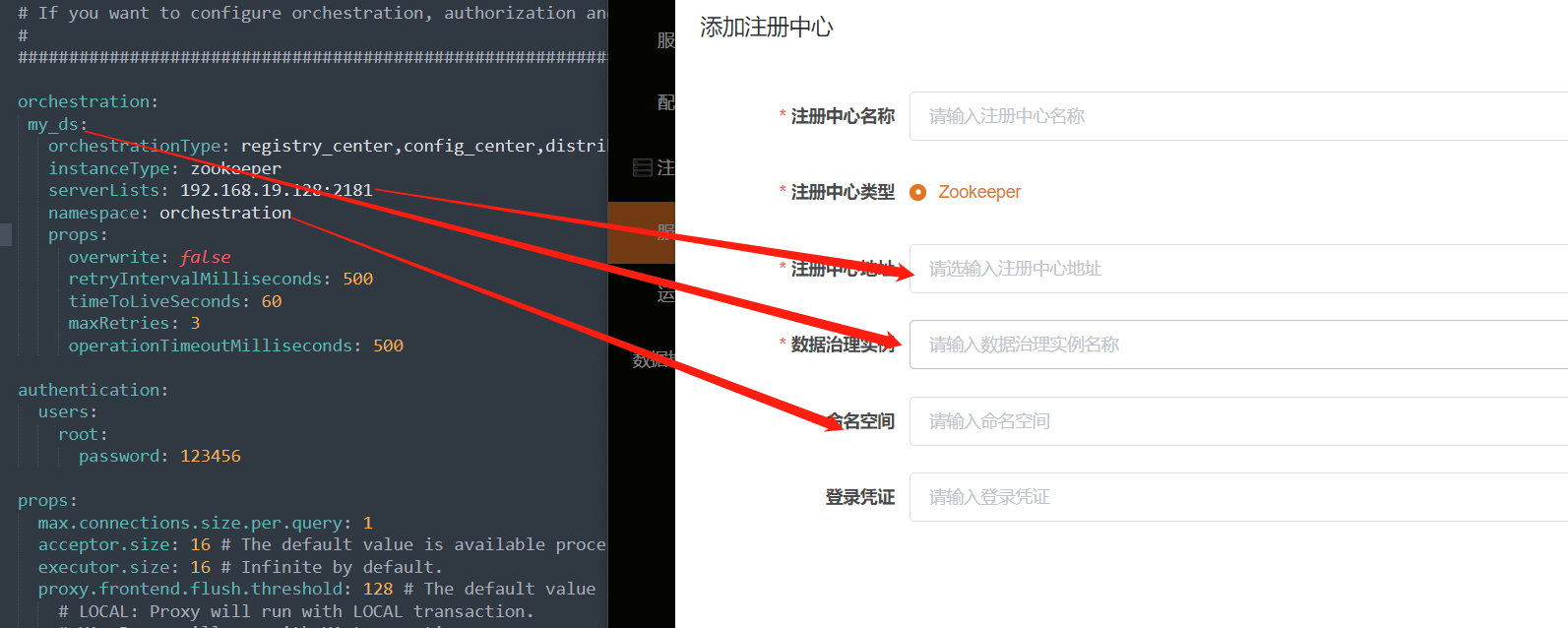

server.yaml配置

不使用服务治理的情况下, 不开启zookeeper

# 服务治理,开启zookeeper 也可以使用nacos等orchestration:orchestration_ds:orchestrationType: registry_center,config_center,distributed_lock_managerinstanceType: zookeeperserverLists: localhost:2181namespace: orchestrationprops:overwrite: falseretryIntervalMilliseconds: 500timeToLiveSeconds: 60maxRetries: 3operationTimeoutMilliseconds: 500# 权限验证authentication:users:root: # 自定义用户名password: 123456 # 自定义密码sharding:password: 123456authorizedSchemas: sharding_db # 该用户授权可访问的数据库,多个用逗号分隔。缺省将拥有root权限,可访问全部数据库。#全局配置props:max.connections.size.per.query: 1acceptor.size: 16 #用于设置接收客户端请求的工作线程个数,默认为CPU核数*2executor.size: 16 # Infinite by default.proxy.frontend.flush.threshold: 128 # The default value is 128.# LOCAL: Proxy will run with LOCAL transaction.# XA: Proxy will run with XA transaction.# BASE: Proxy will run with B.A.S.E transaction.proxy.transaction.type: LOCALproxy.opentracing.enabled: false #是否开启链路追踪功能,默认为不开启。详情请参见[链路追踪](/cn/features/orchestration/apm/)proxy.hint.enabled: falsequery.with.cipher.column: true #是否使用密文列查询sql.show: true # 是否显示sql语句allow.range.query.with.inline.sharding: false

config-sharding.yaml 配置数据分片

schemaName: sharding_dbdataSources:m1:url: jdbc:mysql://192.168.19.128:3306/so?serverTimezone=UTC&useSSL=false&useUnicode=true&characterEncoding=utf8username: rootpassword: 123456connectionTimeoutMilliseconds: 30000idleTimeoutMilliseconds: 60000maxLifetimeMilliseconds: 1800000maxPoolSize: 50shardingRule:tables:t_order:actualDataNodes: m1.t_order_$->{1..2}keyGenerator:column: idtype: SNOWFLAKEtableStrategy:inline:shardingColumn: idalgorithmExpression: t_order_${id % 2 +1}

config-master_slave.yaml 配置读写分离 或读写分离+数据分片

# 读写分离schemaName: master_slave_dbdataSources:m1:url: jdbc:mysql://192.168.19.128:3306/so?serverTimezone=UTC&useSSL=false&useUnicode=true&characterEncoding=utf8username: rootpassword: 123456connectionTimeoutMilliseconds: 30000idleTimeoutMilliseconds: 60000maxLifetimeMilliseconds: 1800000maxPoolSize: 50s1:url: jdbc:mysql://192.168.19.128:3306/so?serverTimezone=UTC&useSSL=false&useUnicode=true&characterEncoding=utf8username: rootpassword: 123456connectionTimeoutMilliseconds: 30000idleTimeoutMilliseconds: 60000maxLifetimeMilliseconds: 1800000maxPoolSize: 50s2:url: jdbc:mysql://192.168.19.128:3308/so?serverTimezone=UTC&useSSL=false&useUnicode=true&characterEncoding=utf8username: rootpassword: 123456connectionTimeoutMilliseconds: 30000idleTimeoutMilliseconds: 60000maxLifetimeMilliseconds: 1800000maxPoolSize: 50masterSlaveRule:name: ms_dsmasterDataSourceName: m1slaveDataSourceNames:- s1- s2######################################################################################################## If you want to connect to MySQL, you should manually copy MySQL driver to lib directory.######################################################################################################## 读写分离 + 一主多从# schemaName: sharding_master_slave_db# dataSources:# ds0:# url: jdbc:mysql://192.168.19.128:3306/so?serverTimezone=UTC&useSSL=false&useUnicode=true&characterEncoding=utf8# username: root# password: 123456# connectionTimeoutMilliseconds: 30000# idleTimeoutMilliseconds: 60000# maxLifetimeMilliseconds: 1800000# maxPoolSize: 65# ds0_slave0:# url: jdbc:mysql://192.168.19.128:3307/so?serverTimezone=UTC&useSSL=false&useUnicode=true&characterEncoding=utf8# username: root# password: 123456# connectionTimeoutMilliseconds: 30000# idleTimeoutMilliseconds: 60000# maxLifetimeMilliseconds: 1800000# maxPoolSize: 65# ds0_slave1:# url: jdbc:mysql://192.168.19.128:3308/so?serverTimezone=UTC&useSSL=false&useUnicode=true&characterEncoding=utf8# username: root# password: 123456# connectionTimeoutMilliseconds: 30000# idleTimeoutMilliseconds: 60000# maxLifetimeMilliseconds: 1800000# maxPoolSize: 65# shardingRule:# tables:# t_order:# actualDataNodes: ms_ds${0}.t_order_${1..2}# databaseStrategy:# inline:# shardingColumn: buyId# algorithmExpression: ms_ds${0}# tableStrategy:# inline:# shardingColumn: id# algorithmExpression: t_order_${id % 2 +1}# keyGenerator:# type: SNOWFLAKE# column: id# bindingTables:# - t_order# broadcastTables:# - t_config# defaultDataSourceName: ds0# defaultTableStrategy:# none:# masterSlaveRules:# ms_ds0:# masterDataSourceName: ds0# slaveDataSourceNames:# - ds0_slave0# - ds0_slave1# loadBalanceAlgorithmType: ROUND_ROBIN

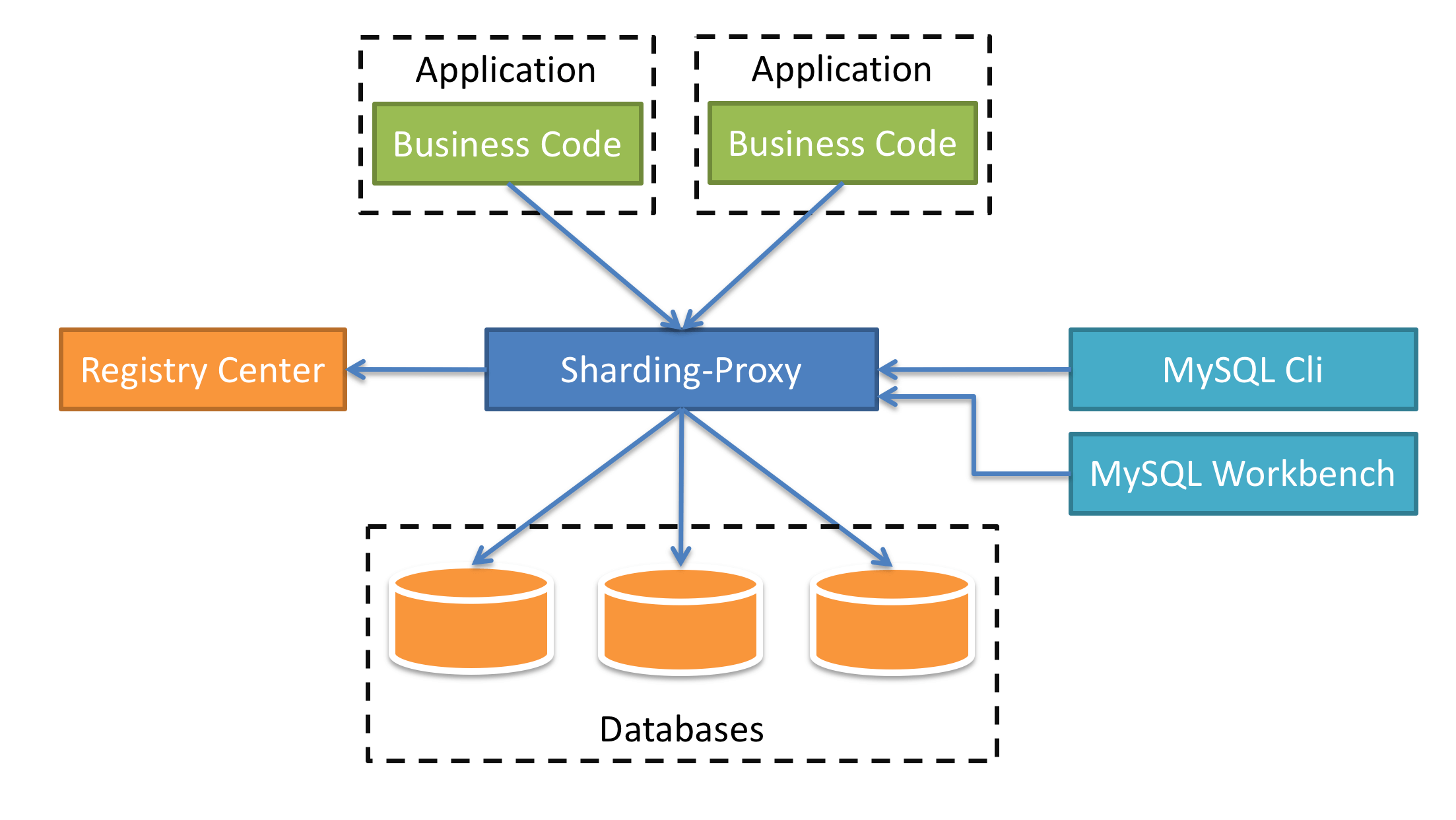

3.3 使用docker 配置sharding proxy

使用稳定版本4.1.1 ,配置一个zookeeper,两个proxy,三个mysql(一主两从),一个nginx

docker简单使用

docker pull apache/sharding-proxy:4.1.1

proxy启动端口3308 ,映射外部端口3540, 配置mysqly需要外部挂载配置目录和驱动 在本地centos做好准备/home/sharding/conf,创建,/home/sharding/ext-lib

docker run --name shardingproxy -d -v /home/sharding/conf:/opt/sharding-proxy/conf -v /home/sharding/ext-lib:/opt/sharding-proxy/ext-lib --env PORT=3308 -p3540:3308 apache/sharding-proxy:4.1.1

nginx配置

worker_processes 1;events {worker_connections 1024;}stream {# sharding proxyupstream sharding {server 192.168.19.128:3540;server 192.168.19.128:3541;}server {listen 13306;proxy_pass sharding;}}http {include mime.types;default_type application/octet-stream;sendfile on;keepalive_timeout 65;server {listen 80;server_name localhost;location / {root html;index index.html index.htm;}error_page 500 502 503 504 /50x.html;location = /50x.html {root html;}}}

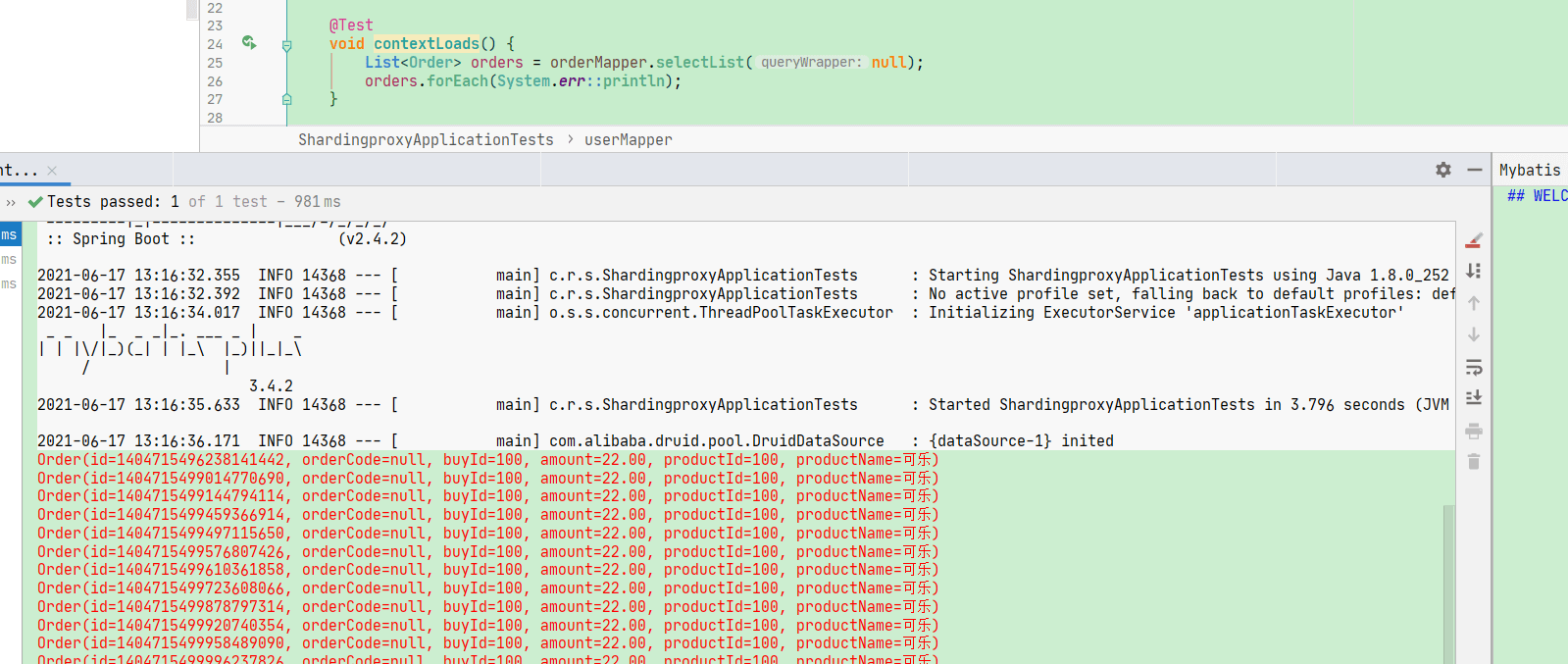

创建一个sprigboot 项目 配置

spring:datasource:driver-class-name: com.mysql.cj.jdbc.Driverurl: jdbc:mysql://192.168.19.128:13306/master_slave_db?serverTimezone=UTC&useSSL=false&useUnicode=true&characterEncoding=utf8&allowPublicKeyRetrieval=true#url: jdbc:mysql://192.168.19.128:3540/master_slave_db?serverTimezone=UTC&useSSL=false&useUnicode=true&characterEncoding=utf8&allowPublicKeyRetrieval=trueusername: rootpassword: 123456type: com.alibaba.druid.pool.DruidDataSourcemybatis-plus:configuration:map-underscore-to-camel-case: falsecall-setters-on-nulls: true#配置自定义的mapper文件 (多表查询适用)mapper-locations: classpath*:mapper/*.xml#配置实体对象扫描包===在mapper.xml中简化使用type-aliases-package: com.rem.shardingproxy.pojo

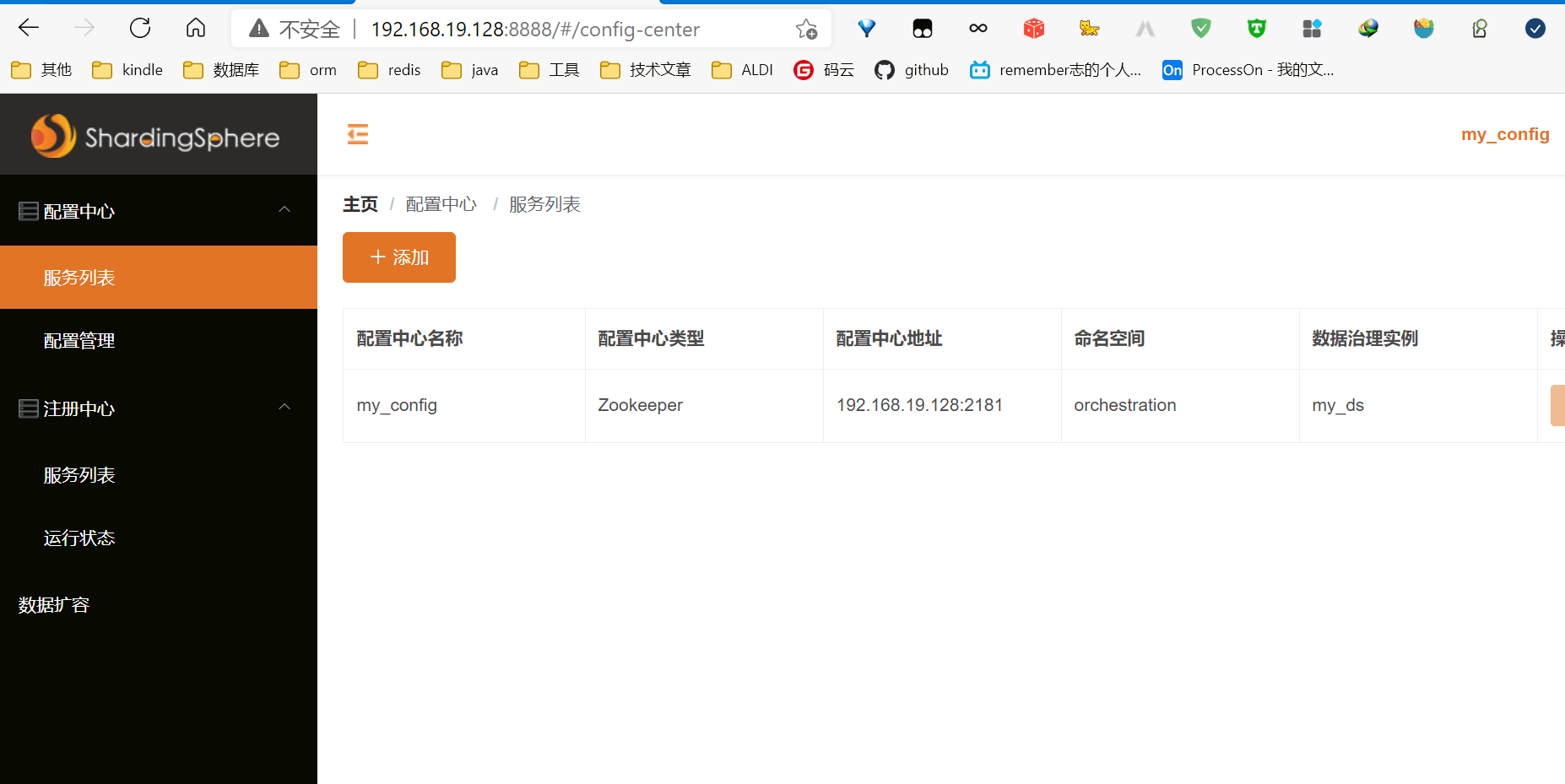

4 sharding -ui 选用

官网下载

linux 解压后 直接在bin目录下运行

也可以在conf下修改配置

可以查看节点信息 也可以进行动态修改信息

server.port=8888user.admin.username=adminuser.admin.password=admin

访问 192.168.19.128:8888