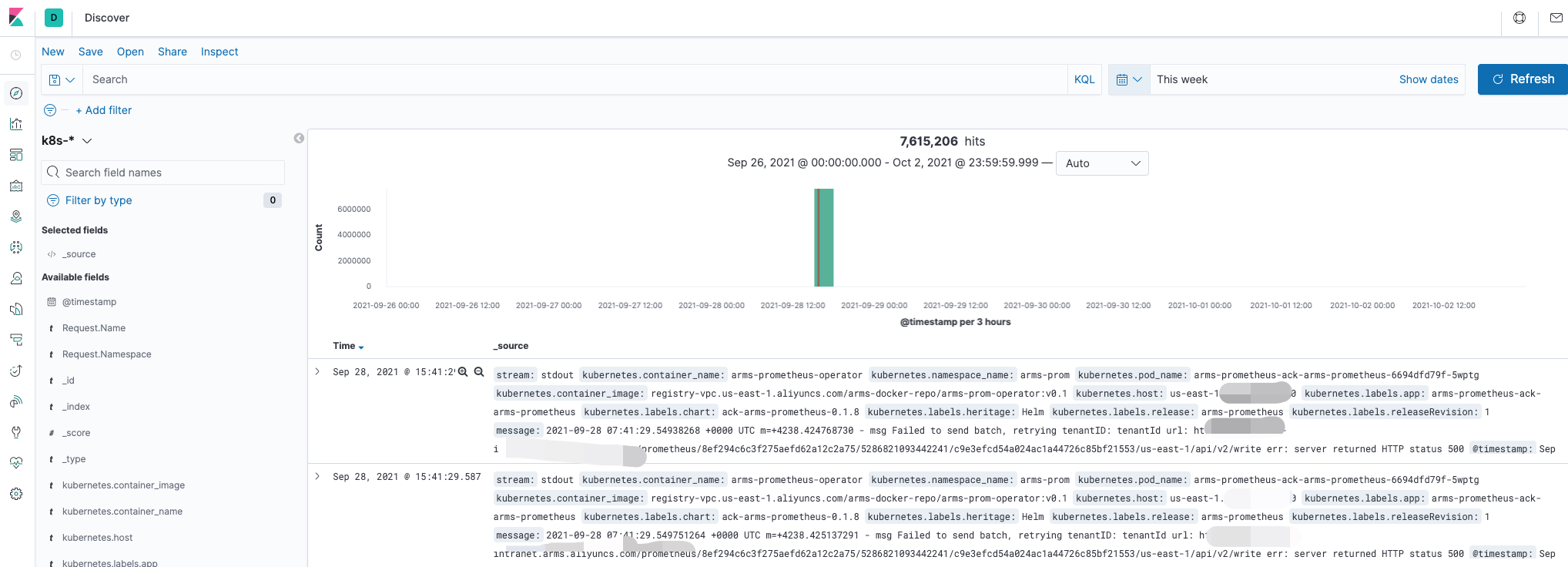

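I set up an EFK stack in an Alibaba Cloud k8s cluster to collect logs from the pods in the cluster. Log collection worked without problems at first, but after it had been running for a while, I suddenly found today that Kibana was no longer showing any logs. I checked the ES cluster, the fluentd-es cluster, and Kibana individually, and none of them showed any obvious failure.

I also checked the runtime logs of each service and found no obvious error messages there either.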

ES runtime log: ......{"type": "server", "timestamp": "2021-09-28T03:31:53,896Z", "level": "WARN", "component": "r.suppressed", "cluster.name": "k8s-logs", "node.name": "es-0", "message": "path: /k8s-*/_search, params: {rest_total_hits_as_int=true, ignore_unavailable=true, preference=1632799861635, ignore_throttled=true, index=k8s-*, timeout=30000ms}", "cluster.uuid": "aJJbeYotSx21-HRJA3lLvA", "node.id": "zbzEyAT5S8a6xL2uPZLxWQ" ,"stacktrace": ["org.elasticsearch.action.search.SearchPhaseExecutionException: all shards failed","at org.elasticsearch.action.search.AbstractSearchAsyncAction.onPhaseFailure(AbstractSearchAsyncAction.java:545) [elasticsearch-7.6.2.jar:7.6.2]","at org.elasticsearch.action.search.AbstractSearchAsyncAction.executeNextPhase(AbstractSearchAsyncAction.java:306) [elasticsearch-7.6.2.jar:7.6.2]","at org.elasticsearch.action.search.AbstractSearchAsyncAction.onPhaseDone(AbstractSearchAsyncAction.java:574) [elasticsearch-7.6.2.jar:7.6.2]","at org.elasticsearch.action.search.AbstractSearchAsyncAction.onShardFailure(AbstractSearchAsyncAction.java:386) [elasticsearch-7.6.2.jar:7.6.2]","at org.elasticsearch.action.search.AbstractSearchAsyncAction.access$200(AbstractSearchAsyncAction.java:66) [elasticsearch-7.6.2.jar:7.6.2]",......

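This ES message only says that all shards failed for the search; it does not say what caused the failure.

I redeployed Kibana on its own and checked the Kibana logs again.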

Kibana error: ......Error: Request to Elasticsearch failed: {"message":"An internal server error occurred","statusCode":500,"error":"Internal Server Error"}at SearchSource.fetch$ (https://kibana.123.top/bundles/commons.bundle.js:3:1284890)at s (https://kibana.123.top/bundles/kbn-ui-shared-deps/kbn-ui-shared-deps.js:338:774550)at Generator._invoke (https://kibana.123.top/bundles/kbn-ui-shared-deps/kbn-ui-shared-deps.js:338:774303)......

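The Kibana error shows that its request to ES came back with a 500.

From this Kibana output, the problem is most likely in the ES service itself, so the next step is to look more closely at the ES logs.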

ES error: ......"stacktrace": ["org.elasticsearch.transport.RemoteTransportException: [es-1][10.32.0.4:9300][cluster:monitor/nodes/info[n]]","Caused by: org.elasticsearch.common.breaker.CircuitBreakingException: [parent] Data too large, data for [<transport_request>] would be [507132452/483.6mb], which is larger than the limit of [501746892/478.5mb], real usage: [507131048/483.6mb], new bytes reserved: [1404/1.3kb], usages [request=0/0b, fielddata=650/650b, in_flight_requests=74883294/71.4mb, accounting=198341819/189.1mb]","at org.elasticsearch.indices.breaker.HierarchyCircuitBreakerService.checkParentLimit(HierarchyCircuitBreakerService.java:343) ~[elasticsearch-7.6.2.jar:7.6.2]","at org.elasticsearch.common.breaker.ChildMemoryCircuitBreaker.addEstimateBytesAndMaybeBreak(ChildMemoryCircuitBreaker.java:128) ~[elasticsearch-7.6.2.jar:7.6.2]",......

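Here the log finally shows a clear error: "Caused by: org.elasticsearch.common.breaker.CircuitBreakingException: [parent] Data too large, data for [<transport_request>] would be [507132452/483.6mb], which is larger than the limit of [501746892/478.5mb]". The environment settings of the ES container contain:

......
- name: ES_JAVA_OPTS
  value: "-Xms512m -Xmx512m"
......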

This confirms the error message: the ES JVM options cap the heap at 512 MB.

So the cause of the problem is:

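The JVM heap is too small for the data ES has to hold, so ES reports "Data too large" and the request is rejected by the circuit breaker. The breaker that tripped here is the [parent] breaker, whose limit is set by indices.breaker.total.limit and defaults to 95% of the JVM heap when real-memory accounting is enabled (the default in ES 7.x), which matches the 478.5mb limit in the log. It is not indices.breaker.request.limit, which defaults to 60% of the JVM heap. Either way, the limit scales with the heap, so the problem can be resolved by increasing the ES heap size.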
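To make the numbers in the breaker message easier to read, here is a minimal Python sketch that pulls them out of the log line quoted above. The function name `parse_breaker_message` and the regular expressions are illustrative assumptions rather than anything shipped with Elasticsearch; the message text itself is copied from the ES log.

```python
import re

# Breaker message copied from the ES error log above.
MESSAGE = (
    "[parent] Data too large, data for [<transport_request>] would be "
    "[507132452/483.6mb], which is larger than the limit of "
    "[501746892/478.5mb], real usage: [507131048/483.6mb], "
    "new bytes reserved: [1404/1.3kb], usages [request=0/0b, "
    "fielddata=650/650b, in_flight_requests=74883294/71.4mb, "
    "accounting=198341819/189.1mb]"
)

# Hypothetical pattern written for this message format only.
PATTERN = re.compile(
    r"\[(?P<breaker>\w+)\] Data too large.*?"
    r"would be \[(?P<would_be>\d+)/.*?"
    r"limit of \[(?P<limit>\d+)/.*?"
    r"real usage: \[(?P<real>\d+)/.*?"
    r"new bytes reserved: \[(?P<reserved>\d+)/"
)
USAGE = re.compile(r"(\w+)=(\d+)/")


def parse_breaker_message(message):
    """Extract the byte counts from a 'Data too large' breaker message."""
    match = PATTERN.search(message)
    if match is None:
        raise ValueError("not a circuit breaker message")
    info = {
        key: (value if key == "breaker" else int(value))
        for key, value in match.groupdict().items()
    }
    # Per-breaker usage list at the end of the message.
    usages_part = message.split("usages [", 1)[1]
    info["usages"] = {name: int(size) for name, size in USAGE.findall(usages_part)}
    return info


info = parse_breaker_message(MESSAGE)
over_by = info["would_be"] - info["limit"]
print(f"{info['breaker']} breaker exceeded by {over_by} bytes")
```

The parsed values show that the rejected request itself was tiny (1404 bytes); the heap was already over the parent limit before the request arrived, which is why even a `cluster:monitor/nodes/info` call failed.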

After we raised this JVM heap setting to 2048M and restarted ES, opening Kibana again showed that log data was being collected once more.
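After a heap change like this, it can help to confirm how close each breaker is to its limit. The sketch below reads the response of the `_nodes/stats/breaker` API and lists breakers above a chosen ratio. The helper names, the 0.9 threshold, and the `SAMPLE` payload are assumptions for illustration; the sample reuses the byte counts from the log above rather than a real API response from this cluster.

```python
import json
from urllib.request import urlopen


def fetch_breaker_stats(base_url):
    """Return the parsed body of GET <base_url>/_nodes/stats/breaker."""
    with urlopen(f"{base_url}/_nodes/stats/breaker", timeout=10) as resp:
        return json.load(resp)


def breakers_near_limit(stats, threshold=0.9):
    """List (node name, breaker name, usage ratio) at or above the threshold."""
    hot = []
    for node in stats.get("nodes", {}).values():
        for name, breaker in node.get("breakers", {}).items():
            limit = breaker.get("limit_size_in_bytes", 0)
            if limit <= 0:
                continue  # breaker without a usable limit
            ratio = breaker.get("estimated_size_in_bytes", 0) / limit
            if ratio >= threshold:
                hot.append((node.get("name"), name, round(ratio, 3)))
    return hot


# Illustrative payload shaped like the API response; values taken from the log.
SAMPLE = {
    "nodes": {
        "node-id-placeholder": {
            "name": "es-1",
            "breakers": {
                "parent": {
                    "limit_size_in_bytes": 501746892,
                    "estimated_size_in_bytes": 507131048,
                    "tripped": 1,
                },
                "request": {
                    "limit_size_in_bytes": 316892774,
                    "estimated_size_in_bytes": 0,
                    "tripped": 0,
                },
            },
        }
    }
}

hot = breakers_near_limit(SAMPLE)
for node_name, breaker_name, ratio in hot:
    print(f"{node_name}: {breaker_name} breaker at {ratio:.1%} of its limit")
```

Against a live cluster, `breakers_near_limit(fetch_breaker_stats(url))` would do the same check, where `url` is whatever address the ES HTTP port is reachable on.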

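Deeper root-cause analysis

The most fundamental cause of the problem above is that the data collected by EFK is not split into categories for display, so every query loads all of the log data. While the log volume was still small, changing the JVM parameters was enough to get the logs displayed again, but as business volume keeps growing this approach will no longer be enough. In the end the logs have to be separated by category, and expired logs have to be purged on a regular schedule.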
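As a rough sketch of the "purge expired logs" step, the function below picks out dated indices that are older than a retention window. It assumes a hypothetical naming scheme of `k8s-<category>-YYYY.MM.DD` (only the `k8s-*` pattern appears in the logs above), and it only selects names; actually deleting them would be done separately, for example through the delete index API or an index lifecycle policy.

```python
from datetime import date, datetime, timedelta


def expired_indices(index_names, today, keep_days):
    """Return dated index names older than keep_days, sorted by name."""
    cutoff = today - timedelta(days=keep_days)
    expired = []
    for name in index_names:
        prefix, _, suffix = name.rpartition("-")
        try:
            day = datetime.strptime(suffix, "%Y.%m.%d").date()
        except ValueError:
            continue  # no date suffix, so leave this index alone
        if prefix and day < cutoff:
            expired.append(name)
    return sorted(expired)


# Hypothetical index names, one per log category and day.
names = [
    "k8s-nginx-2021.09.01",
    "k8s-app-2021.09.27",
    "k8s-app-2021.08.15",
    ".kibana_1",
]
to_delete = expired_indices(names, today=date(2021, 9, 28), keep_days=7)
for name in to_delete:
    print("would delete", name)
```

Keeping one index per category and day also means a Kibana index pattern can target a single category instead of `k8s-*`, so each query touches less data.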