1. Add the Nexus repository

```shell
# Add repository
helm repo add sonatype https://sonatype.github.io/helm3-charts/
```

2. Modify the values.yaml file

The values.yaml file defines every setting the chart needs, and each of them can be customized.
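One way to get a starting point for this file is to export the chart's defaults and edit them. The following is a minimal sketch, assuming Helm 3 and the `sonatype` repository alias added in step 1; the function name `fetch_default_values` is illustrative and not part of Helm.

```shell
# Export the chart's default values so they can be edited locally.
# $1: chart version (defaults to the version used in this article)
# $2: output file (defaults to values.yaml)
fetch_default_values() {
  version="${1:-33.1.0}"
  outfile="${2:-values.yaml}"
  # Refresh the local chart index so the requested version is visible.
  helm repo update
  # Write the default values of that chart version to the output file.
  helm show values sonatype/nexus-repository-manager --version "$version" > "$outfile"
}
```

Calling `fetch_default_values` with no arguments writes `values.yaml` for chart version 33.1.0 into the current directory.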

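- Replace the public image with a private image

```yaml
image:
  # Sonatype Official Public Image
  repository: sonatype/nexus3
  tag: 3.33.1
  pullPolicy: IfNotPresent
```

Pull the public image sonatype/nexus3:3.33.1 and push it to a private registry, so that a change to the official image cannot affect the stability of the service.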
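The pull-and-push step can be sketched as follows. This assumes Docker is installed and already logged in to the private registry; `registry.example.com/infra` is a placeholder, and `mirror_image` is an illustrative helper name.

```shell
# Copy an image from a public registry to a private one.
# $1: source image, $2: destination image in the private registry
mirror_image() {
  src="$1"
  dest="$2"
  # Stop at the first failing step so a broken pull is never pushed.
  docker pull "$src" &&
  docker tag "$src" "$dest" &&
  docker push "$dest"
}

# Example (the registry host is a placeholder):
# mirror_image sonatype/nexus3:3.33.1 registry.example.com/infra/nexus3:3.33.1
```

After mirroring, `image.repository` in values.yaml would point at the private path. If the registry requires authentication, the chart's `imagePullSecrets` value is the place to reference the pull secret.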

- **Change the ingress domain used to access the service**

```yaml
# ......
ingress:
  enabled: true
  annotations: {kubernetes.io/ingress.class: nginx}
  # kubernetes.io/ingress.class: nginx
  # kubernetes.io/tls-acme: "true"
  hostPath: /
  hostRepo: nexus.123.top
  tls:
    - secretName: nexus-123-tls
      hosts:
        - nexus.123.top
# ......
```
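The `tls` entry only references a secret by name, so the secret `nexus-123-tls` has to exist in the release namespace unless something like cert-manager provisions it. A minimal sketch for creating it by hand, assuming you already have a certificate and key for nexus.123.top; the file paths and the helper name `create_ingress_tls_secret` are placeholders:

```shell
# Create the TLS secret referenced by the ingress configuration.
# $1: certificate file, $2: private key file, $3: namespace (defaults to infra)
create_ingress_tls_secret() {
  cert_file="$1"
  key_file="$2"
  namespace="${3:-infra}"
  kubectl create secret tls nexus-123-tls \
    --cert="$cert_file" --key="$key_file" -n "$namespace"
}

# Example (paths are placeholders):
# create_ingress_tls_secret ./nexus.123.top.crt ./nexus.123.top.key
```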

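- Data persistence

By default, a service running in a container loses its data when the container dies. Nexus stores the dependency JARs we rely on, so its data must not be lost.

I use a storageClass provided by Alibaba Cloud here; only the disk type and size need to be declared.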

```yaml
# ......
persistence:
  enabled: true
  accessMode: ReadWriteOnce
  ## If defined, storageClass: <storageClass>
  ## If set to "-", storageClass: "", which disables dynamic provisioning
  ## If undefined (the default) or set to null, no storageClass spec is
  ##   set, choosing the default provisioner.  (gp2 on AWS, standard on
  ##   GKE, AWS & OpenStack)
  ##
  # existingClaim:
  # annotations:
  #  "helm.sh/resource-policy": keep
  storageClass: "alicloud-disk-essd"
  storageSize: 100Gi
  # If PersistentDisk already exists you can create a PV for it by including the 2 following keypairs.
  # pdName: nexus-data-disk
  # fsType: ext4
# ......
```

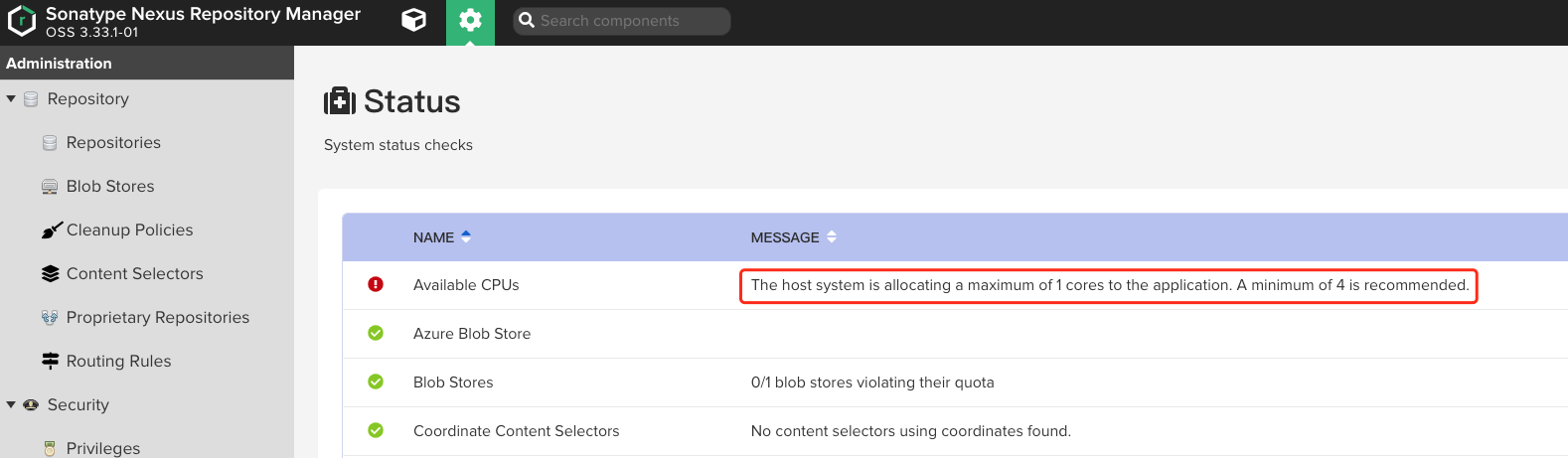
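Before installing, it can help to confirm that the storage class named in values.yaml exists in the cluster, and after installing, that the claim was bound. A small sketch, assuming `kubectl` is configured for the target cluster; `check_storage` is an illustrative name:

```shell
# Check the storage class and list the persistent volume claims.
# $1: namespace (defaults to infra)
check_storage() {
  namespace="${1:-infra}"
  # Fails with a NotFound error if the storage class is missing.
  kubectl get storageclass alicloud-disk-essd
  # After installation, the Nexus claim should show STATUS "Bound".
  kubectl get pvc -n "$namespace"
}
```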

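- Number of CPU cores available to the Java garbage collector

Versions of Nexus after 3.16.1 report an error about this once the service has started.

The JVM uses the number of CPUs to size the thread pools behind various operations, such as GC and ForkJoinPool. Following the hint in the message, we can add "-XX:ActiveProcessorCount=4" to the Java runtime options (JAVA_OPTS), which this chart passes through the `install4jAddVmParams` environment variable.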

```yaml
# ......
nexus:
  env:
    - name: install4jAddVmParams
      value: "-Xms1200M -Xmx1200M -XX:MaxDirectMemorySize=2G -XX:+UnlockExperimentalVMOptions -XX:+UseCGroupMemoryLimitForHeap -XX:ActiveProcessorCount=4"
    - name: NEXUS_SECURITY_RANDOMPASSWORD
      value: "true"
# ......
```
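Once the release is deployed, one way to confirm that the flag reached the pod spec is to read the environment variable back from the Deployment. This is a sketch: the Deployment name `nexus-nexus-repository-manager` is inferred from the pod name shown later in this article, and `show_jvm_params` is an illustrative helper.

```shell
# Print the JVM options configured on the Nexus Deployment.
# $1: namespace (defaults to infra), $2: Deployment name
show_jvm_params() {
  namespace="${1:-infra}"
  deployment="${2:-nexus-nexus-repository-manager}"
  # The JSONPath filter selects the env entry named install4jAddVmParams.
  kubectl get deployment "$deployment" -n "$namespace" \
    -o jsonpath='{.spec.template.spec.containers[0].env[?(@.name=="install4jAddVmParams")].value}'
}
```

If the output does not contain `-XX:ActiveProcessorCount=4`, the values.yaml used for the release probably does not include the change.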

The complete values.yaml file is as follows:

```yaml
statefulset:
  # This is not supported
  enabled: false
# By default deploymentStrategy is set to rollingUpdate with maxSurge of 25% and maxUnavailable of 25% . you can change type to `Recreate` or can uncomment `rollingUpdate` specification and adjust them to your usage.
deploymentStrategy: Recreate
image:
  # Sonatype Official Public Image
  repository: sonatype/nexus3
  tag: 3.33.1
  pullPolicy: IfNotPresent

nexus:
  docker:
    enabled: false
    registries: []
    # - host: chart.local
    #   port: 5000
    #   secretName: registrySecret
  env:
    - name: install4jAddVmParams
      value: "-Xms1200M -Xmx1200M -XX:MaxDirectMemorySize=2G -XX:+UnlockExperimentalVMOptions -XX:+UseCGroupMemoryLimitForHeap"
    - name: NEXUS_SECURITY_RANDOMPASSWORD
      value: "true"
  properties:
    override: false
    data:
      nexus.scripts.allowCreation: true
      # See this article for ldap configuratioon options https://support.sonatype.com/hc/en-us/articles/216597138-Setting-Advanced-LDAP-Connection-Properties-in-Nexus-Repository-Manager
      # nexus.ldap.env.java.naming.security.authentication: simple
  # nodeSelector:
  #   cloud.google.com/gke-nodepool: default-pool
  resources: {}
  # requests:
  #   ## Based on https://support.sonatype.com/hc/en-us/articles/115006448847#mem
  #   ## and https://twitter.com/analytically/status/894592422382063616:
  #   ##   Xms == Xmx
  #   ##   Xmx <= 4G
  #   ##   MaxDirectMemory >= 2G
  #   ##   Xmx + MaxDirectMemory <= RAM * 2/3 (hence the request for 4800Mi)
  #   ##   MaxRAMFraction=1 is not being set as it would allow the heap
  #   ##     to use all the available memory.
  #   cpu: 250m
  #   memory: 4800Mi
  # The ports should only be changed if the nexus image uses a different port
  nexusPort: 8081
  securityContext:
    fsGroup: 2000
  podAnnotations: {}
  livenessProbe:
    initialDelaySeconds: 30
    periodSeconds: 30
    failureThreshold: 6
    timeoutSeconds: 10
    path: /
  readinessProbe:
    initialDelaySeconds: 30
    periodSeconds: 30
    failureThreshold: 6
    timeoutSeconds: 10
    path: /
  # hostAliases allows the modification of the hosts file inside a container
  hostAliases: []
  # - ip: "192.168.1.10"
  #   hostnames:
  #   - "example.com"
  #   - "www.example.com"

imagePullSecrets: []
nameOverride: ""
fullnameOverride: ""

deployment:
  # # Add annotations in deployment to enhance deployment configurations
  annotations: {}
  # # Add init containers. e.g. to be used to give specific permissions for nexus-data.
  # # Add your own init container or uncomment and modify the given example.
  initContainers:
  #   - name: fmp-volume-permission
  #     image: busybox
  #     imagePullPolicy: IfNotPresent
  #     command: ['chown','-R', '200', '/nexus-data']
  #     volumeMounts:
  #       - name: nexus-data
  #         mountPath: /nexus-data
  # # Uncomment and modify this to run a command after starting the nexus container.
  postStart:
    command:    # '["/bin/sh", "-c", "ls"]'
  preStart:
    command:    # '["/bin/rm", "-f", "/path/to/lockfile"]'
  terminationGracePeriodSeconds: 120
  additionalContainers:
  additionalVolumes:
  additionalVolumeMounts:

ingress:
  enabled: true
  annotations: {kubernetes.io/ingress.class: nginx}
  # kubernetes.io/ingress.class: nginx
  # kubernetes.io/tls-acme: "true"
  hostPath: /
  hostRepo: nexus.123.top
  tls:
    - secretName: nexus-123-tls
      hosts:
        - nexus.123.top

service:
  name: nexus3
  enabled: true
  labels: {}
  annotations: {}
  serviceType: ClusterIP

route:
  enabled: false
  name: docker
  portName: docker
  labels:
  annotations:
  # path: /docker

nexusProxyRoute:
  enabled: false
  labels:
  annotations:
  # path: /nexus

persistence:
  enabled: true
  accessMode: ReadWriteOnce
  ## If defined, storageClass: <storageClass>
  ## If set to "-", storageClass: "", which disables dynamic provisioning
  ## If undefined (the default) or set to null, no storageClass spec is
  ##   set, choosing the default provisioner.  (gp2 on AWS, standard on
  ##   GKE, AWS & OpenStack)
  ##
  # existingClaim:
  # annotations:
  #  "helm.sh/resource-policy": keep
  storageClass: "alicloud-disk-essd"
  storageSize: 100Gi
  # If PersistentDisk already exists you can create a PV for it by including the 2 following keypairs.
  # pdName: nexus-data-disk
  # fsType: ext4

tolerations: []

# # Enable configmap and add data in configmap
config:
  enabled: false
  mountPath: /sonatype-nexus-conf
  data: []

# # To use an additional secret, set enable to true and add data
secret:
  enabled: false
  mountPath: /etc/secret-volume
  readOnly: true
  data: []

serviceAccount:
  # Specifies whether a service account should be created
  create: true
  # Annotations to add to the service account
  annotations: {}
  # The name of the service account to use.
  # If not set and create is true, a name is generated using the fullname template
  name: ""

psp:
  create: false
```

3. Install

```shell
# Install chart
helm install nexus -f values.yaml sonatype/nexus-repository-manager --version 33.1.0 -n infra
```
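The install can also be checked from the command line. The sketch below assumes the release name `nexus` and namespace `infra` used above; the Deployment name is inferred from the pod name shown in section 3.2, and `wait_for_nexus` is an illustrative helper.

```shell
# Show the release status, then block until the Deployment has rolled out.
# $1: namespace (defaults to infra)
wait_for_nexus() {
  namespace="${1:-infra}"
  helm status nexus -n "$namespace" &&
  kubectl rollout status deployment/nexus-nexus-repository-manager \
    -n "$namespace" --timeout=10m
}
```

Note that `helm install ... -n infra` expects the `infra` namespace to exist already. If it does not, Helm 3.2 and later accept `--create-namespace` on the install command.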

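3.1 Check the installation result in the Kubernetes console

The console shows that the service is running and that the ingress domain we configured is in place. The next step is to point the domain's DNS record at the endpoint IP.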

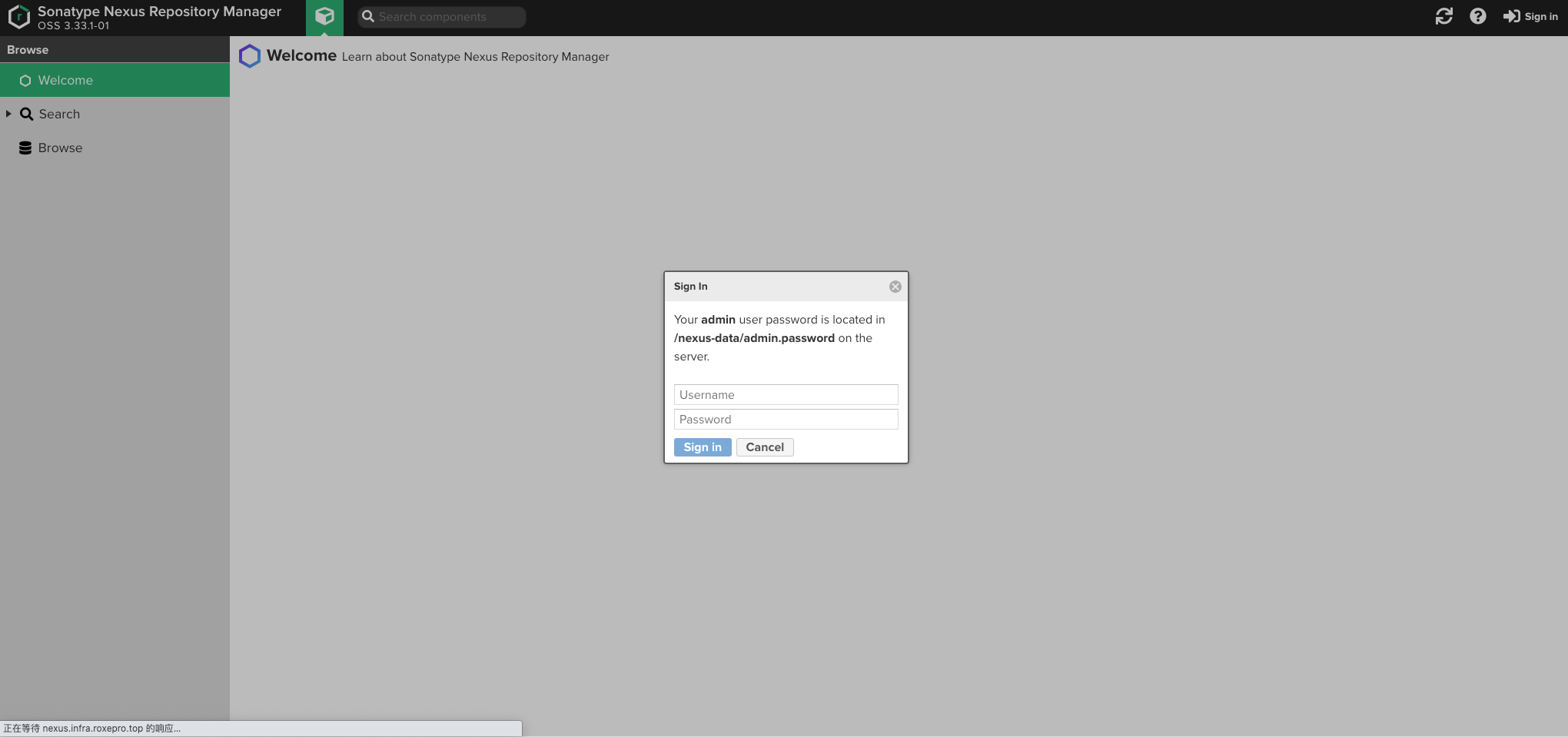

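3.2 Get the login password of the Nexus service in the cluster

According to the prompt, the default username is admin, and the password is stored in the file /nexus-data/admin.password inside the pod.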

```shell
$ kubectl get pod -n infra
NAME                                              READY   STATUS    RESTARTS   AGE
......
nexus-nexus-repository-manager-679b5fcdc9-bjffh   1/1     Running   0          11m
$ kubectl exec -it nexus-nexus-repository-manager-679b5fcdc9-bjffh -n infra /bin/bash
kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.
bash-4.4$ cat /nexus-data/admin.password
fd1f7941e1adca25b166
bash-4.4$
```
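The deprecation warning in the output above points at the `--` separator form. A non-interactive variant that follows that form, and addresses the pod through its Deployment so the generated pod suffix does not need to be looked up, might look like this (the Deployment name is inferred from the pod name above; `get_admin_password` is an illustrative helper):

```shell
# Print the initial admin password without opening a shell in the pod.
# $1: namespace (defaults to infra)
get_admin_password() {
  namespace="${1:-infra}"
  kubectl exec -n "$namespace" deploy/nexus-nexus-repository-manager \
    -- cat /nexus-data/admin.password
}
```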

3.3 Reset the password

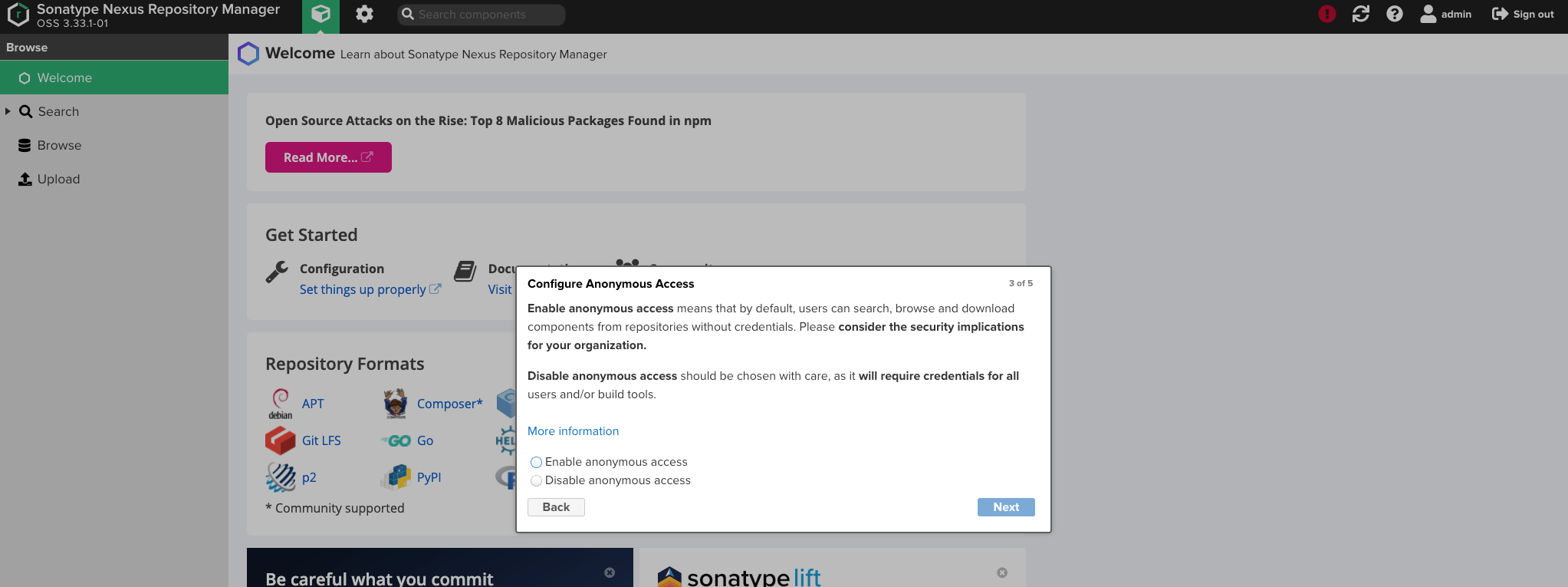

After we sign in with the initial password, the system prompts us to set a new one.

3.4 Disable anonymous access

Follow the prompts and click Next to complete the initialization.

4. Update the configuration

```shell
helm upgrade --namespace infra -f values.yaml nexus sonatype/nexus-repository-manager --version 33.1.0
```
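After an upgrade, Helm keeps a revision history for the release, which makes it possible to review what was applied and to roll back if the new configuration misbehaves. A minimal sketch, assuming the release name `nexus` and namespace `infra` from above; the helper names are illustrative:

```shell
# List the revisions recorded for the release.
# $1: namespace (defaults to infra)
show_release_history() {
  helm history nexus -n "${1:-infra}"
}

# Roll the release back to a given revision number.
# $1: revision, $2: namespace (defaults to infra)
rollback_release() {
  helm rollback nexus "$1" -n "${2:-infra}"
}
```

For example, `rollback_release 1` would return the release to its first revision. `helm get values nexus -n infra` shows the user-supplied values of the current revision.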