1. Pull the images

docker pull leolee32/kubernetes-library:kubernetes-zookeeper1.0-3.4.10
docker pull k8s.gcr.io/kubernetes-zookeeper:1.0-3.4.10

2. Create the namespace

# Create the namespace
kubectl create ns bigdata
# List namespaces (ns is the short name for namespace)
kubectl get ns
kubectl get namespace
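`kubectl create ns` fails if the namespace already exists, which is awkward when the steps are rerun as a script. A minimal sketch, assuming a POSIX-like shell; ensure_namespace is a hypothetical helper, not part of the original steps:

```shell
# Hypothetical helper: create a namespace only if it does not exist yet,
# so re-running a setup script does not fail with "AlreadyExists".
ensure_namespace() {
  local ns="$1"
  if kubectl get namespace "$ns" >/dev/null 2>&1; then
    echo "namespace $ns already exists"
  else
    kubectl create namespace "$ns"
  fi
}

# Usage (needs access to the cluster):
# ensure_namespace bigdata
```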

3. Create the PersistentVolumes (PVs)

cd /home/bigdata/k8s
vi zkpv.yaml

Define the PVs, one hostPath volume per ZooKeeper replica. PersistentVolumes are cluster-scoped, so their metadata takes no namespace:

kind: PersistentVolume
apiVersion: v1
metadata:
  name: pv-zk1
  annotations:
    volume.beta.kubernetes.io/storage-class: "anything"
  labels:
    type: local
spec:
  capacity:
    storage: 3Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/data/zookeeper1"
  persistentVolumeReclaimPolicy: Recycle
---
kind: PersistentVolume
apiVersion: v1
metadata:
  name: pv-zk2
  annotations:
    volume.beta.kubernetes.io/storage-class: "anything"
  labels:
    type: local
spec:
  capacity:
    storage: 3Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/data/zookeeper2"
  persistentVolumeReclaimPolicy: Recycle
---
kind: PersistentVolume
apiVersion: v1
metadata:
  name: pv-zk3
  annotations:
    volume.beta.kubernetes.io/storage-class: "anything"
  labels:
    type: local
spec:
  capacity:
    storage: 3Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/data/zookeeper3"
  persistentVolumeReclaimPolicy: Recycle
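The three PVs differ only in their name and hostPath, so the file can also be generated rather than copy-pasted. A minimal sketch; gen_zk_pvs is a hypothetical helper that reproduces the fields above (it leaves out metadata.namespace, since PVs are cluster-scoped):

```shell
# Hypothetical helper: print N PersistentVolume manifests (pv-zk1..pv-zkN),
# each backed by hostPath /data/zookeeperN, separated by "---".
gen_zk_pvs() {
  local count="$1" i=1
  while [ "$i" -le "$count" ]; do
    if [ "$i" -gt 1 ]; then
      printf '%s\n' '---'
    fi
    cat <<EOF
kind: PersistentVolume
apiVersion: v1
metadata:
  name: pv-zk$i
  annotations:
    volume.beta.kubernetes.io/storage-class: "anything"
  labels:
    type: local
spec:
  capacity:
    storage: 3Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/data/zookeeper$i"
  persistentVolumeReclaimPolicy: Recycle
EOF
    i=$((i + 1))
  done
}

# Usage: gen_zk_pvs 3 > zkpv.yaml
```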

Create the PVs:

kubectl create -f zkpv.yaml

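List the PVs (each shows STATUS Available until a claim from the StatefulSet binds it, after which it shows Bound):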

kubectl get pv
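To check the PV phase in a script instead of by eye, the name and phase can be printed with kubectl's custom-columns output and tested. A sketch only; pvs_in_phase is a hypothetical helper that reads "NAME PHASE" lines from stdin:

```shell
# Hypothetical helper: succeed only if at least one pv-zk* volume is listed
# and every pv-zk* volume is in the expected phase (e.g. Available or Bound).
pvs_in_phase() {
  local expected="$1"
  awk -v want="$expected" '
    $1 ~ /^pv-zk/ { seen++; if ($2 != want) bad++ }
    END { if (seen > 0 && bad == 0) exit 0; exit 1 }'
}

# Usage:
# kubectl get pv -o custom-columns=NAME:.metadata.name,STATUS:.status.phase --no-headers \
#   | pvs_in_phase Available
```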

4. Define the ZooKeeper resources

cd /home/bigdata/k8s
vi zksts.yaml

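Manifest for service discovery and the ZooKeeper instances (headless Service zk-hs, client Service zk-cs, PodDisruptionBudget zk-pdb, and StatefulSet zk):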

apiVersion: v1
kind: Service
metadata:
  name: zk-hs
  namespace: bigdata
  labels:
    app: zk
spec:
  ports:
  - port: 2888
    name: server
  - port: 3888
    name: leader-election
  clusterIP: None
  selector:
    app: zk
---
apiVersion: v1
kind: Service
metadata:
  name: zk-cs
  namespace: bigdata
  labels:
    app: zk
spec:
  type: NodePort
  ports:
  - port: 2181
    nodePort: 32181
    name: client
  selector:
    app: zk
---
# policy/v1 requires Kubernetes 1.21+; on older clusters use policy/v1beta1 (removed in 1.25)
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: zk-pdb
  namespace: bigdata
spec:
  selector:
    matchLabels:
      app: zk
  maxUnavailable: 1
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: zk
  namespace: bigdata
spec:
  selector:
    matchLabels:
      app: zk
  serviceName: zk-hs
  replicas: 3
  updateStrategy:
    type: RollingUpdate
  podManagementPolicy: Parallel
  template:
    metadata:
      labels:
        app: zk
    spec:
      containers:
      - name: kubernetes-zookeeper
        imagePullPolicy: IfNotPresent
        image: "leolee32/kubernetes-library:kubernetes-zookeeper1.0-3.4.10"
        resources:
          requests:
            memory: "128Mi"
            cpu: "0.1"
        ports:
        - containerPort: 2181
          name: client
        - containerPort: 2888
          name: server
        - containerPort: 3888
          name: leader-election
        command:
        - sh
        - -c
        - "start-zookeeper \
          --servers=3 \
          --data_dir=/var/lib/zookeeper/data \
          --data_log_dir=/var/lib/zookeeper/data/log \
          --conf_dir=/opt/zookeeper/conf \
          --client_port=2181 \
          --election_port=3888 \
          --server_port=2888 \
          --tick_time=2000 \
          --init_limit=10 \
          --sync_limit=5 \
          --heap=512M \
          --max_client_cnxns=60 \
          --snap_retain_count=3 \
          --purge_interval=12 \
          --max_session_timeout=40000 \
          --min_session_timeout=4000 \
          --log_level=INFO"
        readinessProbe:
          exec:
            command:
            - sh
            - -c
            - "zookeeper-ready 2181"
          initialDelaySeconds: 10
          timeoutSeconds: 5
        livenessProbe:
          exec:
            command:
            - sh
            - -c
            - "zookeeper-ready 2181"
          initialDelaySeconds: 10
          timeoutSeconds: 5
        volumeMounts:
        - name: datadir
          mountPath: /var/lib/zookeeper
  volumeClaimTemplates:
  - metadata:
      name: datadir
      annotations:
        volume.beta.kubernetes.io/storage-class: "anything"
    spec:
      accessModes: [ "ReadWriteOnce" ]
      resources:
        requests:
          storage: 3Gi
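The timing flags passed to start-zookeeper are counted in ticks of tickTime (2000 ms here). Using the ZooKeeper Administrator's Guide definitions (initLimit: time allowed for followers to connect and sync to the leader; syncLimit: how far a follower may lag before it is dropped; session-timeout defaults of 2 and 20 ticks), the values above work out as:

```latex
\begin{aligned}
\text{initLimit} \times \text{tickTime} &= 10 \times 2000\,\text{ms} = 20\,\text{s} \\
\text{syncLimit} \times \text{tickTime} &= 5 \times 2000\,\text{ms} = 10\,\text{s} \\
\text{minSessionTimeout} &= 2 \times \text{tickTime} = 4000\,\text{ms} \\
\text{maxSessionTimeout} &= 20 \times \text{tickTime} = 40000\,\text{ms}
\end{aligned}
```

So the explicit --min_session_timeout and --max_session_timeout values restate ZooKeeper's defaults for this tickTime.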

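Note: the nodePort must fall within the cluster's NodePort range, 30000-32767 by default; the API server rejects other values with "The range of valid ports is 30000-32767".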
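A small sketch for checking a nodePort before applying the manifest. valid_node_port is a hypothetical helper; its default bounds assume the stock range, and clusters whose kube-apiserver sets a different --service-node-port-range can pass their own bounds:

```shell
# Hypothetical helper: succeed if $1 is a number within [$2, $3],
# defaulting to the standard NodePort range 30000-32767.
valid_node_port() {
  local port="$1" lo="${2:-30000}" hi="${3:-32767}"
  case "$port" in
    ''|*[!0-9]*) return 1 ;;   # reject empty or non-numeric input
  esac
  [ "$port" -ge "$lo" ] && [ "$port" -le "$hi" ]
}

# Usage:
# valid_node_port 32181 && echo "32181 is a valid nodePort"
```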

Create the StatefulSet (the Services and PodDisruptionBudget in the same file are created with it):

kubectl create -f zksts.yaml

5. Verify

List the pods:

kubectl get pods -n bigdata

Startup succeeded if all three pods are in the Running state with READY 1/1 (the readiness probe has passed).
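The same check can be scripted. A sketch, assuming the default `kubectl get pods --no-headers` columns (NAME READY STATUS RESTARTS AGE); zk_pods_ready is a hypothetical helper:

```shell
# Hypothetical helper: read pod listings from stdin and succeed only if
# exactly N pods named zk-<ordinal> are Running with all containers ready.
zk_pods_ready() {
  local want="$1"
  awk -v want="$want" '
    $1 ~ /^zk-[0-9]+$/ {
      split($2, r, "/")                       # READY column, e.g. "1/1"
      if ($3 == "Running" && r[1] == r[2]) ok++
    }
    END { if (ok == want) exit 0; exit 1 }'
}

# Usage:
# kubectl get pods -n bigdata --no-headers | zk_pods_ready 3
```

To block until the pods are ready instead of polling, `kubectl wait --for=condition=Ready pod -l app=zk -n bigdata --timeout=300s` does the waiting on the kubectl side.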

Check the hostnames:

for i in 0 1 2; do kubectl exec zk-$i -n bigdata -- hostname; done

Check each server's myid:

for i in 0 1 2; do echo "myid zk-$i"; kubectl exec zk-$i -n bigdata -- cat /var/lib/zookeeper/data/myid; done
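The expected values follow from the pod names: StatefulSet ordinals start at 0, while ZooKeeper server ids start at 1, and the start-zookeeper script in this image derives myid as ordinal + 1 (so zk-0, zk-1, zk-2 should print 1, 2, 3). A sketch of that rule; expected_myid is a hypothetical helper:

```shell
# Hypothetical helper: map a pod name "zk-N" to the expected myid N+1.
expected_myid() {
  local ordinal="${1##*-}"   # strip everything up to the last "-": "zk-2" -> "2"
  echo $((ordinal + 1))
}

# Usage: compare with the live values
# for i in 0 1 2; do
#   echo "zk-$i expected $(expected_myid zk-$i), got $(kubectl exec zk-$i -n bigdata -- cat /var/lib/zookeeper/data/myid)"
# done
```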

Check the fully qualified domain names:

for i in 0 1 2; do kubectl exec zk-$i -n bigdata -- hostname -f; done
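Each FQDN has the form pod.headless-service.namespace.svc.cluster-domain, and the ensemble's zoo.cfg lists every member as server.myid=fqdn:server-port:election-port. A sketch of the expected entries, assuming the default cluster domain cluster.local and that start-zookeeper writes zoo.cfg into the --conf_dir given above; zk_server_lines is a hypothetical helper:

```shell
# Hypothetical helper: print the server.N lines expected in zoo.cfg for
# a StatefulSet "zk" behind the headless Service "zk-hs" in "bigdata".
zk_server_lines() {
  local replicas="$1" domain="${2:-zk-hs.bigdata.svc.cluster.local}" i=0
  while [ "$i" -lt "$replicas" ]; do
    echo "server.$((i + 1))=zk-$i.$domain:2888:3888"
    i=$((i + 1))
  done
}

# Usage: zk_server_lines 3
# and compare with: kubectl exec zk-0 -n bigdata -- cat /opt/zookeeper/conf/zoo.cfg
```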

Check the ZooKeeper status (one server should report Mode: leader and the others Mode: follower):

for i in 0 1 2; do kubectl exec zk-$i -n bigdata -- zkServer.sh status; done
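A quorum check over the combined status output, as a sketch. zk_quorum_ok is a hypothetical helper and only looks at the "Mode:" lines that zkServer.sh status prints:

```shell
# Hypothetical helper: succeed only if exactly one member reports
# "Mode: leader" and the remaining members report "Mode: follower".
zk_quorum_ok() {
  local want="$1"
  awk -v want="$want" '
    /^Mode: leader/   { leaders++ }
    /^Mode: follower/ { followers++ }
    END { if (leaders == 1 && leaders + followers == want) exit 0; exit 1 }'
}

# Usage:
# for i in 0 1 2; do kubectl exec zk-$i -n bigdata -- zkServer.sh status 2>&1; done | zk_quorum_ok 3
```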

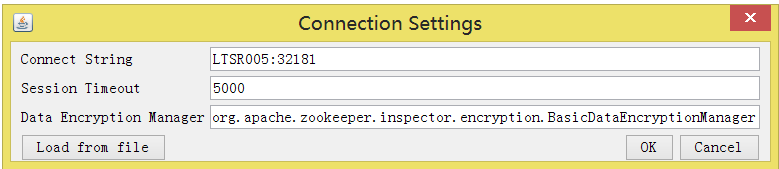

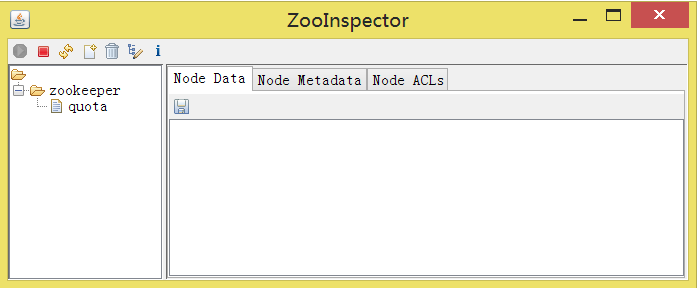

Connecting from a client (ZooInspector):

ZooKeeper is reachable through any Kubernetes node on the exposed nodePort (LTSR003:32181, LTSR005:32181, or LTSR006:32181).
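Command-line clients accept all the nodes at once as a comma-separated connect string, so the connection survives the loss of one node. A sketch; zk_connect_string is a hypothetical helper, and the ruok probe assumes nc is installed on the client machine (the image's own zookeeper-ready probe relies on the same four-letter command):

```shell
# Hypothetical helper: join host names with a port into "h1:p,h2:p,...".
zk_connect_string() {
  local port="$1" result="" host
  shift
  for host in "$@"; do
    result="${result:+$result,}$host:$port"
  done
  echo "$result"
}

# Usage:
# zkCli.sh -server "$(zk_connect_string 32181 LTSR003 LTSR005 LTSR006)"
# echo ruok | nc LTSR003 32181   # a healthy server answers "imok"
```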