0. Installation prerequisites

1. Install and configure NFS

1. Install NFS

yum install -y nfs-utils
yum -y install rpcbind

2. Configure the NFS server data directory

mkdir -p /nfs/k8s/
chmod 755 /nfs/k8s
vim /etc/exports

Add the following line:

/nfs/k8s/ *(async,insecure,no_root_squash,no_subtree_check,rw)

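3. Start the service and check its status

# Run on ltsr007
systemctl start nfs.service
systemctl enable nfs.service
showmount -e

4. Client

# The client does not need to run the NFS service
# Enable rpcbind at boot
sudo systemctl enable rpcbind.service
# Start the rpcbind service
sudo systemctl start rpcbind.service
# Check that the NFS server exports the shared directory
showmount -e ltsr007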
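For scripting, the `showmount` check can be wrapped in a small helper. This is a minimal sketch and not part of the original setup: `nfs_export_visible` is a hypothetical function name, and it assumes `showmount -e` prints one export per line with the path in the first column.

```shell
# Hypothetical helper (not from the original guide): succeeds when the
# server lists the given path in its export list, as reported by `showmount -e`.
nfs_export_visible() {
  # $1 = NFS server host, $2 = exported path without a trailing slash
  showmount -e "$1" | awk -v p="$2" '
    $1 == p || $1 == (p "/") { found = 1 }   # accept the path with or without a trailing slash
    END { exit !found }
  '
}

# Example, run on a client node:
#   nfs_export_visible ltsr007 /nfs/k8s && echo "export is visible"
```

The header line that `showmount` prints is ignored because its first field never equals the path.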

2. Configure PVs

1. Create the provisioner

mkdir -p ~/k8s
mkdir ~/k8s/kafka-helm
cd ~/k8s/kafka-helm
vi nfs-client.yaml

Contents:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nfs-client-provisioner
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: default-admin
      containers:
        - name: nfs-client-provisioner
          image: quay.io/external_storage/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs
            - name: NFS_SERVER
              value: 192.168.0.17
            - name: NFS_PATH
              value: /nfs/k8s
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.0.17
            path: /nfs/k8s

Apply the manifest:

kubectl create -f nfs-client.yaml

2. Create the ServiceAccount

Authorize the provisioner so that it has permission to create, delete, update, and query volumes on NFS.

vi nfs-client-sa.yaml

Contents:

---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: gmo
  name: default-admin
  namespace: default
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: default-crb
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: default-admin
    namespace: default

The ServiceAccount name must match the serviceAccountName in nfs-client.yaml.

kubectl create -f nfs-client-sa.yaml
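To confirm that the binding took effect, one option is to ask the API server whether the ServiceAccount may act on PersistentVolumes. The following is a minimal sketch built on `kubectl auth can-i` with impersonation. The wrapper name `sa_can` is hypothetical, and the user running it needs permission to impersonate ServiceAccounts.

```shell
# Hypothetical wrapper (not from the original guide) around `kubectl auth can-i`.
sa_can() {
  # $1 = namespace, $2 = ServiceAccount name, $3 = verb, $4 = resource
  kubectl auth can-i "$3" "$4" --as="system:serviceaccount:$1:$2"
}

# Example, against a live cluster (kubectl answers "yes" or "no"):
#   sa_can default default-admin create persistentvolumes
```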

3. Create the StorageClass object

vi zookeeper/nfs-zookeeper-class.yaml

Contents:

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: zookeeper-nfs-storage
provisioner: fuseim.pri/ifs

The provisioner value must match PROVISIONER_NAME in nfs-client.yaml.

kubectl create -f zookeeper/nfs-zookeeper-class.yaml
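Before installing the chart, dynamic provisioning can be checked with a throwaway claim against the new StorageClass. The sketch below only prints a manifest. The function name `render_test_pvc` and the claim name `test-claim` are illustrative choices, not part of the original setup.

```shell
# Hypothetical helper (not from the original guide): prints a small PVC manifest.
render_test_pvc() {
  # $1 = StorageClass name, $2 = claim name (defaults to test-claim)
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${2:-test-claim}
spec:
  storageClassName: $1
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Mi
EOF
}

# Example, against a live cluster:
#   render_test_pvc zookeeper-nfs-storage | kubectl apply -f -
#   kubectl get pvc test-claim     # should reach Bound if provisioning works
#   render_test_pvc zookeeper-nfs-storage | kubectl delete -f -
```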

1. Fetch the chart

1. Create a directory for the resources

mkdir -p ~/k8s
mkdir ~/k8s/zookeeper-helm
cd ~/k8s/zookeeper-helm

2. Fetch the chart from the Helm repository

helm search repo zookeeper
# ZooKeeper 3.5.7
helm fetch aliyun-hub/zookeeper --version 5.4.2

2. Extract the archive

tar -zxvf zookeeper-5.4.2.tgz

3. Change the configuration

vi zookeeper/zk-config.yaml

Configuration:

persistence:
  enabled: true
  storageClass: "zookeeper-nfs-storage"
  accessMode: ReadWriteOnce
  size: 8Gi

3. Install the chart

helm install zookeeper -n bigdata -f zookeeper/zk-config.yaml zookeeper --set replicaCount=3
kubectl get pods -n bigdata
kubectl get pvc -n bigdata
kubectl get svc -n bigdata
# Show details; syntax: kubectl describe pod <pod-id> -n bigdata
kubectl describe pod zookeeper-0 -n bigdata
# For reference: install straight from the repository, passing parameters on the command line
helm install zookeeper -n bigdata -f zookeeper/zk-config.yaml aliyun-hub/zookeeper --version 5.4.2 --set replicaCount=3

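4. Expose the port

Create the Service manifest.

rm -rf zookeeper/zk-expose.yaml
vi zookeeper/zk-expose.yaml

Contents:

apiVersion: v1
kind: Service
metadata:
  name: zkexpose
  labels:
    name: zkexpose
spec:
  type: NodePort # NodePort type, required to expose the port outside the cluster
  ports:
    - port: 2181 # Service port, used for access from inside the cluster
      targetPort: 2181 # Must match the port ZooKeeper listens on in the pod
      protocol: TCP
      nodePort: 30081 # Opened on every node for external access; the default allowed range is 30000-32767
  selector:
    app.kubernetes.io/component: zookeeper
    app.kubernetes.io/instance: zookeeper
    app.kubernetes.io/name: zookeeper

The selector in this file must match the current environment. It can be checked with the following command: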

kubectl edit svc zookeeper -n bigdata

The relevant part looks like this:

  selector:
    app.kubernetes.io/component: zookeeper
    app.kubernetes.io/instance: zookeeper
    app.kubernetes.io/name: zookeeper
  sessionAffinity: None
  type: ClusterIP
status:
  loadBalancer: {}
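A read-only way to see the same selector, without opening an editor, is to query the field directly. This is a minimal sketch, and the function name `svc_selector` is hypothetical.

```shell
# Hypothetical helper (not from the original guide): prints a Service's selector.
svc_selector() {
  # $1 = Service name, $2 = namespace
  kubectl get svc "$1" -n "$2" -o jsonpath='{.spec.selector}'
}

# Example, against a live cluster:
#   svc_selector zookeeper bigdata
# The pods matched by those labels can then be listed with, for instance:
#   kubectl get pods -n bigdata -l app.kubernetes.io/name=zookeeper,app.kubernetes.io/instance=zookeeper
```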

Open the port.

kubectl apply -f zookeeper/zk-expose.yaml -n bigdata
kubectl get svc -n bigdata

5. Verify

Check the pods:

kubectl get pods -n bigdata
kubectl get all -n bigdata

If all three pods are in the Running state, the startup succeeded.
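The "all three pods are Running" check can also be done by counting rather than reading the table by eye. This is a minimal sketch: `count_running` is a hypothetical helper, and it assumes the default column layout of `kubectl get pods` (NAME, READY, STATUS, RESTARTS, AGE).

```shell
# Hypothetical helper (not from the original guide).
count_running() {
  # Reads `kubectl get pods --no-headers` output on stdin.
  # With the default columns, STATUS is the third field.
  awk '$3 == "Running" { n++ } END { print n + 0 }'
}

# Example, against a live cluster:
#   kubectl get pods -n bigdata --no-headers | count_running
```

A result of 3 corresponds to the success condition described above.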

Check the hostnames:

for i in 0 1 2; do kubectl exec zookeeper-$i -n bigdata -- hostname; done

Check myid:

for i in 0 1 2; do echo "myid zk-$i";kubectl exec zookeeper-$i -n bigdata -- cat /bitnami/zookeeper/data/myid; done

Check the fully qualified domain names:

for i in 0 1 2; do kubectl exec zookeeper-$i -n bigdata -- hostname -f; done

Check the ZooKeeper status:

for i in 0 1 2; do kubectl exec zookeeper-$i -n bigdata -- zkServer.sh status; done
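A healthy three-node ensemble should report one leader and two followers. The sketch below tallies the roles from the status output. It assumes each `zkServer.sh status` run prints a line of the form `Mode: leader` or `Mode: follower`, and `summarize_modes` is a hypothetical helper name.

```shell
# Hypothetical helper (not from the original guide).
summarize_modes() {
  # Reads concatenated `zkServer.sh status` output on stdin and counts "Mode:" lines.
  awk -F': ' '/^Mode:/ { c[$2]++ } END { printf "leader=%d follower=%d\n", c["leader"], c["follower"] }'
}

# Example, against a live cluster:
#   for i in 0 1 2; do kubectl exec zookeeper-$i -n bigdata -- zkServer.sh status; done | summarize_modes
```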

Open a shell in the pod:

kubectl exec -it zookeeper-0 -n bigdata -- bash

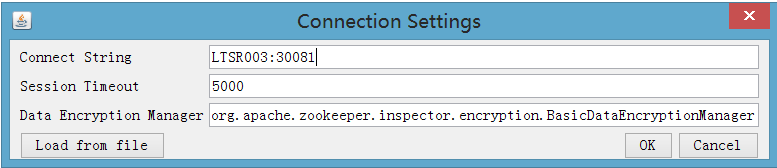

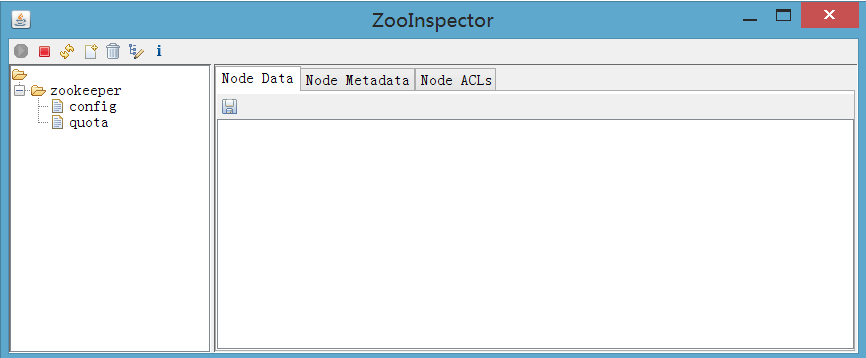

Check from a client (ZooInspector):

ZooKeeper can be reached through any K8s node plus the exposed port (LTSR003:30081, LTSR005:30081, or LTSR006:30081).
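Clients that accept a ZooKeeper connect string take a comma-separated list of `host:port` pairs, so all three endpoints can be supplied at once. This is a minimal sketch for building that string, and `zk_connect_string` is a hypothetical helper name.

```shell
# Hypothetical helper (not from the original guide).
zk_connect_string() {
  # $1 = port, remaining arguments = node host names
  port="$1"; shift
  out=""
  for host in "$@"; do
    out="${out:+$out,}$host:$port"   # prepend a comma only after the first entry
  done
  printf '%s\n' "$out"
}

# Example:
#   zk_connect_string 30081 LTSR003 LTSR005 LTSR006
```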

6. Uninstall

kubectl delete -f zookeeper/zk-expose.yaml -n bigdata
helm uninstall zookeeper -n bigdata
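`helm uninstall` generally leaves behind the PersistentVolumeClaims that a StatefulSet created from its volume claim templates, so the data on NFS survives a reinstall. If a clean removal is wanted, the leftover claims can be listed and deleted by hand. This is a minimal sketch: `pvcs_for_statefulset` is a hypothetical helper that relies on the StatefulSet claim naming scheme `<template>-<statefulset>-<ordinal>`.

```shell
# Hypothetical helper (not from the original guide).
pvcs_for_statefulset() {
  # $1 = StatefulSet name; reads `kubectl get pvc -o name` output on stdin
  grep -E -- "-$1-[0-9]+\$"
}

# Example, against a live cluster (review the list before deleting anything):
#   kubectl get pvc -n bigdata -o name | pvcs_for_statefulset zookeeper
#   kubectl get pvc -n bigdata -o name | pvcs_for_statefulset zookeeper | xargs kubectl delete -n bigdata
```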