Concepts

Linux Memory Allocation

- Kernel memory: memory needed by the OS to manage itself

- Private memory: memory used by user programs

- Shared memory: memory that can be used by multiple programs simultaneously

Kernel Part

- mem_map[]. This is a kernel structure used to manage and reclaim memory. It grows larger as the amount of installed RAM increases. It uses ~1.36% of memory on 64-bit and 0.78% on 32-bit kernels.

- memory cgroup structures.

- Kernel hash tables. Used to index kernel caches like dentry and inode caches. Larger caches generally mean higher performance and the kernel assumes that, as system RAM increases, it should devote more and more to these tables.

- Architecture-specific reservations

Private Part

Page Cache

The Linux page cache ("Cached:" in /proc/meminfo) is the largest single consumer of RAM on most systems. Any time you do a read() from a file on disk, that data is read into memory and goes into the page cache. A second read of the same file is then served directly from memory, which is much faster than loading it from disk.

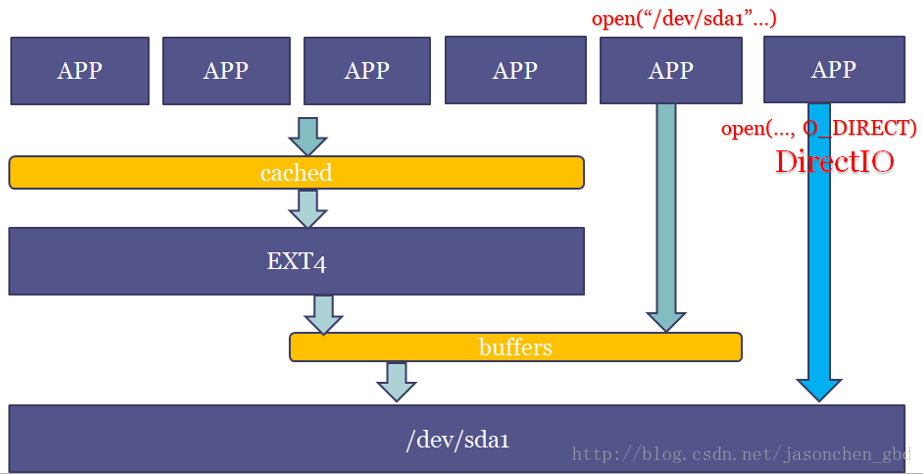

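The free command shows how much memory the page cache currently occupies: it prints buffers and cached (some versions of free combine the two into one column). Caching produced by accessing files through a filesystem (mounting the filesystem and opening files by name) is counted under cached, while caching produced by operating directly on the raw disk (opening the /dev/sda device to read and write) is counted under buffers.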

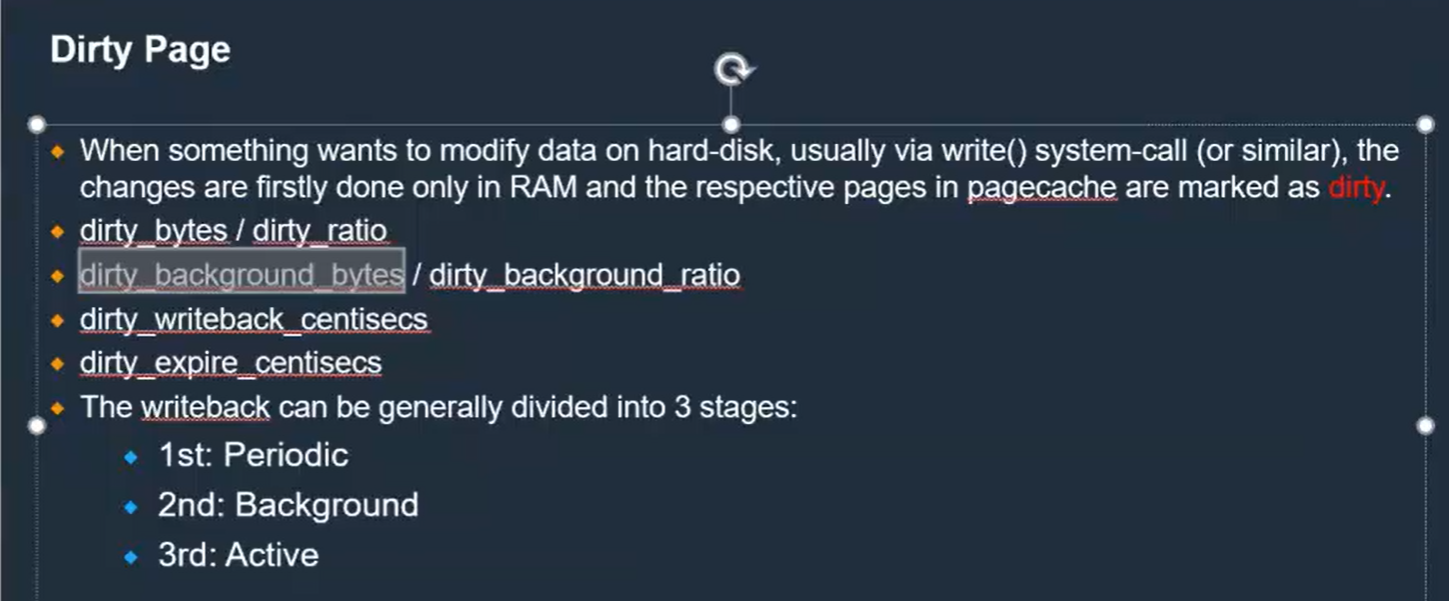

Dirty Page

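When data in memory is modified, the kernel calls SetPageDirty(page) after each modification to mark the page as dirty.

The operating system writes dirty pages back to disk automatically. Until that happens, the data exists only in memory and is lost if power fails.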

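Dirty page writeback rules

- dirty_ratio: when the dirty pages generated by a process writing to disk reach this percentage, the process itself starts writing dirty pages back.

- dirty_expire_centisecs: the expiry (aging) time of a dirty page, in units of 1/100 s. The kernel flusher thread looks for dirty pages that have stayed in memory longer than dirty_expire_centisecs and writes them back.

- dirty_writeback_centisecs: the interval at which the kernel flusher thread is periodically woken up (wakeup_flusher_threads()). On each wake-up it checks whether any dirty pages have expired. Setting this value to 0 means the flusher thread is never woken up periodically.

- dirty_background_ratio: when the number of dirty pages exceeds this percentage, the flusher thread starts writing dirty pages back.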

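So the timing of dirty page writeback is controlled jointly by time (dirty_expire_centisecs/dirty_writeback_centisecs) and by space (dirty_ratio/dirty_background_ratio):

Even a single dirty page is written back once it expires, which keeps dirty pages from staying in memory too long. dirty_expire_centisecs defaults to 3000, i.e. 30 s. Setting it lower means less data is lost on power failure, but disk writes become more frequent.

There must not be too many dirty pages, or they put heavy pressure on disk I/O. For example, when memory runs short and must be reclaimed, dirty pages have to be written back first, which takes noticeably longer.

sync writes dirty pages back. Dirty pages should not stay in memory too long, because dirty data that has not reached the disk is lost on a sudden power failure. On the other hand, accumulating a large number of them and writing them back all at once also takes up noticeable CPU time.

Dirty pages are always file-backed pages; anonymous pages are never dirty in this sense. The 'Dirty' line in /proc/meminfo shows how many dirty pages the system currently has, and the sync command flushes them.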
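To see which values a given machine actually uses, the four knobs can be read from /proc/sys/vm. The following is a minimal sketch rather than a definitive tool: the helper names `read_dirty_knobs` and `centisecs_to_seconds` are made up for these notes, and the directory is a parameter so the function can be tried against a copy of the files.

```python
from pathlib import Path

# The four writeback knobs discussed above; on Linux they live under /proc/sys/vm.
DIRTY_KNOBS = (
    "dirty_ratio",
    "dirty_background_ratio",
    "dirty_expire_centisecs",
    "dirty_writeback_centisecs",
)


def centisecs_to_seconds(value: int) -> float:
    """Convert a *_centisecs setting (units of 1/100 s) to seconds."""
    return value / 100.0


def read_dirty_knobs(sysctl_dir: str = "/proc/sys/vm") -> dict:
    """Read the writeback knobs as integers; knobs that do not exist are skipped."""
    knobs = {}
    for name in DIRTY_KNOBS:
        path = Path(sysctl_dir) / name
        if path.is_file():
            knobs[name] = int(path.read_text().strip())
    return knobs
```

Calling `read_dirty_knobs()` with no argument reads the live settings; with the default mentioned above, `centisecs_to_seconds(3000)` gives 30.0.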

https://blog.csdn.net/jasonchen_gbd/article/details/79462014

https://manybutfinite.com/post/page-cache-the-affair-between-memory-and-files/

dentry/inode Cache

Each time you do an 'ls' (or any other operation: open(), stat(), etc.) on a filesystem, the kernel needs data that is on the disk. The kernel parses this data and puts it in filesystem-independent structures so that it can be handled in the same way across all filesystems.

This is presumably the filesystem's own cache.

    [root@ip-172-31-15-250 ~]# head -2 /proc/slabinfo; cat /proc/slabinfo | egrep dentry\|inode
    slabinfo - version: 2.1
    # name <active_objs> <num_objs> <objsize> <objperslab> <pagesperslab> : tunables <limit> <batchcount> <sharedfactor> : slabdata <active_slabs> <num_slabs> <sharedavail>
    xfs_inode 4238 7095 1088 15 4 : tunables 0 0 0 : slabdata 473 473 0
    mqueue_inode_cache 16 16 1024 16 4 : tunables 0 0 0 : slabdata 1 1 0
    hugetlbfs_inode_cache 24 24 680 12 2 : tunables 0 0 0 : slabdata 2 2 0
    sock_inode_cache 782 782 704 23 4 : tunables 0 0 0 : slabdata 34 34 0
    shmem_inode_cache 1049 1470 760 21 4 : tunables 0 0 0 : slabdata 70 70 0
    proc_inode_cache 1808 1980 728 22 4 : tunables 0 0 0 : slabdata 90 90 0
    inode_cache 16425 16584 656 12 2 : tunables 0 0 0 : slabdata 1382 1382 0
    dentry 22580 30933 192 21 1 : tunables 0 0 0 : slabdata 1473 1473 0
    selinux_inode_security 25624 52224 40 102 1 : tunables 0 0 0 : slabdata 512 512 0
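The slabinfo columns can be turned into an approximate memory footprint per cache. This is a rough sketch: `slab_cache_bytes` is a name invented for these notes, it assumes the slabinfo 2.1 column order shown in the header above, and it assumes 4 KiB pages.

```python
PAGE_SIZE = 4096  # assumption: 4 KiB pages, as on typical x86_64 systems


def slab_cache_bytes(line: str, page_size: int = PAGE_SIZE) -> tuple:
    """Return (cache name, bytes held by its slabs) for one slabinfo 2.1 data line."""
    fields = line.split()
    name = fields[0]
    pages_per_slab = int(fields[5])  # <pagesperslab>
    num_slabs = int(fields[-2])      # <num_slabs>, second value after "slabdata"
    return name, num_slabs * pages_per_slab * page_size


# The dentry line from the output above.
sample = "dentry 22580 30933 192 21 1 : tunables 0 0 0 : slabdata 1473 1473 0"
name, size = slab_cache_bytes(sample)
print(f"{name}: {size / 1024 / 1024:.1f} MiB")
```

Counting whole slabs rather than num_objs * objsize includes the unused object slots inside each slab, which is closer to the memory the cache really holds.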

Buffer Cache

The buffer cache ("Buffers:" in meminfo) is a close relative of the dentry/inode caches. The dentries and inodes in memory represent structures on disk, but are laid out very differently. This might be because we have a kernel structure like a pointer in the in-memory copy, but not on disk. It might also happen that the on-disk format has a different endianness than the CPU.

In any case, when we need to fetch an inode or dentry to populate the caches, we must first bring in the page from disk on which those structures are represented. This cannot be part of the page cache because it is not actually the contents of a file; rather, it is the raw contents of the disk. A page in the buffer cache may have dozens of on-disk inodes inside of it, although we only created an in-memory inode for one. The buffer cache is, again, a bet that the kernel will need another inode from the same group and will save a trip to the disk by keeping this buffer page in memory.

In short, it is a cache of on-disk metadata.

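The filesystem itself reads and writes files by operating on the raw partition, and user space can also access the raw disk directly; running dd against a device name, for example, accesses the raw partition directly. Reading and writing through a filesystem therefore produces both cached and buffers. As the figure shows, file contents accessed by name go into cached, while the filesystem metadata blocks read from the raw device go into buffers. For example, when reading a file (say on an ext4 filesystem), a hit in the file cache is returned from the VFS layer without ever reaching the ext4 layer.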


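Anonymous Pages

Pages with no backing file are anonymous pages, such as the heap, the stack, and data segments. Because they do not exist as files, they cannot be exchanged with a file on disk, but they can be swapped out to a dedicated swap partition or to a swap file.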

Shared Memory Part

Used for inter-process communication and for dynamically linked libraries.

Viewing shared memory information

    [root@ip-172-16-1-245 ~]# cat /proc/sysvipc/shm
    key shmid perms size cpid lpid nattch uid gid cuid cgid atime dtime ctime rss swap
    [root@ip-172-16-1-245 ~]# ipcs -lm

    ------ Shared Memory Limits --------
    max number of segments = 4096
    max seg size (kbytes) = 18014398509465599
    max total shared memory (kbytes) = 18014398509481980
    min seg size (bytes) = 1

Shared memory through the filesystem

Anything written to /dev/shm is kept in memory.

    [root@ip-172-16-1-245 ~]# df -h
    Filesystem      Size  Used Avail Use% Mounted on
    tmpfs           890M     0  890M   0% /dev/shm

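A shared memory segment can be attached to multiple processes by key. If a process does not detach and release the segment properly, however, the segment stays around indefinitely. Such segments can be found with ipcs -m and removed with ipcrm -m id.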
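The attach, detach, and release lifecycle can be tried out from Python. Note that this sketch swaps in a different mechanism: the standard library's `multiprocessing.shared_memory` creates POSIX shared memory (a file under /dev/shm on Linux), not a System V segment, so it will not show up in ipcs -m. The same leak applies, though: if `unlink()` is skipped, the segment outlives the process.

```python
from multiprocessing import shared_memory

# Create a named POSIX shared-memory segment (a tmpfs file under /dev/shm on Linux).
owner = shared_memory.SharedMemory(create=True, size=64)
owner.buf[:5] = b"hello"

# A second handle attaches by name, the way another process would.
peer = shared_memory.SharedMemory(name=owner.name)
received = bytes(peer.buf[:5])

# Detach both handles, then remove the segment so it does not outlive its users.
peer.close()
owner.close()
owner.unlink()
```

Both handles live in one process here only to keep the example short; in real use the name would be passed to a second process, which attaches with `SharedMemory(name=...)`.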

https://datacadamia.com/os/linux/shared_memory#:~:text=Shared%20memory%20%28SHM%29%20in%20Linux.%20The%20shared%20memory,an%20entry%20in%20%2Fetc%2Ffstab%20to%20mount%20%2Fdev%2Fshm%20.

https://datacadamia.com/os/memory/shared


HugePage

https://zhuanlan.zhihu.com/p/34659353

Inspecting Memory

/proc/meminfo

$ cat /proc/meminfo

$ egrep --color 'Mem|Cache|Swap' /proc/meminfo

References:

https://access.redhat.com/solutions/225303

https://www.cyberciti.biz/faq/linux-check-memory-usage/

•Interpreting /proc/meminfo and free output for Red Hat Enterprise Linux

•https://access.redhat.com/solutions/406773

    [root@ip-172-31-15-250 ~]# cat /proc/meminfo
    MemTotal: 1821396 kB
    MemFree: 210848 kB
    MemAvailable: 1239920 kB
    Buffers: 1720 kB
    Cached: 1137580 kB
    SwapCached: 0 kB
    Active: 709636 kB
    Inactive: 642320 kB
    Active(anon): 221788 kB
    Inactive(anon): 19008 kB
    Active(file): 487848 kB
    Inactive(file): 623312 kB
    Unevictable: 0 kB
    Mlocked: 0 kB
    SwapTotal: 0 kB
    SwapFree: 0 kB
    Dirty: 0 kB
    Writeback: 0 kB
    AnonPages: 212752 kB
    Mapped: 122012 kB
    Shmem: 28140 kB
    KReclaimable: 97276 kB
    Slab: 189084 kB
    SReclaimable: 97276 kB
    SUnreclaim: 91808 kB
    KernelStack: 2512 kB
    PageTables: 8980 kB
    NFS_Unstable: 0 kB
    Bounce: 0 kB
    WritebackTmp: 0 kB
    CommitLimit: 910696 kB
    Committed_AS: 677216 kB
    VmallocTotal: 34359738367 kB
    VmallocUsed: 0 kB
    VmallocChunk: 0 kB
    Percpu: 1168 kB
    HardwareCorrupted: 0 kB
    AnonHugePages: 45056 kB
    ShmemHugePages: 0 kB
    ShmemPmdMapped: 0 kB
    HugePages_Total: 0
    HugePages_Free: 0
    HugePages_Rsvd: 0
    HugePages_Surp: 0
    Hugepagesize: 2048 kB
    Hugetlb: 0 kB
    DirectMap4k: 135080 kB
    DirectMap2M: 1923072 kB
    DirectMap1G: 0 kB
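For scripting, the "name: value unit" layout is easy to load into a dictionary. A minimal sketch follows; `parse_meminfo` is a helper name invented for these notes, and the sample text is a few lines from the output above.

```python
def parse_meminfo(text: str) -> dict:
    """Parse /proc/meminfo-style text into {field: integer value}.

    Values keep the unit printed by the kernel (kB for most fields,
    a plain count for the HugePages_* counters).
    """
    info = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        if not sep or not rest.strip():
            continue  # skip anything that is not "name: value"
        info[key.strip()] = int(rest.split()[0])
    return info


sample = """\
MemTotal:        1821396 kB
MemFree:          210848 kB
MemAvailable:    1239920 kB
Buffers:            1720 kB
Cached:          1137580 kB
HugePages_Total:       0
"""
info = parse_meminfo(sample)
print(f"page cache share of RAM: {info['Cached'] / info['MemTotal']:.0%}")
```

On a live system the same function can be fed `open("/proc/meminfo").read()`.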

Field details

- Total : The total amount of RAM installed in my system. In this case 30Gi.

- Used : The total amount of RAM used. It is calculated as: Total – (free + buffers + cache)

- Free : The amount of unused or free memory for your apps.

- Shared : Amount of memory mostly used by the tmpfs file systems. In other words, Shmem in /proc/meminfo.

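- Shared memory is a way for processes to share data. Two processes map the same memory region as shared and can then exchange information simply by writing to it.

- It is also used for dynamically linked libraries.

- Implementations: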

- System V IPC

- BSD mmap

- Buff/cache : It is sum of buffers and cache. Buff is amount of memory used by Linux kernel for buffers. Cache is memory used by the page cache and slabs.

- A buffer is something that has yet to be “written” to disk.

- A cache is something that has been “read” from the disk and stored for later use.

- Available : This is an estimation of how much memory is available for starting new applications on Linux system, without swapping.
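The Used and Buff/cache definitions above can be checked against /proc/meminfo numbers. This is a sketch under stated assumptions: "cache" is taken as Cached + SReclaimable (page cache plus reclaimable slab), and Used follows the formula given above. Some versions of free compute Used differently, so treat the result as an approximation. The function name `free_style_summary` is invented for these notes.

```python
def free_style_summary(meminfo_kb: dict) -> dict:
    """Derive free(1)-like columns (in kB) from /proc/meminfo values.

    Assumptions: cache = Cached + SReclaimable, and
    used = total - (free + buffers + cache), as in the formula above.
    """
    cache = meminfo_kb["Cached"] + meminfo_kb.get("SReclaimable", 0)
    buffers = meminfo_kb["Buffers"]
    used = meminfo_kb["MemTotal"] - (meminfo_kb["MemFree"] + buffers + cache)
    return {
        "total": meminfo_kb["MemTotal"],
        "used": used,
        "free": meminfo_kb["MemFree"],
        "buff/cache": buffers + cache,
    }


# Values taken from the /proc/meminfo output shown earlier.
summary = free_style_summary({
    "MemTotal": 1821396,
    "MemFree": 210848,
    "Buffers": 1720,
    "Cached": 1137580,
    "SReclaimable": 97276,
})
print(summary)
```

With these numbers most of the "missing" memory turns out to be buff/cache, which the kernel can give back under pressure.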

https://cloud.tencent.com/developer/article/1375108

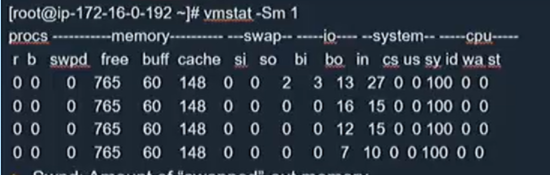

vmstat

    vmstat 5
    procs -----------memory---------- ---swap--- -----io---- -system-- ----cpu----
     r  b   swpd   free   buff  cache    si    so    bi    bo   in   cs us sy id wa
     3  0 833704  54824  25196 328672    10     0   343    18  510 1382 96  4  0  0
     6  0 833704  54556  25092 324584     0     0   333    22  504 1180 93  7  0  0
     4  0 833704  51516  25112 320856    33     0   315    19  508 1234 95  5  0  0
     3  0 833704  54836  24984 314404     6     0   223    27  498 1191 95  5  0  0
     3  0 833704  53072  24944 307844     4     0   216    22  518 1375 96  4  0  0
     5  0 833704  53928  24888 304076     6     0   262    18  548 1665 94  6  0  0
     3  4 843964  50192    184  58064    16  2416    16  2464  570 1451 78 22  0  0
     3  7 908244  48756    224  47760   118 13645   149 13664  730 1245 76 16  0  8
     3  2 922064  54280    340  49228  1470  2838  1817  2865  711 1481 88 12  0  0
     4  2 932644  54068    424  52204  1972  2195  2596  2211  678 1388 90 10  0  0
     2  3 944012  56304    492  52292  2986  2591  3063  2615  735 1562 89 11  0  0
     2  4 957304  54604    572  51964  4042  3414  4096  3438  852 1808 88 12  0  0
    ...

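Field details

r: the number of runnable processes

b: the number of blocked processes (D state)

Memory Pressure

What happens under memory pressure

In Linux, this means the kernel scans and reclaims memory: it writes out dirty data, tries to drop previously cached data, or tries to swap memory out.

Checking for memory pressure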

/proc/zoneinfo

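low: when free memory gradually falls to this watermark, the kswapd thread is woken up to reclaim memory.

min: if free memory falls to this watermark, memory is considered critically short. The current process is blocked, and the kernel reclaims memory directly in that process's context (direct reclaim).

high: as reclaim proceeds and free memory rises to this watermark, reclaim stops.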

pages free 1984

min 98

low 122

high 146
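The three watermarks can be read as a small decision rule. The sketch below only restates the descriptions above in code: `reclaim_state` and its return labels are invented for these notes, and the exact boundary comparisons in the kernel may differ.

```python
def reclaim_state(free: int, wmark_min: int, wmark_low: int, wmark_high: int) -> str:
    """Classify a zone's free page count against its min/low/high watermarks."""
    if free <= wmark_min:
        return "direct_reclaim"       # the allocating task blocks and reclaims itself
    if free <= wmark_low:
        return "kswapd_wakeup"        # background reclaim is triggered
    if free < wmark_high:
        return "kswapd_may_continue"  # reclaim already in progress keeps going
    return "ok"                       # at or above high: reclaim stops


# The zone shown above: 1984 free pages against min 98, low 122, high 146.
print(reclaim_state(1984, 98, 122, 146))
```

For the sample zone the free page count is far above the high watermark, so this zone is under no pressure.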

Sources of memory pressure

/sys/kernel/debug/tracing/events/kmem/mm_page_alloc/

sar

    [root@ip-172-16-1-245 ~]# sar -B
    Linux 4.18.0-348.2.1.el8_5.x86_64 (ip-172-16-1-245.cn-north-1.compute.internal) 12/21/2021 _x86_64_ (2 CPU)

    12:00:19 AM pgpgin/s pgpgout/s fault/s majflt/s pgfree/s pgscank/s pgscand/s pgsteal/s %vmeff
    12:10:19 AM 3.51 2.34 813.44 0.03 1208.32 0.00 0.00 0.00 0.00

pgscank/s and pgscand/s represent memory-reclaim scanning (pages scanned per second by kswapd and by direct reclaim, respectively).

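Freeing Memory

Freeing the page cache and the inode and dentry caches

To free pagecache:

    # echo 1 > /proc/sys/vm/drop_caches

To free dentries and inodes:

    # echo 2 > /proc/sys/vm/drop_caches

To free pagecache, dentries and inodes:

    # echo 3 > /proc/sys/vm/drop_caches

This is rarely useful, because any data that programs still need will simply be read back into memory.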
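If this does need to be scripted (for example, to get cold-cache numbers in a benchmark), the same write can be done from Python. A small sketch: `drop_caches` is a helper name invented here, the path is a parameter so it can be tested against an ordinary file, and on a real system the write requires root.

```python
import os

DROP_CACHES = "/proc/sys/vm/drop_caches"


def drop_caches(level: int, path: str = DROP_CACHES, sync_first: bool = True) -> None:
    """Write 1, 2 or 3 to drop_caches (needs root on a real system)."""
    if level not in (1, 2, 3):
        raise ValueError("level must be 1 (pagecache), 2 (dentries/inodes) or 3 (both)")
    if sync_first:
        os.sync()  # write dirty pages out first; dirty pages cannot be dropped
    with open(path, "w") as f:
        f.write(f"{level}\n")
```

Calling sync first matters because only clean cache can be dropped; without it, dirty pages stay in memory and the result looks smaller than expected.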

min_free_kbytes

/proc/sys/vm/min_free_kbytes

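The minimum number of kilobytes kept free across the system. This value is used to compute a watermark for each lowmem zone, and each zone then reserves a number of free pages in proportion to its size.

Applications cannot use this reserved memory; it is kept for the kernel. Raising the value reduces the memory available to applications and makes the kernel spend a lot of CPU time reclaiming cache.

The three watermark values in /proc/zoneinfo are derived from this setting.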
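As a rough sketch of that derivation, based on my reading of the kernel's per-zone watermark setup: each zone gets a share of min_free_kbytes in proportion to its size, and low and high sit one and two "gaps" above min, where the gap is min/4. The assumption, stated loudly, is that the vm.watermark_scale_factor term is smaller than min/4 and can be ignored. The function names are invented for these notes.

```python
def zone_min_pages(min_free_kbytes: int, zone_pages: int, total_pages: int,
                   page_kb: int = 4) -> int:
    """A zone's share of min_free_kbytes, in pages (assumes 4 KiB pages)."""
    return (min_free_kbytes // page_kb) * zone_pages // total_pages


def zone_watermarks(min_pages: int) -> tuple:
    """Derive (min, low, high) in pages from a zone's min watermark.

    Assumption: the gap between watermarks is min/4, i.e. the
    watermark_scale_factor term is the smaller one and is ignored here.
    """
    gap = min_pages // 4
    return min_pages, min_pages + gap, min_pages + 2 * gap


# The zone shown earlier had min 98, low 122, high 146.
print(zone_watermarks(98))
```

The /proc/zoneinfo sample earlier in these notes (min 98, low 122, high 146) is consistent with this rule, which suggests the assumption holds for that zone.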

https://www.dazhuanlan.com/sanword/topics/989350

https://www.jianshu.com/p/20a627abe05e

https://blog.csdn.net/reliveIT/article/details/112578216

Dirty Writeout

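When free memory falls below min_free_kbytes, the system tries to free page cache. While page cache is being released, the bo value in vmstat rises. In effect, this is the same as using sync to write out all dirty data.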


Memory Reclaim Algorithm

Swap

Swap

Only anonymous pages and (unmapped) shared memory can be placed in swap; nothing else can.

https://access.redhat.com/solutions/33375

swap cache

Memory pages are placed into swap.

https://www.halolinux.us/kernel-architecture/the-swap-cache.html

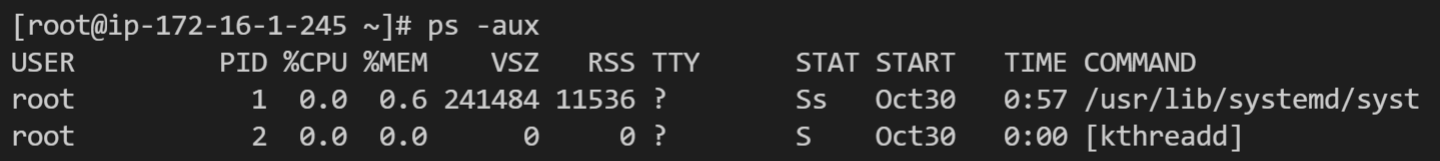

Entries shown in [] are all shared memory.


Swapping

Focus on the si and so columns, which represent swap in and swap out.

    vmstat 5
    procs -----------memory---------- ---swap--- -----io---- -system-- ----cpu----
     r  b   swpd   free   buff  cache    si    so    bi    bo   in   cs us sy id wa
     3  0 833704  54824  25196 328672    10     0   343    18  510 1382 96  4  0  0
     6  0 833704  54556  25092 324584     0     0   333    22  504 1180 93  7  0  0
     4  0 833704  51516  25112 320856    33     0   315    19  508 1234 95  5  0  0
     3  0 833704  54836  24984 314404     6     0   223    27  498 1191 95  5  0  0
     3  0 833704  53072  24944 307844     4     0   216    22  518 1375 96  4  0  0
     5  0 833704  53928  24888 304076     6     0   262    18  548 1665 94  6  0  0
     3  4 843964  50192    184  58064    16  2416    16  2464  570 1451 78 22  0  0
     3  7 908244  48756    224  47760   118 13645   149 13664  730 1245 76 16  0  8
     3  2 922064  54280    340  49228  1470  2838  1817  2865  711 1481 88 12  0  0
     4  2 932644  54068    424  52204  1972  2195  2596  2211  678 1388 90 10  0  0
     2  3 944012  56304    492  52292  2986  2591  3063  2615  735 1562 89 11  0  0
     2  4 957304  54604    572  51964  4042  3414  4096  3438  852 1808 88 12  0  0
    ...
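Picking out the intervals with swap activity can be automated. A minimal sketch, assuming the 16-column layout of the sample above (some vmstat versions print extra columns such as st, which this does not handle); the names `parse_vmstat_row` and `is_swapping` are invented for these notes.

```python
VMSTAT_COLUMNS = "r b swpd free buff cache si so bi bo in cs us sy id wa".split()


def parse_vmstat_row(line: str) -> dict:
    """Map one vmstat data row onto the 16 column names of the header above."""
    values = [int(v) for v in line.split()]
    if len(values) != len(VMSTAT_COLUMNS):
        raise ValueError(f"expected {len(VMSTAT_COLUMNS)} columns, got {len(values)}")
    return dict(zip(VMSTAT_COLUMNS, values))


def is_swapping(row: dict, threshold: int = 0) -> bool:
    """True if the interval shows swap-in or swap-out above the threshold."""
    return row["si"] > threshold or row["so"] > threshold


# Two rows from the sample: one quiet interval, one with heavy swap-out.
quiet = parse_vmstat_row("6 0 833704 54556 25092 324584 0 0 333 22 504 1180 93 7 0 0")
busy = parse_vmstat_row("3 7 908244 48756 224 47760 118 13645 149 13664 730 1245 76 16 0 8")
print(is_swapping(quiet), is_swapping(busy))
```

In the sample, the rows where so jumps are also the rows where buff and cache collapse and b (blocked processes) rises, which is the typical signature of a system running out of reclaimable cache.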

References

https://linux-mm.org/LinuxMMDocumentation

https://www.linuxatemyram.com/

•What is difference between cache and buffer ?

•https://access.redhat.com/solutions/636263

•What is cache in “free -m” output and why is memory utilization high for cache?

•https://access.redhat.com/solutions/67610

•Dirty page

•https://access.redhat.com/articles/45002