https://github.com/kestra-io/kestra

Kestra is an infinitely scalable orchestration and scheduling platform for creating, running, scheduling, and monitoring millions of complex pipelines.

What is Kestra?

Kestra is an infinitely scalable orchestration and scheduling platform for creating, running, scheduling, and monitoring millions of complex pipelines.

- 🔀 Any kind of workflow: Workflows can start simple and progress to more complex systems with branching, parallel and dynamic tasks, and flow dependencies.

- 🎓 Easy to learn: Flows are defined in YAML, a simple, declarative language; you don't need to be a developer to create a new flow.

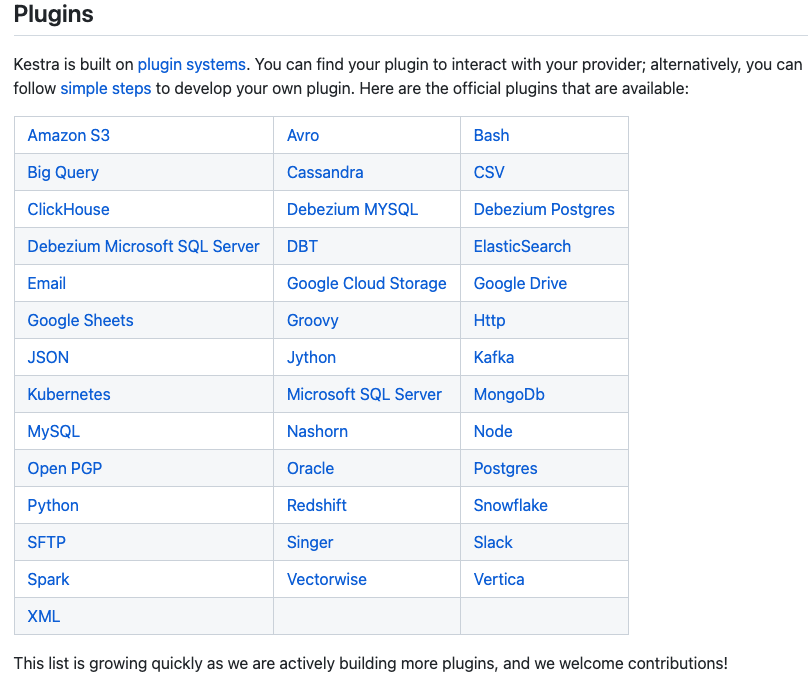

- 🔣 Easy to extend: Plugins are everywhere in Kestra. Many are available from the Kestra core team, and you can easily create your own.

- 🆙 Any trigger: Kestra is event-based at heart; you can trigger an execution from the API, a schedule, a detection, or an event.

- 💻 A rich user interface: The built-in web interface allows you to create, run, and monitor all your flows—no need to deploy your flows, just edit them.

- ⏩ Enjoy infinite scalability: Kestra is built around top cloud native technologies—scale to millions of executions stress-free.
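
To make the "defined in YAML" point concrete, here is a minimal sketch of what a flow might look like. The `id` and `namespace` values are hypothetical placeholders. The task `type` identifier is an assumption: it follows the naming used in recent Kestra releases and may differ in the version you run, so check the plugin documentation before relying on it.

```yaml
# Hypothetical flow: the id and namespace are illustrative placeholders.
id: hello-world
namespace: company.team

tasks:
  # Assumed type identifier for the core logging task;
  # older releases use a different package name.
  - id: say-hello
    type: io.kestra.plugin.core.log.Log
    message: Hello from Kestra!
```

A flow is a list of tasks, each with its own `id` and a plugin `type`. The remaining keys are properties of that plugin.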

Principles

- Simple: Kestra workflows are defined in YAML. There is no code, just a simple declarative syntax that can express even complex workflows.

- Extensible: The entire foundation of Kestra is built upon plugins. Find an existing one or build your own to fit your business needs.

- Real time: Kestra is designed with real time in mind. Simply create a flow, run it, and see all the logs in real time.

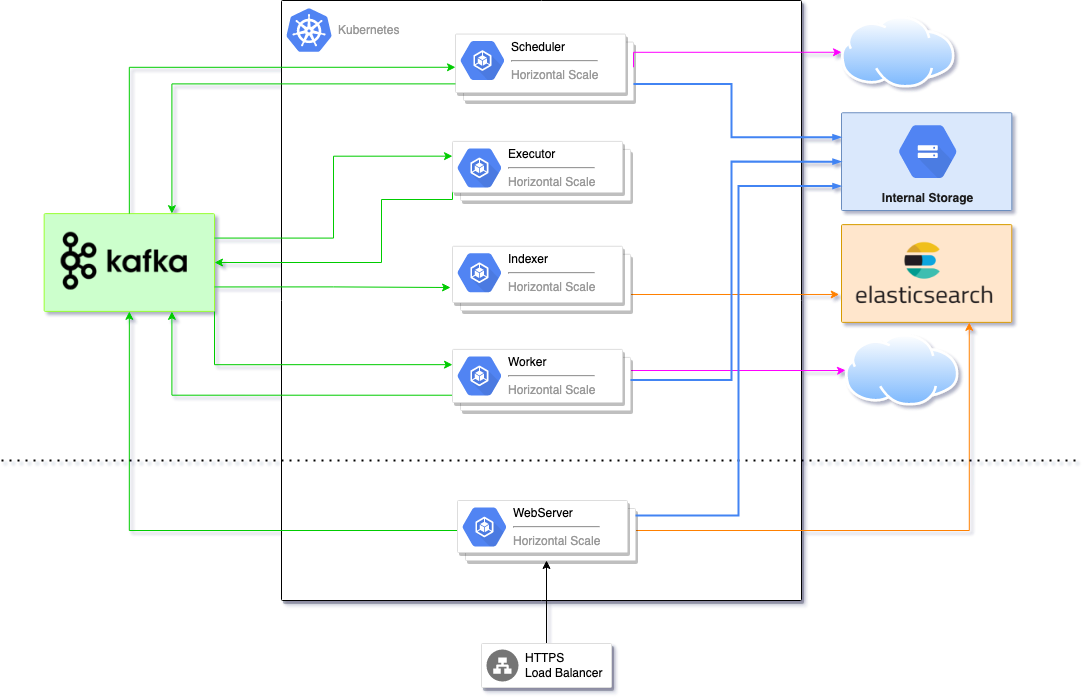

- Scalable: Kestra users enjoy its almost infinite scalability. It is built with top technologies like Kafka & Elasticsearch, and can scale to millions of executions without any difficulty.

- Cloud Native: Built with the cloud in mind, Kestra uses top cloud native technology and allows you to deploy everywhere, whether in cloud or on-premise.

- Open source: Kestra is released under the Apache 2 license. Contribute to the core or to the plugins as you can.

Usages

Kestra can be used as:

- Data orchestrator: Handle complex workflows and move large datasets, extracting, transforming, and loading them in the manner of your choice (ETL or ELT).

- Distributed crontab: Schedule work on multiple workloads and monitor each and every process.

- Event-driven workflow: React to external events such as API calls to get things done instantly.

- …
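
As an illustration of the distributed crontab usage, the sketch below attaches a cron-style schedule to a flow. The flow `id`, `namespace`, and task are hypothetical. Both `type` identifiers and the `cron` property are assumptions based on recent Kestra releases; names may differ in other versions.

```yaml
# Hypothetical scheduled flow: all ids and the namespace are placeholders.
id: nightly-report
namespace: company.team

tasks:
  # Assumed type identifier for the core logging task.
  - id: log-start
    type: io.kestra.plugin.core.log.Log
    message: Nightly run started

triggers:
  # Assumed type identifier for the schedule trigger.
  # The cron expression below means "every day at 02:00".
  - id: every-night
    type: io.kestra.plugin.core.trigger.Schedule
    cron: "0 2 * * *"
```

Declaring the schedule next to the tasks keeps the definition of when a workflow runs in the same file as what it does.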

Architecture