启动 Seata Server

环境准备

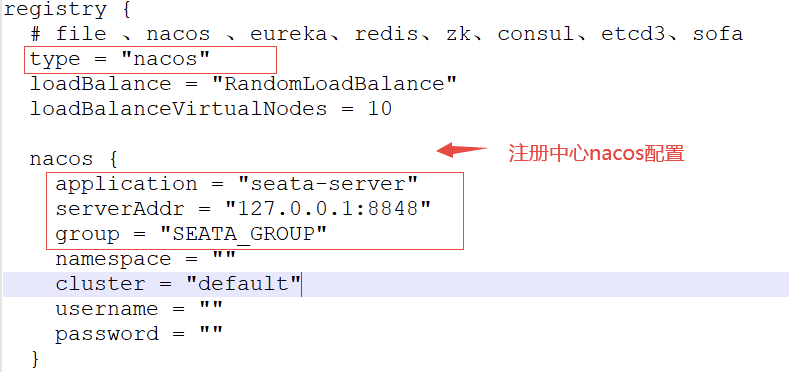

指定 nacos 作为配置中心和注册中心

修改 registry.conf 文件:

注意:客户端配置registry.conf使用nacos时也要注意group要和seata server中的group一致,默认group是”DEFAULT_GROUP”

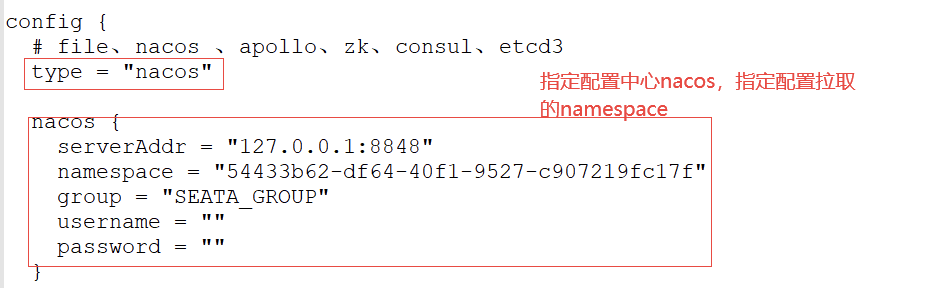

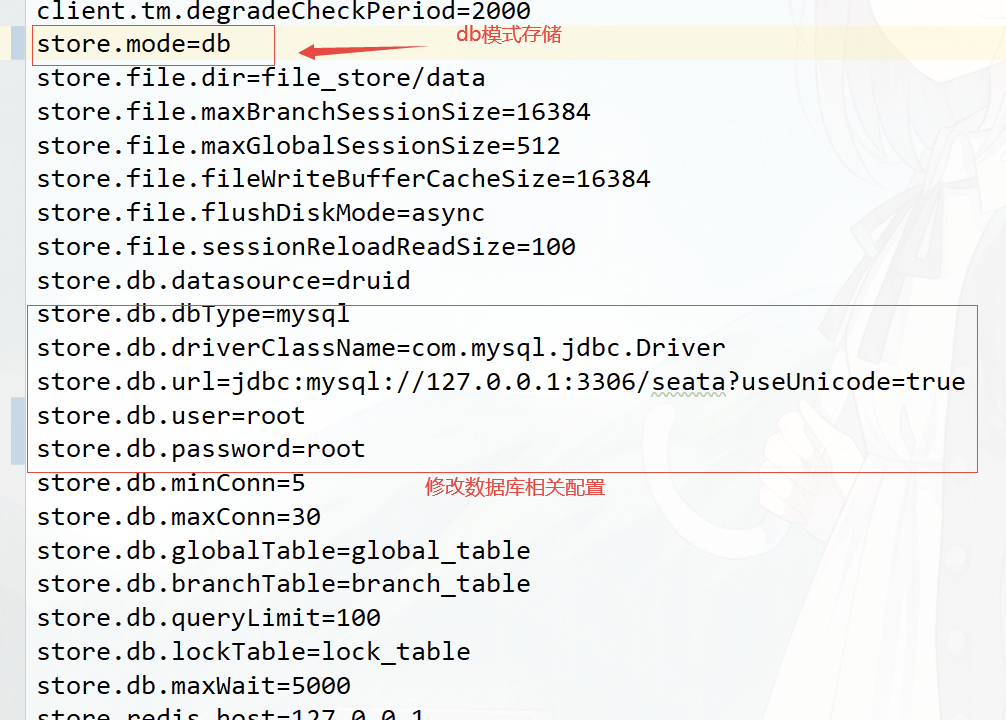

同步 seata server 的配置到 nacos

获取/seata/script/config-center/config.txt,修改配置信息:

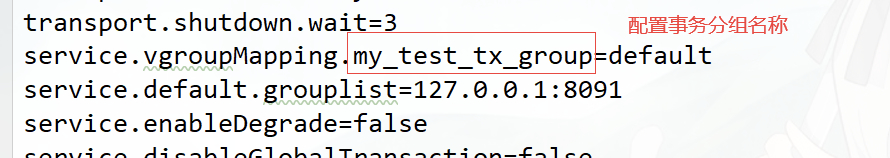

配置事务分组, 要与客户端配置的事务分组一致

(客户端properties配置:spring.cloud.alibaba.seata.tx‐service‐group=my_test_tx_group)

配置参数同步到 nacos:

sh ${SEATAPATH}/script/config-center/nacos/nacos-config.sh -h localhost -p 8848 -g SEATA_GROUP -t 5a3c7d6c-f497-4d68-a71a-2e5e3340b3ca

参数说明: -h: host,默认值 localhost -p: port,默认值 8848 -g: 配置分组,默认值为 ‘SEATA_GROUP’ -t: 租户信息,对应 Nacos 的命名空间ID字段, 默认值为空 ‘’

启动 Seata Server

bin/seata-server.sh

在注册中心中可以查看到seata-server注册成功:

Spring Cloud 整合 Seata

环境准备:

seata: v1.4.0

spring cloud&spring cloud alibaba:

<spring-cloud.version>Greenwich.SR3</spring-cloud.version><spring-cloud-alibaba.version>2.1.1.RELEASE</spring-cloud-alibaba.version>

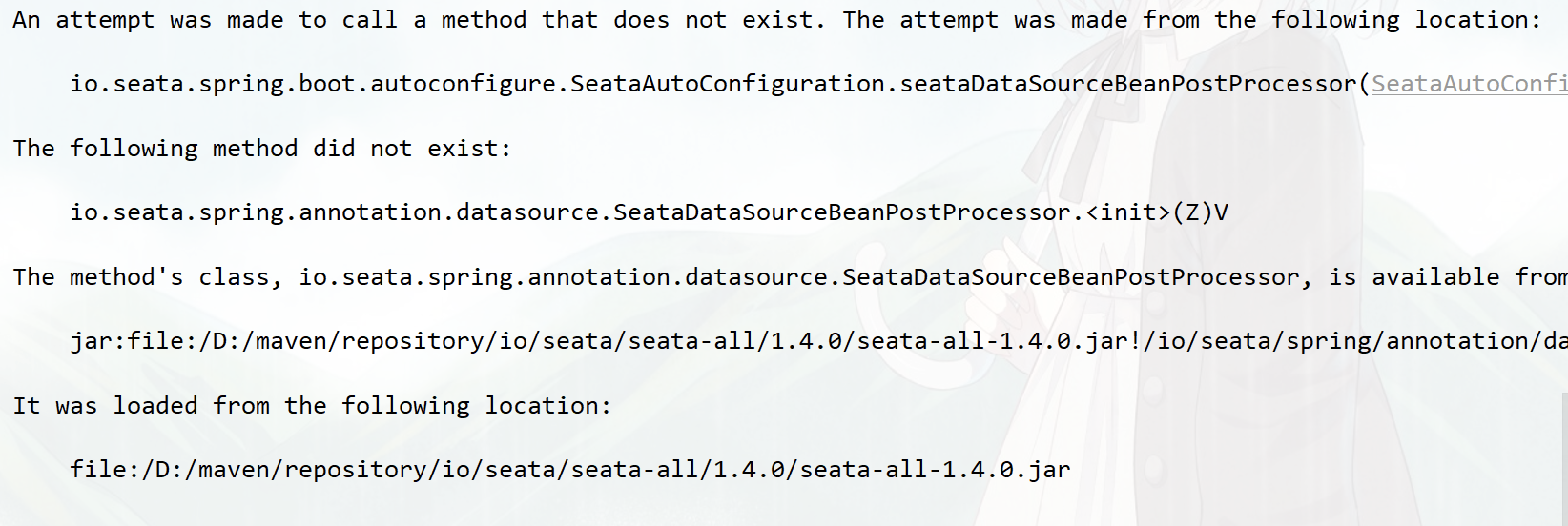

注意版本选择问题:

spring cloud alibaba 2.1.2 及其以上版本使用seata1.4.0会出现如下异常 (支持seata 1.3.0)

导入依赖

<!-- seata--><dependency><groupId>com.alibaba.cloud</groupId><artifactId>spring-cloud-starter-alibaba-seata</artifactId><exclusions><exclusion><groupId>io.seata</groupId><artifactId>seata-all</artifactId></exclusion></exclusions></dependency><dependency><groupId>io.seata</groupId><artifactId>seata-all</artifactId><version>1.4.0</version></dependency><!--nacos 注册中心--><dependency><groupId>com.alibaba.cloud</groupId><artifactId>spring-cloud-starter-alibaba-nacos-discovery</artifactId></dependency><dependency><groupId>org.springframework.cloud</groupId><artifactId>spring-cloud-starter-openfeign</artifactId></dependency><dependency><groupId>com.alibaba</groupId><artifactId>druid-spring-boot-starter</artifactId><version>1.1.21</version></dependency><dependency><groupId>mysql</groupId><artifactId>mysql-connector-java</artifactId><scope>runtime</scope><version>8.0.16</version></dependency><dependency><groupId>org.mybatis.spring.boot</groupId><artifactId>mybatis-spring-boot-starter</artifactId><version>2.1.1</version></dependency>

微服务对应数据库中添加undo_log表

CREATE TABLE `undo_log` (`id` bigint(20) NOT NULL AUTO_INCREMENT,`branch_id` bigint(20) NOT NULL,`xid` varchar(100) NOT NULL,`context` varchar(128) NOT NULL,`rollback_info` longblob NOT NULL,`log_status` int(11) NOT NULL,`log_created` datetime NOT NULL,`log_modified` datetime NOT NULL,PRIMARY KEY (`id`),UNIQUE KEY `ux_undo_log` (`xid`,`branch_id`)) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

微服务需要使用seata DataSourceProxy代理自己的数据源

/**

* 需要用到分布式事务的微服务都需要使用seata DataSourceProxy代理自己的数据源

*/

@Configuration

@MapperScan("com.tuling.datasource.mapper")

public class MybatisConfig {

/**

* 从配置文件获取属性构造datasource,注意前缀,这里用的是druid,根据自己情况配置,

* 原生datasource前缀取"spring.datasource"

*

* @return

*/

@Bean

@ConfigurationProperties(prefix = "spring.datasource")

public DataSource druidDataSource() {

DruidDataSource druidDataSource = new DruidDataSource();

return druidDataSource;

}

/**

* 构造datasource代理对象,替换原来的datasource

* @param druidDataSource

* @return

*/

@Primary

@Bean("dataSource")

public DataSourceProxy dataSourceProxy(DataSource druidDataSource) {

return new DataSourceProxy(druidDataSource);

}

@Bean(name = "sqlSessionFactory")

public SqlSessionFactory sqlSessionFactoryBean(DataSourceProxy dataSourceProxy) throws Exception {

SqlSessionFactoryBean factoryBean = new SqlSessionFactoryBean();

//设置代理数据源

factoryBean.setDataSource(dataSourceProxy);

ResourcePatternResolver resolver = new PathMatchingResourcePatternResolver();

factoryBean.setMapperLocations(resolver.getResources("classpath*:mybatis/**/*-mapper.xml"));

org.apache.ibatis.session.Configuration configuration=new org.apache.ibatis.session.Configuration();

//使用jdbc的getGeneratedKeys获取数据库自增主键值

configuration.setUseGeneratedKeys(true);

//使用列别名替换列名

configuration.setUseColumnLabel(true);

//自动使用驼峰命名属性映射字段,如userId ---> user_id

configuration.setMapUnderscoreToCamelCase(true);

factoryBean.setConfiguration(configuration);

return factoryBean.getObject();

}

}

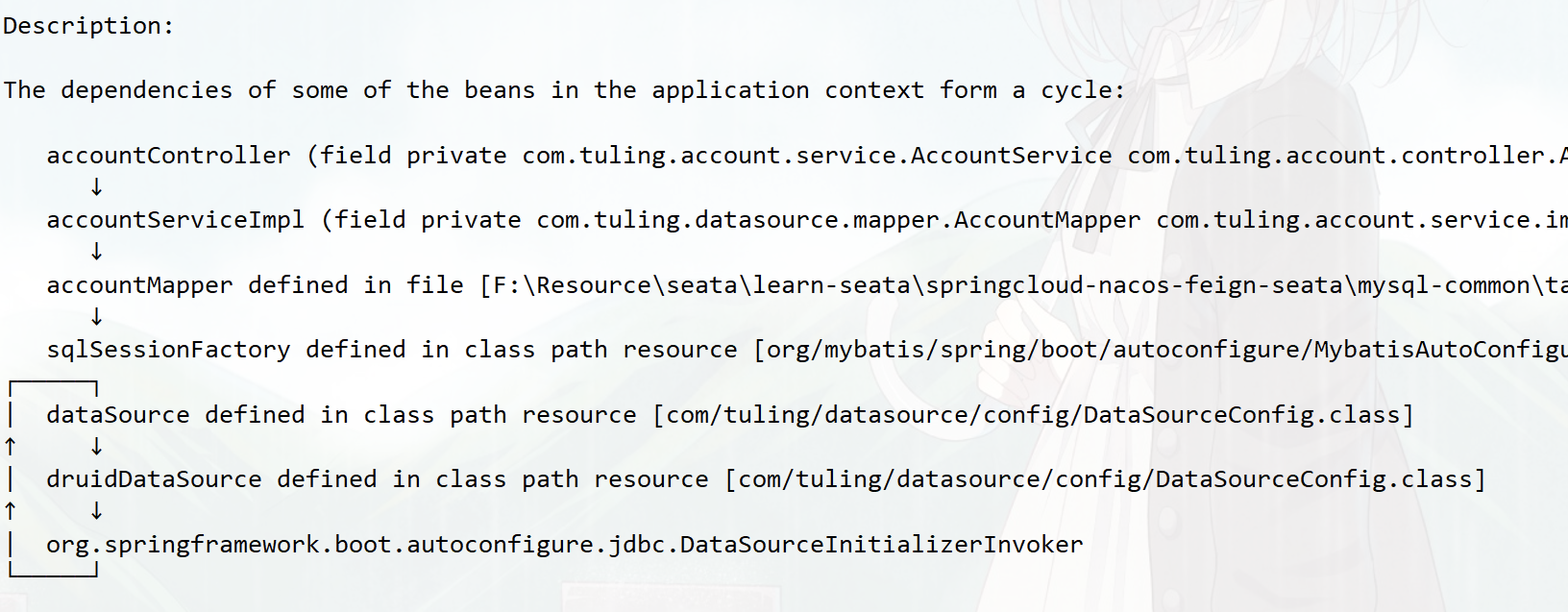

注意: 启动类上需要排除DataSourceAutoConfiguration,否则会出现循环依赖的问题 启动类排除DataSourceAutoConfiguration.class

启动类排除DataSourceAutoConfiguration.class

@SpringBootApplication(scanBasePackages = "com.tuling",exclude = DataSourceAutoConfiguration.class)

public class AccountServiceApplication {

public static void main(String[] args) {

SpringApplication.run(AccountServiceApplication.class, args);

}

}

添加 Seata 配置

将registry.conf文件拷贝到resources目录下,指定注册中心和配置中心都是nacos

registry {

# file 、nacos 、eureka、redis、zk、consul、etcd3、sofa

type = "nacos"

nacos {

serverAddr = "192.168.65.232:8848"

namespace = ""

cluster = "default"

group = "SEATA_GROUP"

}

}

config {

# file、nacos 、apollo、zk、consul、etcd3、springCloudConfig

type = "nacos"

nacos {

serverAddr = "192.168.65.232:8848"

namespace = "29ccf18e-e559-4a01-b5d4-61bad4a89ffd"

group = "SEATA_GROUP"

}

}

在yml中指定事务分组(和配置中心的service.vgroup_mapping 配置一一对应)

spring:

application:

name: account-service

cloud:

nacos:

discovery:

server-addr: 127.0.0.1:8848

alibaba:

seata:

tx-service-group:

my_test_tx_group # seata 服务事务分组

spring cloud alibaba 2.1.4 之后支持yml中配置seata属性,可以用来替换registry.conf文件

配置支持实现在seata-spring-boot-starter.jar中,也可以引入依赖

<dependency>

<groupId>io.seata</groupId>

<artifactId>seata-spring-boot-starter</artifactId>

<version>1.4.0</version>

</dependency>

在yml中配置:

seata:

# seata 服务分组,要与服务端nacos-config.txt中service.vgroup_mapping的后缀对应

tx-service-group: my_test_tx_group

registry:

# 指定nacos作为注册中心

type: nacos

nacos:

server-addr: 127.0.0.1:8848

namespace: ""

group: SEATA_GROUP

config:

# 指定nacos作为配置中心

type: nacos

nacos:

server-addr: 127.0.0.1:8848

namespace: "54433b62-df64-40f1-9527-c907219fc17f"

group: SEATA_GROUP

在事务发起者中添加@GlobalTransactional注解

@Override

//@Transactional

@GlobalTransactional(name="createOrder")

public Order saveOrder(OrderVo orderVo){

log.info("=============用户下单=================");

log.info("当前 XID: {}", RootContext.getXID());

// 保存订单

Order order = new Order();

order.setUserId(orderVo.getUserId());

order.setCommodityCode(orderVo.getCommodityCode());

order.setCount(orderVo.getCount());

order.setMoney(orderVo.getMoney());

order.setStatus(OrderStatus.INIT.getValue());

Integer saveOrderRecord = orderMapper.insert(order);

log.info("保存订单{}", saveOrderRecord > 0 ? "成功" : "失败");

//扣减库存

storageFeignService.deduct(orderVo.getCommodityCode(),orderVo.getCount());

//扣减余额

accountFeignService.debit(orderVo.getUserId(),orderVo.getMoney());

//更新订单

Integer updateOrderRecord = orderMapper.updateOrderStatus(order.getId(),OrderStatus.SUCCESS.getValue());

log.info("更新订单id:{} {}", order.getId(), updateOrderRecord > 0 ? "成功" : "失败");

return order;

}

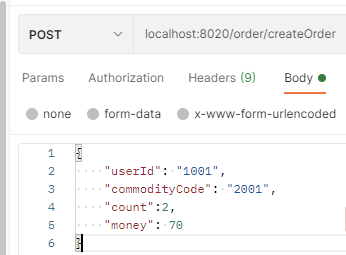

测试分布式事务是否生效

用户下单账户余额不足,库存是否回滚