对于理解swarm的网络来讲,个人认为最重要的两个点:

- 第一是外部如何访问部署运行在swarm集群内的服务,可以称之为

入方向流量,在swarm里我们通过ingress来解决 - 第二是部署在swarm集群里的服务,如何对外进行访问,这部分又分为两块:

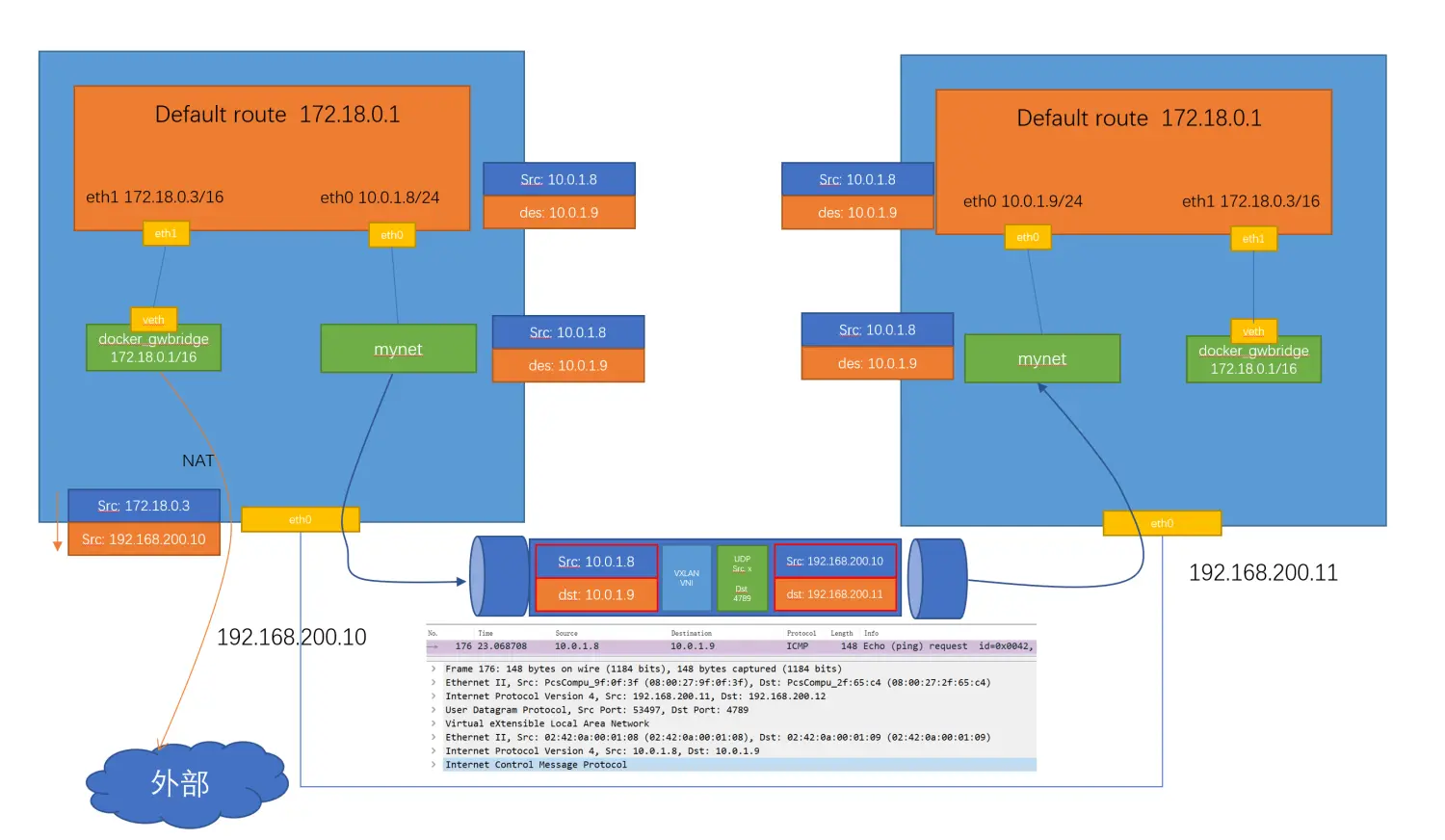

1. `东西向流量` ,也就是不同swarm节点上的容器之间如何通信,swarm通过 `overlay` 网络来解决;1. `南北向流量` ,也就是swarm集群里的容器如何对外访问,比如互联网,这个是 `Linux bridge + iptables NAT` 来解决的

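Create the overlay network

This network has swarm scope and is available to every swarm node; a worker only instantiates it once a task that uses it is scheduled there.

    vagrant@swarm-manager:~$ docker network create -d overlay mynet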

Create the service

Create a service attached to this overlay network, with name `test` and 2 replicas:

    vagrant@swarm-manager:~$ docker service create --network mynet --name test --replicas 2 busybox ping 8.8.8.8
    vagrant@swarm-manager:~$ docker service ps test
    ID             NAME     IMAGE            NODE            DESIRED STATE   CURRENT STATE            ERROR   PORTS
    yf5uqm1kzx6d   test.1   busybox:latest   swarm-worker1   Running         Running 18 seconds ago
    3tmp4cdqfs8a   test.2   busybox:latest   swarm-worker2   Running         Running 18 seconds ago

The two containers were created on the worker1 and worker2 nodes respectively.

Inspect the network

On worker1 and worker2, check how each container is connected to the network.

This container has two interfaces, eth0 and eth1: eth0 is attached to the mynet network, and eth1 is attached to the docker_gwbridge network.

    vagrant@swarm-worker1:~$ docker container ls
    CONTAINER ID   IMAGE            COMMAND          CREATED      STATUS      PORTS   NAMES
    cac4be28ced7   busybox:latest   "ping 8.8.8.8"   2 days ago   Up 2 days           test.1.yf5uqm1kzx6dbt7n26e4akhsu
    vagrant@swarm-worker1:~$ docker container exec -it cac sh
    / # ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    24: eth0@if25: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue
        link/ether 02:42:0a:00:01:08 brd ff:ff:ff:ff:ff:ff
        inet 10.0.1.8/24 brd 10.0.1.255 scope global eth0
           valid_lft forever preferred_lft forever
    26: eth1@if27: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
        link/ether 02:42:ac:12:00:03 brd ff:ff:ff:ff:ff:ff
        inet 172.18.0.3/16 brd 172.18.255.255 scope global eth1
           valid_lft forever preferred_lft forever
    / #
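In the `ip a` output, eth0 (the overlay side) reports `mtu 1450` while eth1 reports `mtu 1500`. A plausible reading is that the 50-byte difference is VXLAN encapsulation overhead. The sketch below simply adds up the standard header sizes; it assumes an IPv4 underlay with a 1500-byte MTU, no IP options and no VLAN tag, and the function name `overlay_mtu` is made up for illustration.

```python
# Header sizes in bytes. Assumption: IPv4 underlay, no IP options, no VLAN tag.
OUTER_IPV4 = 20       # outer IP header added by the VXLAN tunnel endpoint
OUTER_UDP = 8         # outer UDP header (destination port 4789)
VXLAN_HEADER = 8      # VXLAN header carrying the network identifier
INNER_ETHERNET = 14   # the container's own Ethernet header, now carried as payload


def overlay_mtu(underlay_mtu: int) -> int:
    """Return the largest inner IP packet that still fits in one underlay packet."""
    overhead = OUTER_IPV4 + OUTER_UDP + VXLAN_HEADER + INNER_ETHERNET
    return underlay_mtu - overhead


print(overlay_mtu(1500))  # 1450
```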

From inside this container, the IP 10.0.1.9 of the container on worker2 can be pinged directly.

    vagrant@swarm-worker1:~$ docker network ls
    NETWORK ID     NAME              DRIVER    SCOPE
    a631a4e0b63c   bridge            bridge    local
    56945463a582   docker_gwbridge   bridge    local
    9bdfcae84f94   host              host      local
    14fy2l7a4mci   ingress           overlay   swarm
    lpirdge00y3j   mynet             overlay   swarm
    c1837f1284f8   none              null      local

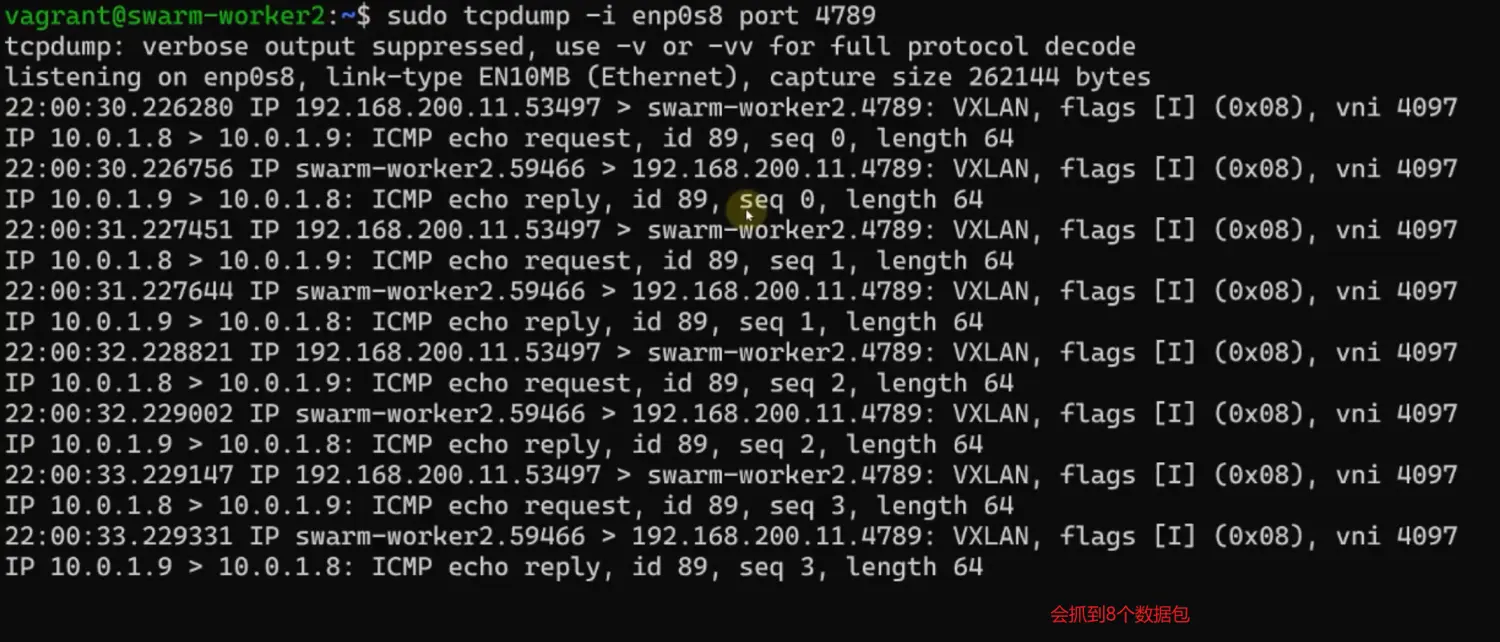

Packet capture

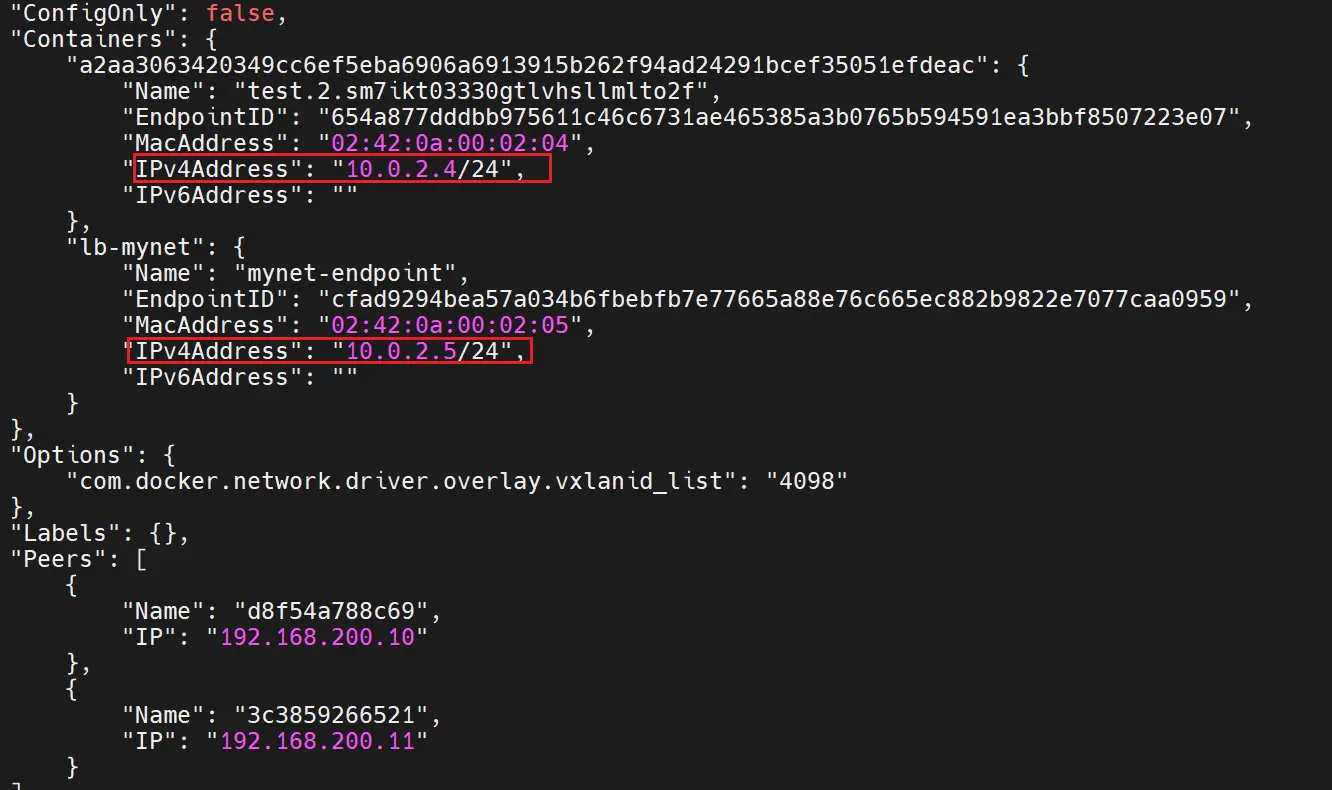

Inspect the newly created overlay network mynet; the container IPs in it are 10.0.2.4 and 10.0.2.5:

    docker network inspect mynet

Enter the container on worker1 and check its network information:

    [vagrant@swarm-worker1 ~]$ docker exec -it 6f sh
    / # ip route
    default via 172.18.0.1 dev eth1
    10.0.2.0/24 dev eth0 scope link src 10.0.2.3
    172.18.0.0/16 dev eth1 scope link src 172.18.0.3
    / #
    / # ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    169: eth0@if170: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue
        link/ether 02:42:0a:00:02:03 brd ff:ff:ff:ff:ff:ff
        inet 10.0.2.3/24 brd 10.0.2.255 scope global eth0
           valid_lft forever preferred_lft forever
    171: eth1@if172: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
        link/ether 02:42:ac:12:00:03 brd ff:ff:ff:ff:ff:ff
        inet 172.18.0.3/16 brd 172.18.255.255 scope global eth1
           valid_lft forever preferred_lft forever
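The routing table of the worker1 container is what separates east-west from north-south traffic: destinations inside 10.0.2.0/24 leave through eth0 (the overlay), and everything else follows the default route through eth1 (docker_gwbridge). As a rough illustration, the sketch below replays a longest-prefix-match lookup over those three routes. The `ROUTES` list is copied from the `ip route` output; the helper name `pick_interface` is hypothetical, and the real lookup is of course done by the kernel.

```python
import ipaddress

# Routes copied from the `ip route` output of the worker1 container.
ROUTES = [
    ("0.0.0.0/0", "eth1"),      # default via 172.18.0.1 (docker_gwbridge)
    ("10.0.2.0/24", "eth0"),    # overlay network mynet
    ("172.18.0.0/16", "eth1"),  # docker_gwbridge subnet
]


def pick_interface(destination: str) -> str:
    """Return the outgoing interface using longest-prefix match."""
    dest = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(prefix), dev)
        for prefix, dev in ROUTES
        if dest in ipaddress.ip_network(prefix)
    ]
    # The most specific route (largest prefix length) wins.
    _, dev = max(matches, key=lambda match: match[0].prefixlen)
    return dev


print(pick_interface("10.0.2.7"))  # eth0: east-west, over the overlay
print(pick_interface("8.8.8.8"))   # eth1: north-south, via docker_gwbridge
```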

Enter the container on worker2 and check its network information:

    [vagrant@swarm-worker2 ~]$ docker exec -it 7a sh
    / #
    / # ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    162: eth0@if163: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue
        link/ether 02:42:0a:00:02:07 brd ff:ff:ff:ff:ff:ff
        inet 10.0.2.7/24 brd 10.0.2.255 scope global eth0
           valid_lft forever preferred_lft forever
    164: eth1@if165: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
        link/ether 02:42:ac:12:00:03 brd ff:ff:ff:ff:ff:ff
        inet 172.18.0.3/16 brd 172.18.255.255 scope global eth1
           valid_lft forever preferred_lft forever
    / #
    / # ip route
    default via 172.18.0.1 dev eth1
    10.0.2.0/24 dev eth0 scope link src 10.0.2.7
    172.18.0.0/16 dev eth1 scope link src 172.18.0.3

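From the worker1 container, ping the overlay address 10.0.2.7 on eth0 of the worker2 container, then capture packets on worker2 with `tcpdump -i eth0 port 4789`, where 4789 is the UDP port used by VXLAN. The capture shows 8 packets: four requests and four responses.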
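Each packet captured on port 4789 carries an 8-byte VXLAN header in front of the original container frame. To make that layout concrete, here is a minimal sketch that builds and parses such a header following the RFC 7348 format (flags byte, 24 reserved bits, 24-bit VNI, 8 reserved bits). The VNI value 4097 and the helper names are illustrative assumptions, not values taken from the capture.

```python
import struct

VXLAN_UDP_PORT = 4789   # the port used in the tcpdump filter
VNI_VALID_FLAG = 0x08   # "I" flag: the VNI field is valid


def build_vxlan_header(vni: int) -> bytes:
    """Pack an 8-byte VXLAN header for the given 24-bit network identifier."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags in the top byte, 24 reserved bits.
    # Word 2: VNI in the top 24 bits, 8 reserved bits.
    return struct.pack("!II", VNI_VALID_FLAG << 24, vni << 8)


def parse_vxlan_header(header: bytes) -> int:
    """Return the VNI from the first 8 bytes of a VXLAN payload."""
    word1, word2 = struct.unpack("!II", header[:8])
    if not (word1 >> 24) & VNI_VALID_FLAG:
        raise ValueError("VNI flag not set; not a valid VXLAN header")
    return word2 >> 8


header = build_vxlan_header(4097)  # 4097 is a hypothetical VNI
print(header.hex())                # 0800000000100100
print(parse_vxlan_header(header))  # 4097
```

In a real capture these 8 bytes sit right after the outer UDP header, and the inner Ethernet frame (here the ICMP echo between 10.0.2.3 and 10.0.2.7) follows them.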