https://pcl.readthedocs.io/projects/tutorials/en/latest/#keypoints

https://pointclouds.org/documentation/group__keypoints.html

Blog

http://robot.czxy.com/docs/pcl/chapter02/keypoints/

PCL Keypoints (1)

Personal code for this module: https://github.com/HuangCongQing/pcl-learning/tree/master/16keypoints

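Keypoints, also called interest points, are stable and distinctive points on a 2D image, a 3D point cloud or a surface model that can be found by applying a detection criterion. There are far fewer keypoints than points or pixels in the original data. Combined with local feature descriptors, they form keypoint descriptors. Keypoints are commonly used as a compact, representative and descriptive summary of the original data, which speeds up later processing such as recognition and tracking.

Keypoint extraction is therefore an indispensable technique in both 2D and 3D information processing.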

Introduction to NARF

NARF (Normal Aligned Radial Feature) keypoints were proposed for recognizing objects in range (depth) images.

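An important purpose of keypoint detection is to shrink the search space for feature extraction and focus on important structures. NARF keypoint extraction must satisfy the following requirements:

- The extraction must take into account both object borders and changes on the object surface.

- Keypoint positions must be stable, so that they can be detected repeatedly even from different viewpoints.

- Each keypoint must have a stable support region, so that a descriptor can be computed and a unique normal vector can be estimated.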

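To meet these requirements, keypoints are extracted with the following detection steps:

- For every range image point, detect borders by looking for positions where the depth changes abruptly within the neighborhood;

- For every range image point, compute a coefficient that measures how much the surface changes in its neighborhood, together with the dominant direction of that change;

- From the dominant direction found in step 2, compute an interest value that captures how much this direction differs from the other directions, **as well as how much the surface changes at that point, i.e. how stable the point is**;

- Smooth the interest values;

- Apply non-maximum suppression to obtain the final keypoints; these are the NARF keypoints.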
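The detection steps above can be illustrated on a plain 2D grid. The sketch below is a simplified, standard-library-only illustration and is not PCL's implementation. `Grid`, `detectDepthJumps`, `smooth` and `nonMaxSuppression` are hypothetical names. Border detection is reduced to a fixed range-jump threshold between 4-neighbors. Steps 2 and 3 (surface change and interest value) are omitted, because NARF's actual formulas are more involved.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Row-major 2D grid standing in for a range image or an interest-value image.
struct Grid {
  int width;
  int height;
  std::vector<float> data;
  float at(int x, int y) const { return data[y * width + x]; }
};

// Step 1 (simplified): flag a pixel if its range differs from any 4-connected
// neighbor by more than `jump`. PCL's RangeImageBorderExtractor is far more elaborate.
std::vector<bool> detectDepthJumps(const Grid& range, float jump) {
  assert(static_cast<int>(range.data.size()) == range.width * range.height);
  std::vector<bool> border(range.data.size(), false);
  const int dx[4] = {1, -1, 0, 0};
  const int dy[4] = {0, 0, 1, -1};
  for (int y = 0; y < range.height; ++y)
    for (int x = 0; x < range.width; ++x)
      for (int k = 0; k < 4; ++k) {
        const int nx = x + dx[k], ny = y + dy[k];
        if (nx < 0 || ny < 0 || nx >= range.width || ny >= range.height) continue;
        if (std::fabs(range.at(x, y) - range.at(nx, ny)) > jump)
          border[y * range.width + x] = true;
      }
  return border;
}

// Step 4: 3x3 mean filter; pixels at the image edge average only existing neighbors.
Grid smooth(const Grid& in) {
  Grid out = in;
  for (int y = 0; y < in.height; ++y)
    for (int x = 0; x < in.width; ++x) {
      float sum = 0.0f;
      int count = 0;
      for (int ny = y - 1; ny <= y + 1; ++ny)
        for (int nx = x - 1; nx <= x + 1; ++nx)
          if (nx >= 0 && ny >= 0 && nx < in.width && ny < in.height) {
            sum += in.at(nx, ny);
            ++count;
          }
      out.data[y * in.width + x] = sum / count;
    }
  return out;
}

// Step 5: non-maximum suppression. Keep a pixel if its value is above `threshold`
// and strictly greater than every existing 8-neighbor; returns flat indices.
std::vector<int> nonMaxSuppression(const Grid& interest, float threshold) {
  std::vector<int> keypoints;
  for (int y = 0; y < interest.height; ++y)
    for (int x = 0; x < interest.width; ++x) {
      const float v = interest.at(x, y);
      if (v <= threshold) continue;
      bool isMax = true;
      for (int ny = y - 1; ny <= y + 1 && isMax; ++ny)
        for (int nx = x - 1; nx <= x + 1; ++nx) {
          if (nx == x && ny == y) continue;
          if (nx < 0 || ny < 0 || nx >= interest.width || ny >= interest.height) continue;
          if (interest.at(nx, ny) >= v) { isMax = false; break; }
        }
      if (isMax) keypoints.push_back(y * interest.width + x);
    }
  return keypoints;
}
```

For example, on the 3×1 range row {1, 1, 5} with `jump = 0.5`, the last two pixels are flagged. On a 3×3 interest grid whose only value above 0.5 is the center, `nonMaxSuppression` returns index 4.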

For a more detailed description of NARF, see this blog post: www.cnblogs.com/ironstark/p/5051533.html

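The keypoints module and its classes in PCL

(1) class pcl::Keypoint: Keypoint is the base class of all keypoint detection classes. It defines the basic interface, and the subclasses provide the concrete implementations.

Details: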

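| Return type | Public member function and description |
|---|---|
| virtual void | `setSearchSurface (const PointCloudInConstPtr &cloud)`: set the point cloud used as the search surface; `cloud` is a reference to a pointer to that cloud |
| void | `setSearchMethod (const KdTreePtr &tree)`: set the search object used internally by the algorithm; `tree` is a pointer to a kd-tree or octree |
| void | `setKSearch (int k)`: set K for K-nearest-neighbor search |
| void | `setRadiusSearch (double radius)`: set the radius for radius search |
| int | `searchForNeighbors (int index, double parameter, std::vector< int > &indices, std::vector< float > &distances) const`: search for neighbors with the search object set by setSearchMethod in the cloud set by setSearchSurface. `index` is the index of the query point and `parameter` is the search parameter (radius or K). The indices of the neighbors in the cloud are returned in `indices` and their (squared) distances in `distances` |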
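The semantics of `searchForNeighbors` in the table (K versus radius, with neighbor indices plus distances) can be mimicked by brute force. `Point3` and `bruteForceSearch` are hypothetical. Unlike `searchForNeighbors`, which takes the index of a query point, this sketch takes the query point directly. Like PCL's search classes, it reports squared distances. A real implementation would use the kd-tree or octree passed to `setSearchMethod` instead of a linear scan.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

struct Point3 { float x, y, z; };

// Hypothetical brute-force neighbor search over `surface`.
// useRadius == true : `parameter` is a radius, return every point within it.
// useRadius == false: `parameter` is K, return the K nearest points.
// Results are sorted by squared distance (ties keep index order); the query point
// itself is included if it belongs to the surface. Returns the neighbor count.
int bruteForceSearch(const std::vector<Point3>& surface, const Point3& query,
                     double parameter, bool useRadius,
                     std::vector<int>& indices, std::vector<float>& sqrDistances) {
  std::vector<float> d2(surface.size());
  for (std::size_t i = 0; i < surface.size(); ++i) {
    const float dx = surface[i].x - query.x;
    const float dy = surface[i].y - query.y;
    const float dz = surface[i].z - query.z;
    d2[i] = dx * dx + dy * dy + dz * dz;
  }
  std::vector<int> order(surface.size());
  std::iota(order.begin(), order.end(), 0);
  std::stable_sort(order.begin(), order.end(),
                   [&d2](int a, int b) { return d2[a] < d2[b]; });

  indices.clear();
  sqrDistances.clear();
  for (int i : order) {
    if (useRadius) {
      if (d2[i] > parameter * parameter) break;  // sorted, so no closer points remain
    } else if (static_cast<int>(indices.size()) >= static_cast<int>(parameter)) {
      break;  // already have K neighbors
    }
    indices.push_back(i);
    sqrDistances.push_back(d2[i]);
  }
  return static_cast<int>(indices.size());
}
```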

(2) class pcl::HarrisKeypoint2D

HarrisKeypoint2D implements a Harris keypoint detector on the intensity field of a point cloud and includes several variants of the Harris detector. Its key functions are:

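| Return type | Public member function and description |
|---|---|
| (constructor) | `HarrisKeypoint2D (ResponseMethod method=HARRIS, int window_width=3, int window_height=3, int min_distance=5, float threshold=0.0)`: `method` selects the detection method (HARRIS, NOBLE, LOWE or TOMASI; default HARRIS); `window_width` and `window_height` are the width and height of the detection window; `min_distance` is the minimum allowed distance between two keypoints; `threshold` is the interest threshold: points whose response is below it are ignored, and points above it are considered keypoints |
| void | `setMethod (ResponseMethod type)`: set the detection method |
| void | `setWindowWidth (int window_width)`: set the width of the detection window |
| void | `setWindowHeight (int window_height)`: set the height of the detection window |
| void | `setSkippedPixels (int skipped_pixels)`: set the number of pixels skipped at each step during detection |
| void | `setMinimalDistance (int min_distance)`: set the minimum distance between candidate keypoints |
| void | `setThreshold (float threshold)`: set the interest threshold |
| void | `setNonMaxSupression (bool=false)`: set whether to apply non-maximum suppression. If true, only local maxima whose response exceeds the threshold are kept; otherwise every point is returned together with its response |
| void | `setRefine (bool do_refine)`: set whether to refine the positions of the detected keypoints |
| void | `setNumberOfThreads (unsigned int nr_threads=0)`: set the number of threads to create when the algorithm runs in parallel with OpenMP |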
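The sketch below makes the HARRIS, NOBLE, LOWE and TOMASI options concrete. It computes the 2×2 structure tensor of an intensity image over a window and evaluates the four responses with their textbook formulas: det - k * trace^2 with k = 0.04, det / trace, det / trace^2, and the smaller eigenvalue. These formulas and all names here (`Tensor2`, `structureTensor`, `cornerResponses`) are assumptions for illustration. PCL's `HarrisKeypoint2D` may use different constants or normalization.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Summed structure tensor [[a, b], [b, c]] = sum over window of [[Ix*Ix, Ix*Iy], [Ix*Iy, Iy*Iy]].
struct Tensor2 { double a, b, c; };

// Accumulate the tensor over a (2*half+1)^2 window centred at (cx, cy) of a
// row-major image, using central differences. The window must stay at least one
// pixel away from the image border.
Tensor2 structureTensor(const std::vector<double>& img, int width, int height,
                        int cx, int cy, int half) {
  assert(cx - half >= 1 && cy - half >= 1);
  assert(cx + half <= width - 2 && cy + half <= height - 2);
  Tensor2 t{0.0, 0.0, 0.0};
  for (int y = cy - half; y <= cy + half; ++y)
    for (int x = cx - half; x <= cx + half; ++x) {
      const double ix = 0.5 * (img[y * width + x + 1] - img[y * width + x - 1]);
      const double iy = 0.5 * (img[(y + 1) * width + x] - img[(y - 1) * width + x]);
      t.a += ix * ix;
      t.b += ix * iy;
      t.c += iy * iy;
    }
  return t;
}

struct Responses { double harris, noble, lowe, tomasi; };

// Textbook corner responses (assumed, not taken from PCL's source).
Responses cornerResponses(const Tensor2& t, double k = 0.04) {
  const double det = t.a * t.c - t.b * t.b;
  const double trace = t.a + t.c;
  Responses r;
  r.harris = det - k * trace * trace;
  r.noble = trace > 0 ? det / trace : 0.0;
  r.lowe = trace > 0 ? det / (trace * trace) : 0.0;
  // Smaller eigenvalue of the symmetric 2x2 tensor (Shi-Tomasi).
  r.tomasi = 0.5 * (trace - std::sqrt((t.a - t.c) * (t.a - t.c) + 4.0 * t.b * t.b));
  return r;
}
```

On a vertical step edge the smaller eigenvalue is 0 and the Harris response is negative. This is why edges are rejected, while corners, where both eigenvalues are large, score high.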

(3)pcl::HarrisKeypoint3D< PointInT, PointOutT, NormalT >

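HarrisKeypoint3D is similar to HarrisKeypoint2D. Instead of detecting keypoints in the intensity space of the point cloud, it uses 3D spatial information, namely the surface normals. Its interface matches that of HarrisKeypoint2D, except for the constructor: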

HarrisKeypoint3D (ResponseMethod method=HARRIS, float radius=0.01f, float threshold=0.0f)

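Constructor: `method` selects the detection method (HARRIS, NOBLE, LOWE or TOMASI; default HARRIS), and `radius` is the search radius for normal estimation. `threshold` is the interest threshold: points whose response is below it are ignored, and points above it are considered keypoints.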
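HarrisKeypoint3D replaces image gradients with surface normals. The sketch below is a minimal illustration of that idea. It assumes the Harris score det(M) - k * trace(M)^2 with k = 0.04, where M is the plain (unaveraged) sum of the outer products n * n^T of the neighbors' unit normals. `Vec3` and `harris3DResponse` are hypothetical, and PCL's own normalization and neighborhood handling may differ.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

using Vec3 = std::array<double, 3>;

// Score a neighborhood from its unit normals: build M = sum(n * n^T), then
// return det(M) - k * trace(M)^2 (assumed formula, see lead-in).
double harris3DResponse(const std::vector<Vec3>& normals, double k = 0.04) {
  double m[3][3] = {{0, 0, 0}, {0, 0, 0}, {0, 0, 0}};
  for (const Vec3& n : normals)
    for (int i = 0; i < 3; ++i)
      for (int j = 0; j < 3; ++j) m[i][j] += n[i] * n[j];
  // Determinant of the 3x3 matrix by cofactor expansion along the first row.
  const double det = m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
                   - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
                   + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]);
  const double trace = m[0][0] + m[1][1] + m[2][2];
  return det - k * trace * trace;
}
```

Three identical normals (a plane) give -0.36. Three mutually orthogonal normals (a corner) give 0.64.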

(4)pcl::HarrisKeypoint6D< PointInT, PointOutT, NormalT >

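HarrisKeypoint6D is similar to HarrisKeypoint2D. It looks for candidate keypoints in both the Euclidean (XYZ) domain and the intensity domain and takes their intersection, so only points that qualify as keypoints in both domains become candidates.

[HarrisKeypoint6D](http://docs.pointclouds.org/trunk/classpcl_1_1_harris_keypoint6_d.html#acdf288a88556dc936f8331aea5ce17ed) (float radius=0.01, float threshold=0.0) is the constructor. It has no method-selection parameter and uses the method proposed by Tomasi by default. `radius` is the search radius for normal estimation. `threshold` is the interest threshold: points whose response is below it are ignored, and points above it are considered keypoints.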
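The intersection rule described for HarrisKeypoint6D amounts to a set intersection of the candidate point indices from the two domains. The sketch assumes each detector has already produced a list of point indices. `intersectCandidates` is a hypothetical helper and does not reproduce PCL's internal pipeline.

```cpp
#include <algorithm>
#include <cassert>
#include <iterator>
#include <vector>

// Keep only the point indices selected by both the XYZ-based and the
// intensity-based detector. Inputs are taken by value so they can be sorted.
std::vector<int> intersectCandidates(std::vector<int> xyzCandidates,
                                     std::vector<int> intensityCandidates) {
  std::sort(xyzCandidates.begin(), xyzCandidates.end());
  std::sort(intensityCandidates.begin(), intensityCandidates.end());
  std::vector<int> both;
  std::set_intersection(xyzCandidates.begin(), xyzCandidates.end(),
                        intensityCandidates.begin(), intensityCandidates.end(),
                        std::back_inserter(both));
  return both;  // sorted, duplicates removed only if inputs had none
}
```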

(5)pcl::SIFTKeypoint< PointInT, PointOutT >

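**SIFTKeypoint implements the SIFT detector, adapted from 2D images and ported to 3D space. Its input is a point cloud with XYZ coordinates and intensity, and its output is the SIFT keypoints of the cloud.** Its key functions are: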

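| Return type | Public member function and description |
|---|---|
| void | `setScales (float min_scale, int nr_octaves, int nr_scales_per_octave)`: set the scale-related search parameters. `min_scale` is the standard deviation of the smallest scale in the voxel scale space, i.e. the smallest voxel-grid size used for the cloud; `nr_octaves` is the number of octaves (voxel-space scale levels) used for detection; `nr_scales_per_octave` is the number of Gaussian scales computed within each octave |
| void | `setMinimumContrast (float min_contrast)`: set the minimum contrast required for a candidate keypoint |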
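The three `setScales` parameters define a SIFT-style scale space. The sketch below assumes the textbook schedule scale(o, s) = min_scale * 2^(o + s / nr_scales_per_octave). It lists the scales that `min_scale`, `nr_octaves` and `nr_scales_per_octave` would generate. `siftScales` is hypothetical, and PCL's `SIFTKeypoint` may add extra scales per octave or use different offsets internally.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Textbook SIFT scale schedule (assumption, not PCL's exact internals):
// octave o doubles the scale, and each octave is split into
// nr_scales_per_octave geometrically spaced steps.
std::vector<double> siftScales(double min_scale, int nr_octaves, int nr_scales_per_octave) {
  assert(min_scale > 0 && nr_octaves > 0 && nr_scales_per_octave > 0);
  std::vector<double> scales;
  for (int o = 0; o < nr_octaves; ++o)
    for (int s = 0; s < nr_scales_per_octave; ++s)
      scales.push_back(min_scale *
                       std::pow(2.0, o + static_cast<double>(s) / nr_scales_per_octave));
  return scales;
}
```

With min_scale = 0.01, two octaves and two scales per octave, the schedule is 0.01, about 0.0141, 0.02 and about 0.0283, so each octave doubles the scale.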

(6) There are many more classes, which are not covered individually here.

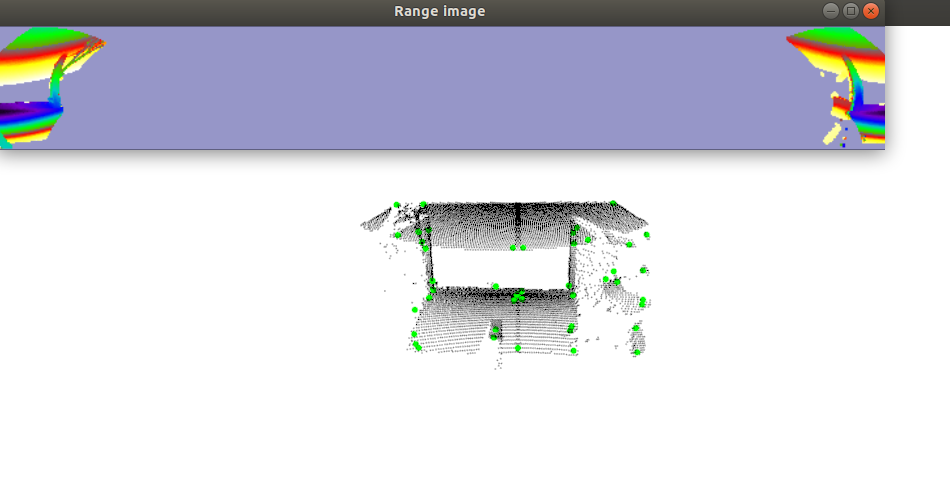

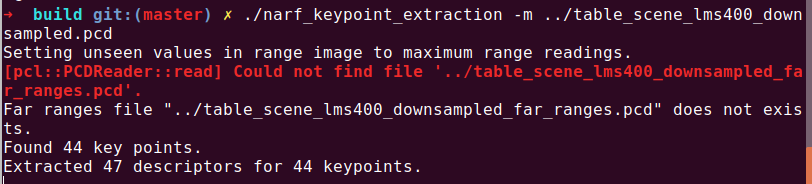

Extracting NARF (Normal Aligned Radial Feature) keypoints from a range image

Official tutorial: How to extract NARF keypoint from a range image

This experiment extracts NARF keypoints and visualizes them both as a range image and in 3D.

| Class | Description |
|---|---|
| pcl::NarfKeypoint | NARF (Normal Aligned Radial Feature) keypoints. |

Implementation

Create the file: narf_keypoint_extraction.cpp

Required data: ../table_scene_lms400_downsampled.pcd

Build and run: ./narf_keypoint_extraction -m ../table_scene_lms400_downsampled.pcd

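Create a RangeImageBorderExtractor object to perform border extraction. This is needed because the first step of NARF is to detect the borders of the range image:

`pcl::RangeImageBorderExtractor range_image_border_extractor; // extracts borders`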

The program also computes NARF descriptors at the detected keypoints with `pcl::NarfDescriptor`.

Personal code: 16keypoints关键点/1从深度图像中提取 NARF关键点

```cpp
/*
 * @Description: Extract NARF keypoints and visualize them both as a range image and in 3D
 * http://robot.czxy.com/docs/pcl/chapter02/keypoints/#_2
 * https://www.cnblogs.com/li-yao7758258/p/6476359.html
 * @Author: HCQ
 * @Company(School): UCAS
 * @Email: 1756260160@qq.com
 * @Date: 2020-10-21 16:54:11
 * @LastEditTime: 2020-10-21 17:26:45
 * @FilePath: /pcl-learning/16keypoints关键点/1从深度图像中提取 NARF关键点/narf_keypoint_extraction.cpp
 */

#include <chrono>
#include <iostream>
#include <string>
#include <thread>
#include <vector>

#include <pcl/range_image/range_image.h>
#include <pcl/io/pcd_io.h>
#include <pcl/common/file_io.h>                         // pcl::getFilenameWithoutExtension
#include <pcl/visualization/range_image_visualizer.h>  // range image visualization
#include <pcl/visualization/pcl_visualizer.h>
#include <pcl/features/range_image_border_extractor.h> // range image border extraction
#include <pcl/keypoints/narf_keypoint.h>               // NARF keypoint detection
#include <pcl/features/narf_descriptor.h>              // NARF descriptors
#include <pcl/console/parse.h>

typedef pcl::PointXYZ PointType;

// --------------------
// -----Parameters-----
// --------------------
float angular_resolution = 0.5f; // angular resolution of the simulated range sensor: the angle covered by one range image pixel (degrees here, converted to radians in main)
float support_size = 0.2f;       // support size of the interest points: diameter of the sphere used
pcl::RangeImage::CoordinateFrame coordinate_frame = pcl::RangeImage::CAMERA_FRAME; // coordinate frame
bool setUnseenToMaxRange = false;
bool rotation_invariant = true;

// --------------
// -----Help-----
// --------------
void printUsage(const char *progName)
{
  std::cout << "\n\nUsage: " << progName << " [options] <scene.pcd>\n\n"
            << "Options:\n"
            << "-------------------------------------------\n"
            << "-r <float>   angular resolution in degrees (default " << angular_resolution << ")\n"
            << "-c <int>     coordinate frame (default " << (int)coordinate_frame << ")\n"
            << "-m           Treat all unseen points as max range\n"
            << "-s <float>   support size for the interest points (diameter of the used sphere - default "
            << support_size << ")\n"
            << "-o <0/1>     switch rotational invariant version of the feature on/off"
            << " (default " << (int)rotation_invariant << ")\n"
            << "-h           this help\n"
            << "\n\n";
}

void setViewerPose(pcl::visualization::PCLVisualizer &viewer, const Eigen::Affine3f &viewer_pose) // set the viewer's camera pose
{
  Eigen::Vector3f pos_vector = viewer_pose * Eigen::Vector3f(0, 0, 0);                              // camera position: origin of viewer_pose
  Eigen::Vector3f look_at_vector = viewer_pose.rotation() * Eigen::Vector3f(0, 0, 1) + pos_vector; // look-at point: rotated +z axis plus translation
  Eigen::Vector3f up_vector = viewer_pose.rotation() * Eigen::Vector3f(0, -1, 0);                  // up direction: rotated -y axis
  viewer.setCameraPosition(pos_vector[0], pos_vector[1], pos_vector[2],
                           look_at_vector[0], look_at_vector[1], look_at_vector[2],
                           up_vector[0], up_vector[1], up_vector[2]);
}

// --------------
// -----Main-----
// --------------
int main(int argc, char **argv)
{
  // --------------------------------------
  // -----Parse Command Line Arguments-----
  // --------------------------------------
  if (pcl::console::find_argument(argc, argv, "-h") >= 0)
  {
    printUsage(argv[0]);
    return 0;
  }
  if (pcl::console::find_argument(argc, argv, "-m") >= 0)
  {
    setUnseenToMaxRange = true;
    std::cout << "Setting unseen values in range image to maximum range readings.\n";
  }
  if (pcl::console::parse(argc, argv, "-o", rotation_invariant) >= 0)
    std::cout << "Switching rotation invariant feature version " << (rotation_invariant ? "on" : "off") << ".\n";
  int tmp_coordinate_frame;
  if (pcl::console::parse(argc, argv, "-c", tmp_coordinate_frame) >= 0)
  {
    coordinate_frame = pcl::RangeImage::CoordinateFrame(tmp_coordinate_frame);
    std::cout << "Using coordinate frame " << (int)coordinate_frame << ".\n";
  }
  if (pcl::console::parse(argc, argv, "-s", support_size) >= 0)
    std::cout << "Setting support size to " << support_size << ".\n";
  if (pcl::console::parse(argc, argv, "-r", angular_resolution) >= 0)
    std::cout << "Setting angular resolution to " << angular_resolution << "deg.\n";
  angular_resolution = pcl::deg2rad(angular_resolution);

  // ------------------------------------------------------------------
  // -----Read pcd file or create example point cloud if not given-----
  // ------------------------------------------------------------------
  pcl::PointCloud<PointType>::Ptr point_cloud_ptr(new pcl::PointCloud<PointType>);
  pcl::PointCloud<PointType> &point_cloud = *point_cloud_ptr;
  pcl::PointCloud<pcl::PointWithViewpoint> far_ranges;
  Eigen::Affine3f scene_sensor_pose(Eigen::Affine3f::Identity());
  std::vector<int> pcd_filename_indices = pcl::console::parse_file_extension_argument(argc, argv, "pcd"); // look for a .pcd argument
  if (!pcd_filename_indices.empty())
  {
    std::string filename = argv[pcd_filename_indices[0]];
    if (pcl::io::loadPCDFile(filename, point_cloud) == -1) // the file could not be opened
    {
      std::cerr << "Was not able to open file \"" << filename << "\".\n";
      printUsage(argv[0]);
      return 1;
    }
    scene_sensor_pose = Eigen::Affine3f(Eigen::Translation3f(point_cloud.sensor_origin_[0], // sensor pose stored in the PCD file
                                                             point_cloud.sensor_origin_[1],
                                                             point_cloud.sensor_origin_[2])) *
                        Eigen::Affine3f(point_cloud.sensor_orientation_);
    std::string far_ranges_filename = pcl::getFilenameWithoutExtension(filename) + "_far_ranges.pcd";
    if (pcl::io::loadPCDFile(far_ranges_filename.c_str(), far_ranges) == -1)
      std::cout << "Far ranges file \"" << far_ranges_filename << "\" does not exist.\n";
  }
  else
  {
    setUnseenToMaxRange = true;
    std::cout << "\nNo *.pcd file given => Generating example point cloud.\n\n";
    for (float x = -0.5f; x <= 0.5f; x += 0.01f)
    {
      for (float y = -0.5f; y <= 0.5f; y += 0.01f)
      {
        PointType point;
        point.x = x;
        point.y = y;
        point.z = 2.0f - y;
        point_cloud.points.push_back(point);
      }
    }
    point_cloud.width = (int)point_cloud.points.size();
    point_cloud.height = 1;
  }

  // -----------------------------------------------
  // -----Create RangeImage from the PointCloud-----
  // -----------------------------------------------
  float noise_level = 0.0;
  float min_range = 0.0f;
  int border_size = 1;
  // pcl::RangeImage::Ptr instead of boost::shared_ptr, so it also compiles with PCL >= 1.11 (std::shared_ptr)
  pcl::RangeImage::Ptr range_image_ptr(new pcl::RangeImage);
  pcl::RangeImage &range_image = *range_image_ptr;
  range_image.createFromPointCloud(point_cloud, angular_resolution, pcl::deg2rad(360.0f), pcl::deg2rad(180.0f),
                                   scene_sensor_pose, coordinate_frame, noise_level, min_range, border_size);
  range_image.integrateFarRanges(far_ranges);
  if (setUnseenToMaxRange)
    range_image.setUnseenToMaxRange();

  // --------------------------------------------
  // -----Open 3D viewer and add point cloud----- (point cloud display)
  // --------------------------------------------
  pcl::visualization::PCLVisualizer viewer("3D Viewer");
  viewer.setBackgroundColor(1, 1, 1);
  pcl::visualization::PointCloudColorHandlerCustom<pcl::PointWithRange> range_image_color_handler(range_image_ptr, 0, 0, 0);
  viewer.addPointCloud(range_image_ptr, range_image_color_handler, "range image");
  viewer.setPointCloudRenderingProperties(pcl::visualization::PCL_VISUALIZER_POINT_SIZE, 1, "range image");
  //viewer.addCoordinateSystem (1.0f, "global");
  //PointCloudColorHandlerCustom<PointType> point_cloud_color_handler (point_cloud_ptr, 150, 150, 150);
  //viewer.addPointCloud (point_cloud_ptr, point_cloud_color_handler, "original point cloud");
  viewer.initCameraParameters();
  setViewerPose(viewer, range_image.getTransformationToWorldSystem());

  // --------------------------
  // -----Show range image----- (range image display)
  // --------------------------
  pcl::visualization::RangeImageVisualizer range_image_widget("Range image");
  range_image_widget.showRangeImage(range_image);

  /*
   * Create a RangeImageBorderExtractor object for border extraction:
   * the first step of NARF is to detect the borders of the range image.
   */
  // --------------------------------
  // -----Extract NARF keypoints-----
  // --------------------------------
  pcl::RangeImageBorderExtractor range_image_border_extractor; // extracts borders
  pcl::NarfKeypoint narf_keypoint_detector;                    // detects keypoints
  narf_keypoint_detector.setRangeImageBorderExtractor(&range_image_border_extractor);
  narf_keypoint_detector.setRangeImage(&range_image);
  narf_keypoint_detector.getParameters().support_size = support_size; // set the NARF parameters
  pcl::PointCloud<int> keypoint_indices;
  narf_keypoint_detector.compute(keypoint_indices);
  std::cout << "Found " << keypoint_indices.points.size() << " key points.\n";

  // ----------------------------------------------
  // -----Show keypoints in range image widget-----
  // ----------------------------------------------
  //for (size_t i=0; i<keypoint_indices.points.size (); ++i)
  //  range_image_widget.markPoint (keypoint_indices.points[i]%range_image.width,
  //                                keypoint_indices.points[i]/range_image.width);

  // -------------------------------------
  // -----Show keypoints in 3D viewer-----
  // -------------------------------------
  pcl::PointCloud<pcl::PointXYZ>::Ptr keypoints_ptr(new pcl::PointCloud<pcl::PointXYZ>);
  pcl::PointCloud<pcl::PointXYZ> &keypoints = *keypoints_ptr;
  keypoints.resize(keypoint_indices.points.size()); // also keeps width/height consistent with the point count
  for (size_t i = 0; i < keypoint_indices.points.size(); ++i)
    keypoints.points[i].getVector3fMap() = range_image.points[keypoint_indices.points[i]].getVector3fMap();
  pcl::visualization::PointCloudColorHandlerCustom<pcl::PointXYZ> keypoints_color_handler(keypoints_ptr, 0, 255, 0);
  viewer.addPointCloud<pcl::PointXYZ>(keypoints_ptr, keypoints_color_handler, "keypoints");
  viewer.setPointCloudRenderingProperties(pcl::visualization::PCL_VISUALIZER_POINT_SIZE, 7, "keypoints");

  // ------------------------------------------------------
  // -----Extract NARF descriptors for interest points-----
  // ------------------------------------------------------
  std::vector<int> keypoint_indices2;
  keypoint_indices2.resize(keypoint_indices.points.size());
  for (unsigned int i = 0; i < keypoint_indices.size(); ++i) // This step is necessary to get the right vector type
    keypoint_indices2[i] = keypoint_indices.points[i];
  pcl::NarfDescriptor narf_descriptor(&range_image, &keypoint_indices2);
  narf_descriptor.getParameters().support_size = support_size;
  narf_descriptor.getParameters().rotation_invariant = rotation_invariant;
  pcl::PointCloud<pcl::Narf36> narf_descriptors;
  narf_descriptor.compute(narf_descriptors);
  std::cout << "Extracted " << narf_descriptors.size() << " descriptors for "
            << keypoint_indices.points.size() << " keypoints.\n";

  //--------------------
  // -----Main loop-----
  //--------------------
  while (!viewer.wasStopped())
  {
    range_image_widget.spinOnce(); // process GUI events
    viewer.spinOnce();
    std::this_thread::sleep_for(std::chrono::milliseconds(10)); // portable C++11 replacement for pcl_sleep(0.01)
  }
  return 0;
}
```
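The detector returns flat indices into the range image. The commented-out `markPoint` loop converts them to pixel coordinates with `index % width` and `index / width`. The sketch below shows that conversion together with the arithmetic behind `angular_resolution`: at 0.5° per pixel, a full 360° × 180° field of view gives at most a 720 × 360 image. `Pixel`, `indexToPixel` and `maxImageSize` are hypothetical helpers, not PCL functions. Because `createFromPointCloud` may crop the image to the region that contains points, the real size should be read from `range_image.width` and `range_image.height`.

```cpp
#include <cassert>
#include <cmath>

struct Pixel { int x, y; };

// Flat row-major index -> (column, row), as in the commented-out markPoint() call.
Pixel indexToPixel(int index, int width) {
  assert(width > 0 && index >= 0);
  return Pixel{index % width, index / width};
}

// Upper bound on the range image size for a given angular resolution
// (degrees per pixel) and field of view (degrees). The actual image may be smaller.
void maxImageSize(double angular_resolution_deg, double fov_x_deg, double fov_y_deg,
                  int& width, int& height) {
  assert(angular_resolution_deg > 0.0);
  width = static_cast<int>(std::lround(fov_x_deg / angular_resolution_deg));
  height = static_cast<int>(std::lround(fov_y_deg / angular_resolution_deg));
}
```

For example, in a 720-pixel-wide image, flat index 1445 is pixel (5, 2), because 2 × 720 = 1440.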

Output

Run:

./narf_keypoint_extraction -m ../table_scene_lms400_downsampled.pcd

Result