3.3 Deploying the MySQL cluster

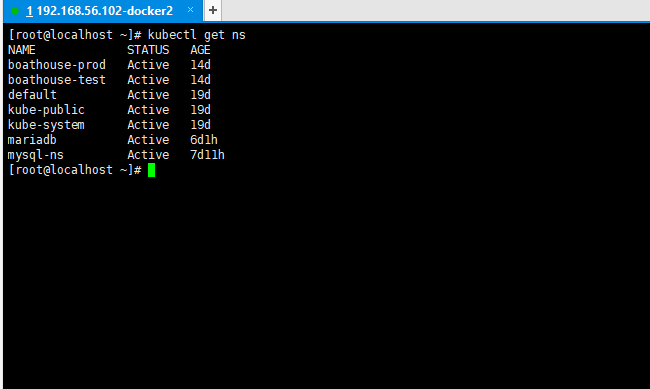

3.3.1 Create the namespace

On the local Windows machine, from the directory where kubectl is installed, create a namespace named "mariadb" with: kubectl create namespace mariadb
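Kubernetes namespace names must be valid DNS-1123 labels. That means at most 63 characters, using only lowercase letters, digits and '-', and starting and ending with a letter or digit. The following is a minimal sketch for checking a candidate name before running the command. The helper `is_valid_namespace` is hypothetical and is not part of kubectl.

```python
import re

# DNS-1123 label rule applied by Kubernetes to namespace names:
# lowercase alphanumerics or '-', must start and end with an alphanumeric.
_DNS1123_LABEL = re.compile(r"[a-z0-9]([-a-z0-9]*[a-z0-9])?")


def is_valid_namespace(name: str) -> bool:
    """Return True if `name` is usable as a Kubernetes namespace name."""
    return len(name) <= 63 and _DNS1123_LABEL.fullmatch(name) is not None


if __name__ == "__main__":
    for candidate in ["mariadb", "MariaDB", "maria_db", "-mariadb"]:
        print(candidate, is_valid_namespace(candidate))
```

"mariadb" passes the check. Upper-case letters, underscores and a leading '-' are rejected.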

3.3.2 Create the etcd cluster

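Create the cluster networking environment of the MySQL cluster, which the master and slave nodes use to communicate with each other. The MariaDB containers created later locate this three-member etcd cluster through their DISCOVERY_SERVICE variable, which points to the etcd-client Service on port 2379.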

On the local Windows machine, in the directory where kubectl is installed, create the file etcd-cluster.yml (content below), then run:

kubectl create -f etcd-cluster.yml -n mariadb

```yaml
apiVersion: v1
kind: Service
metadata:
  name: etcd-client
spec:
  ports:
  - name: etcd-client-port
    port: 2379
    protocol: TCP
    targetPort: 2379
  selector:
    app: etcd
---
apiVersion: v1
kind: Pod
metadata:
  labels:
    app: etcd
    etcd_node: etcd0
  name: etcd0
spec:
  containers:
  - command:
    - /usr/local/bin/etcd
    - --name
    - etcd0
    - --initial-advertise-peer-urls
    - http://etcd0:2380
    - --listen-peer-urls
    - http://0.0.0.0:2380
    - --listen-client-urls
    - http://0.0.0.0:2379
    - --advertise-client-urls
    - http://etcd0:2379
    - --initial-cluster
    - etcd0=http://etcd0:2380,etcd1=http://etcd1:2380,etcd2=http://etcd2:2380
    - --initial-cluster-state
    - new
    image: quay.io/coreos/etcd:latest
    name: etcd0
    ports:
    - containerPort: 2379
      name: client
      protocol: TCP
    - containerPort: 2380
      name: server
      protocol: TCP
  restartPolicy: Never
---
apiVersion: v1
kind: Service
metadata:
  labels:
    etcd_node: etcd0
  name: etcd0
spec:
  ports:
  - name: client
    port: 2379
    protocol: TCP
    targetPort: 2379
  - name: server
    port: 2380
    protocol: TCP
    targetPort: 2380
  selector:
    etcd_node: etcd0
---
apiVersion: v1
kind: Pod
metadata:
  labels:
    app: etcd
    etcd_node: etcd1
  name: etcd1
spec:
  containers:
  - command:
    - /usr/local/bin/etcd
    - --name
    - etcd1
    - --initial-advertise-peer-urls
    - http://etcd1:2380
    - --listen-peer-urls
    - http://0.0.0.0:2380
    - --listen-client-urls
    - http://0.0.0.0:2379
    - --advertise-client-urls
    - http://etcd1:2379
    - --initial-cluster
    - etcd0=http://etcd0:2380,etcd1=http://etcd1:2380,etcd2=http://etcd2:2380
    - --initial-cluster-state
    - new
    image: quay.io/coreos/etcd:latest
    name: etcd1
    ports:
    - containerPort: 2379
      name: client
      protocol: TCP
    - containerPort: 2380
      name: server
      protocol: TCP
  restartPolicy: Never
---
apiVersion: v1
kind: Service
metadata:
  labels:
    etcd_node: etcd1
  name: etcd1
spec:
  ports:
  - name: client
    port: 2379
    protocol: TCP
    targetPort: 2379
  - name: server
    port: 2380
    protocol: TCP
    targetPort: 2380
  selector:
    etcd_node: etcd1
---
apiVersion: v1
kind: Pod
metadata:
  labels:
    app: etcd
    etcd_node: etcd2
  name: etcd2
spec:
  containers:
  - command:
    - /usr/local/bin/etcd
    - --name
    - etcd2
    - --initial-advertise-peer-urls
    - http://etcd2:2380
    - --listen-peer-urls
    - http://0.0.0.0:2380
    - --listen-client-urls
    - http://0.0.0.0:2379
    - --advertise-client-urls
    - http://etcd2:2379
    - --initial-cluster
    - etcd0=http://etcd0:2380,etcd1=http://etcd1:2380,etcd2=http://etcd2:2380
    - --initial-cluster-state
    - new
    image: quay.io/coreos/etcd:latest
    name: etcd2
    ports:
    - containerPort: 2379
      name: client
      protocol: TCP
    - containerPort: 2380
      name: server
      protocol: TCP
  restartPolicy: Never
---
apiVersion: v1
kind: Service
metadata:
  labels:
    etcd_node: etcd2
  name: etcd2
spec:
  ports:
  - name: client
    port: 2379
    protocol: TCP
    targetPort: 2379
  - name: server
    port: 2380
    protocol: TCP
    targetPort: 2380
  selector:
    etcd_node: etcd2
```
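The three etcd Pod/Service pairs in etcd-cluster.yml differ only in the member name. Every member lists all peers in `--initial-cluster`. The following is a sketch, not part of the original files, that generates the same objects for an arbitrary member count, which makes that relationship explicit. The function names are hypothetical. The output is JSON wrapped in a `v1` `List`, which `kubectl create -f` also accepts.

```python
import json


def initial_cluster(members):
    # Every member lists all peers, e.g. etcd0=http://etcd0:2380,...
    return ",".join(f"{m}=http://{m}:2380" for m in members)


def member_pod(name, members):
    return {
        "apiVersion": "v1", "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": "etcd", "etcd_node": name}},
        "spec": {
            "restartPolicy": "Never",
            "containers": [{
                "name": name,
                "image": "quay.io/coreos/etcd:latest",
                "command": [
                    "/usr/local/bin/etcd",
                    "--name", name,
                    "--initial-advertise-peer-urls", f"http://{name}:2380",
                    "--listen-peer-urls", "http://0.0.0.0:2380",
                    "--listen-client-urls", "http://0.0.0.0:2379",
                    "--advertise-client-urls", f"http://{name}:2379",
                    "--initial-cluster", initial_cluster(members),
                    "--initial-cluster-state", "new",
                ],
                "ports": [
                    {"containerPort": 2379, "name": "client", "protocol": "TCP"},
                    {"containerPort": 2380, "name": "server", "protocol": "TCP"},
                ],
            }],
        },
    }


def member_service(name):
    # Per-member Service so the hostname "etcdN" resolves inside the namespace.
    return {
        "apiVersion": "v1", "kind": "Service",
        "metadata": {"name": name, "labels": {"etcd_node": name}},
        "spec": {
            "selector": {"etcd_node": name},
            "ports": [
                {"name": "client", "port": 2379, "protocol": "TCP", "targetPort": 2379},
                {"name": "server", "port": 2380, "protocol": "TCP", "targetPort": 2380},
            ],
        },
    }


def etcd_manifests(count=3):
    members = [f"etcd{i}" for i in range(count)]
    # Shared client Service that selects every etcd member pod.
    client = {
        "apiVersion": "v1", "kind": "Service",
        "metadata": {"name": "etcd-client"},
        "spec": {
            "selector": {"app": "etcd"},
            "ports": [{"name": "etcd-client-port", "port": 2379,
                       "protocol": "TCP", "targetPort": 2379}],
        },
    }
    items = [client]
    for m in members:
        items += [member_pod(m, members), member_service(m)]
    # A v1 List lets kubectl create all objects from one JSON document.
    return {"apiVersion": "v1", "kind": "List", "items": items}


if __name__ == "__main__":
    print(json.dumps(etcd_manifests(), indent=2))
```

With the default of three members, this produces the same seven objects as etcd-cluster.yml: one client Service plus a Pod and a Service per member.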

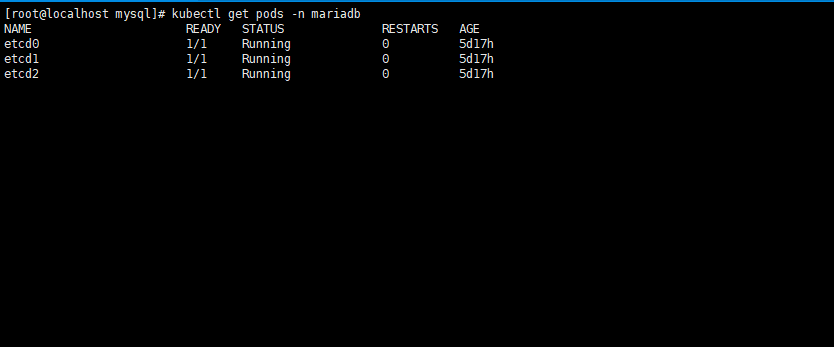

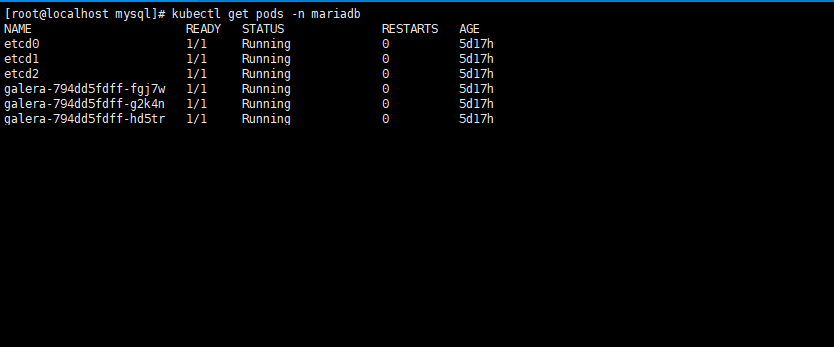

Check the result (for example with kubectl get pods,svc -n mariadb).

3.3.3 Create the PVCs

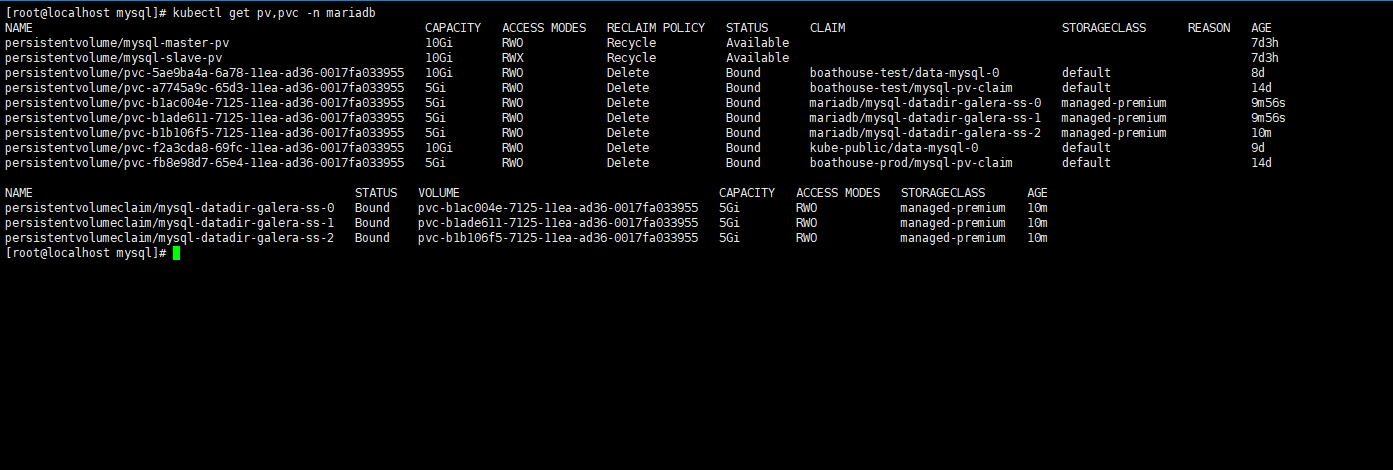

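These PersistentVolumeClaims store the MySQL data files. Because there are three node sets, three PVCs are created, one for each of the StatefulSet pods galera-ss-0 to galera-ss-2 created in 3.3.5.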

On the local Windows machine, in the directory where kubectl is installed, create the file mariadb-pvc.yml (content below), then run:

kubectl create -f mariadb-pvc.yml -n mariadb

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-datadir-galera-ss-0
spec:
  accessModes:
  - ReadWriteOnce
  storageClassName: managed-premium
  resources:
    requests:
      storage: 5Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-datadir-galera-ss-1
spec:
  accessModes:
  - ReadWriteOnce
  storageClassName: managed-premium
  resources:
    requests:
      storage: 5Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-datadir-galera-ss-2
spec:
  accessModes:
  - ReadWriteOnce
  storageClassName: managed-premium
  resources:
    requests:
      storage: 5Gi
```
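The PVC names are not arbitrary. A StatefulSet looks for one claim per replica named `<volumeClaimTemplate name>-<StatefulSet name>-<ordinal>`, and it reuses an existing PVC with that name instead of creating a new one. This short sketch derives the expected names from the values in mariadb-ss.yml. The helper `statefulset_pvc_names` is hypothetical.

```python
def statefulset_pvc_names(template, statefulset, replicas):
    """PVC names a StatefulSet expects, one per replica ordinal."""
    return [f"{template}-{statefulset}-{i}" for i in range(replicas)]


if __name__ == "__main__":
    # Values from mariadb-ss.yml: template "mysql-datadir", StatefulSet "galera-ss", 3 replicas.
    for name in statefulset_pvc_names("mysql-datadir", "galera-ss", 3):
        print(name)
```

The result is mysql-datadir-galera-ss-0 to -2, matching the claims above. If the names did not match, the StatefulSet would create its own claims from its volumeClaimTemplate. That template sets no storageClassName, so those claims would use the cluster's default StorageClass rather than managed-premium.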

Check the result (for example with kubectl get pvc -n mariadb).

3.3.4 Create rs (the master nodes)

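Create the master nodes, which provide the externally accessible endpoint. The galera-rs Service exposes MySQL port 3306 on NodePort 30000 of every cluster node.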

On the local Windows machine, in the directory where kubectl is installed, create the file mariadb-rs.yml (content below), then run:

kubectl create -f mariadb-rs.yml -n mariadb

```yaml
apiVersion: v1
kind: Service
metadata:
  name: galera-rs
  labels:
    app: galera-rs
spec:
  type: NodePort
  ports:
  - nodePort: 30000
    port: 3306
  selector:
    app: galera
---
# apps/v1: extensions/v1beta1 Deployments are no longer served since Kubernetes 1.16
apiVersion: apps/v1
kind: Deployment
metadata:
  name: galera
  labels:
    app: galera
spec:
  replicas: 3
  selector:          # required by apps/v1; must match the pod template labels
    matchLabels:
      app: galera
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: galera
    spec:
      containers:
      - name: galera
        image: severalnines/mariadb:10.1
        env:
        # kubectl create secret generic mysql-pass --from-file=password.txt
        - name: MYSQL_ROOT_PASSWORD
          value: myrootpassword
        - name: DISCOVERY_SERVICE
          value: etcd-client:2379
        - name: XTRABACKUP_PASSWORD
          value: password
        - name: CLUSTER_NAME
          value: mariadb_galera
        - name: MYSQL_DATABASE
          value: mydatabase
        - name: MYSQL_USER
          value: myuser
        - name: MYSQL_PASSWORD
          value: myuserpassword
        ports:
        - name: mysql
          containerPort: 3306
        readinessProbe:
          exec:
            command:
            - /healthcheck.sh
            - --readiness
          initialDelaySeconds: 120
          periodSeconds: 1
        livenessProbe:
          exec:
            command:
            - /healthcheck.sh
            - --liveness
          initialDelaySeconds: 120
          periodSeconds: 1
```
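A Service forwards traffic to every pod whose labels contain all key/value pairs of its selector. The sketch below checks two things. First, that the galera-rs selector matches the Deployment's pod template. Second, that nodePort 30000 lies inside the default NodePort range of 30000-32767; a cluster administrator can change this range. The helper `selects` is hypothetical, and the label values are copied from the YAML files.

```python
# Default kube-apiserver --service-node-port-range is 30000-32767 (inclusive).
DEFAULT_NODE_PORT_RANGE = range(30000, 32768)


def selects(selector, pod_labels):
    """True if every selector key/value pair is present in the pod labels."""
    return all(pod_labels.get(k) == v for k, v in selector.items())


if __name__ == "__main__":
    service_selector = {"app": "galera"}            # Service galera-rs
    deployment_pod_labels = {"app": "galera"}       # Deployment galera pod template
    statefulset_pod_labels = {"app": "galera-ss"}   # StatefulSet galera-ss pod template
    print(selects(service_selector, deployment_pod_labels))
    print(selects(service_selector, statefulset_pod_labels))
    print(30000 in DEFAULT_NODE_PORT_RANGE)
```

Because of this selector, clients connecting to any node IP on port 30000 reach only the three Deployment pods. The StatefulSet pods from 3.3.5 are labeled app: galera-ss, so they are not behind galera-rs.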

Check the result (for example with kubectl get pods -n mariadb -l app=galera); there should be three pods.

3.3.5 Create ss (the slave nodes)

Create the slave nodes. They connect to the master nodes created in the previous step.

On the local Windows machine, in the directory where kubectl is installed, create the file mariadb-ss.yml (content below, also available in the attachment), then run:

kubectl create -f mariadb-ss.yml -n mariadb

```yaml
apiVersion: v1
kind: Service
metadata:
  name: galera-ss
  labels:
    app: galera-ss
spec:
  ports:
  - port: 3306
    name: mysql
  clusterIP: None
  selector:
    app: galera-ss
---
# apps/v1: apps/v1beta1 StatefulSets are no longer served since Kubernetes 1.16
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: galera-ss
spec:
  serviceName: "galera-ss"
  replicas: 3
  selector:          # required by apps/v1; must match the pod template labels
    matchLabels:
      app: galera-ss
  template:
    metadata:
      labels:
        app: galera-ss
    spec:
      containers:
      - name: galera
        image: jijeesh/mariadb:10.1
        ports:
        - name: mysql
          containerPort: 3306
        env:
        # kubectl create secret generic mysql-pass --from-file=password.txt
        - name: MYSQL_ROOT_PASSWORD
          value: myrootpassword
        - name: DISCOVERY_SERVICE
          value: etcd-client:2379
        - name: XTRABACKUP_PASSWORD
          value: password
        - name: CLUSTER_NAME
          value: mariadb_galera_ss
        - name: MYSQL_DATABASE
          value: mydatabase
        - name: MYSQL_USER
          value: myuser
        - name: MYSQL_PASSWORD
          value: myuserpassword
        readinessProbe:
          exec:
            command:
            - /healthcheck.sh
            - --readiness
          initialDelaySeconds: 120
          periodSeconds: 1
        livenessProbe:
          exec:
            command:
            - /healthcheck.sh
            - --liveness
          initialDelaySeconds: 120
          periodSeconds: 1
        volumeMounts:
        - name: mysql-datadir
          mountPath: /var/lib/mysql
  volumeClaimTemplates:
  - metadata:
      name: mysql-datadir
    spec:
      accessModes: [ "ReadWriteOnce" ]
      resources:
        requests:
          storage: 5Gi
```
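galera-ss is a headless Service (clusterIP: None) and is referenced by the StatefulSet's serviceName. Each StatefulSet pod therefore gets a stable DNS name of the form `<pod>.<service>.<namespace>.svc.<cluster-domain>`. This sketch builds those names. It assumes the default cluster domain `cluster.local`, which a cluster may override. The helper name is hypothetical.

```python
def statefulset_pod_dns(statefulset, service, namespace, replicas,
                        cluster_domain="cluster.local"):
    """Stable per-pod DNS names provided by a headless governing Service."""
    return [
        f"{statefulset}-{i}.{service}.{namespace}.svc.{cluster_domain}"
        for i in range(replicas)
    ]


if __name__ == "__main__":
    # Values from mariadb-ss.yml and the namespace created in 3.3.1.
    for host in statefulset_pod_dns("galera-ss", "galera-ss", "mariadb", 3):
        print(host)
```

From inside the mariadb namespace, the shorter form galera-ss-0.galera-ss also resolves, thanks to the pod's DNS search domains.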

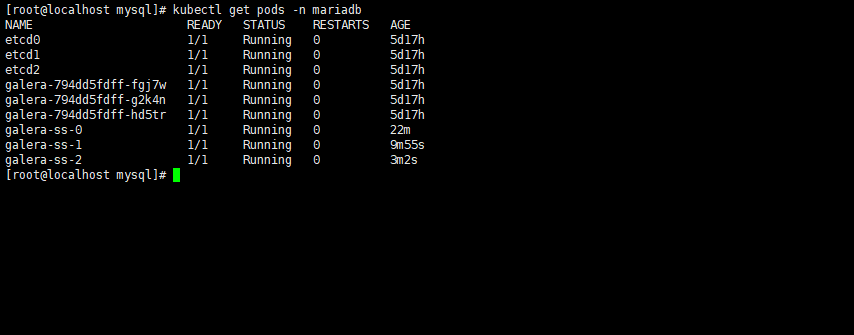

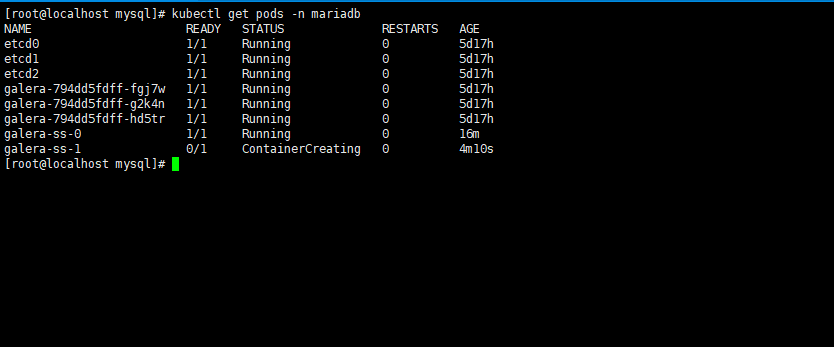

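Check the created pods. The StatefulSet creates them one at a time: the next pod is created only after the previous one has connected successfully.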
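This one-at-a-time behavior comes from the StatefulSet's default podManagementPolicy, OrderedReady: pod N+1 is created only after pod N is Running and Ready. The readiness probe has initialDelaySeconds: 120, so each galera-ss pod needs at least about two minutes before the next one starts. The following is a minimal simulation of that ordering. `becomes_ready` is a hypothetical stand-in for the readiness probe, not a Kubernetes API.

```python
def ordered_ready_rollout(name, replicas, becomes_ready):
    """Simulate StatefulSet OrderedReady pod creation.

    Pods are created in ordinal order; creation stops at the first pod
    for which `becomes_ready(pod)` is False, because the controller waits
    for that pod before creating higher ordinals. Returns the created pods.
    """
    created = []
    for i in range(replicas):
        pod = f"{name}-{i}"
        created.append(pod)
        if not becomes_ready(pod):
            break  # higher ordinals are not created while this pod is not Ready
    return created


if __name__ == "__main__":
    print(ordered_ready_rollout("galera-ss", 3, lambda pod: True))
    print(ordered_ready_rollout("galera-ss", 3, lambda pod: pod != "galera-ss-1"))
```

If galera-ss-1 never becomes Ready, galera-ss-2 is never created. When only some StatefulSet pods appear, check the readiness of the last one first.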

3.3.6 Check the pods

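After all of the above steps are complete, list the pods with kubectl get pods -n mariadb. The deployment succeeded if the following pods are present and Running:

- etcd0, etcd1 and etcd2
- three galera-… pods created by the Deployment
- galera-ss-0, galera-ss-1 and galera-ss-2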
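The final check can also be scripted. Below is a sketch that takes the output of `kubectl get pods -n mariadb -o json` and reports missing or non-Running pods. It assumes the pod names produced by the manifests in this section. The helper `check_pods` is hypothetical, and it assumes Deployment pods follow the usual `galera-<replicaset hash>-<suffix>` naming. It checks the pod phase only; a Running pod may still be failing its readiness probe.

```python
import json
import re

# Pod names fixed by the manifests in 3.3.2 and 3.3.5.
EXPECTED_FIXED = {"etcd0", "etcd1", "etcd2",
                  "galera-ss-0", "galera-ss-1", "galera-ss-2"}
# Deployment pods: galera-<replicaset hash>-<suffix>, excluding galera-ss-N.
DEPLOYMENT_POD = re.compile(r"galera-(?!ss-)[a-z0-9]+-[a-z0-9]+")
EXPECTED_DEPLOYMENT_PODS = 3


def check_pods(kubectl_json):
    """Return a list of problems found in `kubectl get pods -o json` output."""
    phases = {item["metadata"]["name"]: item["status"].get("phase")
              for item in json.loads(kubectl_json)["items"]}
    problems = [f"missing {n}" for n in sorted(EXPECTED_FIXED - set(phases))]
    deployment = [n for n in phases if DEPLOYMENT_POD.fullmatch(n)]
    if len(deployment) != EXPECTED_DEPLOYMENT_PODS:
        problems.append(f"expected {EXPECTED_DEPLOYMENT_PODS} Deployment pods, "
                        f"found {len(deployment)}")
    problems += [f"{n} is {p}" for n, p in sorted(phases.items()) if p != "Running"]
    return problems


if __name__ == "__main__":
    # Tiny hand-written sample; in practice pass the kubectl JSON output.
    sample = json.dumps({"items": [
        {"metadata": {"name": "etcd0"}, "status": {"phase": "Running"}},
        {"metadata": {"name": "galera-ss-0"}, "status": {"phase": "Pending"}},
    ]})
    for problem in check_pods(sample):
        print(problem)
```

An empty result means every expected pod exists and reports phase Running.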