import org.apache.spark.sql.SparkSession;

public class Application {

    private static String appName = "spark.demo";
    private static String master = "local[*]";

    public static void main(String[] args) {
        // Build a Hive-enabled SparkSession running in local mode
        SparkSession spark = SparkSession
                .builder()
                .appName("Java Spark Hive Example")
                .master("local[*]")
                // .config("spark.sql.warehouse.dir", "hdfs://172.29.108.184:8020/hive")
                .enableHiveSupport()
                .getOrCreate();

        spark.sql("show databases").show();
        spark.sql("show tables").show();
        spark.sql("desc tmp.parse_log").show();

        // JavaSparkContext sc = null;
        // try {
        //     // Initialize the JavaSparkContext
        //     SparkConf conf = new SparkConf()
        //             .setAppName(appName)
        //             .setMaster(master)
        //             .set("hive.metastore.uris", "thrift://172.29.108.183:9083")
        //             .set("spark.sql.sources.partitionOverwriteMode", "dynamic");
        //
        //     sc = new JavaSparkContext(conf);
        //
        //     List<SqlJob.LogStore> list = SqlJob.getList();
        //
        //     SQLContext sqlContext = new SQLContext(sc);
        //     // Register the data as a table
        //     Dataset<org.apache.spark.sql.Row> dataFrame = sqlContext.createDataFrame(list, SqlJob.LogStore.class);
        //
        //     sqlContext.table("parse_log").show();
        //     dataFrame.createOrReplaceTempView("tmp_table");
        //     System.out.println("Table registered OK");
        //     // Write to the database
        //     sqlContext.sql("INSERT OVERWRITE TABLE tmp.parse_log partition (day='20200331') select tableNames, sql from tmp_table");
        //     sql.toDF().write().saveAsTable("tmp.parse_log");
        //     System.out.println("Table write OK");
        //     sqlContext.sql("show tables").show();
        // } catch (Exception e) {
        //     e.printStackTrace();
        // } finally {
        //     if (sc != null) {
        //         sc.close();
        //     }
        // }
    }
}

...
<spark.version>2.4.0</spark.version>
<dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-core_2.11</artifactId>
    <version>${spark.version}</version>
</dependency>
<dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-sql_2.11</artifactId>
    <version>${spark.version}</version>
</dependency>
<dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-hive_2.11</artifactId>
    <version>${spark.version}</version>
</dependency>
...

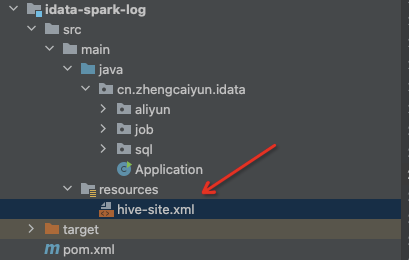

Remote Hive configuration: hive-site.xml