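1. Advanced Elasticsearch

2. Bulk operations

The Bulk API packs a series of document write operations (index, create, update, delete) into a single request, which cuts the number of network round trips. It does not run searches.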
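To make the request format concrete, here is a minimal sketch in plain Java (no Elasticsearch client) of how a _bulk body is laid out: one action line per operation, followed by a source line where the action needs one, with every line terminated by a newline. The class BulkBodySketch and its method names are hypothetical, and the sketch assumes index names and ids need no JSON escaping.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: assembles the newline-delimited JSON body of a _bulk request.
class BulkBodySketch {
    private final List<String> lines = new ArrayList<>();

    // delete needs only an action line
    BulkBodySketch delete(String index, String id) {
        lines.add(action("delete", index, id));
        return this;
    }

    // create needs an action line followed by the document source
    BulkBodySketch create(String index, String id, String sourceJson) {
        lines.add(action("create", index, id));
        lines.add(sourceJson);
        return this;
    }

    // update wraps the partial document in {"doc": ...}
    BulkBodySketch update(String index, String id, String partialDocJson) {
        lines.add(action("update", index, id));
        lines.add("{\"doc\":" + partialDocJson + "}");
        return this;
    }

    // Assumption: index and id contain no characters that need JSON escaping.
    private static String action(String op, String index, String id) {
        return "{\"" + op + "\":{\"_index\":\"" + index + "\",\"_id\":\"" + id + "\"}}";
    }

    // Every line, including the last one, ends with a newline.
    String build() {
        StringBuilder sb = new StringBuilder();
        for (String line : lines) {
            sb.append(line).append('\n');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        String body = new BulkBodySketch()
                .delete("person", "3")
                .create("person", "8", "{\"name\":\"hhh\",\"age\":88,\"address\":\"qqqq\"}")
                .update("person", "2", "{\"name\":\"qwedqd\"}")
                .build();
        System.out.print(body);
    }
}
```

Running main prints the same five lines that are sent to POST _bulk in the script in section 2.1.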

2.1. Script

    GET person/_search

    # Bulk operation: every action line and every source line goes on its own line
    POST _bulk
    {"delete":{"_index":"person","_id":"3"}}
    {"create":{"_index":"person","_id":"8"}}
    {"name":"hhh","age":88,"address":"qqqq"}
    {"update":{"_index":"person","_id":"2"}}
    {"doc":{"name":"qwedqd"}}

2.2. API

    // Bulk operation
    @Test
    public void testBulk() throws IOException {
        // A BulkRequest collects all operations into one request
        BulkRequest bulkRequest = new BulkRequest();

        // Delete document 1
        DeleteRequest deleteRequest = new DeleteRequest("person", "1");
        bulkRequest.add(deleteRequest);

        // Index document 6
        Map<String, Object> map = new HashMap<>();
        map.put("name", "测试");
        IndexRequest indexRequest = new IndexRequest("person").id("6").source(map);
        bulkRequest.add(indexRequest);

        // Update document 3
        Map<String, Object> map2 = new HashMap<>();
        map2.put("name", "测试3号");
        UpdateRequest updateRequest = new UpdateRequest("person", "3").doc(map2);
        bulkRequest.add(updateRequest);

        // Execute the bulk request
        BulkResponse response = restHighLevelClient.bulk(bulkRequest, RequestOptions.DEFAULT);
        RestStatus status = response.status();
        System.out.println(status);
    }

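3. Importing data

Import table data from a database into Elasticsearch:

- Create the index and add its mapping

- Create a POJO class mapped with MyBatis

- Query the database

- Import the rows with a Bulk request

3.1. Excluding a field from fastjson serialization

Annotate the member variable with @JSONField(serialize = false); fastjson then skips that field when converting the object to JSON.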

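4. match_all query

The match_all query returns all documents.

4.1. Script

    # By default Elasticsearch returns only 10 hits per search.
    # from is the offset of the first hit to return; size is the number of hits per page.
    GET person/_search
    {
      "query": {
        "match_all": {}
      },
      "from": 0,
      "size": 100
    }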
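Because from is an offset rather than a page number, a caller that thinks in pages has to convert. The following is a minimal sketch of that conversion; PaginationSketch and offsetFor are hypothetical names, and pages are assumed to be numbered from 1.

```java
// Hypothetical helper: converts a 1-based page number into the "from" offset.
class PaginationSketch {
    static int offsetFor(int page, int size) {
        if (page < 1 || size < 1) {
            throw new IllegalArgumentException("page and size must be >= 1");
        }
        // Page 1 starts at offset 0, page 2 at offset size, and so on.
        return (page - 1) * size;
    }

    public static void main(String[] args) {
        System.out.println(offsetFor(1, 100)); // 0
        System.out.println(offsetFor(3, 100)); // 200
    }
}
```

Note that from + size is capped by the index setting index.max_result_window, which defaults to 10,000, so deep pages need a different mechanism.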

4.2. API

    // match_all query with pagination
    @Test
    public void testMatchALL() throws IOException {
        // Search request bound to the index
        SearchRequest searchRequest = new SearchRequest("person");
        // Builder for the search body
        SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();
        // Query: match every document
        QueryBuilder query = QueryBuilders.matchAllQuery();
        sourceBuilder.query(query);
        // Pagination
        sourceBuilder.from(0);   // offset of the first hit
        sourceBuilder.size(100); // hits per page
        // Attach the search body to the request
        searchRequest.source(sourceBuilder);
        // Execute the search
        SearchResponse search = restHighLevelClient.search(searchRequest, RequestOptions.DEFAULT);
        // Hits object
        SearchHits searchHits = search.getHits();
        // Total number of hits
        long value = searchHits.getTotalHits().value;
        System.out.println("Total hits: " + value);
        // Array of hits
        SearchHit[] hits = searchHits.getHits();
        for (SearchHit hit : hits) {
            String sourceAsString = hit.getSourceAsString(); // document source as a JSON string
            System.out.println(sourceAsString);
        }
    }

5. term query

5.1. Script

    # term query: exact match on a term, typically used for categories
    GET person/_search
    {
      "query": {
        "term": {
          "name": {
            "value": "hhh"
          }
        }
      }
    }

5.2. API

    // term query
    @Test
    public void testTermQuery() throws IOException {
        SearchRequest searchRequest = new SearchRequest("person");
        SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();
        QueryBuilder query = QueryBuilders.termQuery("name", "hhh");
        sourceBuilder.query(query);
        searchRequest.source(sourceBuilder);
        SearchResponse search = restHighLevelClient.search(searchRequest, RequestOptions.DEFAULT);
        SearchHits searchHits = search.getHits();
        // Total number of hits
        long value = searchHits.getTotalHits().value;
        System.out.println("Total hits: " + value);
        // Array of hits
        SearchHit[] hits = searchHits.getHits();
        for (SearchHit hit : hits) {
            String sourceAsString = hit.getSourceAsString(); // document source as a JSON string
            System.out.println(sourceAsString);
        }
    }

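6. match query

- The query text is analyzed (split into terms)

- Each resulting term is then matched exactly against the indexed terms

- By default the per-term results are combined as a union (OR); set the operator to AND for an intersection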
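The difference between the two operators can be sketched in plain Java. This is only an in-memory illustration, not how Elasticsearch is implemented: the analyze method is a stand-in that lower-cases and splits on whitespace, and MatchOperatorSketch and its methods are hypothetical names.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;

// Hypothetical illustration of match semantics with OR and AND operators.
class MatchOperatorSketch {
    // Stand-in for a real analyzer: lower-case, then split on whitespace.
    static Set<String> analyze(String text) {
        return new HashSet<>(Arrays.asList(text.toLowerCase().trim().split("\\s+")));
    }

    static boolean matches(String fieldValue, String queryText, boolean requireAll) {
        Set<String> docTerms = analyze(fieldValue);
        Set<String> queryTerms = analyze(queryText);
        if (requireAll) {
            // AND: every query term must be present in the document
            return docTerms.containsAll(queryTerms);
        }
        // OR: one shared term is enough
        for (String term : queryTerms) {
            if (docTerms.contains(term)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        System.out.println(matches("Huawei phone", "huawei laptop", false)); // true
        System.out.println(matches("Huawei phone", "huawei laptop", true));  // false
    }
}
```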

6.1. Script

    # match query
    GET person/_search
    {
      "query": {
        "match": {
          "name": {
            "query": "hhh",
            "operator": "or"
          }
        }
      }
    }

6.2. API

    // match query
    @Test
    public void testMatchQuery() throws IOException {
        SearchRequest searchRequest = new SearchRequest("person");
        SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();
        MatchQueryBuilder query = QueryBuilders.matchQuery("name", "hhh");
        query.operator(Operator.AND); // intersection: every term must match
        sourceBuilder.query(query);
        searchRequest.source(sourceBuilder);
        SearchResponse search = restHighLevelClient.search(searchRequest, RequestOptions.DEFAULT);
        SearchHits searchHits = search.getHits();
        // Total number of hits
        long value = searchHits.getTotalHits().value;
        System.out.println("Total hits: " + value);
        // Array of hits
        SearchHit[] hits = searchHits.getHits();
        for (SearchHit hit : hits) {
            String sourceAsString = hit.getSourceAsString(); // document source as a JSON string
            System.out.println(sourceAsString);
        }
    }

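7. Pattern queries

- wildcard query: matches indexed terms against a pattern that may contain the wildcards ? (any single character) and * (zero or more characters). It is a term-level query, so the pattern itself is not analyzed

- regexp query: matches indexed terms against a regular expression

- prefix query: matches indexed terms that start with the given prefix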
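The wildcard rules above can be illustrated by translating a pattern into a java.util.regex expression and testing it against a single term. This is a sketch of the matching rules only, not of Lucene's implementation; WildcardSketch and its methods are hypothetical names.

```java
import java.util.regex.Pattern;

// Hypothetical illustration: ? matches one character, * matches zero or more.
class WildcardSketch {
    static Pattern toRegex(String wildcard) {
        StringBuilder sb = new StringBuilder();
        for (char c : wildcard.toCharArray()) {
            if (c == '?') {
                sb.append('.');
            } else if (c == '*') {
                sb.append(".*");
            } else {
                // Quote everything else so it is matched literally
                sb.append(Pattern.quote(String.valueOf(c)));
            }
        }
        return Pattern.compile(sb.toString(), Pattern.DOTALL);
    }

    // The whole term has to match the pattern, not just a part of it.
    static boolean matches(String term, String wildcard) {
        return toRegex(wildcard).matcher(term).matches();
    }

    public static void main(String[] args) {
        System.out.println(matches("hhh", "h*"));      // true
        System.out.println(matches("hhh", "h?"));      // false: h? matches exactly two characters
        System.out.println(matches("qwedqd", "qwe*")); // true: a trailing * behaves like a prefix query
    }
}
```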

7.1. Script

    # wildcard query (the pattern is not analyzed)
    GET person/_search
    {
      "query": {
        "wildcard": {
          "name": {
            "value": "h*"
          }
        }
      }
    }

    # regexp query (the pattern is not analyzed)
    GET person/_search
    {
      "query": {
        "regexp": {
          "name": "\\q+(.)*"
        }
      }
    }

    # prefix query
    GET person/_search
    {
      "query": {
        "prefix": {
          "name": {
            "value": "qwe"
          }
        }
      }
    }

7.2. API

    // wildcard query
    @Test
    public void testWildcardQuery() throws IOException {
        SearchRequest searchRequest = new SearchRequest("person");
        SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();
        WildcardQueryBuilder query = QueryBuilders.wildcardQuery("name", "h*");
        sourceBuilder.query(query);
        searchRequest.source(sourceBuilder);
        SearchResponse search = restHighLevelClient.search(searchRequest, RequestOptions.DEFAULT);
        SearchHits searchHits = search.getHits();
        // Total number of hits
        long value = searchHits.getTotalHits().value;
        System.out.println("Total hits: " + value);
        // Array of hits
        SearchHit[] hits = searchHits.getHits();
        for (SearchHit hit : hits) {
            String sourceAsString = hit.getSourceAsString(); // document source as a JSON string
            System.out.println(sourceAsString);
        }
    }

    // regexp query
    @Test
    public void testRegexpQuery() throws IOException {
        SearchRequest searchRequest = new SearchRequest("person");
        SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();
        RegexpQueryBuilder query = QueryBuilders.regexpQuery("name", "\\h*");
        sourceBuilder.query(query);
        searchRequest.source(sourceBuilder);
        SearchResponse search = restHighLevelClient.search(searchRequest, RequestOptions.DEFAULT);
        SearchHits searchHits = search.getHits();
        // Total number of hits
        long value = searchHits.getTotalHits().value;
        System.out.println("Total hits: " + value);
        // Array of hits
        SearchHit[] hits = searchHits.getHits();
        for (SearchHit hit : hits) {
            String sourceAsString = hit.getSourceAsString(); // document source as a JSON string
            System.out.println(sourceAsString);
        }
    }

    // prefix query
    @Test
    public void testPrefixQuery() throws IOException {
        SearchRequest searchRequest = new SearchRequest("person");
        SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();
        PrefixQueryBuilder query = QueryBuilders.prefixQuery("name", "qwe");
        sourceBuilder.query(query);
        searchRequest.source(sourceBuilder);
        SearchResponse search = restHighLevelClient.search(searchRequest, RequestOptions.DEFAULT);
        SearchHits searchHits = search.getHits();
        // Total number of hits
        long value = searchHits.getTotalHits().value;
        System.out.println("Total hits: " + value);
        // Array of hits
        SearchHit[] hits = searchHits.getHits();
        for (SearchHit hit : hits) {
            String sourceAsString = hit.getSourceAsString(); // document source as a JSON string
            System.out.println(sourceAsString);
        }
    }

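8. Range query

The range query finds documents whose value for the given field falls within the given range.

8.1. Script

    # range query
    GET person/_search
    {
      "query": {
        "range": {
          "age": {
            "gte": 10,
            "lte": 30
          }
        }
      },
      "sort": [
        {"age": {"order": "desc"}}
      ]
    }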

8.2. API

    // range query
    @Test
    public void testRangeQuery() throws IOException {
        SearchRequest searchRequest = new SearchRequest("person");
        SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();
        RangeQueryBuilder query = QueryBuilders.rangeQuery("age"); // field to filter on
        query.gte(10); // greater than or equal to
        query.lte(30); // less than or equal to
        sourceBuilder.query(query);
        sourceBuilder.sort("age", SortOrder.ASC); // sort order
        searchRequest.source(sourceBuilder);
        SearchResponse search = restHighLevelClient.search(searchRequest, RequestOptions.DEFAULT);
        SearchHits searchHits = search.getHits();
        // Total number of hits
        long value = searchHits.getTotalHits().value;
        System.out.println("Total hits: " + value);
        // Array of hits
        SearchHit[] hits = searchHits.getHits();
        for (SearchHit hit : hits) {
            String sourceAsString = hit.getSourceAsString(); // document source as a JSON string
            System.out.println(sourceAsString);
        }
    }

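9. query_string query

- The query text is analyzed (split into terms)

- Each resulting term is then matched exactly against the indexed terms

- By default the per-term results are combined as a union (OR)

- Several fields can be searched at once

9.1. Script

    # query_string query
    GET person/_search
    {
      "query": {
        "query_string": {
          "fields": ["name", "address"],
          "query": "华为 OR 手机"
        }
      }
    }

    # simple_query_string is a simplified version of query_string. It does not support the
    # boolean keywords AND, OR and NOT; they are treated as ordinary words.
    # Use default_operator to choose how the terms are combined; the default is OR.
    GET person/_search
    {
      "query": {
        "simple_query_string": {
          "fields": ["name", "address"],
          "query": "华为 OR 手机"
        }
      }
    }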

9.2. API

    // query_string query
    @Test
    public void testQueryStringQuery() throws IOException {
        SearchRequest searchRequest = new SearchRequest("person");
        SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();
        QueryStringQueryBuilder query = QueryBuilders.queryStringQuery("华为")
                .field("name")
                .field("address")
                .defaultOperator(Operator.OR);
        sourceBuilder.query(query);
        searchRequest.source(sourceBuilder);
        SearchResponse search = restHighLevelClient.search(searchRequest, RequestOptions.DEFAULT);
        SearchHits searchHits = search.getHits();
        // Total number of hits
        long value = searchHits.getTotalHits().value;
        System.out.println("Total hits: " + value);
        // Array of hits
        SearchHit[] hits = searchHits.getHits();
        for (SearchHit hit : hits) {
            String sourceAsString = hit.getSourceAsString(); // document source as a JSON string
            System.out.println(sourceAsString);
        }
    }

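10. Bool query

The bool query combines several query clauses into one query.

- must (AND): the clause must match

- must_not (NOT): the clause must not match

- should (OR): the clause may match

- filter: the clause must match. It is faster than must because no score is computed for it (with scoring clauses, the more clauses a document satisfies, the higher its score)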
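The four clause types can be modelled with plain Java predicates. The sketch below is an in-memory illustration under simplifying assumptions, not the Elasticsearch API: BoolQuerySketch is a hypothetical class, and its score method is a crude stand-in that just counts matching must and should clauses. It also reflects one detail of the default behaviour: should clauses are optional when a must or filter clause is present, and otherwise at least one of them has to match.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

// Hypothetical in-memory model of bool query semantics.
class BoolQuerySketch<T> {
    private final List<Predicate<T>> must = new ArrayList<>();
    private final List<Predicate<T>> filter = new ArrayList<>();
    private final List<Predicate<T>> should = new ArrayList<>();
    private final List<Predicate<T>> mustNot = new ArrayList<>();

    BoolQuerySketch<T> must(Predicate<T> p) { must.add(p); return this; }
    BoolQuerySketch<T> filter(Predicate<T> p) { filter.add(p); return this; }
    BoolQuerySketch<T> should(Predicate<T> p) { should.add(p); return this; }
    BoolQuerySketch<T> mustNot(Predicate<T> p) { mustNot.add(p); return this; }

    boolean matches(T doc) {
        for (Predicate<T> p : must) if (!p.test(doc)) return false;
        for (Predicate<T> p : filter) if (!p.test(doc)) return false;
        for (Predicate<T> p : mustNot) if (p.test(doc)) return false;
        // With no must or filter clause, at least one should clause has to match
        if (must.isEmpty() && filter.isEmpty() && !should.isEmpty()) {
            return should.stream().anyMatch(p -> p.test(doc));
        }
        return true;
    }

    // Crude stand-in for relevance: filter and must_not never add to the score.
    int score(T doc) {
        if (!matches(doc)) return 0;
        int score = 0;
        for (Predicate<T> p : must) if (p.test(doc)) score++;
        for (Predicate<T> p : should) if (p.test(doc)) score++;
        return score;
    }

    public static void main(String[] args) {
        BoolQuerySketch<String> query = new BoolQuerySketch<String>()
                .must(doc -> doc.contains("张三"))
                .filter(doc -> doc.contains("5G"));
        System.out.println(query.matches("张三 5G")); // true
        System.out.println(query.score("张三 5G"));   // 1: only the must clause scores
    }
}
```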

10.1. Script

    # bool query
    GET person/_search
    {
      "query": {
        "bool": {
          "must": [
            {"term": {"name": {"value": "张三"}}}
          ]
        }
      }
    }

    # filter
    GET person/_search
    {
      "query": {
        "bool": {
          "filter": [
            {"term": {"name": {"value": "张三"}}}
          ]
        }
      }
    }

    # Combining several conditions
    GET person/_search
    {
      "query": {
        "bool": {
          "must": [
            {"term": {"name": {"value": "张三"}}}
          ],
          "filter": [
            {"term": {"address": "5G"}}
          ]
        }
      }
    }

10.2. API

    // bool query
    @Test
    public void testBoolQueryQuery() throws IOException {
        SearchRequest searchRequest = new SearchRequest("person");
        SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();
        // Build the bool query
        BoolQueryBuilder query = QueryBuilders.boolQuery();
        // Build the individual clauses
        TermQueryBuilder termQuery = QueryBuilders.termQuery("name", "张三"); // name is exactly 张三
        query.must(termQuery);
        MatchQueryBuilder matchQuery = QueryBuilders.matchQuery("address", "5G"); // address contains 5G
        query.must(matchQuery);
        sourceBuilder.query(query);
        searchRequest.source(sourceBuilder);
        SearchResponse search = restHighLevelClient.search(searchRequest, RequestOptions.DEFAULT);
        SearchHits searchHits = search.getHits();
        // Total number of hits
        long value = searchHits.getTotalHits().value;
        System.out.println("Total hits: " + value);
        // Array of hits
        SearchHit[] hits = searchHits.getHits();
        for (SearchHit hit : hits) {
            String sourceAsString = hit.getSourceAsString(); // document source as a JSON string
            System.out.println(sourceAsString);
        }
    }

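11. Aggregations

- Metric aggregations: the counterpart of MySQL aggregate functions such as max, min, avg and sum

- Bucket aggregations: the counterpart of MySQL GROUP BY. Do not bucket on a text field: the request fails unless fielddata is enabled on that field. Use a keyword or numeric field instead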
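For readers who think in Java collections rather than SQL, the two kinds of aggregation correspond roughly to a reduction and a groupingBy. This is only an analogy over an in-memory array; AggregationSketch and its method names are hypothetical.

```java
import java.util.Arrays;
import java.util.Map;
import java.util.OptionalInt;
import java.util.TreeMap;
import java.util.function.Function;
import java.util.stream.Collectors;

// Hypothetical analogy: metric aggregation = reduce, bucket aggregation = group and count.
class AggregationSketch {
    // Metric aggregation: one number computed over all matching documents
    static OptionalInt maxAge(int[] ages) {
        return Arrays.stream(ages).max();
    }

    // Bucket aggregation: one bucket per distinct value, each with a document count
    static Map<Integer, Long> bucketByAge(int[] ages) {
        return Arrays.stream(ages)
                .boxed()
                .collect(Collectors.groupingBy(Function.identity(), TreeMap::new, Collectors.counting()));
    }

    public static void main(String[] args) {
        int[] ages = {20, 30, 20, 88};
        System.out.println(maxAge(ages).getAsInt()); // 88
        System.out.println(bucketByAge(ages));       // {20=2, 30=1, 88=1}
    }
}
```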

11.1. Script

    # Aggregations
    # Metric aggregation (aggregate function)
    GET person/_search
    {
      "query": {
        "match": {"name": "张三"}
      },
      "aggs": {
        "NAME": {
          "max": {"field": "age"}
        }
      }
    }

    # Bucket aggregation (grouping), declared under aggs
    GET person/_search
    {
      "query": {
        "match": {"name": "张三"}
      },
      "aggs": {
        "zdymc": {
          "terms": {"field": "age", "size": 10}
        }
      }
    }

11.2. API

    // Bucket aggregation (grouping)
    @Test
    public void testAggsQuery() throws IOException {
        SearchRequest searchRequest = new SearchRequest("person");
        SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();
        MatchQueryBuilder query = QueryBuilders.matchQuery("name", "张三");
        sourceBuilder.query(query);
        // terms: name of the aggregation in the response
        // field: field to bucket on
        // size:  maximum number of buckets returned
        TermsAggregationBuilder aggs = AggregationBuilders.terms("自定义名称").field("age").size(10);
        sourceBuilder.aggregation(aggs);
        searchRequest.source(sourceBuilder);
        SearchResponse search = restHighLevelClient.search(searchRequest, RequestOptions.DEFAULT);
        SearchHits searchHits = search.getHits();
        // Total number of hits
        long value = searchHits.getTotalHits().value;
        System.out.println("Total hits: " + value);
        // Array of hits
        SearchHit[] hits = searchHits.getHits();
        for (SearchHit hit : hits) {
            String sourceAsString = hit.getSourceAsString(); // document source as a JSON string
            System.out.println(sourceAsString);
        }
        // Read the aggregation result
        Aggregations aggregations = search.getAggregations();
        Map<String, Aggregation> aggregationMap = aggregations.asMap(); // results keyed by aggregation name
        Terms zdymc = (Terms) aggregationMap.get("自定义名称");
        List<? extends Terms.Bucket> buckets = zdymc.getBuckets();
        List<Object> list = new ArrayList<>();
        for (Terms.Bucket bucket : buckets) {
            Object key = bucket.getKey();
            list.add(key);
        }
        for (Object o : list) {
            System.out.println(o);
        }
    }

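12. Highlighting

- Field to highlight

- Prefix tag

- Suffix tag. If no prefix and suffix are set, matches are wrapped in em tags by default

12.1. Script

    # Highlight query
    GET person/_search
    {
      "query": {
        "match": {"address": "手机"}
      },
      "highlight": {
        "fields": {
          "address": {
            "pre_tags": "<font color='red'>",
            "post_tags": "</font>"
          }
        }
      }
    }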

12.2. API

    // Highlight query
    @Test
    public void testHighlightQuery() throws IOException {
        SearchRequest searchRequest = new SearchRequest("person");
        SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();
        MatchQueryBuilder query = QueryBuilders.matchQuery("address", "手机");
        sourceBuilder.query(query);
        HighlightBuilder highlightBuilder = new HighlightBuilder(); // highlight settings
        highlightBuilder.field("address");               // field
        highlightBuilder.preTags("<font color='red'>");  // prefix tag
        highlightBuilder.postTags("</font>");            // suffix tag
        sourceBuilder.highlighter(highlightBuilder);
        searchRequest.source(sourceBuilder);
        SearchResponse search = restHighLevelClient.search(searchRequest, RequestOptions.DEFAULT);
        SearchHits searchHits = search.getHits();
        // Total number of hits
        long value = searchHits.getTotalHits().value;
        System.out.println("Total hits: " + value);
        // Array of hits
        SearchHit[] hits = searchHits.getHits();
        for (SearchHit hit : hits) {
            String sourceAsString = hit.getSourceAsString(); // document source as a JSON string
            // TODO: convert the JSON string into an object
            Map<String, HighlightField> highlightFields = hit.getHighlightFields(); // highlighted fields of this hit
            HighlightField address = highlightFields.get("address");
            Text[] fragments = address.fragments(); // highlighted fragments of the field
            // TODO: replace the field value in the object with the highlighted fragments
            System.out.println(sourceAsString);
            System.out.println(Arrays.toString(fragments));
        }
    }
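The two TODO steps in the method above can be sketched without the client library. The merge method below is a hypothetical helper: it assumes the document source is available as a Map (with the high-level client, hit.getSourceAsMap() provides one) and that the highlighted fragments of each field have already been converted to strings. Joining the fragments is reasonable for short fields where a single fragment covers the whole value; for long fields the fragments are only excerpts.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical helper: overlays highlighted fragments onto a copy of the document source.
class HighlightMergeSketch {
    static Map<String, Object> merge(Map<String, Object> source, Map<String, String[]> highlights) {
        Map<String, Object> result = new HashMap<>(source); // leave the original source untouched
        for (Map.Entry<String, String[]> entry : highlights.entrySet()) {
            String[] fragments = entry.getValue();
            if (fragments != null && fragments.length > 0) {
                // Replace the plain value with the highlighted text
                result.put(entry.getKey(), String.join("", fragments));
            }
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, Object> source = new HashMap<>();
        source.put("name", "hhh");
        source.put("address", "华为手机");
        Map<String, String[]> highlights = new HashMap<>();
        highlights.put("address", new String[] {"华为<font color='red'>手机</font>"});
        System.out.println(merge(source, highlights).get("address"));
    }
}
```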

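13. Reindexing and index aliases

Once an index has been created, fields can be added to it but existing fields cannot be changed, because changing a field would require rebuilding the inverted index and would disturb internal cache structures.

When a field has to change, create a new index and copy the data of the old index into it.

    # Reindexing
    # Create the index; index names must be all lowercase
    PUT stdent_index_v1
    {"mappings": {"properties": {"birthday": {"type": "date"}}}}

    GET stdent_index_v1

    PUT stdent_index_v1/_doc/1
    {"birthday": "1999-01-01"}

    GET stdent_index_v1/_search

    # birthday now has to be stored as a string
    # 1. Create the new index v2
    PUT stdent_index_v2
    {"mappings": {"properties": {"birthday": {"type": "text"}}}}

    # 2. Copy the data of the old index into the new one with _reindex
    POST _reindex
    {"source": {"index": "stdent_index_v1"}, "dest": {"index": "stdent_index_v2"}}

    GET stdent_index_v2/_search

    PUT stdent_index_v2/_doc/2
    {"birthday": "199年124日"}

    # Index alias: the old index is no longer used, but the application code still refers to
    # its name and would stop working, so the new index needs an alias
    # 1. Delete the old index
    DELETE stdent_index_v1
    # 2. Give the new index an alias equal to the old index name
    POST stdent_index_v2/_alias/stdent_index_v1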

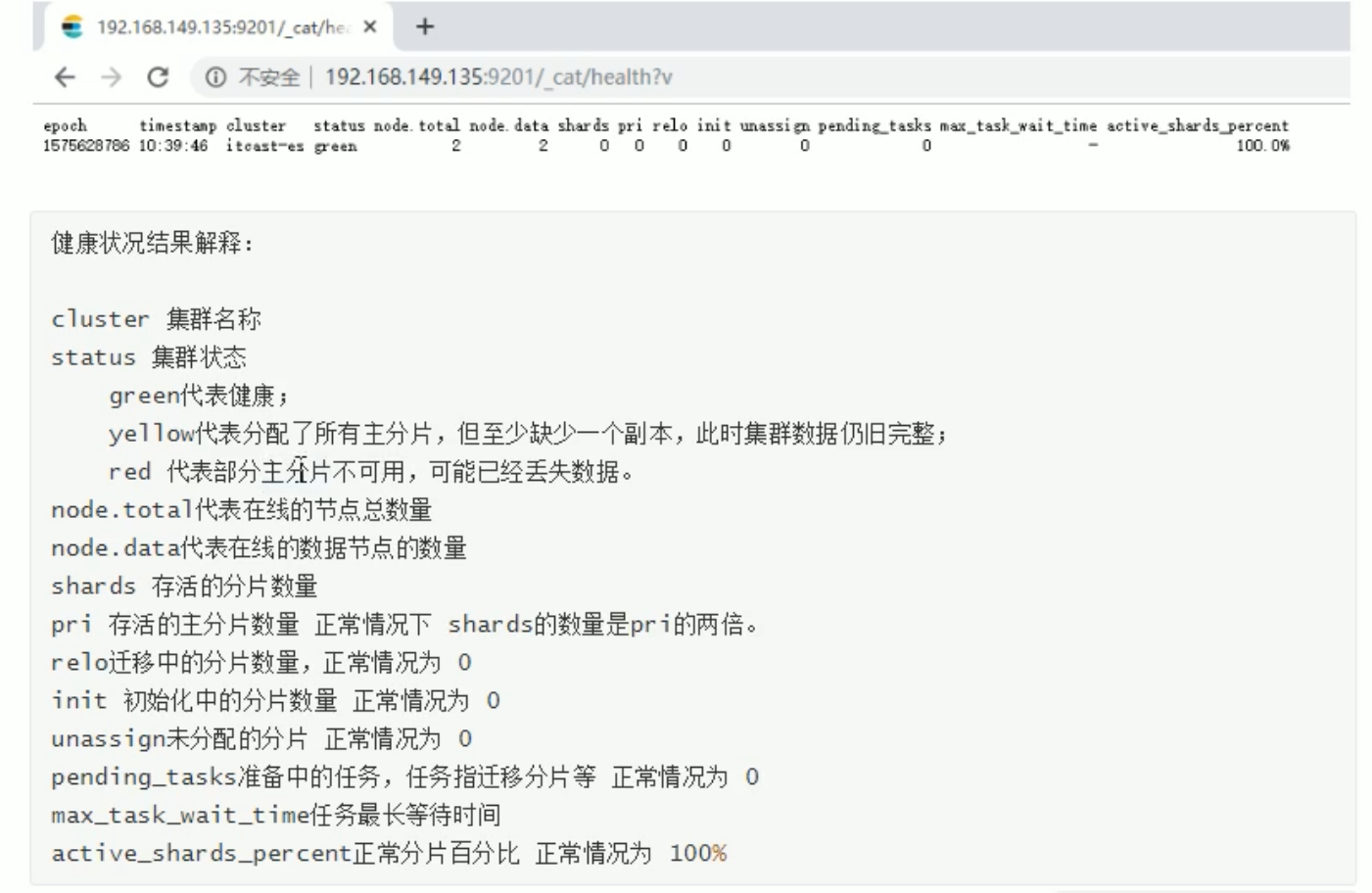

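14. Elasticsearch clusters

Elasticsearch is distributed by design, and most of the distributed setup is handled automatically.

- Cluster: a group of nodes that share the same cluster name

- Node: a single Elasticsearch instance in the cluster

- Index: where Elasticsearch stores data

- Shard: an index can be split into several parts for storage, called shards. In a cluster, the different shards of one index can be placed on different nodes

- Primary shard: a shard that holds the original data, as opposed to a replica shard

- Replica shard: each primary shard can have one or more replicas that hold the same data as the primary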

14.1. Setup

- Prepare three nodes. This is a pseudo-cluster on a single machine, so the nodes are told apart by port number

    cp -r elasticsearch-7.15.0 elasticsearch-7.15.0-1
    cp -r elasticsearch-7.15.0 elasticsearch-7.15.0-2
    cp -r elasticsearch-7.15.0 elasticsearch-7.15.0-3

- Create the logs and data directories and give ownership to the iekr user

```sh
cd /opt
mkdir logs
mkdir data

# Grant ownership
chown -R iekr:iekr ./logs
chown -R iekr:iekr ./data

chown -R iekr:iekr ./elasticsearch-7.15.0-1
chown -R iekr:iekr ./elasticsearch-7.15.0-2
chown -R iekr:iekr ./elasticsearch-7.15.0-3
```

- Edit the configuration file of each of the three nodes

```sh
vim /opt/elasticsearch-7.15.0-1/config/elasticsearch.yml
```

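    # Cluster name: must be identical on every node
    cluster.name: itcast-es
    # Node name: must be unique per node
    node.name: iekr-1
    # Whether the node is master-eligible
    node.master: true
    # Whether the node stores data
    node.data: true
    # Maximum number of nodes that may share the data path on this machine
    node.max_local_storage_nodes: 3
    # Bind address
    network.host: 0.0.0.0
    # HTTP port
    http.port: 9201
    # Port for communication between nodes
    transport.tcp.port: 9700
    # Node discovery (ES 7.x and later)
    discovery.seed_hosts: ["localhost:9700","localhost:9800","localhost:9900"]
    # Needed to elect a master when a new cluster is bootstrapped
    cluster.initial_master_nodes: ["iekr-1","iekr-2","iekr-3"]
    # Data and log paths
    path.data: /opt/data
    path.logs: /opt/logs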

vim /opt/elasticsearch-7.15.0-2/config/elasticsearch.yml

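    # Cluster name: must be identical on every node
    cluster.name: itcast-es
    # Node name: must be unique per node
    node.name: iekr-2
    # Whether the node is master-eligible
    node.master: true
    # Whether the node stores data
    node.data: true
    # Maximum number of nodes that may share the data path on this machine
    node.max_local_storage_nodes: 3
    # Bind address
    network.host: 0.0.0.0
    # HTTP port
    http.port: 9202
    # Port for communication between nodes
    transport.tcp.port: 9800
    # Node discovery (ES 7.x and later)
    discovery.seed_hosts: ["localhost:9700","localhost:9800","localhost:9900"]
    # Needed to elect a master when a new cluster is bootstrapped
    cluster.initial_master_nodes: ["iekr-1","iekr-2","iekr-3"]
    # Data and log paths
    path.data: /opt/data
    path.logs: /opt/logs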

vim /opt/elasticsearch-7.15.0-3/config/elasticsearch.yml

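    # Cluster name: must be identical on every node
    cluster.name: itcast-es
    # Node name: must be unique per node
    node.name: iekr-3
    # Whether the node is master-eligible
    node.master: true
    # Whether the node stores data
    node.data: true
    # Maximum number of nodes that may share the data path on this machine
    node.max_local_storage_nodes: 3
    # Bind address
    network.host: 0.0.0.0
    # HTTP port
    http.port: 9203
    # Port for communication between nodes
    transport.tcp.port: 9900
    # Node discovery (ES 7.x and later)
    discovery.seed_hosts: ["localhost:9700","localhost:9800","localhost:9900"]
    # Needed to elect a master when a new cluster is bootstrapped
    cluster.initial_master_nodes: ["iekr-1","iekr-2","iekr-3"]
    # Data and log paths
    path.data: /opt/data
    path.logs: /opt/logs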

- Elasticsearch uses a 1 GB JVM heap by default; lower it in the JVM options file

    vim /opt/elasticsearch-7.15.0-1/config/jvm.options

    -Xms256m
    -Xmx256m

- Start each node separately

    systemctl stop firewalld
    su iekr
    cd /opt/elasticsearch-7.15.0-1/bin/
    ./elasticsearch

14.2. Configuring and managing the cluster with Kibana

- Copy Kibana

    cd /opt
    cp -r kibana-7.15.0-linux-x86_64 kibana-7.15.0-linux-x86_64-cluster

- Edit the Kibana configuration for the cluster

    vim /opt/kibana-7.15.0-linux-x86_64-cluster/config/kibana.yml

    # Change the following setting
    elasticsearch.hosts: ["http://localhost:9201","http://localhost:9202","http://localhost:9203"]

- Start Kibana

    cd /opt/kibana-7.15.0-linux-x86_64-cluster/bin/
    ./kibana --allow-root

- Open http://192.168.130.124:5601/app/monitoring to view the cluster's node information

14.3. Accessing the cluster from the Java API

- application.yml

    elasticsearch:
      host: 192.168.130.124
      port: 9201
      host2: 192.168.130.124
      port2: 9202
      host3: 192.168.130.124
      port3: 9203

- Config class, registered in the IoC container

```java
package com.itheima.elasticsearchdemo.config;

import org.apache.http.HttpHost;
import org.elasticsearch.client.RestClient;
import org.elasticsearch.client.RestHighLevelClient;
import org.springframework.boot.context.properties.ConfigurationProperties;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@Configuration
@ConfigurationProperties(prefix = "elasticsearch")
public class ElasticSearchConfig {

    private String host;
    private int port;
    private String host2;
    private int port2;
    private String host3;
    private int port3;

    public String getHost() { return host; }
    public void setHost(String host) { this.host = host; }
    public int getPort() { return port; }
    public void setPort(int port) { this.port = port; }

    // Property binding goes through setters, so host2/port2 and host3/port3 need them too
    public void setHost2(String host2) { this.host2 = host2; }
    public void setPort2(int port2) { this.port2 = port2; }
    public void setHost3(String host3) { this.host3 = host3; }
    public void setPort3(int port3) { this.port3 = port3; }

    @Bean
    public RestHighLevelClient client() {
        // One HttpHost per node of the cluster
        return new RestHighLevelClient(RestClient.builder(
                new HttpHost(host, port, "http"),
                new HttpHost(host2, port2, "http"),
                new HttpHost(host3, port3, "http")));
    }
}
```

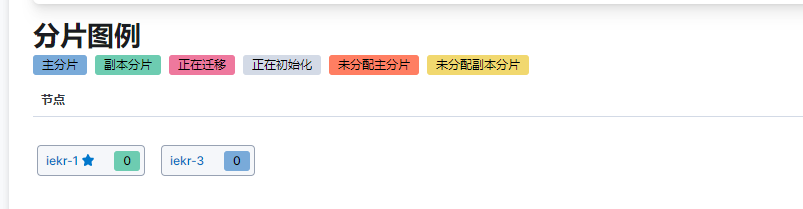

14.4. Cluster internals

14.4.1. Shard configuration

- If no shard settings are given when an index is created, the defaults are 1 primary shard and 1 replica

- Shards can be configured through settings when the index is created

```
PUT stdent_index_v3
{
  "mappings": {
    "properties": {
      "birthday": {"type": "date"}
    }
  },
  "settings": {
    "number_of_shards": 3,
    "number_of_replicas": 1
  }
}
```

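Shards and rebalancing: Elasticsearch spreads primary and replica shards across different nodes, so the failure of one node does not interrupt access. The shards of the failed node are reallocated to the nodes that are still online, and shards are moved back once the node rejoins.

Each query runs single-threaded within a shard, but several shards can be processed in parallel.

The number of primary shards cannot be changed once the index has been created. To change it, migrate the data to a new index with a reindex and an index alias.

Recommended shard configuration

- Recommended size per shard: 10-30 GB

- Recommended number of shards: 1 to 3 times the number of nodes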
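The two rules of thumb above can be combined into a small calculation. The sketch below is only arithmetic over those recommendations; ShardPlanSketch and its method names are hypothetical, and the sample figures (100 GB of data, 3 nodes) are made up for illustration.

```java
// Hypothetical helper that applies the two sizing recommendations.
class ShardPlanSketch {
    // Smallest shard count that keeps every shard at or below targetShardGb (ceiling division)
    static int shardsForSize(long totalGb, long targetShardGb) {
        if (totalGb < 0 || targetShardGb < 1) {
            throw new IllegalArgumentException("sizes must be positive");
        }
        return (int) Math.max(1, (totalGb + targetShardGb - 1) / targetShardGb);
    }

    // Checks the "1 to 3 times the number of nodes" recommendation
    static boolean withinNodeRule(int shards, int nodes) {
        return shards >= nodes && shards <= 3 * nodes;
    }

    public static void main(String[] args) {
        int shards = shardsForSize(100, 30);           // 100 GB of data, at most 30 GB per shard
        System.out.println(shards);                    // 4
        System.out.println(withinNodeRule(shards, 3)); // true: 4 lies between 3 and 9
    }
}
```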

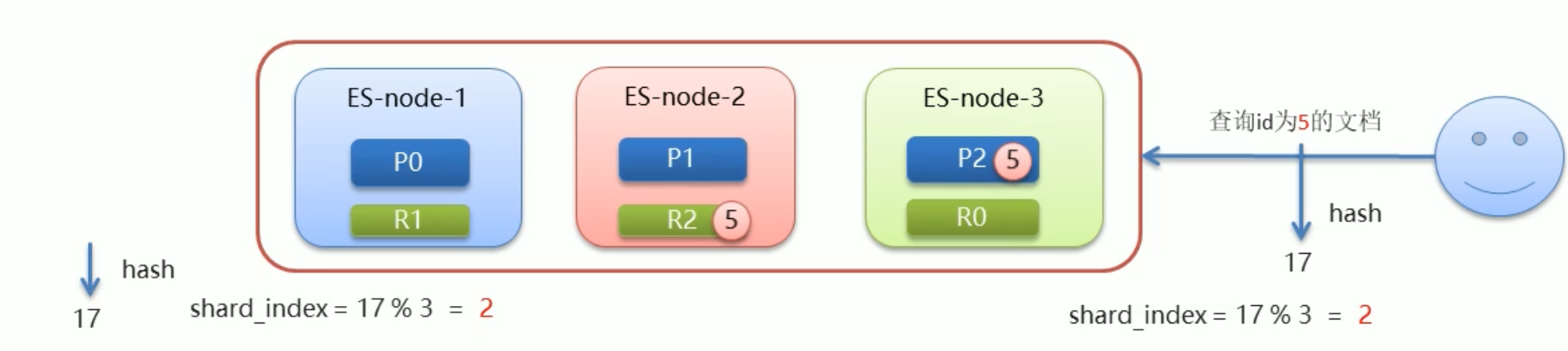

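14.4.2. Routing

- A document is stored in one specific shard; the process by which Elasticsearch computes that shard's number is called routing

- Routing formula: shard_index = hash(id) % number_of_shards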
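The formula can be tried out in a few lines of Java. This is a sketch only: it uses String.hashCode as a stand-in hash, whereas Elasticsearch applies its own hash function to the routing value (the document id by default), so the shard numbers below will not match a real cluster. RoutingSketch and shardFor are hypothetical names.

```java
// Hypothetical illustration of shard_index = hash(id) % number_of_shards.
class RoutingSketch {
    static int shardFor(String id, int numberOfShards) {
        // floorMod keeps the result in [0, numberOfShards) even for negative hash codes
        return Math.floorMod(id.hashCode(), numberOfShards);
    }

    public static void main(String[] args) {
        System.out.println(shardFor("3", 3)); // 0
        System.out.println(shardFor("3", 4)); // 3
    }
}
```

The same id lands on a different shard as soon as the shard count changes, which is why the number of primary shards is fixed when the index is created.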

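14.5. Split brain

- A healthy Elasticsearch cluster has exactly one master node. The master manages the whole cluster: it creates and deletes indices, tracks which nodes belong to the cluster, and decides which shards are allocated to which nodes

- All nodes in the cluster agree on the same master node

- Split brain occurs when nodes disagree about which node is the master. The cluster then ends up with more than one master and splits apart, which leaves it in an abnormal state

14.5.1. Causes of split brain

Network: network latency, usually seen in clusters that span a public network

Node load: when the master node is both master and data node, heavy data traffic can make the master stop responding (it appears dead)

    # Whether the node is master-eligible
    node.master: true
    # Whether the node stores data
    node.data: true

JVM garbage collection

- When the master node's JVM heap is small, a large garbage collection can make the Elasticsearch process unresponsive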

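14.5.2. Avoiding split brain

- Network: increase the discovery.zen.ping_timeout setting (default 3s). This is a legacy Zen discovery setting from 6.x and earlier; check whether it still has an effect on the version in use

- Node load: separate the roles. A master-eligible node should not store data, and a data node should not be master-eligible

- JVM: in jvm.options, set the minimum and maximum heap size to half of the server's memory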

15. Scaling out the cluster

- Edit the configuration file on every node in the cluster to add the new node

- Start all nodes