- 1. K8s Environment Installation

- 2. Metrics-Server Installation

- 3. KubeSphere Installation

- 4. Appendix (KubeSphere Installation YAML)

1. K8s Environment Installation

1.1 Docker Installation

1.2 K8s Installation

1.3 NFS File System Installation

(Steps omitted.)

- 配置默认存储

```yaml

vi storage.yaml

kubectl apply -f xxx.yaml

创建了一个存储类

apiVersion: storage.k8s.io/v1 kind: StorageClass metadata: name: nfs-storage annotations: storageclass.kubernetes.io/is-default-class: “true” provisioner: k8s-sigs.io/nfs-subdir-external-provisioner parameters: archiveOnDelete: “true” ## 删除pv的时候,pv的内容是否要备份

apiVersion: apps/v1 kind: Deployment metadata: name: nfs-client-provisioner labels: app: nfs-client-provisioner

replace with namespace where provisioner is deployed

namespace: default spec: replicas: 1 strategy: type: Recreate selector: matchLabels: app: nfs-client-provisioner template: metadata: labels: app: nfs-client-provisioner spec: serviceAccountName: nfs-client-provisioner containers:

- name: nfs-client-provisionerimage: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/nfs-subdir-external-provisioner:v4.0.2# resources:# limits:# cpu: 10m# requests:# cpu: 10mvolumeMounts:- name: nfs-client-rootmountPath: /persistentvolumesenv:- name: PROVISIONER_NAMEvalue: k8s-sigs.io/nfs-subdir-external-provisioner- name: NFS_SERVERvalue: 172.31.0.4 ## 指定自己nfs服务器地址- name: NFS_PATHvalue: /nfs/data ## nfs服务器共享的目录volumes:- name: nfs-client-rootnfs:server: 172.31.0.4path: /nfs/data

apiVersion: v1 kind: ServiceAccount metadata: name: nfs-client-provisioner

replace with namespace where provisioner is deployed

namespace: default

kind: ClusterRole apiVersion: rbac.authorization.k8s.io/v1 metadata: name: nfs-client-provisioner-runner rules:

- apiGroups: [“”] resources: [“nodes”] verbs: [“get”, “list”, “watch”]

- apiGroups: [“”] resources: [“persistentvolumes”] verbs: [“get”, “list”, “watch”, “create”, “delete”]

- apiGroups: [“”] resources: [“persistentvolumeclaims”] verbs: [“get”, “list”, “watch”, “update”]

- apiGroups: [“storage.k8s.io”] resources: [“storageclasses”] verbs: [“get”, “list”, “watch”]

- apiGroups: [“”] resources: [“events”] verbs: [“create”, “update”, “patch”]

kind: ClusterRoleBinding apiVersion: rbac.authorization.k8s.io/v1 metadata: name: run-nfs-client-provisioner subjects:

- kind: ServiceAccount

name: nfs-client-provisioner

replace with namespace where provisioner is deployed

namespace: default roleRef: kind: ClusterRole name: nfs-client-provisioner-runner apiGroup: rbac.authorization.k8s.io

kind: Role apiVersion: rbac.authorization.k8s.io/v1 metadata: name: leader-locking-nfs-client-provisioner

replace with namespace where provisioner is deployed

namespace: default rules:

- apiGroups: [“”] resources: [“endpoints”] verbs: [“get”, “list”, “watch”, “create”, “update”, “patch”]

kind: RoleBinding apiVersion: rbac.authorization.k8s.io/v1 metadata: name: leader-locking-nfs-client-provisioner

replace with namespace where provisioner is deployed

namespace: default subjects:

- kind: ServiceAccount

name: nfs-client-provisioner

replace with namespace where provisioner is deployed

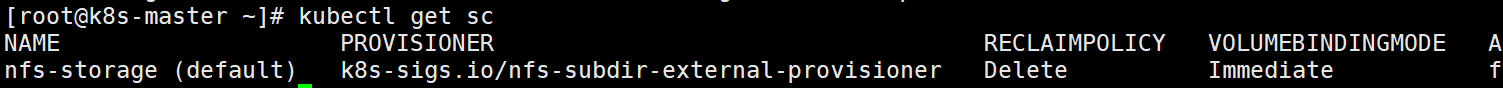

namespace: default roleRef: kind: Role name: leader-locking-nfs-client-provisioner apiGroup: rbac.authorization.k8s.io```yaml#确认配置是否生效kubectl get sc
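To check that the default StorageClass really provisions volumes, one option is to create a small test claim that sets no `storageClassName`. The sketch below is an illustration, not part of the original notes: the file name `pvc-test.yaml`, the claim name `nfs-pvc-test`, and the 200Mi size are all hypothetical.

```shell
# Write a minimal PVC manifest (hypothetical names and size).
# No storage class is named, so the cluster's default class should be used.
cat > pvc-test.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc-test
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 200Mi
EOF

# Apply and inspect the claim only when kubectl is installed.
if command -v kubectl >/dev/null 2>&1; then
  kubectl apply -f pvc-test.yaml && kubectl get pvc nfs-pvc-test ||
    echo "kubectl could not apply the claim (is the cluster reachable?)"
fi
```

If provisioning works, the claim's STATUS should change to Bound and a matching PV should appear in `kubectl get pv`. Delete the test claim afterwards with `kubectl delete -f pvc-test.yaml`.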

2. Metrics-Server Installation

Metrics-Server is the cluster's metrics monitoring component. Install it in advance.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats
  - namespaces
  - configmaps
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --kubelet-insecure-tls
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/metrics-server:v0.4.3
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 4443
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          periodSeconds: 10
        securityContext:
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100
```
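The notes do not say how to apply or verify this manifest. A minimal sketch follows, assuming the manifest was saved as `metrics-server.yaml` (a hypothetical file name) and that `kubectl` points at the target cluster. The helper name `check_metrics_server` is also made up for illustration.

```shell
# Assumption: the manifest above was saved under this (hypothetical) name.
MANIFEST=metrics-server.yaml

# Apply the manifest, wait up to 2 minutes for the rollout, confirm that the
# aggregated metrics API is registered, then print node and pod usage.
check_metrics_server() {
  kubectl apply -f "$MANIFEST" &&
    kubectl -n kube-system rollout status deployment/metrics-server --timeout=120s &&
    kubectl get apiservice v1beta1.metrics.k8s.io &&
    kubectl top nodes &&
    kubectl top pods -A
}

if command -v kubectl >/dev/null 2>&1 && [ -f "$MANIFEST" ]; then
  check_metrics_server || echo "metrics-server is not ready yet"
else
  echo "skipped: kubectl or $MANIFEST not found"
fi
```

`kubectl top` can take a minute or so to return data after the pod becomes ready, so a first failure does not necessarily indicate a broken install.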

3. KubeSphere Installation

3.1 Download the core configuration files

```shell
wget https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/kubesphere-installer.yaml
wget https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/cluster-configuration.yaml
```

3.2 Modify cluster-configuration

In cluster-configuration.yaml, specify the features you want to enable. See "Enable Pluggable Components" in the official documentation: https://kubesphere.com.cn/docs/pluggable-components/overview/
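Enabling a component usually means changing its `enabled: false` to `enabled: true`. As a rough sketch of doing that without an editor, the script below flips one component in a trimmed-down sample file. The file `cc-sample.yaml` and the helper `enable_component` are hypothetical, and the approach assumes the component key is indented by two spaces with its `enabled:` line following it, as in the appendix.

```shell
# Hypothetical, trimmed-down stand-in for cluster-configuration.yaml.
cat > cc-sample.yaml <<'EOF'
spec:
  devops:
    enabled: false
  logging:
    enabled: false
EOF

# enable_component FILE NAME
# Within the range from the two-space-indented "NAME:" line to the first
# following "enabled:" line, replace "enabled: false" with "enabled: true".
enable_component() {
  sed "/^  $2:/,/enabled:/ s/enabled: false/enabled: true/" "$1" > "$1.tmp" &&
    mv "$1.tmp" "$1"
}

enable_component cc-sample.yaml devops
cat cc-sample.yaml
```

Only the `devops` entry changes; `logging` stays disabled. Review the real file by hand after any scripted edit, since several components have nested `enabled` flags.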

3.3 Run the installation

```shell
kubectl apply -f kubesphere-installer.yaml
kubectl apply -f cluster-configuration.yaml
```

3.4 Check progress (about 3 to 4 minutes)

```shell
kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f

# Check the console port
kubectl get svc/ks-console -n kubesphere-system
```
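Besides following the installer log, it can help to see which pods are still starting. The helper below is a small sketch, not something from the original notes: `list_unhealthy` is a hypothetical name, and it assumes the default column layout of `kubectl get pods -A --no-headers` (NAMESPACE, NAME, READY, STATUS, ...).

```shell
# list_unhealthy: read `kubectl get pods -A --no-headers` output on stdin and
# print "namespace/name status" for pods that are neither Running nor Completed.
list_unhealthy() {
  awk '$4 != "Running" && $4 != "Completed" { print $1 "/" $2 " " $4 }'
}

if command -v kubectl >/dev/null 2>&1; then
  kubectl get pods -A --no-headers | list_unhealthy
fi
```

An empty result means every pod reports Running or Completed. For anything listed, `kubectl describe pod -n <namespace> <name>` usually shows the reason, such as a slow image pull.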

3.5 Log in to verify

Access port 30880 on any node.

Account: admin

Password: P@88w0rd

3.6 Fix the etcd monitoring certificate issue

```shell
kubectl -n kubesphere-monitoring-system create secret generic kube-etcd-client-certs \
  --from-file=etcd-client-ca.crt=/etc/kubernetes/pki/etcd/ca.crt \
  --from-file=etcd-client.crt=/etc/kubernetes/pki/apiserver-etcd-client.crt \
  --from-file=etcd-client.key=/etc/kubernetes/pki/apiserver-etcd-client.key
```
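The command reads three certificate files from the local disk, so it has to run on a machine that has them, typically a control-plane node of a kubeadm-built cluster. A small pre-check along these lines can catch a wrong host early. The helper name `missing_files` is hypothetical.

```shell
# missing_files: print every path argument that is not an existing regular file.
missing_files() {
  for f in "$@"; do
    [ -f "$f" ] || echo "$f"
  done
}

missing=$(missing_files \
  /etc/kubernetes/pki/etcd/ca.crt \
  /etc/kubernetes/pki/apiserver-etcd-client.crt \
  /etc/kubernetes/pki/apiserver-etcd-client.key)

if [ -n "$missing" ]; then
  echo "missing certificate files:"
  echo "$missing"
else
  echo "all etcd client certificate files are present"
fi
```

After creating the secret, `kubectl -n kubesphere-monitoring-system get secret kube-etcd-client-certs` should list it.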

4. Appendix (KubeSphere Installation YAML)

4.1 kubesphere-installer.yaml

```yaml
---
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: clusterconfigurations.installer.kubesphere.io
spec:
  group: installer.kubesphere.io
  versions:
  - name: v1alpha1
    served: true
    storage: true
  scope: Namespaced
  names:
    plural: clusterconfigurations
    singular: clusterconfiguration
    kind: ClusterConfiguration
    shortNames:
    - cc

---
apiVersion: v1
kind: Namespace
metadata:
  name: kubesphere-system

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ks-installer
  namespace: kubesphere-system

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: ks-installer
rules:
- apiGroups:
  - ""
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - apps
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - extensions
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - batch
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - rbac.authorization.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - apiregistration.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - apiextensions.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - tenant.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - certificates.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - devops.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - monitoring.coreos.com
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - logging.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - jaegertracing.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - storage.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - admissionregistration.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - policy
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - autoscaling
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - networking.istio.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - config.istio.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - iam.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - notification.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - auditing.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - events.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - core.kubefed.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - installer.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - storage.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - security.istio.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - monitoring.kiali.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - kiali.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - networking.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - kubeedge.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - types.kubefed.io
  resources:
  - '*'
  verbs:
  - '*'

---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: ks-installer
subjects:
- kind: ServiceAccount
  name: ks-installer
  namespace: kubesphere-system
roleRef:
  kind: ClusterRole
  name: ks-installer
  apiGroup: rbac.authorization.k8s.io

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ks-installer
  namespace: kubesphere-system
  labels:
    app: ks-install
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ks-install
  template:
    metadata:
      labels:
        app: ks-install
    spec:
      serviceAccountName: ks-installer
      containers:
      - name: installer
        image: kubesphere/ks-installer:v3.1.1
        imagePullPolicy: "Always"
        resources:
          limits:
            cpu: "1"
            memory: 1Gi
          requests:
            cpu: 20m
            memory: 100Mi
        volumeMounts:
        - mountPath: /etc/localtime
          name: host-time
      volumes:
      - hostPath:
          path: /etc/localtime
          type: ""
        name: host-time
```
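The CustomResourceDefinition at the top of this manifest uses `apiextensions.k8s.io/v1beta1`, an API version that Kubernetes stopped serving in v1.22. As a hedged sanity check that is not part of the original notes, the sketch below tests whether a cluster still serves it. The helper name `serves_v1beta1_crd` is hypothetical.

```shell
# serves_v1beta1_crd: read `kubectl api-versions` output on stdin and succeed
# only if the line apiextensions.k8s.io/v1beta1 is present.
serves_v1beta1_crd() {
  grep -qxF 'apiextensions.k8s.io/v1beta1'
}

if command -v kubectl >/dev/null 2>&1; then
  if kubectl api-versions | serves_v1beta1_crd; then
    echo "v1beta1 CRDs are served; the manifest can be applied as is"
  else
    echo "v1beta1 CRDs are not served (or the cluster is unreachable)"
  fi
fi
```

If the version is not served, this v3.1.1 manifest will probably fail at the CRD step, and a KubeSphere release that matches the cluster version is the safer choice.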

4.2 cluster-configuration.yaml

```yaml
---
apiVersion: installer.kubesphere.io/v1alpha1
kind: ClusterConfiguration
metadata:
  name: ks-installer
  namespace: kubesphere-system
  labels:
    version: v3.1.1
spec:
  persistence:
    storageClass: ""        # If there is no default StorageClass in your cluster, you need to specify an existing StorageClass here.
  authentication:
    jwtSecret: ""           # Keep the jwtSecret consistent with the Host Cluster. Retrieve the jwtSecret by executing "kubectl -n kubesphere-system get cm kubesphere-config -o yaml | grep -v "apiVersion" | grep jwtSecret" on the Host Cluster.
  local_registry: ""        # Add your private registry address if it is needed.
  etcd:
    monitoring: true        # Enable or disable etcd monitoring dashboard installation. You have to create a Secret for etcd before you enable it.
    endpointIps: 172.31.0.4 # etcd cluster EndpointIps. It can be a bunch of IPs here.
    port: 2379              # etcd port.
    tlsEnable: true
  common:
    redis:
      enabled: true
    openldap:
      enabled: true
    minioVolumeSize: 20Gi     # Minio PVC size.
    openldapVolumeSize: 2Gi   # openldap PVC size.
    redisVolumSize: 2Gi       # Redis PVC size.
    monitoring:
      # type: external   # Whether to specify the external prometheus stack, and need to modify the endpoint at the next line.
      endpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090 # Prometheus endpoint to get metrics data.
    es:   # Storage backend for logging, events and auditing.
      # elasticsearchMasterReplicas: 1   # The total number of master nodes. Even numbers are not allowed.
      # elasticsearchDataReplicas: 1     # The total number of data nodes.
      elasticsearchMasterVolumeSize: 4Gi   # The volume size of Elasticsearch master nodes.
      elasticsearchDataVolumeSize: 20Gi    # The volume size of Elasticsearch data nodes.
      logMaxAge: 7                     # Log retention time in built-in Elasticsearch. It is 7 days by default.
      elkPrefix: logstash              # The string making up index names. The index name will be formatted as ks-<elk_prefix>-log.
      basicAuth:
        enabled: false
        username: ""
        password: ""
      externalElasticsearchUrl: ""
      externalElasticsearchPort: ""
  console:
    enableMultiLogin: true  # Enable or disable simultaneous logins. It allows different users to log in with the same account at the same time.
    port: 30880
  alerting:                # (CPU: 0.1 Core, Memory: 100 MiB) It enables users to customize alerting policies to send messages to receivers in time with different time intervals and alerting levels to choose from.
    enabled: true          # Enable or disable the KubeSphere Alerting System.
    # thanosruler:
    #   replicas: 1
    #   resources: {}
  auditing:                # Provide a security-relevant chronological set of records, recording the sequence of activities happening on the platform, initiated by different tenants.
    enabled: true          # Enable or disable the KubeSphere Auditing Log System.
  devops:                  # (CPU: 0.47 Core, Memory: 8.6 G) Provide an out-of-the-box CI/CD system based on Jenkins, and automated workflow tools including Source-to-Image & Binary-to-Image.
    enabled: true              # Enable or disable the KubeSphere DevOps System.
    jenkinsMemoryLim: 2Gi      # Jenkins memory limit.
    jenkinsMemoryReq: 1500Mi   # Jenkins memory request.
    jenkinsVolumeSize: 8Gi     # Jenkins volume size.
    jenkinsJavaOpts_Xms: 512m  # The following three fields are JVM parameters.
    jenkinsJavaOpts_Xmx: 512m
    jenkinsJavaOpts_MaxRAM: 2g
  events:                  # Provide a graphical web console for Kubernetes Events exporting, filtering and alerting in multi-tenant Kubernetes clusters.
    enabled: true          # Enable or disable the KubeSphere Events System.
    ruler:
      enabled: true
      replicas: 2
  logging:                 # (CPU: 57 m, Memory: 2.76 G) Flexible logging functions are provided for log query, collection and management in a unified console. Additional log collectors can be added, such as Elasticsearch, Kafka and Fluentd.
    enabled: true          # Enable or disable the KubeSphere Logging System.
    logsidecar:
      enabled: true
      replicas: 2
  metrics_server:          # (CPU: 56 m, Memory: 44.35 MiB) It enables HPA (Horizontal Pod Autoscaler).
    enabled: false         # Enable or disable metrics-server.
  monitoring:
    storageClass: ""                 # If there is an independent StorageClass you need for Prometheus, you can specify it here. The default StorageClass is used by default.
    # prometheusReplicas: 1          # Prometheus replicas are responsible for monitoring different segments of data source and providing high availability.
    prometheusMemoryRequest: 400Mi   # Prometheus request memory.
    prometheusVolumeSize: 20Gi       # Prometheus PVC size.
    # alertmanagerReplicas: 1        # AlertManager Replicas.
  multicluster:
    clusterRole: none  # host | member | none  # You can install a solo cluster, or specify it as the Host or Member Cluster.
  network:
    networkpolicy: # Network policies allow network isolation within the same cluster, which means firewalls can be set up between certain instances (Pods).
      # Make sure that the CNI network plugin used by the cluster supports NetworkPolicy. There are a number of CNI network plugins that support NetworkPolicy, including Calico, Cilium, Kube-router, Romana and Weave Net.
      enabled: true # Enable or disable network policies.
    ippool: # Use Pod IP Pools to manage the Pod network address space. Pods to be created can be assigned IP addresses from a Pod IP Pool.
      type: calico # Specify "calico" for this field if Calico is used as your CNI plugin. "none" means that Pod IP Pools are disabled.
    topology: # Use Service Topology to view Service-to-Service communication based on Weave Scope.
      type: none # Specify "weave-scope" for this field to enable Service Topology. "none" means that Service Topology is disabled.
  openpitrix: # An App Store that is accessible to all platform tenants. You can use it to manage apps across their entire lifecycle.
    store:
      enabled: true # Enable or disable the KubeSphere App Store.
  servicemesh:         # (0.3 Core, 300 MiB) Provide fine-grained traffic management, observability and tracing, and visualized traffic topology.
    enabled: true      # Base component (pilot). Enable or disable KubeSphere Service Mesh (Istio-based).
  kubeedge:            # Add edge nodes to your cluster and deploy workloads on edge nodes.
    enabled: true      # Enable or disable KubeEdge.
    cloudCore:
      nodeSelector: {"node-role.kubernetes.io/worker": ""}
      tolerations: []
      cloudhubPort: "10000"
      cloudhubQuicPort: "10001"
      cloudhubHttpsPort: "10002"
      cloudstreamPort: "10003"
      tunnelPort: "10004"
      cloudHub:
        advertiseAddress: # At least a public IP address or an IP address which can be accessed by edge nodes must be provided.
          - ""            # Note that once KubeEdge is enabled, CloudCore will malfunction if the address is not provided.
        nodeLimit: "100"
      service:
        cloudhubNodePort: "30000"
        cloudhubQuicNodePort: "30001"
        cloudhubHttpsNodePort: "30002"
        cloudstreamNodePort: "30003"
        tunnelNodePort: "30004"
    edgeWatcher:
      nodeSelector: {"node-role.kubernetes.io/worker": ""}
      tolerations: []
      edgeWatcherAgent:
        nodeSelector: {"node-role.kubernetes.io/worker": ""}
        tolerations: []
```
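With this many switches, a quick summary of which top-level components are turned on can be handy before applying the file. The helper below is only a sketch: `list_enabled` is a hypothetical name, and it assumes the layout shown here, where each component key is indented by two spaces and its own `enabled:` line by four. Nested flags such as `openpitrix.store.enabled` are deliberately ignored.

```shell
# list_enabled FILE
# Remember the most recent two-space-indented key, then print "key value" for
# each four-space-indented "enabled:" line that follows it.
list_enabled() {
  awk '/^  [a-z_]+:/ { comp = $1; sub(":", "", comp) }
       /^    enabled:/ { print comp, $2 }' "$1"
}

if [ -f cluster-configuration.yaml ]; then
  list_enabled cluster-configuration.yaml
fi
```

Each output line has the form `devops true` or `metrics_server false`, which makes it easy to compare against the components you intended to enable.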