计算学习理论

- 研究的是关于通过“计算”来进行“学习”的理论,即机器学习的理论基础。

- 目的: 分析学习任务的困难本质,为学习算法提供理论保证,并根据分析结果指导算法设计。

Basic Concept

Basic terminology

| X: input space | the set of all possible examples or instances | | —- | —- | | y: target space | all possible labels or target values | | D: distribution | the distribution of X | | c: concept | a concept is a mapping from X to y.

In the case y = {0,1} , it can be represented by a subset of X. | | C: concept class | a set of concepts to learn | | H: hypothesis set | a fixed set of possible concepts considered by learner.

might not necessarily concide with C. | | | consistent: The H used contains the concept to learn | | | inconsistent: The concept to learn is not in the H used | | S: samples | drwan i.i.d. according to D | | labels | are based on a specific target concept to learn | | learning task | use the labeled sample S to select a hypothesis h that has a small generalization error with respect to the concept c. |

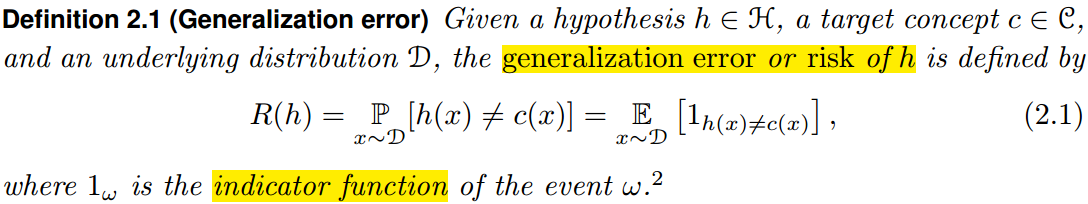

Generalization error and Empirical error

Generalization error

泛化误差往往不能直接获得,因为样本分布、目标概念都是未知的。

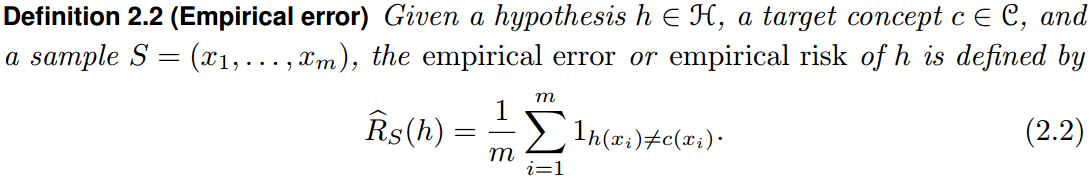

Empirical error

经验误差是在学习使用的样本集上的平均误差。

h与S一致(conistent):则h在数据集S上的经验误差为0

二者的关系

对于某个固定的

,当S由D独立同分布地采样获得时,经验误差的期望是泛化误差。

证明思路:利用期望的线性性以及独立同分布采样的性质,可得:The Probably Approximately Correct (PAC) Learning model

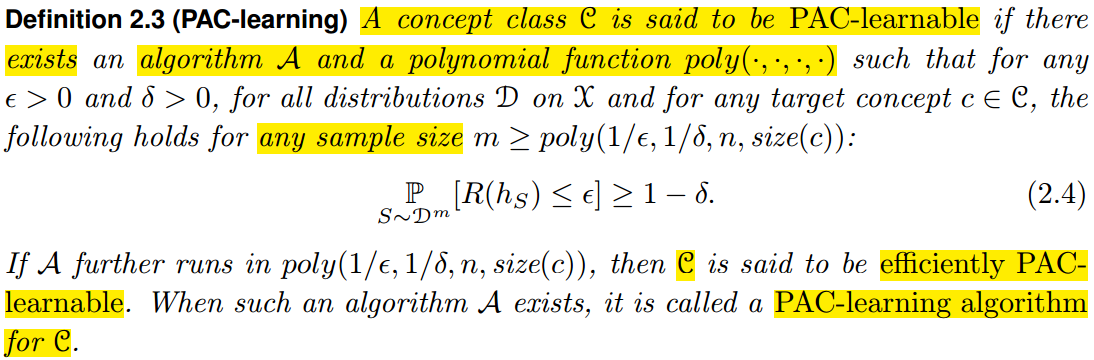

PAC Learning

两个概念

- generalization bound:让取到边界值,则进而可以得到

的generalization bound。

- 样本复杂度:满足PAC算法所需的m的最小值称为学习算法的样本复杂度。

- generalization bound:让取到边界值,则进而可以得到

Notes

- 参数的作用

- accuracy:

;confidence:

- accuracy:

- PAC Framework is a distribution-free model

- training/test samples used to define the error are drawen according to the same distribution

- PAC Framework deals with the

question of learnability for a concept class and not a particular concept

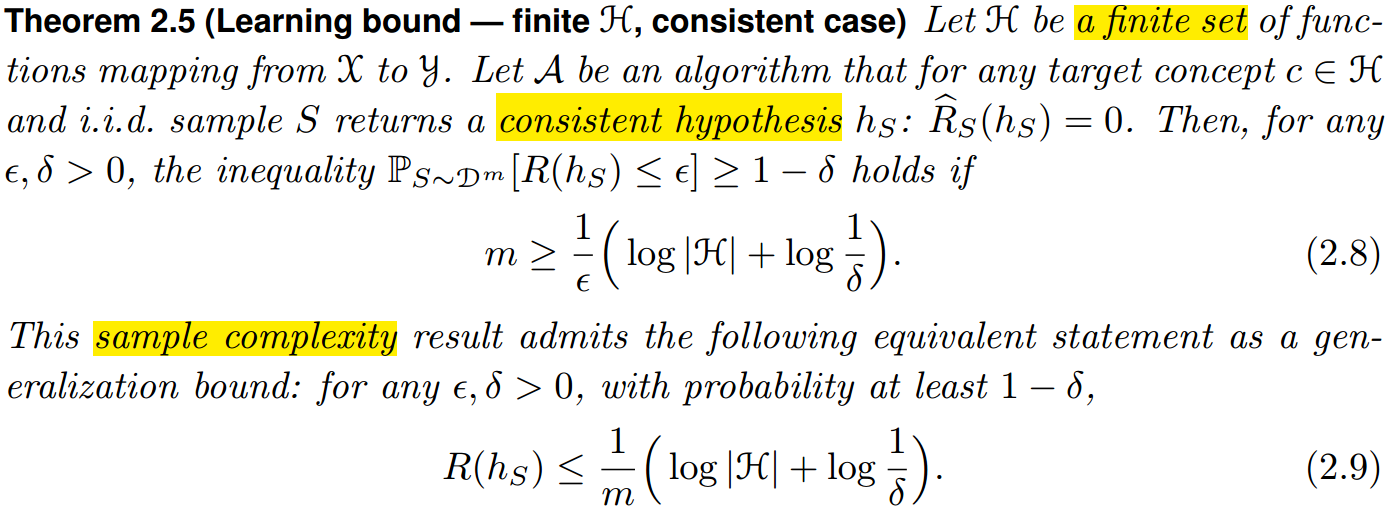

Learning bound - finite H, consistent case

- 参数的作用

证明思路

- 采用的学习算法:不断增加

中样本的数目,然后逐步排除

中与

不一致的假设。由于样本数量限制,最后会剩下一批与

一致的等效假设,最后要从中选择一个近似目标。

- 由于无法确定最后选中了哪个等效假设,为了确保获得

近似,需要保证剩下的这些等效假设的误差均不大于

。

- 证明技巧从反面角度考虑。令

,需要保证这些假设都被剔除掉,即不出现在最后的等效假设中。其中单个假设出现在最后(即与

一致)的概率为

。进而,由Union Bound可知:

- 采用的学习算法:不断增加

- 进而有:

。因此,存在m使得

- Notes

- 当假设集为有限集时,一个consistent algorithm是一个PAC-learning算法

- generalization error的上界是一个关于m单调递减的函数,学习算法受益于较大的样本量,decrease rate是

。

- 使用consistent algorithm的代价:需要使用一个较大的假设集,确保

。

- 上界随

增大而增大,不过是对数级别。

与

只差一个常数系数,后者可以看作表示

需要的比特数。